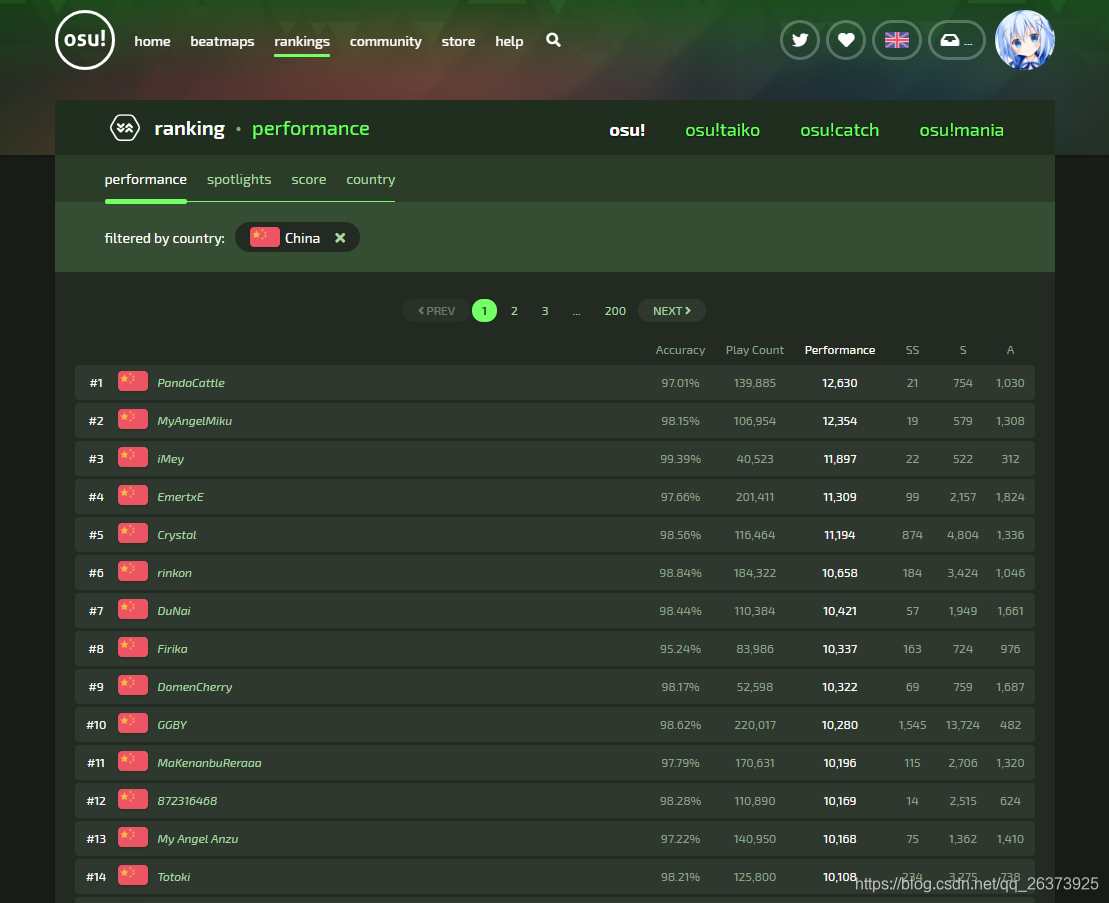

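osu! is an international rhythm game. Its per-country leaderboard looks like this:

The address is https://osu.ppy.sh/rankings/osu/performance?country=CN

The leaderboard spans 200 pages, so I wrote a script to scrape the data.

With the data you can analyze the players of each region: which players look like they are running alt accounts, whose pp or play count (pc) is climbing quickly, and so on.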
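
As a rough illustration of the "whose pp is climbing quickly" idea, here is a minimal sketch that compares two snapshots of the same region. It assumes each row is a dict with string values under the userid, username and pp keys, which is how the scraper stores them; the helper name pp_gains and the sample rows are made up for this example.

```python
def pp_gains(old_rows, new_rows):
    """Return (username, pp gain) pairs, largest gain first.

    Only players present in both snapshots are compared.
    """
    old_pp = {row["userid"]: float(row["pp"]) for row in old_rows}
    gains = []
    for row in new_rows:
        if row["userid"] in old_pp:
            gains.append((row["username"], float(row["pp"]) - old_pp[row["userid"]]))
    return sorted(gains, key=lambda item: item[1], reverse=True)


# Made-up sample rows, shaped like the scraper's output (all values are strings)
old = [
    {"userid": "1", "username": "alice", "pp": "5000"},
    {"userid": "2", "username": "bob", "pp": "4000"},
]
new = [
    {"userid": "1", "username": "alice", "pp": "5100"},
    {"userid": "2", "username": "bob", "pp": "4600"},
    {"userid": "3", "username": "carol", "pp": "3000"},  # new on the list, skipped
]

top_gains = pp_gains(old, new)
print(top_gains)  # [('bob', 600.0), ('alice', 100.0)]
```

The same pattern works for pc or rank by swapping the key. Spotting alt accounts would need more signals than a single delta, so this is only a starting point.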

The region is not limited to CN as shown here; US, HK, TW and others work too.

Scraping uses BeautifulSoup, requests and lxml.

Saving uses json and sqlalchemy.

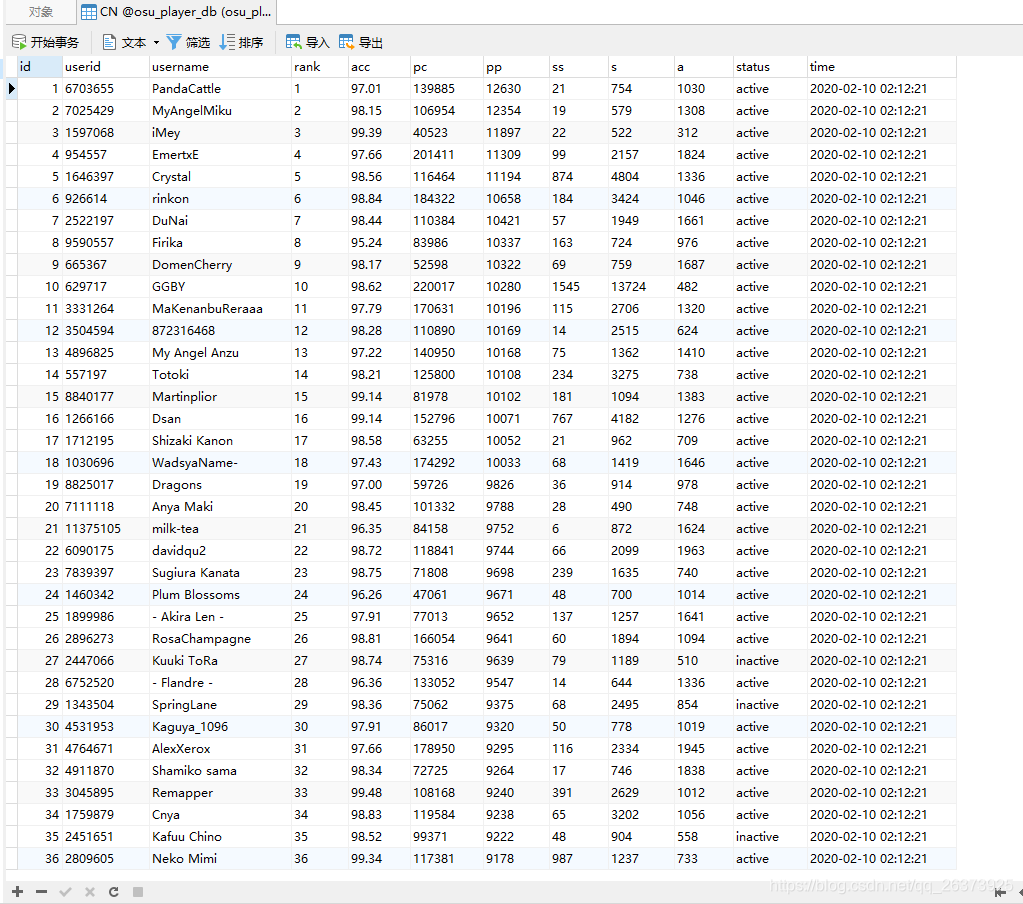

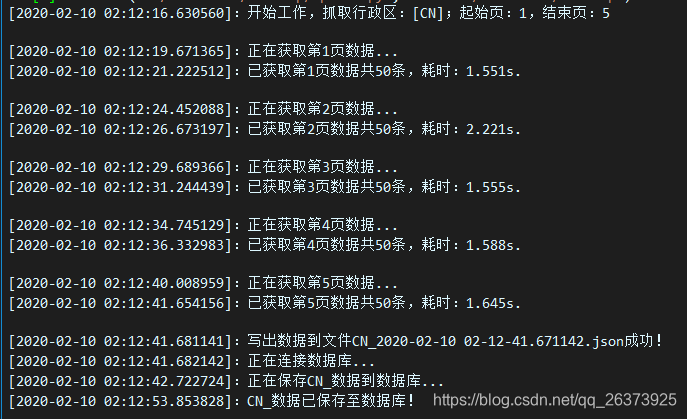

The scraped results look like this:

The MySQL database, populated through sqlalchemy.

Where does status come from?

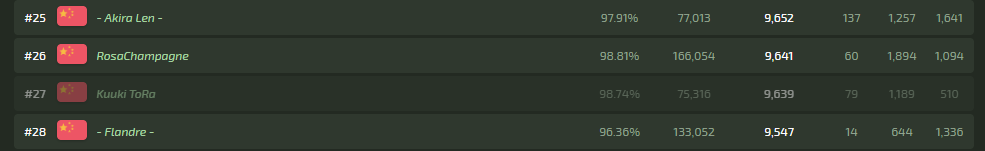

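In the screenshot below, the row of the player ranked #27 has a darker background, which means this player has not set a score for several dozen days.

So active and inactive simply indicate whether a player has been recently active or not.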

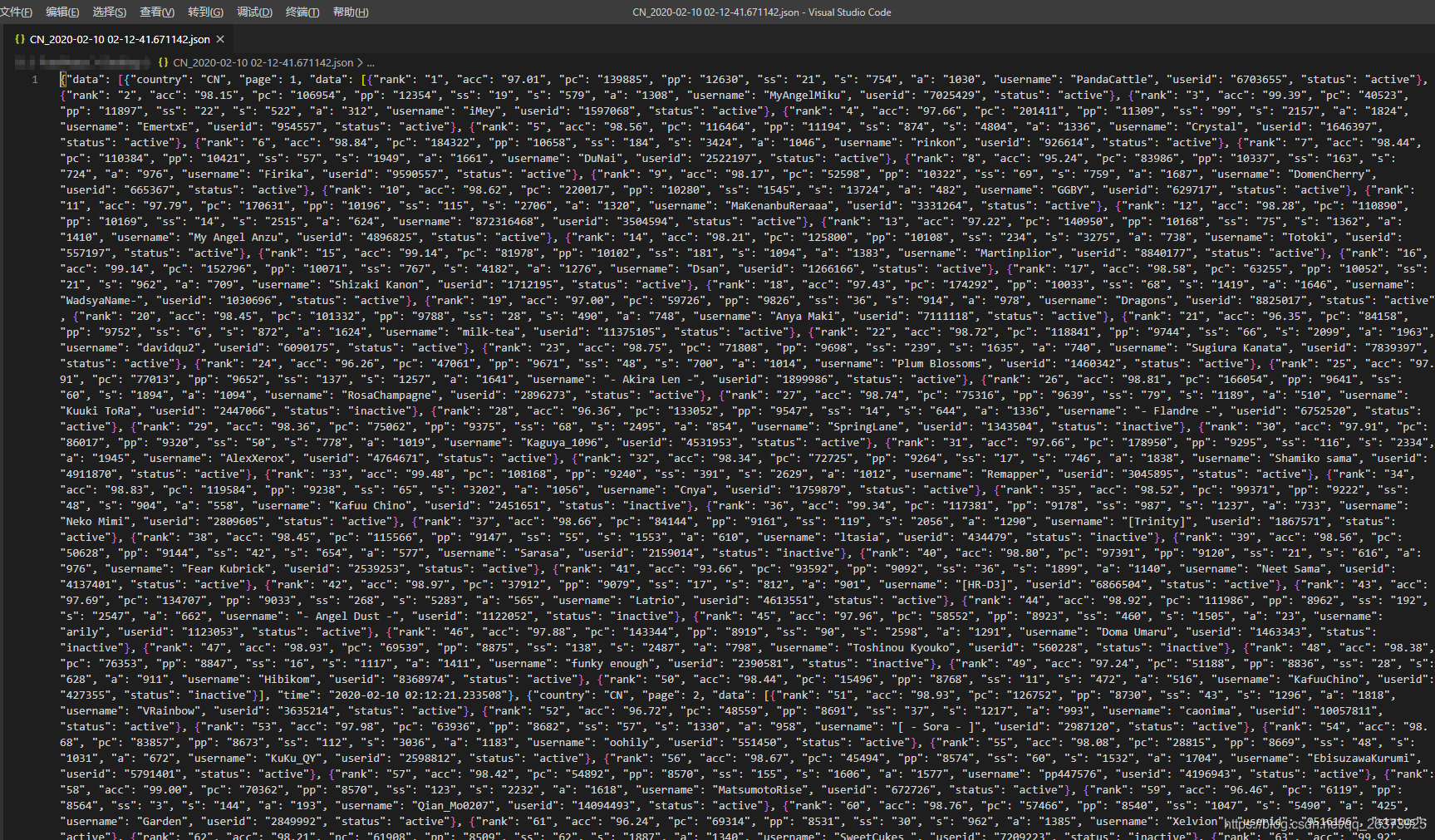

The JSON file is written without any formatting, so it looks like a mess when opened.
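
If a readable file is preferred, the standard json module can indent the output. The sketch below is not part of the original script; the sample dict is made up and only mimics the shape of the scraper's result. It shows the compact and the indented form side by side.

```python
import json

# Made-up sample with the same nesting as the scraper's output
sample = {
    "country": "CN",
    "data": [{"page": 1, "data": [{"username": "玩家", "pp": "12345"}]}],
}

# Compact: everything on one line, as the scraper currently writes it
compact = json.dumps(sample, ensure_ascii=False)

# Pretty-printed: indent=2 puts each key on its own line
pretty = json.dumps(sample, ensure_ascii=False, indent=2)

print(compact)
print(pretty)
```

Passing the same indent=2 argument to json.dump in saveData would produce a formatted file, at the cost of a larger file size.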

Running the script:

Code

File 1, spider.py: the main scraper

    '''
    Main program
    @author: PurePeace
    @time: 2020-02-10 02:03:09
    '''

    import time, datetime, re, requests, random, json, mysqlSave
    from bs4 import BeautifulSoup


    # return the current time as a formatted string (needFormat != 0) or as a Unix timestamp
    def getTime(needFormat=0, formatMS=True):
        if needFormat != 0:
            return datetime.datetime.now().strftime(f'%Y-%m-%d %H:%M:%S{r".%f" if formatMS else ""}')
        else:
            return time.time()


    # breakTime: how many seconds to rest before fetching each page
    def getPage(country='CN', index=1, breakTime=3, datas=None, startTime=0):
        # non-empty datas means the previous attempt succeeded: report and return
        if datas is not None and len(datas) > 0:
            print(f'[{getTime(1)}]: Fetched page {index}, {len(datas)} rows, took {round(getTime()-startTime, 3)}s.\n')
            return datas
        time.sleep(breakTime + random.random())
        header = {'User-Agent': 'Mozilla/5.0 (iPhone; CPU iPhone OS 11_0 like Mac OS X) AppleWebKit/604.1.38 (KHTML, like Gecko) Version/11.0 Mobile/15A372 Safari/604.1'}
        print(f'[{getTime(1)}]: Fetching page {index}...')
        startTime = getTime()
        try:
            page = requests.get(f'https://osu.ppy.sh/rankings/osu/performance?country={country}&page={index}', headers=header, timeout=30)
            soup = BeautifulSoup(page.text, 'lxml')
            datas = soup.find_all('tr', attrs={'class':'ranking-page-table__row'})
        except Exception:
            datas = []
        # check for None before calling len()
        if datas is None or len(datas) == 0:
            print(f'[{getTime(1)}]: Failed to fetch page {index}, retrying shortly...')
            time.sleep(breakTime * 3 + random.random())
        # recurse: returns the rows on success, retries on failure
        return getPage(country, index, breakTime, datas, startTime)


    # extract one player's fields from row number index of a page
    def userData(pageData, index=0):
        needs = ('rank','acc','pc','pp','ss','s','a')
        number = re.compile(r'\d+\.?\d*')
        se = pageData[index].find_all('td', attrs={'class': 'ranking-page-table__column'})
        # the second column holds the user link; the remaining columns line up with needs
        user = se.pop(1).find('a', attrs={'class': 'ranking-page-table__user-link-text js-usercard'})
        userid = user.attrs.get('data-user-id')
        username = user.text.strip()
        data = { k: number.findall(se[i].text.replace(',',''))[0] for i, k in enumerate(needs) }
        data['username'], data['userid'] = username, userid
        # inactive players' rows carry an extra CSS modifier class
        if 'ranking-page-table__row--inactive' in pageData[index].attrs['class']:
            data['status'] = 'inactive'
        else:
            data['status'] = 'active'
        return data


    def fetchData(startPage=1, endPage=1, country='CN'):
        data = []
        print(f'[{getTime(1)}]: Starting, region: [{country}]; first page: {startPage}, last page: {endPage}\n')
        for idx in range(startPage, endPage+1):
            thisPage = getPage(country, index=idx)
            userDatas = [userData(thisPage, index=i) for i in range(len(thisPage))]
            data.append({'country': country, 'page': idx, 'data': userDatas, 'time': getTime(1)})
        return {'data': data, 'country': country, 'time': getTime(1)}


    def saveData(data):
        fileName = f'{data.get("country")}_{data.get("time").replace(":","-")}.json'
        with open(fileName, 'w', encoding='utf-8') as outFile:
            json.dump(data, outFile, ensure_ascii=False)
        print(f'[{getTime(1)}]: Wrote data to file {fileName}.')
        mysqlSave.addData(data)


    if __name__ == '__main__':
        result = fetchData(startPage=1, endPage=5, country='CN')
        saveData(result)
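
One thing worth noting about getPage: it retries by calling itself, with no upper bound, so a page that keeps failing would eventually hit Python's recursion limit. A bounded loop with growing delays is a common alternative. The sketch below is an assumption on my part, not the original code: fetch_with_retry, its parameters and the fake flaky_fetch are hypothetical names, and the fetch callable stands in for the requests plus BeautifulSoup step.

```python
import time


def fetch_with_retry(fetch, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it returns a non-empty result or attempts run out.

    The delay doubles after each failed attempt (exponential backoff).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            rows = fetch()
        except Exception:
            rows = []  # treat any error like an empty page
        if rows:
            return rows
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"no data after {max_attempts} attempts")


# Usage with a fake fetcher that fails twice before succeeding
calls = {"n": 0}


def flaky_fetch():
    calls["n"] += 1
    return [] if calls["n"] < 3 else ["row-1", "row-2"]


delays = []  # record the delays instead of actually sleeping
rows = fetch_with_retry(flaky_fetch, sleep=delays.append)
print(rows, delays)  # ['row-1', 'row-2'] [1.0, 2.0]
```

Injecting the sleep function keeps the example fast to run; in the scraper it would simply default to time.sleep.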

File 2, mysqlSave.py: saves the data to MySQL with sqlalchemy (a table is created automatically per region, e.g. CN, US and so on)

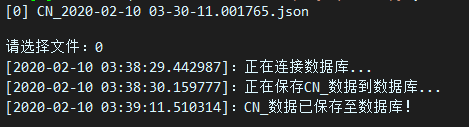

You can also manually pick a saved JSON data file and upload it to the MySQL database, as shown in the screenshot.

    '''
    Save data to MySQL
    @author: PurePeace
    @time: 2020-02-10 02:03:09
    '''

    import datetime, time, json
    from sqlalchemy import Integer, Column, String, DateTime, create_engine
    from sqlalchemy.orm import sessionmaker
    from sqlalchemy.ext.declarative import declarative_base

    Base = declarative_base()


    class Country(Base):
        # placeholder name; addData() renames the table to the region code
        __tablename__ = 'UNKNOWN'
        __table_args__ = {'mysql_engine': 'InnoDB','mysql_charset': 'utf8'}
        id = Column(Integer, primary_key=True, autoincrement=True)
        userid = Column(String(100))
        username = Column(String(100))
        rank = Column(String(100))
        acc = Column(String(100))
        pc = Column(String(100))
        pp = Column(String(100))
        ss = Column(String(100))
        s = Column(String(100))
        a = Column(String(100))
        status = Column(String(100))
        time = Column(DateTime)


    # return the current time as a formatted string (needFormat != 0) or as a Unix timestamp
    def getTime(needFormat=0, formatMS=True):
        if needFormat != 0:
            return datetime.datetime.now().strftime(f'%Y-%m-%d %H:%M:%S{r".%f" if formatMS else ""}')
        else:
            return time.time()


    def addData(data):
        print(f'[{getTime(1)}]: Connecting to the database...')
        # fill in your own credentials here
        engine = create_engine('mysql+pymysql://USERNAME:PASSWORD@HOST:PORT/DATABASE')
        DBSession = sessionmaker(bind=engine)
        country = data.get('country', 'UNKNOWN')
        # point the model at the region's table, then create it if it does not exist
        Country.__table__.name = country
        Base.metadata.create_all(engine)
        session = DBSession()
        print(f'[{getTime(1)}]: Saving {country} data to the database...')
        for page in data['data']:
            # the scraper stores the time as a string; DateTime columns expect a datetime
            pageTime = datetime.datetime.strptime(page['time'], '%Y-%m-%d %H:%M:%S.%f')
            for user in page['data']:
                session.add(
                    Country(
                        userid=user['userid'],
                        username=user['username'],
                        rank=user['rank'],
                        acc=user['acc'],
                        pc=user['pc'],
                        pp=user['pp'],
                        ss=user['ss'],
                        s=user['s'],
                        a=user['a'],
                        status=user['status'],
                        time=pageTime
                    )
                )
        session.commit()
        session.close()
        print(f'[{getTime(1)}]: {country} data saved to the database.')


    # manually upload JSON data to the database (put the JSON files in the same directory)
    if __name__ == '__main__':
        import os
        files = [i for i in os.listdir() if i.endswith('.json')]
        for idx, it in enumerate(files):
            print(f'[{idx}] {it}')
        fileName = files[int(input('Choose a file: '))]
        with open(fileName, 'r', encoding='utf-8') as file:
            addData(json.load(file))
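
Because the table name is taken straight from the country field of the data (which may come from a hand-picked JSON file), it can be worth checking that value before using it. The following is only a sketch under my own assumptions: safe_table_name is a hypothetical helper, and it assumes region codes are two letters like the CN, US, HK and TW examples in this post, with UNKNOWN kept as the fallback for a missing value.

```python
import re


def safe_table_name(country, fallback="UNKNOWN"):
    """Return an upper-case two-letter region code usable as a table name.

    A missing value maps to the fallback; anything else that is not
    exactly two ASCII letters is rejected.
    """
    if country is None:
        return fallback
    code = str(country).strip().upper()
    if re.fullmatch(r"[A-Z]{2}", code) is None:
        raise ValueError(f"unexpected country code: {country!r}")
    return code


print(safe_table_name("cn"))   # CN
print(safe_table_name(None))   # UNKNOWN
```

In addData this would wrap the value read with data.get('country') before the table is renamed.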

The end.