Dataset

Extraction code: krry

Source code:

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression


# Load data: each row has 22 tab-separated columns, 21 features followed by the 0/1 label
def load_data(path):
    data = pd.read_csv(path, sep='\t', names=list(range(22)))
    data = np.array(data).tolist()
    x = []
    y = []
    for i in range(len(data)):
        y.append(data[i][-1])  # the last column is the label
        del data[i][-1]        # remove it so only the features remain
        x.append(data[i])
    x = np.array(x)
    y = np.array(y)
    return x, y
```
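The loop in `load_data` removes the label from each row one at a time. A minimal sketch of the same split using pandas positional slicing, assuming the header-less, tab-separated layout used above; `load_data_sliced` and the two-row sample file are hypothetical, made up for illustration:

```python
import os
import tempfile

import pandas as pd


def load_data_sliced(path, n_cols=22):
    """Hypothetical alternative to load_data (not from the original post).

    Assumes a header-less, tab-separated file whose last column is the label.
    """
    data = pd.read_csv(path, sep='\t', header=None, names=list(range(n_cols)))
    x = data.iloc[:, :-1].to_numpy(dtype=float)  # all columns except the last
    y = data.iloc[:, -1].to_numpy(dtype=float)   # the last column is the label
    return x, y


if __name__ == '__main__':
    # Two made-up rows (3 features + label) written to a temporary file
    with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as f:
        f.write('1.0\t2.0\t3.0\t1.0\n4.0\t5.0\t6.0\t0.0\n')
        tmp_path = f.name
    x, y = load_data_sliced(tmp_path, n_cols=4)
    os.remove(tmp_path)
    print(x.shape, y)
```

Slicing whole columns avoids building and mutating an intermediate list of Python lists.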

```python
def sigmoid(z):
    return 1 / (1 + np.exp(-z))
```
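Because the horse-colic features are not scaled, `w.T @ x` can become a large negative number; `np.exp(-z)` then overflows and NumPy prints a `RuntimeWarning` (the result still rounds to 0). A minimal NumPy-only sketch of an overflow-free variant follows; `stable_sigmoid` is a hypothetical name, and SciPy's existing `scipy.special.expit` is a ready-made alternative:

```python
import numpy as np


def stable_sigmoid(z):
    """Overflow-free logistic function (hypothetical helper, not from the original post)."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # always in (0, 1], so exp can never overflow
    # z >= 0: 1 / (1 + exp(-z));  z < 0: exp(z) / (1 + exp(z)), the same value rewritten
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))


if __name__ == '__main__':
    print(stable_sigmoid(np.array([-1000.0, 0.0, 1000.0])))
```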

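```python
# Gradient of the negative log-likelihood with respect to each weight
def gradient(X, Y, w):
    res = []
    for k in range(X.shape[1]):  # 22 weights: bias + 21 features
        total = 0.0  # avoid shadowing the built-in sum
        for i in range(len(Y)):
            # the prediction must go through the sigmoid; without it this is
            # the gradient of linear (least-squares) regression instead
            total += -(Y[i] - sigmoid(np.dot(w.T, X[i]))) * X[i, k]
        res.append(total)
    res = np.array(res)
    return res
```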
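For reference, the sum accumulated in `gradient` is the partial derivative of the negative log-likelihood, assuming 0/1 labels $y_i$, $m$ training rows, $x_{i0} = 1$ for the bias column, and $\sigma_i = \sigma(w^\top x_i)$. The sigmoid enters through $\sigma'(z) = \sigma(z)\,(1-\sigma(z))$:

$$
J(w) = -\sum_{i=1}^{m}\Big[y_i \log \sigma_i + (1-y_i)\log(1-\sigma_i)\Big]
$$

$$
\frac{\partial J}{\partial w_k} = -\sum_{i=1}^{m}\Big[y_i(1-\sigma_i) - (1-y_i)\,\sigma_i\Big]x_{ik} = -\sum_{i=1}^{m}\big(y_i - \sigma_i\big)\,x_{ik}
$$

$$
\nabla_w J = X^\top\big(\sigma(Xw) - y\big)
$$

Each gradient-descent step then moves every $w_k$ by $-\eta\,\partial J/\partial w_k$, with $\eta$ = `learning_rate`.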

#基于梯度下降法

def logistics():

train_x, train_y = load_data('horse_colic/horseColicTraining.txt')

test_x, test_y = load_data('horse_colic/horseColicTest.txt')

X = np.column_stack((np.ones((299, 1), float), train_x))

Y = train_y

Y = Y.reshape(299, 1)

learning_rate = 0.00001

step = 200 #迭代次数

w = np.random.random((22, 1))

while step > 0:

res = gradient(X, Y, w)

for i in range(len(w)):

w[i] -= (learning_rate * res[i])

step -= 1

test_x = np.column_stack((np.ones((67, 1), float), test_x)) #最前面加上一列1

sum1 = 0

for i in range(len(test_y)):

res = np.dot(w.T, test_x[i])

res = sigmoid(res)

if res > 0.5 and test_y[i] == 1:

sum1 += 1

elif res <= 0.5 and test_y[i] == 0:

sum1 += 1

else:

continue

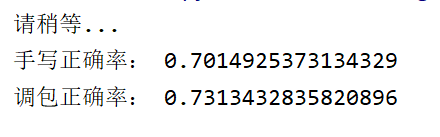

print('手写正确率:', sum1 / len(test_y))
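The double loop in `gradient` visits one weight and one row at a time. A minimal vectorized sketch of the same batch update, using $\nabla_w J = X^\top(\sigma(Xw) - y)$ and a zero initial weight vector, run on synthetic data instead of the horse-colic files; `grad_nll`, `nll`, `fit_gd`, `accuracy`, the data, and the hyperparameters are all hypothetical choices for illustration:

```python
import numpy as np


def grad_nll(X, y, w):
    """Vectorized gradient of the negative log-likelihood: X^T (sigmoid(Xw) - y)."""
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    return X.T @ (p - y)


def nll(X, y, w):
    """Negative log-likelihood, used here only to check the gradient numerically."""
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    return -np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))


def fit_gd(X, y, learning_rate=0.01, steps=500):
    """Plain batch gradient descent; X is assumed to already contain the bias column of 1s."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w -= learning_rate * grad_nll(X, y, w)
    return w


def accuracy(X, y, w):
    """Fraction of rows where (sigmoid(Xw) > 0.5) matches the 0/1 label."""
    pred = (1.0 / (1.0 + np.exp(-(X @ w)))) > 0.5
    return np.mean(pred == (y == 1))


if __name__ == '__main__':
    rng = np.random.default_rng(0)
    features = rng.normal(size=(200, 3))
    true_w = np.array([0.5, 2.0, -1.0, 1.5])  # made-up weights for the synthetic labels
    X = np.column_stack((np.ones(len(features)), features))
    y = (rng.random(200) < 1.0 / (1.0 + np.exp(-(X @ true_w)))).astype(float)
    w = fit_gd(X, y)
    print('training accuracy:', accuracy(X, y, w))
```

With matrix products, each step costs two `@` operations instead of a Python-level loop over every (weight, row) pair, which is usually much faster in NumPy.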

```python
# Version using the sklearn package
def sklearn_logistics():
    train_x, train_y = load_data('horse_colic/horseColicTraining.txt')
    test_x, test_y = load_data('horse_colic/horseColicTest.txt')
    # liblinear solver with the default L2 penalty; the remaining arguments of the original
    # call were defaults or (multi_class='ovr') have no effect on a binary label and are
    # deprecated in recent scikit-learn releases
    clf = LogisticRegression(C=1.0, solver='liblinear', max_iter=100, tol=0.0001)
    clf.fit(train_x, train_y)
    print('sklearn accuracy:', clf.score(test_x, test_y))
```
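To compare the two versions, it helps to know how a binary scikit-learn `LogisticRegression` turns its fit into predictions: `decision_function` is the linear score `X @ coef_.ravel() + intercept_`, `predict_proba(X)[:, 1]` is the sigmoid of that score, and `predict` returns class 1 when the score is positive, i.e. when the probability exceeds 0.5, the same rule as the hand-written loop. A small check on synthetic data from `make_classification` (an assumption, not the horse-colic files):

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary data standing in for the horse-colic files
X, y = make_classification(n_samples=300, n_features=5, random_state=0)
clf = LogisticRegression(C=1.0, solver='liblinear')
clf.fit(X, y)

# decision_function is the linear score w.x + b (the w.T @ x of the hand-written version)
scores = clf.decision_function(X)
manual_scores = X @ clf.coef_.ravel() + clf.intercept_[0]

# for a binary problem, predict_proba[:, 1] is the sigmoid of that score
proba = clf.predict_proba(X)[:, 1]
sigmoid_scores = 1.0 / (1.0 + np.exp(-scores))

# predict() returns class 1 exactly when the probability is above 0.5
thresholded = (proba > 0.5).astype(int)

print(np.allclose(scores, manual_scores),
      np.allclose(proba, sigmoid_scores),
      np.array_equal(clf.predict(X), thresholded))
```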

```python
if __name__ == '__main__':
    print('Please wait...')
    logistics()
    sklearn_logistics()
```

The results are not very good!!