For installing Kafka, see the post linked here; skip this step if you already have a Kafka environment: https://blog.51cto.com/mapengfei/1926065

Create a package, com.mafei.apitest, and add a new Scala object to it:

package com.mafei.apitest

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.api.scala.createTypeInformation

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011

//获取传感器数据

case class SensorReading(id: String,timestamp: Long, temperature: Double)

object SourceTest {

def main(args: Array[String]): Unit = {

//创建执行环境

val env = StreamExecutionEnvironment.getExecutionEnvironment

// 1、从集合中读取数据

val dataList = List(

SensorReading("sensor1",1603766281,41),

SensorReading("sensor2",1603766282,42),

SensorReading("sensor3",1603766283,43),

SensorReading("sensor4",1603766284,44)

)

// val stream1 = env.fromCollection(dataList)

//

// stream1.print()

// val stream2= env.readTextFile("/opt/java2020_study/maven/flink1/src/main/resources/sensor.txt")

//

// stream2.print()

// 3、从kafka中读取数据

val properties = new Properties()

properties.setProperty("bootstrap.servers", "127.0.0.1:9092")

properties.setProperty("group.id", "consumer-group")

properties.setProperty("auto.offset.reset", "latest")

val streamKafka = env.addSource(new FlinkKafkaConsumer011[String]("sensor",new SimpleStringSchema(), properties))

streamKafka.print()

//执行

env.execute(" source test")

}

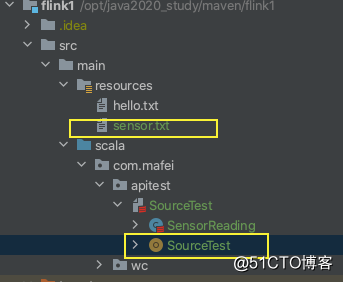

}代码结构图:
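The three consumer properties set in the code can be gathered into a small helper. This is only a sketch: KafkaProps and consumerProps are hypothetical names, not part of Flink or Kafka. It also checks auto.offset.reset against the values the Kafka consumer accepts. With latest, a group that has no committed offset starts from new records; with earliest, it starts from the beginning of the topic.

```scala
import java.util.Properties

object KafkaProps {
  // Values the Kafka consumer accepts for auto.offset.reset
  private val validResets = Set("latest", "earliest", "none")

  // Hypothetical helper: builds the same three properties used in SourceTest
  def consumerProps(bootstrapServers: String,
                    groupId: String,
                    offsetReset: String = "latest"): Properties = {
    require(validResets.contains(offsetReset), s"unsupported auto.offset.reset: $offsetReset")
    val props = new Properties()
    props.setProperty("bootstrap.servers", bootstrapServers)
    props.setProperty("group.id", groupId)
    props.setProperty("auto.offset.reset", offsetReset)
    props
  }
}
```

Calling KafkaProps.consumerProps("127.0.0.1:9092", "consumer-group") should give a Properties object equivalent to the one built by hand in the code.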

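Output when the code runs (the 3> prefix is the index of the parallel subtask that printed the record; the message was typed into the producer and means "this was read from Kafka"):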

3> 这是从kafka取出来的
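The Kafka source delivers each record as a plain String, so turning it into a SensorReading needs a parsing step. The sketch below assumes each line has the form id,timestamp,temperature; the post does not state the format, so treat that as an assumption. SensorParser is a hypothetical name, and the case class is repeated only to keep the example self-contained.

```scala
import scala.util.Try

// Same shape as the case class used in SourceTest
case class SensorReading(id: String, timestamp: Long, temperature: Double)

object SensorParser {
  // Assumed line format (not stated in the post): id,timestamp,temperature
  // Returns None for lines that do not match, such as free-form text.
  def parse(line: String): Option[SensorReading] =
    line.split(",").map(_.trim) match {
      case Array(id, ts, temp) if id.nonEmpty =>
        Try(SensorReading(id, ts.toLong, temp.toDouble)).toOption
      case _ => None
    }
}
```

Returning an Option rather than throwing means a free-form message like the one above is simply skipped; in the job, the parser could be applied to streamKafka with a flatMap so that unparseable lines are dropped.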

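Producer side (once it starts you get an empty console; type anything and press Enter to send it):

# Start a producer against the Kafka broker at 127.0.0.1:9092, publishing to the sensor topic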

/opt/kafka_2.11-0.10.2.0/bin/kafka-console-producer.sh --broker-list 127.0.0.1:9092 --topic sensor
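Instead of typing messages by hand, test lines can be generated and piped into the console producer, which reads records from standard input. The sketch below is a minimal example; SampleReadings is a hypothetical name, and the id,timestamp,temperature line format is an assumption modeled on the SensorReading fields, not something the post specifies.

```scala
object SampleReadings {
  // Builds n lines in the assumed id,timestamp,temperature format,
  // mirroring the sample values in dataList (sensor1 at 41 degrees, and so on)
  def lines(n: Int, startTimestamp: Long = 1603766281L): Seq[String] =
    (1 to n).map { i =>
      val id = s"sensor$i"
      val ts = startTimestamp + (i - 1)
      val temp = 40.0 + i
      s"$id,$ts,$temp"
    }

  // Prints four sample lines to standard output, one per line
  def main(args: Array[String]): Unit =
    lines(4).foreach(println)
}
```

Piping the program's output into the kafka-console-producer.sh command above should publish one record per printed line to the sensor topic.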

To verify that messages are arriving, you can consume them from the command line:

/opt/kafka_2.11-0.10.2.0/bin/kafka-console-consumer.sh --topic sensor --bootstrap-server 127.0.0.1:9092 --consumer.config /opt/kafka_2.11-0.10.2.0/config/consumer.properties