3. C++ deployment: writing the Dockerfile

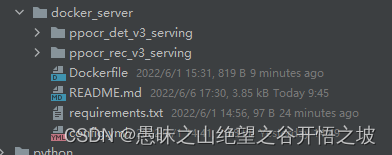

1. Deploying the server

1.1. Directory layout

1.2. Dockerfile

FROM registry.baidubce.com/paddlepaddle/serving:0.9.0-cuda10.1-cudnn7-devel

COPY . /deploy

WORKDIR /deploy

# Install requirements
RUN pip config set global.index-url https://mirror.baidu.com/pypi/simple \
    && python3.7 -m pip install --upgrade setuptools \
    && python3.7 -m pip install --upgrade pip \
    && python3.7 -m pip install paddlepaddle-gpu==2.3.0.post101 \
        -f https://www.paddlepaddle.org.cn/whl/linux/mkl/avx/stable.html \
    && pip3.7 install -r requirements.txt

ENTRYPOINT python3.7 -m paddle_serving_server.serve \
    --model ppocr_det_v3_serving ppocr_rec_v3_serving \
    --op GeneralDetectionOp GeneralInferOp --port 9293 --gpu_ids 0

1.3. requirements.txt

paddle-serving-client==0.9.0
paddle-serving-app==0.9.0
paddle-serving-server-gpu==0.9.0.post101

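1.4. config.yml

# RPC port. rpc_port and http_port must not both be empty. When rpc_port is empty and http_port is set, rpc_port is automatically set to http_port + 1.
rpc_port: 18091

# HTTP port. rpc_port and http_port must not both be empty. When rpc_port is set and http_port is empty, no http_port is generated.
http_port: 9998

# worker_num: maximum concurrency. When build_dag_each_worker=True, the framework creates worker_num processes, each of which builds its own gRPC server and DAG.
# When build_dag_each_worker=False, the framework sets max_workers=worker_num on the main thread's gRPC thread pool.
worker_num: 10

# build_dag_each_worker: False, the framework builds a single DAG inside the process; True, the framework builds multiple independent DAGs, one inside each process.
build_dag_each_worker: False

dag:
    # Op resource type: True for the thread model, False for the process model.
    is_thread_op: False

    # Number of retries.
    retry: 10

    # Profiling: True generates Timeline performance data, at some cost to performance; False disables it.
    use_profile: True

    tracer:
        interval_s: 10

op:
    det:
        # Concurrency: thread concurrency when is_thread_op=True, process concurrency otherwise.
        concurrency: 2

        # When the op has no server_endpoints, the local service configuration is read from local_service_conf.
        local_service_conf:
            # Client type: brpc, grpc or local_predictor. local_predictor does not start a Serving service; it predicts in-process.
            client_type: local_predictor

            # Path of the det model.
            model_config: ./ppocr_det_v3_serving

            # Fetch list, named after the alias_name of fetch_var in client_config; when unset, all output variables are fetched.
            #fetch_list: ["sigmoid_0.tmp_0"]

            # Compute device IDs: "" or omitted means CPU prediction; "0" or "0,1,2" means GPU prediction on the listed cards.
            devices: "0"

            ir_optim: True

    rec:
        # Concurrency: thread concurrency when is_thread_op=True, process concurrency otherwise.
        concurrency: 1

        # Timeout, in ms.
        timeout: -1

        # Number of retries when talking to Serving; no retry by default.
        retry: 1

        # When the op has no server_endpoints, the local service configuration is read from local_service_conf.
        local_service_conf:
            # Client type: brpc, grpc or local_predictor. local_predictor does not start a Serving service; it predicts in-process.
            client_type: local_predictor

            # Path of the rec model.
            model_config: ./ppocr_rec_v3_serving

            # Fetch list, named after the alias_name of fetch_var in client_config; when unset, all output variables are fetched.
            #fetch_list:

            # Compute device IDs: "" or omitted means CPU prediction; "0" or "0,1,2" means GPU prediction on the listed cards.
            devices: "0"

            ir_optim: True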
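The port rule and the devices rule stated in the config.yml comments can be written out as two small functions. This is only a sketch of the rules as the comments describe them, not Paddle Serving's own code; the names `resolve_ports` and `parse_devices` are hypothetical.

```python
from typing import List, Optional, Tuple


def resolve_ports(rpc_port: Optional[int],
                  http_port: Optional[int]) -> Tuple[Optional[int], Optional[int]]:
    """Apply the rpc_port/http_port rule described in the config.yml comments."""
    if rpc_port is None and http_port is None:
        raise ValueError("rpc_port and http_port must not both be empty")
    if rpc_port is None:
        # Only http_port is set: rpc_port is derived as http_port + 1.
        return http_port + 1, http_port
    # rpc_port is set: http_port is kept as given and is not generated if empty.
    return rpc_port, http_port


def parse_devices(devices: Optional[str]) -> List[int]:
    """Return the GPU card IDs named by `devices`; an empty list means CPU."""
    if not devices:
        return []
    return [int(card) for card in devices.split(",") if card.strip()]


if __name__ == "__main__":
    print(resolve_ports(18091, 9998))  # the values used in config.yml
    print(resolve_ports(None, 9998))
    print(parse_devices("0,1,2"))
```

With the values from config.yml both ports are already set, so nothing is derived; the second call shows the case where only http_port is given.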

1.5. Commands for building and running the image

nvidia-docker build -t xx/ocr-server-qa:1.0.0.0630 .
nvidia-docker run -p 9293:9293 --name ocr-server-qa -d xx/ocr-server-qa:1.0.0.0630
nvidia-docker exec -it ocr-server-qa /bin/bash
docker logs -f ocr-server-qa

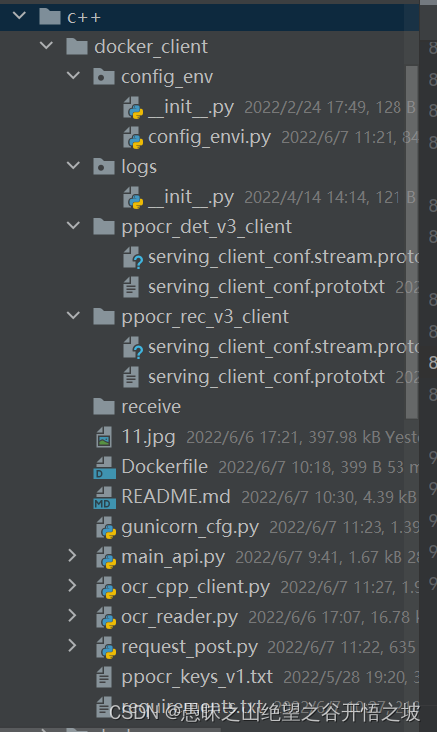
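Besides reading the container logs, one way to check that the server has come up is to poll the published port until it accepts a TCP connection. The helper below is a minimal sketch using only the standard library; `wait_for_port` is a hypothetical name, and an open port only shows that something is listening, not that the models loaded correctly.

```python
import socket
import time


def wait_for_port(host: str, port: int,
                  timeout_s: float = 30.0, interval_s: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)


if __name__ == "__main__":
    # 9293 is the port published with `-p 9293:9293`; the host is an assumption.
    print(wait_for_port("127.0.0.1", 9293, timeout_s=3.0))
```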

2. Deploying the client

2.1. Directory layout

2.2. Dockerfile

FROM registry.baidubce.com/paddlepaddle/serving:0.9.0-cuda10.1-cudnn7-devel

COPY . /deploy

WORKDIR /deploy

RUN pip config set global.index-url https://mirror.baidu.com/pypi/simple \
    && pip3.7 install --upgrade setuptools \
    && pip3.7 install --upgrade pip \
    && pip3.7 install -r requirements.txt

EXPOSE 9539

ENTRYPOINT ["gunicorn", "-c", "gunicorn_cfg.py", "main_api:app"]

2.3. requirements.txt

paddle-serving-client==0.9.0
paddle-serving-app==0.9.0
paddle-serving-server-gpu==0.9.0.post101
Flask-RESTful==0.3.9
Flask==1.1.4
flask_cors==3.0.10
prometheus-flask-exporter==0.18.7
gunicorn==20.1.0
gevent==21.12.0

2.4. gunicorn configuration (gunicorn_cfg.py)

# import multiprocessing
from config_env.config_envi import common

bind = "0.0.0.0:%s" % common['model_ip_port_client'].split(':')[1]  # IP address and port to listen on
# workers = multiprocessing.cpu_count() * 2  # number of worker processes; the usual recommendation is CPU count * 2 + 1
workers = 1
threads = 8
# worker_class = "gevent"  # sync, gevent, meinheld: worker mode; the default is sync, gevent is asynchronous
# backlog = 2048  # maximum number of pending connections waiting to be served (2048 is gunicorn's default)
# max_requests = 1000  # number of requests a worker handles before it is restarted
timeout = 600  # a worker that stays silent for this many seconds is killed and restarted
# graceful_timeout = 30
daemon = False  # whether to run in the background
reload = False  # restart workers automatically when the code changes; intended for development
capture_output = True  # redirect stdout/stderr to the error log file
loglevel = "info"  # debug, info, warning, error, critical
pidfile = "logs/gunicore.pid"  # file that stores the process ID
accesslog = "logs/gunicorn_http.log"  # access log
access_log_format = '%(h)s %(l)s %(u)s %(t)s "%(r)s" %(s)s %(b)s "%(f)s" "%(a)s" %(T)s %(p)s'
errorlog = "logs/gunicorn_info.log"  # error log
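The `bind` line takes the port from a "host:port" string with `split(':')[1]`, which raises an IndexError if the port is missing. A slightly more defensive version might look like the sketch below; `bind_address` is a hypothetical helper, and 'xx' is the placeholder host from config_envi.py.

```python
def bind_address(ip_port: str, listen_host: str = "0.0.0.0") -> str:
    """Build a gunicorn `bind` value from a "host:port" string."""
    host, sep, port = ip_port.rpartition(":")
    if not sep or not port.isdigit():
        raise ValueError("expected 'host:port', got %r" % ip_port)
    # Only the port is reused; the service listens on listen_host.
    return "%s:%s" % (listen_host, port)


if __name__ == "__main__":
    print(bind_address("xx:9539"))
```

The listening port then always follows `model_ip_port_client`, so it has to match the port published when the container is started.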

2.5. config_envi.py configuration

# -*- coding: utf-8 -*-
select_env = 'qa'

if select_env == 'prd':
    # model
    model_ip_port_server = 'xx:9293'
    model_ip_port_client = 'xx:9539'
elif select_env == 'pre':
    # model
    model_ip_port_server = 'xx:9293'
    model_ip_port_client = 'xx:9539'
elif select_env == 'qa':
    # model
    model_ip_port_server = 'xx:9293'
    model_ip_port_client = 'xx:9539'
elif select_env == 'dev':
    # model
    model_ip_port_server = 'xx:9293'
    model_ip_port_client = 'xx:9539'

common = {
    # model
    'model_ip_port_server': model_ip_port_server,
    'model_ip_port_client': model_ip_port_client
}
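The same per-environment lookup can also be expressed as a table, which fails with a clear message when `select_env` is misspelled (the if/elif chain would instead raise a NameError when `common` is built). This is a sketch, not the author's module; `ENDPOINTS` and `load_common` are hypothetical names, and 'xx' stays a placeholder host as in the original.

```python
# Endpoints per environment; 'xx' is the placeholder host used in the original file.
ENDPOINTS = {
    "prd": {"model_ip_port_server": "xx:9293", "model_ip_port_client": "xx:9539"},
    "pre": {"model_ip_port_server": "xx:9293", "model_ip_port_client": "xx:9539"},
    "qa": {"model_ip_port_server": "xx:9293", "model_ip_port_client": "xx:9539"},
    "dev": {"model_ip_port_server": "xx:9293", "model_ip_port_client": "xx:9539"},
}


def load_common(select_env: str) -> dict:
    """Return a copy of the endpoint settings for one environment."""
    try:
        return dict(ENDPOINTS[select_env])
    except KeyError:
        raise ValueError("unknown environment: %r" % select_env) from None


if __name__ == "__main__":
    common = load_common("qa")
    print(common["model_ip_port_server"])
```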

2.6. ocr_cpp_client.py

# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# pylint: disable=doc-string-missing

import base64

from paddle_serving_client import Client

from ocr_reader import OCRReader
from config_env.config_envi import common


class ImageOCR(object):
    def __init__(self):
        self.client = Client()
        # TODO:load_client need to load more than one client model.
        # this need to figure out some details.
        self.client.load_client_config(['ppocr_det_v3_client', 'ppocr_rec_v3_client'])
        self.client.connect([common['model_ip_port_server']])
        self.ocr_reader = OCRReader(char_dict_path="./ppocr_keys_v1.txt")

    def cv2_to_base64(self, image):
        return base64.b64encode(image).decode('utf8')  # data.tostring()).decode('utf8')

    def ocr_image(self, test_img_path):
        with open(test_img_path, 'rb') as file:
            image_data = file.read()
        image = self.cv2_to_base64(image_data)
        res_list = []
        fetch_map = self.client.predict(feed={"x": image}, fetch=[], batch=True)
        one_batch_res = self.ocr_reader.postprocess(fetch_map, with_score=True)
        for res in one_batch_res:
            res_list.append(res[0])
        res = ''.join(res_list)
        return res


if __name__ == '__main__':
    image_ocr = ImageOCR()
    test_img_path_ = "./11.jpg"
    print(image_ocr.ocr_image(test_img_path_))
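Despite its name, `cv2_to_base64` works on the raw bytes of the image file, not on a decoded OpenCV array: it base64-encodes the bytes and returns them as text, and that text is what gets sent as the "x" feed. The round trip can be tried without a server; the function names and the payload below are made up for illustration.

```python
import base64


def bytes_to_base64(data: bytes) -> str:
    """Encode raw file bytes as base64 text, as cv2_to_base64 does."""
    return base64.b64encode(data).decode("utf8")


def base64_to_bytes(text: str) -> bytes:
    """Inverse of bytes_to_base64."""
    return base64.b64decode(text.encode("utf8"))


if __name__ == "__main__":
    payload = b"\xff\xd8\xff\xe0 not a real image"  # stand-in for file.read()
    encoded = bytes_to_base64(payload)
    print(encoded)
    print(base64_to_bytes(encoded) == payload)
```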

2.7. ocr_reader.py

Reference: the official code.

2.8. Main entry point: main_api.py

from flask import Flask
from flask_restful import reqparse, Api, Resource
from flask_cors import CORS
from werkzeug.datastructures import FileStorage
from prometheus_flask_exporter import PrometheusMetrics

from ocr_cpp_client import ImageOCR

app = Flask(__name__)
app.config["JSON_AS_ASCII"] = False
app.config["RESTFUL_JSON"] = {'ensure_ascii': False}
CORS(app, supports_credentials=True)
api = Api(app)
metrics = PrometheusMetrics(app)

Todos = {
    'task': 'ocr',
}

parser = reqparse.RequestParser()
parser.add_argument(
    'file',
    required=True,  # post() dereferences args['file'], so a missing upload must be rejected here
    type=FileStorage,
    location='files',
    help="file is wrong.")

# Todo
# shows a single todo item and lets you delete a todo item
image_ocr = ImageOCR()


class OCRImg(Resource):
    """
    pass
    """

    def get(self):
        return {'task': Todos.get('task')}

    def post(self):
        args = parser.parse_args()
        # Save the upload under ./receive (the directory must exist), keeping the original extension.
        file_path = './receive/file.' + args['file'].filename.split('.')[-1]
        args['file'].save(file_path)
        results = image_ocr.ocr_image(file_path)
        return results, 200


# Actually setup the Api resource routing here
api.add_resource(OCRImg, '/nlp/v1/%s/ocr_res' % Todos.get('task'))

if __name__ == '__main__':
    app.run(debug=False, host='0.0.0.0', port=5000)
    # server = pywsgi.WSGIServer(('0.0.0.0', 5000), app, log=LogWrite())  # the logging module slows down communication; logging is done on the nginx side
    # server = pywsgi.WSGIServer(('0.0.0.0', 5000), app)
    # server.serve_forever()
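The handler saves every upload as ./receive/file.<ext>, and the gunicorn configuration runs 8 threads, so two simultaneous requests with the same extension may overwrite each other's file. One possible way around this is to give each upload its own name. The helper below is a sketch with a hypothetical name, `unique_upload_path`; it is not part of the original service.

```python
import os
import tempfile
import uuid


def unique_upload_path(filename: str, upload_dir: str = "./receive") -> str:
    """Return a collision-free path in upload_dir that keeps the file extension."""
    os.makedirs(upload_dir, exist_ok=True)  # create the directory on first use
    extension = os.path.splitext(filename)[1].lower()  # e.g. '.jpg', or '' if none
    return os.path.join(upload_dir, uuid.uuid4().hex + extension)


if __name__ == "__main__":
    # A temporary directory stands in for ./receive in this demo.
    demo_dir = tempfile.mkdtemp()
    print(unique_upload_path("11.jpg", demo_dir))
    print(unique_upload_path("11.jpg", demo_dir))
```

In `post()` this would replace the fixed `file_path`; the saved file could then be deleted after `ocr_image` returns so the directory does not grow.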

2.9. External caller: request_post.py

# -*- coding: utf-8 -*-
import os

import requests

from config_env.config_envi import common


def ocr_Paddle(root, name):
    # root = 'E:\\png'
    # name = '2.png'
    file = os.path.join(root, name)
    headers = {'File-Name': name}
    url = "http://%s/nlp/v1/ocr/ocr_res" % common['model_ip_port_client']
    # Open the image in a with-block so the handle is closed after the upload.
    with open(file, 'rb') as fh:
        r = requests.post(url, files={'file': (name, fh)}, headers=headers)
    # The service returns JSON, so decode it instead of calling eval() on the response text.
    result = r.json()
    print(result)
    return result


if __name__ == '__main__':
    ocr_Paddle('./', '11.jpg')
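Since the Flask-RESTful endpoint serializes its return value as JSON, the response body can be decoded with the standard json module, which never executes the text it parses. A minimal sketch follows; `decode_response` is a hypothetical helper and the sample bodies are made up.

```python
import json


def decode_response(body: str):
    """Decode a JSON response body, raising ValueError with context on bad input."""
    try:
        return json.loads(body)
    except json.JSONDecodeError as exc:
        raise ValueError("response is not valid JSON: %s" % exc) from exc


if __name__ == "__main__":
    print(decode_response('"recognized text"'))  # a JSON string, like the OCR result
    print(decode_response('{"task": "ocr"}'))    # a JSON object, like the GET reply
```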

2.10. Commands for building and running the image

nvidia-docker build -t xx/ocr-client-qa:1.0.0.0630 .
nvidia-docker run -p 9539:9539 --name ocr-client-qa -d xx/ocr-client-qa:1.0.0.0630
nvidia-docker exec -it ocr-client-qa /bin/bash