上一话

复现Object Detection,网络架构有:

1.SSD: Single Shot MultiBox Detector(√)

2.RetinaNet

3.Faster RCNN

4.YOLO系列

....

代码:

1.复现SSD

1.2anchor(PriorBox)

这里的anchor表示的是SSD原代码中的 PriorBox 也就是 DefaultBox,中文也说 先验框 或者 默认框,用于在特征图(也可以说是在原图上,因为feature map 和原图的关系可以用比例来表示)上构造box,例如图 1(b),(c)在8x8 feature map与4x4 feature map 产生的anchors,8x8 feature map能框住蓝色gt的猫,4x4 feature map则能框住红色gt的狗,从8x8 feature map到4x4 feature map,特征图越来越小,分辨率越来越低,语义信息越丰富。

feature map size

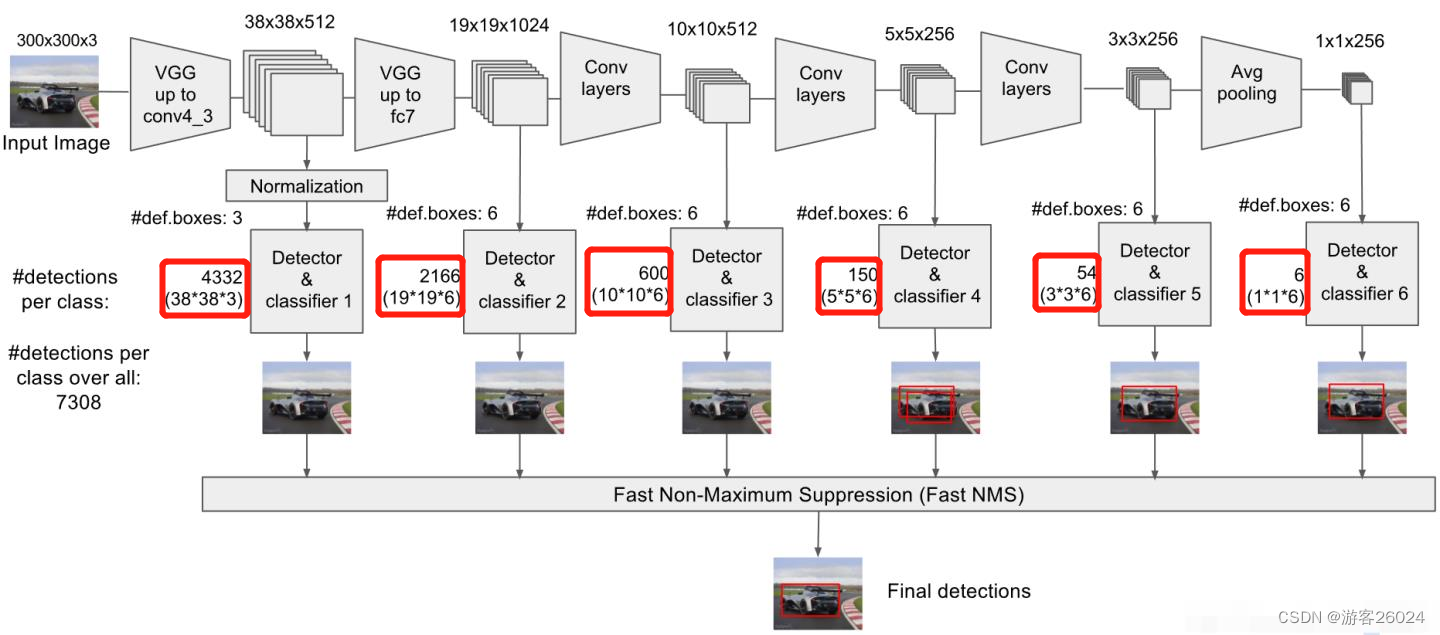

图2 为SSD的网络架构,可以看出conv4_3的feature map size为38x38,conv7的feature map size为19x19,conv8_2的feature map size为10x10,conv9_2的feature map size为5x5,conv10_2的feature map size为3x3,conv11_2的feature map size为1x1.

conv feature map size

conv4_3 38x38

conv7 19x19

conv8_2 10x10

conv9_2 5x5

conv10_2 3x3

conv11_2 1x1

表示 先验框 或者 默认框(anchor)与原图的比例

SSD论文中给出公式

其中 表示特征图个数,一般为5,因为conv4_3的特征图是单独设置

表示先验框 或者 默认框 (anchor)与原图比例的最小值,0.2

表示先验框 或者 默认框 (anchor)与原图比例的最大值,0.9

但是这个 也可以有其他方式设置,比如按照数据集来设置

voc数据集的 ,在代码中表示min_sizes

,在代码中表示max_sizes

coco数据集的 ,在代码中表示min_sizes

,在代码中表示max_sizes

aspect ratios 表示先验框 或者 默认框 (anchor)长宽比 可设置为 ,

其中conv4_3的feature map可设置4个长宽比

conv7的 feature map可设置的6个长宽比

conv8_2的feature map可设置的6个长宽比

conv9_2的feature map可设置的6个长宽比

conv10_2的feature map可设置的4个长宽比

conv11_2的feature map可设置的4个长宽比

所以当aspect ratios为1时不仅有 这个比例,还有

例如voc数据集所设置的anchor长宽比,其中

feature map anchor s_k{aspect ratios} number anchor

38*38 21{1/2,1,2}, sqrt(21*45){1} 38*38*4

19*19 45{1/3,1/2,1,2,3}, sqrt(45*99){1} 19*19*6

10*10 99{1/3,1/2,1,2,3}, sqrt(99*153){1} 10*10*6

5*5 153{1/3,1/2,1,2,3}, sqrt{153*207}{1} 5*5*6

3*3 207{1/2,1,2}, sqrt(207*261){1} 3*3*4

1*1 261{1/2,1,2}. sqrt(261*315){1} 1*1*4

total number anchors: 38*38*4+19*19*6+10*10*6+5*5*6+3*3*4+1*1*4=8732anchor根据长宽比所产生的w与h

当aspect ratios !=1时,w = s_k * sqrt(aspect ratios) , h = s_k / sqrt(aspect ratios) 。

当aspect_ratios == 1时,w,h = s_k, 或者 w,h= sqrt(s_k*s_(k+1))

每组anchor的中心点 , 由上计算

,+0.5是为将其移至中心点,或者可以解释为误差

概述:首先计算f_k(300/step_i),在通过f_k计算每组anchor的中心点cx,cy,计算两个aspect ratios=1时的s_k,再通过s_k计算w,h;接着计算aspect ratios! =1时的s_k,再通过s_k计算w,h。

原代码

class PriorBox(object):

"""Compute priorbox coordinates in center-offset form for each source

feature map.

"""

def __init__(self, cfg):

super(PriorBox, self).__init__()

self.image_size = cfg['min_dim']

# number of priors for feature map location (either 4 or 6)

self.num_priors = len(cfg['aspect_ratios'])

self.variance = cfg['variance'] or [0.1]

self.feature_maps = cfg['feature_maps']

self.min_sizes = cfg['min_sizes']

self.max_sizes = cfg['max_sizes']

self.steps = cfg['steps']

self.aspect_ratios = cfg['aspect_ratios']

self.clip = cfg['clip']

self.version = cfg['name']

for v in self.variance:

if v <= 0:

raise ValueError('Variances must be greater than 0')

def forward(self):

mean = []

for k, f in enumerate(self.feature_maps):

for i, j in product(range(f), repeat=2):

f_k = self.image_size / self.steps[k]

# unit center x,y

cx = (j + 0.5) / f_k

cy = (i + 0.5) / f_k

# aspect_ratio: 1

# rel size: min_size

s_k = self.min_sizes[k]/self.image_size

mean += [cx, cy, s_k, s_k]

# aspect_ratio: 1

# rel size: sqrt(s_k * s_(k+1))

s_k_prime = sqrt(s_k * (self.max_sizes[k]/self.image_size))

mean += [cx, cy, s_k_prime, s_k_prime]

# rest of aspect ratios

for ar in self.aspect_ratios[k]:

mean += [cx, cy, s_k*sqrt(ar), s_k/sqrt(ar)]

mean += [cx, cy, s_k/sqrt(ar), s_k*sqrt(ar)]

# back to torch land

output = torch.Tensor(mean).view(-1, 4)

if self.clip:

output.clamp_(max=1, min=0)

return output

if __name__ == "__main__":

# SSD300 CONFIGS

voc = {

'num_classes': 21,

'lr_steps': (80000, 100000, 120000),

'max_iter': 120000,

'feature_maps': [38, 19, 10, 5, 3, 1],

'img_size': 300,

'steps': [8, 16, 32, 64, 100, 300],

'min_sizes': [30, 60, 111, 162, 213, 264],

'max_sizes': [60, 111, 162, 213, 264, 315],

'aspect_ratios': [[2], [2, 3], [2, 3], [2, 3], [2], [2]],

'variance': [0.1, 0.2],

'clip': True,

'name': 'VOC',

}

box = PriorBox(voc)

print('box.shape:', box().shape)

print('box:\n',box())结果

box.shape: torch.Size([8732, 4])

box:

tensor([[0.0133, 0.0133, 0.1000, 0.1000],

[0.0133, 0.0133, 0.1414, 0.1414],

[0.0133, 0.0133, 0.1414, 0.0707],

...,

[0.5000, 0.5000, 0.9612, 0.9612],

[0.5000, 0.5000, 1.0000, 0.6223],

[0.5000, 0.5000, 0.6223, 1.0000]])修改后的代码

import torch

from torch import nn

from math import sqrt as sqrt

import torch.nn.functional as F

from torch.cuda.amp import autocast

from itertools import product as product

class SSDAnchors(nn.Module):

"""

anchors: 38x38x4+19x19x6+10x10x6+5x5x6+3x3x4+1x1x4=8732

"""

def __init__(self, img_size=300, feature_maps=[38, 19, 10, 5, 3, 1],

steps=[8, 16, 32, 64, 100, 300],

aspect_ratios=[[2], [2, 3], [2, 3], [2, 3], [2], [2]],

clip=True, variance=[0.1, 0.2], version='VOC',

min_sizes=[30, 60, 111, 162, 213, 264],

max_sizes=[60, 111, 162, 213, 264, 315],

):

super(SSDAnchors, self).__init__()

if img_size == 300:

self.img_size = img_size

else:

self.img_size = 300

if feature_maps == [38, 19, 10, 5, 3, 1]:

self.feature_maps = feature_maps

else:

self.feature_maps = [38, 19, 10, 5, 3, 1]

if steps == [8, 16, 32, 64, 100, 300]:

self.steps = steps

else:

self.steps = [8, 16, 32, 64, 100, 300]

if aspect_ratios == [[2], [2, 3], [2, 3], [2, 3], [2], [2]]:

self.aspect_ratios = aspect_ratios

else:

self.aspect_ratios = [[2], [2, 3], [2, 3], [2, 3], [2], [2]]

if clip == True:

self.clip = clip

else:

self.clip = True

if variance == [0.1, 0.2]:

self.variance = variance

else:

self.variance = [0.1, 0.2]

if version == 'VOC':

self.version = version

if min_sizes == [30, 60, 111, 162, 213, 264]:

self.min_sizes = min_sizes

else:

self.min_sizes = [30, 60, 111, 162, 213, 264]

if max_sizes == [60, 111, 162, 213, 264, 315]:

self.max_sizes = max_sizes

else:

self.max_sizes = [60, 111, 162, 213, 264, 315]

elif version == 'COCO':

self.version = version

if min_sizes == [21, 45, 99, 153, 207, 261]:

self.min_sizes = min_sizes

else:

self.min_sizes = [21, 45, 99, 153, 207, 261]

if max_sizes == [45, 99, 153, 207, 261, 315]:

self.max_sizes = max_sizes

else:

self.max_sizes = [45, 99, 153, 207, 261, 315]

else:

raise ValueError("Dataset type is error!")

@autocast()

def forward(self):

mean = []

for k, f in enumerate(self.feature_maps):

for i, j in product(range(f), repeat=2):

# feature_map of k-th

f_k = self.img_size / self.steps[k]

# center

cx = (i + 0.5) / f_k

cy = (j + 0.5) / f_k

# r==1, size = s_k

s_k = self.min_sizes[k] / self.img_size

mean += [cx, cy, s_k, s_k]

# r==1, size = sqrt(s_k * s_(k+1))

s_k_plus = self.max_sizes[k] / self.img_size

s_k_prime = sqrt(s_k * s_k_plus)

mean += [cx, cy, s_k_prime, s_k_prime]

# ration != 1

for r in self.aspect_ratios[k]:

mean += [cx, cy, s_k * sqrt(r), s_k / sqrt(r)]

mean += [cx, cy, s_k / sqrt(r), s_k * sqrt(r)]

# torch

anchors = torch.tensor(mean).view(-1, 4)

# norm [0,1]

if self.clip:

anchors.clamp_(max=1, min=0)

# anchor boxes

return anchors

if __name__ == "__main__":

# voc

anchors1 = SSDAnchors(version='VOC')

print(anchors1.min_sizes)

print(anchors1().shape)

print(anchors1())

# coco

anchor2 = SSDAnchors(version='COCO')

print(anchor2.min_sizes)

print(anchor2().shape)

print(anchor2())修改后的结果

# voc

[30, 60, 111, 162, 213, 264]

torch.Size([8732, 4])

tensor([[0.0133, 0.0133, 0.1000, 0.1000],

[0.0133, 0.0133, 0.1414, 0.1414],

[0.0133, 0.0133, 0.1414, 0.0707],

...,

[0.5000, 0.5000, 0.9612, 0.9612],

[0.5000, 0.5000, 1.0000, 0.6223],

[0.5000, 0.5000, 0.6223, 1.0000]])

# coco

[21, 45, 99, 153, 207, 261]

torch.Size([8732, 4])

tensor([[0.0133, 0.0133, 0.0700, 0.0700],

[0.0133, 0.0133, 0.1025, 0.1025],

[0.0133, 0.0133, 0.0990, 0.0495],

...,

[0.5000, 0.5000, 0.9558, 0.9558],

[0.5000, 0.5000, 1.0000, 0.6152],

[0.5000, 0.5000, 0.6152, 1.0000]])

Process finished with exit code 0注意:

此代码生成的anchor,产生的中心点的坐标是在0-1之间归一化的(cx, cy, w, h)。若要投射到300x300的img上,需要将其乘以300。

未完...