Install Scrapy: open cmd and run pip install scrapy.

Create the project

scrapy startproject tencent

Create the spider

scrapy genspider hr tencent.com

items.py explained

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class PachongItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    pass

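Field() is a subclass of dict, so it naturally holds {key: value} pairs (the metadata for that field). The Item itself is also read and written like a dictionary.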

middlewares.py

This file holds the downloader middleware and the spider middleware, wrapped in two classes:

class PachongSpiderMiddleware:  # spider middleware
    pass

class PachongDownloaderMiddleware:  # downloader middleware
    pass
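To make the role of a downloader middleware more concrete, here is a small sketch of one that sets a User-Agent header on every outgoing request. The class name `RandomUserAgentMiddleware` and the agent strings are hypothetical; the only Scrapy convention relied on is that a downloader middleware may define `process_request(self, request, spider)` and return `None` to let the request continue.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that picks a User-Agent per request."""

    # Illustrative values only; replace them with the agents you actually need.
    USER_AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
        "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/2.0",
    ]

    def process_request(self, request, spider):
        # Scrapy calls this hook for every request that passes through the downloader.
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        # Returning None tells Scrapy to keep processing the request as usual.
        return None
```

Such a class only takes effect once it is listed in DOWNLOADER_MIDDLEWARES in settings.py, for example under the assumed path 'pachong.middlewares.RandomUserAgentMiddleware'.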

pipelines.py

# Define your item pipelines here

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

class PachongPipeline:
    def process_item(self, item, spider):
        return item

If you want the pipeline to run, don't forget to enable it in the ITEM_PIPELINES setting in settings.py:

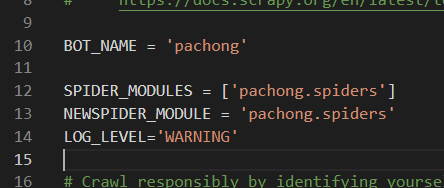
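A pipeline that does a little more than pass the item through might look like the sketch below, together with the setting that switches it on. `CleanNamePipeline` and the `name` field are assumptions for illustration, and the module path assumes the project is called pachong.

```python
class CleanNamePipeline:
    """Hypothetical pipeline that tidies the 'name' field of each item."""

    def process_item(self, item, spider):
        # Items behave like dicts, so plain key access works here.
        name = item.get("name")
        if isinstance(name, str):
            item["name"] = name.strip()
        # Always return the item so that later pipelines receive it.
        return item


# In settings.py (assumed project name: pachong). Lower numbers run first.
ITEM_PIPELINES = {
    "pachong.pipelines.CleanNamePipeline": 300,
}
```

If the class is not listed in ITEM_PIPELINES, Scrapy never calls it; an empty "Enabled item pipelines" list in the log is the sign of that.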

settings.py

Now for the last file. Here is a brief tour of it:

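# Scrapy project (bot) name
BOT_NAME = 'pachong'  # the name of the project I created

SPIDER_MODULES = ['pachong.spiders']  # my spiders live in the pachong.spiders package
NEWSPIDER_MODULE = 'pachong.spiders'

# Whether to obey robots.txt
ROBOTSTXT_OBEY = True  # robots.txt rules; comment this out or set it to False to ignore them

# Maximum number of concurrent requests; the default is 16. To change it, uncomment the setting below and adjust the number (32 here)
# Configure maximum concurrent requests performed by Scrapy (default: 16)
CONCURRENT_REQUESTS = 32

# Download delay in seconds. It is commented out by default (no delay); enable it to slow the crawl down if it is too fast
#DOWNLOAD_DELAY = 3

# The next one is fairly important
# Override the default request headers:
# These are the request headers; add the headers that the target site expects
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
}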

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
SPIDER_MIDDLEWARES = {
    'pachong.middlewares.PachongSpiderMiddleware': 543,
}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'pachong.middlewares.PachongDownloaderMiddleware': 543,
}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
#ITEM_PIPELINES = {
#    'pachong.pipelines.PachongPipeline': 300,
#}
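If the target site is sensitive to load, the speed-related settings described above could be combined into a slower profile, as in this sketch. The numbers are illustrative assumptions rather than recommendations; all five names are standard Scrapy settings.

```python
# settings.py fragment: a slower, more polite crawl profile (values are illustrative).
ROBOTSTXT_OBEY = True               # keep honouring robots.txt
CONCURRENT_REQUESTS = 8             # fewer parallel requests than the default of 16
CONCURRENT_REQUESTS_PER_DOMAIN = 4  # cap the parallel requests sent to a single domain
DOWNLOAD_DELAY = 1.5                # seconds to wait between requests to the same site
AUTOTHROTTLE_ENABLED = True         # let Scrapy adapt the delay to server response times
```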

Now let's run the program. In the terminal, enter

scrapy crawl hr

We get the following output:

2021-03-24 09:51:05 [scrapy.utils.log] INFO: Scrapy 2.4.1 started (bot: pachong)

09:51:05 ---- start time

Scrapy 2.4.1 ---- Scrapy version

(bot: pachong) ---- project name

2021-03-24 09:51:05 [scrapy.utils.log] INFO: Versions: lxml 4.4.1.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.7.4 (tags/v3.7.4:e09359112e, Jul 8 2019, 20:34:20) [MSC v.1916 64 bit (AMD64)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.2.1, Platform Windows-10-10.0.18362-SP0

lxml 4.4.1.0 ---- lxml version

Twisted 20.3.0 ---- Twisted version

Python 3.7.4 ---- Python version

Windows-10-10.0.18362-SP0 ---- operating system version

2021-03-24 09:51:05 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2021-03-24 09:51:05 [scrapy.crawler] INFO: Overridden settings:

{

'BOT_NAME': 'pachong',

'NEWSPIDER_MODULE': 'pachong.spiders',

'ROBOTSTXT_OBEY': True,

'SPIDER_MODULES': ['pachong.spiders']}

'ROBOTSTXT_OBEY': True, ---- here we can see that robots.txt compliance is set to True

2021-03-24 09:51:05 [scrapy.extensions.telnet] INFO: Telnet Password: 28a8213a0b08cff4

2021-03-24 09:51:05 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2021-03-24 09:51:05 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'pachong.middlewares.PachongDownloaderMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2021-03-24 09:51:05 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'pachong.middlewares.PachongSpiderMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2021-03-24 09:51:05 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2021-03-24 09:51:05 [scrapy.core.engine] INFO: Spider opened

Spider opened ---- the spider has been opened; from here on Scrapy starts running our spider

2021-03-24 09:51:05 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2021-03-24 09:51:05 [hr] INFO: Spider opened: hr

2021-03-24 09:51:05 [hr] INFO: Spider opened: hr

2021-03-24 09:51:05 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2021-03-24 09:51:06 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (302) to <GET https://tencent.com/robots.txt> from <GET http://tencent.com/robots.txt>

Redirecting (302) ---- the request was redirected (here from http to https); this is not a failure

2021-03-24 09:51:06 [scrapy.core.engine] DEBUG: Crawled (404) <GET https://tencent.com/robots.txt> (referer: None)

Crawled (404) ---- the request failed: the site has no robots.txt at this address

2021-03-24 09:51:06 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (302) to <GET https://tencent.com/> from <GET http://tencent.com/>

DEBUG: Redirecting (302) ---- redirected again, from http to https

2021-03-24 09:51:06 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://tencent.com/> (referer: None)

DEBUG: Crawled (200) ---- the request succeeded

2021-03-24 09:51:07 [scrapy.core.engine] INFO: Closing spider (finished)

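Closing spider (finished) ---- the spider ends here.

The request for the site's robots.txt returned 404, while the request for the main page succeeded. We will change the crawler settings next; skip the stats dump below for now and read on.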

2021-03-24 09:51:07 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{

'downloader/request_bytes': 864,

'downloader/request_count': 4,

'downloader/request_method_count/GET': 4,

'downloader/response_bytes': 1185,

'downloader/response_count': 4,

'downloader/response_status_count/200': 1,

'downloader/response_status_count/302': 2,

'downloader/response_status_count/404': 1,

'elapsed_time_seconds': 1.168874,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2021, 3, 24, 1, 51, 7, 78523),

'log_count/DEBUG': 4,

'log_count/INFO': 12,

'response_received_count': 2,

'robotstxt/request_count': 1,

'robotstxt/response_count': 1,

'robotstxt/response_status_count/404': 1,

'scheduler/dequeued': 2,

'scheduler/dequeued/memory': 2,

'scheduler/enqueued': 2,

'scheduler/enqueued/memory': 2,

'start_time': datetime.datetime(2021, 3, 24, 1, 51, 5, 909649)}

2021-03-24 09:51:07 [scrapy.core.engine] INFO: Spider closed (finished)
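The status counters in the stats dump above can be read with a few lines of standard-library Python. The sketch below copies the three counters from that dump and attaches the standard reason phrase to each code; the helper name `summarize_status_counts` is hypothetical.

```python
from http import HTTPStatus

# Status counters copied from the stats dump above.
stats = {
    "downloader/response_status_count/200": 1,
    "downloader/response_status_count/302": 2,
    "downloader/response_status_count/404": 1,
}

PREFIX = "downloader/response_status_count/"


def summarize_status_counts(stats):
    """Map each HTTP status code to (reason phrase, count)."""
    summary = {}
    for key, count in stats.items():
        if key.startswith(PREFIX):
            code = int(key[len(PREFIX):])
            summary[code] = (HTTPStatus(code).phrase, count)
    return summary


for code, (phrase, count) in sorted(summarize_status_counts(stats).items()):
    print(code, phrase, count)
```

The two 302 responses are the http to https redirects, the single 404 is the missing robots.txt, and the 200 is the page itself.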

Let's modify settings.py:

ROBOTSTXT_OBEY = False  # change it to False

Then add the request headers:

DEFAULT_REQUEST_HEADERS = {
    'Connection': 'keep-alive',
    'sec-ch-ua': '"Google Chrome";v="89", "Chromium";v="89", ";Not A Brand";v="99"',
    'sec-ch-ua-mobile': '?0',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.90 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Sec-Fetch-Site': 'same-origin',
    'Sec-Fetch-Mode': 'navigate',
    'Sec-Fetch-User': '?1',
    'Sec-Fetch-Dest': 'document',
    'Referer': 'https://www.douban.com/',
    'Accept-Language': 'zh-CN,zh;q=0.9',
}

2021-03-24 10:28:12 [scrapy.utils.log] INFO: Scrapy 2.4.1 started (bot: pachong)

2021-03-24 10:28:12 [scrapy.utils.log] INFO: Versions: lxml 4.4.1.0, libxml2 2.9.5, cssselect 1.1.0, parsel 1.6.0, w3lib 1.22.0, Twisted 20.3.0, Python 3.7.4 (tags/v3.7.4:e09359112e, Jul 8 2019, 20:34:20) [MSC v.1916 64 bit (AMD64)], pyOpenSSL 19.1.0 (OpenSSL 1.1.1h 22 Sep 2020), cryptography 3.2.1, Platform Windows-10-10.0.18362-SP0

2021-03-24 10:28:12 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.selectreactor.SelectReactor

2021-03-24 10:28:12 [scrapy.crawler] INFO: Overridden settings:

{

'BOT_NAME': 'pachong',

'NEWSPIDER_MODULE': 'pachong.spiders',

'SPIDER_MODULES': ['pachong.spiders']}

2021-03-24 10:28:12 [scrapy.extensions.telnet] INFO: Telnet Password: fc28b3411aa3a1b4

2021-03-24 10:28:12 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2021-03-24 10:28:12 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2021-03-24 10:28:12 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2021-03-24 10:28:12 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2021-03-24 10:28:12 [scrapy.core.engine] INFO: Spider opened

2021-03-24 10:28:12 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2021-03-24 10:28:12 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2021-03-24 10:28:13 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.douban.com/> (referer: https://www.douban.com/)

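DEBUG: Crawled (200) ---- with robots.txt checking turned off, the robots.txt request is gone, and with the request headers added the page can now be fetched.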

****************************************************************************************************

<200 https://www.douban.com/>

****************************************************************************************************

2021-03-24 10:28:13 [scrapy.core.engine] INFO: Closing spider (finished)

2021-03-24 10:28:13 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{

'downloader/request_bytes': 666,

'downloader/request_count': 1,

'downloader/request_method_count/GET': 1,

'downloader/response_bytes': 18450,

'downloader/response_count': 1,

'downloader/response_status_count/200': 1,

'elapsed_time_seconds': 0.872716,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2021, 3, 24, 2, 28, 13, 404170),

'log_count/DEBUG': 1,

'log_count/INFO': 10,

'response_received_count': 1,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2021, 3, 24, 2, 28, 12, 531454)}

2021-03-24 10:28:13 [scrapy.core.engine] INFO: Spider closed (finished)

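Now that the request succeeds, let's turn off the extra logging so the results are easier to read.

Add one line to settings.py:

LOG_LEVEL = 'WARNING'

Run the code again and the INFO and DEBUG log messages are gone, leaving just the data: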

****************************************************************************************************

<200 https://www.douban.com/>

<class 'scrapy.http.response.html.HtmlResponse'>

{'name': '了不起的文明现场——一线考古队长带你探秘历史'}

<class 'str'>

{'name': '人人听得懂用得上的法律课'}

<class 'str'>

{'name': '如何读透一本书——12堂阅读写作训练课'}

<class 'str'>

{'name': '52倍人生——戴锦华大师电影课'}

<class 'str'>

{'name': '我们的女性400年——文学里的女性主义简史'}

<class 'str'>

{'name': '用性别之尺丈量世界——18堂思想课解读女性问题'}

<class 'str'>

{'name': '哲学闪耀时——不一样的西方哲学史'}

<class 'str'>

{'name': '读梦——村上春树长篇小说指南'}

<class 'str'>

{'name': '拍张好照片——跟七七学生活摄影'}

<class 'str'>

{'name': '白先勇细说红楼梦——从小说角度重解“红楼”'}

<class 'str'>

****************************************************************************************************

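Next we should talk about how to scrape the data. You may have noticed that I already scraped some data above.

Yes. The parser is the xpath() method on Scrapy's response object, which locates nodes and extracts their values; it works much like lxml.

For example: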

html=response.xpath('//ul[@class="time-list"]/li/a[2]/text()')

But the value we get back looks like this:

[<Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='了不起的文明现场——一线考古队长带你探秘历史'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='人人听得懂用得上的法律课'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='如何读透一本书——12堂阅读写作训练课'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='52倍人生——戴锦华大师电影课'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='我们的女性400年——文学里的女性主义简史'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='用性别之尺丈量世界——18堂思想课解读女性问题'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='哲学闪耀时——不一样的西方哲学史'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='读梦——村上春树长篇小说指南'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='拍张好照片——跟七七学生活摄影'>,
 <Selector xpath='//ul[@class="time-list"]/li/a[2]/text()' data='白先勇细说红楼梦——从小说角度重解“红楼”'>]

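With lxml the result is a list, and here it is a list too, but not a plain list of strings: each element is a Selector object made up of three parts.

<Selector xpath='XXX' data='xxx'>

The first part is the object type (Selector), the second is the XPath we queried with, and the third is the value we actually want.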

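Scrapy wraps several methods for us in the Selector object, such as xpath() and css().

To get the data out of a Selector, there are the following methods:

# old methods

extract_first() ----> returns a single value

extract() ----> returns a list

# new methods

get() ----> returns a single value

getall() ----> returns a list

Both the old and the new methods can be used. When nothing matches, extract_first() and get() return None, while extract() and getall() return an empty list.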

Let's try the new get() method:

html=response.xpath('//ul[@class="time-list"]/li/a[2]/text()').get()

It returns

了不起的文明现场——一线考古队长带你探秘历史

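So it works. Now that we get a value back, let's look at how to run the spider.

Method one: create a .py file in the same directory as scrapy.cfg

with the following code: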

from scrapy import cmdline

cmdline.execute('scrapy crawl hr'.split())

This way we can run our Scrapy spider directly from the script.
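The argument passed to cmdline.execute is just the command line split into a list, so options can be added the same way. The sketch below builds that list with a small helper; `build_crawl_argv` is a hypothetical name, while -o (export file) and -s (override a setting) are regular scrapy crawl options.

```python
def build_crawl_argv(spider_name, output_file=None, log_level=None):
    """Build the argument list for `scrapy crawl` (hypothetical helper)."""
    argv = ["scrapy", "crawl", spider_name]
    if output_file:
        # Export the scraped items to a file.
        argv += ["-o", output_file]
    if log_level:
        # Override a setting for this run only.
        argv += ["-s", f"LOG_LEVEL={log_level}"]
    return argv


print(build_crawl_argv("hr"))
print(build_crawl_argv("hr", output_file="items.json", log_level="WARNING"))

# In the runner script, the list would be handed to Scrapy like this:
# from scrapy import cmdline
# cmdline.execute(build_crawl_argv("hr", output_file="items.json"))
```

Building the list explicitly avoids surprises from str.split() when an argument, such as a file path, contains spaces.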