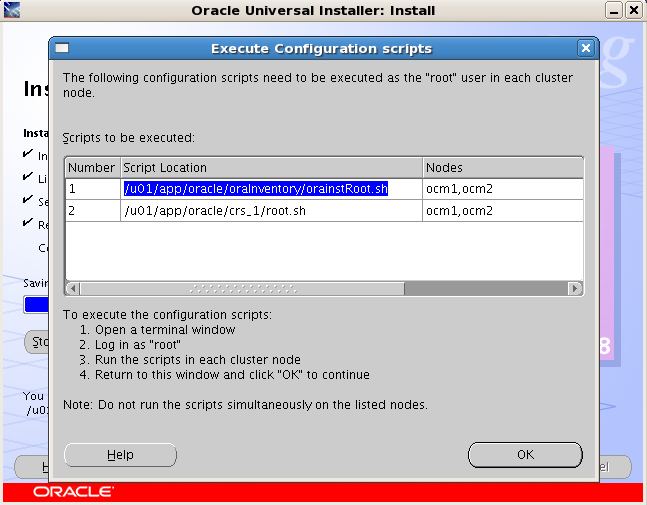

The dialog above appears at the last step of the Clusterware (CRS) installation.

As root, run the scripts in this order:

On rac1: /opt/ora10g/oraInventory/orainstRoot.sh

On rac2: /opt/ora10g/oraInventory/orainstRoot.sh

On rac1: /opt/ora10g/product/10.2.0/crs_1/root.sh

All of them completed without problems.

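According to other people's notes, running /opt/ora10g/product/10.2.0/crs_1/root.sh on rac2 fails at this point, so I edited some files in advance.

Apply the edits on every node (one of the guides I followed says to change the files on all nodes).

First, edit the vipca file: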

[root@rac2 opt]# vi /opt/ora10g/product/10.2.0/crs_1/bin/vipca

Find the following block:

if [ "$arch" = "i686" -o "$arch" = "ia64" ]

then

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

fi

#End workaround

Add this new line right after fi:

unset LD_ASSUME_KERNEL
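Because the same vipca edit has to be repeated on every node, it can be scripted instead of done by hand in vi. This is a minimal sketch: patch_vipca is a hypothetical helper, and it assumes the workaround block looks like the one shown above (an export LD_ASSUME_KERNEL line followed by a closing fi). It keeps a .bak copy and does nothing if an unset line is already present.

```shell
# patch_vipca (hypothetical helper): add "unset LD_ASSUME_KERNEL" right after the
# "fi" that closes the LD_ASSUME_KERNEL workaround block in a vipca script.
patch_vipca() {
    f=$1
    # Leave the file alone if it has already been patched
    if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$f"; then
        echo "already patched: $f"
        return 0
    fi
    # Keep a backup; cp -p preserves mode and ownership
    cp -p "$f" "$f.bak" || return 1
    # Copy every line; after the first "fi" that follows the export line,
    # emit the extra unset line. Redirecting into the existing file keeps its mode.
    awk '
        { print }
        /export LD_ASSUME_KERNEL/ { inblock = 1 }
        inblock && /^[[:space:]]*fi[[:space:]]*$/ { print "unset LD_ASSUME_KERNEL"; inblock = 0 }
    ' "$f.bak" > "$f"
}
```

Run it as root on each node, for example: patch_vipca /opt/ora10g/product/10.2.0/crs_1/bin/vipca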

Then edit the srvctl file:

[root@rac2 opt]# vi /opt/ora10g/product/10.2.0/crs_1/bin/srvctl

Find the following lines:

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

Likewise, add a new line right after them:

unset LD_ASSUME_KERNEL
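The srvctl change differs only in that there is no if/fi block, so the unset line goes directly after the export line. A minimal sketch; patch_srvctl is a hypothetical helper that assumes the export LD_ASSUME_KERNEL line stands on its own line as shown above, and it also keeps a .bak copy and skips already-patched files.

```shell
# patch_srvctl (hypothetical helper): add "unset LD_ASSUME_KERNEL" right after
# the "export LD_ASSUME_KERNEL" line in a srvctl script.
patch_srvctl() {
    f=$1
    # Leave the file alone if it has already been patched
    if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$f"; then
        echo "already patched: $f"
        return 0
    fi
    cp -p "$f" "$f.bak" || return 1
    # Copy every line and add the unset line after each export line
    awk '
        { print }
        /^[[:space:]]*export LD_ASSUME_KERNEL[[:space:]]*$/ { print "unset LD_ASSUME_KERNEL" }
    ' "$f.bak" > "$f"
}
```

Usage, as root on each node: patch_srvctl /opt/ora10g/product/10.2.0/crs_1/bin/srvctl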

Then, on any node (I used node 2, as [root@rac2 ~]), run:

/opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/oifcfg setif -global eth0/192.168.100.0:public

/opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/oifcfg setif -global eth1/10.10.17.0:cluster_interconnect

/opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/oifcfg getif
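The two setif calls and the getif check can be kept together so the result is verified rather than eyeballed. A sketch under assumptions: set_interfaces and check_getif are hypothetical helpers, CRS_HOME defaults to the CRS home used in the commands above, and check_getif assumes oifcfg getif prints one interface per line as "name subnet scope type" (for example "eth0  192.168.100.0  global  public").

```shell
# CRS_HOME is an assumption taken from the commands above; override it if needed
CRS_HOME=${CRS_HOME:-/opt/oracle/otk/home/oracle/product/10.2.0/crs}

# set_interfaces (hypothetical): register the public and interconnect networks
set_interfaces() {
    "$CRS_HOME/bin/oifcfg" setif -global eth0/192.168.100.0:public
    "$CRS_HOME/bin/oifcfg" setif -global eth1/10.10.17.0:cluster_interconnect
}

# check_getif (hypothetical): read "oifcfg getif" output on stdin and succeed
# only if both the public and the cluster_interconnect entries are present
check_getif() {
    awk '
        $1 == "eth0" && $2 == "192.168.100.0" && $4 == "public" { pub = 1 }
        $1 == "eth1" && $2 == "10.10.17.0" && $4 == "cluster_interconnect" { ic = 1 }
        END {
            if (pub && ic) { print "interfaces OK"; exit 0 }
            print "interfaces missing"
            exit 1
        }
    '
}

# Usage (as root): set_interfaces && "$CRS_HOME/bin/oifcfg" getif | check_getif
```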

Finally, run /opt/ora10g/product/10.2.0/crs_1/root.sh on rac2.

It still ended with an error.

Then, on any node (again rac2 in my case), start vipca as root with an X display:

[root@rac2 cfgtoollogs]# export DISPLAY=192.168.100.1:0.0

[root@rac2 cfgtoollogs]# xhost +

[root@rac2 cfgtoollogs]# export LANG=C

[root@rac2 cfgtoollogs]# /opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/vipca

The configuration completed successfully.

Then go back to the installer running as oracle on node 1 and click OK.
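The environment setup for the vipca GUI can be wrapped in a small function so that DISPLAY and LANG are not forgotten on the next attempt. A sketch assuming: launch_vipca is hypothetical, CRS_HOME defaults to the CRS home used in the commands above, the optional first argument overrides DISPLAY (the default is the 192.168.100.1:0.0 display from the text), and the X server on that display already accepts connections from this node (the xhost step). The function body runs in a subshell, so DISPLAY and LANG do not leak into the calling shell.

```shell
# launch_vipca (hypothetical): start the vipca GUI as root with an X display
# and the C locale. Parentheses make the body run in a subshell.
launch_vipca() (
    CRS_HOME=${CRS_HOME:-/opt/oracle/otk/home/oracle/product/10.2.0/crs}
    # First argument overrides the X display; default is the one used above
    DISPLAY=${1:-192.168.100.1:0.0}
    LANG=C
    export DISPLAY LANG
    exec "$CRS_HOME/bin/vipca"
)
```

Usage, as root: launch_vipca, or launch_vipca otherhost:0.0 to send the GUI to a different X server.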

(Note: at the point where the installer prompted me to run /opt/ora10g/product/10.2.0/crs_1/root.sh on rac2, I clicked Retry several times before I had run vipca. It never got past that step, so I force-closed the installer, which then reported this error: The "/opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs/configToolFailedCommands" script contains all commands that failed, were skipped or were cancelled. This file may be used to run these configuration assistants outside of OUI. Note that you may have to update this script with passwords (if any) before executing the same.

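In the logs under /opt/oracle/otk/home/oraInventory/logs I found that the configuration step can be rerun with /opt/oracle/otk/home/oracle/product/10.2.0/crs/oui/bin/ouica.sh.

So, with the installer closed, I ran it on rac1 as the oracle user: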

-bash-3.2$ /opt/oracle/otk/home/oracle/product/10.2.0/crs/oui/bin/ouica.sh

Configuration directory is set to /opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs. All xml files under the directory will be processed

-bash-3.2$ /opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs

-bash: /opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs: is a directory

-bash-3.2$ cd /opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs

-bash-3.2$ ls

cfgfw configToolAllCommands configToolFailedCommands oui vipca

-bash-3.2$ ./configToolAllCommands

This printed:

Checking existence of VIP node application (required)

Check failed.

Check failed on nodes:

rac2,rac1

Only then did I go back to rac2 and run vipca as root. After it finished successfully, I ran ./configToolAllCommands again:

Checking existence of VIP node application (required)

Check passed.

Post-check for cluster services setup was successful.

)
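The rerun described in the note above comes down to running configToolAllCommands from the cfgtoollogs directory and reading its verdict. A sketch that does the reading automatically: config_tools_ok is a hypothetical filter that passes the output through and returns success only when it sees "Post-check for cluster services setup was successful." and no "Check failed" line, the two messages quoted above. The cfgtoollogs path in the usage comment is the one from this installation.

```shell
# config_tools_ok (hypothetical): echo configToolAllCommands output and exit 0
# only if the post-check succeeded and no check reported a failure
config_tools_ok() {
    awk '
        { print }
        /Check failed/ { failed = 1 }
        /Post-check for cluster services setup was successful/ { ok = 1 }
        END { exit ((ok && !failed) ? 0 : 1) }
    '
}

# Usage (as oracle, after vipca has been run as root):
# (cd /opt/oracle/otk/home/oracle/product/10.2.0/crs/cfgtoollogs && ./configToolAllCommands) 2>&1 | config_tools_ok
```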

After that, CRS showed as healthy on every node:

-bash-3.2$ /opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/crs_stat -t

| Name | Type | Target | State | Host |
|---|---|---|---|---|
| ora.rac1.gsd | application | ONLINE | ONLINE | rac1 |
| ora.rac1.ons | application | ONLINE | ONLINE | rac1 |
| ora.rac1.vip | application | ONLINE | ONLINE | rac1 |
| ora.rac2.gsd | application | ONLINE | ONLINE | rac2 |
| ora.rac2.ons | application | ONLINE | ONLINE | rac2 |
| ora.rac2.vip | application | ONLINE | ONLINE | rac2 |
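To run the same check from a script instead of reading the crs_stat -t listing by eye, its output can be filtered for resources that are not ONLINE. A sketch: crs_all_online is hypothetical and assumes the column layout shown above (Name, Type, Target, State, Host), treating State as the fourth whitespace-separated field.

```shell
# crs_all_online (hypothetical): read "crs_stat -t" output on stdin, report any
# resource whose State column is not ONLINE, and fail if there is one
crs_all_online() {
    awk '
        # skip the header, the dashed separator and blank lines
        $1 == "Name" || $1 ~ /^-+$/ || NF == 0 { next }
        $4 != "ONLINE" { print "not online: " $1 " (" $4 ")"; bad = 1 }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage:
# /opt/oracle/otk/home/oracle/product/10.2.0/crs/bin/crs_stat -t | crs_all_online && echo "all CRS resources ONLINE"
```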