tiup redeployment error: multiple TiKV instances are deployed at the same host but location label missing

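Symptom: I had deployed a TiDB cluster on a single machine with tiup. While the cluster was stopped, I deleted it with the command tiup cluster destroy xxx_cluster. When I then started redeploying v4.0.7, the deployment failed with this error: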

[root@onetest2 ~]# tiup cluster deploy tidb4_cluster v4.0.7 test4.yaml --user root -p
Starting component `cluster`: /root/.tiup/components/cluster/v1.2.1/tiup-cluster deploy tidb4_cluster v4.0.7 test4.yaml --user root -p
Error: check TiKV label failed, please fix that before continue:
192.168.30.20:20160:
    multiple TiKV instances are deployed at the same host but location label missing
192.168.30.20:20161:
    multiple TiKV instances are deployed at the same host but location label missing
192.168.30.20:20162:
    multiple TiKV instances are deployed at the same host but location label missing
Verbose debug logs has been written to /root/logs/tiup-cluster-debug-2020-10-28-22-31-56.log.
Error: run `/root/.tiup/components/cluster/v1.2.1/tiup-cluster` (wd:/root/.tiup/data/SEis7lq) failed: exit status 1

Looking at the detailed log:

{"MemoryLimit":"","CPUQuota":"","IOReadBandwidthMax":"","IOWriteBandwidthMax":"","LimitCORE":""},"Arch":"amd64","OS":"linux"}],"TiFlashServers":null,"PDServers":[{"Host":"192.168.30.20","ListenHost":"","SSHPort":22,"Imported":false,"Name":"pd-192.168.30.20-2379","ClientPort":2379,"PeerPort":2380,"DeployDir":"/tidb-deploy/pd-2379","DataDir":"/tidb-data/pd-2379","LogDir":"","NumaNode":"","Config":null,"ResourceControl":{"MemoryLimit":"","CPUQuota":"","IOReadBandwidthMax":"","IOWriteBandwidthMax":"","LimitCORE":""},"Arch":"amd64","OS":"linux"}],"PumpServers":null,"Drainers":null,"CDCServers":null,"TiSparkMasters":null,"TiSparkWorkers":null,"Monitors":[{"Host":"192.168.30.20","SSHPort":22,"Imported":false,"Port":9090,"DeployDir":"/tidb-deploy/prometheus-9090","DataDir":"/tidb-data/prometheus-9090","LogDir":"","NumaNode":"","Retention":"","ResourceControl":{"MemoryLimit":"","CPUQuota":"","IOReadBandwidthMax":"","IOWriteBandwidthMax":"","LimitCORE":""},"Arch":"amd64","OS":"linux","RuleDir":""}],"Grafana":[{"Host":"192.168.30.20","SSHPort":22,"Imported":false,"Port":3000,"DeployDir":"/tidb-deploy/grafana-3000","ResourceControl":{"MemoryLimit":"","CPUQuota":"","IOReadBandwidthMax":"","IOWriteBandwidthMax":"","LimitCORE":""},"Arch":"amd64","OS":"linux","DashboardDir":""}],"Alertmanager":null}}

2020-10-28T22:06:59.963+0800 INFO Execute command finished {"code": 1, "error": "check TiKV label failed, please fix that before continue:\n192.168.30.20:20160:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n192.168.30.20:20161:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n192.168.30.20:20162:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n", "errorVerbose": "check TiKV label failed, please fix that before continue:\n192.168.30.20:20160:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n192.168.30.20:20161:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n192.168.30.20:20162:\n\tmultiple TiKV instances are deployed at the same host but location label missing\n\ngithub.com/pingcap/tiup/pkg/cluster.(*Manager).Deploy\n\tgithub.com/pingcap/tiup@/pkg/cluster/manager.go:1091\ngithub.com/pingcap/tiup/components/cluster/command.newDeploy.func1\n\tgithub.com/pingcap/tiup@/components/cluster/command/deploy.go:81\ngithub.com/spf13/cobra.(*Command).execute\n\tgithub.com/spf13/cobra@…/command.go:842\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\tgithub.com/spf13/cobra@…/command.go:950\ngithub.com/spf13/cobra.(*Command).Execute\n\tgithub.com/spf13/cobra@…/command.go:887\ngithub.com/pingcap/tiup/components/cluster/command.Execute\n\tgithub.com/pingcap/tiup@/components/cluster/command/root.go:249\nmain.main\n\tgithub.com/pingcap/tiup@/components/cluster/main.go:23\nruntime.main\n\truntime/proc.go:203\nruntime.goexit\n\truntime/asm_amd64.s:1357"}

The log does not add much useful detail. The key message is still "multiple TiKV instances are deployed at the same host but location label missing".
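The message itself spells out the rule being enforced. Below is a minimal Python sketch of such a pre-deploy check, written only to make the condition concrete. It is not tiup-cluster's actual code (tiup is written in Go and may apply further rules). The TiKVInstance model and the check_tikv_labels helper are hypothetical. The second rule, that every label key should also appear in PD's replication.location-labels, is an assumption.

```python
from collections import Counter
from dataclasses import dataclass, field

MISSING_LABEL_MSG = (
    "multiple TiKV instances are deployed at the same host "
    "but location label missing"
)


@dataclass
class TiKVInstance:
    """Hypothetical model of one entry under tikv_servers in the topology file."""
    host: str
    port: int
    # Contents of `config: server.labels`, e.g. {"host": "192.168.30.20"}.
    labels: dict = field(default_factory=dict)


def check_tikv_labels(instances, location_labels):
    """Return {"host:port": [problems]} for instances failing this sketched check.

    Rule 1 (mirrors the error text): a TiKV that shares its host with another
    TiKV must carry at least one label.
    Rule 2 (assumption): every label key should be listed in PD's
    replication.location-labels.
    """
    per_host = Counter(inst.host for inst in instances)
    problems = {}
    for inst in instances:
        addr = f"{inst.host}:{inst.port}"
        if not inst.labels and per_host[inst.host] > 1:
            problems.setdefault(addr, []).append(MISSING_LABEL_MSG)
            continue
        for key in inst.labels:
            if key not in location_labels:
                problems.setdefault(addr, []).append(
                    f"label {key!r} is not in replication.location-labels"
                )
    return problems


if __name__ == "__main__":
    ports = (20160, 20161, 20162)
    before = [TiKVInstance("192.168.30.20", p) for p in ports]
    after = [TiKVInstance("192.168.30.20", p, {"host": "192.168.30.20"}) for p in ports]
    print(check_tikv_labels(before, ["host"]))  # one entry per port
    print(check_tikv_labels(after, ["host"]))   # {}
```

With three unlabeled instances on 192.168.30.20 every address is reported, just like the real error output, and adding a host label to each instance clears the sketched check.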

What are labels on TiKV instances for?

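According to the official documentation, labels exist so that TiKV instances are spread across physical locations as much as possible. Using the TiKV topology information, the PD scheduler automatically reschedules in the background so that the replicas of each Region are isolated from one another as far as possible, which maximizes the data's disaster tolerance.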
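A toy Python illustration of the idea follows. It assumes a drastically simplified model: given each store's labels, pick the replica set whose values for one label key are the most distinct. PD's real scheduler is far more involved (placement rules, isolation levels, continuous balancing). The pick_replica_stores helper is hypothetical, and the brute-force search is chosen for clarity, not efficiency.

```python
from itertools import combinations


def pick_replica_stores(stores, label_key, replicas=3):
    """Toy placement: choose the store combination with the most distinct label values.

    stores maps store_id -> labels dict, e.g. {1: {"host": "h1"}}.
    Returns (chosen store ids, number of distinct values of label_key),
    or (None, -1) if there are fewer stores than replicas.
    """
    best, best_distinct = None, -1
    for combo in combinations(sorted(stores), replicas):
        distinct = len({stores[s].get(label_key) for s in combo})
        if distinct > best_distinct:  # keep the first combination that isolates best
            best, best_distinct = combo, distinct
    return best, best_distinct


if __name__ == "__main__":
    spread = {1: {"host": "h1"}, 2: {"host": "h1"}, 3: {"host": "h2"}, 4: {"host": "h3"}}
    print(pick_replica_stores(spread, "host"))  # ((1, 3, 4), 3)
    single = {1: {"host": "192.168.30.20"}, 2: {"host": "192.168.30.20"}, 3: {"host": "192.168.30.20"}}
    print(pick_replica_stores(single, "host"))  # ((1, 2, 3), 1)
```

In the second call every store carries the same host value, as in the single-machine setup described here, so no choice of stores can reach more than one distinct host.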

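If all TiKV instances are deployed on a single host, there is no need to set labels. My environment is exactly that, a single-machine deployment, so labels should be unnecessary. I don't know why removing the cluster and reinstalling suddenly required me to set them, so I simply followed the prompt and set them.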

Before the labels were added, the test4.yaml topology file used by the deploy command tiup cluster deploy tidb4_cluster v4.0.7 test4.yaml --user root -p looked like this:

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

server_configs:
  tidb:
    log.slow-threshold: 300
  tikv:
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    replication.enable-placement-rules: true
    replication.location-labels:
      - host

pd_servers:
  - host: 192.168.30.20

tidb_servers:
  - host: 192.168.30.20

tikv_servers:
  - host: 192.168.30.20
    port: 20160
    status_port: 20180
  - host: 192.168.30.20
    port: 20161
    status_port: 20181
  - host: 192.168.30.20
    port: 20162
    status_port: 20182

monitoring_servers:
  - host: 192.168.30.20

grafana_servers:
  - host: 192.168.30.20

Solution: after adding labels, the deployment succeeds:

# # Global variables are applied to all deployments and used as the default value of

# # the deployments if a specific deployment value is missing.

global:

user: "tidb"

ssh_port: 22

deploy_dir: "/tidb-deploy"

data_dir: "/tidb-data"

# # Monitored variables are applied to all the machines.

monitored:

node_exporter_port: 9100

blackbox_exporter_port: 9115

server_configs:

tidb:

log.slow-threshold: 300

tikv:

readpool.storage.use-unified-pool: false

readpool.coprocessor.use-unified-pool: true

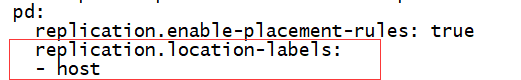

pd:

replication.enable-placement-rules: true

replication.location-labels:

- host

pd_servers:

- host: 192.168.30.20

tidb_servers:

- host: 192.168.30.20

tikv_servers:

- host: 192.168.30.20

port: 20160

status_port: 20180

config:

server.labels: { host: "192.168.30.20" }

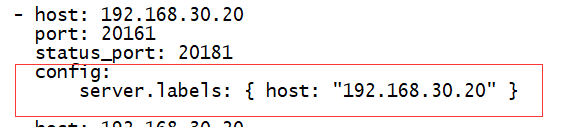

- host: 192.168.30.20

port: 20161

status_port: 20181

config:

server.labels: { host: "192.168.30.20" }

- host: 192.168.30.20

port: 20162

status_port: 20182

config:

server.labels: { host: "192.168.30.20" }

monitoring_servers:

- host: 192.168.30.20

grafana_servers:

- host: 192.168.30.20
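With more instances on one host, writing the config stanza for each one by hand gets repetitive. The small Python helper below renders a tikv_servers section in the same shape as the working file above. It is a sketch, not part of tiup: tikv_servers_yaml is a hypothetical name, the status-port offset of 20 simply reproduces this file's convention, and the label value defaults to the host address as done here. It only emits text and does not validate YAML.

```python
def tikv_servers_yaml(host, ports, status_offset=20, label_value=None):
    """Render a tikv_servers section in which every instance has a host label.

    status_offset reproduces this article's convention (20160 -> 20180);
    label_value defaults to the host address, as in the fixed test4.yaml.
    """
    value = host if label_value is None else label_value
    lines = ["tikv_servers:"]
    for port in ports:
        lines += [
            f"  - host: {host}",
            f"    port: {port}",
            f"    status_port: {port + status_offset}",
            "    config:",
            f'      server.labels: {{ host: "{value}" }}',
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(tikv_servers_yaml("192.168.30.20", [20160, 20161, 20162]))
```

For the three instances used in this post, the printed text matches the tikv_servers section of the working file.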

Added content: