Author: Yu Kai

Preface

In recent years, enterprise infrastructure has been moving steadily toward cloud native. From the early days of IaaS to today's microservices, customers have demanded ever finer granularity and better observability. To deliver higher performance and higher density, container networks have been evolving rapidly, which inevitably raises the bar for customers trying to observe cloud-native networks. To improve the observability of cloud-native networks and make business links easier to read for customers and for front-line and back-line colleagues, ACK Industrial Research and AES jointly created this series on cloud-native network data plane observability. The series aims to help customers and colleagues understand the cloud-native network architecture, lower the threshold for observing it, improve the experience of customer O&M and after-sales colleagues when handling difficult problems, and improve the link stability of cloud-native networks.

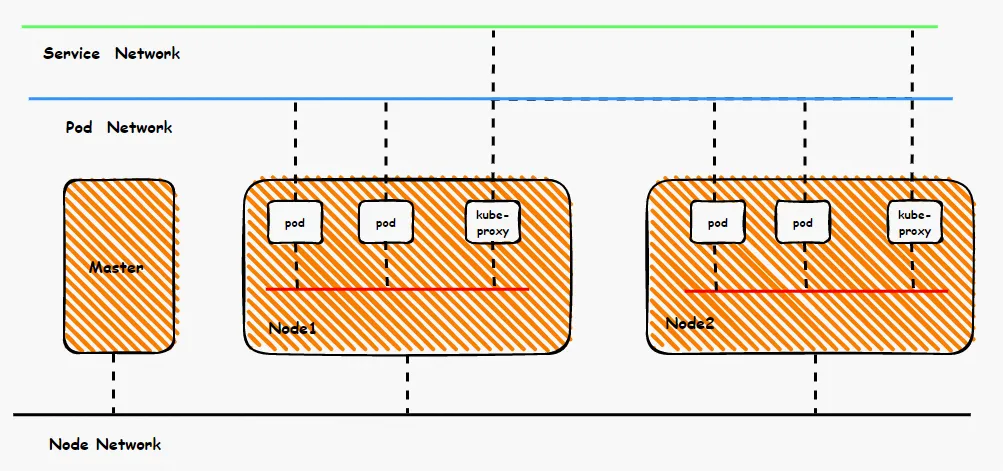

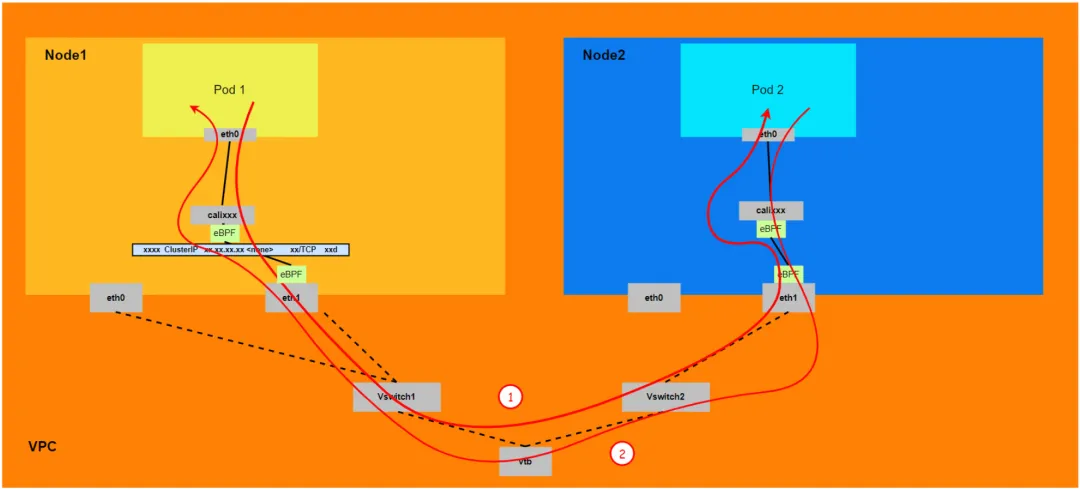

From a bird's-eye view, the container network can be divided into three parts: the Pod network segment, the Service network segment, and the Node network segment. These three networks must be interconnected and subject to access control, so what are the technical principles behind the implementation? What does the entire link look like, and what are its limitations? What is the difference between Flannel and Terway? What is the network performance in the different modes? Customers need to make these choices based on their own business scenarios before building the container platform. Once it is built, the architecture cannot be changed, so customers need to fully understand the characteristics of each architecture. For example, as the simplified diagram shows, the Pod network must provide connectivity and control between Pods on the same ECS, as well as access between Pods on different ECSs. When a Pod accesses an SVC, the backend may be on the same ECS or on another ECS. The data link forwarding path differs in each of these cases, and so does the performance seen from the business side.

This article is the seventh part of [Panorama Analysis of Container Network Data Links]. It mainly introduces the data plane forwarding links in Kubernetes Terway DataPath V2 mode. On the one hand, understanding the data plane forwarding links in different scenarios explains the data plane performance customers see on access links in those scenarios and helps them further optimize their business architecture. On the other hand, with an in-depth understanding of the forwarding links, customer O&M staff and Alibaba Cloud colleagues know at which link points to deploy manual observation when container network jitter occurs, which helps narrow down the direction and cause of the problem.

Series 1: Panoramic analysis of Alibaba Cloud container network data links (1) - Flannel

Series 2: Panoramic analysis of Alibaba Cloud container network data links (2) - Terway ENI

Series 3: Panoramic analysis of Alibaba Cloud container network data links (3) - Terway ENIIP

Series 4: Panoramic analysis of Alibaba Cloud container network data links (4) - Terway IPVLAN+EBPF

Series 5: Panoramic analysis of Alibaba Cloud container network data links (5) - Terway ENI-Trunking

Series 6: Panoramic analysis of Alibaba Cloud container network data links (6) - ASM Istio

Terway DataPath V2 mode architecture design

An elastic network interface (ENI) can be configured with multiple auxiliary IPs. Depending on the instance specification, a single ENI can be assigned 6 to 20 auxiliary IPs. The ENI multi-IP mode allocates these auxiliary IPs to containers, which greatly increases the scale and density of Pod deployment. For network connectivity, Terway has supported two solutions: veth pair with policy routing, and IPVLAN. However, the community dropped IPVLAN support after Cilium v1.12 [1]. To keep the data plane consistent, ACK's data plane evolves in line with the community, which makes it easier to integrate cloud-native capabilities and reduces divergence. Starting from v1.8.0, Terway no longer supports IPvlan tunnel acceleration and instead uses DataPath V2 to unify the data plane.
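As a rough illustration of the density gain, the number of Pod IPs a node can offer grows with the number of ENIs and the auxiliary IPs per ENI. The sketch below assumes that one ENI (the primary one) is reserved for the node itself and that every remaining ENI contributes all of its IPs to Pods. The function name and the sample numbers are illustrative and are not taken from any instance specification.

```python
def max_pod_ips(eni_count: int, ips_per_eni: int) -> int:
    """Estimate the Pod IP capacity of one node in ENI multi-IP mode.

    Assumption: one ENI (the primary, eth0) is kept for the node itself,
    so only the remaining ENIs hand their IPs to Pods.
    """
    if eni_count < 1 or ips_per_eni < 1:
        raise ValueError("eni_count and ips_per_eni must be positive")
    return (eni_count - 1) * ips_per_eni


# Hypothetical instance types: (ENIs supported, IPs per ENI).
for enis, ips in [(3, 6), (4, 10), (8, 20)]:
    print(f"{enis} ENIs x {ips} IPs -> {max_pod_ips(enis, ips)} Pod IPs")
```

Check the actual ENI and IP quotas of the instance type before relying on such an estimate.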

The CIDR network segment used by the Pod is the same network segment as the CIDR of the node.

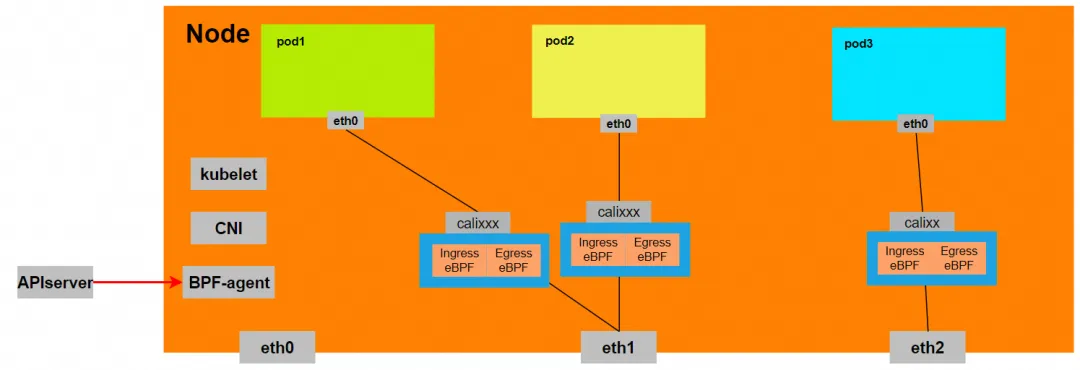

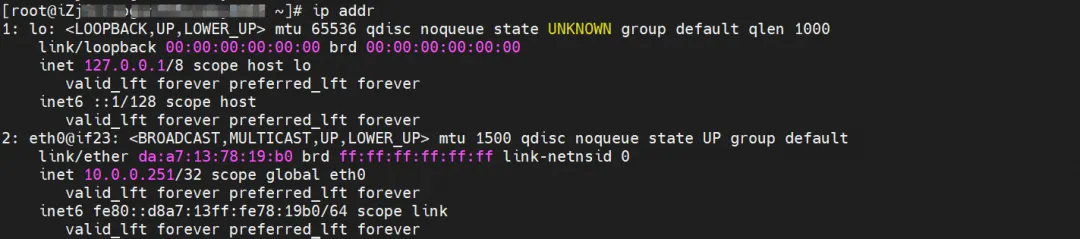

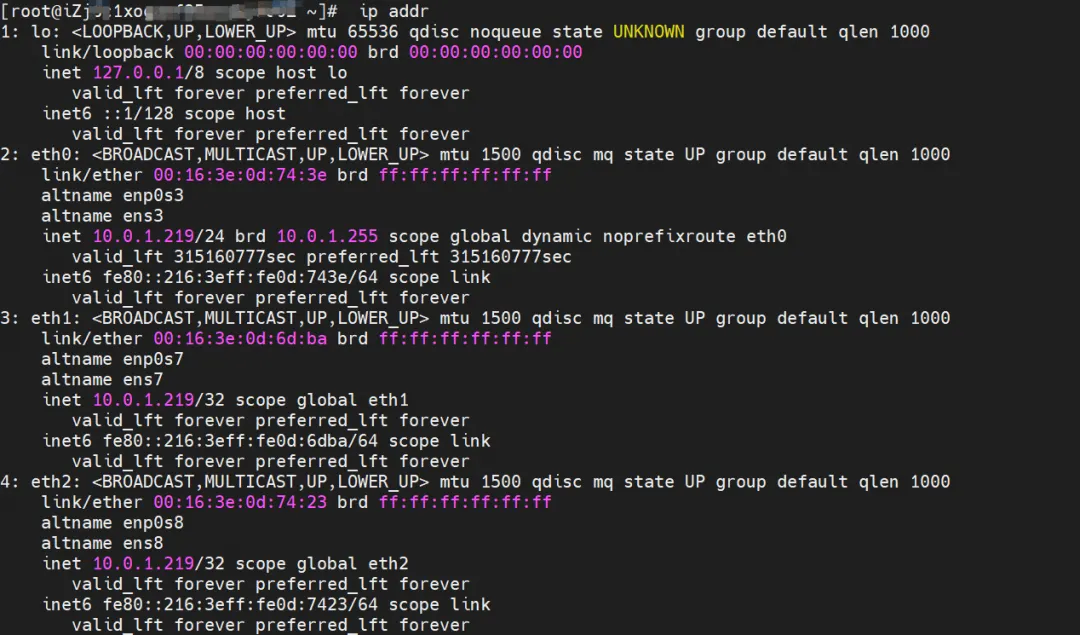

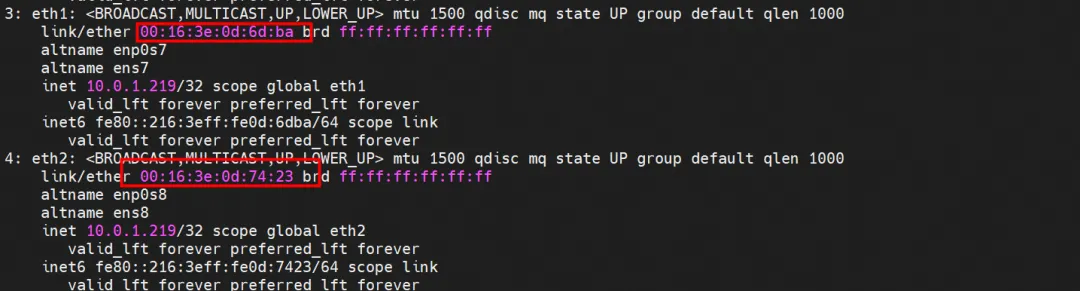

There is a network interface eth0 inside the Pod, and the IP of eth0 is the IP of the Pod. The MAC address of this interface does not match the MAC address of the ENI shown in the console. In addition, there are multiple ethx interfaces on the ECS, which shows that the ENI is not mounted directly into the Pod's network namespace.

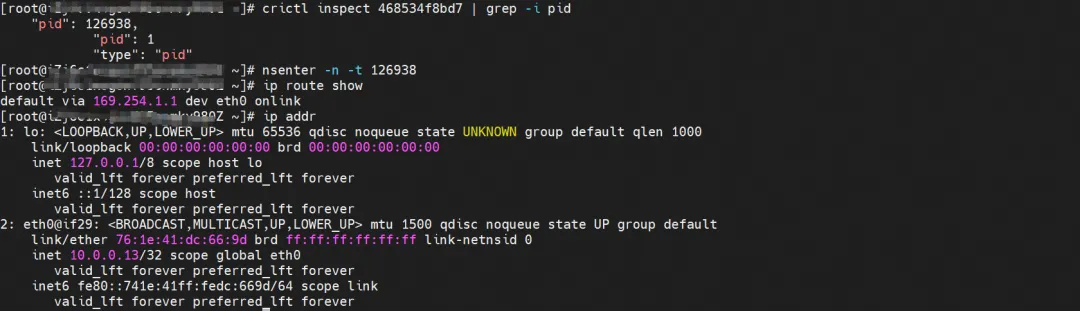

There is only a default route pointing to eth0 in the Pod, which means that when the Pod accesses any address segment, eth0 is the unified entrance and exit.


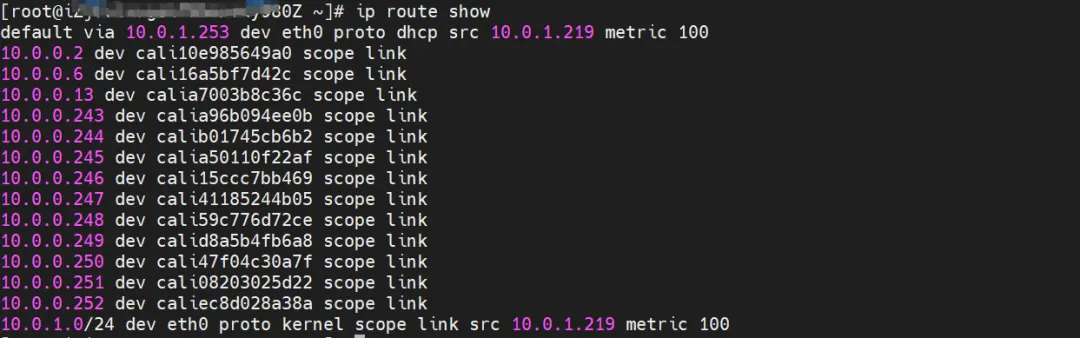

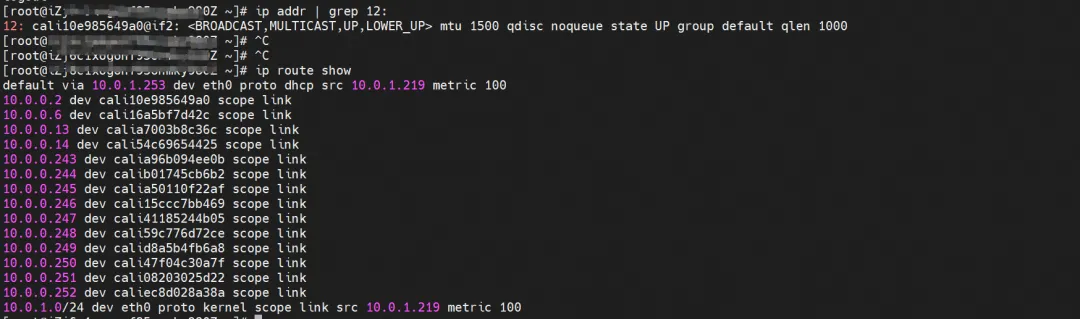

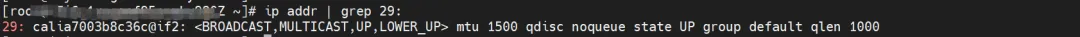

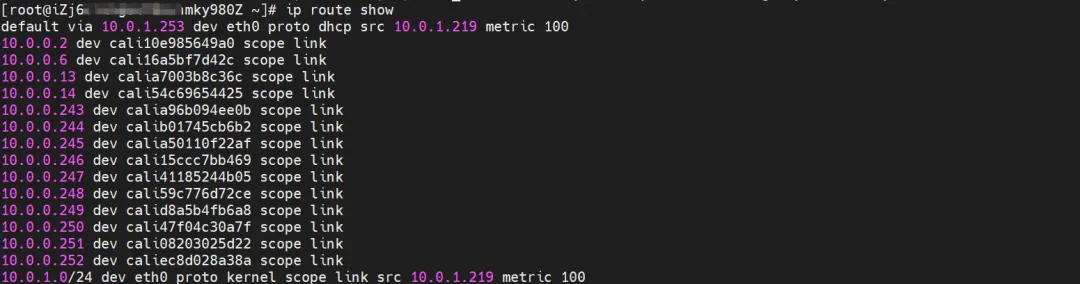

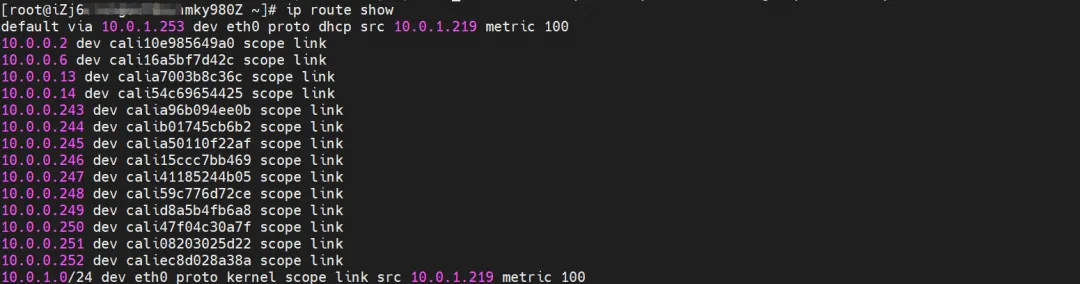

The Linux routing table of the ECS OS shows that all traffic destined for a Pod IP is forwarded to the calixxx virtual interface corresponding to that Pod. The network namespaces of the ECS OS and the Pod therefore have a complete inbound and outbound link configuration.
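The route selection described here can be sketched as a longest-prefix match. The table below is a simplified, hypothetical stand-in for the host routing table: the Pod IPs and calixxx names are the ones used later in this article, while the default route through eth0 is an assumption made only for illustration. A real node also uses policy routing, which this sketch ignores.

```python
import ipaddress

# Hypothetical host routing table: (destination prefix, output device).
HOST_ROUTES = [
    ("0.0.0.0/0", "eth0"),                # assumed default route
    ("10.0.0.2/32", "cali10e985649a0"),   # host route to one Pod
    ("10.0.0.13/32", "calia7003b8c36c"),  # host route to another Pod
]


def lookup(dst: str, routes=HOST_ROUTES) -> str:
    """Return the output device chosen by longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    candidates = [
        (ipaddress.ip_network(prefix), dev)
        for prefix, dev in routes
        if addr in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        raise LookupError(f"no route to {dst}")
    # The most specific prefix (largest prefix length) wins.
    return max(candidates, key=lambda c: c[0].prefixlen)[1]


print(lookup("10.0.0.2"))    # matched by the /32 route of the Pod
print(lookup("10.0.4.121"))  # no /32 route here, falls back to the default
```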

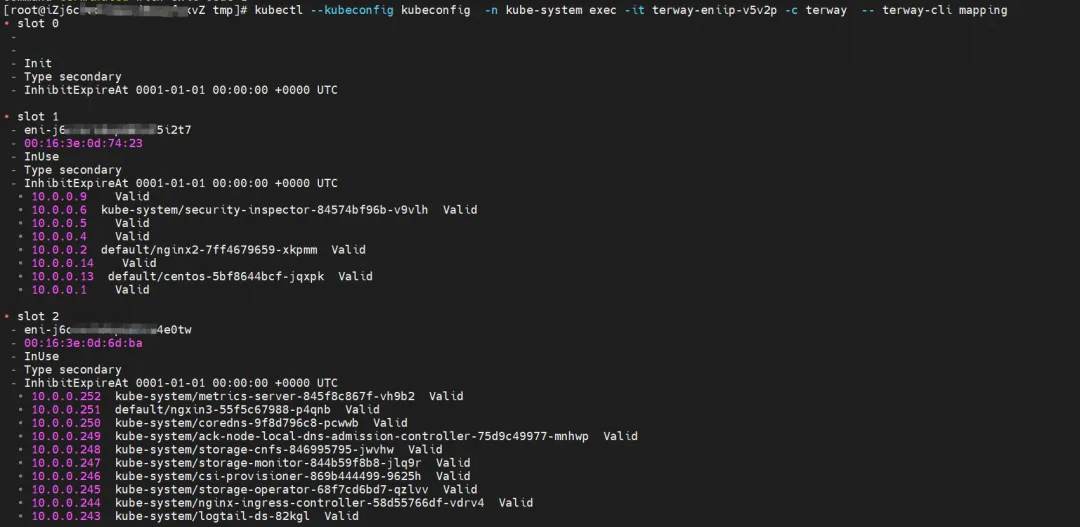

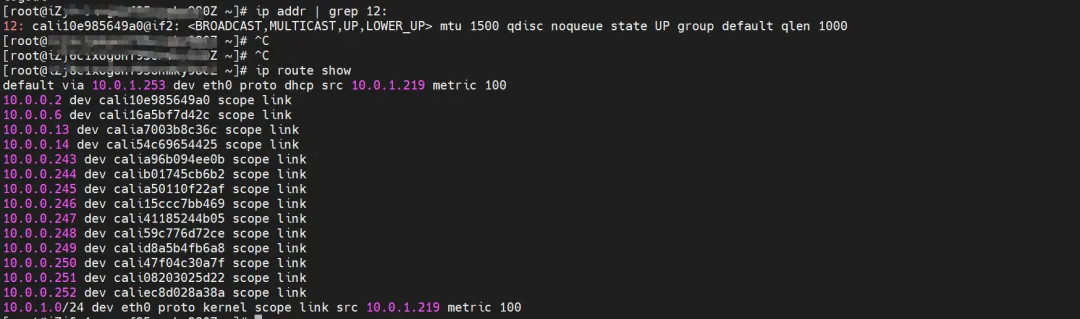

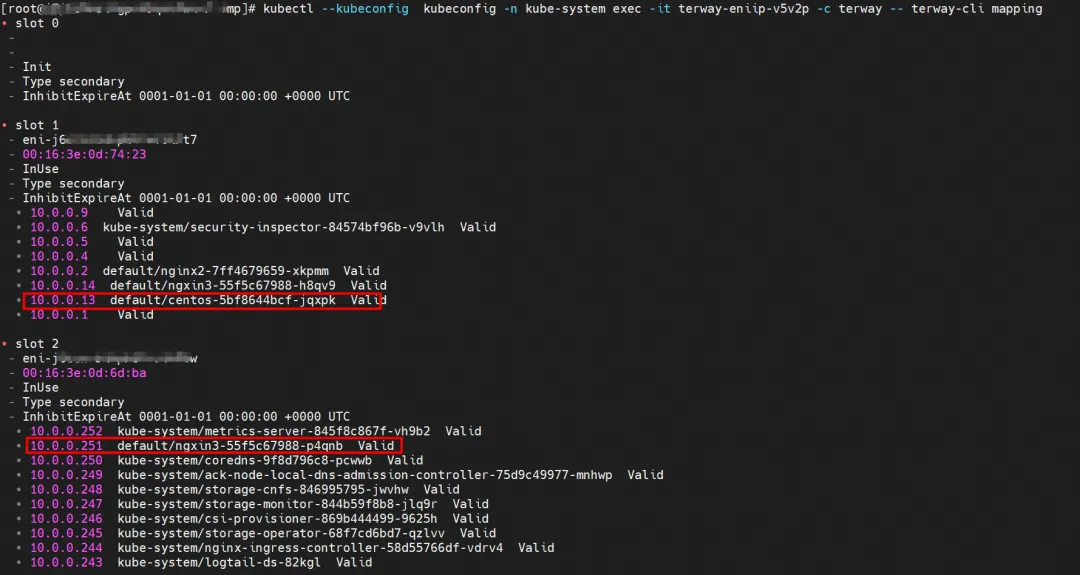

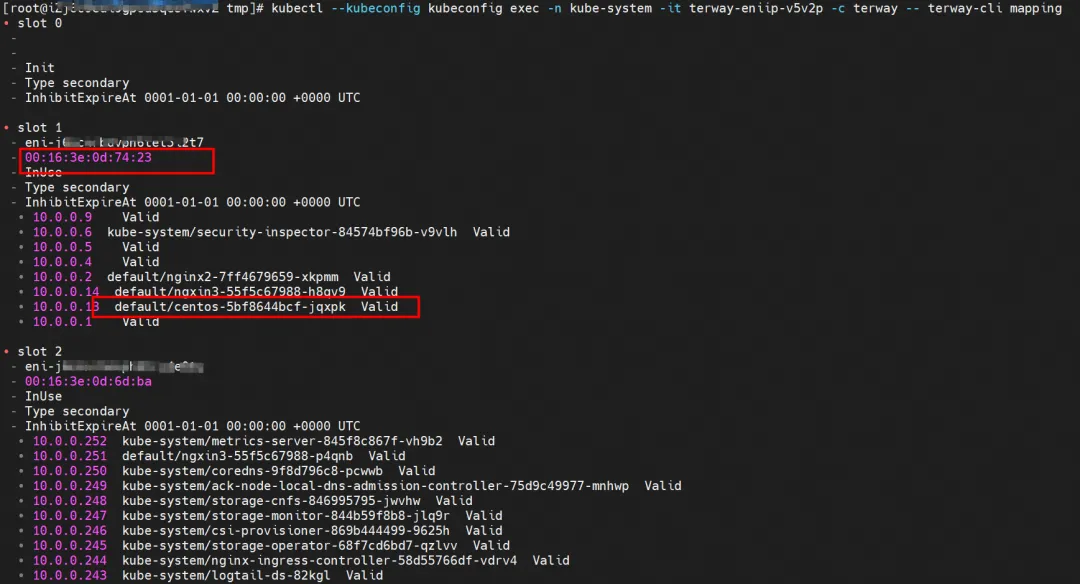

The implementation of ENI multi-IP is similar in principle to ACK container network data link (Terway ENIIP) [2]. A Terway Pod is deployed on each node through a DaemonSet. You can use the terway-cli mapping command to get the number of ENIs attached to the node, their MAC addresses, and the IPs on each ENI.

When a Pod accesses an SVC, the container forwards the request by various means to the ECS layer where the Pod is located, and the netfilter module in the ECS resolves the SVC IP. However, the data link has to switch from the Pod's network namespace to the network namespace of the ECS OS and pass through the kernel protocol stack in between, which inevitably costs performance. Workloads that require high concurrency and high performance may not be fully satisfied by this mechanism. Compared with IPVLAN mode, which deploys eBPF inside the Pod for SVC address translation, DataPath V2 mode attaches eBPF to the calixxx interface of each Pod to accelerate Pod-to-Pod access and to translate the SVC IP when a Pod accesses an SVC, accelerating that link as well. For a comparison of the modes, see Using Terway network plug-in [3].

BPF Routing

On kernel 5.10 and later, Cilium adds the eBPF Host-Routing feature and two new redirect methods, bpf_redirect_peer and bpf_redirect_neigh.

- bpf_redirect_peer

  The data packet is sent directly to the eth0 interface inside the Pod (the Pod end of the veth pair) without passing through the lxc interface on the host, so the packet enters the CPU backlog queue one fewer time and forwarding performance improves.

- bpf_redirect_neigh

  Fills in the source and destination MAC addresses of Pod egress traffic, so the traffic does not need to go through the kernel's routing stack.
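Because both helpers depend on the kernel version, a quick check of the running kernel can tell whether eBPF Host-Routing is even possible on a node. The sketch below only compares a `uname -r` style string against 5.10. The helper names and the sample release strings are hypothetical, and a new enough kernel does not by itself prove that the feature is enabled.

```python
import re
from typing import Tuple


def kernel_version(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a `uname -r` style string."""
    m = re.match(r"(\d+)\.(\d+)", release)
    if not m:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(m.group(1)), int(m.group(2))


def supports_host_routing(release: str) -> bool:
    """True if the kernel is new enough (5.10+) for the two redirect helpers."""
    return kernel_version(release) >= (5, 10)


# Hypothetical release strings, for illustration only.
for rel in ("4.19.91-27.al7.x86_64", "5.10.134-16.al8.x86_64"):
    print(rel, supports_host_routing(rel))
```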

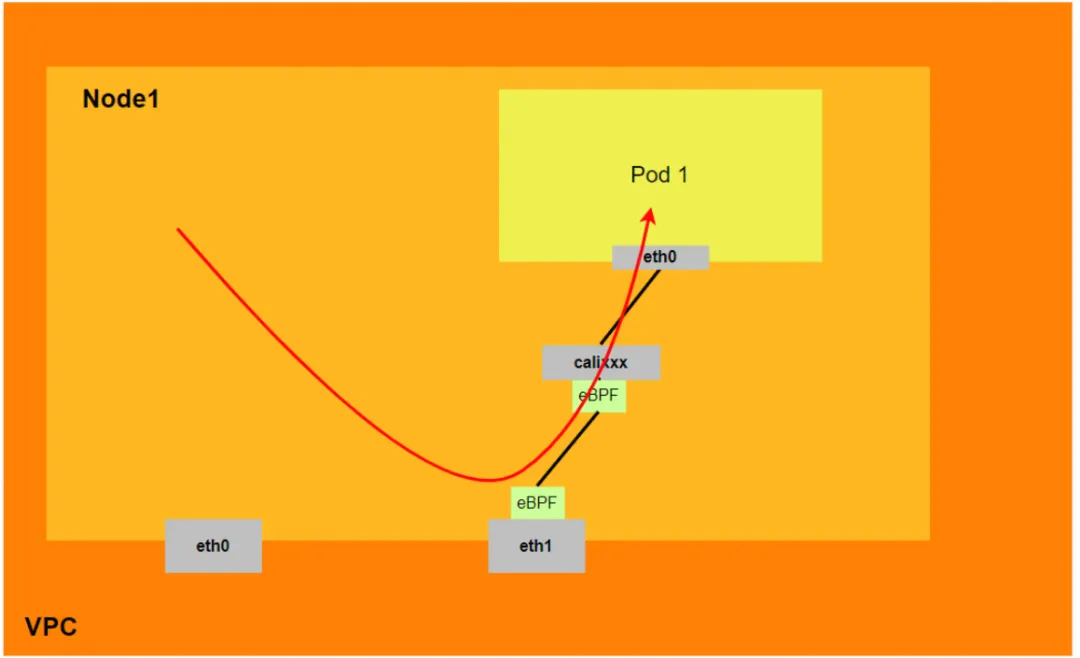

Therefore, the overall Terway DataPath V2 model can be summarized as:

- Currently, low-version kernels (lower than 4.2) do not support eBPF acceleration. Aliyun2 can obtain partial acceleration capabilities, and Aliyun3 can obtain complete acceleration capabilities.

- Nodes need to go through the Host's protocol stack to access Pods. Pod-to-Pod access and Pod access to SVC do not go through the Host's protocol stack.

- In DataPath V2 mode, when a Pod accesses an SVC IP, eBPF on the Pod's veth pair interface calixxx translates the SVC IP to the IP of one of the SVC backend Pods, and the data link then bypasses the Host protocol stack. In other words, the SVC IP can be captured only on the veth pair of the source Pod; it cannot be captured on the destination Pod or on the ECS where the destination Pod is located.
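The last point can be pictured as a lookup in a load-balancer map keyed by the Service address. The following is a minimal sketch, not Cilium's actual implementation: the map layout, the function names, and the random backend choice are assumptions, and the addresses are borrowed from Scenario 5 of this article.

```python
import random

# Hypothetical load-balancer map: (Cluster IP, port) -> list of backends.
LB_MAP = {
    ("192.168.152.119", 80): [("10.0.0.2", 80)],
}


def translate(dst_ip, dst_port, lb_map=LB_MAP, rng=random):
    """Rewrite a Service destination to one backend; pass other traffic through."""
    backends = lb_map.get((dst_ip, dst_port))
    if not backends:
        return dst_ip, dst_port
    # Simplification: a real datapath does not necessarily pick at random.
    return rng.choice(backends)


def observed_destinations(dst_ip, dst_port):
    """Destination address seen before and after the client's calixxx interface."""
    return {
        "client calixxx": (dst_ip, dst_port),
        "destination side": translate(dst_ip, dst_port),
    }


print(observed_destinations("192.168.152.119", 80))
```

Traffic addressed directly to a Pod IP is not in the map and passes through unchanged, which is why only Service traffic shows a different destination after the client's veth.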

Terway DataPath V2 mode container network data link analysis

Based on the characteristics of the container network, the network links in Terway DataPath V2 mode can be roughly divided into two major SOP scenarios: providing services externally through the Pod IP and providing services externally through an SVC. These can be further subdivided into 11 smaller SOP scenarios.

After sorting and merging the data links of these scenarios, they can be summarized into the following 8 typical scenarios:

- Access Pod IP, access Pod from the same node

- Access Pod IP, mutual access between Pods on the same node (Pods belong to the same ENI)

- Access Pod IP, mutual access between Pods on the same node (Pods belong to different ENIs)

- Access Pod IP, mutual access between Pods between different nodes

- SVC Cluster IP/External IP accessed by Pods in the cluster, SVC backend Pod and client Pod belong to the same ECS and ENI

- SVC Cluster IP/External IP accessed by Pods in the cluster, SVC backend Pod and client Pod belong to the same ECS but different ENI

- The SVC Cluster IP/External IP accessed by Pods in the cluster, SVC backend Pod and client Pod belong to different ECS

- Access SVC External IP from outside the cluster

Scenario 1: Access Pod IP, access Pod from the same node (including node access backend SVC ClusterIP of the same node)

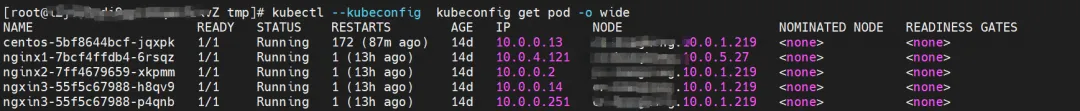

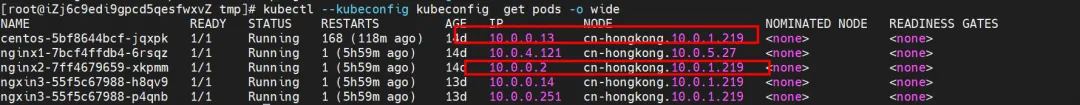

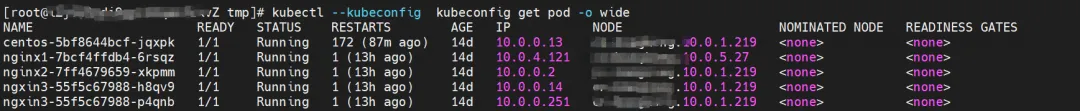

environment

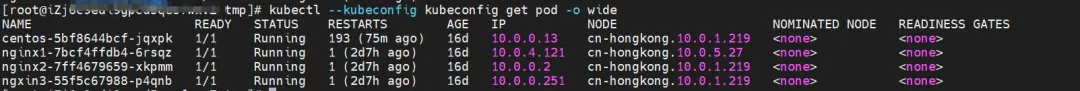

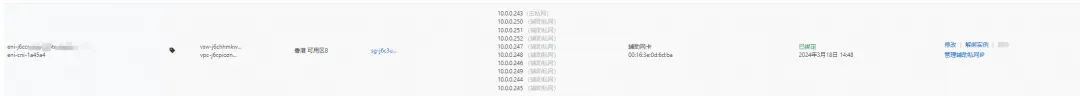

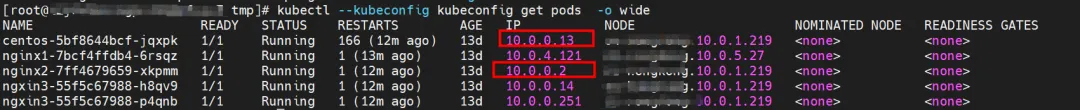

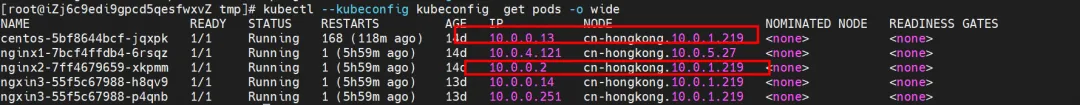

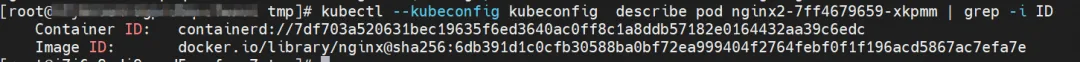

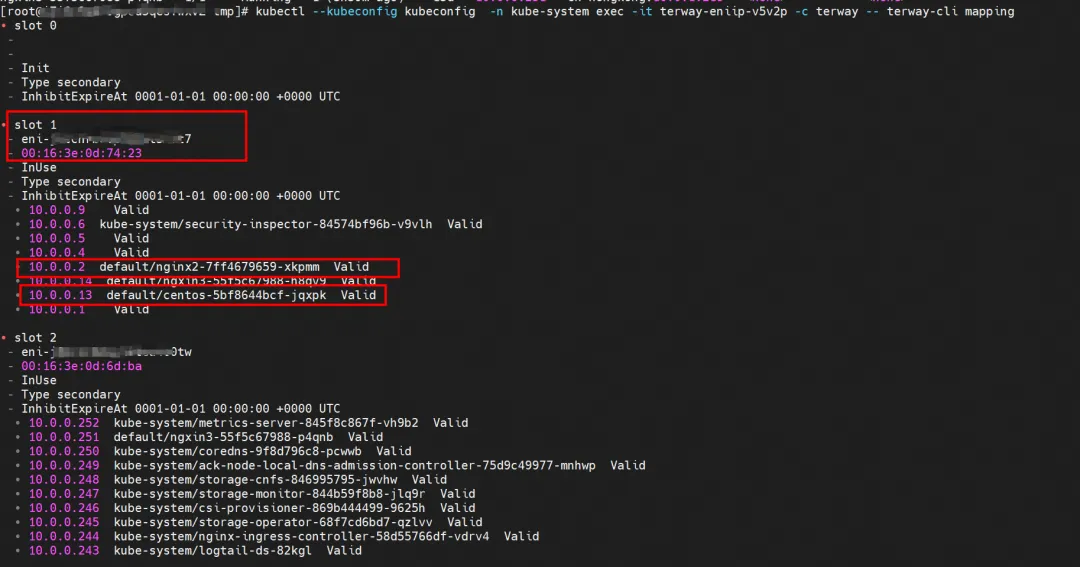

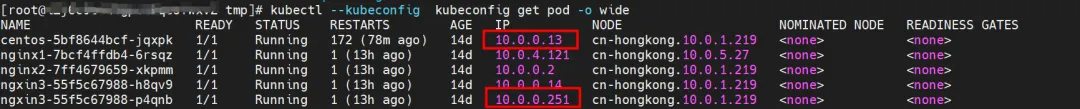

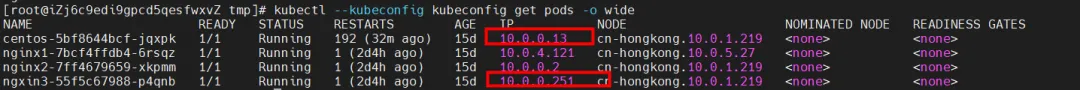

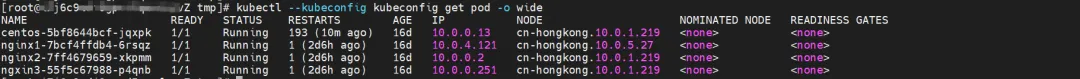

nginx2-7ff4679659-xkpmm exists on node xxx.10.0.1.219, with IP address 10.0.0.2.

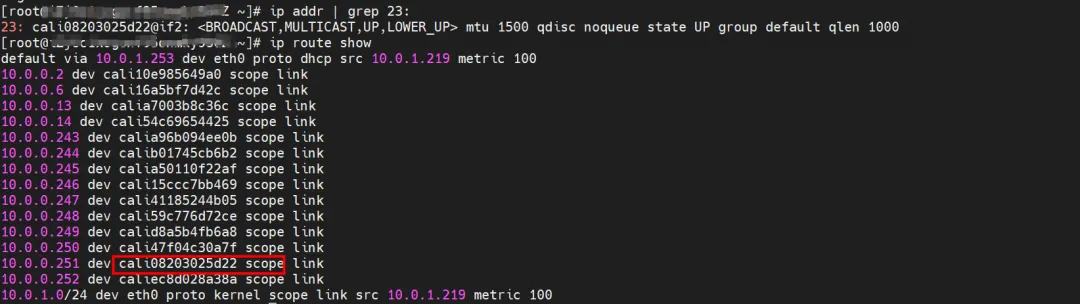

kernel routing

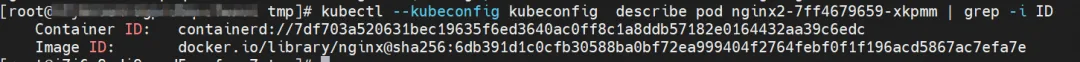

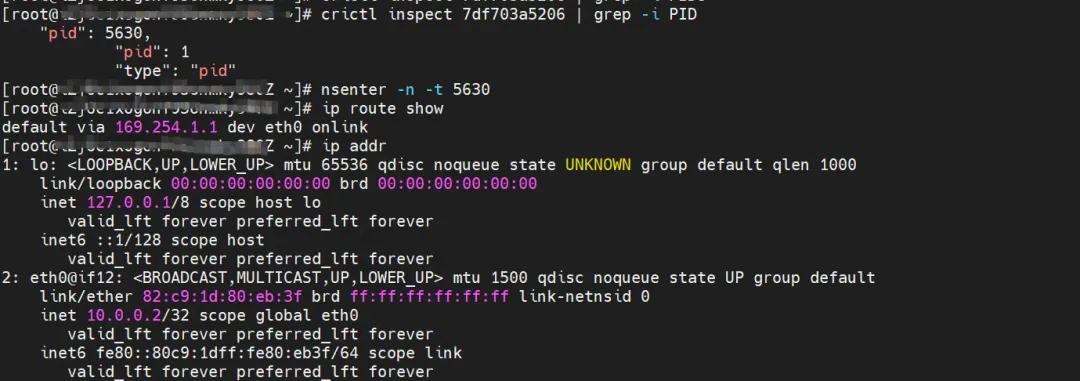

nginx2-7ff4679659-xkpmm, IP address 10.0.0.2, the PID of the container in the host is 5630, and the container network namespace has a default route pointing to the container eth0.

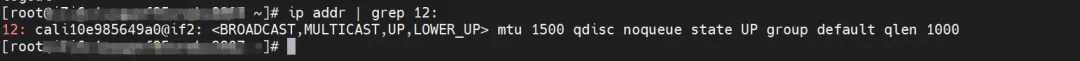

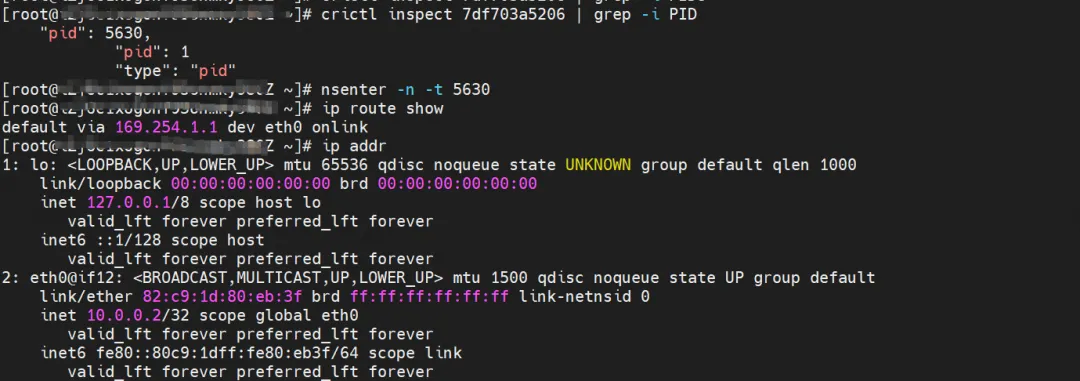

The veth peer of the container's eth0 in the ECS OS is cali10e985649a0. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.
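One common way to confirm which host interface is the peer of a Pod's eth0 is to read `/sys/class/net/eth0/iflink` inside the Pod's network namespace and then look for the interface with that index in the host's `ip -o link` output. The sketch below shows only the matching step. The sample output is shortened and hypothetical (including the index 7), and the helper name is made up for illustration.

```python
import re
from typing import Optional


def peer_name(peer_ifindex: int, ip_link_output: str) -> Optional[str]:
    """Find the host interface whose index equals the Pod eth0's iflink."""
    for line in ip_link_output.splitlines():
        # `ip -o link` lines start with "<index>: <name>[@<peer>]: <flags> ..."
        m = re.match(r"\s*(\d+):\s+([^:@\s]+)", line)
        if m and int(m.group(1)) == peer_ifindex:
            return m.group(2)
    return None


# Hypothetical, shortened `ip -o link` output from the ECS host.
SAMPLE = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP
7: cali10e985649a0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
"""

# Assume `cat /sys/class/net/eth0/iflink` inside the Pod printed 7.
print(peer_name(7, SAMPLE))
```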

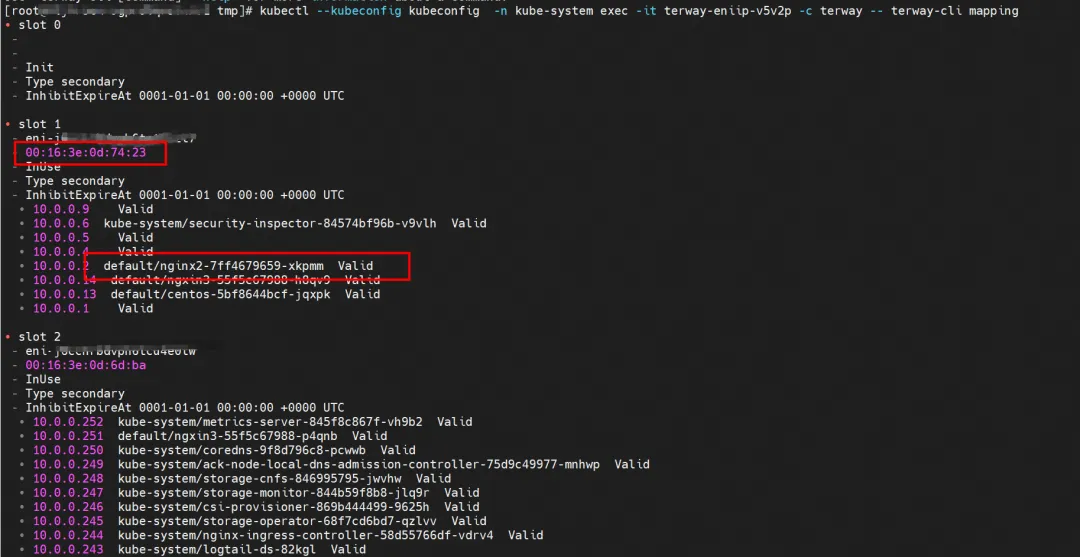

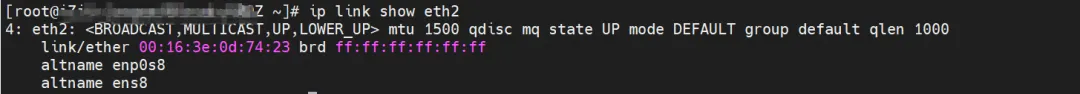

The following command shows that the IP address 10.0.0.2 of nginx2-7ff4679659-xkpmm is assigned by Terway to the secondary ENI eth2.

kubectl --kubeconfig kubeconfig -n kube-system exec -it terway-eniip-v5v2p -c terway -- terway-cli mapping

summary

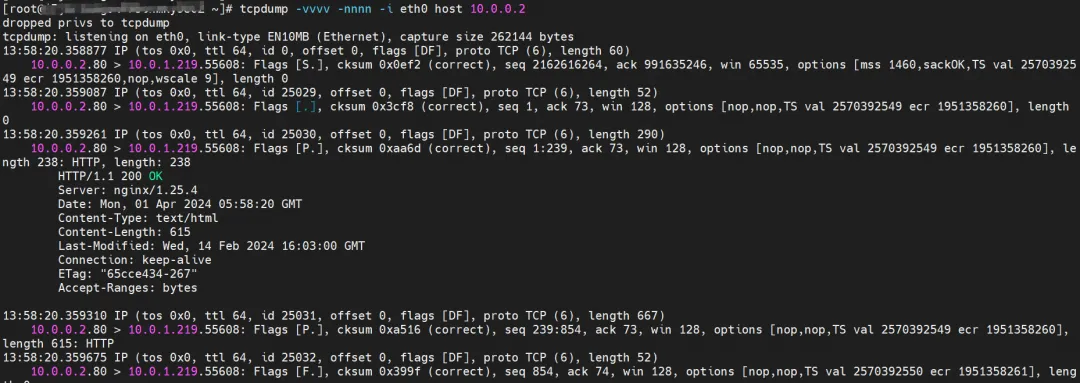

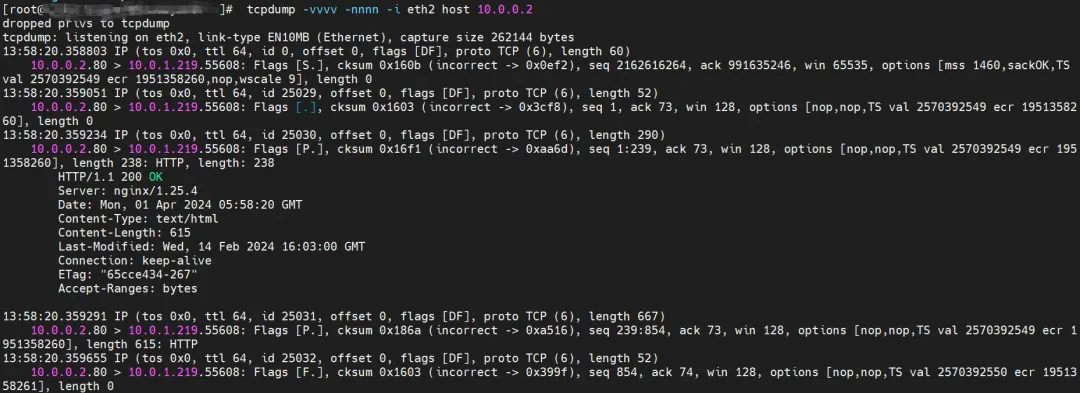

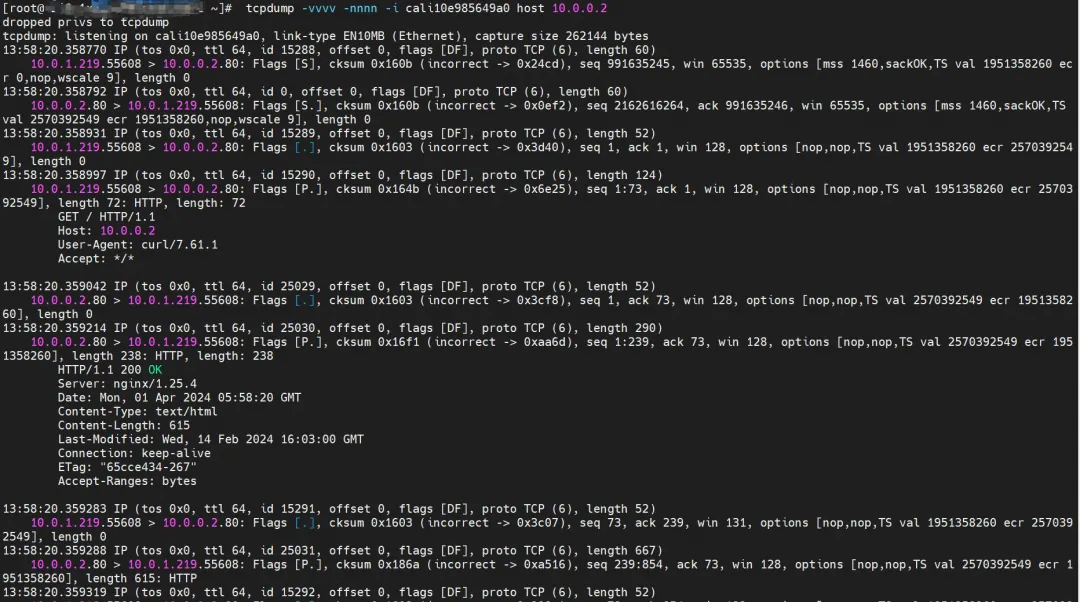

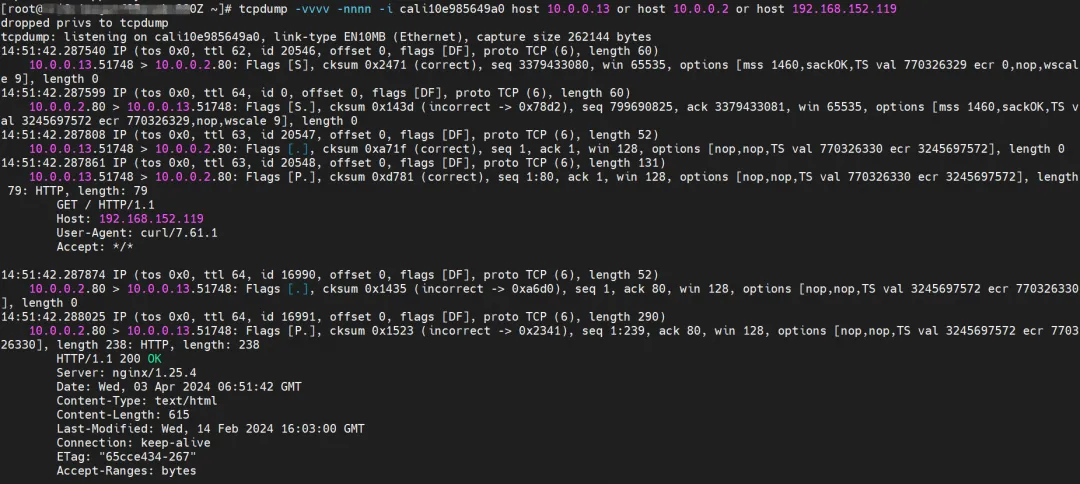

ECS's eth0 captured the data returned from nginx2-7ff4679659-xkpmm, but did not capture the sent data.

ECS's eth1 also captured the data returned from nginx2-7ff4679659-xkpmm, but did not capture the sent data.

cali10e985649a0 can capture sent and received packets.

The subsequent summary will no longer show data packet observations.

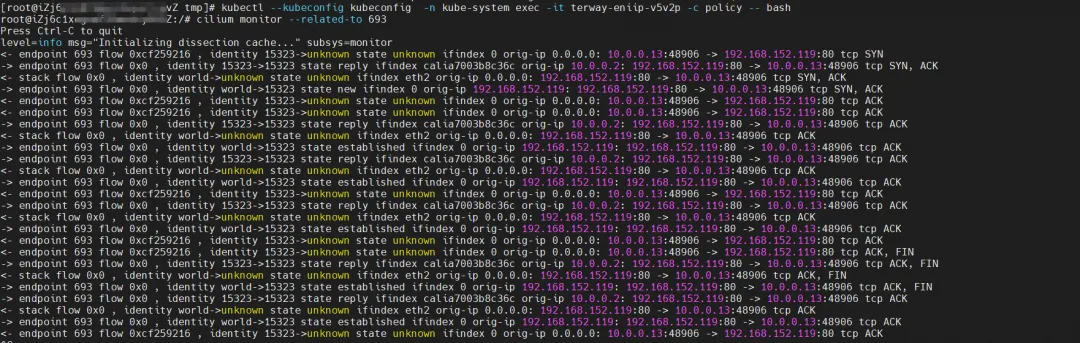

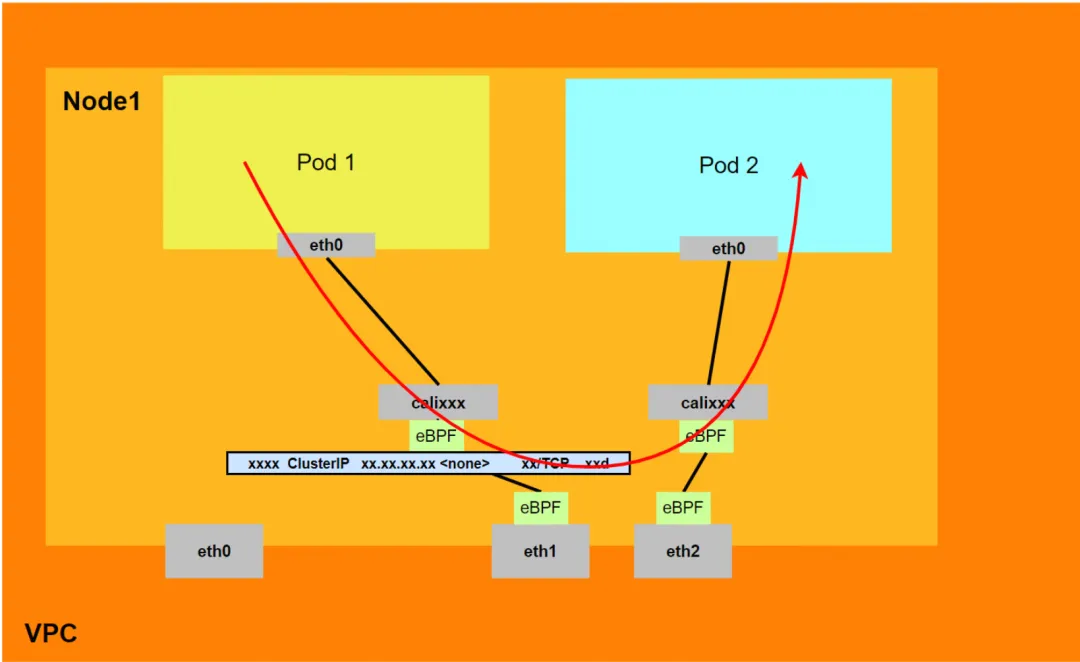

Data link forwarding diagram (Aliyun2&Aliyun3):

- The entire link passes through the network protocol stack of ECS and Pod.

- The entire request link is: ECS OS -> calixxx -> ECS Pod eth0

Scenario 2: Access Pod IP, mutual access between Pods on the same node (Pods belong to the same ENI)

environment

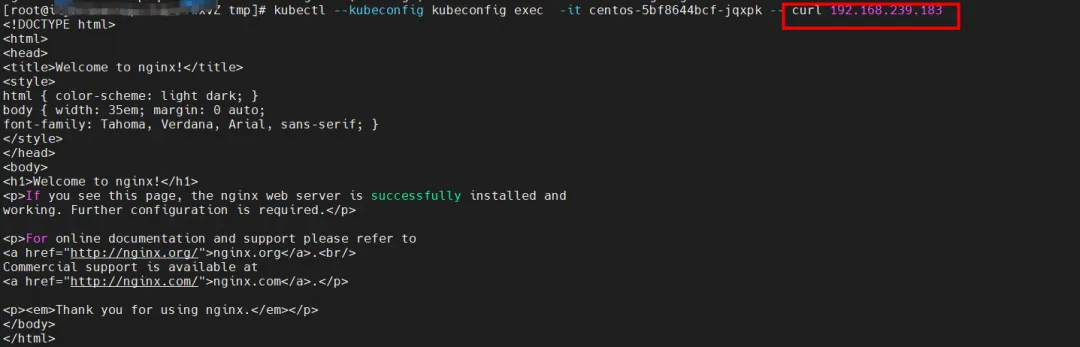

nginx2-7ff4679659-xkpmm, IP address 10.0.0.2 and centos-5bf8644bcf-jqxpk, IP address 10.0.0.13 exist on the xxx.10.0.1.219 node.

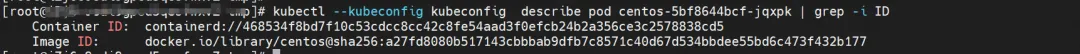

kernel routing

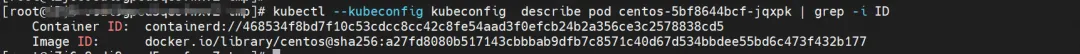

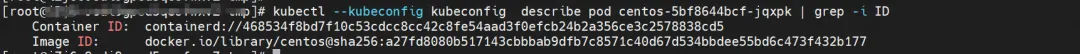

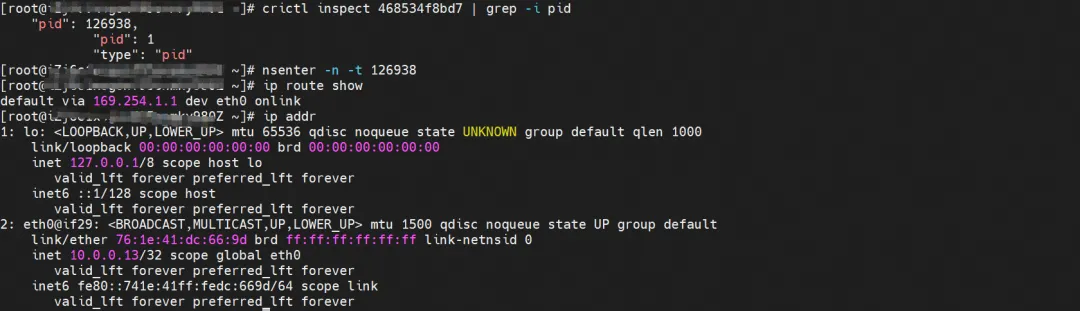

centos-5bf8644bcf-jqxpk, IP address 10.0.0.13, the PID of the container in the host is 126938, and the container network namespace has a default route pointing to the container eth0.

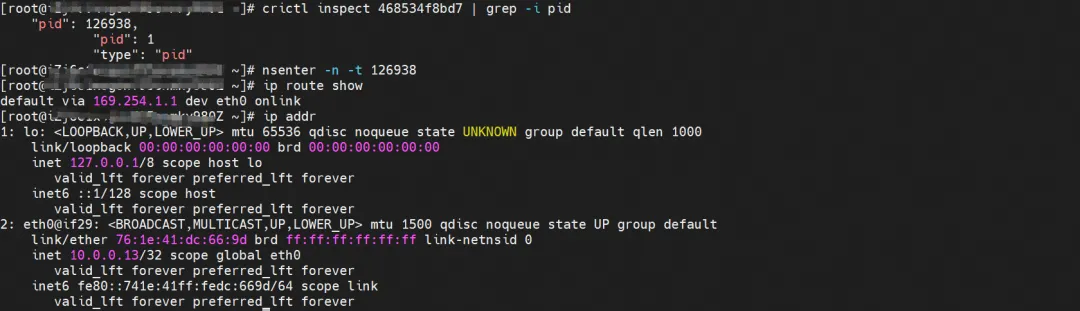

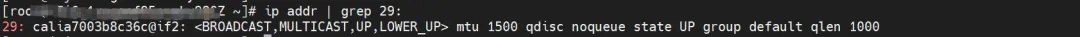

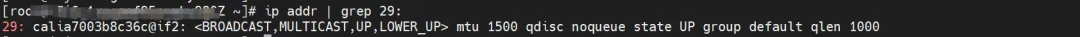

The veth peer of this container's eth0 in the ECS OS is calia7003b8c36c. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

nginx2-7ff4679659-xkpmm, IP address 10.0.0.2, the PID of the container in the host is 5630, and the container network namespace has a default route pointing to the container eth0.

The veth peer of this container's eth0 in the ECS OS is cali10e985649a0. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

The following command shows that nginx2-7ff4679659-xkpmm and centos-5bf8644bcf-jqxpk are assigned by Terway to the same secondary ENI, eth2.

kubectl --kubeconfig kubeconfig -n kube-system exec -it terway-eniip-v5v2p -c terway -- terway-cli mapping

summary

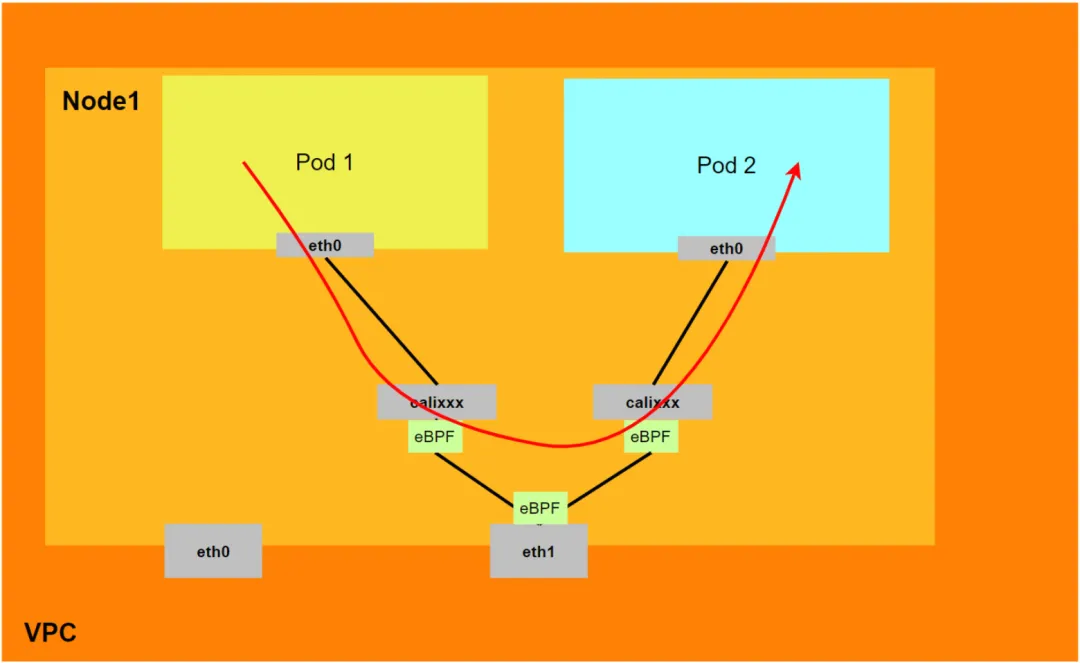

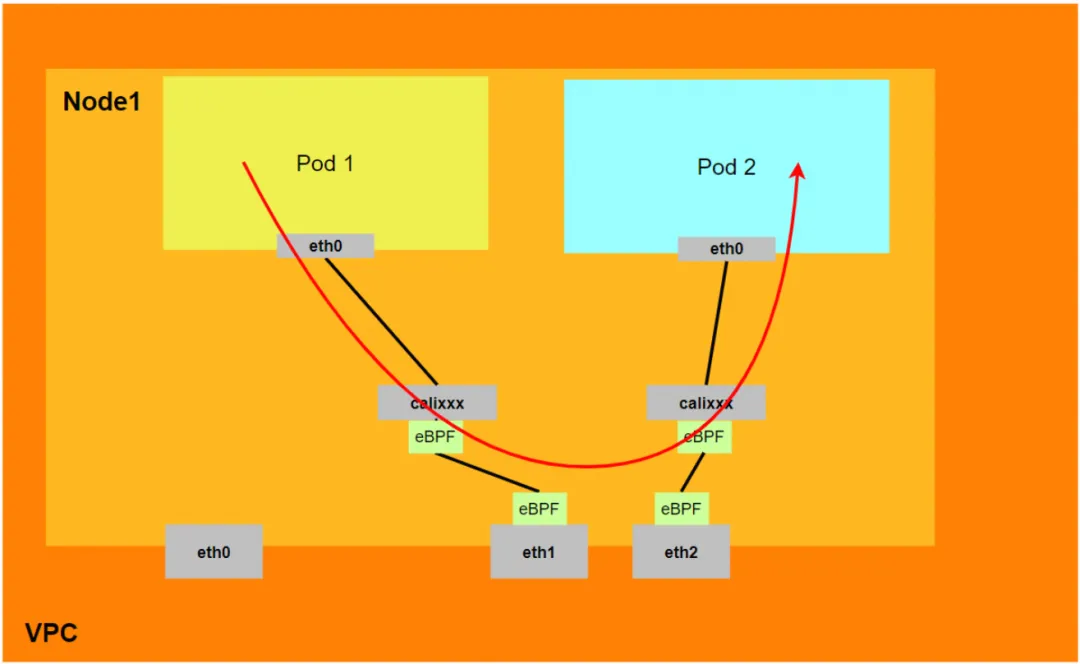

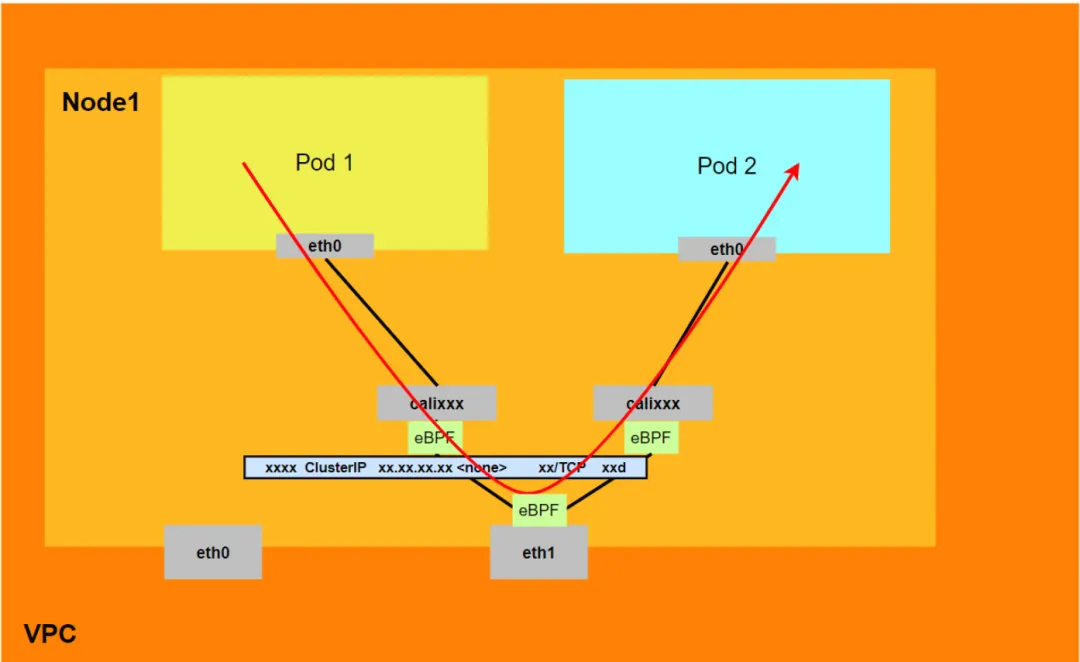

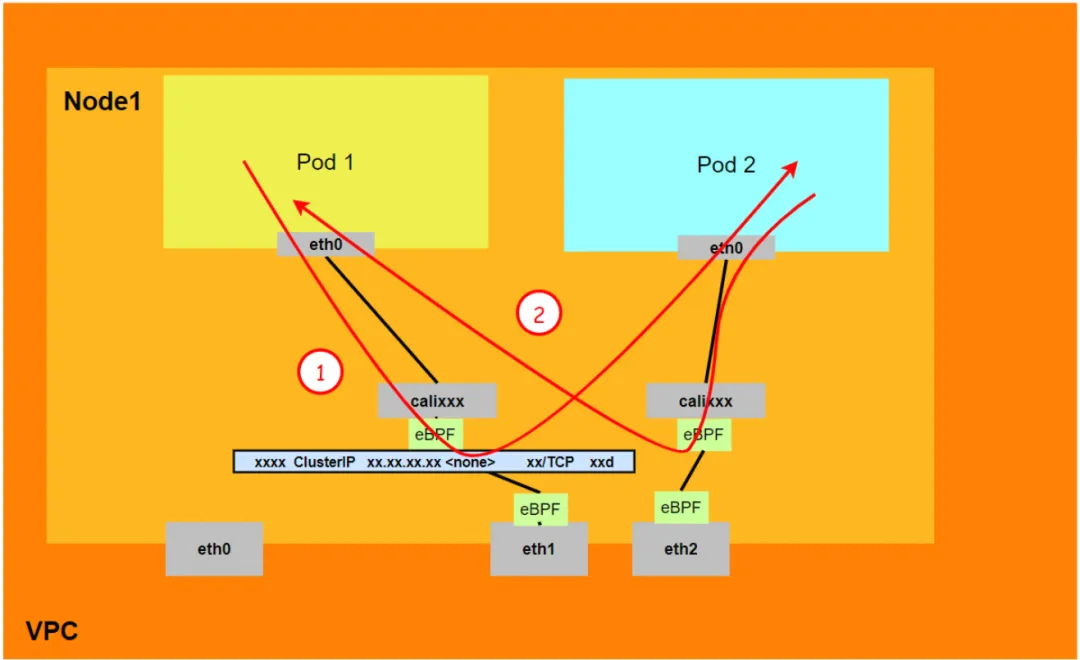

Aliyun2:

- Will not go through the secondary network card assigned to the Pod.

- The entire link passes through the Pod's network protocol stack. When the link is forwarded to the peer through the Pod's network namespace, it will be accelerated by eBPF and bypass the OS protocol stack.

- The entire request link is: ECS Pod1 -> Pod1 calixxx -> Pod2 calixxx -> ECS Pod2.

Aliyun3:

- Will not go through the secondary network card assigned to the Pod.

- The entire link will be accelerated directly to the destination Pod through the eBPF ingress and will not go through the destination Pod's calixxx network card.

- The entire request link is:

  - Outbound direction: ECS Pod1 -> Pod1 calixxx -> ECS Pod2.

  - Return direction: ECS Pod2 -> Pod2 calixxx -> ECS Pod1.
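The difference between the two OS images for same-node Pod-to-Pod traffic comes down to whether the packet still crosses the destination Pod's calixxx interface. The sketch below encodes the hop lists from this summary so they can be compared or printed side by side. The function name and the string labels are illustrative only.

```python
from typing import List


def same_node_path(os_image: str, src: str = "Pod1", dst: str = "Pod2") -> List[str]:
    """Hops for one direction of same-node Pod-to-Pod traffic."""
    hops = [f"ECS {src}", f"{src} calixxx"]
    if os_image == "Aliyun2":
        # Partial acceleration: the packet still crosses the peer's veth.
        hops.append(f"{dst} calixxx")
    elif os_image != "Aliyun3":
        raise ValueError(f"unknown OS image: {os_image}")
    # On Aliyun3 the packet is redirected straight into the destination Pod.
    hops.append(f"ECS {dst}")
    return hops


for image in ("Aliyun2", "Aliyun3"):
    print(image, "request:", " -> ".join(same_node_path(image)))
    print(image, "reply:  ", " -> ".join(same_node_path(image, "Pod2", "Pod1")))
```

When planning packet captures, this means the destination calixxx interface is a useful observation point on Aliyun2 but shows nothing for the inbound direction on Aliyun3.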

Scenario 3: Access Pod IP, mutual access between Pods on the same node (Pods belong to different ENIs)

environment

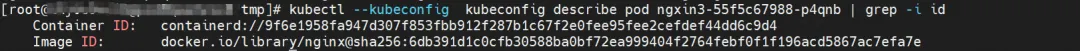

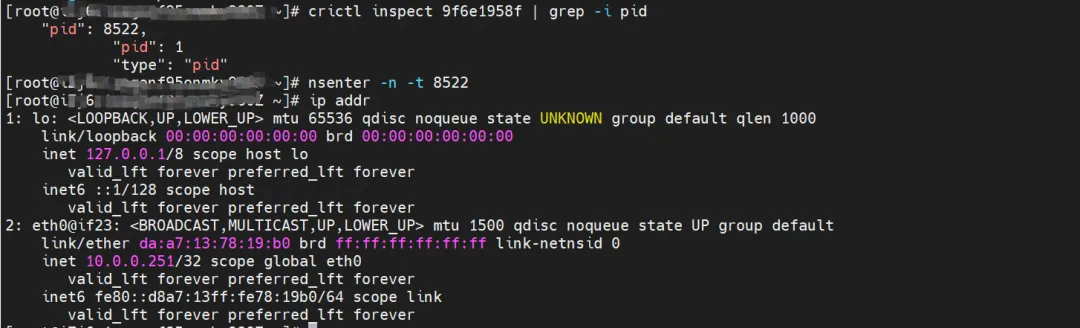

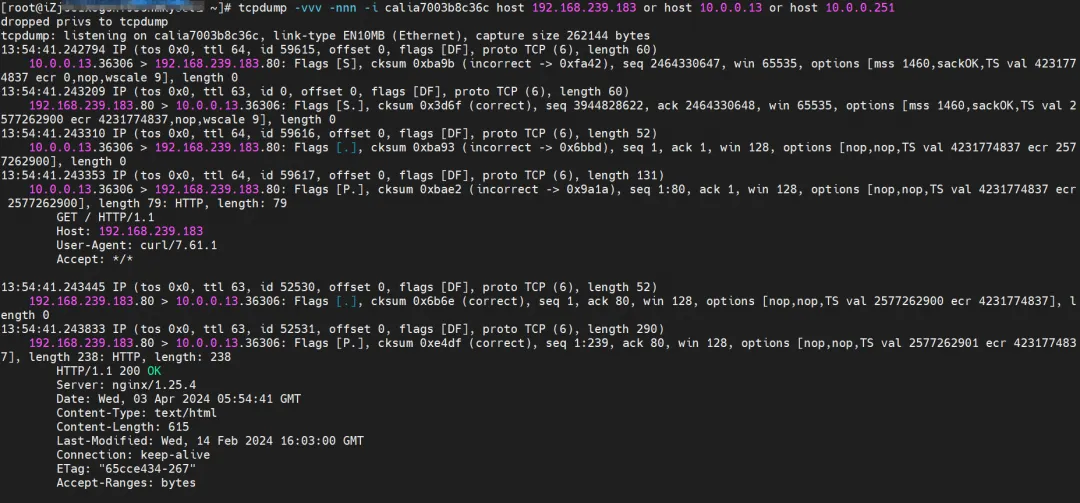

There are two Pods, ngxin3-55f5c67988-p4qnb and centos-5bf8644bcf-jqxpk, on the xxx.10.0.1.219 node, with IP addresses 10.0.0.251 and 10.0.0.13 respectively.

On the Terway Pod of this node, the terway-cli mapping command shows that the two IPs (10.0.0.251 and 10.0.0.13) belong to different ENIs, which appear as eth1 and eth2 at the OS level.
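Whether two Pods share an ENI is what separates Scenario 2 from Scenario 3. The sketch below assumes the IP-to-ENI relationship has already been read from the terway-cli mapping output and copied into a dictionary by hand. The dictionary layout is hypothetical and does not reflect the command's real output format; the IP and interface assignments follow what this article reports.

```python
from typing import Dict, List, Optional

# Hypothetical, simplified view: host interface name -> Pod IPs on that ENI.
ENI_TO_IPS: Dict[str, List[str]] = {
    "eth1": ["10.0.0.251"],
    "eth2": ["10.0.0.2", "10.0.0.13"],
}


def eni_of(pod_ip: str, mapping: Dict[str, List[str]] = ENI_TO_IPS) -> Optional[str]:
    """Return the ENI that owns the Pod IP, or None if it is not on this node."""
    for eni, ips in mapping.items():
        if pod_ip in ips:
            return eni
    return None


def same_eni(ip_a: str, ip_b: str, mapping: Dict[str, List[str]] = ENI_TO_IPS) -> bool:
    """True only if both IPs are known and allocated from the same ENI."""
    eni_a, eni_b = eni_of(ip_a, mapping), eni_of(ip_b, mapping)
    return eni_a is not None and eni_a == eni_b


print(same_eni("10.0.0.2", "10.0.0.13"))    # Scenario 2: same ENI
print(same_eni("10.0.0.251", "10.0.0.13"))  # Scenario 3: different ENIs
```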

kernel routing

centos-5bf8644bcf-jqxpk, IP address 10.0.0.13, the PID of the container in the host is 126938, and the container network namespace has a default route pointing to the container eth0.

The veth peer of this container's eth0 in the ECS OS is calia7003b8c36c. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

ngxin3-55f5c67988-p4qnb, IP address 10.0.0.251, the PID of the container in the host is 5630, and the container network namespace has a default route pointing to the container eth0.

The veth peer of this container's eth0 in the ECS OS is cali08203025d22. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

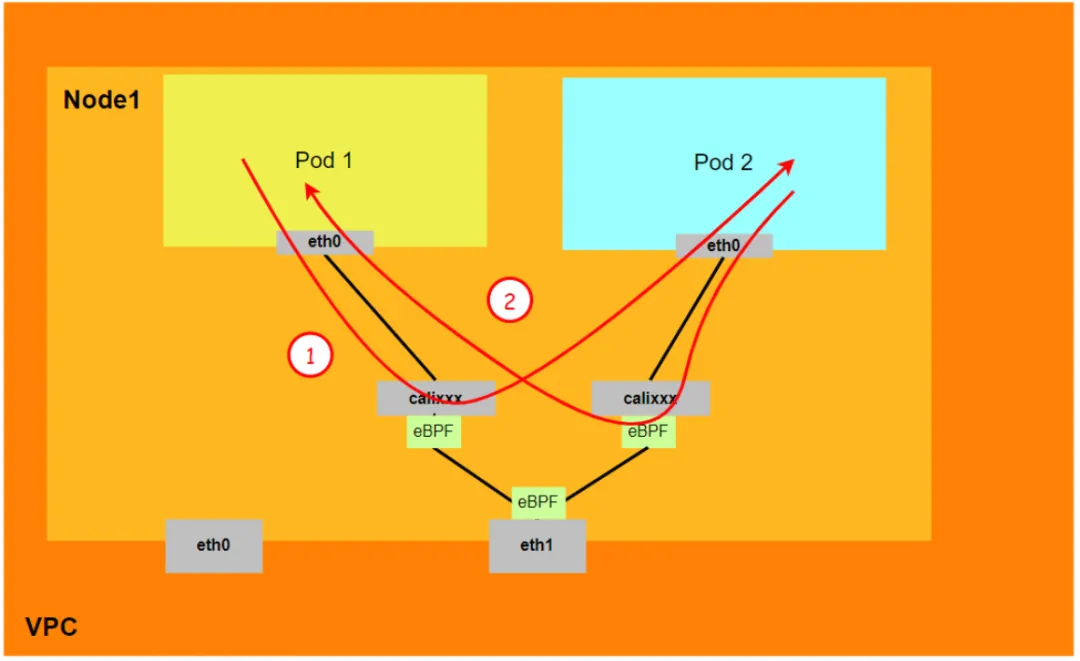

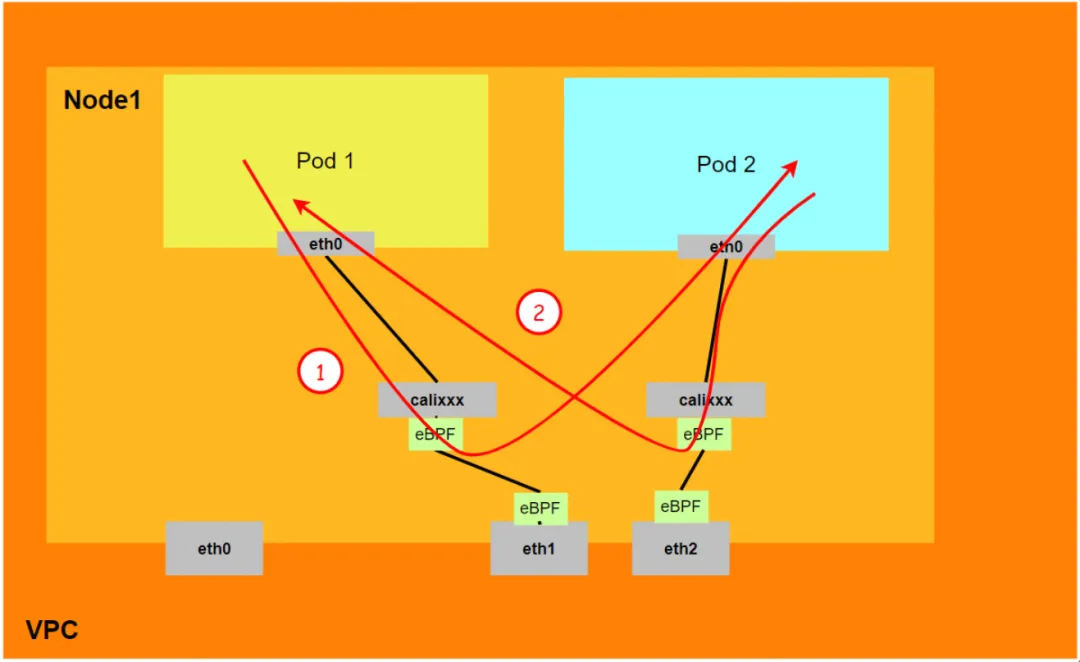

summary

Aliyun2:

- Will not go through the secondary network card assigned to the Pod.

- The entire link passes through the Pod's network protocol stack. When the link is forwarded to the peer through the Pod's network namespace, it will be accelerated by eBPF and bypass the OS protocol stack.

- The entire request link is: ECS Pod1 -> Pod1 calixxx -> Pod2 calixxx -> ECS Pod2.

Aliyun3:

- Will not go through the secondary network card assigned to the Pod.

- The entire link will be accelerated directly to the destination Pod through the eBPF ingress and will not go through the destination Pod's calixxx network card.

- The entire request link is:

  - Outbound direction: ECS Pod1 -> Pod1 calixxx -> ECS Pod2.

  - Return direction: ECS Pod2 -> Pod2 calixxx -> ECS Pod1.

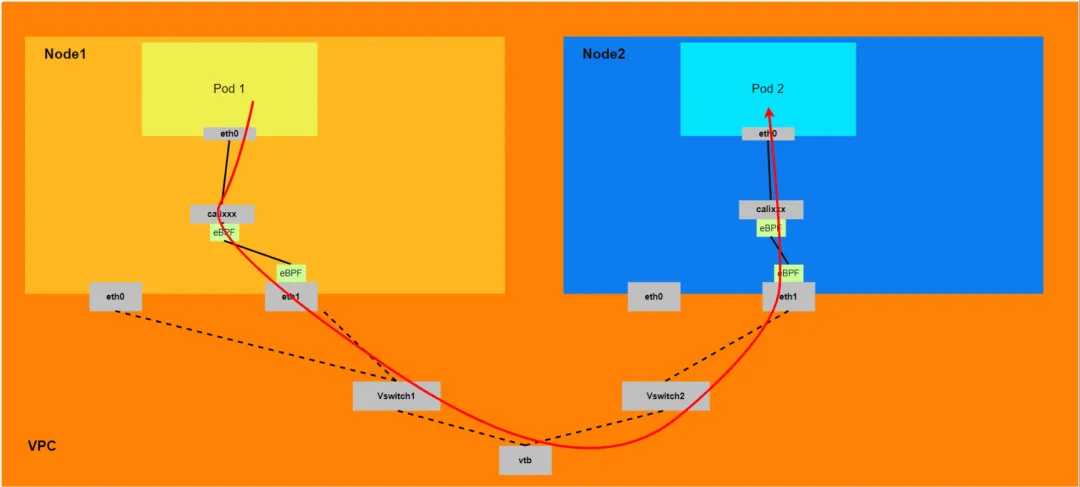

Scenario 4: Access Pod IP, mutual access between Pods between different nodes

environment

centos-5bf8644bcf-jqxpk exists on the xxx.10.0.1.219 node, and the IP address is 10.0.0.13.

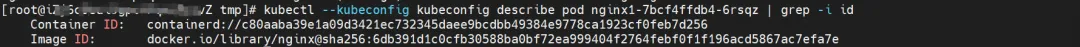

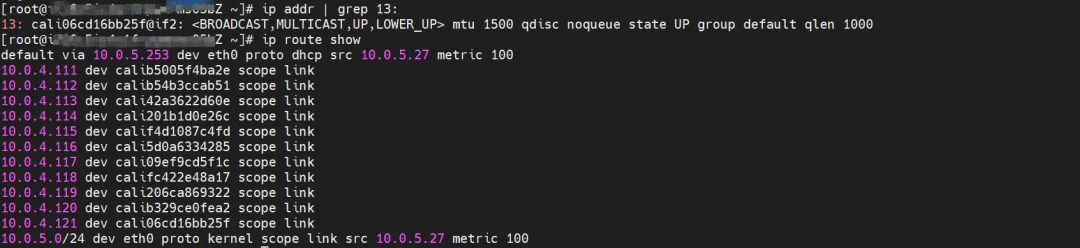

nginx1-7bcf4ffdb4-6rsqz exists on the xxx.10.0.5.27 node, and the IP address is 10.0.4.121.

The terway-cli show factory command shows that the IP 10.0.0.13 of centos-5bf8644bcf-jqxpk belongs to the ENI with MAC address 00:16:3e:0d:74:23 on xxx.10.0.1.219.

Likewise, the IP 10.0.4.121 of nginx1-7bcf4ffdb4-6rsqz belongs to the ENI with MAC address 00:16:3e:0c:ef:6c on xxx.10.0.5.27.

kernel routing

centos-5bf8644bcf-jqxpk, IP address 10.0.0.13, the PID of the container in the host is 126938, and the container network namespace has a default route pointing to the container eth0.

The veth peer of this container's eth0 in the ECS OS is calia7003b8c36c. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

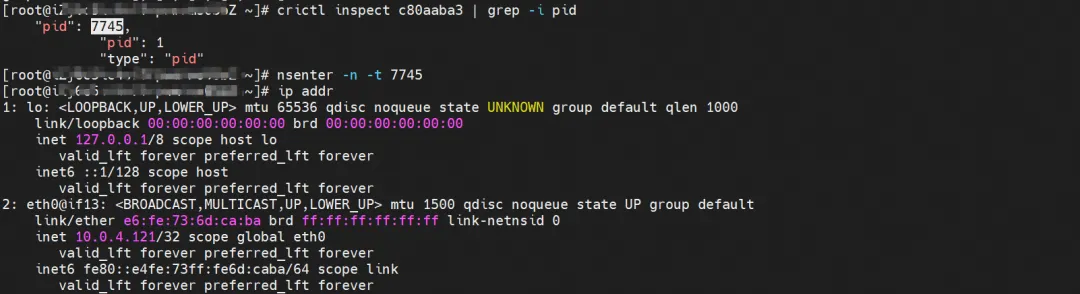

nginx1-7bcf4ffdb4-6rsqz, IP address 10.0.4.121, the PID of the container in the host is 7745, and the container network namespace has a default route pointing to the container eth0.

The veth peer of this container's eth0 in the ECS OS is cali06cd16bb25f. The ECS OS has a route to the Pod IP whose next hop is calixxx. As described above, each calixxx interface forms a veth pair with eth0 inside the corresponding Pod.

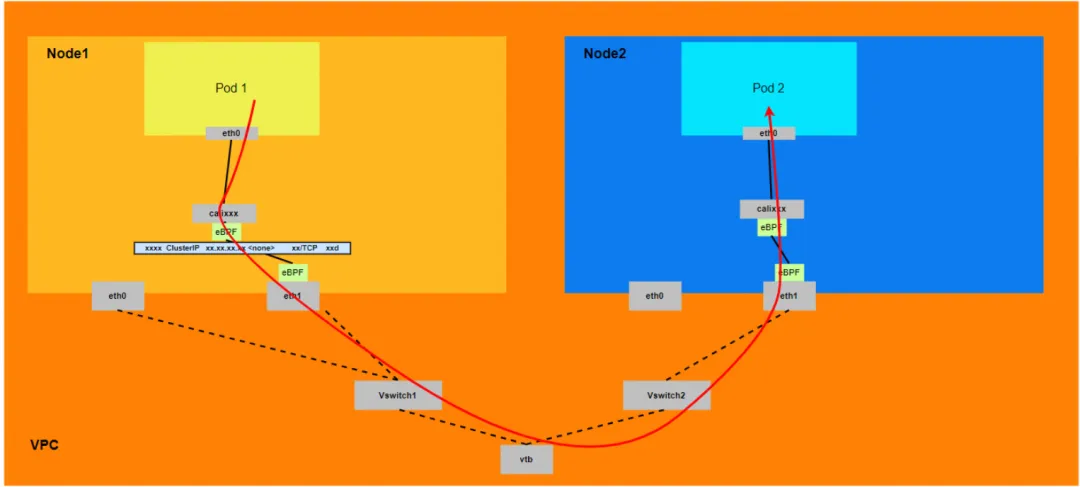

summary

Aliyun2:

- It will pass through the network namespace of the host OS, but the protocol stack link will be accelerated by eBPF.

- The entire link needs to go out of ECS from the ENI network card to which the client Pod belongs and then enter ECS from the ENI network card to which the destination Pod belongs.

- The entire request link is ECS1 Pod1 -> ECS1 Pod1 calixxx -> ECS1 ENI ethx -> VPC -> ECS2 ENI ethx -> ECS2 Pod2 calixxx -> ECS2 Pod2.

Aliyun3:

- The entire link leaves the source ECS through the ENI to which the client Pod belongs and then enters the destination ECS through the ENI to which the destination Pod belongs.

- The entire request link is:

  - Outbound direction: ECS1 Pod1 -> ECS1 Pod1 calixxx -> ECS1 ENI ethx -> VPC -> ECS2 ENI ethx -> ECS2 Pod2.

  - Return direction: ECS2 Pod2 -> ECS2 Pod2 calixxx -> ECS2 ENI ethx -> VPC -> ECS1 ENI ethx -> ECS1 Pod1.
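When troubleshooting a cross-node link, the hops listed above translate directly into a set of capture points. The sketch below builds one tcpdump command per observation point for the request direction on Aliyun2. The helper name is hypothetical, `ethx` is left as a placeholder for whichever ENI the Pod IP belongs to, and the commands are only printed, not executed.

```python
from typing import List, Tuple


def capture_commands(pod_ip: str, hops: List[Tuple[str, str]]) -> List[str]:
    """Build one tcpdump command per (node, interface) observation point."""
    return [
        f"[{node}] tcpdump -i {iface} -nn host {pod_ip}"
        for node, iface in hops
    ]


# Observation points for the request direction on Aliyun2 in this scenario.
HOPS = [
    ("xxx.10.0.1.219", "calia7003b8c36c"),  # client Pod's veth peer
    ("xxx.10.0.1.219", "ethx"),             # ENI of the client Pod
    ("xxx.10.0.5.27", "ethx"),              # ENI of the destination Pod
    ("xxx.10.0.5.27", "cali06cd16bb25f"),   # destination Pod's veth peer
]

for cmd in capture_commands("10.0.4.121", HOPS):
    print(cmd)
```

On Aliyun3 the last capture point would be expected to stay silent for inbound packets, since the link enters the destination Pod without crossing its calixxx interface.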

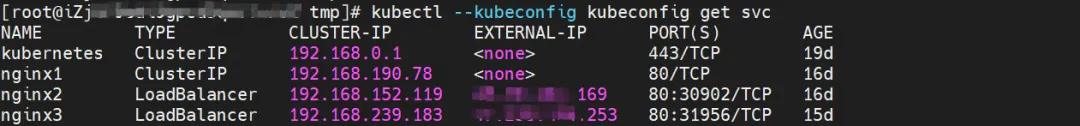

Scenario 5: The SVC Cluster IP/External IP accessed by the Pod in the cluster, the SVC backend Pod and the client Pod belong to the same ECS and the same ENI

environment

nginx2-7ff4679659-xkpmm, IP address 10.0.0.2 and centos-5bf8644bcf-jqxpk, IP address 10.0.0.13 exist on the xxx.10.0.1.219 node.

Among them, nginx2-7ff4679659-xkpmm Pod is the endpoint of SVC nginx2.

kernel routing

Kernel routing is similar to Scenario 2 and will not be described here.

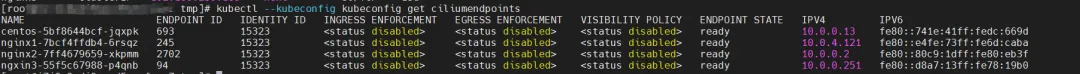

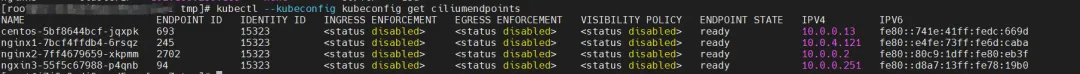

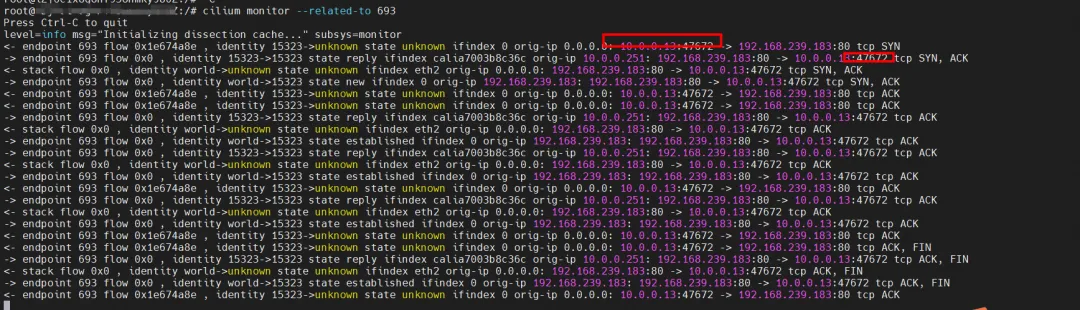

Regarding eBPF: in DataPath V2, eBPF is attached to the calixxx interface at the OS level, not inside the Pod. Using the cilium CLI to query eBPF state with the command shown in the figures, you can see that the identity IDs of centos-5bf8644bcf-jqxpk and nginx2-7ff4679659-xkpmm are 693 and 2702 respectively.

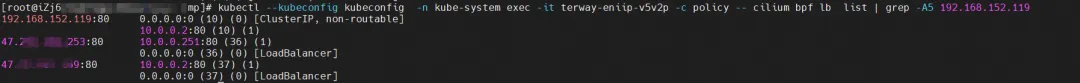

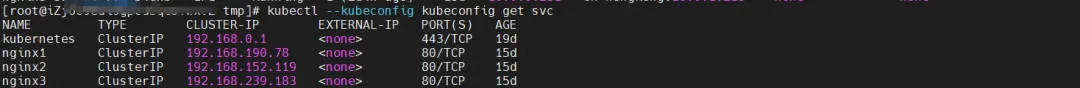

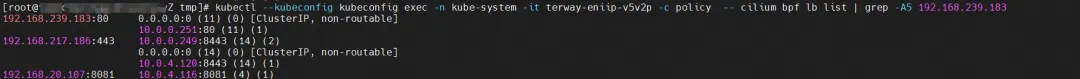

Find the Terway Pod on the ECS where the Pod is located; here it is terway-eniip-v5v2p. Running the cilium bpf lb list | grep -A5 192.168.152.119 command in the Terway Pod shows that the backend recorded in eBPF for Cluster IP 192.168.152.119:80 is 10.0.0.2:80.

summary

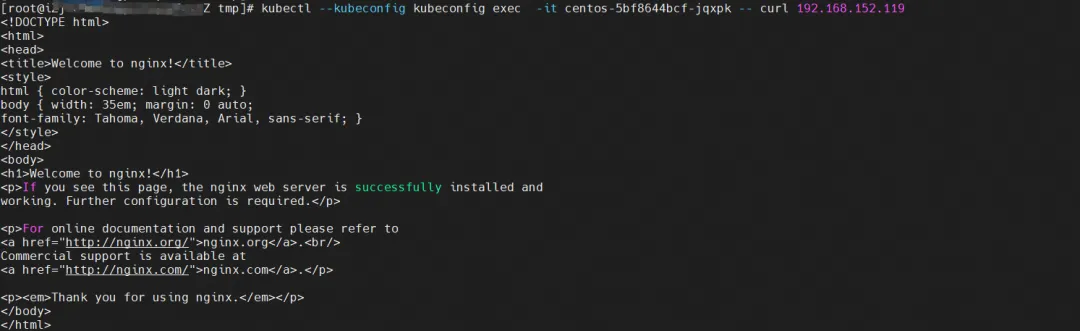

Access SVC from the client Pod centos-5bf8644bcf-jqxpk.

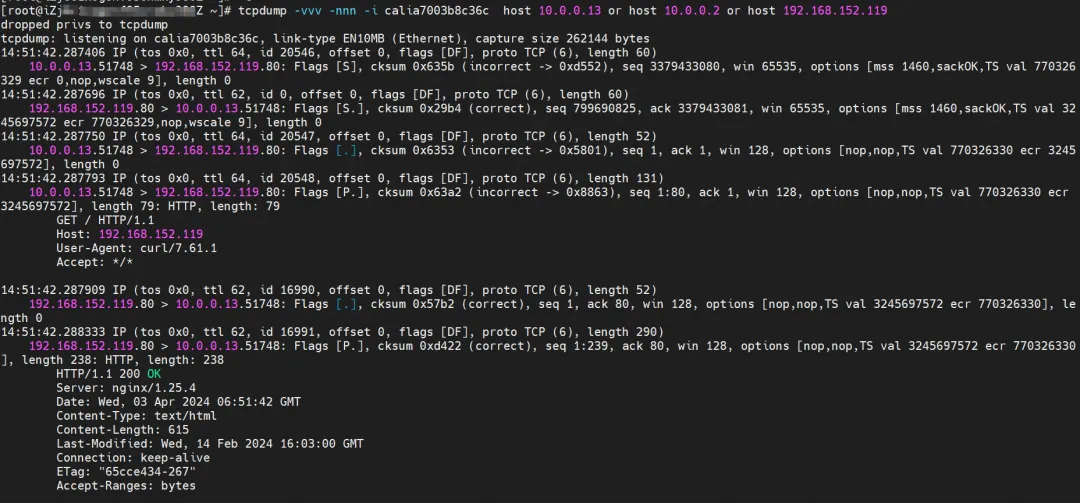

On the calia7003b8c36c interface of the client Pod centos-6c48766848-znkl8, the SVC IP and the client's Pod IP can be captured.

On the secondary ENI to which Pod centos-6c48766848-znkl8 belongs, no related traffic is captured, which shows that the traffic is handled by eBPF at the client's calixxx interface and never reaches the ENI.

On cali10e985649a0, the interface of Pod nginx2-7ff4679659-xkpmm, only the Pod IPs of centos and nginx can be captured.

Cilium provides a monitor function. Using cilium monitor --related-to <endpoint ID>, you can see that the source Pod IP accesses the SVC IP 192.168.152.119, which is then resolved to the SVC backend Pod IP 10.0.0.2. This shows that the SVC IP is translated and forwarded directly at the tc layer.

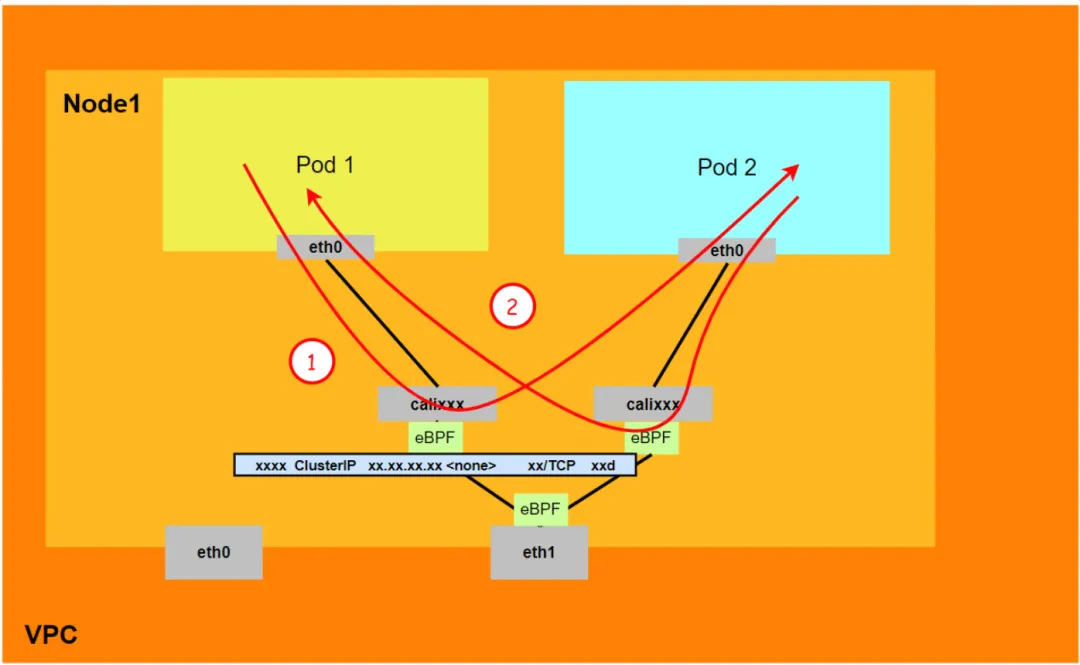

Aliyun2:

- The entire link passes through the Pod's network protocol stack. When the link is forwarded to the peer through the Pod's network namespace, it will be accelerated by eBPF and bypass the OS protocol stack.

- The entire link request does not go through the ENI assigned by the Pod.

- The SVC IP is converted into the IP of the SVC backend Pod through eBPF on the calixxx network card of the client Pod, and subsequent nodes cannot capture the SVC IP.

- The entire request link is: ECS Pod1 -> Pod1 calixxx -> Pod2 calixxx -> ECS Pod2.

Aliyun3:

- The entire link passes through the Pod's network protocol stack. When the link is forwarded to the peer through the Pod's network namespace, it will be accelerated by eBPF and bypass the OS protocol stack.

- The entire link request does not go through the ENI assigned to the Pod.

- The SVC IP is converted into the IP of the SVC backend Pod through eBPF on the calixxx network card of the client Pod, and subsequent nodes cannot capture the SVC IP.

- The entire link will be accelerated directly to the destination Pod through the eBPF ingress and will not go through the destination Pod's calixxx network card.

- The entire request link is:

  - Outbound direction: ECS Pod1 -> Pod1 calixxx -> ECS Pod2.

  - Return direction: ECS Pod2 -> Pod2 calixxx -> ECS Pod1.

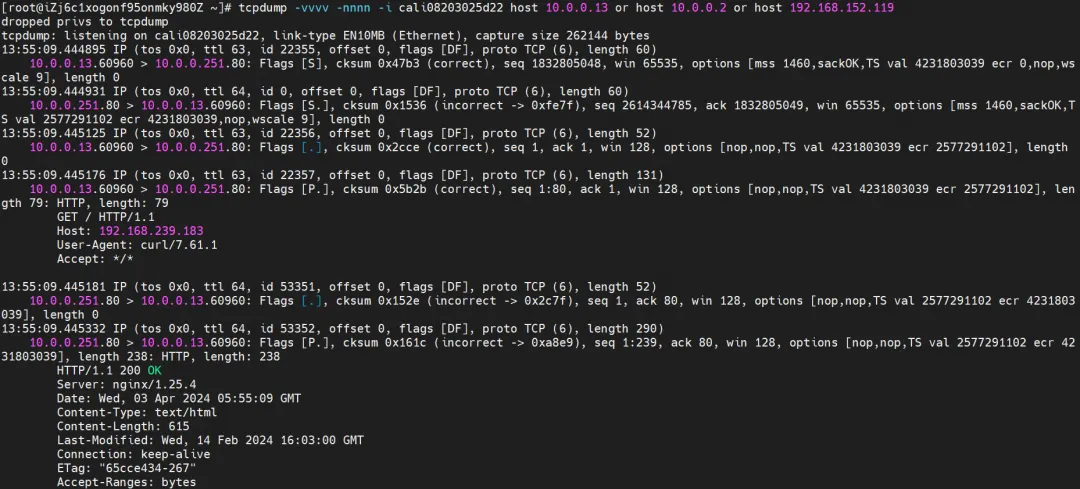

Scenario 6: The SVC Cluster IP/External IP accessed by the Pod in the cluster, the SVC backend Pod and the client Pod belong to the same ECS but different ENI

environment

There are ngxin3-55f5c67988-p4qnb, IP address 10.0.0.251 and centos-5bf8644bcf-jqxpk, IP address 10.0.0.13 on the xxx.10.0.1.219 node.

Among them, ngxin3-55f5c67988-p4qnb Pod is the endpoint of SVC nginx3.

kernel routing

Kernel routing is similar to Scenario 3 and will not be described here.

Regarding eBPF: in DataPath V2, eBPF is attached to the calixxx interface at the OS level, not inside the Pod. Using the cilium CLI to query eBPF state, you can see that the identity IDs of centos-5bf8644bcf-jqxpk and ngxin3-55f5c67988-p4qnb are 693 and 94 respectively.

Find the Terway Pod on the ECS where the Pod is located; here it is terway-eniip-v5v2p. Running the cilium bpf lb list | grep -A5 192.168.239.183 command in the Terway Pod shows that the backend recorded in eBPF for Cluster IP 192.168.239.183:80 is 10.0.0.251:80.

summary

Access SVC from the client Pod centos-5bf8644bcf-jqxpk.

On the calia7003b8c36c interface of the client Pod centos-6c48766848-znkl8, the SVC IP and the client's Pod IP can be captured.

On the secondary ENI of Pod centos-6c48766848-znkl8 and the secondary ENI of ngxin3-55f5c67988-p4qnb, no related traffic is captured, which shows that after eBPF on the client's calixxx interface translates the SVC IP to the SVC endpoint, the traffic is short-circuited directly to the destination calixxx interface.

On cali08203025d22, the interface of Pod ngxin3-55f5c67988-p4qnb, only the Pod IPs of centos and nginx can be captured.

Cilium provides a monitor function. Using cilium monitor --related-to <endpoint ID>, you can see that the source Pod IP accesses the SVC IP 192.168.239.183, which is then resolved to the SVC backend Pod IP 10.0.0.251. This shows that the SVC IP is translated and forwarded directly at the tc layer.

Later sections that involve SVC IP access follow the same pattern and will not repeat this explanation.

Aliyun2:

- It will pass through the network namespace of the host OS, but the protocol stack link will be accelerated by eBPF.

- The entire link request does not go through the ENI assigned by the Pod.

- The SVC IP is converted into the IP of the SVC backend Pod through eBPF on the calixxx network card of the client Pod, and subsequent nodes cannot capture the SVC IP.

- The entire request link is: ECS Pod1 -> Pod1 calixxx -> Pod2 calixxx -> ECS Pod2.

Aliyun3:

- Will not go through the secondary network card assigned to the Pod.

- The entire link will be accelerated directly to the destination Pod through the eBPF ingress and will not go through the destination Pod's calixxx network card.

- The SVC IP is converted into the IP of the SVC backend Pod through eBPF on the calixxx network card of the client Pod, and subsequent nodes cannot capture the SVC IP.

- The entire request link is:

  - Outbound direction: ECS Pod1 -> Pod1 calixxx -> ECS Pod2.

  - Return direction: ECS Pod2 -> Pod2 calixxx -> ECS Pod1.

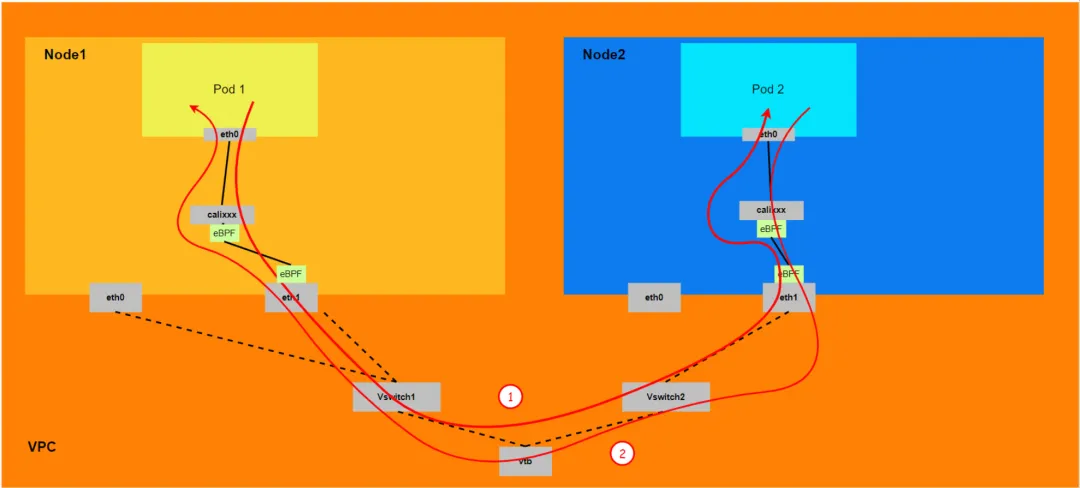

Scenario 7: The SVC Cluster IP/External IP accessed by the Pod in the cluster, the SVC backend Pod and the client Pod belong to different ECS

environment

centos-5bf8644bcf-jqxpk exists on the xxx.10.0.1.219 node, and the IP address is 10.0.0.13.

nginx1-7bcf4ffdb4-6rsqz exists on the xxx.10.0.5.27 node, and the IP address is 10.0.4.121.

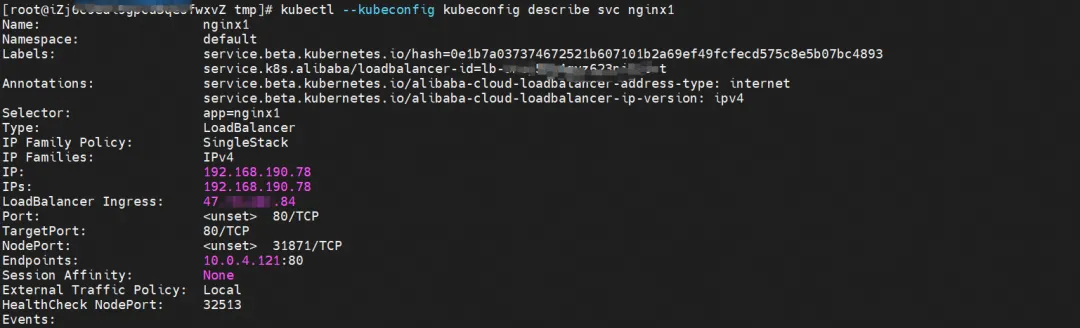

Among them, nginx1-7bcf4ffdb4-6rsqz Pod is the endpoint of SVC nginx1.

kernel routing

A Pod accesses the Cluster IP of an SVC, and the SVC's backend Pod and the client Pod are deployed on different ECSs. This architecture is similar to Scenario 4: Mutual access between Pods between different nodes [4], except that in this scenario the Pod accesses the Cluster IP of an SVC. The eBPF forwarding of the Cluster IP has already been described; for details, see Scenario 5 and Scenario 6.

summary

Aliyun2:

- It will pass through the network namespace of the host OS, but the protocol stack link will be accelerated by eBPF.

- The entire link needs to go out of ECS from the ENI network card to which the client Pod belongs and then enter ECS from the ENI network card to which the destination Pod belongs.

- The entire request link is ECS1 Pod1 -> ECS1 Pod1 calixxx -> ECS1 ENI ethx -> VPC -> ECS2 ENI ethx -> ECS2 Pod2 calixxx -> ECS2 Pod2.

Aliyun3:

- The entire link leaves the source ECS through the ENI to which the client Pod belongs and then enters the destination ECS through the ENI to which the destination Pod belongs.

- The entire request link is:

  - Outbound direction: ECS1 Pod1 -> ECS1 Pod1 calixxx -> ECS1 ENI ethx -> VPC -> ECS2 ENI ethx -> ECS2 Pod2.

  - Return direction: ECS2 Pod2 -> ECS2 Pod2 calixxx -> ECS2 ENI ethx -> VPC -> ECS1 ENI ethx -> ECS1 Pod1.

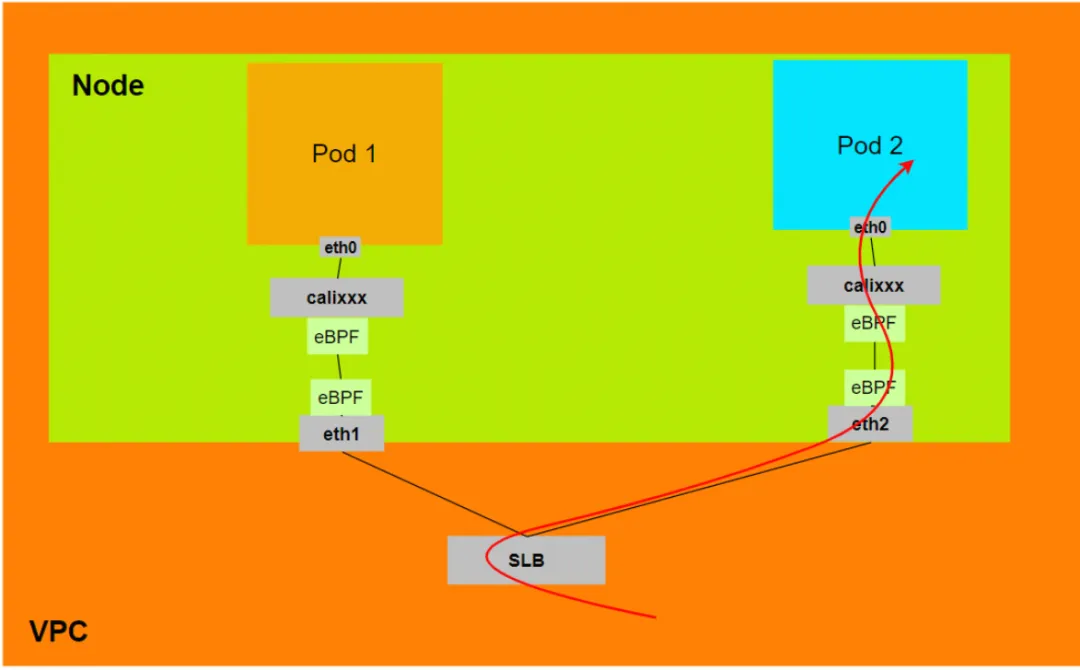

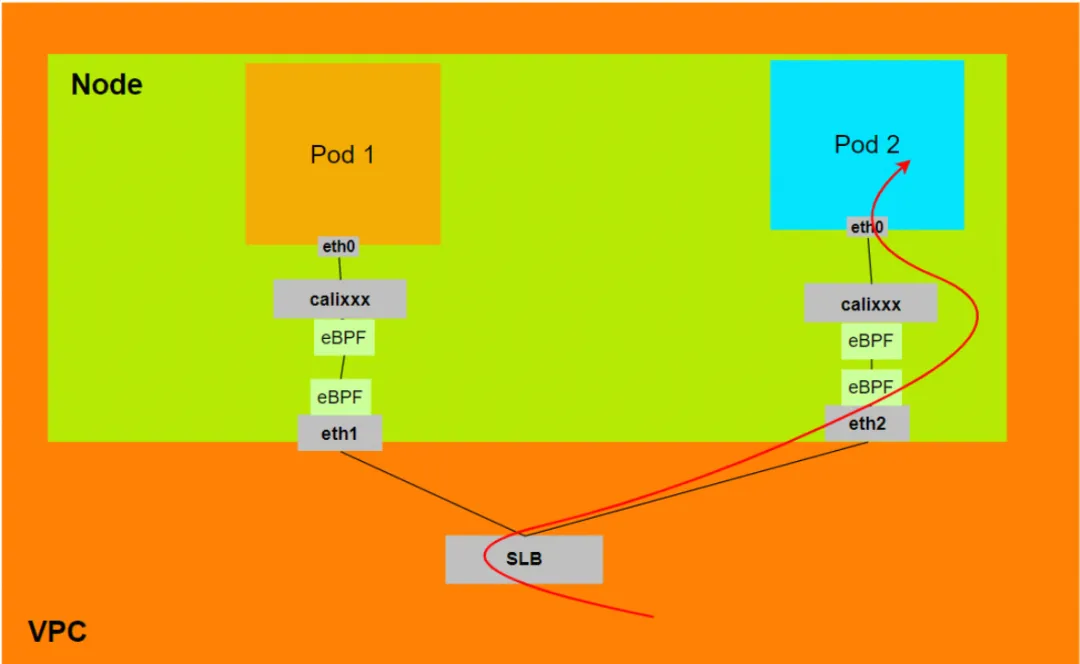

Scenario 8: Accessing SVC External IP from outside the cluster

environment

nginx1-7bcf4ffdb4-6rsqz exists on the xxx.10.0.5.27 node, and the IP address is 10.0.4.121.

Describing the SVC shows that the nginx Pod has been added to the backend of SVC nginx. The Cluster IP of the SVC is 192.168.190.78.

kernel routing

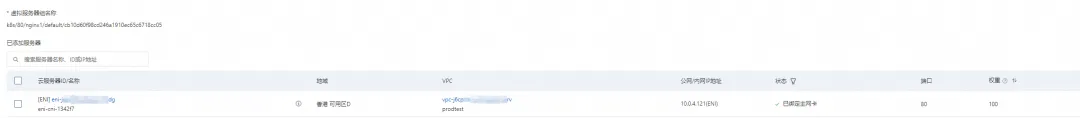

In the SLB console, you can see that the backend of the virtual server group of lb-3nsj50u4gyz623nitxxx is the ENI eni-j6cgs979ky3evxxx of the two backend nginx Pods.

Seen from outside the cluster, the backend virtual server group of the SLB consists of the ENIs to which the SVC's backend Pods belong, and the intranet IP address is the Pod's address.

summary

Aliyun2:

- Whether ExternalTrafficPolicy is Local or Cluster, SLB adds only the ENIs to which the Pods belong to the SLB virtual server group.

- The data link passes through the calixxx interface of the Pod's veth pair.

- Data link: Client -> SLB -> Pod ENI + Pod Port -> ECS1 Pod1 calixxx -> ECS1 Pod1 eth0.

Aliyun3:

- Whether ExternalTrafficPolicy is Local or Cluster, SLB adds only the ENIs to which the Pods belong to the SLB virtual server group.

- The data link is accelerated by eBPF on the ECS's secondary ENI, bypasses the calixxx interface of the Pod's veth pair, and enters the Pod's network namespace directly.

- Data link: Client -> SLB -> Pod ENI + Pod Port -> ECS1 Pod1 eth0.

Related Links:

[1] Deprecated IPVLAN support

https://docs.cilium.io/en/v1.12/operations/upgrade/#deprecated-options

[2] ACK container network data link (Terway ENIIP)

[3] Using Terway network plug-in

https://help.aliyun.com/zh/ack/ack-managed-and-ack-dedicated/user-guide/work-with-terway

[4] Mutual access between Pods between different nodes

https://help.aliyun.com/zh/ack/ack-managed-and-ack-dedicated/user-guide/ack-network-fabric-terway-eni-trunking#RS9Nc
