Editor's note: Currently, large language models have become a hot topic in the field of natural language processing. Are LLMs really “smart”? What inspirations have they brought to us? In response to these questions, Darveen Vijayan brings us this thought-provoking article.

The author mainly explains two points: First, LLMs should be regarded as a word calculator, which works by predicting the next word, and should not be classified as "intelligent" at this stage. Second, despite their current limitations, LLMs provide us with an opportunity to reflect on the nature of human intelligence. We should keep an open mind, constantly pursue new knowledge and new understandings of knowledge, and actively communicate with others to expand our cognitive boundaries.

Whether LLMs are smart or not is probably still controversial. But one thing is certain, they have brought innovation to the field of natural language processing and provided new dimensions of thinking about the nature of human intelligence. This article is worth reading carefully and chewing on for every large model tool user and AI practitioner.

Author | Darveen Vijayan

Compiled | Yue Yang

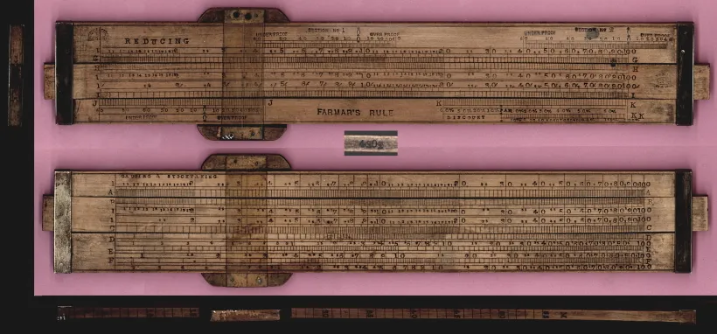

In the early 17th century, a mathematician and astronomer named Edmund Gaunt faced an unprecedented astronomical challenge - to calculate the complex motions of the planets and predict solar eclipses, astronomers needed to rely not only on intuition, but also on Master complex logarithmic operations and trigonometric equations. So, like any good innovator, Gunter decided to invent an analog computing device! The device he created eventually became known as the slide rule[1].

The slide rule is a 30 cm long rectangular wooden block consisting of a fixed frame and a sliding part. The fixed frame houses a fixed logarithmic scale, while the sliding part houses a movable scale. To use a slide rule, you need to understand the basic principles of logarithms and how to align the scales for multiplication, division, and other mathematical operations. It is necessary to slide the movable part so that the numbers line up, read the result, and pay attention to the position of the decimal point. Oops, it’s really too complicated!

slide rule

slide rule

Some 300 years later, in 1961, the Bell Punch Company introduced the first desktop electronic calculator, the "ANITA Mk VII." Over the ensuing decades, electronic calculators became increasingly complex and had more and more functions. Jobs that previously required manual calculations take less time, allowing employees to focus on more analytical and creative work. Therefore, modern electronic calculators not only make work more efficient, they also enable people to solve problems better.

The calculator was a major change in the way math was done, but what about language?

Think about how you structure your sentences. First, you need to have an idea (what does this sentence mean). Next, you need to master a bunch of vocabulary (have enough vocabulary). Then, you need to be able to put these words into sentences correctly (grammar required). Oops, it’s still so complicated!

As early as 50,000 years ago, when modern Homo sapiens first created language, the way we generate words for language has remained largely unchanged.

Arguably, we are still like Gunter using a slide rule when it comes to constructing sentences!

It’s fair to say we’re still in Gunther’s era of using a slide rule when it comes to generating sentences!

If you think about it, using appropriate vocabulary and correct grammar is following the rules of language.

It's similar to math, math is full of rules so I can figure out 1+1=2 and how the calculator works!

We need a calculator for words!

What we need is a calculator but for words!

Yes, different languages need to follow different rules, but only by obeying the rules of language can the language be understood. One clear difference between language and mathematics is that mathematics has fixed and certain answers, whereas there may be many reasonable words that fit into a sentence.

Try filling in the following sentence: I ate a _________. (I ate a _________.) Imagine the words that might come next. There are approximately 1 million words in the English language. Many words could be used here, but certainly not all.

Answering "black hole" is equivalent to saying 2+2=5. Also, answering "apple" is inaccurate. why? Because of grammatical restrictions!

In the past few months, Large Language Models (LLMs) [2] have taken the world by storm. Some people call it a major breakthrough in the field of natural language processing, while others regard it as the dawn of a new era of artificial intelligence (AI).

LLM has proven to be very good at generating human-like text, which raises the bar for language-based AI applications. With its vast knowledge base and excellent contextual understanding, LLM can be applied in various fields, from language translation and content generation to virtual assistants and chatbots for customer support.

Are we at a similar turning point now as we were with electronic calculators in the 1960s?

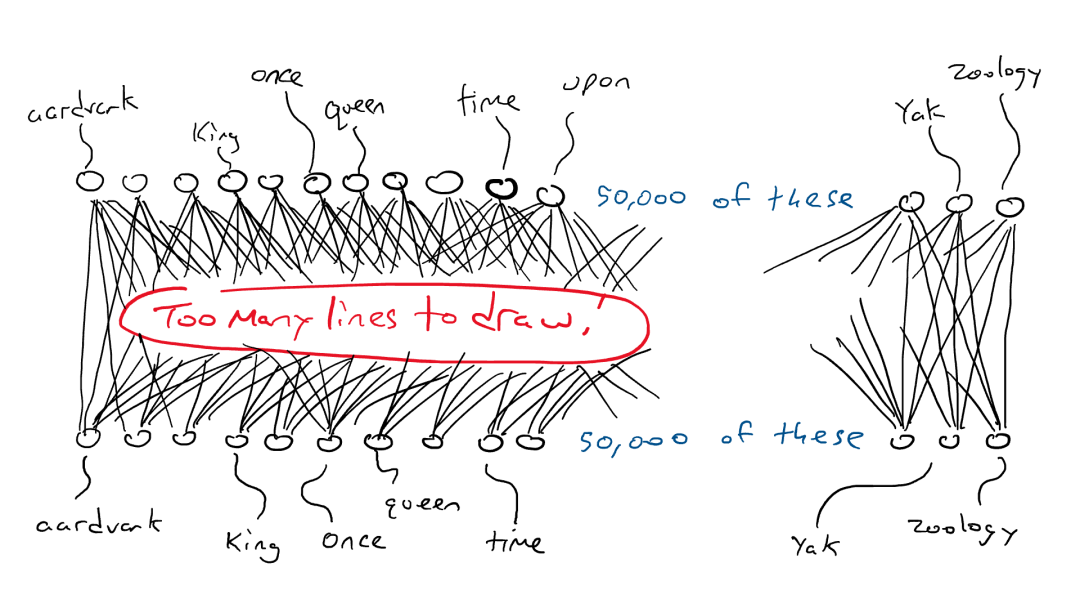

Before answering this question, let us understand how LLM works? LLM is based on a Transformer neural network and is used to calculate and predict the next best-fit word in a sentence. To build a powerful Transformer neural network, it needs to be trained on a large amount of text data. This is why the "predict next word or token" approach works so well: because there is a lot of easily available training data. LLM takes an entire sequence of words as input and predicts the next most likely word. To learn the most likely next word, they warmed up by devouring all of Wikipedia data, then devouring piles of books, and finally devouring the entire Internet.

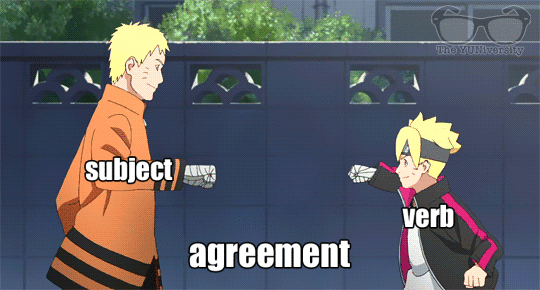

We have established earlier that language contains rules and patterns. The model will implicitly learn these rules through all these sentences to complete the task of predicting the next word.

deep neural network

After a singular noun, the probability of a verb ending in "s" increases in the next word. Similarly, when reading Shakespeare's works, the probability of occurrence of words such as "doth" and "wherefore" increases.

During training, the model learns these language patterns and eventually becomes a language expert!

But is it enough? Is it enough to simply learn the rules of a language?

But is that enough? Is learning linguistic rules enough?

Language is complex and a word can have multiple meanings depending on context.

Therefore, self-attention is required. Simply put, self-attention is a technique that language learners use to understand the relationships between different words in a sentence or article. Just like you would focus on different parts of a story to understand it, self-attention allows LLM to place more emphasis on certain words in a sentence when processing information. This way, the model can better understand the overall meaning and context of the text, rather than blindly predicting the next word based solely on language rules.

self-attention mechanism

If I think of a big language model as a word calculator that simply predicts the next word, how can it answer all my questions?

If LLMs are calculators for words, just predicting the next word, how can it answer all my questions?

When you ask a large language model to handle a task that requires thinking, and it succeeds, it's likely because it has seen the task you gave it in thousands of examples. Even if you have some very unique requirements, such as:

"Write a poem about a killer whale eating chicken"

Write me a poem about an orca eating a chicken

Large language models can also successfully complete the tasks you assigned:

Amidst the waves, a sight unseen, An orca hunts, swift and keen, In ocean’s realm, the dance begins, As a chicken’s fate, the orca wins.

With mighty jaws, it strikes the prey, Feathers float, adrift away, In nature’s way, a tale is spun, Where life and death become as one.

~ ChatGPT

Not bad, right? This is thanks to its self-attention mechanism, which allows it to effectively fuse and match relevant information to construct a reasonable and coherent answer.

During training, large language models (LLMs) learn to identify patterns, associations, and relationships between words (and phrases) in the data. After extensive training and fine-tuning, LLM can exhibit new features such as language translation, summary generation, question answering, and even creative writing. Although the model is not directly taught certain tasks or skills, by learning and training on large amounts of data, the model can demonstrate capabilities beyond expectations and perform extremely well

So, are large language models intelligent?

Are Large Language Models intelligent?

Electronic calculators have been around for more than sixty years. This tool made "leapfrogging" advances in technology, but was never considered smart. why?

The Turing Test is a simple way to determine whether a machine has human intelligence: if a machine can converse with humans in a way that makes them indistinguishable, then it is considered to have human intelligence. .

A calculator has never undergone a Turing test [3] because it does not communicate using the same language as humans, only mathematical language. However, large language models generate human language. Its entire training process revolves around imitating human speech. So it's not surprising that it can "speak to humans in a way that makes them indistinguishable."

Therefore, using the term "intelligent" to describe large language models is a bit tricky, because there is no clear consensus on the true definition of intelligence. One way to tell if something is intelligent is if it can do something interesting, useful, and with a certain level of complexity or creativity. Large language models certainly fit this definition. However, I don't entirely agree with this interpretation.

I define intelligence as the ability to expand the boundaries of knowledge.

I define intelligence as the ability to expand the frontiers of knowledge.

As of this writing, machines that work by predicting the next token/word still cannot expand the boundaries of knowledge.

However, it can be inferred and filled based on existing data. It can neither clearly understand the logic behind the words nor understand the existing body of knowledge. It cannot generate innovative ideas or deep insights. It can only provide relatively general answers, but cannot generate breakthrough ideas.

Faced with the inability of machines to generate innovative thinking and in-depth insights, what impact or implications does it have on us humans?

So, what does this mean for us humans?

We should think of large language models (LLMs) more as calculators of words. Our thinking process should not be completely dependent on large models, but should be regarded as an aid to our thinking and expression rather than a substitute.

At the same time, as the number of parameters in these large models grows exponentially, we may feel increasingly overwhelmed and out of our depth. My advice on this is to always remain curious about seemingly unrelated ideas. Sometimes we encounter some seemingly unrelated or contradictory ideas, but through our observation, perception, experience, learning and communication with others, we can find that there may be some connection between these ideas, or these ideas Might be reasonable. (Translator's Note: This connection may come from our observation, understanding and interpretation of things, or new ideas derived by correlating knowledge and concepts in different fields. We should keep an open mind, not only Limit yourself to superficial intuitions, but observe, perceive, experience, learn, and communicate with others to discover deeper meanings and connections) We should not be content to just stay in the known realm, but should actively explore new ones. fields, constantly expanding our cognitive boundaries. We should also constantly pursue new knowledge or new understandings of already acquired knowledge and combine them with existing knowledge to create new insights and ideas.

If you are able to think and act the way I describe, then all forms of technology, whether it's a calculator or a large language model, will become a tool you can leverage rather than an existential threat you need to worry about.

END

References

[3] https://en.wikipedia.org/wiki/Turing_test

This article was compiled by Baihai IDP with the authorization of the original author. If you need to reprint the translation, please contact us for authorization.

Original link:

https://medium.com/the-modern-scientist/large-language-models-a-calculator-for-words-7ab4099d0cc9

RustDesk suspends domestic services due to rampant fraud Apple releases M4 chip Taobao (taobao.com) restarts web version optimization work High school students create their own open source programming language as a coming-of-age gift - Netizens' critical comments: Relying on the defense Yunfeng resigned from Alibaba, and plans to produce in the future The destination for independent game programmers on the Windows platform . Visual Studio Code 1.89 releases Java 17. It is the most commonly used Java LTS version. Windows 10 has a market share of 70%, and Windows 11 continues to decline. Open Source Daily | Google supports Hongmeng to take over; open source Rabbit R1; Docker supports Android phones; Microsoft’s anxiety and ambitions; Haier Electric has shut down the open platform