Installing k8s 1.12 with kubeadm

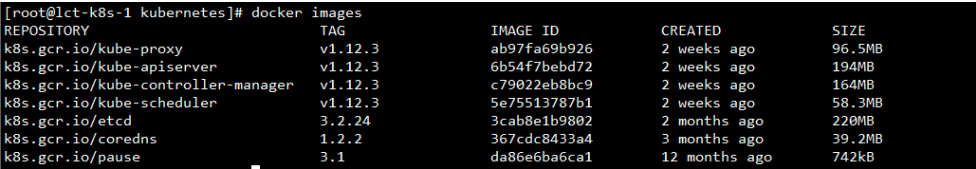

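Our product needs to be upgraded from k8s 1.6 to 1.12.3, the latest stable release. kubeadm has now reached GA, so this time we use it in place of the large set of ansible scripts we relied on before. Because legacy workloads still depend on components such as heapster, the upgrade was somewhat bumpy, so the process is recorded here.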

1 Preparing the installation environment

1.1 Check the output of the following two files:

# Make sure both values are 1

cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

cat /proc/sys/net/bridge/bridge-nf-call-iptables

# If either value is not 1, do the following:

# Create /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

# Run the following two commands to apply the changes.

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
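The check and the config file above can be wrapped in two small helpers. This is a minimal sketch, not part of the original procedure: the function names `bridge_sysctl_ok` and `write_k8s_sysctl` are made up for illustration, and the optional path arguments exist only so the helpers can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative, not from the original post.

# Return 0 only if both bridge netfilter switches read 1.
# $1: directory holding the switches (defaults to the real procfs path).
bridge_sysctl_ok() {
  local dir="${1:-/proc/sys/net/bridge}" f
  for f in bridge-nf-call-ip6tables bridge-nf-call-iptables; do
    [ "$(cat "$dir/$f" 2>/dev/null)" = "1" ] || return 1
  done
}

# Write the three kernel settings to a sysctl drop-in file.
# $1: target file (defaults to /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local target="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real node, run as root, one possible call sequence is `bridge_sysctl_ok || { write_k8s_sysctl && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf; }`.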

1.2 Disabling selinux, firewalld, swap, etc.
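The original gives no commands for this step, so the following is only one plausible sketch for a CentOS 7 style host. The function names are hypothetical, switching SELinux to permissive (rather than fully disabled) is an assumption, and nothing runs until `prepare_node` is called as root.

```shell
#!/usr/bin/env bash
# Sketch only: function names are illustrative and nothing runs until called.

# Comment out active swap entries in an fstab-style file so that swap
# stays off after a reboot. $1: file to edit (defaults to /etc/fstab).
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -E -i 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Run as root on each node.
prepare_node() {
  setenforce 0                 # SELinux permissive until the next reboot
  sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
  systemctl stop firewalld
  systemctl disable firewalld
  swapoff -a                   # turn swap off immediately
  comment_out_swap /etc/fstab  # keep it off after a reboot
}
```

Lines in the fstab file that are already commented out are left untouched, so the helper can be run repeatedly.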

1.3 ntp configuration

- The servers already have the ntpd and ntpdate services installed by default. If they are missing, install them with yum.

systemctl enable ntpd

systemctl start ntpd

-

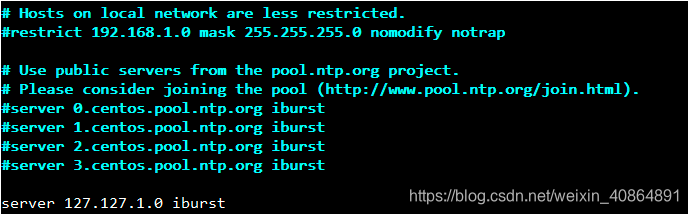

配置/etc/ntp.conf

其中主节点配置如下:

-

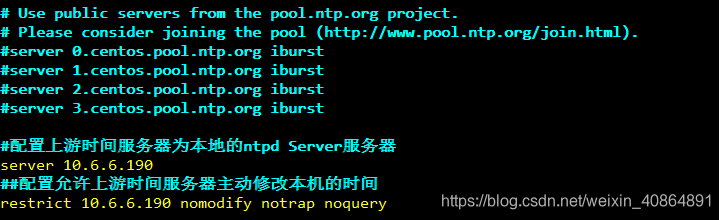

从节点配置如下:

-

注意统一时区为上海

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

-

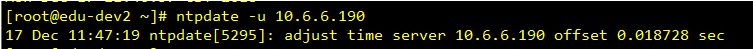

在从节点上同步时间:

-

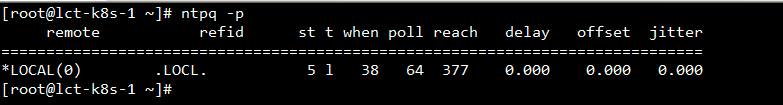

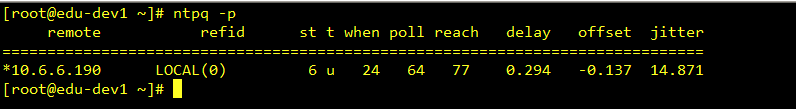

查看ntp状态

主节点:

从节点:

2 Installing docker

yum install -y yum-utils device-mapper-persistent-data

yum install -y docker-ce-18.06.0.ce-3.el7.x86_64

# This installs docker 18.06. If a newer docker version was installed before, yum may report a conflict; in that case remove the conflicting version with rpm -e first.

3 Preparing the k8s environment

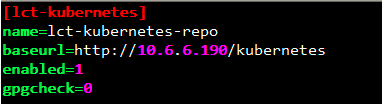

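3.1 Syncing the Alibaba Cloud kubernetes yum repository to a local mirror

3.2 Pointing yum at the local repo

Installing nginx and the related steps are omitted here; look up how to build your own yum repository.

3.3 Configuring hosts and passwordless SSH between the nodes

3.4 Installing kubeadm, kubectl, kubelet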

yum install -y kubeadm-1.12.3-0.x86_64 kubectl-1.12.3-0.x86_64 kubelet-1.12.3-0.x86_64

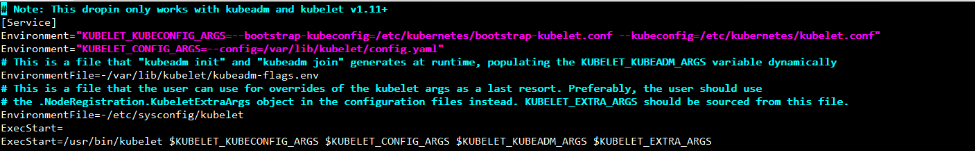

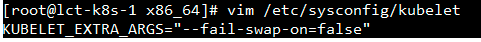

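3.5 Configuring kubelet

systemctl enable kubelet

Note: only enable the service here; do not start it

The kubeadm configuration file shows where kubelet reads its startup parameters from

3.6 Installing the master node with kubeadm init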

kubeadm init --kubernetes-version=v1.12.3 --pod-network-cidr=172.30.0.0/16 --service-cidr=10.96.0.0/16 --ignore-preflight-errors=Swap

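# You can also pre-pull the images with kubeadm config images pull

A master node installed by kubeadm carries a taint by default and does not accept ordinary pod scheduling, so the coredns pod cannot be created successfully

Copy the admin kubeconfig file to the /root/.kube directory on each node

mkdir -p /root/.kube

cp /etc/kubernetes/admin.conf /root/.kube/config

kubectl taint nodes node1 node-role.kubernetes.io/master-

# The command above removes the taint so that the master node can run workloads

# Install flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Change the flannel manifest to the following configuration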

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "ipMasq": false,
      "plugins": [
        {
          "type": "flannel",
          "ipMasq": false,
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "172.30.0.0/16",
      "Backend": {
        "Type": "host-gw"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.10.0-amd64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.10.0-amd64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq=false
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

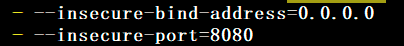

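The insecure port must be enabled on the master node; to do so, edit /etc/kubernetes/manifests/kube-apiserver.yaml as follows:

3.7 Installing worker nodes

kubeadm, kubelet and kubectl are installed on the worker nodes in the same way as on the master node.

# Join the worker node to the cluster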

kubeadm join 10.6.6.190:6443 --token i3r115.q4ucaz0uio489438 --discovery-token-ca-cert-hash sha256:eb9daf9067902d23069c3910caefc5651d3156809fec18afee17e158c57f6afe --ignore-preflight-errors=Swap

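# If the images were not imported onto the worker node in advance, they also have to be pulled through a proxy.

# If you have forgotten the token and the CA hash, generate a new token and look up the hash with the following commands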

kubeadm token create

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
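The two commands above can be combined into a small helper that prints a complete join command. This is a sketch under assumptions: `ca_cert_hash` and `build_join_command` are hypothetical names, and the endpoint and token are passed in by the caller. Recent kubeadm releases also offer `kubeadm token create --print-join-command`, which may be simpler if it is available in your version.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; names and argument order are illustrative.

# Print the sha256 hash of the CA public key, in the form expected by
# --discovery-token-ca-cert-hash. $1: CA certificate path.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Assemble a join command from endpoint, token and CA hash.
build_join_command() {
  local endpoint="$1" token="$2" hash="$3"
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$hash"
}
```

On the master node this could be used as `build_join_command 10.6.6.190:6443 "$(kubeadm token create)" "$(ca_cert_hash)"`; append `--ignore-preflight-errors=Swap` if swap is still enabled on the worker.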

From the master node, scp ~/.kube/config to the same directory on each worker node

3.8 Installing heapster

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

# Note: heapster will fail with the following error:

E0823 04:43:05.012122 1 manager.go:101] Error in scraping containers from kubelet:192.168.1.67:10255: failed to get all container stats from Kubelet URL "http://192.168.1.67:10255/stats/container/": Post http://192.168.1.67:10255/stats/container/: dial tcp 192.168.1.67:10255: getsockopt: connection refused

# Fix: set the following arguments in the yaml file

- --source=kubernetes:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true

- --sink=influxdb:http://monitoring-influxdb.kube-system.svc.cluster.local:8086

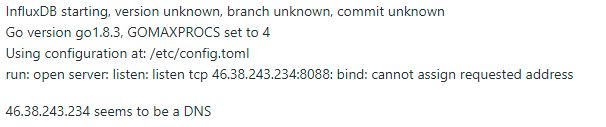

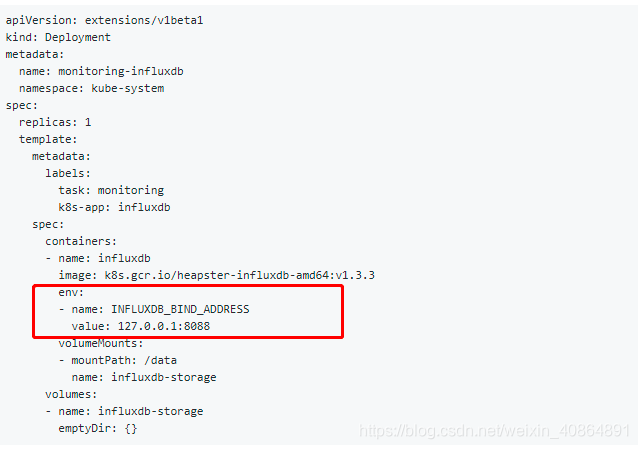

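Because of a DNS problem, influxdb resolves a wrong address and then tries to bind to it on port 8088

Fix:

One more thing to note:

The proxy API path has changed in k8s 1.12

It used to be /api/v1/proxy/namespaces/xxx/pods/xxx

It is now /api/v1/namespaces/xxx/pods/xxx/proxy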
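Old scripts that still use the former path layout can be adjusted mechanically. The following is a small sketch; `to_new_proxy_path` is a hypothetical helper name, and it only handles namespaced pods and services paths of the shape shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite an old-style proxy path into the new layout.
# Only /api/v1/proxy/namespaces/<ns>/(pods|services)/<name>[/rest] is handled;
# any other input is printed unchanged.
to_new_proxy_path() {
  printf '%s\n' "$1" \
    | sed -E 's#^/api/v1/proxy/namespaces/([^/]+)/(pods|services)/([^/]+)#/api/v1/namespaces/\1/\2/\3/proxy#'
}
```

For example, `to_new_proxy_path /api/v1/proxy/namespaces/kube-system/services/heapster/api/v1/model/nodes` prints `/api/v1/namespaces/kube-system/services/heapster/proxy/api/v1/model/nodes`.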

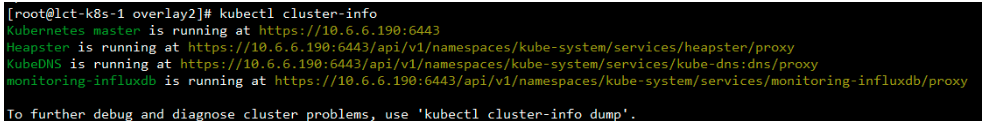

通过kubectl cluster-info 可以获取服务列表

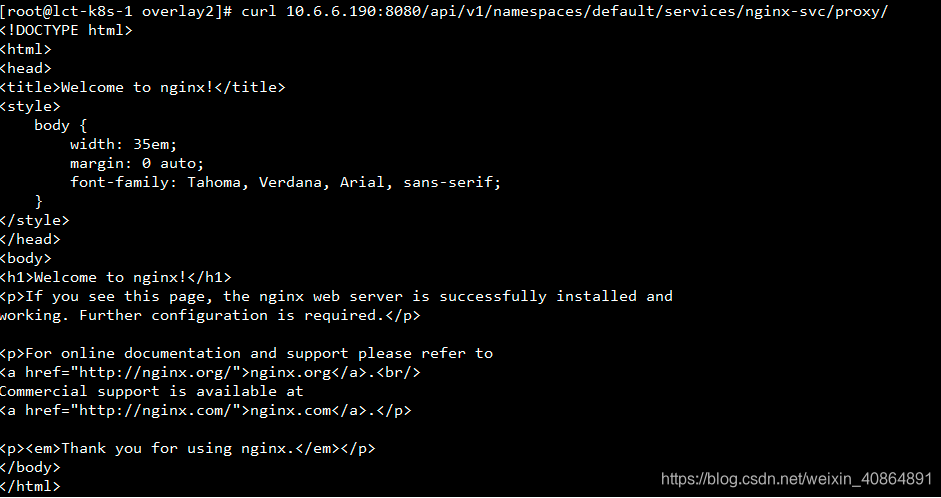

访问一个nginx服务

此时heapster的访问路径改为:

curl 10.6.6.190:8080/api/v1/namespaces/kube-system/services/heapster/proxy/api/v1/model/nodes/lct-k8s-1/metrics/cpu/usage

3.9 Removing a node

# Run on the master node:

kubectl drain node2 --delete-local-data --force --ignore-daemonsets

kubectl delete node node2

# Run on node2:

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/
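If a node never created one of these interfaces (for example, there is no flannel.1 device with the host-gw backend), the delete commands above report errors. A guarded variant might look like the sketch below; `remove_link_if_present` is a hypothetical helper, and it uses `ip link set ... down` in place of ifconfig, which is a substitution on my part.

```shell
#!/usr/bin/env bash
# Hypothetical helper: take a network interface down and delete it,
# but only if it actually exists on this node.
remove_link_if_present() {
  local dev="$1"
  if ip link show "$dev" >/dev/null 2>&1; then
    ip link set "$dev" down
    ip link delete "$dev"
  fi
}
```

After kubeadm reset it could be called as `remove_link_if_present cni0` and `remove_link_if_present flannel.1`, run as root.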

3.10 Fixing the cni0 SNAT problem

# Add a rule and put it first in the chain

iptables -t nat -I POSTROUTING 1 -s 172.30.0.0/16 -d 172.30.0.0/16 -j ACCEPT

# Delete this rule

iptables -t nat -D POSTROUTING -s 172.30.0.0/16 -d 172.30.0.0/16 -j ACCEPT
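Running the insert command twice leaves two identical rules in the chain. One way to avoid that is to check for the rule first with `iptables -C`; the helper name `ensure_pod_cidr_accept` below is made up for this sketch, and the CIDR is whatever was passed to --pod-network-cidr.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert the pod-to-pod ACCEPT rule at the top of
# the nat POSTROUTING chain only if it is not already present.
# $1: pod network CIDR, e.g. 172.30.0.0/16. Run as root.
ensure_pod_cidr_accept() {
  local cidr="$1"
  iptables -t nat -C POSTROUTING -s "$cidr" -d "$cidr" -j ACCEPT 2>/dev/null \
    || iptables -t nat -I POSTROUTING 1 -s "$cidr" -d "$cidr" -j ACCEPT
}
```

Note that a rule added this way does not survive a reboot or an iptables flush unless it is persisted separately.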

3.11 Adding gpu support

- Install nvidia-docker

# If you have nvidia-docker 1.0 installed: we need to remove it and all existing GPU containers

docker volume ls -q -f driver=nvidia-docker | xargs -r -I{} -n1 docker ps -q -a -f volume={} | xargs -r docker rm -f

sudo yum remove nvidia-docker

# Add the package repositories

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo

# Install nvidia-docker2 and reload the Docker daemon configuration

sudo yum install -y nvidia-docker2

sudo pkill -SIGHUP dockerd

# Test nvidia-smi with the latest official CUDA image

docker run --runtime=nvidia --rm nvidia/cuda:9.0-base nvidia-smi

- Install the device plugin

kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v1.11/nvidia-device-plugin.yml

- kubelet configuration

--feature-gates=DevicePlugins=true

- Restart the kubelet service
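The original does not say where the kubelet flag goes. One possibility, assuming the kubeadm RPM's systemd drop-in reads /etc/sysconfig/kubelet and passes KUBELET_EXTRA_ARGS to kubelet (check the drop-in on your own nodes), is sketched below. `set_kubelet_extra_args` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KUBELET_EXTRA_ARGS in an environment file.
# Any previous KUBELET_EXTRA_ARGS line is replaced, not merged.
# $1: environment file, $2: full argument string.
set_kubelet_extra_args() {
  local file="$1" args="$2"
  touch "$file"
  # Drop the old assignment, then append the new one.
  sed -i '/^KUBELET_EXTRA_ARGS=/d' "$file"
  printf 'KUBELET_EXTRA_ARGS=%s\n' "$args" >> "$file"
}
```

A possible sequence on a GPU node, run as root, is `set_kubelet_extra_args /etc/sysconfig/kubelet "--feature-gates=DevicePlugins=true"`, followed by `systemctl daemon-reload` and `systemctl restart kubelet`.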