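```python
import time

import numpy as np
from scipy.linalg import toeplitz

# First column of the 500 x 500 Toeplitz matrix B: 1, 2, ..., 500
toep = list(range(1, 501))
# A: 200 x 500 matrix with standard Gaussian entries
A = np.random.normal(size=(200, 500))
```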
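`scipy.linalg.toeplitz` is called with only the first column. In that case it mirrors the column into the first row, so `B` is symmetric and entry (i, j) depends only on |i − j|. The small case below is an illustration added here, not part of the exercise. It shows the structure and checks that `B[i, j] == |i - j| + 1` for the full 500 × 500 matrix.

```python
import numpy as np
from scipy.linalg import toeplitz

# First column only: the first row is taken to be the same values.
T = toeplitz([1, 2, 3])
print(T)
# [[1 2 3]
#  [2 1 2]
#  [3 2 1]]

# Same pattern for the exercise's B built from 1..500.
B = toeplitz(np.arange(1, 501))
i, j = np.indices(B.shape)  # row and column index grids
print(np.array_equal(B, np.abs(i - j) + 1))  # True
```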

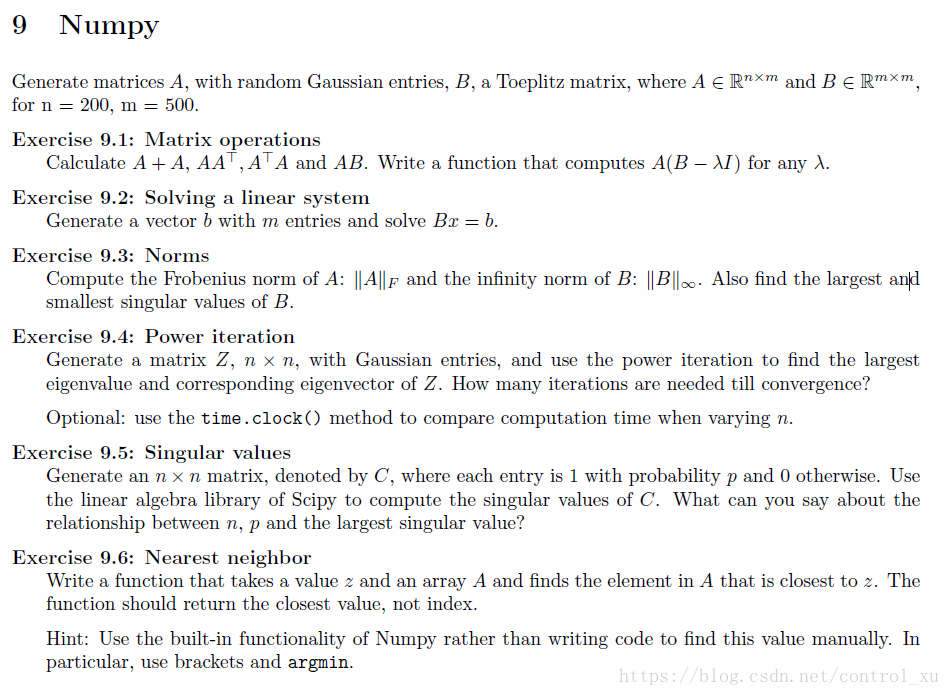

```python
# Exercise 9.1: Matrix operations

def fun1(A, B, lam):
    # Compute A (B - lam * I)
    tmp = B - lam * np.eye(B.shape[0], B.shape[1])
    return np.dot(A, tmp)

B = toeplitz(toep)

print("A is:")
print(A)
print("\nB is:")
print(B)
print("\nA+A is:")
print(A + A)
print("\nA*A.T is:")
print(np.dot(A, A.T))
print("\nA.T*A is:")
print(np.dot(A.T, A))
print("\nA*B is:")
print(np.dot(A, B))
print("fun1 test:")
print(fun1(A, B, 0.5))
```
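Matrix multiplication distributes over addition, so `fun1` computes A(B − λI) = AB − λA. The quick numerical check below confirms this. It makes some changes that are not in the original: a seeded `np.random.default_rng(0)` generator for reproducibility, and the `@` operator in place of `np.dot`.

```python
import numpy as np
from scipy.linalg import toeplitz

def fun1(A, B, lam):
    """Return A (B - lam * I) for a square matrix B."""
    return A @ (B - lam * np.eye(B.shape[0]))

rng = np.random.default_rng(0)
A = rng.normal(size=(200, 500))
B = toeplitz(np.arange(1, 501))
lam = 0.5

# Distributing A over the bracket gives A B - lam A, without forming I at all.
lhs = fun1(A, B, lam)
rhs = A @ B - lam * A
print(np.allclose(lhs, rhs))  # True
```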

```python
# Exercise 9.2: Solving a linear system

# First invert B with np.linalg.inv, then multiply by b
def fun2(B, b):
    return np.dot(np.linalg.inv(B), b)

b = np.linspace(0, 1, 500)

print("\nSolution of Bx = b:")
print(fun2(B, b))
```
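The approach above forms B⁻¹ explicitly. A common alternative, sketched here (it is not the exercise's required method), is `np.linalg.solve`. It solves the system through an LU factorization without building the inverse, which is generally cheaper and at least as accurate. The residuals printed at the end vary slightly by platform, so no exact values are given.

```python
import numpy as np
from scipy.linalg import toeplitz

B = toeplitz(np.arange(1, 501)).astype(float)
b = np.linspace(0, 1, 500)

# solve() factorizes B (LU with partial pivoting) instead of computing B^-1.
x_solve = np.linalg.solve(B, b)
x_inv = np.linalg.inv(B) @ b

# Compare the residual norms ||Bx - b|| of the two approaches.
print("residual (solve):", np.linalg.norm(B @ x_solve - b))
print("residual (inv):  ", np.linalg.norm(B @ x_inv - b))
```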

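`numpy.linalg.svd` returns three values. The middle one, `sigma`, is a 1-D array of the singular values (not the eigenvalues), sorted in descending order.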
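The sketch below shows what `np.linalg.svd` returns on a small random matrix. The seeded generator and the 4 × 3 size are arbitrary choices for illustration. It also checks that, for a symmetric matrix such as the Toeplitz `B`, the singular values are the absolute values of the eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.normal(size=(4, 3))

# U, singular values s (1-D, descending), and V^H.
U, s, Vh = np.linalg.svd(M, full_matrices=False)
print(s)

# The three factors reproduce M: M = U diag(s) V^H.
print(np.allclose(M, U @ np.diag(s) @ Vh))  # True

# Symmetric matrix with some negative eigenvalues: singular values = |eigenvalues|.
S = M.T @ M - 2 * np.eye(3)
abs_eigs_desc = np.sort(np.abs(np.linalg.eigvalsh(S)))[::-1]
print(np.allclose(abs_eigs_desc, np.linalg.svd(S, compute_uv=False)))  # True
```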

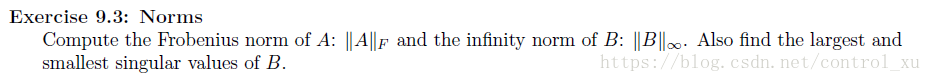

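```python
# Exercise 9.3: Norms

# Frobenius norm of A (the default for a 2-D array)
print("\nFrobenius norm of A:", np.linalg.norm(A))

# Infinity norm of B (maximum absolute row sum)
print("\nInfinity norm of B:", np.linalg.norm(B, np.inf))

# sigma holds the singular values of B
u, sigma, v = np.linalg.svd(B)
print("\nLargest singular value:", max(sigma))
print("Smallest singular value:", min(sigma))
```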

The results are shown below.

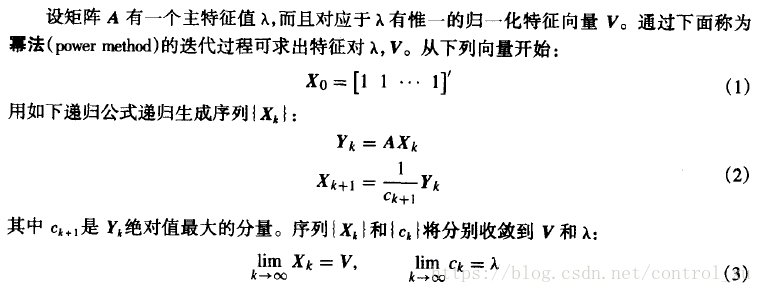
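The sketch below recomputes each quantity in Exercise 9.3 from its definition and compares it with `np.linalg.norm`. The seeded generator is an assumption, used only for reproducibility. For `B`, the first row is 1, 2, …, 500, so its infinity norm is 500 · 501 / 2 = 125250.

```python
import numpy as np
from scipy.linalg import toeplitz

rng = np.random.default_rng(0)
A = rng.normal(size=(200, 500))
B = toeplitz(np.arange(1, 501)).astype(float)

# Frobenius norm (default for 2-D input): sqrt of the sum of squared entries.
fro = np.sqrt((A ** 2).sum())
print(np.isclose(np.linalg.norm(A), fro))  # True

# Infinity norm: largest absolute row sum (attained by the first/last row of B).
inf_norm = np.abs(B).sum(axis=1).max()
print(np.linalg.norm(B, np.inf), inf_norm)  # 125250.0 125250.0

# ord=2 and ord=-2 give the largest and smallest singular values.
s = np.linalg.svd(B, compute_uv=False)
print(np.isclose(np.linalg.norm(B, 2), s[0]), np.isclose(np.linalg.norm(B, -2), s[-1]))
```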

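The power iteration method, shown in the figure, follows the section on using the power method to find the largest eigenvalue and its eigenvector in Chapter 1 of *Numerical Methods* (4th edition).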
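In formulas, each pass of the loop in the `power_ite` function that follows does the steps below. This is a reading of the code in my own notation, not the book's. The code multiplies by Z, takes the entry of largest absolute value as the eigenvalue estimate μ_k, and rescales by it:

$$
\begin{aligned}
y^{(k)} &= Z\,x^{(k-1)}, \qquad x^{(0)} = (1,\dots,1)^{\mathsf T},\\
j_k &= \arg\max_i \bigl|y^{(k)}_i\bigr|, \qquad \mu_k = y^{(k)}_{j_k},\\
x^{(k)} &= y^{(k)} / \mu_k .
\end{aligned}
$$

The iteration stops once |μ_k − μ_{k−1}| < 10⁻⁴, the tolerance used in the code. Suppose Z has a real eigenvalue λ₁ with |λ₁| > |λ₂| ≥ …, and the starting vector has a nonzero component along its eigenvector. Then μ_k → λ₁, and x^{(k)} tends to a multiple of that eigenvector, with the error shrinking roughly like |λ₂/λ₁|^k.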

```python
# Exercise 9.4: Power iteration
import time

import numpy as np

def power_ite(Z, tol=1e-4, max_iter=10000):
    """Estimate the dominant eigenvalue/eigenvector of Z by power iteration.

    Each step multiplies by Z and rescales by the entry of largest absolute
    value, which is also the current eigenvalue estimate. Returns the
    iteration count, eigenvector, eigenvalue and elapsed time in seconds.
    """
    start_time = time.perf_counter()  # time.clock() was removed in Python 3.8

    eigen_vector = np.ones((Z.shape[0], 1))
    y = np.dot(Z, eigen_vector)
    eigen_value = y[np.argmax(np.abs(y)), 0]  # entry with the largest |y_i|
    eigen_vector = y / eigen_value

    last_eigen = eigen_value + 1e-3  # guarantees the loop runs at least once
    diff = abs(eigen_value - last_eigen)
    cnt = 1

    # max_iter stops the loop if the estimates never settle (no convergence)
    while diff >= tol and cnt < max_iter:
        cnt += 1
        y = np.dot(Z, eigen_vector)
        last_eigen = eigen_value
        eigen_value = y[np.argmax(np.abs(y)), 0]
        eigen_vector = y / eigen_value
        diff = abs(eigen_value - last_eigen)

    return cnt, eigen_vector, eigen_value, time.perf_counter() - start_time

Z = np.random.randn(200, 200)
# Z = np.random.normal(size=(200, 200))

cnt, eigen_vector_z, eigen_value, using_time = power_ite(Z)
```

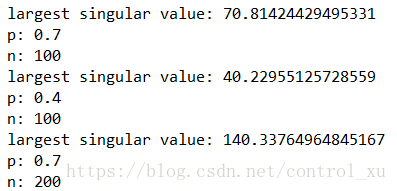

```python
print("eigen vector:")
print(eigen_vector_z)
print("iteration counter:", cnt)
print("eigen value:", eigen_value)
print("time used:", using_time)
```

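The output is shown below; the eigenvector is too long to display in full. This iteration does not always converge. For a random real matrix, the eigenvalue of largest modulus can be one of a complex-conjugate pair, and the estimates then keep oscillating instead of settling.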

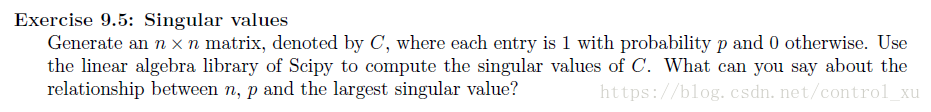
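The non-convergence can be checked directly. The sketch below assumes a seeded generator for reproducibility. First it uses `np.linalg.eigvals` to find the eigenvalue of largest modulus of a random Gaussian `Z`; whether that eigenvalue is real depends on the draw. It then runs the same max-entry scaling on `np.abs(Z)`. By the Perron-Frobenius theorem, a matrix with all positive entries has a real, simple eigenvalue that strictly dominates all others in modulus, so there the iteration converges, here within a few dozen steps.

```python
import numpy as np

rng = np.random.default_rng(0)
Z = rng.standard_normal((200, 200))

# Largest-modulus eigenvalue of Z: may be real or part of a complex pair.
lam = np.linalg.eigvals(Z)
top = lam[np.argmax(np.abs(lam))]
print("largest-modulus eigenvalue of Z:", top)

# Positive matrix: the Perron root is real and strictly dominant.
P = np.abs(Z)
x = np.ones(P.shape[0])
mu_old = np.inf
for k in range(1, 1001):
    y = P @ x
    mu = y[np.argmax(np.abs(y))]  # same scaling rule as power_ite
    x = y / mu
    if abs(mu - mu_old) < 1e-10:
        break
    mu_old = mu

# Every other eigenvalue has smaller modulus, so the Perron root has the largest real part.
perron = np.max(np.linalg.eigvals(P).real)
print("iterations:", k, "power iteration:", mu, "numpy:", perron)
```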

```python
# Exercise 9.5: Singular values
t = 1  # a single trial, so every entry of C is 0 or 1 (Bernoulli with probability p)

for n, p in [(100, 0.7), (100, 0.4), (200, 0.7)]:
    C = np.random.binomial(t, p, (n, n))
    u, sigma, v = np.linalg.svd(C)
    largest_singular_value = max(sigma)
    print("largest singular value:", largest_singular_value)
    print("p:", p)
    print("n:", n)

# Pattern: largest singular value ≈ n * p
```

The results are shown below.

The largest singular value comes out at approximately n·p.

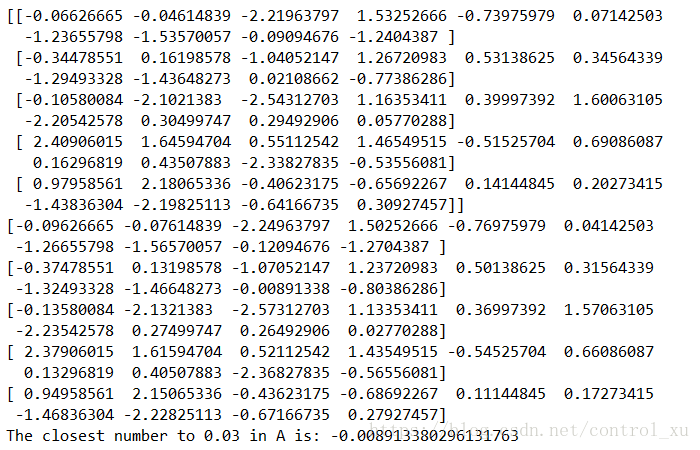
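Here is a heuristic reason for the pattern; it is my explanation, not part of the exercise. The mean matrix E[C] = p·𝟙𝟙ᵀ has rank one, and its only nonzero singular value is ‖p𝟙‖·‖𝟙‖ = p√n·√n = np. By Weyl's inequality for singular values, σ_max(C) differs from np by at most ‖C − E[C]‖₂. That random fluctuation has spectral norm on the order of √n, so σ_max/(np) tends to 1 as n grows. The sketch below checks these facts numerically, with a seeded generator assumed:

```python
import numpy as np

rng = np.random.default_rng(0)

rows = []
for n, p in [(100, 0.7), (100, 0.4), (200, 0.7)]:
    C = rng.binomial(1, p, size=(n, n)).astype(float)
    mean = p * np.ones((n, n))           # E[C]: rank one, sole singular value n*p
    sigma_max = np.linalg.norm(C, 2)     # largest singular value of C
    noise = np.linalg.norm(C - mean, 2)  # spectral norm of the fluctuation
    rows.append((n, p, sigma_max, noise))
    print(f"n={n} p={p}: sigma_max={sigma_max:.2f}, n*p={n * p:.1f}, "
          f"|sigma_max - n*p|={abs(sigma_max - n * p):.2f}, "
          f"||C - E[C]||_2={noise:.2f}, sqrt(n)={np.sqrt(n):.1f}")
```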

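Following the hint, use `numpy.argmin()`.

According to the documentation, `argmin` takes a (possibly multi-dimensional) array and returns the index of its smallest element. The `axis` parameter controls the form of the result. With the default `axis=None` it returns an index into the flattened 1-D array, and `numpy.unravel_index` then converts that flat index into coordinates in the original array.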
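Here is a small worked example of the two calls on a hand-written 2 × 3 array. The values are chosen only for illustration.

```python
import numpy as np

M = np.array([[3.0, 1.5, 2.0],
              [0.7, 4.0, 0.2]])

flat = np.argmin(M)                    # index into the flattened array
print(flat)                            # 5
r, c = np.unravel_index(flat, M.shape)
print(r, c)                            # 1 2
print(np.argmin(M, axis=0))            # [1 0 1]: row of the minimum in each column
```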

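```python
# Exercise 9.6: Nearest neighbor

def fun_9_6(A, z):
    # Element-wise distance from every entry of A to the scalar z
    tmp = np.abs(A - z)
    # argmin over the flattened array, then convert to (row, col)
    ind = np.unravel_index(np.argmin(tmp, axis=None), tmp.shape)
    return A[ind]

A = np.random.normal(size=(5, 10))
print(A)
print("The closest number to", 0.03, "in A is:", fun_9_6(A, 0.03))
```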

The output is shown in the figure.

The result checks out.
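The check can be made reproducible by comparing `fun_9_6` against a brute-force scan over all entries. In the sketch below, the seeded generator is an assumption added so the comparison can be rerun.

```python
import numpy as np

def fun_9_6(A, z):
    """Return the entry of A closest to the scalar z."""
    tmp = np.abs(A - z)
    ind = np.unravel_index(np.argmin(tmp, axis=None), tmp.shape)
    return A[ind]

rng = np.random.default_rng(0)
A = rng.normal(size=(5, 10))
z = 0.03

# Brute force: scan every entry and keep the one with the smallest distance.
best = min(A.ravel(), key=lambda a: abs(a - z))
print(fun_9_6(A, z) == best)  # True
```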