一、简单线性回归

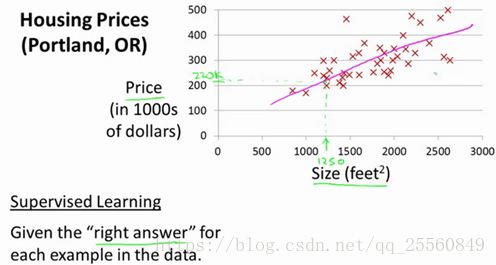

线性回归模型用来解决回归问题,思想简单,实现容易,是许多强大的非线性模型的基础,结果具有很好的解释性,蕴含机器学习中的很多重要思想。首先从简单线性回归开始,即特征只有1个。以波士顿房价为例,我们有一堆的样本数据,在图上绘制出的红叉即房子面积和价格的关系,现在的目标就是找到一个方程来拟合这些数据,并用得到的方程来预测房屋的价格。

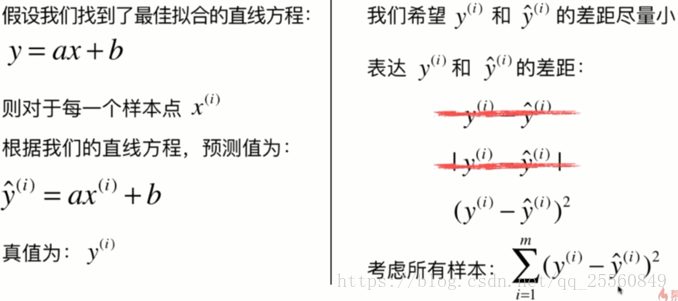

通过分析问题,确定问题的损失函数或者效用函数,通过最优化损失函数或者效用函数,获得机器学习的模型,几乎所有参数学习算法都是这个思路。线性回归、逻辑回归、多项式回归、Svm、神经网络等等都是如此。

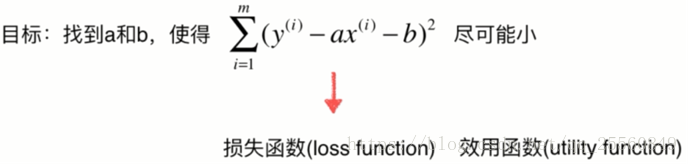

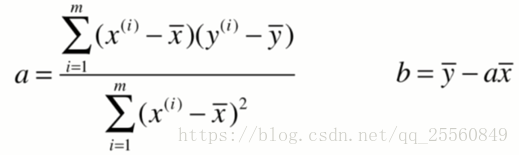

为了使损失函数最小,就是典型的最小二乘法问题,即最小化误差的平方。最终的推导的结果如下。

1.最小二乘法推导过程

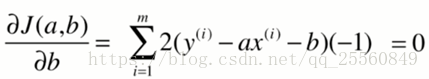

首先对b进行求导。

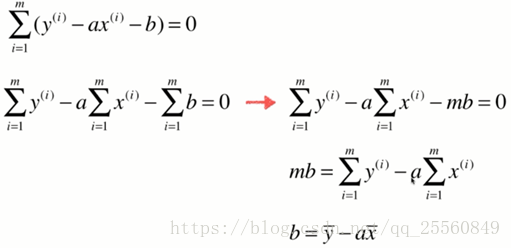

再对a进行求导,同时将b的结果代入。

最终可以得到a。

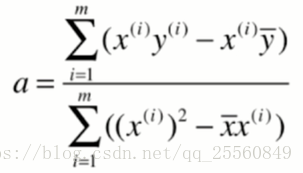

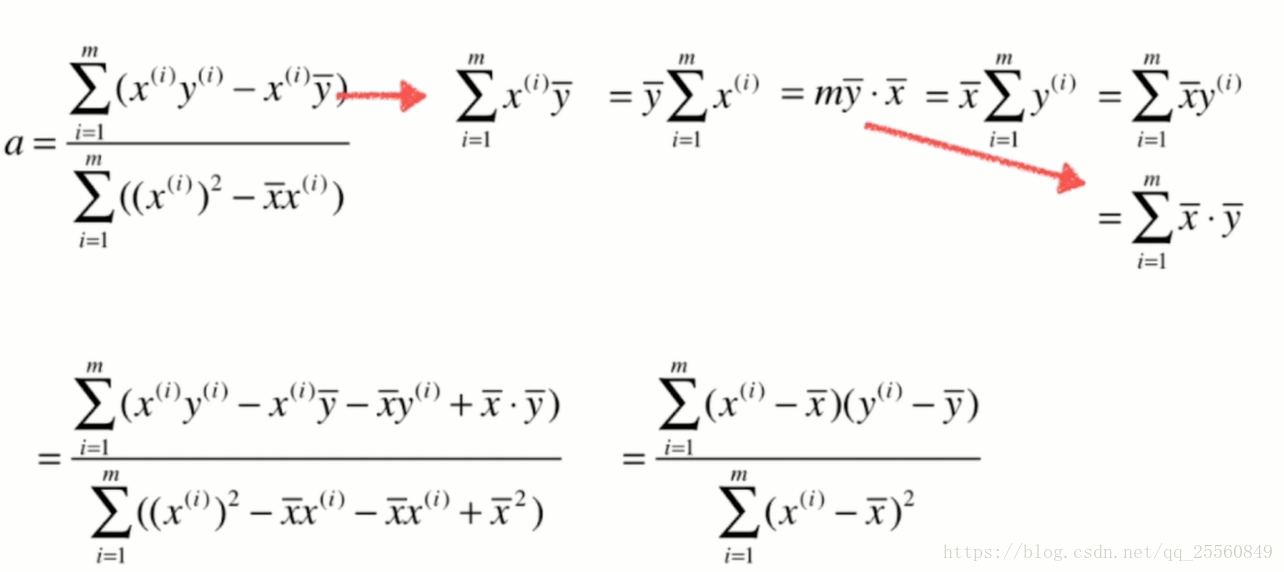

对于a形式进行整理,可以在编程时,优化计算的速度。

2.简单线性回归的实现

使用jupyter,创建Python3文件。

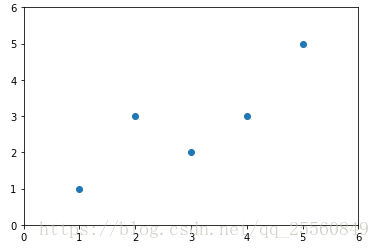

import numpy as np import matplotlib.pyplot as plt x = np.array([1.,2.,3.,4.,5.]) y = np.array([1.,3.,2.,3.,5.]) plt.scatter(x,y) plt.axis([0,6,0,6]) plt.show()

x_mean = np.mean(x)

y_mean = np.mean(y)

num = 0.0 #代表分子

d =0.0 #代表分母

for x_i,y_i in zip(x,y):

num += (x_i-x_mean)*(y_i-y_mean)

d += (x_i-x_mean)**2

a = num/d

b = y_mean-a*x_mean

y_hat = a*x +b

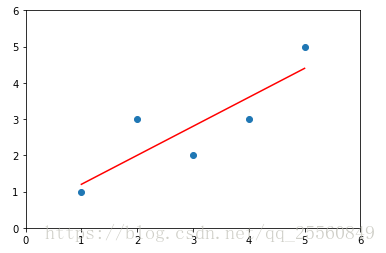

plt.scatter(x,y)

plt.plot(x,y_hat,color='r')

plt.axis([0,6,0,6])

plt.show()

3.SimpleLinearRegression1.py

将上面的代码进行封装。

import numpy as np

from .metrics import r2_score

class SimpleLinearRegression1:

def __init__(self):

"""初始化Simple Linear Regression模型"""

self.a_ = None

self.b_ = None

def fit(self, x_train, y_train):

"""根据训练数据集x_train, y_train训练Simple Linear Regression模型"""

assert x_train.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert len(x_train) == len(y_train), \

"the size of x_train must be equal to the size of y_train"

x_mean = np.mean(x_train)

y_mean = np.mean(y_train)

num = 0.0

d = 0.0

for x, y in zip(x_train,y_train):

num += (x -x_mean)*(y-y_mean)

d += (x-x_mean)**2

self.a_ = num/d

self.b_ = y_mean- self.a_*x_mean

return self

def predict(self, x_predict):

"""给定待预测数据集x_predict,返回表示x_predict的结果向量"""

assert x_predict.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert self.a_ is not None and self.b_ is not None, \

"must fit before predict!"

return np.array([self._predict(x) for x in x_predict])

def _predict(self, x_single):

"""给定单个待预测数据x,返回x的预测结果值"""

return self.a_ * x_single + self.b_

def score(self, x_test, y_test):

"""根据测试数据集 x_test 和 y_test 确定当前模型的准确度"""

y_predict = self.predict(x_test)

return r2_score(y_test, y_predict)

def __repr__(self):

return "SimpleLinearRegression()"

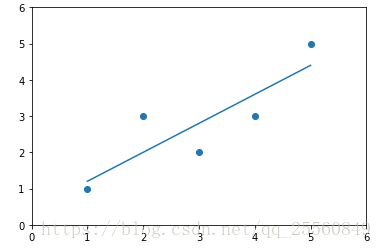

下面开始测试,数据还是原来的x,y

import numpy as np import matplotlib.pyplot as plt x = np.array([1.,2.,3.,4.,5.]) y = np.array([1.,3.,2.,3.,5.]) from ml.SimpleLinearRegression1 import SimpleLinearRegression1 reg1 = SimpleLinearRegression1() reg1.fit(x,y) y_hat1 = reg1.predict(x) plt.scatter(x,y) plt.plot(x,y_hat1) plt.axis([0,6,0,6]) plt.show()

4.SimpleLinearRegression.py

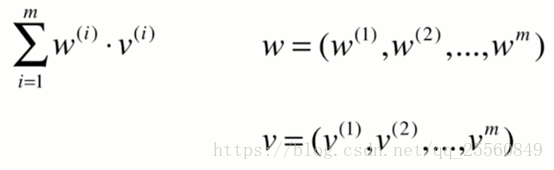

对于simplelinearregression1中a的求解,可以使用之前推导得到的优化形式进行,这就是用向量化的方法求a。

对于优化的a可以理解成如下的形式,这样就可以使用向量化运算,会比for循环快很多。

import numpy as np

from .metrics import r2_score

class SimpleLinearRegression:

def __init__(self):

"""初始化Simple Linear Regression模型"""

self.a_ = None

self.b_ = None

def fit(self, x_train, y_train):

"""根据训练数据集x_train, y_train训练Simple Linear Regression模型"""

assert x_train.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert len(x_train) == len(y_train), \

"the size of x_train must be equal to the size of y_train"

x_mean = np.mean(x_train)

y_mean = np.mean(y_train)

self.a_ = (x_train - x_mean).dot(y_train - y_mean) / (x_train - x_mean).dot(x_train - x_mean)

self.b_ = y_mean - self.a_ * x_mean

return self

def predict(self, x_predict):

"""给定待预测数据集x_predict,返回表示x_predict的结果向量"""

assert x_predict.ndim == 1, \

"Simple Linear Regressor can only solve single feature training data."

assert self.a_ is not None and self.b_ is not None, \

"must fit before predict!"

return np.array([self._predict(x) for x in x_predict])

def _predict(self, x_single):

"""给定单个待预测数据x,返回x的预测结果值"""

return self.a_ * x_single + self.b_

def score(self, x_test, y_test):

"""根据测试数据集 x_test 和 y_test 确定当前模型的准确度"""

y_predict = self.predict(x_test)

return r2_score(y_test, y_predict)

def __repr__(self):

return "SimpleLinearRegression()"

性能测试。

import numpy as np import matplotlib.pyplot as plt from ml.SimpleLinearRegression1 import SimpleLinearRegression1 from ml.SimpleLinearRegression import SimpleLinearRegression reg1 = SimpleLinearRegression1() reg2 = SimpleLinearRegression() m= 1000000 big_x = np.random.random(size=m) big_y = big_x*2.0 + 3.0 +np.random.normal(size=m) % timeit reg1.fit(big_x,big_y) % timeit reg2.fit(big_x,big_y)

很明显,使用向量化的方式能够极大提高速度。

二、衡量线性回归法的指标 MSE,RMS,MAE

1.MSE、RMS、MAE

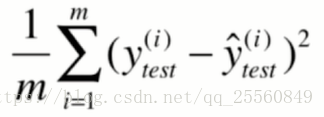

均方误差:MSE

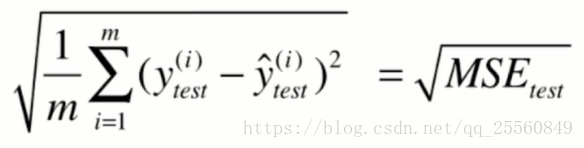

均方根误差:RMS,有放大误差结果的作用。

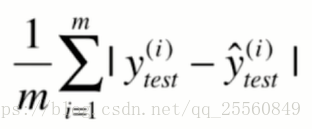

平均绝对误差:MAE

2.波士顿房价

以波士顿房价为例对算法对指标进行运算。

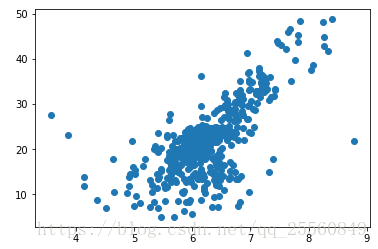

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets boston = datasets.load_boston() #print(boston.DESCR) #boston.feature_names,查看特征值,现在只取出RM这个特征 x = boston.data[:,5] y = boston.target plt.scatter(x,y) plt.show()

对于大于50的点,默认都设为了50,所以为了避免这些点影响计算,需要去除掉。

x = x[ y < 50.0] y = y[ y < 50.0] plt.scatter(x,y) plt.show()

from ml.model_selection import train_test_split from ml.SimpleLinearRegression import SimpleLinearRegression x_train,x_test,y_train,y_test = train_test_split(x,y,seed=666) reg = SimpleLinearRegression() reg.fit(x_train,y_train) plt.scatter(x_train,y_train) plt.plot(x_train,reg.predict(x_train),color='red') plt.show()

y_predict = reg.predict(x_test) mse = np.sum((y_predict-y_test)**2)/len(y_test) from math import sqrt rmse =sqrt(mse) mae = np.sum(np.absolute(y_predict-y_test))/ len(y_test) print(mse,rmse,mae)

现在修改metrics.py文件,将MSE,RMSE,MAE模块放进去。

import numpy as np

from math import sqrt

def accuracy_score(y_true, y_predict):

"""计算y_true和y_predict之间的准确率"""

assert len(y_true) == len(y_predict), \

"the size of y_true must be equal to the size of y_predict"

return np.sum(y_true == y_predict) / len(y_true)

def mean_squared_error(y_true, y_predict):

"""计算y_true和y_predict之间的MSE"""

assert len(y_true) == len(y_predict), \

"the size of y_true must be equal to the size of y_predict"

return np.sum((y_true - y_predict)**2) / len(y_true)

def root_mean_squared_error(y_true, y_predict):

"""计算y_true和y_predict之间的RMSE"""

return sqrt(mean_squared_error(y_true, y_predict))

def mean_absolute_error(y_true, y_predict):

"""计算y_true和y_predict之间的RMSE"""

assert len(y_true) == len(y_predict), \

"the size of y_true must be equal to the size of y_predict"

return np.sum(np.absolute(y_true - y_predict)) / len(y_true)

def r2_score(y_true, y_predict):

"""计算y_true和y_predict之间的R Square"""

return 1 - mean_squared_error(y_true, y_predict)/np.var(y_true)

import ml.metrics as mm mm.mean_absolute_error(y_test,y_predict) mm.mean_squared_error(y_test,y_predict) mm.root_mean_squared_error(y_test,y_predict)

现在尝试用sklearn中的方法。由于sklearn没有RMSE,所以还需要通过MSE进行运算得到RMSE。

import sklearn.metrics as sm sm.mean_absolute_error(y_test,y_predict) sm.mean_squared_error(y_test,y_predict)

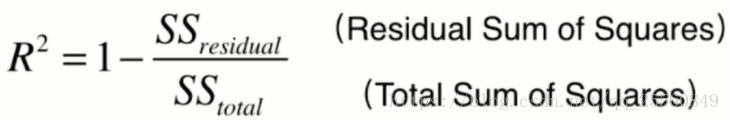

3.R Square

除了上面的评估指标,还可以用R^2来评价。

为什么使用这种方式会好?

分母中:y的均值减去真值后求平方,这里也将y的均值作为模型,称之为baseline model。分子中:模型预测值减去真值,即预测的误差。所以最终用1减去二者相除的结果,其实就是衡量准确度。R^2越大越好,如果模型都是正确的,则为1。如果模型等于基准模型,则为0。如果值小于0,说明模型还不如基准模型,很可能不存在任何线性关系。对R^2进行变形后,可以更方便计算。

1- sm.mean_squared_error(y_test,y_predict) / np.var(y_test)

【自己编写的metrics和sklearn中都封装了r2模块,可以进行调用运算】

sm.r2_score(y_test,y_predict)

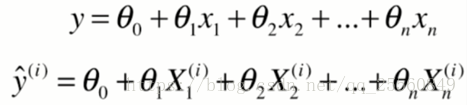

三、多元线性回归

1.求解思路

对上面的式子引入X0变量。

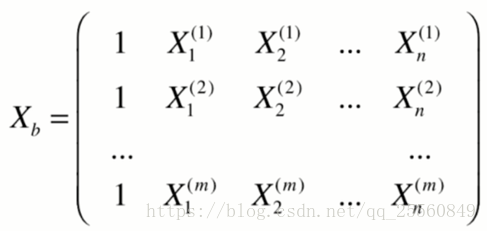

因此样本矩阵可以表示为:

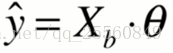

最终预测值可以表示为:

因此目标函数可以表示为如下,求得的解成为多元线性回归的正规方程解。

优点是不需要对数据进行归一化。缺点是时间复杂度为立方级别,优化后也会达到2.4次方,运算耗时间,另外一种求目标函数最小值的方法是梯度下降法,这在后续博客会有介绍。其实对于大多数问题,能够直接公式的解毕竟比较少,使用梯度下降法求解的比较多。

2.编程实现

这里θ区分截距和系数。

LinearRegression.py

import numpy as np

from .metrics import r2_score

class LinearRegression:

def __init__(self):

"""初始化Linear Regression模型"""

self.coef_ = None

self.intercept_ = None #截距

self._theta = None #系数

def fit_normal(self, X_train, y_train):

"""根据训练数据集X_train, y_train训练Linear Regression模型"""

assert X_train.shape[0] == y_train.shape[0], \

"the size of X_train must be equal to the size of y_train"

X_b = np.hstack([np.ones((len(X_train), 1)), X_train])

self._theta = np.linalg.inv(X_b.T.dot(X_b)).dot(X_b.T).dot(y_train)

self.intercept_ = self._theta[0]

self.coef_ = self._theta[1:]

return self

def predict(self, X_predict):

"""给定待预测数据集X_predict,返回表示X_predict的结果向量"""

assert self.intercept_ is not None and self.coef_ is not None, \

"must fit before predict!"

assert X_predict.shape[1] == len(self.coef_), \

"the feature number of X_predict must be equal to X_train"

X_b = np.hstack([np.ones((len(X_predict), 1)), X_predict])

return X_b.dot(self._theta)

def score(self, X_test, y_test):

"""根据测试数据集 X_test 和 y_test 确定当前模型的准确度"""

y_predict = self.predict(X_test)

return r2_score(y_test, y_predict)

def __repr__(self):

return "LinearRegression()"

现在测试代码,可以发现最终得到的预测分数比之前高,这是因为使用的特征值更多了。

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets boston = datasets.load_boston() x = boston.data #使用所有特征值 y = boston.target x = x[ y < 50.0] y = y[ y < 50.0] from ml.model_selection import train_test_split x_train,x_test,y_train,y_test = train_test_split(x,y,seed=666) from ml.LinearRegression import LinearRegression reg = LinearRegression() reg.fit_normal(x_train,y_train) reg.coef_ reg.intercept_ reg.score(x_test,y_test)

对于sklearn中的所封装的LinearRegression,最终得到的结果是一致的。

from sklearn.linear_model import LinearRegression reg = LinearRegression() reg.fit(x_train,y_train) reg.coef_ reg.intercept_ reg.score(x_test,y_test)

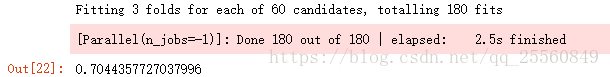

KNN Regressor

对于knn也有回归的算法。

from sklearn.neighbors import KNeighborsRegressor knnreg = KNeighborsRegressor() knnreg.fit(x_train,y_train) knnreg.score(x_test,y_test)

对超参数进行运算

from sklearn.neighbors import KNeighborsRegressor

from sklearn.model_selection import GridSearchCV

param_grid = [

{

'weights': ['uniform'],

'n_neighbors':[i for i in range(1,11)]

},

{

'weights': ['distance'],

'n_neighbors': [i for i in range(1,11)],

'p': [i for i in range(1,6)]

}

]

knnreg = KNeighborsRegressor()

grid_search = GridSearchCV(knnreg,param_grid,n_jobs=-1,verbose =1)

grid_search.fit(x_train,y_train)

grid_search.best_params_

#grid_search.best_score_使用的是交叉验证的方式,score的运算方式和回归中的score不一样

grid_search.best_estimator_.score(x_test,y_test)