What are the problems with a single Redis node?

- Single point of failure

- Limited capacity

- Limited load it can handle

Principles for building a Redis cluster

AKF scale cube, CAP theorem

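Preparation

- Use install_server.sh under /root/soft/redis-5.0.5/utils to install three services on ports 6379, 6380, and 6381

- Copy the configuration files under /etc/redis/ to the test directory

- Edit the configuration files

- daemonize no //run in the foreground

- appendonly no //disable AOF

- #logfile /var/log/redis_6379.log //comment out the log file so that logs are printed to the console

- Delete the persistence files under /var/lib/redis, but keep the directories

- Start the three instances 6379, 6380, and 6381, for example: redis-server ~/test/6379.conf

- Start one client per port, for example: redis-cli -p 6379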

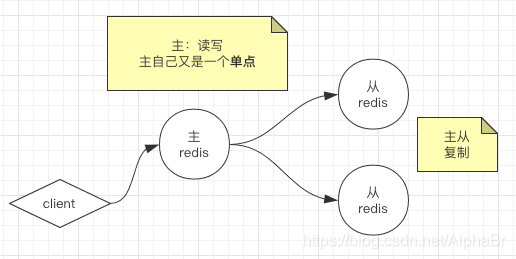

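Master-replica

What is the difference between master-backup and master-replica?

Master-backup: clients access only the master; if the master goes down, the backup takes over.

Master-replica: clients can access both the master and the replicas; a replica can be configured as read-only or read-write.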

Setting up master-replica replication

- replicaof localhost 6379 // run on each replica; since Redis 5.0 the command is replicaof (formerly slaveof)

- During synchronization, the master generates an RDB file and sends the full data set to the replica

191:M 18 Apr 2020 09:31:12.823 * Starting BGSAVE for SYNC with target: disk

191:M 18 Apr 2020 09:31:12.823 * Background saving started by pid 374

374:C 18 Apr 2020 09:31:12.828 * DB saved on disk

374:C 18 Apr 2020 09:31:12.831 * RDB: 0 MB of memory used by copy-on-write

191:M 18 Apr 2020 09:31:12.923 * Background saving terminated with success

191:M 18 Apr 2020 09:31:12.923 * Synchronization with replica 127.0.0.1:6380 succeeded

- During synchronization, the replica first clears its own data

256:S 18 Apr 2020 09:31:12.923 * MASTER <-> REPLICA sync: Flushing old data

256:S 18 Apr 2020 09:31:12.923 * MASTER <-> REPLICA sync: Loading DB in memory

- With AOF disabled, a replica that goes down and then reconnects to the master is updated incrementally

191:M 18 Apr 2020 09:45:11.727 # Connection with replica 127.0.0.1:6381 lost.

191:M 18 Apr 2020 09:45:53.210 * Replica 127.0.0.1:6381 asks for synchronization

191:M 18 Apr 2020 09:45:53.211 * Partial resynchronization request from 127.0.0.1:6381 accepted. Sending 135 bytes of backlog starting from offset 1202.

- With AOF enabled, a replica that goes down and then reconnects to the master gets a full resynchronization

- After the master goes down, a replica can run REPLICAOF no one to turn itself into a master
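The difference between the incremental and the full update above comes down to whether the master can still serve the replica from its replication backlog. The following is a minimal sketch of that decision, not Redis's actual code: the `Backlog` class and `can_partial_resync` function are hypothetical names, and the check is simplified (real Redis also accepts a second, older replication ID after a failover). The sample numbers are chosen to be consistent with the log line "Sending 135 bytes of backlog starting from offset 1202".

```python
from dataclasses import dataclass


@dataclass
class Backlog:
    replid: str        # replication ID of the master's current history
    first_offset: int  # offset of the oldest byte still held in the backlog
    length: int        # number of bytes currently held


def can_partial_resync(backlog: Backlog, replid: str, offset: int) -> bool:
    """Return True if the master can continue the stream from `offset`."""
    if replid != backlog.replid:
        return False  # the replica followed a different history: full sync
    # The requested offset must still be inside the backlog window.
    return backlog.first_offset <= offset <= backlog.first_offset + backlog.length


# Hypothetical backlog holding offsets 1..1336 (1336 - 1202 + 1 = 135 bytes to send).
backlog = Backlog(replid="abc", first_offset=1, length=1336)
print(can_partial_resync(backlog, "abc", 1202))  # True: partial resync
print(can_partial_resync(backlog, "abc", 5000))  # False: offset outside the window
print(can_partial_resync(backlog, "?", -1))      # False: replica has no saved state
```

A replica that restarts without a saved replication ID and offset asks with `?` and `-1`, which always leads to a full resynchronization.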

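Replication-related settings in the configuration file

#Whether a replica keeps answering queries (possibly with stale data) while it is syncing or has lost its link to the master

replica-serve-stale-data yes

#Replicas accept reads only

replica-read-only yes

#By default the master first writes the RDB file to disk and then sends it over the network; with high bandwidth, sending it directly over the network (yes) can be faster

repl-diskless-sync no

#Size of the replication backlog used for incremental (partial) resynchronization

repl-backlog-size 1mb

#Minimum number of healthy replicas the master needs before it accepts writes

min-replicas-to-write 3

#Maximum replication lag, in seconds, for a replica to count as healthy

min-replicas-max-lag 10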
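The last two settings work together. As a rough illustration of the rule, assuming the lag of each replica is the number of seconds since its last acknowledgement, the check can be sketched as follows. The function name `master_accepts_writes` is hypothetical; this is a sketch of the documented behavior, not Redis's implementation.

```python
def master_accepts_writes(replica_lags, min_replicas_to_write, min_replicas_max_lag):
    """Decide whether the master should accept a write.

    replica_lags: seconds since each connected replica last acknowledged.
    """
    # Setting either option to 0 disables the check.
    if min_replicas_to_write == 0 or min_replicas_max_lag == 0:
        return True
    # A replica counts as healthy when its lag does not exceed the maximum.
    healthy = sum(1 for lag in replica_lags if lag <= min_replicas_max_lag)
    return healthy >= min_replicas_to_write


# With the values shown above (3 and 10):
print(master_accepts_writes([1, 2], 3, 10))      # False: only two replicas exist
print(master_accepts_writes([1, 2, 12], 3, 10))  # False: one replica lags too far
print(master_accepts_writes([1, 2, 3], 3, 10))   # True
```

Note that with only two replicas, as in the three-instance setup used here, a value of 3 would make the master refuse every write.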

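We now have a master-replica setup, but the master is still a single node, so high availability is not guaranteed: if the master goes down, nothing can be written. Promoting a replica by hand every time is not practical. Redis anticipated this and provides Sentinel, which monitors the health of the master.

Sentinel

Create the Sentinel configuration file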

[root@d3c5a983ba7b test]# vi 26379.conf

[root@d3c5a983ba7b test]# cat 26379.conf

port 26379

sentinel monitor mymaster 127.0.0.1 6379 2

Make two copies for ports 26380 and 26381, and change the port number inside each file

[root@d3c5a983ba7b test]# cp 26379.conf 26380.conf

[root@d3c5a983ba7b test]# cp 26379.conf 26381.conf

[root@d3c5a983ba7b test]# vi 26380.conf

[root@d3c5a983ba7b test]# vi 26381.conf

[root@d3c5a983ba7b test]# cat 26380.conf

port 26380

sentinel monitor mymaster 127.0.0.1 6379 2

[root@d3c5a983ba7b test]# cat 26381.conf

port 26381

sentinel monitor mymaster 127.0.0.1 6379 2

sentinel monitor mymaster 127.0.0.1 6379 2

This line tells Sentinel to monitor a master named mymaster at IP address 127.0.0.1 and port 6379. At least 2 Sentinels must agree before this master is considered failed.
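To make the role of the final `2` concrete, here is a small sketch of how the quorum is used, based on my reading of the Sentinel documentation: the quorum decides when the master is marked as failed, while actually starting a failover additionally requires a majority of all Sentinels. The function names are hypothetical and the logic is simplified.

```python
def objectively_down(down_votes: int, quorum: int) -> bool:
    """The master is considered failed once `quorum` Sentinels agree."""
    return down_votes >= quorum


def failover_authorized(leader_votes: int, total_sentinels: int, quorum: int) -> bool:
    """A Sentinel may lead the failover only with enough votes from its peers."""
    majority = total_sentinels // 2 + 1
    return leader_votes >= max(majority, quorum)


# Three Sentinels with quorum 2, as configured above.
print(objectively_down(1, 2))        # False: one Sentinel alone is not enough
print(objectively_down(2, 2))        # True
print(failover_authorized(2, 3, 2))  # True: 2 of 3 is a majority
print(failover_authorized(1, 3, 2))  # False
```

This is why an odd number of Sentinels (three here) is a common choice: a majority still exists after one of them fails.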

Start Sentinel

[root@d3c5a983ba7b test]# redis-server ./26379.conf --sentinel

447:X 19 Apr 2020 07:54:19.933 # +monitor master mymaster 127.0.0.1 6379 quorum 2

447:X 19 Apr 2020 07:54:19.939 * +slave slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379

447:X 19 Apr 2020 07:54:19.940 * +slave slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379

447:X 19 Apr 2020 07:57:20.638 * +fix-slave-config slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379

447:X 19 Apr 2020 07:57:20.638 * +fix-slave-config slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379

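Once all three Sentinels are running, try stopping the master on port 6379. When the Sentinels detect the failure, they pick one of the replicas as the new master and reconfigure 6379 as a replica.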
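How the new master is picked can be sketched as a sort over the candidate replicas. The ordering below (lower `replica-priority` first, then the larger replication offset, then the smaller run ID) follows the Sentinel documentation as I understand it; the `Replica` class, the run IDs, and the offsets are made up for illustration, and the real selection also filters out replicas that have been disconnected for too long.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    addr: str
    priority: int     # replica-priority; 0 means "never promote"
    repl_offset: int  # how much of the master's stream was processed
    run_id: str


def pick_new_master(replicas: List[Replica]) -> Optional[Replica]:
    """Return the preferred promotion candidate, or None if there is none."""
    candidates = [r for r in replicas if r.priority != 0]
    if not candidates:
        return None
    # Lower priority wins, then higher offset, then smaller run ID.
    return min(candidates, key=lambda r: (r.priority, -r.repl_offset, r.run_id))


replicas = [
    Replica("127.0.0.1:6380", priority=100, repl_offset=1500, run_id="bbb"),
    Replica("127.0.0.1:6381", priority=100, repl_offset=1200, run_id="aaa"),
]
print(pick_new_master(replicas).addr)  # 127.0.0.1:6380
```

With equal priorities, the replica that has received more data from the old master is preferred.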

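Communication between Sentinels

Sentinels learn about each other through publish/subscribe: each one publishes to the __sentinel__:hello channel of the monitored Redis instances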

[root@d3c5a983ba7b test]# redis-cli -p 6381

127.0.0.1:6381> PSUBSCRIBE *

Reading messages... (press Ctrl-C to quit)

1) "psubscribe"

2) "*"

3) (integer) 1

1) "pmessage"

2) "*"

3) "__sentinel__:hello"

4) "127.0.0.1,26379,2dac5b63b2192f8550346684105c5c3dc4afec83,1,mymaster,127.0.0.1,6380,1"

1) "pmessage"

2) "*"

3) "__sentinel__:hello"

4) "127.0.0.1,26381,714399b0f7896e9431ed38a67bf0310ecdb5bb9b,1,mymaster,127.0.0.1,6380,1"

1) "pmessage"

2) "*"

3) "__sentinel__:hello"

4) "127.0.0.1,26380,197f21b433e1e5f2cefc8c24976e32c5a56926e6,1,mymaster,127.0.0.1,6380,1"

1) "pmessage"

2) "*"

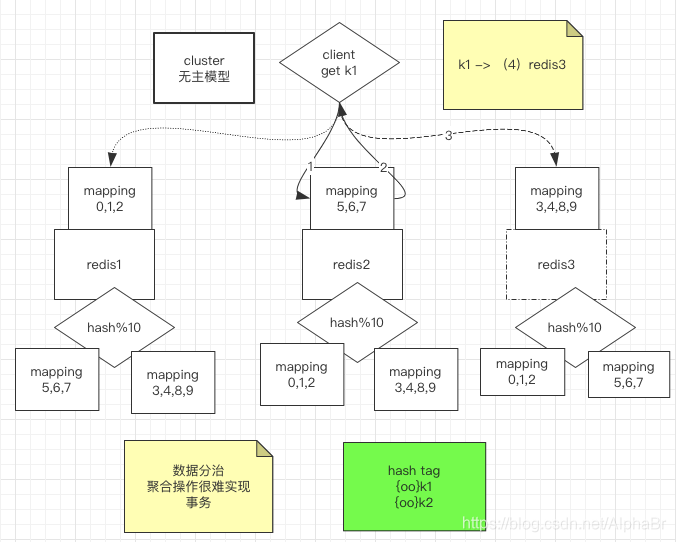
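Each payload on the `__sentinel__:hello` channel is a comma-separated record. As far as I know the eight fields are the Sentinel's IP, port, and run ID, its current epoch, and then the master's name, IP, port, and configuration epoch. The sketch below parses one of the messages captured above; the `Hello` type and `parse_hello` function are names chosen for this example.

```python
from typing import NamedTuple


class Hello(NamedTuple):
    sentinel_ip: str
    sentinel_port: int
    sentinel_run_id: str
    current_epoch: int
    master_name: str
    master_ip: str
    master_port: int
    master_config_epoch: int


def parse_hello(payload: str) -> Hello:
    """Split a hello payload into its eight comma-separated fields."""
    ip, port, run_id, epoch, name, m_ip, m_port, m_epoch = payload.split(",")
    return Hello(ip, int(port), run_id, int(epoch),
                 name, m_ip, int(m_port), int(m_epoch))


msg = ("127.0.0.1,26379,2dac5b63b2192f8550346684105c5c3dc4afec83,"
       "1,mymaster,127.0.0.1,6380,1")
hello = parse_hello(msg)
print(hello.sentinel_port)  # 26379
print(hello.master_port)    # 6380
```

In the captured messages the master port is 6380, which suggests the capture was taken after the failover away from 6379.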

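Cluster

Redis Cluster does not use consistent hashing; it introduces the concept of hash slots instead.

A Redis cluster has 16384 hash slots. The slot of a key is the CRC16 checksum of the key modulo 16384. Each node in the cluster is responsible for a subset of the hash slots. For example, in a cluster with 3 nodes:

Node A holds hash slots 0 to 5500.

Node B holds hash slots 5501 to 11000.

Node C holds hash slots 11001 to 16383.

This layout makes it easy to add or remove nodes. To add a new node D, I move some of the slots from nodes A, B, and C to D. To remove node A, I move A's slots to B and C and then remove the now-empty node A from the cluster. Because moving hash slots from one node to another does not require stopping the service, adding or removing nodes, or changing the number of hash slots a node holds, does not make the cluster unavailable.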
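The key-to-slot mapping is small enough to reproduce by hand. The sketch below assumes the CRC16 variant is CRC-16/XMODEM (polynomial 0x1021, initial value 0), which is what the Redis Cluster specification describes; the function names are my own, and hash tags are ignored here.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC-16/XMODEM: polynomial 0x1021, initial value 0x0000."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 hash slots (hash tags not handled)."""
    return crc16_xmodem(key.encode()) % 16384


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3, the standard check value
print(key_slot("k1"))                   # 12706
```

Slot 12706 is the same slot that `redis-cli -c` reports for `k1` in the redirect shown in this article.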

When a cluster is started with the create-cluster script, the slots are allocated first

[root@d3c5a983ba7b create-cluster]# ./create-cluster start

Starting 30001

Starting 30002

Starting 30003

Starting 30004

Starting 30005

Starting 30006

[root@d3c5a983ba7b create-cluster]# ./create-cluster create

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 127.0.0.1:30005 to 127.0.0.1:30001

Adding replica 127.0.0.1:30006 to 127.0.0.1:30002

Adding replica 127.0.0.1:30004 to 127.0.0.1:30003
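The three slot ranges printed above are not exactly equal because 16384 is not divisible by 3. The following sketch reproduces them with a cursor-and-round scheme that, to my understanding, mirrors what redis-cli does; treat the details as an assumption rather than a copy of its source. The `allocate_slots` name is hypothetical.

```python
import math

TOTAL_SLOTS = 16384


def allocate_slots(masters: int):
    """Split the slot space into one contiguous range per master."""
    per_node = TOTAL_SLOTS / masters
    ranges = []
    first, cursor = 0, 0.0
    for i in range(masters):
        # Round half up to the nearest slot number.
        last = math.floor(cursor + per_node - 1 + 0.5)
        # The final master always takes everything up to the last slot.
        if last > TOTAL_SLOTS - 1 or i == masters - 1:
            last = TOTAL_SLOTS - 1
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


print(allocate_slots(3))  # [(0, 5460), (5461, 10922), (10923, 16383)]
```

The middle master ends up with 5462 slots and the other two with 5461 each.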

[root@d3c5a983ba7b create-cluster]# redis-cli -c -p 30001

127.0.0.1:30001> set k1 aaa

-> Redirected to slot [12706] located at 127.0.0.1:30003

OK

127.0.0.1:30003> get k1

"aaa"

Transactions

Use a { } hash-tag prefix so that all keys in the transaction map to the same slot

127.0.0.1:30003> set {xx}k1 abc

OK

127.0.0.1:30003> watch {xx}k1

OK

127.0.0.1:30003> MULTI

OK

127.0.0.1:30003> set {xx}k2 222

QUEUED

127.0.0.1:30003> exec

1) OK
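The reason the `{xx}` prefix works is that, when a key contains a non-empty `{...}` section, only the text inside the first pair of braces is hashed. Here is a minimal sketch of that rule, assuming CRC-16/XMODEM as the checksum (computed with `binascii.crc_hqx` and an initial value of 0); `key_slot` is a name chosen for this example.

```python
import binascii


def key_slot(key: str) -> int:
    """Compute the hash slot of a key, honoring {hash tags}."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Use the tag only if a closing brace exists and the tag is not empty.
        if end > start + 1:
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % 16384


print(key_slot("{xx}k1") == key_slot("{xx}k2"))  # True: same tag, same slot
print(key_slot("{xx}k1") == key_slot("xx"))      # True: only "xx" is hashed
print(key_slot("k1"))                            # 12706, no tag involved
```

Since `{xx}k1` and `{xx}k2` land in the same slot, they live on the same node, so WATCH, MULTI, and EXEC can cover both keys.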

Allocating slots manually

First clean up the environment

[root@d3c5a983ba7b create-cluster]# ./create-cluster stop

Stopping 30001

Stopping 30002

Stopping 30003

Stopping 30004

Stopping 30005

Stopping 30006

[root@d3c5a983ba7b create-cluster]# ./create-cluster clean

[root@d3c5a983ba7b create-cluster]# ll

total 8

-rwxrwxr-x 1 root root 2344 May 15 2019 create-cluster

-rw-rw-r-- 1 root root 1317 May 15 2019 README

Start the six instances

[root@d3c5a983ba7b create-cluster]# ./create-cluster start

Starting 30001

Starting 30002

Starting 30003

Starting 30004

Starting 30005

Starting 30006

[root@d3c5a983ba7b create-cluster]# redis-cli --cluster help

[root@d3c5a983ba7b create-cluster]# redis-cli --cluster create 127.0.0.1:30001 127.0.0.1:30002 127.0.0.1:30003 127.0.0.1:30004 127.0.0.1:30005 127.0.0.1:30006 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 127.0.0.1:30005 to 127.0.0.1:30001

Adding replica 127.0.0.1:30006 to 127.0.0.1:30002

Adding replica 127.0.0.1:30004 to 127.0.0.1:30003

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: 88f139122e9d732d7ec8212610e37dd651abe715 127.0.0.1:30001

slots:[0-5460] (5461 slots) master

M: ada54ad012927c376cd0b565e8d712d7624a12fd 127.0.0.1:30002

slots:[5461-10922] (5462 slots) master

M: 5486505019e08ebddab6b17ebfad4e472b0a5ce5 127.0.0.1:30003

slots:[10923-16383] (5461 slots) master

S: f9a9e50ad599fad5813c2122cd9f1399b55d8310 127.0.0.1:30004

replicates 88f139122e9d732d7ec8212610e37dd651abe715

S: b7732b54b2104a9abda708ce5ab5990a3501a5d8 127.0.0.1:30005

replicates ada54ad012927c376cd0b565e8d712d7624a12fd

S: 48fcd0568951bf256d8465ca9c846181630a0e77 127.0.0.1:30006

replicates 5486505019e08ebddab6b17ebfad4e472b0a5ce5

Can I set the above configuration? (type 'yes' to accept): yes

[root@d3c5a983ba7b create-cluster]# redis-cli -c -p 30001

127.0.0.1:30001> set k1 222

-> Redirected to slot [12706] located at 127.0.0.1:30003

OK

reshard

redis-cli --cluster reshard 127.0.0.1:30001

Then enter the number of slots to move, the node that receives them, and the nodes to take them from

[root@d3c5a983ba7b create-cluster]# redis-cli --cluster reshard 127.0.0.1:30001

>>> Performing Cluster Check (using node 127.0.0.1:30001)

M: 88f139122e9d732d7ec8212610e37dd651abe715 127.0.0.1:30001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: b7732b54b2104a9abda708ce5ab5990a3501a5d8 127.0.0.1:30005

slots: (0 slots) slave

replicates ada54ad012927c376cd0b565e8d712d7624a12fd

M: ada54ad012927c376cd0b565e8d712d7624a12fd 127.0.0.1:30002

slots:[5461-11922] (6462 slots) master

1 additional replica(s)

S: f9a9e50ad599fad5813c2122cd9f1399b55d8310 127.0.0.1:30004

slots: (0 slots) slave

replicates 88f139122e9d732d7ec8212610e37dd651abe715

M: 5486505019e08ebddab6b17ebfad4e472b0a5ce5 127.0.0.1:30003

slots:[11923-16383] (4461 slots) master

1 additional replica(s)

S: 48fcd0568951bf256d8465ca9c846181630a0e77 127.0.0.1:30006

slots: (0 slots) slave

replicates 5486505019e08ebddab6b17ebfad4e472b0a5ce5

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)?

[root@d3c5a983ba7b create-cluster]# redis-cli --cluster check 127.0.0.1:30001

127.0.0.1:30001 (88f13912...) -> 0 keys | 5461 slots | 1 slaves.

127.0.0.1:30002 (ada54ad0...) -> 0 keys | 6462 slots | 1 slaves.

127.0.0.1:30003 (54865050...) -> 1 keys | 4461 slots | 1 slaves.

[OK] 1 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:30001)

M: 88f139122e9d732d7ec8212610e37dd651abe715 127.0.0.1:30001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: b7732b54b2104a9abda708ce5ab5990a3501a5d8 127.0.0.1:30005

slots: (0 slots) slave

replicates ada54ad012927c376cd0b565e8d712d7624a12fd

M: ada54ad012927c376cd0b565e8d712d7624a12fd 127.0.0.1:30002

slots:[5461-11922] (6462 slots) master

1 additional replica(s)

S: f9a9e50ad599fad5813c2122cd9f1399b55d8310 127.0.0.1:30004

slots: (0 slots) slave

replicates 88f139122e9d732d7ec8212610e37dd651abe715

M: 5486505019e08ebddab6b17ebfad4e472b0a5ce5 127.0.0.1:30003

slots:[11923-16383] (4461 slots) master

1 additional replica(s)

S: 48fcd0568951bf256d8465ca9c846181630a0e77 127.0.0.1:30006

slots: (0 slots) slave

replicates 5486505019e08ebddab6b17ebfad4e472b0a5ce5

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.