Preface

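This section covers linear regression, which is:

- simple and easy to understand

- highly interpretable

- the foundation of more powerful models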

This section includes:

- implementing the underlying logic ourselves

- using the scikit-learn library

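1. Simple Linear Regression

Principle

Simple linear regression is linear regression with only one feature: the model is a straight line $\hat{y} = ax + b$.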

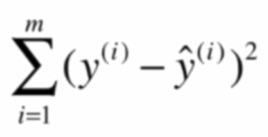

The goal is to make the following difference between the true values and the predictions as small as possible:

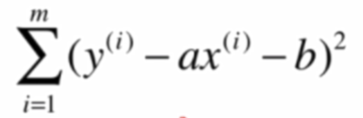

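Substituting $\hat{y}^{(i)} = ax^{(i)} + b$ gives

$$\sum_{i=1}^{m}\left(y^{(i)} - ax^{(i)} - b\right)^2$$

which is called the loss function.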

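This is the general recipe for parametric learning algorithms: define a loss function, then find the parameters that minimize it.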

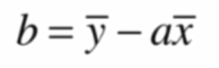

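The minimization uses least squares. Take the partial derivatives of the loss with respect to a and b, set them to zero, and solve. This gives the formulas that the code below implements:

$$a = \frac{\sum_{i=1}^{m}(x^{(i)} - \bar{x})(y^{(i)} - \bar{y})}{\sum_{i=1}^{m}(x^{(i)} - \bar{x})^2}, \qquad b = \bar{y} - a\bar{x}$$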
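The closed-form solution can be checked numerically on synthetic data. This is a small sketch; the data, the seed, and the helper names `fit_loop` and `fit_vectorized` are assumptions for illustration. It computes a and b twice: once with an explicit loop, and once with dot products, mirroring the two implementations below. It then compares both results against NumPy's degree-1 least-squares fit `np.polyfit`.

```python
import numpy as np

def fit_loop(x, y):
    """Closed-form least squares for y ≈ a*x + b, accumulating the sums in a loop."""
    x_mean, y_mean = np.mean(x), np.mean(y)
    num, den = 0.0, 0.0
    for xi, yi in zip(x, y):
        num += (xi - x_mean) * (yi - y_mean)
        den += (xi - x_mean) ** 2
    a = num / den
    return a, y_mean - a * x_mean

def fit_vectorized(x, y):
    """Same formulas, with both sums written as dot products."""
    x_mean, y_mean = np.mean(x), np.mean(y)
    dx = x - x_mean
    a = dx.dot(y - y_mean) / dx.dot(dx)
    return a, y_mean - a * x_mean

# Hypothetical data: true line y = 2x + 3 plus Gaussian noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=100)
y = 2.0 * x + 3.0 + rng.normal(0, 1.0, size=100)

a1, b1 = fit_loop(x, y)
a2, b2 = fit_vectorized(x, y)
a3, b3 = np.polyfit(x, y, deg=1)  # returns [slope, intercept]

print(a1, b1)
print(np.allclose([a1, b1], [a2, b2]), np.allclose([a1, b1], [a3, b3]))
```

The dot-product form computes the same two sums without a Python-level loop. This is why it is typically much faster on large arrays.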

Implementation

A straightforward implementation:

```python
import numpy as np

"""Simple linear regression"""

class SimpleLinearRegression1:

    def __init__(self):
        """Initialize the Simple Linear Regression model"""
        self.a_ = None
        self.b_ = None

    def fit(self, x_train, y_train):
        """Train the Simple Linear Regression model on the training set x_train, y_train"""
        assert x_train.ndim == 1, \
            "Simple Linear Regressor can only solve single feature training data."
        assert len(x_train) == len(y_train), \
            "the size of x_train must be equal to the size of y_train"

        x_mean = np.mean(x_train)
        y_mean = np.mean(y_train)

        num = 0.0  # numerator of a
        d = 0.0    # denominator of a
        for x, y in zip(x_train, y_train):
            num += (x - x_mean) * (y - y_mean)
            d += (x - x_mean) ** 2

        # Least-squares solution
        self.a_ = num / d
        self.b_ = y_mean - self.a_ * x_mean

        return self

    def predict(self, x_predict):
        """Given a dataset x_predict, return the vector of predictions for x_predict"""
        assert x_predict.ndim == 1, \
            "Simple Linear Regressor can only solve single feature training data."
        assert self.a_ is not None and self.b_ is not None, \
            "must fit before predict!"

        return np.array([self._predict(x) for x in x_predict])

    def _predict(self, x_single):
        """Given a single input x_single, return its predicted value"""
        return self.a_ * x_single + self.b_

    def __repr__(self):
        return "SimpleLinearRegression1()"
```

The vectorized version is as follows:

```python
import numpy as np

"""Vectorized simple linear regression"""

class SimpleLinearRegression2:

    def __init__(self):
        """Initialize the Simple Linear Regression model"""
        self.a_ = None
        self.b_ = None

    def fit(self, x_train, y_train):
        """Train the Simple Linear Regression model on the training set x_train, y_train"""
        assert x_train.ndim == 1, \
            "Simple Linear Regressor can only solve single feature training data."
        assert len(x_train) == len(y_train), \
            "the size of x_train must be equal to the size of y_train"

        x_mean = np.mean(x_train)
        y_mean = np.mean(y_train)

        # The two sums from the loop version become dot products
        self.a_ = (x_train - x_mean).dot(y_train - y_mean) / (x_train - x_mean).dot(x_train - x_mean)
        self.b_ = y_mean - self.a_ * x_mean

        return self

    def predict(self, x_predict):
        """Given a dataset x_predict, return the vector of predictions for x_predict"""
        assert x_predict.ndim == 1, \
            "Simple Linear Regressor can only solve single feature training data."
        assert self.a_ is not None and self.b_ is not None, \
            "must fit before predict!"

        return np.array([self._predict(x) for x in x_predict])

    def _predict(self, x_single):
        """Given a single input x_single, return its predicted value"""
        return self.a_ * x_single + self.b_

    def __repr__(self):
        return "SimpleLinearRegression2()"
```

2. Performance Evaluation

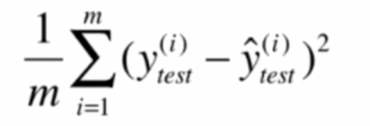

The commonly used evaluation metrics are the following.

Mean squared error (MSE)

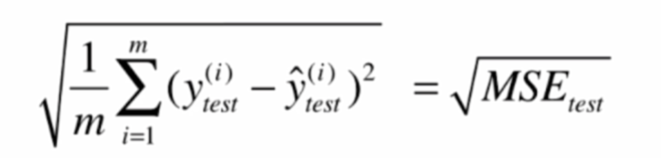

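Root mean squared error (RMSE)

RMSE is the square root of MSE. Choosing between the two is a matter of units. MSE is in the squared units of y, while RMSE is in the same units as y, which makes it easier to interpret.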

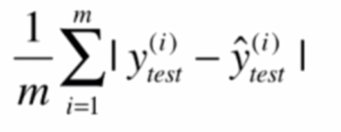
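Here is a quick sketch of the units point, using made-up values. Rescaling y by a factor of 10 (for example, switching to a unit ten times smaller) multiplies MSE by 100 but RMSE only by 10. RMSE therefore stays on the same scale as the target.

```python
import numpy as np

def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def rmse(y_true, y_pred):
    return np.sqrt(mse(y_true, y_pred))

# Hypothetical targets and predictions
y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 8.0])

# Express the same values in a unit ten times smaller
scale = 10.0
print(mse(y_true, y_pred), mse(scale * y_true, scale * y_pred))    # MSE grows by 10**2
print(rmse(y_true, y_pred), rmse(scale * y_true, scale * y_pred))  # RMSE grows by 10
```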

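Mean absolute error (MAE)

R squared ($R^2$)

The numerator is the error produced by the model's predictions. The denominator is the error of a baseline model that ignores x and always predicts the mean $\bar{y}$.

Larger is better. $R^2$ is at most 1, which means perfect predictions, and it equals 0 when the model does no better than the baseline. A value below 0 means the model is worse than the baseline, which suggests the model has serious problems and the data may have no linear relationship.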
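Written out for $m$ samples, MAE and $R^2$ take the following form. The second expression for $R^2$ is what the r2_score function below computes. It works because np.var divides by $m$, just as MSE does.

$$\mathrm{MAE} = \frac{1}{m}\sum_{i=1}^{m}\left|y^{(i)} - \hat{y}^{(i)}\right|$$

$$R^2 = 1 - \frac{\sum_{i=1}^{m}\left(\hat{y}^{(i)} - y^{(i)}\right)^2}{\sum_{i=1}^{m}\left(\bar{y} - y^{(i)}\right)^2} = 1 - \frac{\mathrm{MSE}(\hat{y}, y)}{\mathrm{Var}(y)}$$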

The implementation:

```python
import numpy as np
from math import sqrt

"""Evaluation metrics"""

def accuracy_score(y_true, y_predict):
    """Compute the accuracy between y_true and y_predict (a classification metric)"""
    assert len(y_true) == len(y_predict), \
        "the size of y_true must be equal to the size of y_predict"

    return np.sum(y_true == y_predict) / len(y_true)

def mean_squared_error(y_true, y_predict):
    """Compute the MSE between y_true and y_predict"""
    assert len(y_true) == len(y_predict), \
        "the size of y_true must be equal to the size of y_predict"

    return np.sum((y_true - y_predict) ** 2) / len(y_true)

def root_mean_squared_error(y_true, y_predict):
    """Compute the RMSE between y_true and y_predict"""
    return sqrt(mean_squared_error(y_true, y_predict))

def mean_absolute_error(y_true, y_predict):
    """Compute the MAE between y_true and y_predict"""
    assert len(y_true) == len(y_predict), \
        "the size of y_true must be equal to the size of y_predict"

    return np.sum(np.absolute(y_true - y_predict)) / len(y_true)

def r2_score(y_true, y_predict):
    """Compute R squared between y_true and y_predict"""
    # R^2 = 1 - MSE / Var(y); np.var divides by m, matching MSE
    return 1 - mean_squared_error(y_true, y_predict) / np.var(y_true)
```

3. Multiple Linear Regression

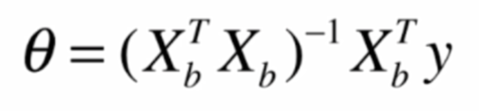

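Put simply, it is the same model written in matrix form. The feature matrix $X$ is extended with a column of ones to give $X_b$. The predictions are then $\hat{y} = X_b\theta$, where $\theta_0$ is the intercept and the remaining entries of $\theta$ are the feature coefficients.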

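Normal equation solution:

$$\theta = (X_b^T X_b)^{-1} X_b^T y$$

- Time complexity is about O(n^3) in the number of features n, because of the matrix inversion

- No feature normalization is needed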
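Here is a minimal sketch of the normal equation on synthetic data; the dimensions, true parameters, and noise level are assumptions. It builds $X_b$ the same way as the class below. As a cross-check, it compares the result with `np.linalg.lstsq`, which solves the same least-squares problem without explicitly inverting $X_b^T X_b$.

```python
import numpy as np

# Hypothetical data: 3 features with known intercept and coefficients
rng = np.random.default_rng(42)
m, n = 200, 3
X = rng.normal(size=(m, n))
true_intercept = 4.0
true_coef = np.array([1.5, -2.0, 0.5])
y = true_intercept + X.dot(true_coef) + rng.normal(scale=0.1, size=m)

# Prepend a column of ones so that theta[0] is the intercept
X_b = np.hstack([np.ones((m, 1)), X])

# Normal equation: theta = (X_b^T X_b)^(-1) X_b^T y
theta_normal = np.linalg.inv(X_b.T.dot(X_b)).dot(X_b.T).dot(y)

# Same least-squares problem, solved without forming the inverse
theta_lstsq, *_ = np.linalg.lstsq(X_b, y, rcond=None)

print(theta_normal)  # approximately [4.0, 1.5, -2.0, 0.5]
print(np.allclose(theta_normal, theta_lstsq))
```

In practice, `np.linalg.lstsq` (or `np.linalg.pinv`) is generally preferred over `np.linalg.inv`. Explicitly inverting a nearly singular $X_b^T X_b$ can be numerically unstable.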

The implementation:

```python
import numpy as np
from sklearn.metrics import r2_score

"""Multiple linear regression"""

class LinearRegression:

    def __init__(self):
        """Initialize the Linear Regression model"""
        self.coef_ = None       # feature coefficients
        self.intercept_ = None  # intercept
        self._theta = None      # full parameter vector θ = [intercept, coefficients]

    def fit_normal(self, X_train, y_train):
        """Train the Linear Regression model on X_train, y_train with the normal equation"""
        assert X_train.shape[0] == y_train.shape[0], \
            "the size of X_train must be equal to the size of y_train"

        # Prepend a column of ones so that θ[0] acts as the intercept
        X_b = np.hstack([np.ones((len(X_train), 1)), X_train])
        # Normal equation: θ = (X_b^T X_b)^(-1) X_b^T y
        self._theta = np.linalg.inv(X_b.T.dot(X_b)).dot(X_b.T).dot(y_train)

        self.intercept_ = self._theta[0]
        self.coef_ = self._theta[1:]

        return self

    def predict(self, X_predict):
        """Given a dataset X_predict, return the vector of predictions for X_predict"""
        assert self.intercept_ is not None and self.coef_ is not None, \
            "must fit before predict!"
        assert X_predict.shape[1] == len(self.coef_), \
            "the feature number of X_predict must be equal to X_train"

        X_b = np.hstack([np.ones((len(X_predict), 1)), X_predict])
        return X_b.dot(self._theta)

    def score(self, X_test, y_test):
        """Return the R^2 of the current model on the test set X_test, y_test"""
        y_predict = self.predict(X_test)
        return r2_score(y_test, y_predict)

    def __repr__(self):
        return "LinearRegression()"
```

4. Using scikit-learn
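As a quick sanity check, here is a sketch comparing scikit-learn's `LinearRegression` with the normal equation from the previous section. It uses synthetic data from `make_regression`, with arbitrary sample count, noise, and bias. Synthetic data is used partly because `datasets.load_boston`, used in the script below, was removed in scikit-learn 1.2.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score

# Hypothetical synthetic regression data
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, bias=3.0, random_state=0)

lin_reg = LinearRegression().fit(X, y)

# The same fit via the normal equation, as in the hand-written class
X_b = np.hstack([np.ones((len(X), 1)), X])
theta = np.linalg.inv(X_b.T.dot(X_b)).dot(X_b.T).dot(y)

print(lin_reg.intercept_, theta[0])
print(np.allclose(lin_reg.coef_, theta[1:]))
print(lin_reg.score(X, y), r2_score(y, lin_reg.predict(X)))  # score() returns R^2
```

The `intercept_` and `coef_` attributes play the same roles as in the hand-written class, and for regressors `score` returns $R^2$.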

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression

"""Linear regression in scikit-learn"""

# Prepare the dataset
# Note: load_boston was removed in scikit-learn 1.2, so this line only runs on older versions
boston = datasets.load_boston()
X = boston.data
y = boston.target
# Drop samples whose target sits at the dataset's cap of 50.0
X = X[y < 50.0]
y = y[y < 50.0]
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

# Linear regression
lin_reg = LinearRegression()
lin_reg.fit(X_train, y_train)
score = lin_reg.score(X_test, y_test)  # R^2 on the test set
print(score)

"""kNN Regressor"""
from sklearn.preprocessing import StandardScaler
from sklearn.neighbors import KNeighborsRegressor
from sklearn.model_selection import GridSearchCV

# Prepare the data: standardize the features (kNN relies on distances)
standardScaler = StandardScaler()
standardScaler.fit(X_train)  # StandardScaler ignores y, so fitting on X_train is enough
X_train_standard = standardScaler.transform(X_train)
X_test_standard = standardScaler.transform(X_test)

# kNN Regressor with default parameters
knn_reg = KNeighborsRegressor()
knn_reg.fit(X_train_standard, y_train)
score = knn_reg.score(X_test_standard, y_test)
print(score)

# Grid search over kNN hyperparameters
param_grid = [
    {
        "weights": ["uniform"],
        "n_neighbors": [i for i in range(1, 11)]
    },
    {
        "weights": ["distance"],
        "n_neighbors": [i for i in range(1, 11)],
        "p": [i for i in range(1, 6)]
    }
]

knn_reg = KNeighborsRegressor()
grid_search = GridSearchCV(knn_reg, param_grid, n_jobs=-1, verbose=1)
grid_search.fit(X_train_standard, y_train)

print(grid_search.best_params_)  # best parameter combination
print(grid_search.best_score_)   # best mean cross-validated score (R^2 by default)
print(grid_search.best_estimator_.score(X_test_standard, y_test))  # R^2 of the best model on the test set
```

Conclusion

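This section covered linear regression fairly comprehensively.

It is the foundation of many models.

Compared with kNN, linear regression makes an assumption about the data: it assumes the target depends linearly on the features.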
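To illustrate that assumption, here is a sketch on deliberately nonlinear synthetic data: $y = x^2$ plus noise, with the data and seeds as assumptions. A straight line cannot follow a parabola that is symmetric around 0. Linear regression's test-set $R^2$ should therefore be close to 0. kNN makes no assumption about the functional form, so it should score much higher.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor
from sklearn.model_selection import train_test_split

# Hypothetical nonlinear data: y = x^2 + noise on a range symmetric around 0
rng = np.random.default_rng(1)
x = rng.uniform(-3, 3, size=400)
y = x ** 2 + rng.normal(scale=0.3, size=400)
X = x.reshape(-1, 1)  # scikit-learn expects a 2-D feature matrix

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

lin_score = LinearRegression().fit(X_train, y_train).score(X_test, y_test)
knn_score = KNeighborsRegressor().fit(X_train, y_train).score(X_test, y_test)

print(lin_score)  # R^2 of the straight-line fit
print(knn_score)  # R^2 of kNN with the default 5 neighbors
```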