离线处理之业务数据采集、生成用户画像、推广效果分析以及知识点总结

- 1. Azkaban周期性调度任务

- 1.1. 总览

- 1.2. 调度脚本

- 1.3. [Azkaban安装并设置定时任务Schedule以及邮件发送接收](https://blog.csdn.net/aizhenshi/article/details/80828726?utm_source=blogxgwz5)

- 2. 业务数据采集

- 2.1. 后台通过`logback`把业务接口日志写入到本地文件

- 2.2. 通过Flume采集数据到Kafka

- 2.3. Storm消费Kafka数据,写入Hbase

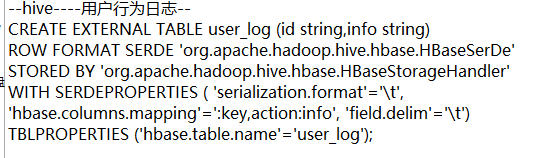

- 2.4. [Hive和Hbase的整合](https://blog.csdn.net/fanjianhai/article/details/106016931)

- 2.5. 通过Sqoop把业务数据从PostgreSql导入Hive数仓

- 3. 生成用户画像

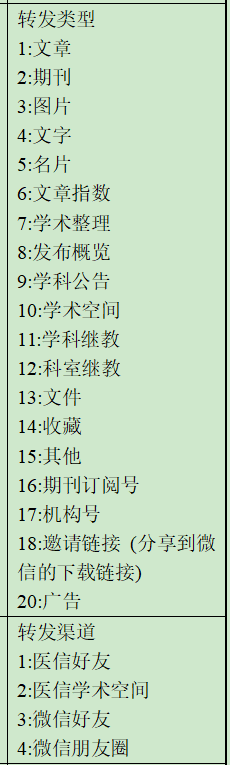

- 4. 推广效果分析

- 5. 知识点总结

- 5.1. Tomcat

- 5.1.1. [Tomcat使用详细教程](https://blog.csdn.net/weixin_39657319/article/details/83268378)

- 5.1.2. [用脚本实现windows与linux之间文件的传输 ](https://blog.csdn.net/xqhrs232/article/details/78403080)

- 5.2. Hive Hql常用方法总结

- 5.2.1. [ROW_NUMBER() OVER函数的基本用法](https://jingyan.baidu.com/article/9989c74604a644f648ecfef3.html)

- 5.2.2. [SQL语言-- SELECT CASE WHEN THEN](https://blog.csdn.net/qq_34777600/article/details/81699270)

- 5.2.3. [Hive列转行 (Lateral View + explode)详解](https://zhuanlan.zhihu.com/p/115913870)

- 5.2.4. [HiveSQL行转列lateral view explore()以及连接concat_ws()和列转行collect_list()&collect_set()区别的使用案例](https://blog.csdn.net/weixin_39043567/article/details/90666521)

- 5.2.5. [Hive SQL grouping sets 用法](https://www.cnblogs.com/Allen-rg/p/10648231.html)

- 6. 寄语:知己知彼,不狂不馁,仔细地找准了自己生命的目标,板浆摇橹向人生茫茫之海努力划去。

1. Azkaban周期性调度任务

1.1. 总览

1.2. 调度脚本

-

system_pre.propertiesdbUrl=jdbc:postgresql://rm-2zeqbua7952ni0c14.pg.rds.aliyuncs.com:3433/medchat userName=****** password=****** -

bass_get_data.jobtype=command command=sh sqoop_coll.sh ${dbUrl} ${userName} ${password} -

bass_serv_profile.jobtype=command command=sh bass_serv_profile.sh dependencies=bass_get_data -

bass_serv_profile.sh#!/bin/bash echo "run profile start `date '+%Y-%m-%d,%H:%m:%s'`" lastDay=`date -d "yesterday" +%Y-%m-%d` #生成用户画像 hive -hiveconf lastDay=$lastDay -f serv_profile.sql echo "run profile end `date '+%Y-%m-%d,%H:%m:%s'`" -

bass_ad_analysis.jobtype=command command=sh bass_ad_analysis.sh ${dbUrl} ${userName} ${password} dependencies=bass_serv_profile -

bass_ad_analysis.sh#!/bin/bash echo "run ad analysis start `date '+%Y-%m-%d,%H:%m:%s'`" dbUrl=$1 userName=$2 password=$3 lastDay=`date -d "yesterday" +%Y-%m-%d` #广告效果分析 hive -hiveconf lastDay=$lastDay -hiveconf dbUrl=$dbUrl -hiveconf userName=$userName -hiveconf password=$password -f ad_analysis.sql echo "run analysis end `date '+%Y-%m-%d,%H:%m:%s'`"

1.3. Azkaban安装并设置定时任务Schedule以及邮件发送接收

2. 业务数据采集

2.1. 后台通过logback把业务接口日志写入到本地文件

2.1.1. logback配置文件

2.1.2. 拦截器当中记录接口日志

2.1.3. 本地日志目录

2.1.4. 日志格式

{

"time":"2020-07-17 09:06:06.897",

"modelName":"MINI-PORTAL",

"host":"172.17.176.152",

"thread":"http-nio-8902-exec-62",

"level":"INFO ",

"file":"MedChatLogger.java:110",

"source":"adFile",

"adLogonName":"",

"os":"",

"operFlag":true,

"inputParam":{

"logonName":[

"15165428830"

],

"logonPwd":[

"******"

]

},

"channel":"ANDROID",

"language":"en",

"isNeedSync":false,

"remote":"114.247.227.197",

"message":"User login successfully",

"version":"1.3.0-preview",

"mac":"A1000037DFFE57",

"url":"/html/gateway/api.ajax",

"token":"",

"result":[

{

"token":"49f1428d2aea459fb795799b8f841254",

"isCanPublish":true,

"isCanAudit":false,

"isBelongEditorialBoard":false,

"isBelongTeam":true,

"isHaveEducation":false,

"isHaveWorkExperience":false,

"authFlag":"Y",

"isForcedAuth":false,

"servId":"100034",

"imPwd":"5ED47664D3C844ED075D121089B10DD8",

"pushId":"100034",

"pushAlias":"MOBILE",

"servName":"文飞扬",

"servIcon":"doctor/headportrait/person/1566811863179164958.jpg",

"gender":"",

"servInfoDegree":"1",

"isVoice":false,

"isShock":false,

"isCertApply":false,

"isHaveChannel":true,

"cityName":"Yanbian Korean Autonomous Prefecture",

"isSpecialServ":false,

"isShowZone":true,

"isHaveAuth":true,

"isHaveProfile":false,

"isCanNotice":false,

"dutyDesc":"",

"channelEduPublishType":"SPEECH",

"mainDesc":"北京医院",

"noticeInfo":{

"noticeChatId":null,

"noticeChatName":null

},

"isSwitchPhone":false,

"isShowMobile":true,

"isOpenEduInvite":false,

"isAnonymous":false,

"paymentSalt":"MTUxNjU1Mjg4NjQ=",

"isRealName":false,

"isHavePaymentPassword":false,

"maxBankCardCount":"5",

"menuArr":[

"ARTICLE",

"PEER",

"CHAT",

"ME"

],

"servIdentityType":"DOCTOR",

"isRemindDisturb":false,

"eduCount":"1",

"valueAddedService":"0"

}

],

"subErrcode":"",

"carrier":"",

"GATEWAY_MEDCHAT":"GATEWAY_MEDCHAT",

"errCode":"",

"model":"",

"adServId":"0",

"net":"",

"apiType":"LOGIN"

}

{

"time":"2020-07-17 10:26:59.535",

"modelName":"MINI-PORTAL",

"host":"172.17.176.152",

"thread":"http-nio-8902-exec-82",

"level":"INFO ",

"file":"MedChatLogger.java:110",

"source":"adFile",

"apiType":"QUERY_CEDU_PLATFORM_INFO_LIST@AD_INFO",

"adServId":"100242",

"result":[

{

"adId":"657"

}

]

}

2.2. 通过Flume采集数据到Kafka

2.2.1. Flume配置文件

# Each channel's type is defined.

#agent.channels.memoryChannel.type = memory

# Other config values specific to each type of channel(sink or source)

# can be defined as well

# In this case, it specifies the capacity of the memory channel

#agent.channels.memoryChannel.capacity = 100

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

#a1.sources.r1.type = exec

#a1.sources.r1.command = tail -F /opt/log/ad-mini-portal3.log

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /home/medchat/apache-flume-1.8.0-bin/logfile_stats/taildir_position.json

a1.sources.r1.filegroups = f1 f2 f3

a1.sources.r1.filegroups.f1 = /home/medchat/medchat-mini-portal/apache-tomcat/logs/doctor/ad-mini-portal.*log

a1.sources.r1.filegroups.f2 = /home/medchat/medchat-console/apache-tomcat-8.0.21/logs/console/ad-console.*log

a1.sources.r1.filegroups.f3 = /home/medchat/medchat-portal/apache-tomcat-8.0.21/logs/portal-yxck/ad-portal.*log

a1.sources.r1.fileHeader = true

# Describe the sink

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.kafka.bootstrap.servers= 172.17.176.160:9092,172.17.176.159:9092,172.17.176.158:9092

a1.sinks.k1.kafka.topic= adMiniPortal

a1.sinks.k1.serializer.class=kafka.serializer.StringEncoder

a1.sinks.k1.kafka.producer.acks=1

a1.sinks.k1.custom.encoding=UTF-8

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

2.3. Storm消费Kafka数据,写入Hbase

2.3.1. LogReaderSpout.java

package com.nuhtech.marketing.rta.storm.spouts;

import java.time.Duration;

import java.util.Map;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import com.nuhtech.marketing.rta.util.KafkaUtil;

import com.nuhtech.medchat.core.util.PropConfigUtil;

public class LogReaderSpout extends BaseRichSpout {

private static final String TOPIC = PropConfigUtil.getProperty("kafka.topic");

private transient KafkaConsumer<String, String> consumer;

private transient SpoutOutputCollector collector;

@Override

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

this.consumer = KafkaUtil.getConsumer(TOPIC);

}

@Override

public void nextTuple() {

ConsumerRecords<String, String> messageList = consumer.poll(Duration.ofSeconds(4));

messageList.forEach(message -> {

if (message.value() != null && !"".equals(message.value())) {

this.collector.emit(new Values(message.value()));

}

});

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("value"));

}

}

2.3.2. UserLogBolt.java

package com.nuhtech.marketing.rta.storm.bolts;

import java.io.IOException;

import java.sql.Timestamp;

import java.util.HashMap;

import java.util.Map;

import net.sf.json.JSONArray;

import net.sf.json.JSONObject;

import org.apache.storm.topology.BasicOutputCollector;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseBasicBolt;

import org.apache.storm.tuple.Tuple;

import com.nuhtech.marketing.core.logger.MarketingLogger;

import com.nuhtech.marketing.core.util.HbaseUtil;

import com.nuhtech.medchat.core.util.DatetimeUtil;

public class UserLogBolt extends BaseBasicBolt {

private static final long serialVersionUID = -5627408245880685574L;

private static String tableName = "user_log";

@Override

public void execute(Tuple input, BasicOutputCollector collector) {

String log = input.getStringByField("value");

try {

JSONObject jsonObject = JSONObject.fromObject(log);

recordAll(log, jsonObject);

recordAds(jsonObject);

} catch (Exception e) {

MarketingLogger.error(e);

}

}

//记录所有行为日志

public void recordAll(String log, JSONObject jsonObject) throws IOException {

Timestamp timeStamp = DatetimeUtil.string2Timestamp(jsonObject.getString("time"));

String adServId = jsonObject.getString("adServId");

String time = DatetimeUtil.date2StringDateTimeNoLine(timeStamp);

int id = (int) ((Math.random() * 9 + 1) * 10000);

String servId = String.format("%08d", Long.parseLong(adServId));

//rowKey:14位时间戳+8位servId+5位随机Id

String rowKey = time + "|" + servId + "|" + id;

Map<String, Object> map = new HashMap<String, Object>();

map.put("rowKey", rowKey);

map.put("columnFamily", "action");

map.put("columnName", "info");

map.put("columnValue", log);

HbaseUtil.insertOnly(tableName, map);

}

//广告统计相关

public void recordAds(JSONObject jsonObject) throws IOException {

String adServId = jsonObject.getString("adServId");

String apiType = jsonObject.getString("apiType");

if (apiType.indexOf("@AD_INFO") != -1) {

JSONArray jsonArray = jsonObject.getJSONArray("result");

String ids = jsonArray.getJSONObject(0).getString("adId");

String[] idsArr = ids.split(",");

for (String id : idsArr) {

String rowKey = adServId + "|" + id;

HbaseUtil.autoIncrementColumn("realtime_ad_stat", rowKey, "show_cnt", 1);

}

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

2.3.3 ToHbaseBolt.java

package com.nuhtech.marketing.rta.storm.bolts;

import java.io.IOException;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Connection;

import org.apache.hadoop.hbase.client.ConnectionFactory;

import org.apache.hadoop.hbase.client.Table;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.BasicOutputCollector;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseBasicBolt;

import org.apache.storm.tuple.Tuple;

import com.nuhtech.marketing.core.logger.MarketingLogger;

public class ToHbaseBolt extends BaseBasicBolt {

private static final long serialVersionUID = -5627408245880685574L;

private transient Table table;

@SuppressWarnings("rawtypes")

@Override

public void prepare(Map stormConf, TopologyContext context) {

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "192.168.1.23:2181,192.168.1.24:2181,192.168.1.25:2181");

try (Connection conn = ConnectionFactory.createConnection(conf)) {

table = conn.getTable(TableName.valueOf("realtime_ad_stat"));

} catch (IOException e) {

MarketingLogger.error(e);

}

}

@Override

public void execute(Tuple input, BasicOutputCollector collector) {

String action = input.getStringByField("action");

String rowkey = input.getStringByField("rowkey");

Long pv = input.getLongByField("cnt");

try {

if ("view".equals(action)) {

table.incrementColumnValue(Bytes.toBytes(rowkey), Bytes.toBytes("stat"), Bytes.toBytes("view_cnt"), pv);

}

if ("click".equals(action)) {

table.incrementColumnValue(Bytes.toBytes(rowkey), Bytes.toBytes("stat"), Bytes.toBytes("click_cnt"), pv);

}

} catch (IOException e) {

MarketingLogger.error(e);

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

// Do nothing

}

}

2.4. Hive和Hbase的整合

2.4.1. HIve和Hbase的表关联

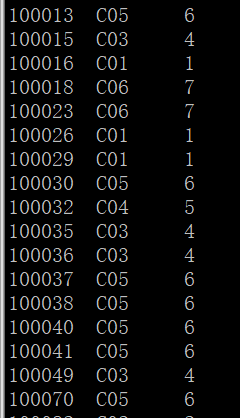

2.4.2. Hive数据样式

2.4.3. Hbase数据样式

2.4.4. Hive和Hbase关联后,操作一方同样会影响另一方数据

2.5. 通过Sqoop把业务数据从PostgreSql导入Hive数仓

#!/bin/bash

echo "sqoop coll start `date '+%Y-%m-%d,%H:%m:%s'`"

if [ $# -eq 3 ] ;then

echo "the args you input is right"

CONNECTURL=$1

USERNAME=$2

PASSWORD=$3

lastDay=`date -d "yesterday" +%Y-%m-%d`

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table f_serv --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table f_serv --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table f_serv_identity --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table f_serv_identity --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table f_serv_base --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table f_serv_base --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table s_hospital --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table s_hospital --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table f_channel_subscribe --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table f_channel_subscribe --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_editorial_board_member --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_editorial_board_member --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table e_presidium_member --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table e_presidium_member --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table e_rostrum_member --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table e_rostrum_member --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table e_edu_member --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table e_edu_member --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_article_read_record --columns "read_record_id, article_id, serv_id, read_start_time, read_end_time, duration, update_datetime, create_datetime, thesis_type, spread_plan_id" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_article_read_record --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_article_laud --columns "article_laud_id, article_id, serv_id,update_datetime, create_datetime, thesis_type, spread_plan_id" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_article_laud --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_article_comment --columns "article_comment_id,article_id,serv_id,comment_type,ref_article_comment_id,content,ref_serv_id,status,update_datetime,create_datetime,content_original,thesis_type,spread_plan_id" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by '\001' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_article_comment --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_forward --columns "forward_id,serv_id,forward_type,forward_channel,refer_id,status,update_datetime,create_datetime,content,source_serv_id,target_serv_ids,target_group_id" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by '\001' --hive-drop-import-delims --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_forward --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table mr_favorite --columns "favorite_id,serv_id,favorite_type,type,favorite_json,favorite_serv_id,refer_id,content,duration,favorite_url,status,update_datetime,create_datetime,file_size,refer_type" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by '\001' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table mr_favorite --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table sp_spread_plan --columns "spread_plan_id,service_provider_id,scheme_id,audit_status,publish_status,release_date,exp_date,update_datetime,create_datetime" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by ',' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table sp_spread_plan --hive-overwrite

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --table sp_plan_filter --columns "seq_nbr, spread_plan_id, serv_id, update_datetime, create_datetime" --where "create_datetime::date='${lastDay}'" --hive-partition-key "dt" --hive-partition-value "${lastDay}" --null-string '\\N' --null-non-string '\\N' --fields-terminated-by '\001' --delete-target-dir --num-mappers 1 --hive-import --hive-database default --hive-table sp_plan_filter --hive-overwrite

hadoop fs -test -e /user/hive/warehouse/mr_plan_article

if [ $? -ne 0 ]; then

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --query "select a.article_id,a.sale_article_id,b.spread_plan_id from mr_article a,sp_sale_article b where a.dispatch_type='SALE' and a.sale_article_id=b.sale_article_id and b.spread_plan_id is not null and \$CONDITIONS" --fields-terminated-by '\001' --m 1 --lines-terminated-by '\n' --hive-drop-import-delims --split-by article_id --target-dir /user/hive/warehouse/mr_plan_article

else

hdfs dfs -rm -r /user/hive/warehouse/mr_plan_article

sqoop import --connect $CONNECTURL --username $USERNAME --password $PASSWORD --query "select a.article_id,a.sale_article_id,b.spread_plan_id from mr_article a,sp_sale_article b where a.dispatch_type='SALE' and a.sale_article_id=b.sale_article_id and b.spread_plan_id is not null and \$CONDITIONS" --fields-terminated-by '\001' --m 1 --lines-terminated-by '\n' --hive-drop-import-delims --split-by article_id --target-dir /user/hive/warehouse/mr_plan_article

fi

else

echo "the args you input is error"

fi

echo "sqoop coll end `date '+%Y-%m-%d,%H:%m:%s'`"

-

f_serv用户表

-

f_serv_identity用户身份信息表

-

f_serv_base用户基本信息表

-

s_hospital医院表

-

f_channel_subscribe学科订阅表

-

mr_editorial_board_member学术组织成员表

-

e_presidium_member主席团成员表

-

e_rostrum_member主席台成员表

-

e_edu_member会场成员表

-

mr_article_read_record文章阅读表

-

mr_article_laud文章点赞表

-

mr_article_comment文章评论表

-

mr_forward用户转发表

-

mr_favorite用户收藏表

-

sp_spread_plan推广计划表

-

sp_plan_filter广告过滤表

-

注意点

3. 生成用户画像

3.1. hive分区设置

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

3.2. 用户登录日志

insert overwrite table s_login_log partition(dt) -- 分区

select

get_json_object(info, '$.result[0].servId'),

get_json_object(info, '$.modelName'), -- 后台模型名称

get_json_object(info, '$.channel') , -- terminal_type 终端类型

get_json_object(info, '$.model'), -- phone_model 手机机型

get_json_object(info, '$.time'), -- create_datetime 创建时间

substr(get_json_object(info, '$.time'),1,10) -- 分区字段

from user_log

where

get_json_object(info, '$.apiType')='LOGIN' and get_json_object(info, '$.operFlag')='true' and substr(get_json_object(info, '$.time'),1,10)='${hiveconf:lastDay}';

3.3. 用户终端类型和机型

insert into serv_terminal

select

serv_id,terminal_type,phone_model

from

(select

serv_id,terminal_type,phone_model,max(create_datetime)

from s_login_log

where dt='${hiveconf:lastDay}'

group by

serv_id,terminal_type,phone_model

) a;

3.4. 用户最高委员会职务

insert overwrite table mr_newspaper_duty_highest

select

serv_id,newspaper_title,newspaper_title_order

from

(select row_number() over (partition by serv_id order by newspaper_title_order) top,*

from

(select t.serv_id,n.newspaper_title,n.newspaper_title_name,newspaper_title_order

from

mr_editorial_board_member t,mr_newspaper_title n

where

t.newspaper_title = n.newspaper_title

)as b

) as c

where

top<=1

order by serv_id,newspaper_title_order;

3.5. 用户信息

insert overwrite table f_serv_info

select

a.serv_id,a.identity,g.terminal_type,

case when length(b.province_code)!=6 then null else b.province_code end province_code,b.city_code,

case when b.gender in('F','M') then b.gender else null end gender,

case when a.identity='DOCTOR' and c.hospital_duty is not null then c.hospital_duty else 'DOC_QT' end hospital_duty,

case when a.identity='DOCTOR' and c.hospital_title is not null then c.hospital_title else 'OTH' end hospital_title,

case when a.identity='DOCTOR' then c.department_id else null end department_id,

case when a.identity='DOCTOR' and f.department_type_id is not null then f.department_type_id else 27 end department_type_id,

case when a.identity='DOCTOR' then d.hospital_id else null end hospital_id,

case when a.identity='DOCTOR' and d.hospital_level!='' then d.hospital_level else null end hospital_level,

e.newspaper_title

from f_serv a

left join f_serv_base b on(a.serv_id=b.serv_id)

left join f_serv_identity c on(a.serv_id=c.serv_id)

left join s_hospital d on(c.hospital_id=d.hospital_id)

left join mr_newspaper_duty_highest e on(a.serv_id=e.serv_id)

left join s_department f on(c.department_id=f.department_id)

left join serv_terminal g on(a.serv_id=g.serv_id)

where a.auth_flag in('Y','R');

3.6. 画像信息

-

个人信息标签insert overwrite table f_serv_tag_value partition(module='basic_info') select serv_id, mp['key'], mp['value'] from( select a.serv_id, array(map('key', 'identity', 'value', a.identity), map('key', 'device_type', 'value', a.terminal_type), map('key', 'gender', 'value',a.gender), map('key', 'province_code', 'value', a.province_code), map('key', 'city_code', 'value', a.city_code), map('key', 'hospital_id', 'value', a.hospital_id), map('key', 'department_id', 'value', a.department_id), map('key', 'department_type_id', 'value', a.department_type_id), map('key', 'hospital_title', 'value', a.hospital_title), map('key', 'hospital_duty', 'value', a.hospital_duty), map('key', 'hospital_level', 'value', a.hospital_level) ) arr from f_serv_info a ) s lateral view explode(arr) arrtable as mp;

-

频道标签insert overwrite table f_serv_tag_value partition(module='channel_subscribe') select serv_id, mp['key'], mp['value'] from (select a.serv_id,array(map('key', 'channel_subscribe', 'value', a.channel_list)) arr from (select serv_id,concat_ws(',',collect_list(cast(channel_id as string))) as channel_list from f_channel_subscribe group by serv_id ) a )s lateral view explode(arr) arrtable as mp;

-

委员会标签insert overwrite table f_serv_tag_value partition(module='newspaper_title') select serv_id, mp['key'], mp['value'] from( select a.serv_id,array(map('key', 'newspaper_title', 'value', a.newspaper_title)) arr from (select serv_id,concat_ws(',',collect_set(newspaper_title)) newspaper_title from mr_editorial_board_member group by serv_id) a )s lateral view explode(arr) arrtable as mp;

-

演讲台标签insert overwrite table f_serv_tag_value partition(module='edu') select serv_id, mp['key'], mp['value'] from( select b.serv_id,array(map('key', 'channel_edu', 'value', b.channel_edu_id)) arr from (select serv_id,concat_ws(',',collect_set(cast(channel_edu_id as string))) channel_edu_id from (select channel_edu_id,serv_id from e_presidium_member union select channel_edu_id,serv_id from e_rostrum_member union select channel_edu_id,serv_id from e_edu_member) a group by serv_id) b )s lateral view explode(arr) arrtable as mp;

3.7. 生成用户画像

insert overwrite table f_serv_profile

select

serv_id,

concat('{', concat_ws(',', collect_set(concat('"', tag, '"', ':', '"', value, '"'))), '}') as json_string

from f_serv_tag_value where serv_id is not null

group by serv_id ;

insert into table serv_sysc select serv_id,profile from f_serv_profile ;

4. 推广效果分析

4.1. 采集微论文曝光数据

insert into table mr_article_show_record partition(dt='${hiveconf:lastDay}')

select

get_json_object(info, '$.apiType') apiType,

num adId,

get_json_object(info, '$.adServId') servId,

get_json_object(info, '$.time') time

from user_log LATERAL VIEW explode(split(get_json_object(info, '$.result[0].adId'),',')) zqm AS num

where

get_json_object(info, '$.apiType') like '%@ARTICLE_INFO' and substr(get_json_object(info, '$.time'),1,10)='${hiveconf:lastDay}';

4.2. 采集广告曝光数据

insert overwrite table mr_ad_show_record partition(dt='${hiveconf:lastDay}')

select

get_json_object(info, '$.apiType') apiType,

num adId,

get_json_object(info, '$.adServId') servId,

get_json_object(info, '$.time') time

from user_log LATERAL VIEW explode(split(get_json_object(info, '$.result[0].adId'),',')) zqm AS num

where

get_json_object(info, '$.apiType') like '%@AD_INFO' and substr(get_json_object(info, '$.time'),1,10)='${hiveconf:lastDay}';

合并微文论曝光数据insert into table mr_ad_show_record partition(dt='${hiveconf:lastDay}') select a.api_type, b.spread_plan_id, a.serv_id, a.show_time from mr_article_show_record a,mr_plan_article b where a.article_id=b.article_id and a.dt='${hiveconf:lastDay}'

4.3. 曝光量多维度统计

insert overwrite table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,

b.identity,

b.terminal_type,

b.province_code,

b.gender,

b.hospital_duty,

b.hospital_title,

b.department_type_id,

b.hospital_level,

b.newspaper_title,

'show' analysis_type,

GROUPING__ID,

count(a.serv_id) active_num

from mr_ad_show_record a

join f_serv_info b on(a.serv_id=b.serv_id)

where a.dt='${hiveconf:lastDay}'

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

-- 点击量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'click' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from mr_article_read_record where thesis_type='ADVERT' and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_article_read_record t,mr_plan_article p where t.article_id=p.article_id and t.thesis_type='ARTICLE' and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--阅读量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'read' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from mr_article_read_record where thesis_type='ADVERT' and duration>=2 and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_article_read_record t,mr_plan_article p where t.article_id=p.article_id and t.thesis_type='ARTICLE' and t.duration>=2 and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--点赞量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'up' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from mr_article_laud where thesis_type='ADVERT' and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_article_laud t,mr_plan_article p where t.article_id=p.article_id and t.thesis_type='ARTICLE' and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--评论量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'comment' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from mr_article_comment where thesis_type='ADVERT' and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_article_comment t,mr_plan_article p where t.article_id=p.article_id and t.thesis_type='ARTICLE' and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--收藏量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'coll' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select refer_id spread_plan_id,serv_id from mr_favorite where refer_type='ADVERT' and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_favorite t,mr_plan_article p where t.refer_id=p.article_id and t.refer_type='ARTICLE' and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--转发量多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'forward' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select refer_id spread_plan_id,serv_id from mr_forward where forward_type='20' and dt='${hiveconf:lastDay}'

union all

select p.spread_plan_id,t.serv_id from mr_forward t,mr_plan_article p where t.refer_id=p.article_id and t.forward_type='1' and t.dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--问卷多维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'answer' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from sp_serv_reply where dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

--用户不敢兴趣维度统计

insert into table rp_spread_analysis_detail partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title,'filter' analysis_type,GROUPING__ID,count(a.serv_id) active_num

from

(select spread_plan_id,serv_id from sp_plan_filter where dt='${hiveconf:lastDay}') a

join f_serv_info b on(a.serv_id=b.serv_id)

group by a.spread_plan_id,b.identity,b.terminal_type,b.province_code,b.gender,b.hospital_duty,b.hospital_title,b.department_type_id,b.hospital_level,b.newspaper_title

grouping sets ((a.spread_plan_id,b.identity),(a.spread_plan_id,b.terminal_type),(a.spread_plan_id,b.province_code),(a.spread_plan_id,b.gender),(a.spread_plan_id,b.hospital_duty),(a.spread_plan_id,b.hospital_title),(a.spread_plan_id,b.department_type_id),(a.spread_plan_id,b.hospital_level),(a.spread_plan_id,b.newspaper_title));

4.4. 不同维度进行曝光量的统计

--统计身份维度

insert overwrite table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'identity' attr_type,a.identity attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,identity,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='11111111'

group by spread_plan_id,identity) a;

--统计医院等级维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'hospitalLevel' attr_type,a.hospital_level attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select spread_plan_id,hospital_level,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111111101'

group by spread_plan_id,hospital_level) a;

--统计职称维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'hospitalTitle' attr_type,a.hospital_title attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,hospital_title,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111110111'

group by spread_plan_id,hospital_title) a;

--统计职务维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'hospitalDuty' attr_type,a.hospital_duty attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,hospital_duty,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111101111'

group by spread_plan_id,hospital_duty) a;

--统计性别维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'sex' attr_type,a.gender attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,gender,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111011111'

group by spread_plan_id,gender) a;

--统计机型维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'os' attr_type,a.terminal_type attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,terminal_type,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='101111111'

group by spread_plan_id,terminal_type) a;

--统计省份维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'provinceCode' attr_type,a.province_code attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,province_code,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='110111111'

group by spread_plan_id,province_code) a;

--统计科室分类维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'department' attr_type,a.department_id attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,department_id,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111111011'

group by spread_plan_id,department_id) a;

--统计委员会职务维度

insert into table rp_spread_analysis partition(dt='${hiveconf:lastDay}')

select

a.spread_plan_id,'boardTitle' attr_type,a.newspaper_title attr_value,a.show_num,a.click_num,0 click_rate,a.read_num,a.up_num,a.comment_num,a.forward_num ,a.coll_num,'${hiveconf:lastDay}' total_date,a.answer_num,a.filter_num

from (

select

spread_plan_id,newspaper_title,

sum(if(analysis_type='show',active_num,0)) as show_num,

sum(if(analysis_type='click',active_num,0)) as click_num,

sum(if(analysis_type='read',active_num,0)) as read_num ,

sum(if(analysis_type='up',active_num,0)) as up_num ,

sum(if(analysis_type='comment',active_num,0)) as comment_num,

sum(if(analysis_type='coll',active_num,0)) as coll_num,

sum(if(analysis_type='forward',active_num,0)) as forward_num,

sum(if(analysis_type='answer',active_num,0)) as answer_num,

sum(if(analysis_type='filter',active_num,0)) as filter_num

from rp_spread_analysis_detail

where dt='${hiveconf:lastDay}' and bin(grouping_id)='111111110'

group by spread_plan_id,newspaper_title) a;

4.5. 统计日汇总维度

insert overwrite table rp_spread partition(dt='${hiveconf:lastDay}')

select

spread_plan_id,sum(show_num),sum(click_num),0,sum(read_num),sum(up_num),sum(comment_num),sum(forward_num),sum(coll_num),total_date,sum(answer_num),sum(filter_num)

from rp_spread_analysis

where total_date='${hiveconf:lastDay}'and attr_type='identity'

group by spread_plan_id,total_date;

4.6. 同步统计结果

SELECT dboutput('${hiveconf:dbUrl}', '${hiveconf:userName}', '${hiveconf:password}', 'INSERT INTO rp_spread (spread_plan_id, show_num, click_num, read_num, up_num, comment_num, forward_num,coll_num,answer_num,filter_num, total_date,create_datetime) VALUES (?,?,?,?,?,?,?,?,?,?,?,?);', spread_plan_id, show_num, click_num, read_num, up_num, comment_num, forward_num,coll_num,answer_num,filter_num, total_date, current_timestamp)

FROM rp_spread where total_date='${hiveconf:lastDay}';

SELECT dboutput('${hiveconf:dbUrl}', '${hiveconf:userName}', '${hiveconf:password}', 'INSERT INTO rp_spreed_analysis (spread_plan_id, attr_type, attr_value, show_num, click_num, read_num, up_num, comment_num, forward_num, coll_num,answer_num,filter_num, total_date, create_datetime) VALUES (?,?,?,?,?,?,?,?,?,?,?,?,?,?);',spread_plan_id, attr_type, attr_value, show_num, click_num, read_num, up_num, comment_num, forward_num, coll_num,answer_num,filter_num, total_date, current_timestamp)

FROM rp_spread_analysis where total_date='${hiveconf:lastDay}';

SELECT dboutput('${hiveconf:dbUrl}', '${hiveconf:userName}', '${hiveconf:password}', 'INSERT INTO rp_analysis_log (log_type,log_info,create_datetime) VALUES (?,?,?);', 'item_analysis','success', current_timestamp);