Preface

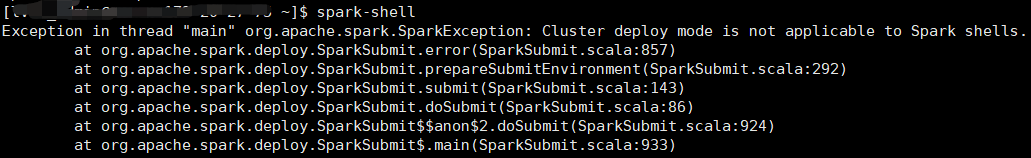

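On an otherwise healthy CDH 6.1 cluster, running spark-shell from the command line to enter the Scala interactive shell fails with an error.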

The error message is as follows:

Exception in thread "main" org.apache.spark.SparkException: Cluster deploy mode is not applicable to Spark shells.

at org.apache.spark.deploy.SparkSubmit.error(SparkSubmit.scala:857)

at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:292)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:143)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

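Specifically: spark-shell is an interactive shell whose driver has to run on the machine where it is launched, so it only works in client deploy mode. The exception means the deploy mode resolved to cluster, for example through a configured default such as spark.submit.deployMode in spark-defaults.conf.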
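One way to check whether a configured default is behind the cluster deploy mode is to look for spark.submit.deployMode in the Spark defaults file. The following is a minimal sketch, not a definitive diagnostic. The helper name find_deploy_mode is hypothetical, and the default path /etc/spark/conf/spark-defaults.conf is an assumption about where the client configuration lives; pass the actual path as the first argument if it differs.

```shell
#!/bin/sh
# Hypothetical helper: print the value of spark.submit.deployMode found in a
# Spark defaults file, or print nothing if the property is not set there.
# The default path is an assumption; override it with the first argument.
find_deploy_mode() {
    conf_file="${1:-/etc/spark/conf/spark-defaults.conf}"
    if [ ! -r "$conf_file" ]; then
        echo "cannot read $conf_file" >&2
        return 1
    fi
    # Keys and values in spark-defaults.conf are separated by whitespace or '='.
    # Commented-out lines do not match because their first field starts with '#'.
    # If the property appears more than once, the last occurrence is reported.
    awk -F'[ \t=]+' '
        $1 == "spark.submit.deployMode" { mode = $2 }
        END { if (mode != "") print mode }
    ' "$conf_file"
}
```

If the function prints cluster, that default would explain the exception, and overriding it on the command line is the least invasive fix. If it prints nothing, the deploy mode is coming from somewhere else, such as an explicit command-line option.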

Solution

Start the shell with the master and the deploy mode specified explicitly:

spark-shell --master yarn --deploy-mode client

The command runs successfully and enters the interactive shell.