Connecting to a Spark cluster from Java fails. The full error output is:

20/07/30 11:04:11 WARN TaskSetManager: Lost task 1.0 in stage 0.0 (TID 1, 192.168.0.102, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD

at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2133)

at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1305)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2251)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2245)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:422)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:115)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:83)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:444)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:447)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 0.0 in stage 0.0 (TID 0) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 1]

20/07/30 11:04:11 INFO TaskSetManager: Starting task 0.1 in stage 0.0 (TID 2, 192.168.0.102, executor 0, partition 0, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Starting task 1.1 in stage 0.0 (TID 3, 192.168.0.102, executor 0, partition 1, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 0.1 in stage 0.0 (TID 2) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 2]

20/07/30 11:04:11 INFO TaskSetManager: Starting task 0.2 in stage 0.0 (TID 4, 192.168.0.102, executor 0, partition 0, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 1.1 in stage 0.0 (TID 3) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 3]

20/07/30 11:04:11 INFO TaskSetManager: Starting task 1.2 in stage 0.0 (TID 5, 192.168.0.102, executor 0, partition 1, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 0.2 in stage 0.0 (TID 4) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 4]

20/07/30 11:04:11 INFO TaskSetManager: Starting task 0.3 in stage 0.0 (TID 6, 192.168.0.102, executor 0, partition 0, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 1.2 in stage 0.0 (TID 5) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 5]

20/07/30 11:04:11 INFO TaskSetManager: Starting task 1.3 in stage 0.0 (TID 7, 192.168.0.102, executor 0, partition 1, PROCESS_LOCAL, 7452 bytes)

20/07/30 11:04:11 INFO TaskSetManager: Lost task 0.3 in stage 0.0 (TID 6) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 6]

20/07/30 11:04:11 ERROR TaskSetManager: Task 0 in stage 0.0 failed 4 times; aborting job

20/07/30 11:04:11 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

20/07/30 11:04:11 INFO TaskSetManager: Lost task 1.3 in stage 0.0 (TID 7) on 192.168.0.102, executor 0: java.lang.ClassCastException (cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD) [duplicate 7]

20/07/30 11:04:11 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

20/07/30 11:04:11 INFO TaskSchedulerImpl: Cancelling stage 0

20/07/30 11:04:11 INFO TaskSchedulerImpl: Killing all running tasks in stage 0: Stage cancelled

20/07/30 11:04:11 INFO DAGScheduler: ResultStage 0 (count at TestSparkJava.java:21) failed in 2.319 s due to Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 6, 192.168.0.102, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD

at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2133)

at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1305)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2251)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2245)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:422)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:115)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:83)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:444)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:447)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

20/07/30 11:04:11 INFO DAGScheduler: Job 0 failed: count at TestSparkJava.java:21, took 2.376507 s

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 6, 192.168.0.102, executor 0): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD

at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2133)

at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1305)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2251)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2245)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:422)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:115)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:83)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:444)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:447)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2023)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:1972)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:1971)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1971)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:950)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:950)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:950)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2203)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2152)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2141)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:752)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2093)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2114)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2133)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2158)

at org.apache.spark.rdd.RDD.count(RDD.scala:1227)

at org.apache.spark.api.java.JavaRDDLike.count(JavaRDDLike.scala:455)

at org.apache.spark.api.java.JavaRDDLike.count$(JavaRDDLike.scala:455)

at org.apache.spark.api.java.AbstractJavaRDDLike.count(JavaRDDLike.scala:45)

at TestSparkJava.main(TestSparkJava.java:21)

Caused by: java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.rdd.MapPartitionsRDD.f of type scala.Function3 in instance of org.apache.spark.rdd.MapPartitionsRDD

at java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2133)

at java.io.ObjectStreamClass.setObjFieldValues(ObjectStreamClass.java:1305)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2251)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2245)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2169)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:422)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:115)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:83)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:444)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:447)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

20/07/30 11:04:11 INFO SparkContext: Invoking stop() from shutdown hook

20/07/30 11:04:11 INFO SparkUI: Stopped Spark web UI at http://Desktop:4040

20/07/30 11:04:11 INFO StandaloneSchedulerBackend: Shutting down all executors

20/07/30 11:04:11 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

20/07/30 11:04:11 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

20/07/30 11:04:11 INFO MemoryStore: MemoryStore cleared

20/07/30 11:04:11 INFO BlockManager: BlockManager stopped

20/07/30 11:04:11 INFO BlockManagerMaster: BlockManagerMaster stopped

20/07/30 11:04:11 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

20/07/30 11:04:11 INFO SparkContext: Successfully stopped SparkContext

20/07/30 11:04:11 INFO ShutdownHookManager: Shutdown hook called

20/07/30 11:04:11 INFO ShutdownHookManager: Deleting directory /tmp/spark-639e7f1e-b13f-4996-938c-ae65fb283f2e

What is unusual about this error is that it does not occur with a local master. It only appears when connecting to a real cluster.

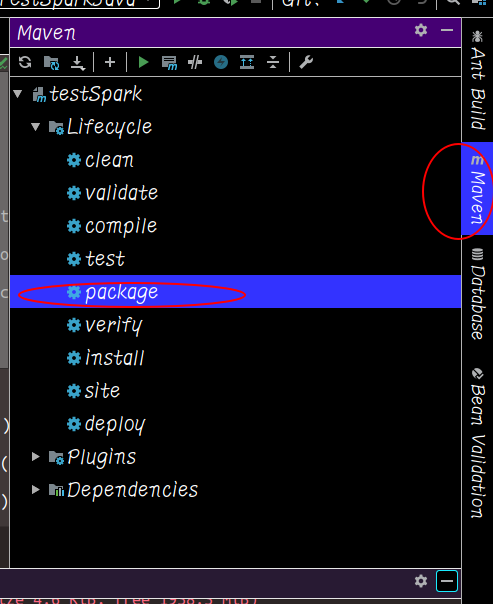

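The fix is as follows:

Add a `setJars` call to the `SparkConf`. The path passed to `setJars` is the path of the jar produced by `mvn package`. The executors run in separate JVMs and presumably need this jar to load the application's classes when they deserialize tasks, which would explain why local mode is unaffected.

The complete solution: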

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;

    public class TestSparkJava {
        public static void main(String[] args) {
            String logFile = "/home/appleyuchi/IdeaProjects/SpringBoot2EnterPrise/第4章-SpringBoot的数据访问/testSpark/ab.txt";
            SparkConf conf = new SparkConf()
                    .setMaster("spark://Desktop:7077")
                    // Ship the jar built by `mvn package` to the executors.
                    .setJars(new String[]{"/home/appleyuchi/IdeaProjects/SpringBoot2EnterPrise/第4章-SpringBoot的数据访问/testSpark/target/testSpark-1.0-SNAPSHOT.jar"})
                    .setAppName("TestSpark");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logData = sc.textFile(logFile).cache();

            // Count the lines that contain "0".
            long numAs = logData.filter(new Function<String, Boolean>() {
                @Override
                public Boolean call(String s) {
                    return s.contains("0");
                }
            }).count();

            // Count the lines that contain "1".
            long numBs = logData.filter(new Function<String, Boolean>() {
                @Override
                public Boolean call(String s) {
                    return s.contains("1");
                }
            }).count();

            System.out.println("Lines with 0: " + numAs + ", lines with 1: " + numBs);
            sc.stop();
        }
    }
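The fix depends on the `setJars` path pointing at a jar that really exists, so it may be worth checking the path before building the `SparkConf`. Below is a minimal sketch using only the JDK. The class `JarPathCheck` and the method `requireJar` are hypothetical names introduced for illustration; they are not part of Spark or of the original program.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class JarPathCheck {

    // Hypothetical helper: returns the absolute jar path, or fails fast
    // with a readable message if the file is missing or is not a .jar.
    public static String requireJar(String jarPath) {
        Path p = Paths.get(jarPath).toAbsolutePath().normalize();
        Path name = p.getFileName();
        if (name == null || !name.toString().endsWith(".jar")) {
            throw new IllegalArgumentException("Not a .jar file: " + p);
        }
        if (!Files.isRegularFile(p)) {
            throw new IllegalStateException(
                    "Jar not found (was mvn package run?): " + p);
        }
        return p.toString();
    }

    public static void main(String[] args) throws IOException {
        // Demo: a temporary file stands in for the packaged jar.
        Path tmp = Files.createTempFile("testSpark-", ".jar");
        try {
            System.out.println("Jar found: " + requireJar(tmp.toString()));
        } finally {
            Files.deleteIfExists(tmp);
        }
    }
}
```

In the program above, the checked path would then be handed to Spark, for example `.setJars(new String[]{JarPathCheck.requireJar(jarPath)})`, where `jarPath` is the jar path used in the solution. The point is to fail on the driver with a clear message instead of discovering the problem through task failures on the executors.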