Some time ago I compiled YOLO as a dynamic link library (DLL) under VS2015 and called it for object detection:

https://blog.csdn.net/stjuliet/article/details/87731998

https://blog.csdn.net/stjuliet/article/details/87884976

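My project then required moving the calling code into Qt Creator, and after hitting a series of pitfalls I finally got that working too. Tutorials on calling the YOLO DLL from Qt are very scarce, and many are so brief that readers are left confused, so I have tried to be as detailed as possible here.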

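Step 1: Runtime environment and preparation

I. Basic environment

1. Windows 10, 64-bit

2. Qt 5.7.1 / Qt Creator 4.2.0 (Community)

Download: http://download.qt.io/archive/qt/

(Installer: qt-opensource-windows-x86-msvc2015_64-5.7.1.exe, i.e. the MSVC 2015 64-bit build. It matches the VS2015 x64 toolchain used for the YOLO DLL, which matters because a DLL that exports C++ classes must be used from a compatible compiler.)

For how to configure the compiler and debugger in Qt Creator, see: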

https://blog.csdn.net/maobush/article/details/66969284

3. OpenCV 3.4.0

https://opencv.org/releases.html

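In principle any version from OpenCV 3.0 onward works, but I recommend 3.4.0 or earlier, because 3.4.1 has a bug.

After extracting, add the binary directory (D:\OpenCV340\opencv\build\x64\vc14\bin, where vc14 is the VS2015 build) to the PATH environment variable; otherwise the program will fail at test time because Windows cannot find the OpenCV DLLs.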

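II. GPU environment

1. CUDA 9.0

CUDA 8.0: https://developer.nvidia.com/cuda-80-ga2-download-archive

CUDA 9.0: https://developer.nvidia.com/cuda-90-download-archive

CUDA is NVIDIA's general-purpose parallel computing platform, which lets the GPU be used for compute-intensive problems.

2. cuDNN 7.0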

https://developer.nvidia.com/cudnn

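cuDNN, the NVIDIA CUDA® Deep Neural Network library, is NVIDIA's GPU-accelerated library of primitives for deep neural networks. You need to register an account on the NVIDIA website to download it.

The cuDNN version must match the CUDA version: when downloading, simply pick the cuDNN build for the CUDA version you installed.

For setting up the GPU environment, see: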

https://blog.csdn.net/stjuliet/article/details/84640094

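Step 2: Configuring OpenCV in Qt and calling the YOLO DLL

I. Files needed

1. Dynamic link libraries (all in darknet-master\build\darknet\x64; the .lib is the import library used at link time, the .dll files are loaded at runtime)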

(1)yolo_cpp_dll.lib

(2)yolo_cpp_dll.dll

(3)pthreadGC2.dll

(4)pthreadVC2.dll

2. OpenCV DLL (which one depends on whether you build in Debug or Release mode)

(1)opencv_world340d.dll (Debug)

(2)opencv_world340.dll (Release)
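The two builds should not be mixed: the d-suffixed library is built against the debug C runtime (/MDd) and the plain one against the release runtime (/MD), so each build configuration needs its own file. The sketch below only spells out that naming rule and how a program can tell which configuration it was compiled in; `opencv_world_dll` and `built_as_debug` are hypothetical helper names, not part of OpenCV.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds the file name of the prebuilt OpenCV "world"
// library for a version and build configuration. The prebuilt 3.4.0 package
// names it opencv_world<version>[d].dll, where the trailing "d" marks Debug.
std::string opencv_world_dll(const std::string& version_digits, bool debug_build)
{
    assert(!version_digits.empty());  // e.g. "340" for OpenCV 3.4.0
    return "opencv_world" + version_digits + (debug_build ? "d" : "") + ".dll";
}

// Reports how the current translation unit is compiled. MSVC defines _DEBUG
// when a debug C runtime is selected (/MDd or /MTd), which is what qmake uses
// for the Debug configuration with an MSVC kit.
bool built_as_debug()
{
#ifdef _DEBUG
    return true;
#else
    return false;
#endif
}
```

For example, `opencv_world_dll("340", built_as_debug())` yields the name of the DLL that has to sit next to the executable or on PATH for the current build.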

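3. YOLO model files (the first is in darknet-master\build\darknet\x64\data, the second in darknet-master\build\darknet\x64, and the third must be downloaded separately; the download link is in my earlier posts linked above)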

(1)coco.names

(2)yolov3.cfg

(3)yolov3.weights
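Because the paths to these three files are hard-coded, a typo or a missing yolov3.weights only shows up once the detector is being created. A minimal pre-flight check, using nothing beyond the C++ standard library, is to try opening each file first; `unreadable_files` is a hypothetical helper, not part of the YOLO DLL's API.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical pre-flight check: returns every path in `paths` that cannot be
// opened for reading, in the order given. An empty result only means the files
// exist and are readable; it says nothing about whether their contents are valid.
std::vector<std::string> unreadable_files(const std::vector<std::string>& paths)
{
    std::vector<std::string> missing;
    for (const std::string& path : paths) {
        assert(!path.empty());  // an empty path is a configuration error
        std::ifstream file(path, std::ios::binary);  // binary: .weights is not text
        if (!file.is_open()) missing.push_back(path);
    }
    return missing;
}
```

One would call it with the names, cfg and weights paths before constructing `Detector`, and report any paths it returns instead of continuing.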

4. Header file

(1)yolo_v2_class.hpp

头文件包含了动态链接库中具体的类的定义,调用时需要引用,这个文件在darknet-master\build\darknet目录下的yolo_console_dll.sln中,将其复制到记事本保存成.hpp文件即可。

二、QT调用外部库

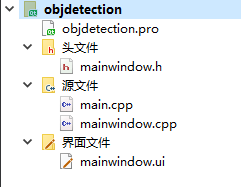

1、打开QT Creator,新建QT Widgets Application,会自动生成以下文件,在.ui界面中放置一个label和一个button按钮,并且建立信号和槽:

2、将(一)中所有文件全部拷入所建QT工程目录下,在.pro文件中添加以下语句调用外部库,main.cpp文件不需要更改:

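INCLUDEPATH += D:/OpenCV340/opencv/build/include \
               E:/QtCodes/objdetection

# Debug builds link opencv_world340d.lib, Release builds opencv_world340.lib
CONFIG(debug, debug|release): LIBS += D:/OpenCV340/opencv/build/x64/vc14/lib/opencv_world340d.lib
else: LIBS += D:/OpenCV340/opencv/build/x64/vc14/lib/opencv_world340.lib

LIBS += E:/QtCodes/objdetection/yolo_cpp_dll.lib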

3. (1) Add the following code to mainwindow.h:

#ifndef MAINWINDOW_H
#define MAINWINDOW_H

#include <QMainWindow>
#include <QWidget>
#include <QImage>
#include <QFileDialog>
#include <QTimer>

#include <opencv2/opencv.hpp>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <yolo_v2_class.hpp>

namespace Ui {
class MainWindow;
}

class MainWindow : public QMainWindow
{
    Q_OBJECT

public:
    explicit MainWindow(QWidget *parent = 0);
    ~MainWindow();

private slots:
    void on_pushbutton();
    void on_pushbutton_video();

private:
    Ui::MainWindow *ui;
    cv::Mat Image;
    cv::VideoCapture capture;
};

#endif // MAINWINDOW_H
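The guard mechanism behind the cv::Mat problem described in step 4 can be reproduced without OpenCV. In yolo_v2_class.hpp, detect(cv::Mat) sits inside `#ifdef OPENCV`, so whether it exists is decided when the preprocessor reaches that text; a `#define` that appears later in the translation unit changes nothing. In this project, mainwindow.h includes yolo_v2_class.hpp without defining OPENCV first, so the macro has to be defined before that first inclusion. If the header is protected by an include guard or `#pragma once`, including it again after the `#define` does not help either. In the sketch, `DEMO_OPENCV` is a hypothetical stand-in for `OPENCV`, and the two structs stand in for the same header text compiled at two different points.

```cpp
// 1) "Header" seen BEFORE the macro is defined, like mainwindow.h including
//    yolo_v2_class.hpp ahead of any #define OPENCV.
struct HeaderSeenBeforeDefine {
#ifdef DEMO_OPENCV
    static constexpr bool has_mat_overload = true;
#else
    static constexpr bool has_mat_overload = false;
#endif
};

#define DEMO_OPENCV  // defining the macro now cannot change the struct above

// 2) Identical "header" text, seen AFTER the macro is defined.
struct HeaderSeenAfterDefine {
#ifdef DEMO_OPENCV
    static constexpr bool has_mat_overload = true;
#else
    static constexpr bool has_mat_overload = false;
#endif
};

static_assert(!HeaderSeenBeforeDefine::has_mat_overload, "guarded code was skipped");
static_assert(HeaderSeenAfterDefine::has_mat_overload, "guarded code was compiled in");
```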

(2) Add the following code to mainwindow.cpp:

#include "mainwindow.h"
#include "ui_mainwindow.h"

#include <opencv2/opencv.hpp>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <yolo_v2_class.hpp>

#include <algorithm>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

#ifdef _WIN32
#define OPENCV
#define GPU
#endif

using namespace cv;

// Link the YOLO import library (MSVC only; LIBS in the .pro file does the same)
#pragma comment(lib, "E:/QtCodes/objdetection/yolo_cpp_dll.lib")

MainWindow::MainWindow(QWidget *parent) :
    QMainWindow(parent),
    ui(new Ui::MainWindow)
{
    ui->setupUi(this);
}

MainWindow::~MainWindow()
{
    delete ui;
}

// Maps a class id to a stable box colour
static cv::Scalar obj_id_to_color(int obj_id) {
    int const colors[6][3] = { { 1,0,1 },{ 0,0,1 },{ 0,1,1 },{ 0,1,0 },{ 1,1,0 },{ 1,0,0 } };
    int const offset = obj_id * 123457 % 6;
    int const color_scale = 150 + (obj_id * 123457) % 100;
    cv::Scalar color(colors[offset][0], colors[offset][1], colors[offset][2]);
    color *= color_scale;
    return color;
}

// Draws every detection box plus a filled label with the class name (and track id)
void draw_boxes(cv::Mat mat_img, const std::vector<bbox_t> &result_vec, const std::vector<std::string> &obj_names,
                int current_det_fps = -1, int current_cap_fps = -1)
{
    for (auto &i : result_vec) {
        cv::Scalar color = obj_id_to_color(i.obj_id);
        cv::rectangle(mat_img, cv::Rect(i.x, i.y, i.w, i.h), color, 2);
        if (obj_names.size() > i.obj_id) {
            std::string obj_name = obj_names[i.obj_id];
            if (i.track_id > 0) obj_name += " - " + std::to_string(i.track_id);
            cv::Size const text_size = getTextSize(obj_name, cv::FONT_HERSHEY_COMPLEX_SMALL, 1.2, 2, 0);
            int const max_width = (text_size.width > (int)i.w + 2) ? text_size.width : ((int)i.w + 2);
            cv::rectangle(mat_img, cv::Point2f(std::max((int)i.x - 1, 0), std::max((int)i.y - 30, 0)),
                          cv::Point2f(std::min((int)i.x + max_width, mat_img.cols - 1), std::min((int)i.y, mat_img.rows - 1)),
                          color, cv::FILLED, cv::LINE_8, 0);
            // (int) cast: i.y is unsigned, so i.y - 10 would wrap around near the top edge
            putText(mat_img, obj_name, cv::Point2f(i.x, (int)i.y - 10), cv::FONT_HERSHEY_COMPLEX_SMALL, 1.2, cv::Scalar(0, 0, 0), 2);
        }
    }
    if (current_det_fps >= 0 && current_cap_fps >= 0) {
        std::string fps_str = "FPS detection: " + std::to_string(current_det_fps) + " FPS capture: " + std::to_string(current_cap_fps);
        putText(mat_img, fps_str, cv::Point2f(10, 20), cv::FONT_HERSHEY_COMPLEX_SMALL, 1.2, cv::Scalar(50, 255, 0), 2);
    }
}

// Reads one class name per line; line index == obj_id
std::vector<std::string> objects_names_from_file(std::string const filename) {
    std::ifstream file(filename);
    std::vector<std::string> file_lines;
    if (!file.is_open()) return file_lines;
    for (std::string line; getline(file, line);) file_lines.push_back(line);
    std::cout << "object names loaded \n";
    return file_lines;
}

void MainWindow::on_pushbutton()
{
    Image = imread("E:/QtCodes/objdetection/test.jpg");
    if (Image.empty()) return;   // file missing or unreadable
    imshow("t", Image);

    // imread() returns BGR, while QImage::Format_RGB888 expects RGB
    cv::Mat rgb;
    cv::cvtColor(Image, rgb, cv::COLOR_BGR2RGB);
    // Pass the row stride explicitly; otherwise QImage assumes 4-byte-aligned rows
    QImage disImage(rgb.data, rgb.cols, rgb.rows, static_cast<int>(rgb.step), QImage::Format_RGB888);
    ui->label->setPixmap(QPixmap::fromImage(disImage));   // fromImage copies the pixels
    ui->label->resize(ui->label->pixmap()->size());
}

void MainWindow::on_pushbutton_video()
{
    std::string names_file = "D:/yolobuild/coco.names";
    std::string cfg_file = "D:/yolobuild/yolov3.cfg";
    std::string weights_file = "D:/yolobuild/yolov3.weights";
    Detector detector(cfg_file, weights_file, 0);   // last argument: GPU id

    //std::vector<std::string> obj_names = objects_names_from_file(names_file); // load the class names via the helper
    // Alternatively, the following four lines read the class-names file directly
    std::vector<std::string> obj_names;
    std::ifstream ifs(names_file.c_str());
    std::string line;
    while (getline(ifs, line)) obj_names.push_back(line);

    capture.open("E:/QtCodes/objdetection/test.avi");
    if (!capture.isOpened())
    {
        std::cerr << "Failed to open the video file\n";
        return;
    }

    cv::namedWindow("test", cv::WINDOW_NORMAL);
    cv::Mat frame;
    while (true)
    {
        capture >> frame;
        if (frame.empty()) break;   // end of video: detect() throws on an empty Mat
        std::vector<bbox_t> result_vec = detector.detect(frame);
        draw_boxes(frame, result_vec, obj_names);
        cv::imshow("test", frame);
        if (cv::waitKey(3) >= 0) break;   // any key stops playback
    }
    capture.release();
}
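When a cv::Mat's pixel buffer is handed to QImage, the row stride matters. The QImage constructor that takes a raw pointer but no bytesPerLine expects every scanline to start on a 32-bit boundary, while a continuous cv::Mat (such as the result of imread) stores its rows with no padding. For 3 bytes per pixel the two layouts only agree when the row length in bytes is a multiple of 4, so a width such as 641 pixels is drawn sheared; passing the Mat's `step` as bytesPerLine avoids the mismatch. The arithmetic can be sketched with plain integers; the function names are illustrative only.

```cpp
#include <cassert>

// Bytes per scanline QImage assumes when constructed from raw data without
// an explicit bytesPerLine argument: each row is padded to a multiple of 4.
int qimage_implied_bytes_per_line(int width, int bytes_per_pixel)
{
    assert(width >= 0 && bytes_per_pixel > 0);
    const int packed = width * bytes_per_pixel;
    return (packed + 3) / 4 * 4;  // round up to the next multiple of 4
}

// Bytes per row of a continuous 8-bit cv::Mat: rows are packed back to back,
// so step == cols * channels.
int packed_mat_bytes_per_line(int width, int channels)
{
    assert(width >= 0 && channels > 0);
    return width * channels;
}

// True if the two layouts disagree for a 3-channel (RGB888) image, i.e. the
// picture is sheared unless the Mat's step is passed to QImage explicitly.
bool rgb888_needs_explicit_stride(int width)
{
    return qimage_implied_bytes_per_line(width, 3) != packed_mat_bytes_per_line(width, 3);
}
```

For a 640-pixel-wide frame both layouts use 1920 bytes per row; at 641 pixels QImage expects 1924 bytes while the Mat provides 1923, and the error accumulates row by row.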

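4. In VS2015 the first three steps were enough, but in Qt something went wrong that I could not pin down: detect() would not accept a cv::Mat argument. As a workaround I modified yolo_v2_class.hpp:

inside class Detector, delete the pair of OPENCV guard lines (#ifdef OPENCV and #endif // OPENCV) that wrap the following code.

A likely cause is that OPENCV is not defined where yolo_v2_class.hpp is first included: mainwindow.h includes the header without defining it, and mainwindow.cpp only defines OPENCV after its includes. Defining OPENCV before the first include, or project-wide with DEFINES += OPENCV in the .pro file, should make the cv::Mat overloads visible without editing the header.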

#ifdef OPENCV
    std::vector<bbox_t> detect(cv::Mat mat, float thresh = 0.2, bool use_mean = false)
    {
        if(mat.data == NULL)
            throw std::runtime_error("Image is empty");
        auto image_ptr = mat_to_image_resize(mat);
        return detect_resized(*image_ptr, mat.cols, mat.rows, thresh, use_mean);
    }

    std::shared_ptr<image_t> mat_to_image_resize(cv::Mat mat) const
    {
        if (mat.data == NULL) return std::shared_ptr<image_t>(NULL);
        cv::Size network_size = cv::Size(get_net_width(), get_net_height());
        cv::Mat det_mat;
        if (mat.size() != network_size)
            cv::resize(mat, det_mat, network_size);
        else
            det_mat = mat;  // only reference is copied
        return mat_to_image(det_mat);
    }

    static std::shared_ptr<image_t> mat_to_image(cv::Mat img_src)
    {
        cv::Mat img;
        cv::cvtColor(img_src, img, cv::COLOR_RGB2BGR);
        std::shared_ptr<image_t> image_ptr(new image_t, [](image_t *img) { free_image(*img); delete img; });
        std::shared_ptr<IplImage> ipl_small = std::make_shared<IplImage>(img);
        *image_ptr = ipl_to_image(ipl_small.get());
        return image_ptr;
    }

private:

    static image_t ipl_to_image(IplImage* src)
    {
        unsigned char *data = (unsigned char *)src->imageData;
        int h = src->height;
        int w = src->width;
        int c = src->nChannels;
        int step = src->widthStep;
        image_t out = make_image_custom(w, h, c);
        int count = 0;
        for (int k = 0; k < c; ++k) {
            for (int i = 0; i < h; ++i) {
                int i_step = i*step;
                for (int j = 0; j < w; ++j) {
                    out.data[count++] = data[i_step + j*c + k] / 255.;
                }
            }
        }
        return out;
    }

    static image_t make_empty_image(int w, int h, int c)
    {
        image_t out;
        out.data = 0;
        out.h = h;
        out.w = w;
        out.c = c;
        return out;
    }

    static image_t make_image_custom(int w, int h, int c)
    {
        image_t out = make_empty_image(w, h, c);
        out.data = (float *)calloc(h*w*c, sizeof(float));
        return out;
    }

#endif // OPENCV
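The code above turns a cv::Mat into Darknet's image_t in two stages: mat_to_image() swaps the first and third channels with cvtColor, and ipl_to_image() rearranges OpenCV's interleaved pixels into channel-planar floats scaled to [0, 1]. The second stage can be sketched on a raw byte buffer without OpenCV; `interleaved_to_planar` is an illustrative name, and std::vector<float> stands in for image_t's data array.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Re-statement of the ipl_to_image() loop on a plain byte buffer: converts an
// interleaved 8-bit image (each pixel is c consecutive bytes, rows are `step`
// bytes apart) into planar floats in [0, 1], ordered channel, row, column.
std::vector<float> interleaved_to_planar(const std::uint8_t* data, int w, int h, int c, int step)
{
    assert(w > 0 && h > 0 && c > 0 && step >= w * c);
    std::vector<float> out(static_cast<std::size_t>(w) * h * c);
    std::size_t count = 0;
    for (int k = 0; k < c; ++k) {            // one plane per channel
        for (int i = 0; i < h; ++i) {
            const int i_step = i * step;     // start of row i (skips row padding)
            for (int j = 0; j < w; ++j) {
                // divide in double like the original, then store as float
                out[count++] = static_cast<float>(data[i_step + j * c + k] / 255.);
            }
        }
    }
    return out;
}
```

The `step` argument plays the role of widthStep in the original: it lets the loop skip any padding bytes at the end of each row.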


Juliet, March 2019