Python Web Scraping Series (4): Scraping Tencent News & Zhihu

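I. Scraping Tencent News

- Understand AJAX loading

- Monitor and analyze network requests with Chrome's developer tools

- Build the crawler with selenium

- The task is as follows:

Use selenium to scrape the 热点精选 (Hot Picks) section of https://news.qq.com/. Scrape at least 50 items and store them as CSV, one item per row, in this form: index (starting from 1), title, link, …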

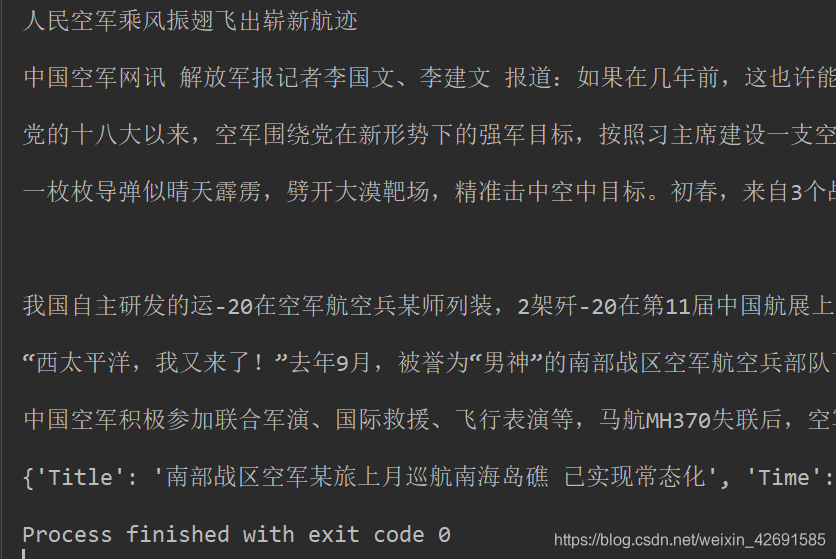
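Because the list is loaded by AJAX as the page scrolls, the "at least 50 items" requirement suggests scrolling until enough items are present rather than a fixed number of times. Below is a minimal sketch of that loop. The names `scroll_until`, `count_items`, and `scroll_by` are hypothetical; the two callables stand in for the selenium calls that would count the loaded `li` elements and run `window.scrollTo`.

```python
import time
from typing import Callable


def scroll_until(count_items: Callable[[], int],
                 scroll_by: Callable[[int], None],
                 target: int = 50,
                 step: int = 200,
                 max_steps: int = 100,
                 pause: float = 2.0) -> int:
    """Scroll in fixed steps until `target` items are loaded or `max_steps` is hit.

    Returns the number of items present when the loop stops.
    """
    position = 0
    for _ in range(max_steps):
        if count_items() >= target:
            break
        position += step
        # With selenium this could be something like:
        # driver.execute_script("window.scrollTo(0, %d);" % position)
        scroll_by(position)
        # Give the AJAX request time to finish before counting again
        time.sleep(pause)
    return count_items()
```

The `max_steps` cap keeps the loop from running forever if the page stops delivering new items.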

1) Scraping a given Tencent News article page

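# Scrape the title, publication time, body text, and author from a given Tencent News article page
import requests
from bs4 import BeautifulSoup


def getHTMLText(url):
    """Return the page HTML, or an empty string if the request fails."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        # r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""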

def getContent(url):
    html = getHTMLText(url)
    # print(html)
    soup = BeautifulSoup(html, "html.parser")
    title = soup.select("div.hd > h1")
    print(title[0].get_text())
    time = soup.select("div.a_Info > span.a_time")
    print(time[0].string)
    author = soup.select("div.qq_articleFt > div.qq_toolWrap > div.qq_editor")
    print(author[0].get_text())
    paras = soup.select("div.Cnt-Main-Article-QQ > p.text")
    for para in paras:
        if len(para) > 0:
            print(para.get_text())
            print()
    # Write the article to a file (explicit encoding so Chinese text is saved correctly)
    with open("text.txt", "w", encoding="utf-8") as fo:
        fo.write(title[0].get_text() + "\n")
        fo.write(time[0].get_text() + "\n")
        for para in paras:
            if len(para) > 0:
                fo.write(para.get_text() + "\n\n")
        fo.write(author[0].get_text() + "\n")
    # Store the scraped article as a dictionary
    article = {
        'Title': title[0].get_text(),
        'Time': time[0].get_text(),
        # Keep the paragraph text rather than the raw tag objects
        'Paragraph': [para.get_text() for para in paras],
        'Author': author[0].get_text()
    }
    print(article)

def main():
    url = "http://news.qq.com/a/20170504/012032.htm"
    getContent(url)


main()

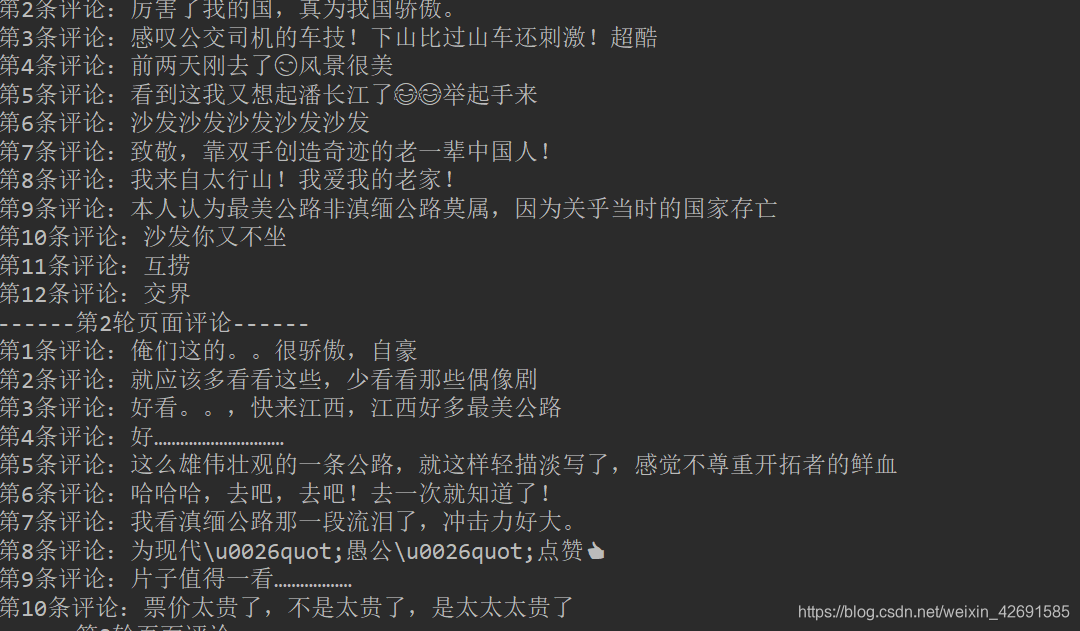

2) Scraping Tencent Video comments

# Scrape Tencent Video comments
import re
import random
import urllib.request

# Build a pool of user agents
uapools = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:71.0) Gecko/20100101 Firefox/71.0",
]


# Pick a random user agent from the pool
def ua(uapools):
    thisua = random.choice(uapools)
    # print(thisua)
    headers = ("User-Agent", thisua)
    opener = urllib.request.build_opener()
    opener.addheaders = [headers]
    # Install the opener globally so that urlopen() uses it
    urllib.request.install_opener(opener)

# Fetch the raw response text
def get_content(page, lastId):
    url = (
        "https://video.coral.qq.com/varticle/3242201702/comment/v2?callback=_varticle3242201702commentv2&orinum=10&oriorder=o&pageflag=1&cursor="
        + lastId
        + "&scorecursor=0&orirepnum=2&reporder=o&reppageflag=1&source=132&_="
        + str(page)
    )
    html = urllib.request.urlopen(url).read().decode("utf-8", "ignore")
    return html


# Extract the comment texts from the response
def get_comment(html):
    pat = '"content":"(.*?)"'
    rst = re.compile(pat, re.S).findall(html)
    return rst


# Extract the cursor ID for the next batch of comments from the response
def get_lastId(html):
    pat = '"last":"(.*?)"'
    lastId = re.compile(pat, re.S).findall(html)[0]
    return lastId

def main():
    ua(uapools)
    # Initial value of the `_` query parameter
    page = 1576567187274
    # Initial cursor ID
    lastId = "6460393679757345760"
    for i in range(1, 6):
        html = get_content(page, lastId)
        # Extract the comments
        commentlist = get_comment(html)
        print("------ Comments, round " + str(i) + " ------")
        # Number from 1 but iterate from the first element, so no comment is skipped
        for j, comment in enumerate(commentlist, start=1):
            print("Comment " + str(j) + ": " + str(comment))
        # Get the cursor ID for the next round
        lastId = get_lastId(html)
        page += 1


main()
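The request URL carries a `callback=` parameter, which suggests the response is JSONP: JSON wrapped in a function call. A regex such as `"content":"(.*?)"` cuts a comment short at the first escaped quote, so parsing the JSON may be more robust. Here is a minimal sketch, assuming that wrapper format. The helper name `strip_jsonp` is hypothetical, and the sample payload, including the `data` / `oriCommList` nesting, is illustrative only and not confirmed by the code above.

```python
import json
import re


def strip_jsonp(text: str) -> dict:
    """Remove a `callback( ... )` wrapper, if present, and parse the JSON inside it."""
    match = re.search(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", text, re.S)
    payload = match.group(1) if match else text
    return json.loads(payload)


# Illustrative payload only: the real response has more fields, and the
# nesting used here ("data" -> "oriCommList") is an assumption.
sample = (
    '_varticle3242201702commentv2('
    '{"data":{"last":"123","oriCommList":[{"content":"He said \\"hi\\""}]}})'
)
parsed = strip_jsonp(sample)
comments = [c["content"] for c in parsed["data"]["oriCommList"]]
last_id = parsed["data"]["last"]
print(comments, last_id)
```

With this approach the `last` cursor and the comment texts come from the same parsed object, so `get_comment` and `get_lastId` would not each need to scan the text.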

3) Reference code

import time

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Selenium 4 takes the driver path through a Service object;
# older releases accepted webdriver.Chrome(executable_path=...).
driver = webdriver.Chrome(service=Service("D:/Python/Python37/chromedriver.exe"))
driver.get("https://news.qq.com")

# Scroll down step by step so that the AJAX-loaded items are fetched
for i in range(1, 100):
    time.sleep(2)
    driver.execute_script("window.scrollTo(window.scrollX, %d);" % (i*200))

html = driver.page_source
bsObj = BeautifulSoup(html, "lxml")
jxtits = bsObj.find_all("div", {"class": "jx-tit"})[0].find_next_sibling().find_all("li")

print("index", ",", "title", ",", "url")
for i, jxtit in enumerate(jxtits):
    try:
        text = jxtit.find_all("img")[0]["alt"]
    except (IndexError, KeyError):
        text = jxtit.find_all("div", {"class": "lazyload-placeholder"})[0].text
    try:
        url = jxtit.find_all("a")[0]["href"]
    except (IndexError, KeyError):
        # No link in this item: print it for inspection and skip it
        print(jxtit)
        continue
    print(i + 1, ",", text, ",", url)
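The reference code prints its rows, while the task asks for a CSV file. Joining fields with `","` by hand also breaks when a title contains a comma. The following is a minimal sketch using the standard `csv` module, assuming the loop collects `(title, url)` pairs into a list. The function name `save_rows` and the file name `hot_news.csv` are hypothetical.

```python
import csv


def save_rows(rows, path="hot_news.csv"):
    """Write (title, url) pairs to a CSV file with a 1-based index column."""
    # utf-8-sig adds a BOM so that Excel detects the encoding of Chinese titles
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["index", "title", "url"])
        for index, (title, url) in enumerate(rows, start=1):
            # csv.writer quotes fields that contain commas or quotes
            writer.writerow([index, title, url])
```

In the loop, `rows.append((text, url))` would replace the final `print`, followed by one `save_rows(rows)` call after the loop.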

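II. Scraping Zhihu

The target link is:

https://www.zhihu.com/search?q=Datawhale&utm_content=search_history&type=content

Implement this with the requests library; selenium browser automation is not allowed.

Hints:

This link requires login. You can search GitHub and similar sites for Zhihu login code and work through its logic; copying and pasting code is allowed for this task.

As with the AJAX loading above, the AJAX-loaded content must this time be fetched with requests. The final storage format is up to you, but you must write down the AJAX flow you worked out with Chrome's developer tools.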
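When working out an AJAX flow in the developer tools, it helps to split each request URL into its query parameters and see which ones change from one request to the next. Below is a small sketch using only the standard library, applied to the search link above. The helper name `with_params` is hypothetical, and `offset` is a made-up paging parameter used purely for illustration, not a confirmed parameter of this page.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_params(url: str, **overrides) -> str:
    """Return `url` with the given query parameters added or replaced."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    params.update({key: str(value) for key, value in overrides.items()})
    return urlunsplit(parts._replace(query=urlencode(params)))


search_url = "https://www.zhihu.com/search?q=Datawhale&utm_content=search_history&type=content"

# Show the parameters of the original link as a dictionary
print(dict(parse_qsl(urlsplit(search_url).query)))

# `offset` is a hypothetical paging parameter, used here only for illustration
print(with_params(search_url, offset=20))
```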

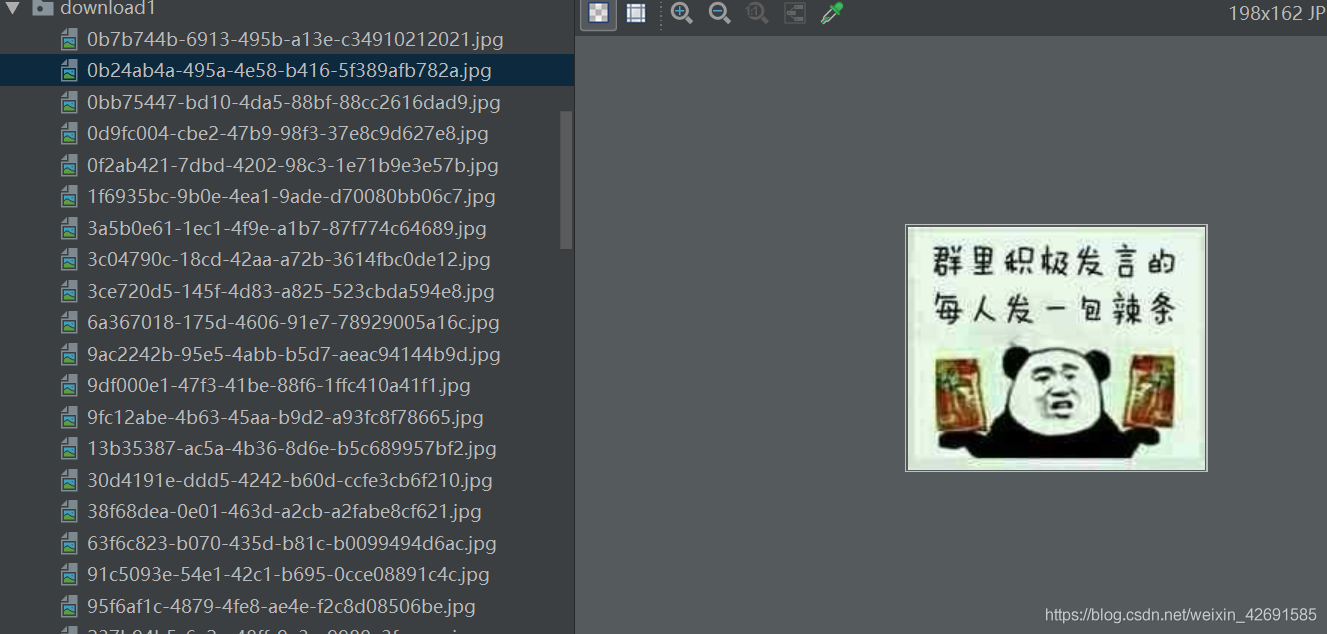

1) Downloading all images from the answers to one Zhihu question

import requests
from bs4 import BeautifulSoup
import json
import time
import uuid
import datetime
import os


# Request the real answers endpoint, parse the returned data, find the image URLs in it, and download the images locally.
# offset is the index of the first answer to request (used for looping below); sort is the answer ordering.
def download(offset, sort):
    # Convert offset to a string
    offset = str(offset)
    # The URL has two variable parts: offset and sort
    url = 'https://www.zhihu.com/api/v4/questions/34243513/answers?include=data%5B%2A%5D.is_normal%2Cadmin_closed_comment%2Creward_info%2Cis_collapsed%2Cannotation_action%2Cannotation_detail%2Ccollapse_reason%2Cis_sticky%2Ccollapsed_by%2Csuggest_edit%2Ccomment_count%2Ccan_comment%2Ccontent%2Ceditable_content%2Cvoteup_count%2Creshipment_settings%2Ccomment_permission%2Ccreated_time%2Cupdated_time%2Creview_info%2Crelevant_info%2Cquestion%2Cexcerpt%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%2Cis_labeled%2Cis_recognized%2Cpaid_info%2Cpaid_info_content%3Bdata%5B%2A%5D.mark_infos%5B%2A%5D.url%3Bdata%5B%2A%5D.author.follower_count%2Cbadge%5B%2A%5D.topics&limit=5&offset=' + offset + '&platform=desktop&sort_by=' + sort
    html = requests.get(url=url, headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18363'}).text
    res = json.loads(html)
    # Create a folder to store the images
    if not os.path.exists('download1'):
        os.mkdir('download1')
    # res['data'] is a list of answers; enumerate yields each answer together with its index
    for i, item in enumerate(res['data']):
        # Find all image tags in the answer
        content = BeautifulSoup(item['content'], 'lxml')
        imgurls = content.select('noscript img')
        # Get each image's URL and file extension, then save the image to the local folder
        for imgurl in imgurls:
            src = imgurl['src']
            img = src[src.rfind('.'):]
            with open(f'download1/{uuid.uuid4()}{img}', 'wb') as f:
                f.write(requests.get(src).content)

# Each request returns 5 answers (limit=5), so three requests fetch the images of 15 answers
if __name__ == '__main__':
    print('Start scraping:', datetime.datetime.now())
    for i in range(3):
        download(offset=i * 5, sort='default')
        time.sleep(3)
    print('Finished scraping:', datetime.datetime.now())
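The loop above hard-codes three pages. To collect every answer, the offset can instead be advanced until the API reports that there is nothing left. What follows is a minimal sketch of that paging logic. The generator `iter_answers` and the callable `fetch_page` are hypothetical names, and the `paging.is_end` flag is an assumption about the response format; only the `data` list is confirmed by the code above.

```python
from typing import Callable, Iterator


def iter_answers(fetch_page: Callable[[int, int], dict],
                 limit: int = 5,
                 max_items: int = 100) -> Iterator[dict]:
    """Yield answers page by page until the API reports the end.

    `fetch_page(offset, limit)` must return the decoded JSON of one page.
    Assumption: the JSON has a `data` list and a `paging.is_end` flag.
    """
    offset = 0
    yielded = 0
    while yielded < max_items:
        page = fetch_page(offset, limit)
        items = page.get("data", [])
        for item in items:
            yield item
            yielded += 1
            if yielded >= max_items:
                return
        # Stop on an empty page or when the (assumed) end flag is set
        if not items or page.get("paging", {}).get("is_end", True):
            return
        offset += limit
```

Passing the network call in as `fetch_page` keeps the paging logic testable without hitting the site; in practice it would wrap the `requests.get(...)` and `json.loads(...)` lines from `download`, with a `time.sleep` between pages.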

2) Reference code:

# -*- coding: utf-8 -*-
__author__ = 'zkqiang'
__zhihu__ = 'https://www.zhihu.com/people/z-kqiang'
__github__ = 'https://github.com/zkqiang/Zhihu-Login'

import base64
import hashlib
import hmac
import json
import re
import threading
import time
from http import cookiejar
from urllib.parse import urlencode

import execjs
import requests
from PIL import Image


class ZhihuAccount(object):
    """
    Make sure Node.js (7.0 or later) or another JS runtime is installed before use.
    The error execjs._exceptions.ProgramError: TypeError: 'exports' means none is installed.
    """

    def __init__(self, username: str = None, password: str = None):
        self.username = username
        self.password = password
        self.login_data = {
            'client_id': 'c3cef7c66a1843f8b3a9e6a1e3160e20',
            'grant_type': 'password',
            'source': 'com.zhihu.web',
            'username': '',
            'password': '',
            'lang': 'en',
            'ref_source': 'homepage',
            'utm_source': ''
        }
        self.session = requests.session()
        self.session.headers = {
            'accept-encoding': 'gzip, deflate, br',
            'Host': 'www.zhihu.com',
            'Referer': 'https://www.zhihu.com/',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                          '(KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36'
        }
        self.session.cookies = cookiejar.LWPCookieJar(filename='./cookies.txt')

    def login(self, captcha_lang: str = 'en', load_cookies: bool = True):
        """
        Simulate logging in to Zhihu
        :param captcha_lang: captcha type, 'en' or 'cn'
        :param load_cookies: whether to load the Cookies saved last time
        :return: bool
        If the Chinese captcha cannot be clicked when running in PyCharm,
        uncheck Settings / Tools / Python Scientific / Show Plots in Toolwindow
        """
        if load_cookies and self.load_cookies():
            print('Loaded the Cookies file')
            if self.check_login():
                print('Login succeeded')
                return True
            print('Cookies have expired')

        self._check_user_pass()
        self.login_data.update({
            'username': self.username,
            'password': self.password,
            'lang': captcha_lang
        })

        timestamp = int(time.time() * 1000)
        self.login_data.update({
            'captcha': self._get_captcha(self.login_data['lang']),
            'timestamp': timestamp,
            'signature': self._get_signature(timestamp)
        })

        headers = self.session.headers.copy()
        headers.update({
            'content-type': 'application/x-www-form-urlencoded',
            'x-zse-83': '3_1.1',
            'x-xsrftoken': self._get_xsrf()
        })
        data = self._encrypt(self.login_data)
        login_api = 'https://www.zhihu.com/api/v3/oauth/sign_in'
        resp = self.session.post(login_api, data=data, headers=headers)
        if 'error' in resp.text:
            print(json.loads(resp.text)['error'])
        if self.check_login():
            print('Login succeeded')
            return True
        print('Login failed')
        return False

    def load_cookies(self):
        """
        Load the Cookies file into the Session
        :return: bool
        """
        try:
            self.session.cookies.load(ignore_discard=True)
            return True
        except FileNotFoundError:
            return False

    def check_login(self):
        """
        Check the login state: a redirect when requesting the sign-up page means we are logged in.
        On success, save the current Cookies
        :return: bool
        """
        login_url = 'https://www.zhihu.com/signup'
        resp = self.session.get(login_url, allow_redirects=False)
        if resp.status_code == 302:
            self.session.cookies.save()
            return True
        return False

    def _get_xsrf(self):
        """
        Get the xsrf token from the login page
        :return: str
        """
        self.session.get('https://www.zhihu.com/', allow_redirects=False)
        for c in self.session.cookies:
            if c.name == '_xsrf':
                return c.value
        raise AssertionError('Failed to get xsrf')

    def _get_captcha(self, lang: str):
        """
        Call the captcha API; it must be requested once whether or not a captcha is needed.
        If a captcha is needed, the image is returned base64-encoded.
        The captcha type follows the lang parameter and has to be solved manually
        :param lang: language of the returned captcha (en/cn)
        :return: the captcha POST parameter
        """
        if lang == 'cn':
            api = 'https://www.zhihu.com/api/v3/oauth/captcha?lang=cn'
        else:
            api = 'https://www.zhihu.com/api/v3/oauth/captcha?lang=en'
        resp = self.session.get(api)
        show_captcha = re.search(r'true', resp.text)

        if show_captcha:
            put_resp = self.session.put(api)
            json_data = json.loads(put_resp.text)
            img_base64 = json_data['img_base64'].replace(r'\n', '')
            with open('./captcha.jpg', 'wb') as f:
                f.write(base64.b64decode(img_base64))
            img = Image.open('./captcha.jpg')
            if lang == 'cn':
                import matplotlib.pyplot as plt
                plt.imshow(img)
                print('Click all upside-down Chinese characters, then press Enter in the terminal to submit')
                points = plt.ginput(7)
                capt = json.dumps({'img_size': [200, 44],
                                   'input_points': [[i[0] / 2, i[1] / 2] for i in points]})
            else:
                img_thread = threading.Thread(target=img.show, daemon=True)
                img_thread.start()
                # A captcha recognition module could be plugged in here
                capt = input('Enter the captcha shown in the image: ')
            # The answer must first be POSTed to the captcha API
            self.session.post(api, data={'input_text': capt})
            return capt
        return ''

    def _get_signature(self, timestamp: int or str):
        """
        Compute and return the signature with the HMAC algorithm.
        It is really just a few fixed strings plus the timestamp
        :param timestamp: timestamp
        :return: signature
        """
        ha = hmac.new(b'd1b964811afb40118a12068ff74a12f4', digestmod=hashlib.sha1)
        grant_type = self.login_data['grant_type']
        client_id = self.login_data['client_id']
        source = self.login_data['source']
        ha.update(bytes((grant_type + client_id + source + str(timestamp)), 'utf-8'))
        return ha.hexdigest()

    def _check_user_pass(self):
        """
        Check whether the username and password have been provided; if not, ask for them
        """
        if not self.username:
            self.username = input('Enter your phone number: ')
        if self.username.isdigit() and '+86' not in self.username:
            self.username = '+86' + self.username

        if not self.password:
            self.password = input('Enter your password: ')

    @staticmethod
    def _encrypt(form_data: dict):
        with open('./encrypt.js') as f:
            js = execjs.compile(f.read())
            return js.call('Q', urlencode(form_data))

if __name__ == '__main__':
    account = ZhihuAccount('', '')
    account.login(captcha_lang='cn', load_cookies=False)
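The signature step in `_get_signature` is easy to try in isolation: it is an HMAC-SHA1 over the concatenation of `grant_type`, `client_id`, `source`, and the timestamp. Below is a standalone sketch using the constants from the reference code. The helper name `sign` is hypothetical, and whether the service still accepts this key and scheme is not something the code can confirm.

```python
import hashlib
import hmac
import time


def sign(key: bytes, *parts: str) -> str:
    """Return the hex HMAC-SHA1 digest of the concatenated parts."""
    message = "".join(parts).encode("utf-8")
    return hmac.new(key, message, hashlib.sha1).hexdigest()


# Millisecond timestamp, built the same way as in login()
timestamp = str(int(time.time() * 1000))
signature = sign(
    b"d1b964811afb40118a12068ff74a12f4",   # key copied from the reference code
    "password",                            # grant_type
    "c3cef7c66a1843f8b3a9e6a1e3160e20",    # client_id
    "com.zhihu.web",                       # source
    timestamp,
)
print(signature)
```

Because the timestamp is part of the signed message, the same timestamp value has to be sent in the form data alongside the signature, as `login()` does.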