1. Pull the image

[root@localhost conf]# docker pull logstash:7.8.1

Trying to pull repository docker.io/library/logstash ...

7.8.1: Pulling from docker.io/library/logstash

524b0c1e57f8: Already exists

4ea79d464a65: Pull complete

245cfcbe00e5: Pull complete

1b4d03815886: Pull complete

505552b55db2: Pull complete

d440869a711b: Pull complete

086ef50d80ce: Pull complete

11b8a22f5fe6: Pull complete

aece5f411b8b: Pull complete

f7f6ec9f2b6e: Pull complete

03353e162ddf: Pull complete

Digest: sha256:f7ff8907ac010e35df8447ea8ea32ea57d07fb261316d92644f168c63cf99287

Status: Downloaded newer image for docker.io/logstash:7.8.1

2. Prepare the configuration files

1) Create the directories and set permissions

[root@localhost conf]# mkdir -p /opt/elk7/logstash/conf

[root@localhost conf]# cd /opt/elk7/logstash/conf/

[root@localhost conf]# ls

[root@localhost conf]# touch logstash.conf

[root@localhost conf]# touch logstash.yml

[root@localhost conf]# touch pipelines.yml

[root@localhost conf]# mkdir -p /opt/elk7/logstash/pipeline

[root@localhost conf]# mkdir -p /opt/elk7/logstash/data

[root@localhost conf]# chmod 777 -R /opt/elk7/logstash
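The same layout can be created in one repeatable step. The sketch below is only a convenience and not part of the original setup: the function name `create_logstash_dirs` is hypothetical, and it also creates a `logs` directory on the assumption that the `docker run` command in step 3, which mounts `/opt/elk7/logstash/logs`, should find it already present.

```shell
# Create the host-side directories and empty settings files for Logstash.
# $1 is the base directory (the article uses /opt/elk7/logstash).
create_logstash_dirs() {
  base="$1"
  mkdir -p "$base/conf" "$base/pipeline" "$base/data" "$base/logs" || return 1
  touch "$base/conf/logstash.yml" "$base/conf/pipelines.yml" || return 1
  # World-writable, as in the article; tighten this outside a test environment.
  chmod -R 777 "$base"
}

# Usage (commented out so the snippet has no side effects when sourced):
# create_logstash_dirs /opt/elk7/logstash
```

Running the function a second time is harmless, because `mkdir -p` and `touch` both accept existing paths.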

2) Prepare logstash.yml

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

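node.name: logstash-203

# Directory for Logstash log files
path.logs: /usr/share/logstash/logs

# When true, only validate the configuration and then exit
config.test_and_exit: false

# Whether to reload the pipeline configuration automatically when it changes
config.reload.automatic: false

# How often to check the configuration files for changes
config.reload.interval: 60s

# Debug mode: prints the fully parsed configuration, including passwords, so use with caution.
# It only takes effect when log.level is also set to debug.
config.debug: true
log.level: debug

# The bind address for the metrics REST endpoint.
http.host: 0.0.0.0

# Log format: json or plain
log.format: json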

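3) Prepare the pipeline configuration files

Location: /opt/elk7/logstash/pipeline on the host, mounted at /usr/share/logstash/pipeline in the container

To demonstrate multiple pipelines, two files in the data directory are used as inputs.

Expected result: after test.log is modified and saved, the corresponding events appear in the Logstash log output.

logstash-file.conf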

input {
  file {
    path => "/usr/share/logstash/data/test.log"
    codec => json
    start_position => "beginning"
  }
}

output {
  stdout {
    codec => rubydebug
  }
}

logstash-file2.conf

input {
  file {
    path => "/usr/share/logstash/data/test2.log"
    codec => plain
    start_position => "beginning"
  }
}

output {
  stdout {
    codec => rubydebug
  }
}
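To exercise both pipelines without opening an editor, test events can be appended from the host. This is a minimal sketch under stated assumptions: the function names and the `source` field are hypothetical, and the message is assumed to contain no characters that need JSON escaping. Appending is used here because some editors may replace the file on save, which the file input can treat as new content.

```shell
# Append one JSON event per line to a file read by the json-codec pipeline.
# Assumes the message needs no JSON escaping (no quotes or backslashes).
append_json_event() {
  printf '{"message":"%s","source":"manual-test"}\n' "$2" >> "$1"
}

# Append one plain-text line to a file read by the plain-codec pipeline.
append_plain_event() {
  printf '%s\n' "$2" >> "$1"
}

# Usage (host paths from the article):
# append_json_event /opt/elk7/logstash/data/test.log "hello from test.log"
# append_plain_event /opt/elk7/logstash/data/test2.log "hello from test2.log"
```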

4) Prepare pipelines.yml

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

- pipeline.id: main
  path.config: /usr/share/logstash/pipeline/logstash-file.conf
- pipeline.id: file2
  path.config: /usr/share/logstash/pipeline/logstash-file2.conf
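Each `path.config` value is a path inside the container, so an entry that points at the wrong directory only surfaces when Logstash starts. A rough way to catch this earlier is to map each entry back to the host directory and check that the file exists. The function name `check_pipeline_paths` is hypothetical, and the sketch assumes unquoted `path.config` values that each name a single file rather than a glob.

```shell
# Check that every path.config entry in a pipelines.yml exists on the host.
# $1 = pipelines.yml on the host
# $2 = pipeline directory as seen inside the container
# $3 = host directory that is mounted at $2
check_pipeline_paths() {
  sed -n 's/^[[:space:]]*path\.config:[[:space:]]*//p' "$1" | (
    status=0
    while IFS= read -r path; do
      # Swap the container prefix for the host prefix.
      host_path="$3${path#"$2"}"
      if [ -f "$host_path" ]; then
        echo "ok: $path"
      else
        echo "missing: $path (looked for $host_path)"
        status=1
      fi
    done
    exit "$status"
  )
}

# Usage (paths from the article):
# check_pipeline_paths /opt/elk7/logstash/conf/pipelines.yml \
#   /usr/share/logstash/pipeline /opt/elk7/logstash/pipeline
```

The function returns a non-zero status if any entry is missing, so it can gate a container restart in a script.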

5) Logging configuration file log4j2.properties

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

status = error

name = LogstashPropertiesConfig

appender.console.type = Console

appender.console.name = plain_console

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = [%d{ISO8601}][%-5p][%-25c]%notEmpty{[%X{pipeline.id}]}%notEmpty{[%X{plugin.id}]} %m%n

appender.json_console.type = Console

appender.json_console.name = json_console

appender.json_console.layout.type = JSONLayout

appender.json_console.layout.compact = true

appender.json_console.layout.eventEol = true

rootLogger.level = ${sys:ls.log.level}

rootLogger.appenderRef.console.ref = ${sys:ls.log.format}_console

6) JVM configuration file jvm.options

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

-Xmx512m

-Xms512m

7) Startup options file startup.options

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

################################################################################

# These settings are ONLY used by $LS_HOME/bin/system-install to create a custom

# startup script for Logstash and is not used by Logstash itself. It should

# automagically use the init system (systemd, upstart, sysv, etc.) that your

# Linux distribution uses.

#

# After changing anything here, you need to re-run $LS_HOME/bin/system-install

# as root to push the changes to the init script.

################################################################################

# Override Java location

#JAVACMD=/usr/bin/java

# Set a home directory

LS_HOME=/usr/share/logstash

# logstash settings directory, the path which contains logstash.yml

LS_SETTINGS_DIR=/etc/logstash

# Arguments to pass to logstash

LS_OPTS="--path.settings ${LS_SETTINGS_DIR}"

# Arguments to pass to java

LS_JAVA_OPTS=""

# pidfiles aren't used the same way for upstart and systemd; this is for sysv users.

LS_PIDFILE=/var/run/logstash.pid

# user and group id to be invoked as

LS_USER=logstash

LS_GROUP=logstash

# Enable GC logging by uncommenting the appropriate lines in the GC logging

# section in jvm.options

LS_GC_LOG_FILE=/var/log/logstash/gc.log

# Open file limit

LS_OPEN_FILES=16384

# Nice level

LS_NICE=19

# Change these to have the init script named and described differently

# This is useful when running multiple instances of Logstash on the same

# physical box or vm

SERVICE_NAME="logstash"

SERVICE_DESCRIPTION="logstash"

# If you need to run a command or script before launching Logstash, put it

# between the lines beginning with `read` and `EOM`, and uncomment those lines.

###

## read -r -d '' PRESTART << EOM

## EOM

3. Recreate and run the logstash container

docker run -it --name logstash \
  -v /opt/elk7/logstash/conf:/usr/share/logstash/config \
  -v /opt/elk7/logstash/data:/usr/share/logstash/data \
  -v /opt/elk7/logstash/logs:/usr/share/logstash/logs \
  -v /opt/elk7/logstash/pipeline:/usr/share/logstash/pipeline \
  -d logstash:7.8.1

Check the container status

[root@kf202 conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c5d28e4f25ed 172.16.10.205:5000/logstash:7.8.1 "/usr/local/bin/dock…" 2 hours ago Up 2 hours 5044/tcp, 0.0.0.0:9600->9600/tcp logstash

5dd146f7d16f 172.16.10.205:5000/kibana:7.8.1 "/usr/local/bin/dumb…" 6 hours ago Up 6 hours 0.0.0.0:5601->5601/tcp kibana

edf01440dbb2 elasticsearch:7.8.1 "/tini -- /usr/local…" 6 hours ago Up 6 hours 9200/tcp, 0.0.0.0:9203->9203/tcp, 9300/tcp, 0.0.0.0:9303->9303/tcp es-03

281a9e99e0d4 elasticsearch:7.8.1 "/tini -- /usr/local…" 6 hours ago Up 6 hours 9200/tcp, 0.0.0.0:9202->9202/tcp, 9300/tcp, 0.0.0.0:9302->9302/tcp es-02

1a0d40f6861a elasticsearch:7.8.1 "/tini -- /usr/local…" 6 hours ago Up 6 hours 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp es-01

Check the container logs

[root@kf202 conf]# docker logs -f logstash --tail 200

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by com.headius.backport9.modules.Modules (file:/usr/share/logstash/logstash-core/lib/jars/jruby-complete-9.2.11.1.jar) to method sun.nio.ch.NativeThread.signal(long)

WARNING: Please consider reporting this to the maintainers of com.headius.backport9.modules.Modules

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Sending Logstash logs to /usr/share/logstash/logs which is now configured via log4j2.properties

[2020-08-10T06:25:11,263][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.8.1", "jruby.version"=>"jruby 9.2.11.1 (2.5.7) 2020-03-25 b1f55b1a40 OpenJDK 64-Bit Server VM 11.0.7+10-LTS on 11.0.7+10-LTS +indy +jit [linux-x86_64]"}

[2020-08-10T06:25:12,924][INFO ][org.reflections.Reflections] Reflections took 40 ms to scan 1 urls, producing 21 keys and 41 values

[2020-08-10T06:25:13,830][INFO ][logstash.javapipeline ][file2] Starting pipeline {:pipeline_id=>"file2", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/pipeline/logstash-file2.conf"], :thread=>"#<Thread:0xc2e0c06@/usr/share/logstash/logstash-core/lib/logstash/java_pipeline.rb:122 run>"}

[2020-08-10T06:25:13,827][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/pipeline/logstash-file.conf"], :thread=>"#<Thread:0x15b73d10 run>"}

[2020-08-10T06:25:15,019][INFO ][logstash.inputs.file ][file2] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_897786e80ff00d65a6928cab732327f8", :path=>["/usr/share/logstash/data/test2.log"]}

[2020-08-10T06:25:15,025][INFO ][logstash.inputs.file ][main] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_e6a3fcb43f05e42e8f9d3130699f14de", :path=>["/usr/share/logstash/data/test.log"]}

[2020-08-10T06:25:15,041][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-08-10T06:25:15,042][INFO ][logstash.javapipeline ][file2] Pipeline started {"pipeline.id"=>"file2"}

[2020-08-10T06:25:15,100][INFO ][logstash.agent ] Pipelines running {:count=>2, :running_pipelines=>[:main, :file2], :non_running_pipelines=>[]}

[2020-08-10T06:25:15,138][INFO ][filewatch.observingtail ][main][cf77cbf866922c4bd1db2874cf9f2e93205e6dd41b95c29ad607347574c6d414] START, creating Discoverer, Watch with file and sincedb collections

[2020-08-10T06:25:15,148][INFO ][filewatch.observingtail ][file2][f967bb0285eea34805b3ab366df25a6fe116eb0521456be1bff642b6e58ab95b] START, creating Discoverer, Watch with file and sincedb collections

[2020-08-10T06:25:15,476][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

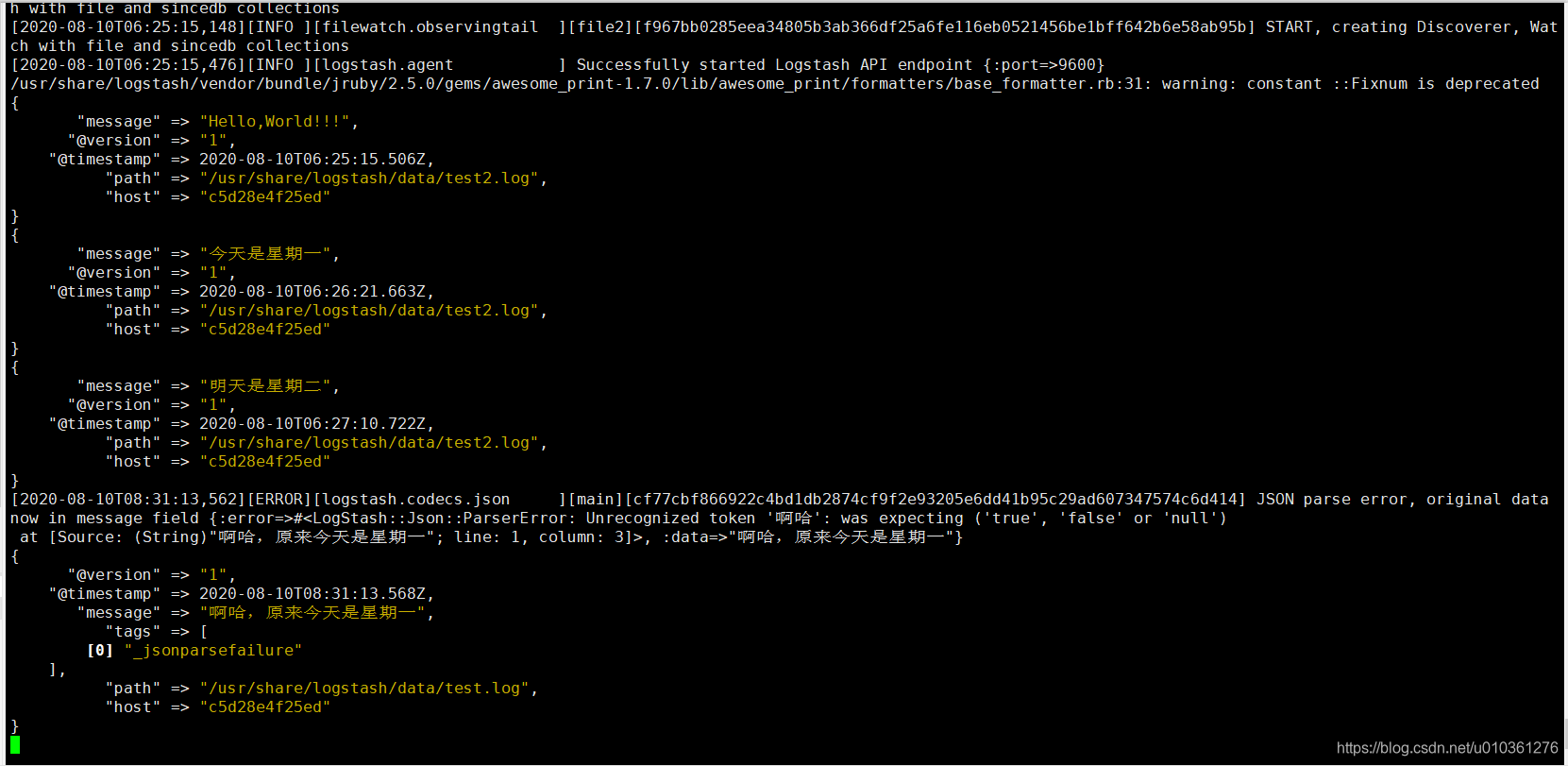
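With several pipelines the startup log gets long, and the lines that matter are the `Pipeline started` entries. The filter below is a small sketch for pulling out the ids of the pipelines that actually started. The function name `started_pipelines` is hypothetical, and it assumes the plain log format shown in the output above.

```shell
# Read Logstash log lines on stdin and print the id of every pipeline
# that logged a "Pipeline started" entry, one id per line.
started_pipelines() {
  sed -n 's/.*Pipeline started {"pipeline\.id"=>"\([^"]*\)"}.*/\1/p'
}

# Usage:
# docker logs logstash 2>&1 | started_pipelines
```

A pipeline that is listed in pipelines.yml but missing from this output did not start, and the surrounding log lines should say why.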

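4. Verify

Edit test.log and test2.log with vim, save them, and watch the Logstash log output.

The container is running normally and the events are printed as expected.

This completes a Logstash setup that reads from a file and prints to standard output.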

5. Configure output to Elasticsearch with a specific index

Create logstash-es.conf

Location: /opt/elk7/logstash/pipeline on the host, mounted at /usr/share/logstash/pipeline in the container

input {
  file {
    path => "/usr/share/logstash/data/test2.log"
    codec => plain
    start_position => "beginning"
  }
}

output {
  elasticsearch {
    hosts => "172.16.10.202:9200"
    index => "logstash-file-test-%{+YYYY.MM.dd}"
    # Quote string values: an unquoted password with special characters fails to parse
    user => "elastic"
    password => "xxxxx"
  }
}
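String values in a Logstash config are safest in quotes. An unquoted value is parsed as a bareword, and a password containing punctuation stops the parser, which is what the ConfigurationError in step 6 points at. The check below is a sketch for spotting such lines before restarting the container. The function name `find_unquoted_credentials` is hypothetical, and the pattern only looks at `user` and `password` settings written on a single line.

```shell
# Print (with line numbers) every user/password setting whose value does not
# start with a quote. Exit status is 0 when at least one such line is found.
find_unquoted_credentials() {
  grep -nE "^[[:space:]]*(user|password)[[:space:]]*=>[[:space:]]*[^\"'[:space:]]" "$1"
}

# Usage:
# find_unquoted_credentials /opt/elk7/logstash/pipeline/logstash-es.conf \
#   && echo "quote the values listed above"
```

A plain alphanumeric bareword such as `elastic` may still parse, but the check flags it anyway so that all credentials are written the same way.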

Update pipelines.yml

Location: /opt/elk7/logstash/conf on the host, mounted at /usr/share/logstash/config in the container

- pipeline.id: main
  path.config: /usr/share/logstash/pipeline/logstash-file.conf
- pipeline.id: file2
  path.config: /usr/share/logstash/pipeline/logstash-file2.conf
- pipeline.id: es
  path.config: /usr/share/logstash/pipeline/logstash-es.conf

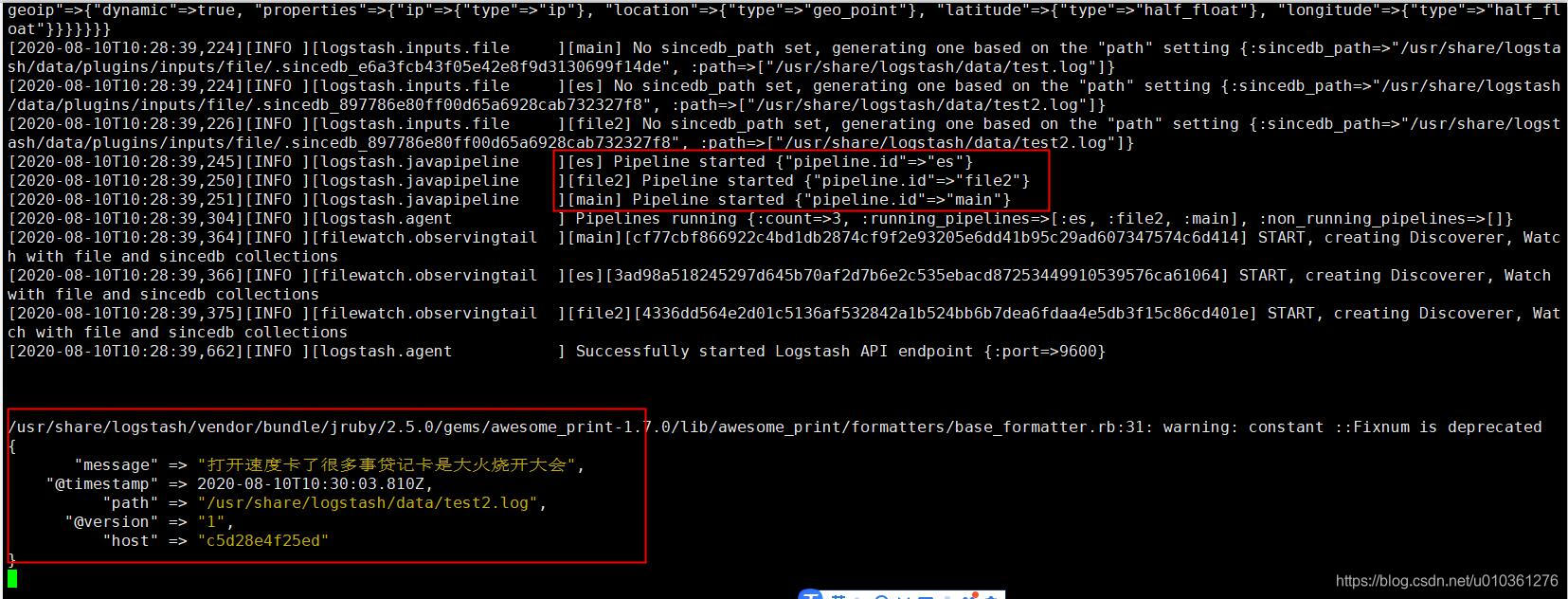

6. Verify again

[root@kf202 conf]# docker logs -f logstash --tail 50

[2020-08-10T10:07:34,605][WARN ][logstash.runner ] SIGTERM received. Shutting down.

[2020-08-10T10:07:34,696][INFO ][filewatch.observingtail ] QUIT - closing all files and shutting down.

[2020-08-10T10:07:34,697][INFO ][filewatch.observingtail ] QUIT - closing all files and shutting down.

[2020-08-10T10:07:35,745][INFO ][logstash.javapipeline ] Pipeline terminated {"pipeline.id"=>"file2"}

[2020-08-10T10:07:35,746][INFO ][logstash.javapipeline ] Pipeline terminated {"pipeline.id"=>"main"}

[2020-08-10T10:07:35,790][INFO ][logstash.runner ] Logstash shut down.

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by com.headius.backport9.modules.Modules (file:/usr/share/logstash/logstash-core/lib/jars/jruby-complete-9.2.11.1.jar) to method sun.nio.ch.NativeThread.signal(long)

WARNING: Please consider reporting this to the maintainers of com.headius.backport9.modules.Modules

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Sending Logstash logs to /usr/share/logstash/logs which is now configured via log4j2.properties

[2020-08-10T10:07:57,493][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.8.1", "jruby.version"=>"jruby 9.2.11.1 (2.5.7) 2020-03-25 b1f55b1a40 OpenJDK 64-Bit Server VM 11.0.7+10-LTS on 11.0.7+10-LTS +indy +jit [linux-x86_64]"}

[2020-08-10T10:07:58,555][ERROR][logstash.agent ] Failed to execute action {:action=>LogStash::PipelineAction::Create/pipeline_id:es, :exception=>"LogStash::ConfigurationError", :message=>"Expected one of [A-Za-z0-9_-], [ \\t\\r\\n], \"#\", \"{\", [A-Za-z0-9_], \"}\" at line 14, column 22 (byte 278) after output{\n elasticsearch {\n hosts => \"172.16.10.202:9200\"\n index => \"logstash-file-test-%{+YYYY.MM.dd}\"\n user => elastic\n password => cnhqd", :backtrace=>["/usr/share/logstash/logstash-core/lib/logstash/compiler.rb:58:in `compile_imperative'", "/usr/share/logstash/logstash-core/lib/logstash/compiler.rb:66:in `compile_graph'", "/usr/share/logstash/logstash-core/lib/logstash/compiler.rb:28:in `block in compile_sources'", "org/jruby/RubyArray.java:2577:in `map'", "/usr/share/logstash/logstash-core/lib/logstash/compiler.rb:27:in `compile_sources'", "org/logstash/execution/AbstractPipelineExt.java:181:in `initialize'", "org/logstash/execution/JavaBasePipelineExt.java:67:in `initialize'", "/usr/share/logstash/logstash-core/lib/logstash/java_pipeline.rb:44:in `initialize'", "/usr/share/logstash/logstash-core/lib/logstash/pipeline_action/create.rb:52:in `execute'", "/usr/share/logstash/logstash-core/lib/logstash/agent.rb:356:in `block in converge_state'"]}

[2020-08-10T10:07:59,701][INFO ][org.reflections.Reflections] Reflections took 44 ms to scan 1 urls, producing 21 keys and 41 values

[2020-08-10T10:08:00,546][INFO ][logstash.javapipeline ][file2] Starting pipeline {:pipeline_id=>"file2", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/pipeline/logstash-file2.conf"], :thread=>"#<Thread:0x345e4d08 run>"}

[2020-08-10T10:08:00,547][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>500, "pipeline.sources"=>["/usr/share/logstash/pipeline/logstash-file.conf"], :thread=>"#<Thread:0x370c3bcf@/usr/share/logstash/logstash-core/lib/logstash/pipeline_action/create.rb:54 run>"}

[2020-08-10T10:08:01,679][INFO ][logstash.inputs.file ][main] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_e6a3fcb43f05e42e8f9d3130699f14de", :path=>["/usr/share/logstash/data/test.log"]}

[2020-08-10T10:08:01,680][INFO ][logstash.inputs.file ][file2] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/share/logstash/data/plugins/inputs/file/.sincedb_897786e80ff00d65a6928cab732327f8", :path=>["/usr/share/logstash/data/test2.log"]}

[2020-08-10T10:08:01,693][INFO ][logstash.javapipeline ][file2] Pipeline started {"pipeline.id"=>"file2"}

[2020-08-10T10:08:01,700][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2020-08-10T10:08:01,770][INFO ][filewatch.observingtail ][main][cf77cbf866922c4bd1db2874cf9f2e93205e6dd41b95c29ad607347574c6d414] START, creating Discoverer, Watch with file and sincedb collections

[2020-08-10T10:08:01,775][INFO ][filewatch.observingtail ][file2][f967bb0285eea34805b3ab366df25a6fe116eb0521456be1bff642b6e58ab95b] START, creating Discoverer, Watch with file and sincedb collections

[2020-08-10T10:08:02,105][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

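Keep modifying test2.log and the events appear in the console log output.

Note that the ERROR entry in the log above shows the es pipeline failing to start. The parser stopped inside the password value, so the most likely cause is an unquoted password in logstash-es.conf; data only reaches Elasticsearch once the es pipeline starts cleanly.

Use Kibana to inspect the data in Elasticsearch.

Data is indeed arriving in Elasticsearch.

Notes:

1. Logstash creates the index when it sends the first event to Elasticsearch, so the index only appears after test2.log has been modified and saved.

2. Kibana has the concept of an index pattern. An Elasticsearch index only shows up in Kibana's Discover app after a matching index pattern has been created in the Kibana UI.

As I understand it, an index pattern is a rule that groups existing indices by name: every index whose name matches the pattern is shown together under that pattern.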
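The relationship between the daily index name and an index pattern can be illustrated with ordinary shell wildcards. This is an analogy rather than Kibana's implementation: the function names are hypothetical, shell globbing stands in for the `*` wildcard of an index pattern, and the date is taken in UTC on the assumption that the `%{+YYYY.MM.dd}` part of the index name is formatted from the event timestamp in UTC.

```shell
# Build the index name that index => "logstash-file-test-%{+YYYY.MM.dd}"
# would produce for an event stamped right now.
todays_index_name() {
  printf 'logstash-file-test-%s\n' "$(date -u +%Y.%m.%d)"
}

# Return 0 when index name $1 matches wildcard pattern $2.
matches_index_pattern() {
  case "$1" in
    $2) return 0 ;;
    *)  return 1 ;;
  esac
}

# Usage:
# matches_index_pattern "$(todays_index_name)" 'logstash-file-test-*' \
#   && echo "today's index falls under this index pattern"
```

Because a new index is created each day, a pattern such as `logstash-file-test-*` keeps matching the new indices without further changes in Kibana.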

This completes the file -> Logstash -> Elasticsearch data flow setup.