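Resource types

Kubernetes can limit two kinds of resources: CPU and memory.

CPU units:

- A positive real number gives the number of CPUs allocated. It may be fractional: 0.5 means half a CPU, that is, half of one CPU's time, and 2 means two CPUs.

- A positive integer followed by m gives millicores, where 1000m = 1, so 500m is equivalent to 0.5.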

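Memory units:

- A plain positive integer is a number of bytes.

- k, M, G, T, P are the decimal suffixes (powers of 1000): kilobyte, megabyte, gigabyte, terabyte, petabyte.

- Ki, Mi, Gi, Ti, Pi are the binary suffixes (powers of 1024): kibibyte, mebibyte, gibibyte, tebibyte, pebibyte.

- Suffixes are case-sensitive. A lowercase m means milli, as it does for CPU, so 400m of memory is 0.4 bytes, not 400 megabytes.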
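The unit rules above can be checked with a small converter. This is a minimal sketch, not the parser Kubernetes itself uses; the function name `parse_quantity` is hypothetical, and only the plain-number and suffix forms listed above are handled.

```python
from fractions import Fraction

# Suffix multipliers for resource quantities (binary and decimal families).
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50}
DECIMAL = {"m": Fraction(1, 1000), "k": 10**3, "M": 10**6,
           "G": 10**9, "T": 10**12, "P": 10**15}


def parse_quantity(text: str) -> Fraction:
    """Convert a quantity string such as '500m' or '64Mi' to an exact number."""
    text = text.strip()
    # Try the two-letter binary suffixes first, then the one-letter decimal ones.
    for table in (BINARY, DECIMAL):
        for suffix, factor in table.items():
            if text.endswith(suffix):
                return Fraction(text[: -len(suffix)]) * factor
    return Fraction(text)  # no suffix: plain CPUs or plain bytes


print(parse_quantity("500m"))   # 1/2
print(parse_quantity("64Mi"))   # 67108864
```

Using `Fraction` keeps 500m exactly equal to 0.5 and avoids floating-point rounding when quantities are added up later.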

1: Resource requests and limits for Pods and containers

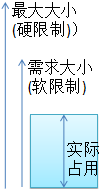

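In Kubernetes, resource settings are applied to the containers inside a Pod. There are two kinds: limits set the upper bound, and requests set the baseline amount reserved for the container. The fields are:

spec.containers[].resources.limits.cpu '//CPU upper bound'

spec.containers[].resources.limits.memory '//memory upper bound'

spec.containers[].resources.requests.cpu '//baseline CPU reserved at creation'

spec.containers[].resources.requests.memory '//baseline memory reserved at creation'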

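1.1: Write the YAML file and create the Pod

Here is an example. The following Pod has two containers. Each container requests 0.25 CPU and 64 MiB (2^26 bytes) of memory, and each is limited to 0.5 CPU and 128 MiB of memory. The Pod as a whole therefore requests 0.5 CPU and 128 MiB of memory, and is limited to 1 CPU and 256 MiB of memory.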

[root@master shuai]# vim shuai.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: frontend
    spec:
      containers:
      - name: db                    # first container
        image: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "password"
        resources:                  # resource settings
          requests:
            memory: "64Mi"          # baseline memory: 64Mi
            cpu: "250m"             # baseline CPU: 25% of one CPU
          limits:
            memory: "128Mi"         # memory upper bound: 128Mi
            cpu: "500m"             # CPU upper bound: 50% of one CPU
      - name: wp                    # second container
        image: wordpress
        resources:
          requests:
            memory: "64Mi"          # baseline memory: 64Mi
            cpu: "250m"             # baseline CPU: 25% of one CPU
          limits:
            memory: "128Mi"         # memory upper bound: 128Mi
            cpu: "500m"             # CPU upper bound: 50% of one CPU
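The Pod-level totals quoted before the manifest are the sums of the per-container values. A minimal sketch of that arithmetic, with the two containers written out as plain dictionaries; the helper names `millicores`, `mebibytes`, and `pod_totals` are hypothetical, and only the `m` and `Mi` suffixes used in this manifest are handled:

```python
def millicores(cpu: str) -> int:
    """'250m' -> 250, '1' -> 1000."""
    return int(cpu[:-1]) if cpu.endswith("m") else int(float(cpu) * 1000)


def mebibytes(memory: str) -> int:
    """'64Mi' -> 64. Other suffixes are out of scope for this sketch."""
    if not memory.endswith("Mi"):
        raise ValueError(f"unsupported memory quantity: {memory!r}")
    return int(memory[:-2])


# The resources of the two containers in the frontend Pod.
containers = [
    {"name": "db", "requests": {"cpu": "250m", "memory": "64Mi"},
     "limits": {"cpu": "500m", "memory": "128Mi"}},
    {"name": "wp", "requests": {"cpu": "250m", "memory": "64Mi"},
     "limits": {"cpu": "500m", "memory": "128Mi"}},
]


def pod_totals(containers: list) -> dict:
    """Sum requests and limits over all containers of a Pod."""
    return {
        kind: {
            "cpu_m": sum(millicores(c[kind]["cpu"]) for c in containers),
            "memory_Mi": sum(mebibytes(c[kind]["memory"]) for c in containers),
        }
        for kind in ("requests", "limits")
    }


print(pod_totals(containers))
```

The result is 500m CPU and 128Mi memory requested, with limits of 1000m (1 CPU) and 256Mi.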

- Create the Pod from the YAML file

[root@master shuai]# kubectl apply -f shuai.yaml

'//view the Pod details'

kubectl describe pod frontend

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 38s default-scheduler Successfully assigned default/frontend to 20.0.0.43

Normal Pulling 37s kubelet, 20.0.0.43 pulling image "mysql"

Normal Pulled 2s kubelet, 20.0.0.43 Successfully pulled image "mysql"

Normal Created 2s kubelet, 20.0.0.43 Created container

Normal Started 2s kubelet, 20.0.0.43 Started container

Normal Pulling 2s kubelet, 20.0.0.43 pulling image "wordpress"

'//the containers were created on node2'

'//list the Pods'

[root@master shuai]# kubectl get pods

NAME READY STATUS RESTARTS AGE

frontend 0/2 ContainerCreating 0 1s

- Check the resource allocation on the node

[root@master shuai]# kubectl describe nodes 20.0.0.43

....output omitted....

Non-terminated Pods: (1 in total) '//per-Pod requests and limits'

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits

--------- ---- ------------ ---------- --------------- -------------

default frontend 500m (12%) 1 (25%) 128Mi (3%) 256Mi (6%)

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 500m (12%) 1 (25%)

memory 128Mi (3%) 256Mi (6%)

Events: <none>
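The percentages in the output above are consistent with each amount being divided by the node's allocatable capacity and truncated to a whole number. A minimal sketch, assuming the node has 4 allocatable CPUs (4000m); the source does not state the node size, so both that figure and the truncation are assumptions, and `percent_of_allocatable` is a hypothetical helper:

```python
def percent_of_allocatable(amount_m: int, allocatable_m: int) -> int:
    """Whole-number percentage of the node capacity, truncated."""
    return amount_m * 100 // allocatable_m


ALLOCATABLE_CPU_M = 4000  # assumption: 4 allocatable CPUs on the node

print(percent_of_allocatable(500, ALLOCATABLE_CPU_M))   # CPU requests: 12
print(percent_of_allocatable(1000, ALLOCATABLE_CPU_M))  # CPU limits: 25
```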

- Check the containers on the node

[root@node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6de02857802f mysql "docker-entrypoint.s…" 29 seconds ago Exited (137) 24 seconds ago k8s_db_frontend_default_d63eaeb1-0d46-11eb-a2d8-000c2984c1e3_2

f87aad7c178d wordpress "docker-entrypoint.s…" About a minute ago Up About a minute k8s_wp_frontend_default_d63eaeb1-0d46-11eb-a2d8-000c2984c1e3_0

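2: Pod restart policy

The restart policy (restartPolicy) defines what happens after a container in the Pod fails or exits.

1. Always: always restart the container after it terminates. This is the default policy.

2. OnFailure: restart the container only when it exits abnormally (non-zero exit code).

3. Never: never restart the container after it terminates.

Note: Kubernetes has no operation that restarts a Pod object itself. "Restart" here means the kubelet restarts the Pod's containers in place on the same node.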
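The three policies reduce to a small decision on the container's exit code. A minimal sketch of that rule; `should_restart` is a hypothetical name used for illustration, not a Kubernetes API:

```python
def should_restart(policy: str, exit_code: int) -> bool:
    """Decide whether a terminated container is restarted under a restartPolicy."""
    if policy == "Always":
        return True                # restart regardless of the exit code
    if policy == "OnFailure":
        return exit_code != 0      # restart only after an abnormal exit
    if policy == "Never":
        return False               # leave the container terminated
    raise ValueError(f"unknown restartPolicy: {policy!r}")


for policy in ("Always", "OnFailure", "Never"):
    print(policy, should_restart(policy, 0), should_restart(policy, 3))
```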

2.1: Check the Pod's restart policy

[root@master shuai]# kubectl edit pod frontend

restartPolicy: Always '//the default restart policy is Always'

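2.11: Write a YAML file

[root@master shuai]# vim shuai2.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: foo
    spec:
      containers:
      - name: busybox               # minimal image with basic shell utilities
        image: busybox
        args:                       # arguments passed to the container
        - /bin/sh                   # run a shell
        - -c                        # execute the following command string
        - sleep 30; exit 3          # sleep 30 seconds, then exit abnormally with code 3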

2.12: Create the Pod

[root@master shuai]# kubectl apply -f shuai2.yaml

'//-w watches for status changes'

[root@master shuai]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

foo 0/1 ContainerCreating 0 10s

foo 1/1 Running 0 17s

foo 0/1 Error 0 46s

foo 1/1 Running 1 50s

foo 0/1 Error 1 80s '//after the restart it sleeps 30 seconds and exits again'

'//the restart count is now 2'

^C[root@master shuai]# kubectl get pods

NAME READY STATUS RESTARTS AGE

foo 1/1 Running 2 104s

2.13: Change the restart policy in shuai2.yaml to Never

    apiVersion: v1
    kind: Pod
    metadata:
      name: foo
    spec:
      containers:
      - name: busybox
        image: busybox
        args:
        - /bin/sh
        - -c
        - sleep 10; exit 3          # sleep time shortened to 10 seconds
      restartPolicy: Never          # never restart the container

'//delete the existing Pod and recreate it from the updated file'

[root@master shuai]# kubectl delete -f shuai2.yaml

[root@master shuai]# kubectl apply -f shuai2.yaml

[root@master shuai]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

foo 1/1 Running 0 17s

foo 0/1 Error 0 26s '//no restart happens, so the setting works'

^C[root@master shuai]#

'//the status is Error because the container exits with code 3; without the non-zero exit code it would show Completed'
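The link between the exit code and the displayed status can be reproduced locally without a cluster. A minimal sketch that runs the same kind of shell command and maps its exit code the way the output above reads; `run_and_classify` is a hypothetical helper, the sleep is shortened to zero, and `/bin/sh` is assumed to exist on the machine:

```python
import subprocess


def run_and_classify(script: str):
    """Run a shell script and label its exit code as Completed or Error."""
    code = subprocess.run(["/bin/sh", "-c", script]).returncode
    status = "Completed" if code == 0 else "Error"
    return code, status


print(run_and_classify("sleep 0; exit 3"))  # (3, 'Error')
print(run_and_classify("sleep 0"))          # (0, 'Completed')
```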

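3: Configuring health checks with readiness and liveness probes

Pod health checks are performed by probes. Several probes can be defined on the same container.

There are two types of probe:

1. Liveness probe (livenessProbe)

Determines whether the container is alive (running). If the check fails, the kubelet kills the container and then acts according to the Pod's restartPolicy.

- If a container defines no liveness probe, the kubelet treats the probe result as always Success.

2. Readiness probe (readinessProbe)

- Determines whether the container's service is ready to accept requests. If the check fails, Kubernetes removes the Pod from the Service endpoints, and adds it back to the Endpoints list once the Pod returns to the Ready state. This ensures that clients accessing the Service are not forwarded to Pod instances that cannot serve them.

- The Endpoints object is the list of Pod addresses that the Service load-balances across.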

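3.1: The three probe check methods

1. exec (the most common): runs a command inside the container. An exit code of 0 means success; any other exit code counts as a failure and triggers the probe's failure action.

2. httpGet: sends an HTTP GET request. A status code of at least 200 and below 400 means success.

3. tcpSocket: tries to open a TCP connection to the given port. An established connection means success.

Note: these rules can be defined together on the same container.

If a livenessProbe check fails, the kubelet kills the container and acts according to the Pod's restartPolicy.

If a readinessProbe check fails, Kubernetes removes the Pod from the Service endpoints.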
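The success criteria of the three check methods can be approximated with the Python standard library. This is a rough sketch of what each check tests, not the kubelet's implementation; the function names are hypothetical, the checks run on the local machine rather than inside a container, and `urllib` follows redirects on its own, which may differ from the kubelet's handling.

```python
import socket
import subprocess
import urllib.error
import urllib.request


def exec_check(command: list) -> bool:
    """exec: success when the command exits with status 0."""
    try:
        return subprocess.run(command, capture_output=True).returncode == 0
    except OSError:          # command not found or not executable
        return False


def http_get_check(url: str, timeout: float = 1.0) -> bool:
    """httpGet: success when the status code is >= 200 and < 400."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):   # includes 4xx/5xx and refused connections
        return False


def tcp_socket_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """tcpSocket: success when a TCP connection can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


print(exec_check(["cat", "/tmp/healthy"]))  # True only while the file exists
```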

3.2: Checking with exec

- Create the Pod from the following YAML file

[root@master shuai]# vim shuai3.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 30
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5    # wait 5 seconds before the first probe
          periodSeconds: 5          # probe every 5 seconds

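'//The Pod in this file has a single container. The periodSeconds field tells the kubelet to run the liveness probe every 5 seconds, and initialDelaySeconds tells it to wait 5 seconds before the first probe. To run a probe, the kubelet executes cat /tmp/healthy inside the target container. If the command succeeds it returns 0, and the kubelet considers the container alive and healthy. If it returns a non-zero value, the kubelet kills the container and restarts it.'

- When the container starts, it runs the following command

/bin/sh -c "touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 30"

For the first 30 seconds of the container's life the file /tmp/healthy exists, so during that time cat /tmp/healthy returns a success code. After 30 seconds, cat /tmp/healthy returns a failure code.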
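The timing described above can be traced with a small simulation. A minimal sketch under idealized assumptions: probes fire exactly on schedule, the file counts as missing from second 30 onward, and the container is killed after 3 consecutive failures. The manifest does not set failureThreshold, so 3 is the Kubernetes default, not a value from the file, and `first_kill_time` is a hypothetical helper.

```python
def first_kill_time(healthy_until: int, initial_delay: int, period: int,
                    failure_threshold: int = 3) -> int:
    """Return the second at which consecutive probe failures reach the threshold."""
    failures = 0
    t = initial_delay
    while True:
        healthy = t < healthy_until          # the file exists only before removal
        failures = 0 if healthy else failures + 1
        if failures >= failure_threshold:
            return t
        t += period


# initialDelaySeconds: 5, periodSeconds: 5, file removed after 30 seconds
print(first_kill_time(healthy_until=30, initial_delay=5, period=5))  # 40
```

Probes at 5 to 25 seconds succeed, those at 30, 35, and 40 seconds fail, so the kill happens around second 40 in this model. Real clusters show some drift around that figure.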

- Create the Pod

[root@master shuai]# kubectl apply -f shuai3.yaml

'//check the status'

liveness-exec 0/1 ContainerCreating 0 9s

liveness-exec 1/1 Running 0 17s

liveness-exec 1/1 Running 1 47s '//the container restarted'

'//list the Pods'

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

liveness-exec 1/1 Running 2 2m48s

View the Pod details

[root@master shuai]# kubectl describe pod liveness-exec

'//the events below cover creation, start, and so on'

Normal Scheduled 5m29s default-scheduler Successfully assigned default/liveness-exec to 20.0.0.43

Normal Pulled 2m42s (x3 over 5m13s) kubelet, 20.0.0.43 Successfully pulled image "busybox"

Normal Created 2m42s (x3 over 5m13s) kubelet, 20.0.0.43 Created container

Normal Started 2m42s (x3 over 5m13s) kubelet, 20.0.0.43 Started container

Warning Unhealthy 117s (x9 over 4m42s) kubelet, 20.0.0.43 Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Normal Pulling 102s (x4 over 5m29s) kubelet, 20.0.0.43 pulling image "busybox"

Normal Killing 26s (x4 over 4m13s) kubelet, 20.0.0.43 Killing container with id docker://liveness:Container failed liveness probe.. Container will be killed and recreated.

3.3: Checking with httpGet

- Another kind of liveness probe uses an HTTP GET request.

- Write the YAML file and create the Pod.

'//first delete the existing Pod'

[root@master shuai]# kubectl delete -f shuai3.yaml

'//write the YAML file'

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-http
    spec:
      containers:
      - name: nginx
        image: nginx
        # args:
        # - /server
        livenessProbe:
          httpGet:                  # probe method
            path: /healthz
            port: 8080
            httpHeaders:
            - name: Custom-Header
              value: Awesome
          initialDelaySeconds: 3
          periodSeconds: 3

'//create the Pod'

[root@master shuai]# kubectl apply -f shuai3.yaml

pod/liveness-http created

'//check the status'

[root@master shuai]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

liveness-http 0/1 ContainerCreating 0 3s

liveness-http 1/1 Running 0 17s

^C

[root@master shuai]# kubectl get pods

NAME READY STATUS RESTARTS AGE

liveness-http 1/1 Running 0 28s

- After 10 seconds, view the Pod events to verify that the liveness probe failed and the container was restarted:

Normal Scheduled 3m default-scheduler Successfully assigned default/liveness-http to 20.0.0.43

Normal Pulled 2m1s (x3 over 2m44s) kubelet, 20.0.0.43 Successfully pulled image "nginx"

Normal Created 2m1s (x3 over 2m44s) kubelet, 20.0.0.43 Created container

Normal Started 2m1s (x3 over 2m44s) kubelet, 20.0.0.43 Started container

Normal Pulling 112s (x4 over 3m) kubelet, 20.0.0.43 pulling image "nginx"

Warning Unhealthy 112s (x9 over 2m40s) kubelet, 20.0.0.43 Liveness probe failed: Get http://172.17.5.2:8080/healthz: dial tcp 172.17.5.2:8080: connect: connection refused

Normal Killing 112s (x3 over 2m34s) kubelet, 20.0.0.43 Killing container with id docker://nginx:Container failed liveness probe.. Container will be killed and recreated.

3.4: Defining a TCP liveness probe

The third type of liveness probe uses a TCP socket. With this configuration, the kubelet tries to open a socket to the container on the specified port. If it can establish a connection, the container is considered healthy; if not, it is considered failed.

Write the YAML file and create the Pod

[root@master shuai]# vim shuai4.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-tcp
      labels:
        app: liveness-tcp
    spec:
      containers:
      - name: liveness-tcp
        image: nginx
        ports:
        - containerPort: 80
        readinessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 15

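The TCP check configuration is very similar to the HTTP check. This example uses both a readiness probe and a liveness probe. The kubelet sends the first readiness probe 5 seconds after the container starts. The probe tries to connect to the liveness-tcp container on the port set in tcpSocket.port. If the probe succeeds, the Pod is marked as ready. The kubelet keeps running this check every 10 seconds.

In addition to the readiness probe, this configuration includes a liveness probe. The kubelet runs the first liveness probe 15 seconds after the container starts. Like the readiness probe, it tries to connect to the liveness-tcp container on the configured port. If the liveness probe fails, the container is restarted.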
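The two probe schedules described above can be listed side by side. A minimal sketch with idealized timing; `probe_times` is a hypothetical helper, and the 10-second period used for the liveness probe is the Kubernetes default for periodSeconds, since the manifest does not set it for that probe.

```python
from itertools import count, islice


def probe_times(initial_delay: int, period: int = 10):
    """Return an iterator over the seconds after container start at which a probe fires."""
    return count(initial_delay, period)


readiness = list(islice(probe_times(5, 10), 3))  # initialDelaySeconds: 5, periodSeconds: 10
liveness = list(islice(probe_times(15), 3))      # initialDelaySeconds: 15, default period

print("readiness:", readiness)  # readiness: [5, 15, 25]
print("liveness:", liveness)    # liveness: [15, 25, 35]
```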

'//create the Pod and check its status'

[root@master shuai]# kubectl apply -f shuai4.yaml

pod/liveness-tcp created

[root@master shuai]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

liveness-http 0/1 CrashLoopBackOff 6 6m43s

liveness-tcp 0/1 ContainerCreating 0 5s

liveness-tcp 0/1 Running 0 17s

[root@master shuai]# kubectl get pods

NAME READY STATUS RESTARTS AGE

liveness-tcp 0/1 Running 0 18s

- After 15 seconds, view the Pod events to verify the liveness probe

[root@master shuai]# kubectl describe pod liveness-tcp

....output omitted.........

Normal Scheduled 103s default-scheduler Successfully assigned default/liveness-tcp to 20.0.0.43

Warning Unhealthy 20s (x7 over 80s) kubelet, 20.0.0.43 Readiness probe failed: dial tcp 172.17.5.2:8080: connect: connection refused

Normal Pulling 19s (x2 over 102s) kubelet, 20.0.0.43 pulling image "nginx"

Warning Unhealthy 19s (x3 over 59s) kubelet, 20.0.0.43 Liveness probe failed: dial tcp 172.17.5.2:8080: connect: connection refused

Normal Killing 19s kubelet, 20.0.0.43 Killing container with id docker://liveness-tcp:Container failed liveness probe.. Container will be killed and recreated.

Normal Pulled 3s (x2 over 87s) kubelet, 20.0.0.43 Successfully pulled image "nginx"

Normal Created 3s (x2 over 87s) kubelet, 20.0.0.43 Created container

Normal Started 3s (x2 over 87s) kubelet, 20.0.0.43 Started container