
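Kubernetes, the most disruptive container orchestration technology of recent years, is widely used in enterprise production environments. Compared with the docker-swarm orchestration of a few years ago, Kubernetes manages containers from a higher level, which makes projects more portable and the architecture easier to extend.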

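In production, cluster high availability matters most. A test environment can run with a single master, but production needs at least two masters and two worker nodes. If one master goes down, the kubelet on each node can then still reach the apiserver and the other control-plane components on the remaining master.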

Lab environment

Server plan

| Hostname | IP address | Resources | Services deployed |
|---|---|---|---|
| nginx01 | 20.0.0.49 | 2 GB RAM, 4 CPUs | nginx, keepalived |
| nginx02 | 20.0.0.50 | 2 GB RAM, 4 CPUs | nginx, keepalived |
| VIP | 20.0.0.100 | virtual IP | |
| master | 20.0.0.41 | 1 GB RAM, 2 CPUs | apiserver, scheduler, controller-manager, etcd |
| master2 | 20.0.0.44 | 1 GB RAM, 2 CPUs | apiserver, scheduler, controller-manager |
| node01 | 20.0.0.42 | 2 GB RAM, 4 CPUs | kubelet, kube-proxy, docker, flannel, etcd |
| node02 | 20.0.0.43 | 2 GB RAM, 4 CPUs | kubelet, kube-proxy, docker, flannel, etcd |

1: Deploying master2

[root@localhost ~]# hostnamectl set-hostname master2

[root@localhost ~]# su

[root@master2 ~]# iptables -F

[root@master2 ~]# setenforce 0

[root@master2 ~]# systemctl stop NetworkManager && systemctl disable NetworkManager
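`setenforce 0` only lasts until the next reboot, and `iptables -F` is not persistent either. The sketch below makes the SELinux change survive a reboot. It assumes the standard CentOS 7 config file `/etc/selinux/config`. The function name `persist_selinux_permissive` is hypothetical. It takes the file path as an argument, so you can try it on a copy first:

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=permissive in an SELinux config file so
# that the effect of "setenforce 0" survives a reboot. On CentOS 7 the real
# file is /etc/selinux/config.
persist_selinux_permissive() {
    cfg="$1"
    if [ ! -f "$cfg" ]; then
        echo "no such file: $cfg" >&2
        return 1
    fi
    # Rewrite only the SELINUX= line; SELINUXTYPE= does not match the pattern.
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$cfg"
}

# Usage (as root): persist_selinux_permissive /etc/selinux/config
```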

- Copy master's Kubernetes directory (binaries, config files and certificates) to master2

[root@master ~]# scp -r /opt/kubernetes/ root@20.0.0.44:/opt/

# verify on master2

[root@master2 ~]# ls /opt/kubernetes/

bin cfg ssl

- Copy the systemd unit files of the three master components to master2

[root@master ~]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@20.0.0.44:/usr/lib/systemd/system/

root@20.0.0.44's password: '//enter the password'

kube-apiserver.service 100% 282 161.5KB/s 00:00

kube-controller-manager.service 100% 317 439.0KB/s 00:00

kube-scheduler.service 100% 281 347.7KB/s 00:00

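- On master2, edit the apiserver options file. Set --bind-address and --advertise-address to master2's own IP (20.0.0.44) and leave --etcd-servers unchanged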

[root@master2 ~]# cd /opt/kubernetes/cfg/

[root@master2 cfg]# ls

kube-apiserver kube-controller-manager kube-scheduler token.csv

[root@master2 cfg]# vim kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://20.0.0.41:2379,https://20.0.0.42:2379,https://20.0.0.43:2379 \

--bind-address=20.0.0.44 \

--secure-port=6443 \

--advertise-address=20.0.0.44 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

..... (remaining options omitted) .....
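The two address edits above can also be scripted. The sketch below assumes the options file uses the `--flag=value` layout shown above. `set_apiserver_ip` is a hypothetical helper name. It changes only `--bind-address` and `--advertise-address`, so `--etcd-servers` keeps pointing at all three etcd members:

```shell
#!/bin/sh
# Hypothetical helper: point --bind-address and --advertise-address in a
# kube-apiserver options file at the given IP. All other flags, including
# --etcd-servers, are left as they are.
set_apiserver_ip() {
    cfg="$1"
    ip="$2"
    sed -i \
        -e "s/--bind-address=[0-9.]*/--bind-address=$ip/" \
        -e "s/--advertise-address=[0-9.]*/--advertise-address=$ip/" \
        "$cfg"
}

# On master2: set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 20.0.0.44
```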

'//In the previous article, controller-manager was configured to listen only on 127.0.0.1, so its IP does not need to change'

[root@master2 cfg]# vim kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-name=kubernetes \

# kube-scheduler likewise listens on 127.0.0.1, so it is not shown here

- Copy the etcd directory, including its certificates, from master to master2 (master2 must have the etcd certificates to communicate with etcd)

[root@master ~]# scp -r /opt/etcd/ root@20.0.0.44:/opt/

# verify on master2

[root@master2 ~]# ls /opt/etcd/ssl/

ca-key.pem ca.pem server-key.pem server.pem
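Before starting anything on master2, it helps to confirm that every file copied in the steps above actually arrived. The helper name `check_master2_files` is hypothetical. Its file list is simply the one shown in the `ls` output and `scp` commands of this section. The optional argument is a directory prefix, so the check can also be run against a test tree:

```shell
#!/bin/sh
# Hypothetical pre-flight check for master2: print every expected file that is
# missing and return non-zero if any is. $1 is an optional root prefix
# (empty means the real filesystem).
check_master2_files() {
    root="${1:-}"
    missing=0
    for f in \
        /opt/etcd/ssl/ca-key.pem /opt/etcd/ssl/ca.pem \
        /opt/etcd/ssl/server-key.pem /opt/etcd/ssl/server.pem \
        /opt/kubernetes/cfg/kube-apiserver \
        /opt/kubernetes/cfg/kube-controller-manager \
        /opt/kubernetes/cfg/kube-scheduler \
        /opt/kubernetes/cfg/token.csv \
        /usr/lib/systemd/system/kube-apiserver.service \
        /usr/lib/systemd/system/kube-controller-manager.service \
        /usr/lib/systemd/system/kube-scheduler.service
    do
        if [ ! -f "$root$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on master2: check_master2_files && echo "all files present"
```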

- Start the three component services on master2

'//start the service'

[root@master2 cfg]# systemctl start kube-apiserver.service

'//check the service status'

[root@master2 cfg]# systemctl status kube-apiserver.service

[root@master2 cfg]# systemctl start kube-controller-manager.service

[root@master2 cfg]# systemctl status kube-controller-manager.service

[root@master2 cfg]# systemctl start kube-scheduler.service

[root@master2 cfg]# systemctl status kube-scheduler.service
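The commands above only start the services, so they will not come back after a reboot. The sketch below enables and starts them in dependency order. The apiserver goes first because controller-manager and scheduler connect to it at 127.0.0.1:8080. The `SYSTEMCTL` override is an illustrative convenience for a dry run (`SYSTEMCTL=echo`), not a systemd feature. `enable`, `start` and `is-active` are separate calls so that each step reports its own failure:

```shell
#!/bin/sh
# Enable and start the three control-plane services on master2, apiserver
# first. SYSTEMCTL can be overridden (e.g. SYSTEMCTL=echo) for a dry run;
# that variable is an illustrative convenience, not part of systemd.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

start_control_plane() {
    for svc in kube-apiserver kube-controller-manager kube-scheduler; do
        $SYSTEMCTL enable "$svc.service" || return 1
        $SYSTEMCTL start "$svc.service" || return 1
        # is-active exits non-zero if the unit did not come up
        $SYSTEMCTL is-active "$svc.service" || return 1
    done
}

# Usage (as root on master2): start_control_plane
```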

- Add the Kubernetes binaries to PATH and check the cluster

[root@master2 cfg]# echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

[root@master2 cfg]# source /etc/profile

[root@master2 cfg]# kubectl get node '//list all nodes in the cluster'

NAME STATUS ROLES AGE VERSION

20.0.0.42 Ready <none> 2d19h v1.12.3

20.0.0.43 Ready <none> 2d16h v1.12.3

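2: Load balancing the apiservers for high availability

Introduction

Service: a Service is a virtual object that logically proxies a set of backend pods. Pods are short-lived and their state is unstable. When a pod fails, its replacement gets a new IP, and anything that reached the old pod by IP can no longer reach it. A Service fronts the pods with a fixed IP and port, and that ip:port pair is associated with the backend pods automatically. When the pods change, Kubernetes updates the association internally, so the Service keeps matching the new pods. Because the Service IP is fixed, users no longer need to care which pod they reach or whether it has been replaced, which greatly improves service reliability. If an RC (ReplicationController) creates several replicas of a pod, the Service proxies all of those identical pods and kube-proxy load-balances across them. The load balancing set up in this section works at a different level: nginx forwards TCP traffic to the two apiservers, and keepalived floats a VIP (20.0.0.100) between the two nginx hosts.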

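- When the single-master cluster was deployed, every address used here was already added to the hosts list of the apiserver certificate: 20.0.0.41 (master), 20.0.0.44 (master2), 20.0.0.100 (VIP), 20.0.0.49 (nginx master) and 20.0.0.50 (nginx backup)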

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"20.0.0.41",

"20.0.0.44",

"20.0.0.100",

"20.0.0.49",

"20.0.0.50",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
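A missing address in `hosts` only surfaces later, as an x509 "certificate is valid for ..., not ..." error when a client connects through that address. The sketch below is a pre-flight check with the hypothetical helper name `check_csr_hosts`. It only greps for each quoted address in the file. That is enough for a list of IPs, but it is not a JSON parser:

```shell
#!/bin/sh
# Hypothetical check: report every address that does not appear as a quoted
# string in the CSR file. Returns non-zero if any is missing.
check_csr_hosts() {
    csr="$1"; shift
    rc=0
    for h in "$@"; do
        # Match the quoted entry so that 20.0.0.4 does not match "20.0.0.41".
        if ! grep -qF "\"$h\"" "$csr"; then
            echo "not in hosts: $h"
            rc=1
        fi
    done
    return "$rc"
}

# check_csr_hosts server-csr.json 20.0.0.41 20.0.0.44 20.0.0.100 20.0.0.49 20.0.0.50
```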

nginx01:20.0.0.49

nginx02:20.0.0.50

2.1: Install nginx on nginx01 and nginx02

'//only nginx01 is shown; the steps are identical on both hosts'

[root@nginx01 ~]# iptables -F

[root@nginx01 ~]# setenforce 0

# configure the yum repository

[root@nginx01 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

[root@nginx01 ~]# yum clean all

# install nginx

[root@nginx01 ~]# yum install nginx -y

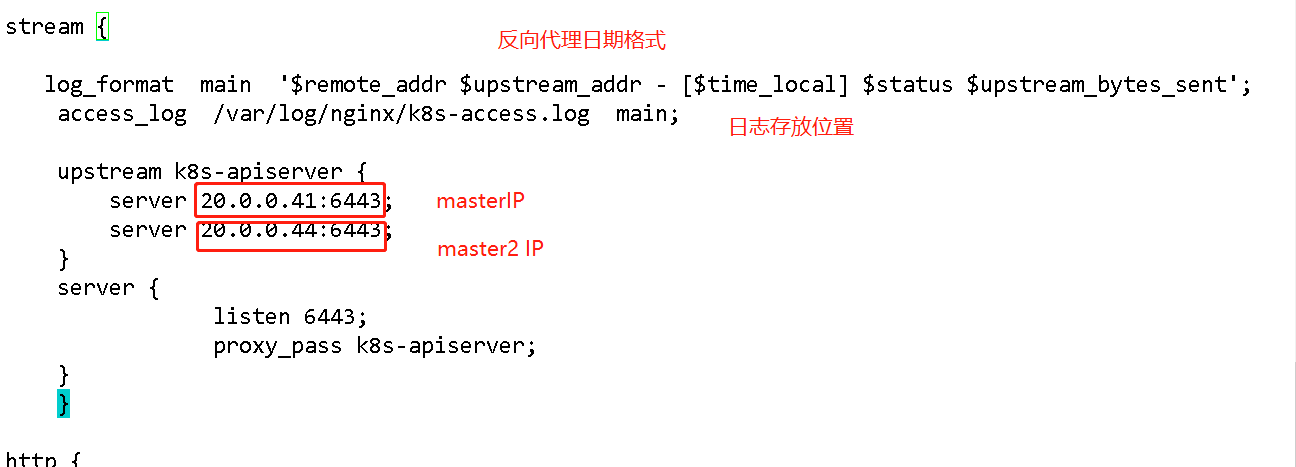

- Edit the nginx configuration and add layer-4 (TCP) forwarding

vim /etc/nginx/nginx.conf

events {

worker_connections 1024;

}

# ---------- add the following block at the top level (outside http {}) ----------

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 20.0.0.41:6443;

server 20.0.0.44:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

# ---------- end of the added block ----------

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;
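If a third master is added later, its address has to go into the `upstream` block on both nginx hosts. The sketch below prints the `stream {}` block shown above for any list of apiserver endpoints. `gen_stream_block` is a hypothetical name. Its output still has to be placed at the top level of `nginx.conf`, outside `http {}`, as above:

```shell
#!/bin/sh
# Hypothetical generator for the stream{} block above: one "server" line per
# endpoint argument (host:port), everything else as in the listing.
gen_stream_block() {
    echo 'stream {'
    echo "    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';"
    echo '    access_log /var/log/nginx/k8s-access.log main;'
    echo '    upstream k8s-apiserver {'
    for ep in "$@"; do
        echo "        server $ep;"
    done
    echo '    }'
    echo '    server {'
    echo '        listen 6443;'
    echo '        proxy_pass k8s-apiserver;'
    echo '    }'
    echo '}'
}

# gen_stream_block 20.0.0.41:6443 20.0.0.44:6443
```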

- Start nginx

[root@nginx01 ~]# nginx -t '//check the configuration syntax'

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@nginx01 ~]# systemctl start nginx '//start the service'

[root@nginx01 ~]# netstat -ntap | grep nginx '//check the listening ports: 6443 is now open'

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 66366/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 66366/nginx: master
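To check the listener from a script instead of by eye, the sketch below reads `netstat -ntl` (or `ss -lnt`) output on stdin. `is_listening` is a hypothetical helper. It relies on the local address being the fourth column in the output of both tools:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the netstat/ss listing on stdin shows a
# local address ending in ":<port>" (4th column in both tools' output).
is_listening() {
    awk -v port="$1" '$4 ~ (":" port "$") { found = 1 } END { exit !found }'
}

# netstat -ntl | is_listening 6443 && echo "nginx is listening on 6443"
```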

2.2: Deploy keepalived on the nginx hosts

[The procedure is identical on both hosts; only nginx01 is shown]

'//install keepalived'

[root@nginx01 ~]# yum -y install keepalived

'//edit the configuration file'

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# notification recipients

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/usr/local/nginx/sbin/check_nginx.sh"

}

vrrp_instance VI_1 {

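state MASTER # set to BACKUP on nginx02

interface eth33 # replace with this host's real NIC name (check with ip a)

virtual_router_id 51 # VRRP router ID: identical on both nodes, unique per VRRP instance

priority 100 # priority; set to 90 on nginx02

advert_int 1 # VRRP advertisement interval in seconds (default 1)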

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

20.0.0.100/24

}

track_script {

check_nginx

}

}
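nginx02's file differs from nginx01's only in the two values the comments above call out, `state` and `priority`. The sketch below derives one from the other and assumes nginx01's file matches the listing above. `make_backup_conf` is a hypothetical helper name. It prints to stdout rather than editing in place:

```shell
#!/bin/sh
# Hypothetical helper: print nginx02's keepalived.conf derived from nginx01's.
# Only state and priority change; virtual_router_id, auth_pass and the VIP
# must stay identical on both nodes.
make_backup_conf() {
    sed -e 's/state MASTER/state BACKUP/' \
        -e 's/priority 100/priority 90/' \
        "$1"
}

# make_backup_conf keepalived.conf.nginx01 > keepalived.conf.nginx02
```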

2.3: Create the health-check script and start the service

- Same steps on nginx01 and nginx02

[root@nginx01 ~]# mkdir /usr/local/nginx/sbin/ -p

[root@nginx01 ~]# vim /usr/local/nginx/sbin/check_nginx.sh

#!/bin/bash

# count nginx processes, ignoring grep itself and this script (matched by its PID, $$)

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

'//make the script executable'

[root@nginx01 ~]# chmod +x /usr/local/nginx/sbin/check_nginx.sh

'//start the service and check its status'

[root@nginx01 ~]# systemctl start keepalived.service

[root@nginx01 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor pr

Active: active (running) since Fri 2020-10-02 17:46:54 CST; 4min 17s ago

Process: 10783 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited,

Main PID: 10784 (keepalived)

----- output omitted -----

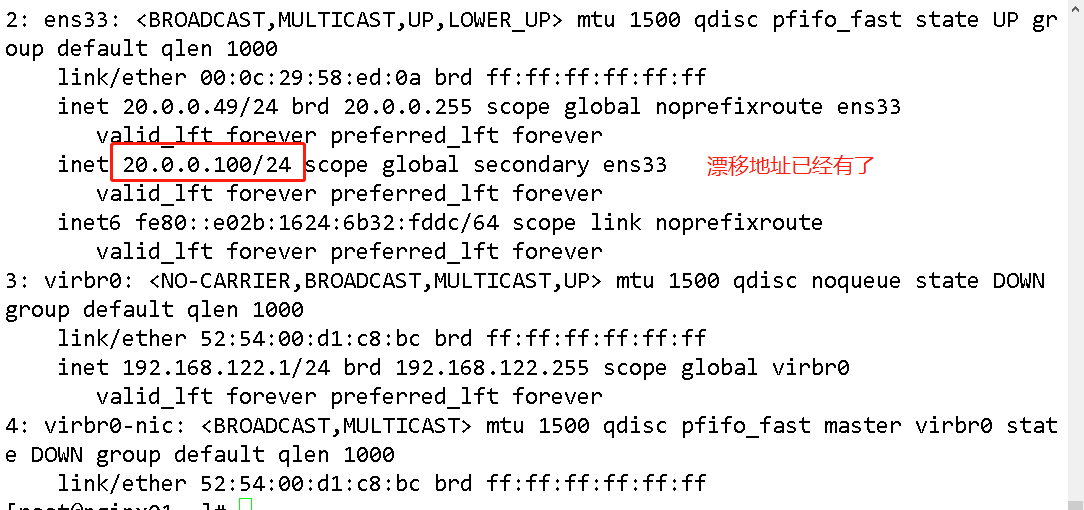

2.4: Check the VIP on nginx01

[root@nginx01 ~]# ip a

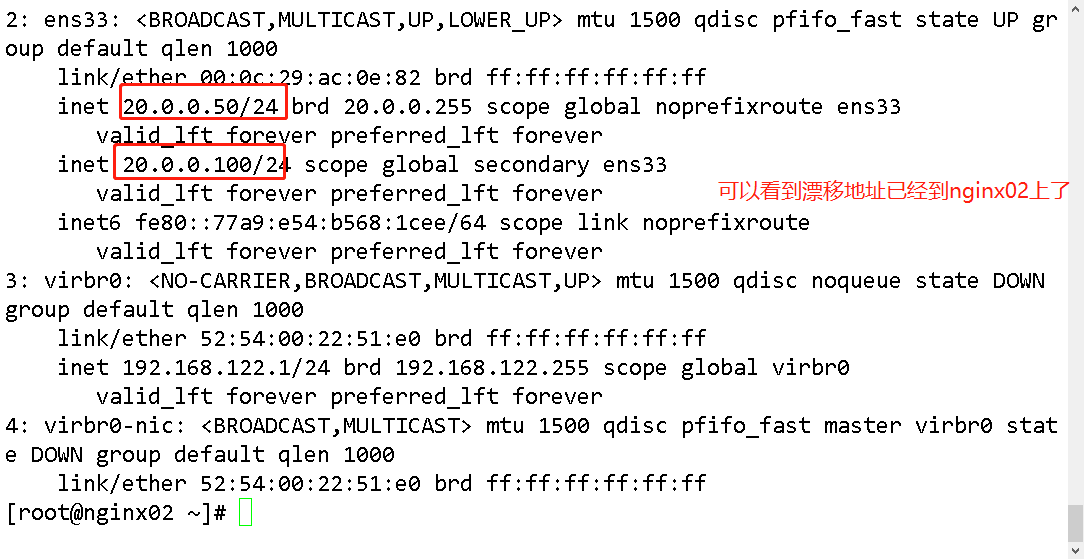
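Instead of reading the `ip a` output by eye, the sketch below checks whether the VIP is bound on the current host. `has_vip` is a hypothetical helper that reads `ip -4 -o addr show` output on stdin. Running it on both nginx hosts during the failover test in 2.5 shows which one holds 20.0.0.100:

```shell
#!/bin/sh
# Hypothetical helper: succeed if "ip -4 -o addr show" output on stdin has an
# "inet <vip>/<prefix>" entry on any interface.
has_vip() {
    awk -v vip="$1" '
        { for (i = 1; i < NF; i++)
              if ($i == "inet" && index($(i + 1), vip "/") == 1) found = 1 }
        END { exit !found }'
}

# ip -4 -o addr show | has_vip 20.0.0.100 && echo "VIP is on $(hostname)"
```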

2.5: Verify VIP failover

[root@nginx01 ~]# pkill nginx '//kill nginx'

[root@nginx01 ~]# systemctl status keepalived '//keepalived has stopped, which shows the check script works'

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)

# check the VIP: it should now be on nginx02

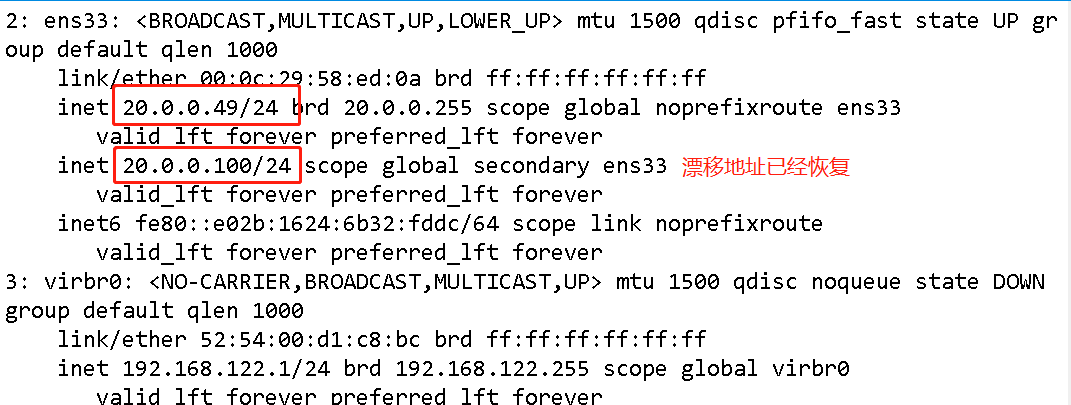

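2.6: Restore the VIP

'//first restart nginx'

[root@nginx01 ~]# systemctl restart nginx

'//then restart keepalived'

[root@nginx01 ~]# systemctl restart keepalived.service

# check the VIP again: nginx01 has the higher priority, so it should take the VIP back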

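2.7: Point the nodes at the VIP (bootstrap.kubeconfig, kubelet.kubeconfig, kube-proxy.kubeconfig)

'//only node1 is shown; node2 is the same'

[root@node1 ~]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

'//change the server address to the VIP'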

server: https://20.0.0.100:6443

[root@node1 ~]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

server: https://20.0.0.100:6443

[root@node1 ~]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

server: https://20.0.0.100:6443

# verify the changes (shown on node2)

[root@node2 ~]# cd /opt/kubernetes/cfg/

[root@node2 cfg]# grep 100 *

bootstrap.kubeconfig: server: https://20.0.0.100:6443

kubelet.kubeconfig: server: https://20.0.0.100:6443

kube-proxy.kubeconfig: server: https://20.0.0.100:6443

# restart the services

[root@node2 cfg]# systemctl restart kubelet.service

[root@node2 cfg]# systemctl restart kube-proxy.service
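The three manual edits can be done in one pass on each node. The sketch below assumes every kubeconfig has a single `server:` line, as shown above. `point_to_vip` is a hypothetical helper name. It rewrites whatever apiserver address the line had before:

```shell
#!/bin/sh
# Hypothetical helper: rewrite the "server:" line of each kubeconfig given
# after the VIP so it points at https://<vip>:6443, keeping the indentation.
point_to_vip() {
    vip="$1"; shift
    sed -i "s#^\([[:space:]]*server:\).*#\1 https://$vip:6443#" "$@"
}

# cd /opt/kubernetes/cfg
# point_to_vip 20.0.0.100 bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
# systemctl restart kubelet.service kube-proxy.service
```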

- Check nginx's k8s access log on nginx01

'//requests from both nodes are being proxied to both apiservers'

[root@nginx01 ~]# tail /var/log/nginx/k8s-access.log

20.0.0.42 20.0.0.41:6443 - [02/Oct/2020:18:19:39 +0800] 200 1115

20.0.0.42 20.0.0.44:6443 - [02/Oct/2020:18:19:39 +0800] 200 1115

20.0.0.43 20.0.0.44:6443 - [02/Oct/2020:18:19:45 +0800] 200 1114

20.0.0.43 20.0.0.44:6443 - [02/Oct/2020:18:19:45 +0800] 200 1115
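With more traffic, counting entries per upstream is quicker than reading the log. The sketch below assumes the `log_format main` defined in the nginx `stream` block, with the client address first and the upstream address second. `upstream_counts` is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: print "<requests> <upstream>" per apiserver from the
# stream access log (files as arguments, or stdin), sorted by upstream.
upstream_counts() {
    awk '{ n[$2]++ } END { for (u in n) print n[u], u }' "$@" | sort -k2
}

# upstream_counts /var/log/nginx/k8s-access.log
```

On the four sample lines above, it reports 1 request to 20.0.0.41:6443 and 3 to 20.0.0.44:6443.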

- Test from master: create a pod

[root@master ~]# kubectl run nginx --image=nginx

'//check on node2: the scheduler places the pod on only one of the nodes'

[root@node2 cfg]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 7e4d58f0e5f3 3 weeks ago 133MB

centos 7 7e6257c9f8d8 7 weeks ago 203MB

'//check the status on master'

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-js4rs 1/1 Running 0 48s '//created and running'

- View the pod logs

[root@master ~]# kubectl logs nginx-dbddb74b8-js4rs

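Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy) ( pods/log nginx-dbddb74b8-js4rs)

'//The request is refused. It reaches the kubelet as the anonymous user system:anonymous, which is not allowed to read logs, so that user has to be bound to a role'

'//bind the user to a role (this gives cluster-admin to every unauthenticated request, so it is only acceptable in a lab)'

[root@master ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

'//now the logs can be viewed'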

[root@master ~]# kubectl logs nginx-dbddb74b8-js4rs

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Configuration complete; ready for start up
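Binding `cluster-admin` fixes the error but grants far more than log access. A narrower sketch binds the built-in `system:kubelet-api-admin` ClusterRole instead, which covers the `nodes/proxy` access named in the error. The binding name `kubelet-api-anonymous` and the `KUBECTL` dry-run override are illustrative assumptions. This still gives anonymous callers access to the kubelet API. The cleaner fix is to give the apiserver a kubelet client certificate (`--kubelet-client-certificate` / `--kubelet-client-key`) and bind that identity instead:

```shell
#!/bin/sh
# Hypothetical narrower alternative to the cluster-admin binding above.
# KUBECTL can be set to "echo" to print the command instead of running it.
KUBECTL="${KUBECTL:-kubectl}"

bind_kubelet_api() {
    $KUBECTL create clusterrolebinding kubelet-api-anonymous \
        --clusterrole=system:kubelet-api-admin \
        --user=system:anonymous
}

# If the broad binding was already created, remove it first:
#   kubectl delete clusterrolebinding cluster-system-anonymous
# then run: bind_kubelet_api
```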

# check the pod network

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-js4rs 1/1 Running 0 56m 172.17.5.2 20.0.0.43 <none>

- The pod IP can be accessed directly from the node on the matching subnet

[root@node2 cfg]# curl 172.17.5.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# the request produces a log entry

Back on master, the access record now appears:

[root@master ~]# kubectl logs nginx-dbddb74b8-js4rs

172.17.5.1 - - [02/Oct/2020:11:22:43 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"

The end. Comments and discussion are welcome.