Detailed walkthrough: deploying Fabric with Docker and a multi-machine Kafka configuration.


The steps for building a multi-machine, Kafka-based Fabric network with Docker are as follows.

Distributed node plan:

node1: kafka1 zookeeper1 orderer1

node2: kafka2 zookeeper2 orderer2 peer1

node3: kafka3 zookeeper3 orderer3 peer2

node4: kafka4 peer3

Network IP configuration on every node (vim /etc/hosts):

172.27.34.201 orderer1.trace.com zookeeper1 kafka1

172.27.34.202 orderer2.trace.com zookeeper2 kafka2 peer0.org1.trace.com org1.trace.com

172.27.34.203 orderer3.trace.com zookeeper3 kafka3 peer1.org2.trace.com org2.trace.com

172.27.34.204 kafka4 peer2.org3.trace.com org3.trace.com
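These four entries must be present on every node. Below is a minimal sketch for adding them idempotently. It assumes a POSIX shell and root access to /etc/hosts. The function names `fabric_hosts` and `add_fabric_hosts` are hypothetical.

```shell
#!/bin/sh
# fabric_hosts: print the cluster's hosts entries (the four lines above).
fabric_hosts() {
  cat <<'EOF'
172.27.34.201 orderer1.trace.com zookeeper1 kafka1
172.27.34.202 orderer2.trace.com zookeeper2 kafka2 peer0.org1.trace.com org1.trace.com
172.27.34.203 orderer3.trace.com zookeeper3 kafka3 peer1.org2.trace.com org2.trace.com
172.27.34.204 kafka4 peer2.org3.trace.com org3.trace.com
EOF
}

# add_fabric_hosts [FILE]: append each entry to FILE (default /etc/hosts)
# only if that exact line is not already present, so re-running is safe.
add_fabric_hosts() {
  hosts_file=${1:-/etc/hosts}
  fabric_hosts | while IFS= read -r line; do
    grep -qxF "$line" "$hosts_file" 2>/dev/null || printf '%s\n' "$line" >> "$hosts_file"
  done
}
```

Run `add_fabric_hosts` as root on each node. A second run adds nothing.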

Requirements

fabric v1.1

docker (17.06.2-ce or later)

docker-compose (1.14.0 or later)

go (1.9.x or later)
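Below is a small sketch for checking the installed versions against these minimums. It assumes `sort -V` (version sort, as in GNU coreutils) is available. `version_ge` and `check_requirements` are hypothetical helper names.

```shell
#!/bin/sh
# version_ge A B: succeed if version A >= version B.
# Suffixes such as "-ce" are dropped; ordering uses `sort -V`.
version_ge() {
  a=${1%%-*}
  b=${2%%-*}
  [ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n 1)" = "$b" ]
}

# check_requirements: report any tool that is missing or below the minimum.
check_requirements() {
  docker_v=$(docker version --format '{{.Server.Version}}' 2>/dev/null)
  compose_v=$(docker-compose version --short 2>/dev/null)
  go_v=$(go version 2>/dev/null | awk '{print $3}')
  go_v=${go_v#go}
  rc=0
  version_ge "$docker_v" 17.06.2 || { echo "docker '$docker_v' missing or older than 17.06.2"; rc=1; }
  version_ge "$compose_v" 1.14.0 || { echo "docker-compose '$compose_v' missing or older than 1.14.0"; rc=1; }
  version_ge "$go_v" 1.9 || { echo "go '$go_v' missing or older than 1.9"; rc=1; }
  return "$rc"
}
```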

Installing Docker

Remove any previously installed version:

sudo yum remove docker docker-common docker-selinux docker-engine

Install some dependencies:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Download and install the rpm package:

sudo yum install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-17.06.2.ce-1.el7.centos.x86_64.rpm

Updating Go:

rm -rf /usr/local/go/

wget https://dl.google.com/go/go1.12.linux-amd64.tar.gz
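The downloaded archive still has to be unpacked and put on the PATH. The sketch below follows the standard Go tarball installation: extract into /usr/local, then add /usr/local/go/bin to PATH. The `run` wrapper and `install_go` are hypothetical. `run` only prints the commands unless DRY_RUN=0.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# install_go [TARBALL]: unpack the Go archive into /usr/local,
# which creates /usr/local/go (removed beforehand with rm -rf above).
install_go() {
  tarball=${1:-go1.12.linux-amd64.tar.gz}
  run sudo tar -C /usr/local -xzf "$tarball"
}
```

After `DRY_RUN=0 install_go`, add `export PATH=$PATH:/usr/local/go/bin` to the shell profile. Then confirm the result with `go version`.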

Pulling the Docker images

docker pull registry.docker-cn.com/hyperledger/fabric-peer:x86_64-1.1.0

docker pull registry.docker-cn.com/hyperledger/fabric-orderer:x86_64-1.1.0

docker pull registry.docker-cn.com/hyperledger/fabric-tools:x86_64-1.1.0

docker pull hyperledger/fabric-couchdb

docker pull hyperledger/fabric-ca

docker pull hyperledger/fabric-ccenv

docker pull hyperledger/fabric-baseos

docker pull hyperledger/fabric-kafka

docker pull hyperledger/fabric-zookeeper

Retag the images:

docker tag b7bfddf508bc hyperledger/fabric-tools:latest

docker tag ce0c810df36a hyperledger/fabric-orderer:latest

docker tag b023f9be0771 hyperledger/fabric-peer:latest

Remove the now-duplicate mirror tags:

docker rmi registry.docker-cn.com/hyperledger/fabric-tools:x86_64-1.1.0

docker rmi registry.docker-cn.com/hyperledger/fabric-orderer:x86_64-1.1.0

docker rmi registry.docker-cn.com/hyperledger/fabric-peer:x86_64-1.1.0
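Tagging by image ID means copying IDs by hand. As an alternative, the pull, tag and remove steps can be combined into one loop that tags by repository name. This is a sketch with the hypothetical helpers `run` (prints the commands unless DRY_RUN=0) and `retag_from_mirror`.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

MIRROR=registry.docker-cn.com/hyperledger
TAG=x86_64-1.1.0

# For each image: pull from the mirror, tag it by name as
# hyperledger/<name>:latest, then remove only the mirror tag
# (the image itself stays because it still carries the :latest tag).
retag_from_mirror() {
  for name in fabric-peer fabric-orderer fabric-tools; do
    run docker pull "$MIRROR/$name:$TAG"
    run docker tag "$MIRROR/$name:$TAG" "hyperledger/$name:latest"
    run docker rmi "$MIRROR/$name:$TAG"
  done
}
```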

Save the images and send them to the other nodes:

On node1:

mkdir -p /data/fabric-images-1.1.0-release

docker save 5b31d55f5f3a > /data/fabric-images-1.1.0-release/fabric-ccenv.tar

docker save 1a804ab74f58 > /data/fabric-images-1.1.0-release/fabric-ca.tar

docker save d36da0db87a4 > /data/fabric-images-1.1.0-release/fabric-zookeeper.tar

docker save a3b095201c66 > /data/fabric-images-1.1.0-release/fabric-kafka.tar

docker save f14f97292b4c > /data/fabric-images-1.1.0-release/fabric-couchdb.tar

docker save 75f5fb1a0e0c > /data/fabric-images-1.1.0-release/fabric-baseos.tar

docker save b7bfddf508bc > /data/fabric-images-1.1.0-release/fabric-tools.tar

docker save ce0c810df36a > /data/fabric-images-1.1.0-release/fabric-orderer.tar

docker save b023f9be0771 > /data/fabric-images-1.1.0-release/fabric-peer.tar

cd /data/fabric-images-1.1.0-release

scp -r ./* USER@NODE_IP:/tmp/docker/fabric-images/

On node2, node3 and node4 (from /tmp/docker/fabric-images/):

docker load < fabric-baseos.tar

docker tag 75f5fb1a0e0c hyperledger/fabric-baseos:latest

docker load < fabric-ca.tar

docker tag 1a804ab74f58 hyperledger/fabric-ca:latest

docker load < fabric-ccenv.tar
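The same load-and-tag pattern applies to every archive copied from node1. Because node1 saved each image by ID, the loaded archives carry no repository name, so each image's tag has to be re-applied. The sketch below loads all nine archives and tags them, using the image IDs from the `docker save` commands on node1. It assumes the archives sit in /tmp/docker/fabric-images. The `run` wrapper and `load_fabric_images` are hypothetical.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# name:image-ID pairs, taken from the docker save commands on node1.
IMAGES="fabric-ccenv:5b31d55f5f3a fabric-ca:1a804ab74f58 fabric-zookeeper:d36da0db87a4
fabric-kafka:a3b095201c66 fabric-couchdb:f14f97292b4c fabric-baseos:75f5fb1a0e0c
fabric-tools:b7bfddf508bc fabric-orderer:ce0c810df36a fabric-peer:b023f9be0771"

# load_fabric_images [DIR]: load each <name>.tar from DIR and tag its
# image ID as hyperledger/<name>:latest.
load_fabric_images() {
  dir=${1:-/tmp/docker/fabric-images}
  for entry in $IMAGES; do
    name=${entry%%:*}
    id=${entry#*:}
    run docker load -i "$dir/$name.tar"
    run docker tag "$id" "hyperledger/$name:latest"
  done
}
```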

node1:

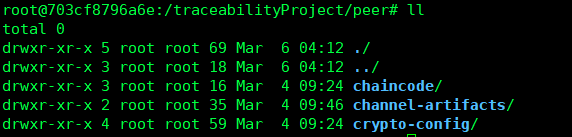

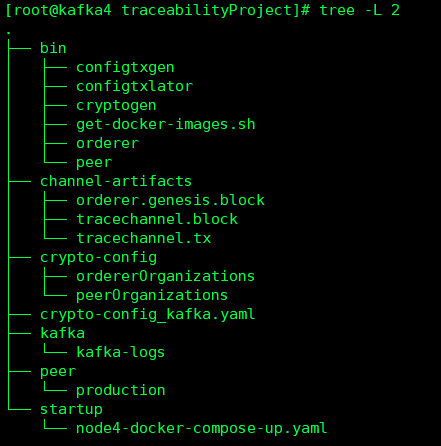

All of the following operations are performed under /traceabilityProject.

Download the binaries (v1.1):

1. Configuration file setup:

Generate the crypto material (certificates and keys) the nodes need:

./bin/cryptogen generate --config=./crypto-config_kafka.yaml

2. Generate the genesis block:

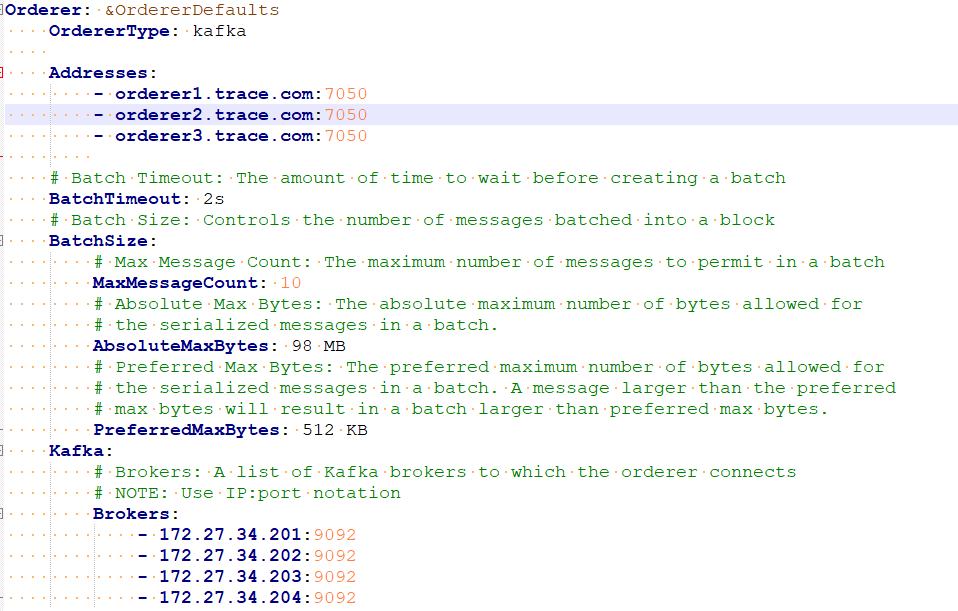

Changed part (cluster addresses):
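In configtx.yaml, the cluster addresses are normally set in the Orderer section. There, OrdererType is switched to kafka and every orderer and Kafka broker is listed. The sketch below prints such a fragment for this cluster. The port numbers are assumptions: 7050 for the orderers, as in the peer commands later on, and Kafka's default 9092 for the brokers. The other keys of the section (BatchTimeout, BatchSize, Organizations and so on) stay as in the original file. `orderer_section` is a hypothetical name.

```shell
#!/bin/sh
# orderer_section: print the Kafka-related part of the Orderer section
# of configtx.yaml for this cluster (3 orderers, 4 brokers).
# Assumed ports: 7050 for orderers, 9092 (Kafka default) for brokers.
orderer_section() {
  printf '%s\n' 'Orderer: &OrdererDefaults' \
    '    OrdererType: kafka' \
    '    Addresses:'
  for i in 1 2 3; do
    printf '        - orderer%s.trace.com:7050\n' "$i"
  done
  printf '%s\n' '    Kafka:' '        Brokers:'
  for i in 1 2 3 4; do
    printf '            - kafka%s:9092\n' "$i"
  done
}
```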

Place the configtx file in /traceabilityProject and run the following from that directory:

(the file must be renamed to configtx.yaml)

export FABRIC_CFG_PATH=$PWD

mkdir ./channel-artifacts

./bin/configtxgen -profile TestOrgsOrdererGenesis -outputBlock ./channel-artifacts/orderer.genesis.block

3. Generate the channel configuration transaction:

./bin/configtxgen -profile TestOrgsChannel -outputCreateChannelTx ./channel-artifacts/tracechannel.tx -channelID tracechannel
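Before moving on, confirm that both artifacts were written. The function below is a minimal check; the name `check_artifacts` is hypothetical. For a closer look, `./bin/configtxgen -inspectBlock ./channel-artifacts/orderer.genesis.block` prints the decoded genesis block.

```shell
#!/bin/sh
# check_artifacts [DIR]: verify that configtxgen produced both files
# in DIR (default ./channel-artifacts) and that neither is empty.
check_artifacts() {
  dir=${1:-./channel-artifacts}
  status=0
  for f in orderer.genesis.block tracechannel.tx; do
    if [ -s "$dir/$f" ]; then
      echo "ok: $dir/$f"
    else
      echo "missing or empty: $dir/$f" >&2
      status=1
    fi
  done
  return "$status"
}
```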

4. Starting the environment and configuring files

Note: once a container is destroyed, its network and data are gone. That is unacceptable in a production environment, so the data must be persisted.

Components whose data must be persisted: Orderer, Peer, Kafka, ZooKeeper

Add the following to the docker-compose startup files:

Orderer:

    environment:
      - ORDERER_FILELEDGER_LOCATION=/traceabilityProject/orderer/fileLedger
    volumes:
      - /traceabilityProject/orderer/fileLedger:/traceabilityProject/orderer/fileLedger

Peer:

    environment:
      - CORE_PEER_FILESYSTEMPATH=/traceabilityProject/peer/production
    volumes:
      - /traceabilityProject/peer/production:/traceabilityProject/peer/production

Kafka:

    environment:
      # Kafka data must be persisted as well
      - KAFKA_LOG.DIRS=/traceabilityProject/kafka/kafka-logs
    # data mount path
    volumes:
      - /traceabilityProject/kafka/kafka-logs:/traceabilityProject/kafka/kafka-logs

zookeeper:

    volumes:
      # ZooKeeper stores its data in /data and /datalog by default
      - /traceabilityProject/zookeeper1/data:/data
      - /traceabilityProject/zookeeper1/datalog:/datalog

The full configuration is shown in the yaml file below:

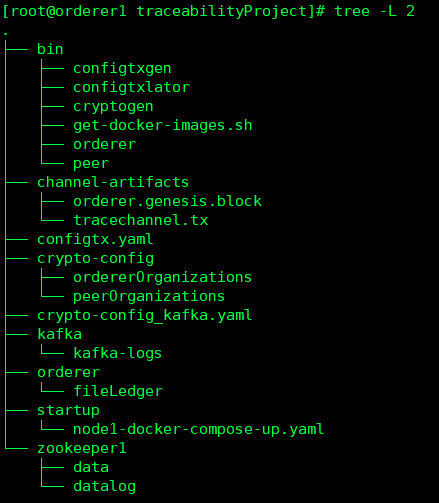

node1:

Also create the three folders kafka, orderer and zookeeper to store the data persistently:

docker-compose -f ./node1-docker-compose-up.yaml up -d

To stop the containers, use:

docker-compose -f ./node1-docker-compose-up.yaml down

Note:

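Copy the generated configuration files into the directories listed under volumes in the compose file (these directories must not be empty!)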

(

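The genesis block must be mounted as a file, not a directory. When the host-side file is missing at start-up, Docker creates an empty directory at that path instead, so replace it manually with the genesis block file: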

- ../channel-artifacts/orderer.genesis.block:/traceabilityProject/orderer.genesis.block

)
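The check below catches the directory-instead-of-file problem before running `up` again. `check_genesis_mount` is a hypothetical helper. Its argument is the host side of the genesis block volume entry.

```shell
#!/bin/sh
# check_genesis_mount PATH: succeed only if PATH (host side of the
# genesis block volume) is a non-empty regular file.
check_genesis_mount() {
  path=$1
  if [ -d "$path" ]; then
    # Docker created a directory because the file was missing at start-up.
    echo "$path is a directory: remove it and copy orderer.genesis.block there" >&2
    return 1
  elif [ -f "$path" ] && [ -s "$path" ]; then
    echo "ok: $path"
  else
    echo "$path is missing or empty" >&2
    return 1
  fi
}
```

For example, run it from the directory containing the compose file: `check_genesis_mount ../channel-artifacts/orderer.genesis.block`.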

Copy the working directory /traceabilityProject to the other nodes:

scp -r /traceabilityProject/ USER@NODE2_IP:/

scp -r /traceabilityProject/ USER@NODE3_IP:/

scp -r /traceabilityProject/ USER@NODE4_IP:/
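The three copies can also be scripted. The sketch assumes node2 to node4 are 172.27.34.202 to 172.27.34.204, as in /etc/hosts. The SSH account is not given in the original, so `SSH_USER` is a placeholder (defaulting to root here). `run` and `copy_project` are hypothetical.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Placeholder account name; set SSH_USER to the real one before use.
SSH_USER=${SSH_USER:-root}

# copy_project: copy the working directory to node2, node3 and node4.
copy_project() {
  for ip in 172.27.34.202 172.27.34.203 172.27.34.204; do
    run scp -r /traceabilityProject/ "$SSH_USER@$ip:/"
  done
}
```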

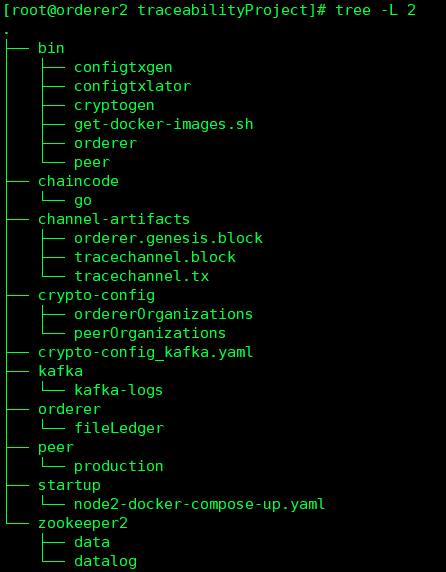

node2:

Create the four folders kafka, orderer, zookeeper and peer to store the data persistently:

docker-compose -f ./node2-docker-compose-up.yaml up -d

docker-compose -f ./node2-docker-compose-up.yaml down --remove-orphans

node2-docker-compose-up.yaml

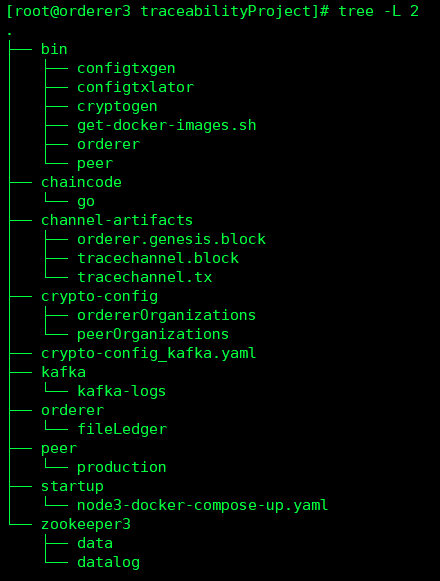

node3:

Create the four folders kafka, orderer, zookeeper and peer to store the data persistently (node3 also runs zookeeper3).

node4:

Create the two folders kafka and peer to store the data persistently.
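The sketch below creates, on each node, the host-side directories used by the volume mounts. It takes the paths from the persistence settings above. It assumes zookeeperN on node N, matching the zookeeper1/ to zookeeper3/ folders removed in the cleanup commands later, and no ZooKeeper on node4. `make_persist_dirs` is a hypothetical name.

```shell
#!/bin/sh
# make_persist_dirs NODE [BASE]: create the host directories that NODE's
# compose file mounts (BASE defaults to /traceabilityProject).
make_persist_dirs() {
  node=$1
  base=${2:-/traceabilityProject}
  case $node in
    node1) subdirs="kafka/kafka-logs orderer/fileLedger zookeeper1/data zookeeper1/datalog" ;;
    node2) subdirs="kafka/kafka-logs orderer/fileLedger peer/production zookeeper2/data zookeeper2/datalog" ;;
    node3) subdirs="kafka/kafka-logs orderer/fileLedger peer/production zookeeper3/data zookeeper3/datalog" ;;
    node4) subdirs="kafka/kafka-logs peer/production" ;;
    *) echo "unknown node: $node" >&2; return 1 ;;
  esac
  for sub in $subdirs; do
    mkdir -p "$base/$sub" || return 1
  done
}
```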

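Caution:

The orderer nodes stay up only after all ZooKeeper and Kafka instances have started. Start the four compose files at about the same time, and expect some delay while the services wait for each other.

(Otherwise the orderer fails with: Cannot post CONNECT message = circuit breaker is open)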
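Since the orderers need every ZooKeeper and Kafka instance up first, a generic retry helper can hold things off until the services answer. This is a sketch; `wait_until` is hypothetical. The example lines in the comments assume a netcat build with `-z` support and the default ports 2181 (ZooKeeper) and 9092 (Kafka).

```shell
#!/bin/sh
# wait_until RETRIES DELAY CMD [ARGS...]
# Runs CMD until it succeeds; gives up with status 1 after RETRIES failed
# attempts, sleeping DELAY seconds between attempts.
wait_until() {
  retries=$1
  delay=$2
  shift 2
  attempt=1
  while ! "$@"; do
    if [ "$attempt" -ge "$retries" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}

# Example, before starting the orderer containers (assumed ports):
#   for h in zookeeper1 zookeeper2 zookeeper3; do wait_until 30 5 nc -z "$h" 2181 || exit 1; done
#   for h in kafka1 kafka2 kafka3 kafka4; do wait_until 30 5 nc -z "$h" 9092 || exit 1; done
```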

5. Peer node operations (transactions, channels, chaincode)

peer1 (node2):

(1) Enter the container

docker exec -it cli bash

Check whether the files are present in the container's working directory. If not, copy them manually from the host into the container:
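Here is how the check and the copy might look with the container named cli, as above. The host and container paths are the ones used in the following steps. `run`, `list_cli_artifacts` and `copy_into_cli` are hypothetical helpers.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# List what the cli container currently sees in its artifacts directory.
list_cli_artifacts() {
  run docker exec cli ls /traceabilityProject/peer/channel-artifacts
}

# Copy the host's artifacts into the container; "SRC/." copies the
# directory's contents rather than the directory itself.
copy_into_cli() {
  run docker cp /traceabilityProject/channel-artifacts/. cli:/traceabilityProject/peer/channel-artifacts/
}
```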

(2) Create the channel

Inside the container:

cd /traceabilityProject/peer/channel-artifacts

peer channel create -o orderer1.trace.com:7050 -c tracechannel -t 50 -f ./tracechannel.tx

(3) Send tracechannel.block to the other nodes:

Because /traceabilityProject/peer/channel-artifacts is a directory mounted into the container, the file already exists on the host machine.

Copy it to the two other peer nodes:

Exit the container and go to the host directory /traceabilityProject/channel-artifacts:

scp -r tracechannel.block USER@NODE3_IP:/traceabilityProject/channel-artifacts

scp -r tracechannel.block USER@NODE4_IP:/traceabilityProject/channel-artifacts

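Because /traceabilityProject/channel-artifacts is mounted into the cli container as a volume, the copied block is also available inside the container on those nodes.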

(4) Join the channel

Enter the container again and go to /traceabilityProject/peer/channel-artifacts:

peer channel join -b tracechannel.block

(

Full restart commands:

docker-compose -f node1-docker-compose-up.yaml down --volumes --remove-orphans

docker volume prune

)

(Even without anchor peers configured, the Fabric network as a whole still runs normally.)

(5) Install the chaincode

peer chaincode install -n cc_producer -v 1.0 -p github.com/hyperledger/fabric/examples/chaincode/go/tireTraceability-Demo/main/

(6) Instantiate the chaincode

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_producer -v 1.0 -c '{"Args":["init"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

peer2 (node3):

docker exec -it cli bash

cd channel-artifacts/

peer channel join -b tracechannel.block

peer chaincode install -n cc_agency -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_agency/main/

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_agency -v 1.0 -c '{"Args":["init"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

peer3 (node4):

docker exec -it cli bash

cd channel-artifacts/

peer channel join -b tracechannel.block

peer chaincode install -n cc_producer -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_producer/main/

peer chaincode install -n cc_agency -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_agency/main/

peer chaincode install -n cc_retailer -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_retailer/main/

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_retailer -v 1.0 -c '{"Args":["init","A","B","C"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

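(Every time the containers are restarted, repeat this process: join the channel and install the chaincode. On the retailer node, install all three chaincodes but instantiate only cc_retailer.)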
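The node4 sequence (join, install three chaincodes, instantiate only cc_retailer) can be kept in one function so it is easy to repeat after each restart. The sketch is meant to run inside the cli container from channel-artifacts/. `run` and `setup_retailer_peer` are hypothetical. The peer commands are the ones listed for peer3 above.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

CC_ROOT=github.com/hyperledger/fabric/chaincode/go/traceability/src
POLICY="OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

# peer3 (node4): join the channel, install all three chaincodes,
# then instantiate only cc_retailer.
setup_retailer_peer() {
  run peer channel join -b tracechannel.block
  for cc in producer agency retailer; do
    run peer chaincode install -n "cc_$cc" -v 1.0 -p "$CC_ROOT/origin_$cc/main/"
  done
  run peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel \
    -n cc_retailer -v 1.0 -c '{"Args":["init","A","B","C"]}' -P "$POLICY"
}
```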

Full restart commands (each node runs the command for its own compose file):

docker-compose -f node1-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node2-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node3-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node4-docker-compose-up.yaml down --volumes --remove-orphans

docker volume prune

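Then delete the persisted data. Run the four lines below on node1, node2, node3 and node4 respectively, inside /traceabilityProject: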

rm -rf kafka/* orderer/* zookeeper1/*

rm -rf kafka/* orderer/* peer/* zookeeper2/*

rm -rf kafka/* orderer/* peer/* zookeeper3/*

rm -rf kafka/* peer/*
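Below is a guarded version of these cleanup commands, as a sketch. It refuses to run if the base directory does not exist, so `rm -rf` is never applied relative to the wrong working directory. `clean_persisted_data` is hypothetical. The per-node folder lists mirror the four lines above.

```shell
#!/bin/sh
# clean_persisted_data NODE [BASE]: empty NODE's persistence folders under
# BASE (default /traceabilityProject), keeping the folders themselves.
clean_persisted_data() {
  node=$1
  base=${2:-/traceabilityProject}
  [ -d "$base" ] || { echo "no such directory: $base" >&2; return 1; }
  case $node in
    node1) folders="kafka orderer zookeeper1" ;;
    node2) folders="kafka orderer peer zookeeper2" ;;
    node3) folders="kafka orderer peer zookeeper3" ;;
    node4) folders="kafka peer" ;;
    *) echo "unknown node: $node" >&2; return 1 ;;
  esac
  for sub in $folders; do
    # Same effect as `rm -rf sub/*` above; ${base:?} aborts if base is empty.
    [ -d "$base/$sub" ] && rm -rf "${base:?}/$sub"/*
  done
  return 0
}
```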

To stop the containers before shutting down the machines:

docker-compose -f /traceabilityProject/startup/node1-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node2-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node3-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node4-docker-compose-up.yaml down