概括

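Conceptually, a byte can represent an integer from 0 to 255. In real-time image applications today, a pixel is usually represented by one byte per channel, although other representations exist.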
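Here is a minimal NumPy sketch of what "one byte per channel" means. It assumes 8-bit images are held as `numpy.uint8` arrays, which is what OpenCV's Python bindings return for ordinary 8-bit images. The 480 x 640 size is an arbitrary example value.

```python
import numpy as np

# An 8-bit channel value is an unsigned byte: 0..255.
info = np.iinfo(np.uint8)
print(info.min, info.max)  # 0 255

h, w = 480, 640  # arbitrary example size
gray = np.zeros((h, w), dtype=np.uint8)    # 1 channel  -> 1 byte per pixel
bgr = np.zeros((h, w, 3), dtype=np.uint8)  # 3 channels -> 3 bytes per pixel
print(gray.nbytes // (h * w))  # 1
print(bgr.nbytes // (h * w))   # 3

# Plain NumPy arithmetic on uint8 wraps around instead of saturating.
wrapped = np.array([250], dtype=np.uint8) + np.uint8(10)
print(wrapped)  # [4]
```

The last line matters for pixel math. NumPy's uint8 arithmetic wraps around, whereas OpenCV functions such as cv2.add saturate at 255, so the two are not interchangeable.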

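In OpenCV's Python interface, an image is a 2D or 3D NumPy array (numpy.ndarray); the C++ counterpart is cv::Mat. An 8-bit grayscale image is a 2D array of byte values. A 24-bit BGR image is a 3D array that also contains byte values.

These values can be accessed with expressions such as image[0, 0] or image[0, 0, 0]:

- The first index is the pixel's y coordinate (row), where 0 is the top.
- The second index is the x coordinate (column), where 0 is the leftmost.
- The third index, if present, is the color channel.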
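The [row, column] order is easy to get backwards. The small NumPy sketch below uses a hypothetical 4-row by 6-column black BGR image; the array name and the chosen coordinates are illustrative only.

```python
import numpy as np

# Hypothetical 4-row x 6-column BGR image, all black.
image = np.zeros((4, 6, 3), dtype=np.uint8)

y, x = 1, 5                  # row 1 (from the top), column 5 (from the left)
image[y, x] = [255, 0, 0]    # index as [row, column]; BGR order, so pure blue

print(image.shape)           # (4, 6, 3): rows, columns, channels
print(image[1, 5].tolist())  # [255, 0, 0]
print(int(image[1, 5, 0]))   # 255: third index 0 selects the blue channel

# A single channel is a 2D array, just like an 8-bit grayscale image.
blue = image[:, :, 0]
print(blue.shape)            # (4, 6)
```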

C++ interface:

1. Processing images

Result (the original image is not shown):

application_trace.cpp


```cpp
#include <iostream>
#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/videoio.hpp>
#include <opencv2/core/utils/trace.hpp>

using namespace cv;
using namespace std;

static void process_frame(const cv::UMat& frame)
{
    CV_TRACE_FUNCTION(); // OpenCV Trace macro for function

    imshow("Live", frame);

    UMat gray, processed;
    cv::cvtColor(frame, gray, COLOR_BGR2GRAY);
    Canny(gray, processed, 32, 64, 3);
    imshow("Processed", processed);
}

int main(int argc, char** argv)
{
    CV_TRACE_FUNCTION();

    cv::CommandLineParser parser(argc, argv,
        "{help h ? | | help message}"
        "{n | 100 | number of frames to process }"
        "{@video | 0 | video filename or cameraID }"
    );
    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }

    VideoCapture capture;
    std::string video = parser.get<string>("@video");
    if (video.size() == 1 && isdigit(video[0]))
        capture.open(parser.get<int>("@video"));
    else
        capture.open(samples::findFileOrKeep(video));  // keep GStreamer pipelines
    int nframes = 0;
    if (capture.isOpened())
    {
        nframes = (int)capture.get(CAP_PROP_FRAME_COUNT);
        cout << "Video " << video <<
            ": width=" << capture.get(CAP_PROP_FRAME_WIDTH) <<
            ", height=" << capture.get(CAP_PROP_FRAME_HEIGHT) <<
            ", nframes=" << nframes << endl;
    }
    else
    {
        cout << "Could not initialize video capturing...\n";
        return -1;
    }

    int N = parser.get<int>("n");
    if (nframes > 0 && N > nframes)
        N = nframes;

    cout << "Start processing..." << endl
        << "Press ESC key to terminate" << endl;

    UMat frame;
    for (int i = 0; N > 0 ? (i < N) : true; i++)
    {
        CV_TRACE_REGION("FRAME"); // OpenCV Trace macro for named "scope" region
        {
            CV_TRACE_REGION("read");
            capture.read(frame);

            if (frame.empty())
            {
                cerr << "Can't capture frame: " << i << std::endl;
                break;
            }

            // OpenCV Trace macro for NEXT named region in the same C++ scope
            // Previous "read" region will be marked complete on this line.
            // Use this to eliminate unnecessary curly braces.
            CV_TRACE_REGION_NEXT("process");
            process_frame(frame);

            CV_TRACE_REGION_NEXT("delay");
            if (waitKey(1) == 27/*ESC*/)
                break;
        }
    }

    return 0;
}
```

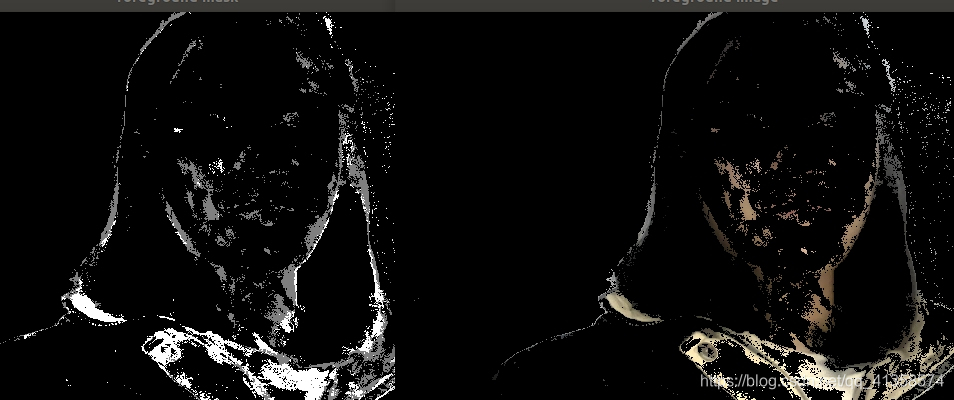
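process_frame above converts each frame with cvtColor(..., COLOR_BGR2GRAY) before running Canny. OpenCV's documentation gives that conversion as Y = 0.299 R + 0.587 G + 0.114 B. The sketch below applies the same weights in NumPy; `bgr_to_gray` is a hypothetical helper name. OpenCV itself uses fixed-point arithmetic, so individual pixels may differ by one from this floating-point version.

```python
import numpy as np

def bgr_to_gray(img):
    """Sketch of BGR -> gray with Y = 0.299 R + 0.587 G + 0.114 B."""
    # Weights listed in B, G, R order to match OpenCV's channel layout.
    weights = np.array([0.114, 0.587, 0.299])
    y = img.astype(np.float64) @ weights  # (H, W, 3) @ (3,) -> (H, W)
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)

# 1 x 4 test image: white, blue, green, red (BGR order).
img = np.array([[[255, 255, 255], [255, 0, 0], [0, 255, 0], [0, 0, 255]]],
               dtype=np.uint8)
gray = bgr_to_gray(img)
print(gray.tolist())  # [[255, 29, 150, 76]]
```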

2. Background segmentation (from the official OpenCV samples)

```cpp
#include "opencv2/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/video.hpp"
#include "opencv2/videoio.hpp"
#include "opencv2/highgui.hpp"
#include <iostream>

using namespace std;
using namespace cv;

int main(int argc, const char** argv)
{
    const String keys = "{c camera | 0 | use video stream from camera (device index starting from 0) }"
                        "{fn file_name | | use video file as input }"
                        "{m method | mog2 | method: background subtraction algorithm ('knn', 'mog2')}"
                        "{h help | | show help message}";
    CommandLineParser parser(argc, argv, keys);
    parser.about("This sample demonstrates background segmentation.");
    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }
    int camera = parser.get<int>("camera");
    String file = parser.get<String>("file_name");
    String method = parser.get<String>("method");
    if (!parser.check())
    {
        parser.printErrors();
        return 1;
    }

    VideoCapture cap;
    if (file.empty())
        cap.open(camera);
    else
    {
        file = samples::findFileOrKeep(file);  // ignore gstreamer pipelines
        cap.open(file.c_str());
    }
    if (!cap.isOpened())
    {
        cout << "Can not open video stream: '" << (file.empty() ? "<camera>" : file) << "'" << endl;
        return 2;
    }

    Ptr<BackgroundSubtractor> model;
    if (method == "knn")
        model = createBackgroundSubtractorKNN();
    else if (method == "mog2")
        model = createBackgroundSubtractorMOG2();
    if (!model)
    {
        cout << "Can not create background model using provided method: '" << method << "'" << endl;
        return 3;
    }

    cout << "Press <space> to toggle background model update" << endl;
    cout << "Press 's' to toggle foreground mask smoothing" << endl;
    cout << "Press ESC or 'q' to exit" << endl;
    bool doUpdateModel = true;
    bool doSmoothMask = false;

    Mat inputFrame, frame, foregroundMask, foreground, background;
    for (;;)
    {
        // prepare input frame
        cap >> inputFrame;
        if (inputFrame.empty())
        {
            cout << "Finished reading: empty frame" << endl;
            break;
        }
        const Size scaledSize(640, 640 * inputFrame.rows / inputFrame.cols);
        resize(inputFrame, frame, scaledSize, 0, 0, INTER_LINEAR);

        // pass the frame to background model
        model->apply(frame, foregroundMask, doUpdateModel ? -1 : 0);

        // show processed frame
        imshow("image", frame);

        // show foreground image and mask (with optional smoothing)
        if (doSmoothMask)
        {
            GaussianBlur(foregroundMask, foregroundMask, Size(11, 11), 3.5, 3.5);
            threshold(foregroundMask, foregroundMask, 10, 255, THRESH_BINARY);
        }
        if (foreground.empty())
            foreground.create(scaledSize, frame.type());
        foreground = Scalar::all(0);
        frame.copyTo(foreground, foregroundMask);
        imshow("foreground mask", foregroundMask);
        imshow("foreground image", foreground);

        // show background image
        model->getBackgroundImage(background);
        if (!background.empty())
            imshow("mean background image", background );

        // interact with user
        const char key = (char)waitKey(30);
        if (key == 27 || key == 'q') // ESC
        {
            cout << "Exit requested" << endl;
            break;
        }
        else if (key == ' ')
        {
            doUpdateModel = !doUpdateModel;
            cout << "Toggle background update: " << (doUpdateModel ? "ON" : "OFF") << endl;
        }
        else if (key == 's')
        {
            doSmoothMask = !doSmoothMask;
            cout << "Toggle foreground mask smoothing: " << (doSmoothMask ? "ON" : "OFF") << endl;
        }
    }
    return 0;
}
```

Result:

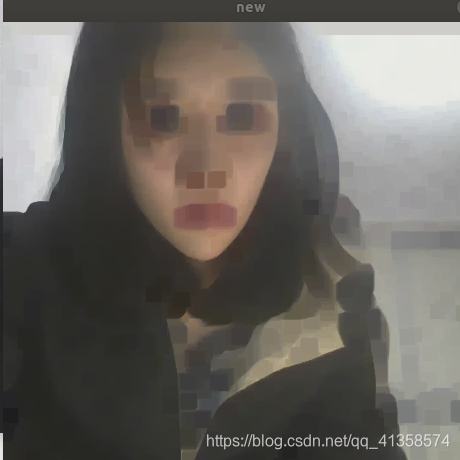
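MOG2 and KNN are fairly involved statistical models. To show the basic idea behind model->apply(frame, mask, learningRate), here is a deliberately simpler stand-in that is not either of those algorithms. It keeps an exponential running average of past frames and marks a pixel as foreground (255) when it differs from that average by more than a threshold.

Assumptions and choices in this sketch:

- The class name, alpha = 0.05 and threshold = 30 are hypothetical.
- Frames are assumed to be single-channel.
- The learning_rate argument loosely mirrors the sample. A negative value uses the default rate, and 0 freezes the model, which is what pressing <space> does there.

```python
import numpy as np

class RunningAverageBackground:
    """Toy background model (hypothetical): exponential running average plus
    a fixed threshold. Not MOG2 or KNN. Expects single-channel frames."""

    def __init__(self, alpha=0.05, threshold=30):
        self.alpha = alpha          # default learning rate (illustrative value)
        self.threshold = threshold  # |frame - background| above this is foreground
        self.background = None

    def apply(self, frame, learning_rate=-1.0):
        f = frame.astype(np.float64)
        if self.background is None:
            self.background = f.copy()  # the first frame initializes the model
        # Foreground mask: 255 where the frame differs strongly from the background.
        diff = np.abs(f - self.background)
        mask = np.where(diff > self.threshold, 255, 0).astype(np.uint8)
        # Negative rate -> default alpha; 0 -> the model is not updated at all.
        rate = self.alpha if learning_rate < 0 else learning_rate
        self.background += rate * (f - self.background)
        return mask

model = RunningAverageBackground()
empty_scene = np.full((4, 4), 50, dtype=np.uint8)
for _ in range(10):
    model.apply(empty_scene)  # learn a static background

scene = empty_scene.copy()
scene[1:3, 1:3] = 200  # an object appears in the middle
mask = model.apply(scene, learning_rate=0)  # frozen model, like pressing <space>
print(mask.tolist())  # 255 in the middle 2x2 block, 0 elsewhere
```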

3. Erosion

```cpp
#include "opencv2/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/highgui.hpp"
#include <iostream>

using namespace std;
using namespace cv;

int main()
{
    Mat img = imread("/home/heziyi/图片/7.jpg");
    if (img.empty()) // imread returns an empty Mat if the file cannot be read
    {
        cerr << "Could not read image" << endl;
        return 1;
    }
    imshow("old", img);

    // 15x15 rectangular structuring element: each output pixel becomes the
    // minimum over its 15x15 neighbourhood, so bright regions shrink
    Mat element = getStructuringElement(MORPH_RECT, Size(15, 15));
    Mat dimg;
    erode(img, dimg, element);

    imshow("new", dimg);
    waitKey(0);
    return 0;
}
```
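What erode computes can be sketched in NumPy. With a rectangular structuring element, each output pixel is the minimum of its neighbourhood, so bright areas shrink and small bright specks disappear. For a BGR image, OpenCV applies the same operation to each channel.

Assumptions and choices in this sketch:

- The input is a 2D (single-channel) image and the kernel size is odd.
- It uses `sliding_window_view`, which needs NumPy 1.20 or newer.
- Padding with 255 keeps pixels outside the image from winning the minimum. As far as I know, this matches the effect of OpenCV's default border value for erosion.
- `erode_rect` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def erode_rect(img, ksize):
    """Erode a 2D uint8 image with a ksize x ksize rectangle (odd ksize)."""
    r = ksize // 2
    # Pad with 255 so pixels outside the image never become the minimum.
    padded = np.pad(img, r, mode="constant", constant_values=255)
    # Each window is the ksize x ksize neighbourhood of one output pixel.
    windows = sliding_window_view(padded, (ksize, ksize))
    return windows.min(axis=(-2, -1))

img = np.zeros((7, 7), dtype=np.uint8)
img[2:5, 2:5] = 255  # a 3x3 bright block
img[0, 6] = 255      # an isolated bright speck
out = erode_rect(img, 3)
print(np.argwhere(out == 255).tolist())  # [[3, 3]]: block shrinks to its centre, speck vanishes
```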

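4. Blur:

```cpp
#include "opencv2/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/highgui.hpp"

using namespace cv;

int main()
{
    Mat img = imread("/home/heziyi/图片/6.jpg");
    if (img.empty()) // file missing or unreadable
        return 1;
    imshow("old", img);

    Mat dimg;
    // Normalized box filter: each pixel becomes the mean of its 5x5 neighbourhood.
    // A 1x1 kernel, i.e. Size(1, 1), would leave the image unchanged.
    blur(img, dimg, Size(5, 5));

    imshow("new", dimg);
    waitKey(0);
    return 0;
}
```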

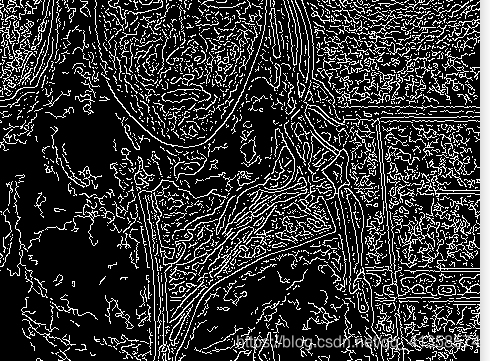
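blur is a normalized box filter: each output pixel is the mean of the ksize x ksize window around it. The NumPy sketch below shows this directly.

Assumptions and choices in this sketch:

- The input is a 2D image and the kernel size is odd.
- `box_blur` is a hypothetical name.
- NumPy's "reflect" padding mode does not repeat the edge pixel. That corresponds to OpenCV's default BORDER_REFLECT_101.

The last call shows why a 1 x 1 kernel leaves an image unchanged.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_blur(img, ksize):
    """Mean filter over a ksize x ksize window (odd ksize), float64 output."""
    r = ksize // 2
    # "reflect" mirrors without repeating the edge pixel (like BORDER_REFLECT_101).
    padded = np.pad(img.astype(np.float64), r, mode="reflect")
    return sliding_window_view(padded, (ksize, ksize)).mean(axis=(-2, -1))

img = np.zeros((5, 5))
img[2, 2] = 9.0  # one bright pixel
blurred = box_blur(img, 3)
print(blurred[1:4, 1:4].tolist())  # [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
print(np.array_equal(box_blur(img, 1), img))  # True: a 1x1 kernel is a no-op
```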

5. Edge detection:

```cpp
#include "opencv2/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/highgui.hpp"

using namespace cv;

int main()
{
    Mat img = imread("/home/heziyi/图片/6.jpg");
    if (img.empty()) // file missing or unreadable
        return 1;
    imshow("old", img);

    Mat grayimg, blurred, edge;
    // imread loads a 3-channel BGR image, so COLOR_BGR2GRAY is the matching code
    cvtColor(img, grayimg, COLOR_BGR2GRAY);
    // smooth with a 3x3 box filter first to suppress noise before edge detection
    blur(grayimg, blurred, Size(3, 3));
    // Canny: low/high hysteresis thresholds 3 and 9, 3x3 Sobel aperture
    Canny(blurred, edge, 3, 9, 3);

    imshow("new", edge);
    waitKey(0);
    return 0;
}
```
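Canny works in stages:

1. It computes the intensity gradient with Sobel filters.
2. It thins edges by non-maximum suppression.
3. It applies hysteresis with the low and high thresholds (3 and 9 in the example above).

The NumPy sketch below covers only the first stage, the gradient magnitude |dI/dx| + |dI/dy|. This is the L1 norm that OpenCV's Canny uses by default (L2gradient = false). `sobel_l1_magnitude` is a hypothetical helper, not an OpenCV function.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def sobel_l1_magnitude(gray):
    """|dI/dx| + |dI/dy| with 3x3 Sobel kernels. Gradient stage only:
    no non-maximum suppression or hysteresis."""
    padded = np.pad(gray.astype(np.float64), 1, mode="reflect")
    win = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    gx = (win * SOBEL_X).sum(axis=(-2, -1))
    gy = (win * SOBEL_Y).sum(axis=(-2, -1))
    return np.abs(gx) + np.abs(gy)

# Vertical step edge: left half 0, right half 10.
gray = np.zeros((5, 6))
gray[:, 3:] = 10.0
mag = sobel_l1_magnitude(gray)
print(mag[0].tolist())  # [0.0, 0.0, 40.0, 40.0, 0.0, 0.0]
```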

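VideoCapture is the C++ interface for capturing video from a camera or a video file. The snippet below captures frames from a camera (or a video file) and displays them.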

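```cpp
#include <opencv2/highgui.hpp>
#include <opencv2/videoio.hpp>
using namespace cv;

int main()
{
    VideoCapture capture(0); // camera index 0; pass a file name to read a video file instead
    if (!capture.isOpened()) return -1;
    // display each frame in a loop
    while (true)
    {
        Mat frame; // stores the current frame
        capture >> frame;
        if (frame.empty()) break; // end of video or camera error
        imshow("picture", frame);
        if (waitKey(30) == 27) break; // wait 30 ms per frame; exit on ESC
    }
    return 0;
}
```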

Python interface:

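Accessing image data with numpy.array

Set the pixel at (0, 0) of a BGR image to white:

```python
import cv2

img = cv2.imread('MyPic.png')  # hypothetical file name; any BGR image works
img[0, 0] = [255, 255, 255]
```

A color channel can be set to 0 by indexing the three-dimensional array. The following code sets every G (green) value in the image to 0:

```python
img[:, :, 1] = 0
cv2.imshow('ppp', img)
cv2.waitKey(30000)  # wait up to 30 s for a key press
```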

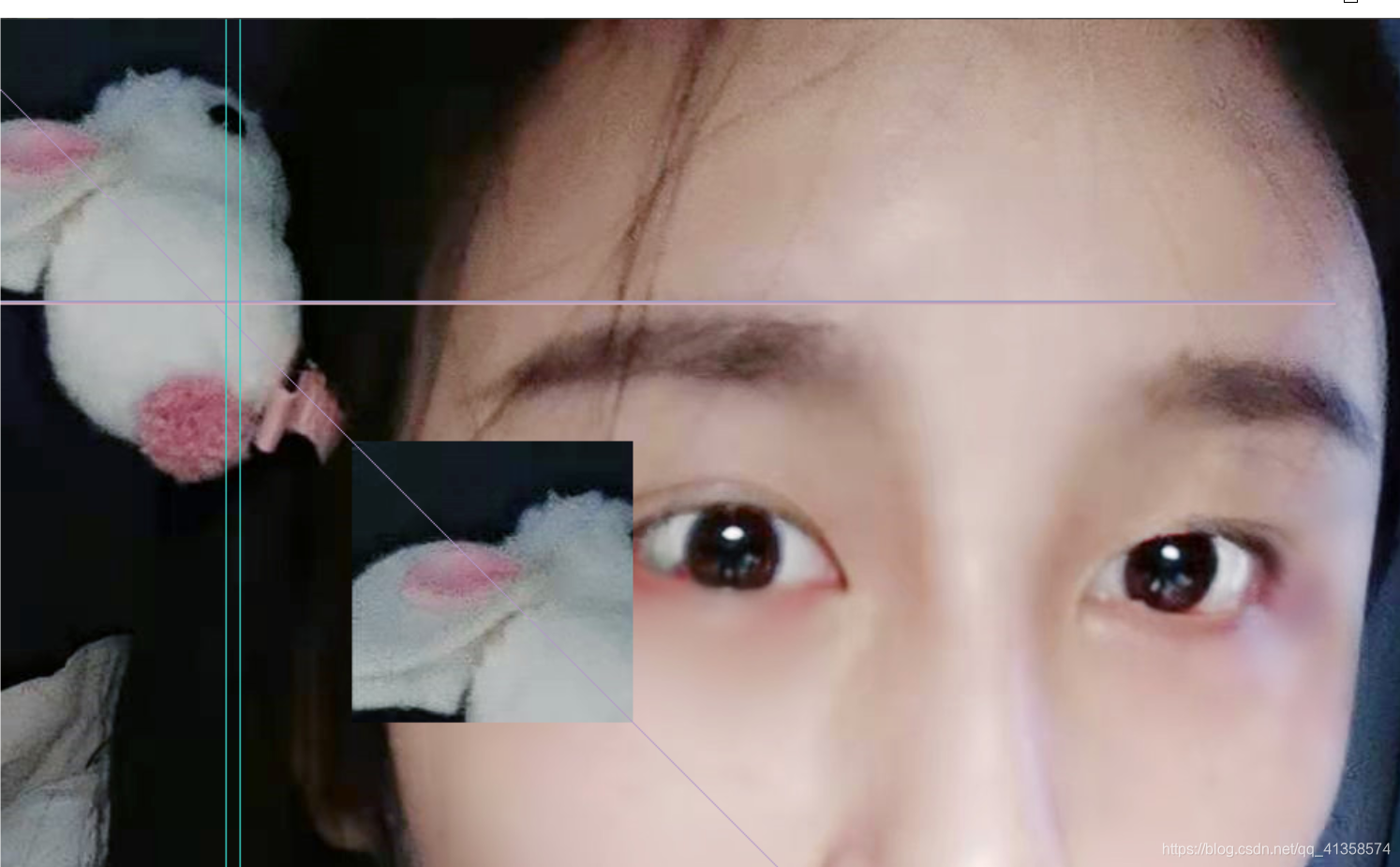
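Here is a quick check, on a hypothetical 2 x 3 array with no image file needed, that img[:, :, 1] = 0 clears only the green channel and leaves blue and red alone.

```python
import numpy as np

img = np.full((2, 3, 3), 100, dtype=np.uint8)  # hypothetical uniform BGR image
img[0, 0] = [255, 255, 255]                    # white pixel at row 0, column 0
img[:, :, 1] = 0                               # G is channel 1 in BGR order

print(img[0, 0].tolist())       # [255, 0, 255]: B and R untouched
print(img[1, 2].tolist())       # [100, 0, 100]
print(int(img[:, :, 1].max()))  # 0
```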

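Define a region of interest (ROI) and assign its values to a second region of the same size, copying one part of the image to another location:

```python
# Setting a single pixel: y (row) and z (blue value) must be defined first.
# img[y, 220] = [z, 210, 56]

# Assumes img is at least 500 x 500 pixels.
my_roi = img[0:200, 0:200]
img[300:500, 300:500] = my_roi
cv2.imshow('ppp', img)
cv2.waitKey(30000)
```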

Result:
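Two details matter for the ROI copy:

- The source and destination slices must have the same shape. Otherwise NumPy raises an error, unless the shapes happen to broadcast.
- my_roi is a view into img, not a copy.

A NumPy sketch on a hypothetical blank 600 x 600 image:

```python
import numpy as np

img = np.zeros((600, 600, 3), dtype=np.uint8)  # hypothetical blank BGR image
img[0:200, 0:200] = [0, 0, 255]                # paint the top-left block red

my_roi = img[0:200, 0:200]      # a view into img, not a copy
img[300:500, 300:500] = my_roi  # shapes match: (200, 200, 3)
print(np.array_equal(img[300:500, 300:500], img[0:200, 0:200]))  # True

# Because my_roi is a view, writing to it changes img as well...
my_roi[:] = 0
print(int(img[0:200, 0:200].max()))         # 0
# ...but the pasted region holds its own copy of the data.
print(int(img[300:500, 300:500, 2].max()))  # 255
```

If the ROI must stay independent of later edits to img, take my_roi = img[0:200, 0:200].copy() instead.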

Reading and writing video files

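OpenCV provides the VideoCapture and VideoWriter classes, which support a variety of video file formats. The supported formats vary from system to system, but AVI should be supported everywhere. Until the end of the video file is reached, each call to VideoCapture's read() function returns a new frame, and each frame is an image in BGR format.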

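An image can be passed to VideoWriter's write() function, which appends it to the file the VideoWriter was opened on. The following example reads the frames of an AVI file and writes them to another file using YUV encoding (FourCC I420):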

```python
import cv2

videoCapture = cv2.VideoCapture('MyInputVid.avi')
fps = videoCapture.get(cv2.CAP_PROP_FPS)
size = (int(videoCapture.get(cv2.CAP_PROP_FRAME_WIDTH)),
        int(videoCapture.get(cv2.CAP_PROP_FRAME_HEIGHT)))
videoWriter = cv2.VideoWriter(
    'MyOutputVid.avi', cv2.VideoWriter_fourcc('I', '4', '2', '0'), fps, size)

success, frame = videoCapture.read()
while success:  # Loop until there are no more frames.
    videoWriter.write(frame)
    success, frame = videoCapture.read()

# Release both handles so the output file is finalized.
videoCapture.release()
videoWriter.release()
```
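cv2.VideoWriter_fourcc('I', '4', '2', '0') packs the four characters into one 32-bit integer, with the first character in the lowest byte. This is the same layout as OpenCV's CV_FOURCC macro. Below is a pure-Python sketch of that packing and its inverse; fourcc and fourcc_to_str are hypothetical helpers. The inverse is handy for decoding the value of videoCapture.get(cv2.CAP_PROP_FOURCC), after converting that float to int.

```python
def fourcc(c1, c2, c3, c4):
    """Pack four characters into a 32-bit FourCC code, first char in the low byte."""
    return ((ord(c1) & 255)
            | ((ord(c2) & 255) << 8)
            | ((ord(c3) & 255) << 16)
            | ((ord(c4) & 255) << 24))

def fourcc_to_str(code):
    """Unpack a FourCC integer back into its four characters."""
    return "".join(chr((code >> (8 * i)) & 0xFF) for i in range(4))

code = fourcc('I', '4', '2', '0')
print(hex(code))            # 0x30323449
print(fourcc_to_str(code))  # I420
```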