MobileNet v1/v2

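Convolutional neural networks (CNNs) are now widely used in computer vision and have achieved strong results. Figure 1 shows how CNNs have performed in the ImageNet competition in recent years: in pursuit of classification accuracy, models have grown ever deeper and more complex. The deep residual network (ResNet), for example, reaches 152 layers.

However, in some real-world settings such as mobile or embedded devices, models this large and complex are hard to deploy. First, the model is too big for the available memory. Second, these settings demand low latency, that is, fast responses; imagine what could happen if the pedestrian detection system of a self-driving car were slow. Research on small, efficient CNN models is therefore crucial for these settings, at least for now, even though hardware will keep getting faster. Current work falls into two directions: compressing a trained complex model into a small one, or designing a small model directly and training it. Either way, the goal is to reduce model size (parameter count) and increase speed (low latency) while preserving model performance (accuracy). MobileNet, the subject of this article, belongs to the second group. It is a small, efficient CNN model proposed by Google that trades off accuracy against latency.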

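DW & PW Convolutions

MobileNet_V1 introduces DW (depthwise) and PW (pointwise) convolutions, which reduce both the computation and the number of parameters.

Depthwise Separable Convolution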

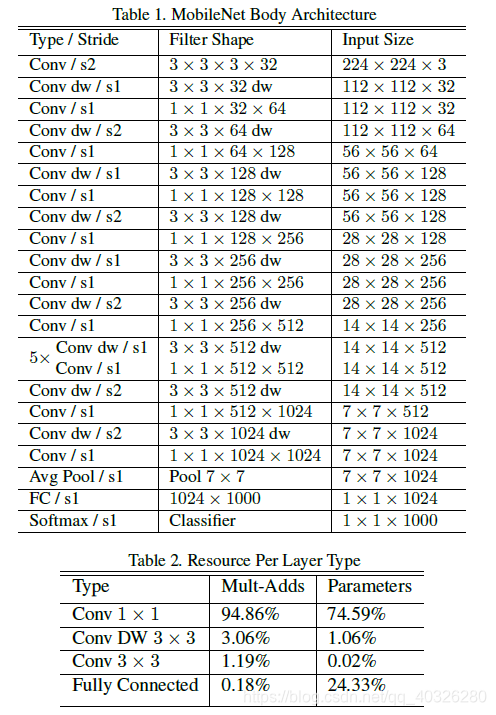

The basic building block of MobileNet is the depthwise separable convolution.

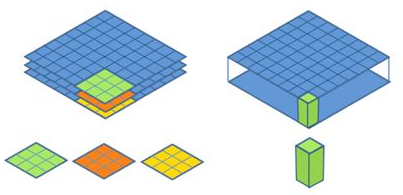

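A depthwise separable convolution is a form of factorized convolution: it can be decomposed into two smaller operations, a depthwise convolution and a pointwise convolution, as shown in Figure 2.

A depthwise convolution differs from a standard convolution. In a standard convolution, every kernel is applied across all input channels. A depthwise convolution instead uses a separate kernel for each input channel, so each kernel corresponds to exactly one input channel; this is why it is called a depth-level operation. A pointwise convolution is simply an ordinary convolution with a $1\times 1$ kernel.

The figure above shows the two operations more clearly: the DW convolution on the left and the PW convolution on the right.

A depthwise separable convolution first applies a depthwise convolution to each input channel separately, then uses a pointwise convolution to combine those outputs. The overall effect is close to that of a standard convolution, but with far less computation and far fewer parameters.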
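The two-step factorization can be sketched in plain Python on nested lists. This is a minimal illustration, not MobileNet's implementation: the helper names `depthwise_conv` and `pointwise_conv`, the channel-first `[C][H][W]` layout, the 'valid' padding and the stride of 1 are all assumptions made to keep the example short.

```python
def depthwise_conv(x, kernels):
    """Depthwise step: one k x k kernel per input channel.

    x is [C][H][W], kernels is [C][k][k]; 'valid' padding and stride 1
    are assumed here for brevity.
    """
    out = []
    for channel, kernel in zip(x, kernels):
        k = len(kernel)
        h_out = len(channel) - k + 1
        w_out = len(channel[0]) - k + 1
        out.append([
            [sum(channel[i + di][j + dj] * kernel[di][dj]
                 for di in range(k) for dj in range(k))
             for j in range(w_out)]
            for i in range(h_out)
        ])
    return out


def pointwise_conv(x, weights):
    """Pointwise step: a 1x1 convolution that mixes channels at each pixel.

    x is [M][H][W], weights is [N][M]; the result is [N][H][W].
    """
    height, width = len(x[0]), len(x[0][0])
    return [
        [[sum(w[m] * x[m][i][j] for m in range(len(x)))
          for j in range(width)]
         for i in range(height)]
        for w in weights
    ]


# Two 3x3 input channels, each filtered independently by an all-ones kernel.
x = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],  # channel 0, sums to 45
    [[1, 1, 1], [1, 1, 1], [1, 1, 1]],  # channel 1, sums to 9
]
dw_kernels = [[[1] * 3 for _ in range(3)] for _ in range(2)]
dw_out = depthwise_conv(x, dw_kernels)

# Three output channels, each a weighted mix of the two depthwise outputs.
pw_weights = [[1, 1], [1, -1], [0, 2]]
y = pointwise_conv(dw_out, pw_weights)

print(dw_out)  # [[[45]], [[9]]]
print(y)       # [[[54]], [[36]], [[18]]]
```

The depthwise step never mixes channels (its output has as many channels as its input), while the pointwise step is the only place where information flows between channels.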

Suppose the kernel size is $D_K\times D_K$, the number of input channels is $M$, the number of output channels is $N$, and the feature map size is $D_F\times D_F$.

Computation reduction:

$$\frac{D_K\cdot D_K\cdot M\cdot D_F\cdot D_F + M\cdot N\cdot D_F\cdot D_F}{D_K\cdot D_K\cdot M\cdot N\cdot D_F\cdot D_F} = \frac{1}{N}+\frac{1}{D_K^2}$$

Because $N$ is usually large, the $1/D_K^2$ term dominates, so with $3\times 3$ kernels the computation typically drops by a factor of 8 to 9.

Parameter reduction:

$$\frac{D_K^2\times M + 1\times M\times N}{D_K^2\times M\times N} = \frac{1}{N}+\frac{1}{D_K^2}$$

With $D_K = 3$, the parameter count falls to roughly 1/8 to 1/9 of the original.
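The two ratios can be checked numerically. The sketch below plugs one illustrative layer into the formulas; the layer dimensions (a $3\times 3$ kernel, 512 input and output channels, a $14\times 14$ feature map) and the function names are assumptions chosen for the example, not values taken from this article.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Return (multiply-adds, parameters) of a standard convolution."""
    params = d_k * d_k * m * n
    return params * d_f * d_f, params


def separable_conv_cost(d_k, m, n, d_f):
    """Return (multiply-adds, parameters) of depthwise + pointwise."""
    params = d_k * d_k * m + m * n
    return params * d_f * d_f, params


# Assumed example layer: 3x3 kernel, 512 -> 512 channels, 14x14 feature map.
d_k, m, n, d_f = 3, 512, 512, 14
std_macs, std_params = standard_conv_cost(d_k, m, n, d_f)
sep_macs, sep_params = separable_conv_cost(d_k, m, n, d_f)

mac_ratio = sep_macs / std_macs
param_ratio = sep_params / std_params
print(f"multiply-adds: {std_macs:,} -> {sep_macs:,} (ratio {mac_ratio:.4f})")
print(f"parameters:    {std_params:,} -> {sep_params:,} (ratio {param_ratio:.4f})")
print(f"1/N + 1/D_K^2 = {1 / n + 1 / d_k ** 2:.4f}")
```

For this layer both ratios come out at about 0.113, a reduction of roughly 8.8 times, which falls inside the 8 to 9 range quoted above.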

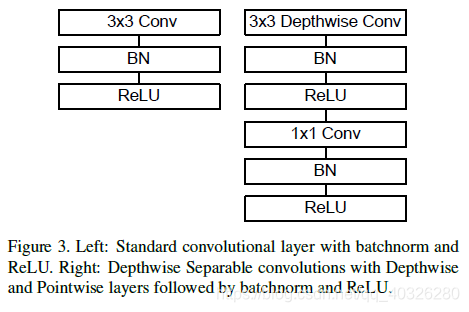

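Convolutional Structure

The paper downsamples with strided convolutions instead of pooling layers, which saves some computation.

The convolutional structure of MobileNet is shown below:

Conv/s2, a convolution with stride 2, replaces MaxPooling + Conv. The number of parameters stays the same, the computation of the convolution drops to about 1/4, and the cost of the MaxPool itself is eliminated.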
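The 1/4 figure follows from the output size: a stride-2 convolution evaluates its kernel at a quarter of the positions. A small sketch under stated assumptions ('same' padding, a standard $3\times 3$ convolution from 32 to 64 channels on a $112\times 112$ input; the function name and layer sizes are hypothetical):

```python
def conv_cost(kernel, c_in, c_out, h_in, w_in, stride):
    """Return (multiply-adds, parameters, output size) of a 'same'-padded conv."""
    h_out = -(-h_in // stride)  # ceiling division, as with 'same' padding
    w_out = -(-w_in // stride)
    params = kernel * kernel * c_in * c_out
    return params * h_out * w_out, params, (h_out, w_out)


# Option 1: stride-1 convolution followed by a 2x2 max pool.
# Only the convolution is counted here; the pool adds further cost on top.
macs_pool, params_pool, size_s1 = conv_cost(3, 32, 64, 112, 112, stride=1)
size_after_pool = (size_s1[0] // 2, size_s1[1] // 2)

# Option 2: a single stride-2 convolution.
macs_s2, params_s2, size_s2 = conv_cost(3, 32, 64, 112, 112, stride=2)

print(size_after_pool, size_s2)    # same spatial size either way
print(params_pool == params_s2)    # parameter count is unchanged
print(macs_s2 / macs_pool)         # fraction of the convolution cost that remains
```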

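Slimming Down MobileNet

The above describes the baseline MobileNet, but sometimes you need an even smaller model, so MobileNet has to be slimmed down.

Two hyperparameters are introduced for this: the width multiplier and the resolution multiplier.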

- Width Multiplier ($\alpha$): Thinner Models

The first parameter, the width multiplier, scales down the number of channels proportionally. It is denoted $\alpha$ and takes values in $(0,1]$, so the input and output channel counts become $\alpha M$ and $\alpha N$. For a depthwise separable convolution, the computation becomes:

$$D_K\times D_K\times \alpha M\times D_F\times D_F + \alpha M\times \alpha N\times D_F\times D_F$$

- The channel count of every layer is multiplied by $\alpha$ (and rounded); the model size drops to roughly $\alpha^2$ of the original, and so does the computation.

- $\alpha \in (0,1]$, with typical values 1, 0.75, 0.5 and 0.25; it reduces the width of the model.

- Resolution Multiplier ($\rho$): Reduced Representation

Because the second (pointwise) term dominates the computation, the width multiplier reduces the computation by roughly a factor of $\alpha^2$, and the parameter count drops as well. The second parameter, the resolution multiplier, scales down the feature map size proportionally and is denoted $\rho$. For example, a $224\times 224$ input can be reduced to $192\times 192$. With the resolution multiplier added, the computation of a depthwise separable convolution is:

$$D_K\times D_K\times \alpha M\times \rho D_F\times \rho D_F + \alpha M\times \alpha N\times \rho D_F\times \rho D_F$$

- The input resolution is multiplied by $\rho$ (and rounded), which is equivalent to multiplying the resolution of every layer by $\rho$; the model size is unchanged and the computation drops to $\rho^2$ of the original.

- $\rho \in (0,1]$; it lowers the resolution of the input image.


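Note that the resolution multiplier only affects the computation and does not change the parameter count. Introducing these two parameters inevitably lowers MobileNet's accuracy; see the paper for the detailed experimental analysis. In short, they trade accuracy against computation and accuracy against model size.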
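The effect of both multipliers on a single depthwise separable layer can be sketched as follows. The layer dimensions, the function name `separable_cost` and the use of `round` for the scaled sizes are assumptions made for this example.

```python
def separable_cost(d_k, m, n, d_f, alpha=1.0, rho=1.0):
    """Multiply-adds and parameters of one depthwise separable layer.

    Channels are scaled by alpha and the feature map side by rho, then
    rounded to integers (the rounding rule is an assumption of this sketch).
    """
    m_s, n_s = round(alpha * m), round(alpha * n)
    d_f_s = round(rho * d_f)
    params = d_k * d_k * m_s + m_s * n_s
    return params * d_f_s * d_f_s, params


# Assumed example layer: 3x3 kernel, 512 -> 512 channels, 14x14 feature map.
base_macs, base_params = separable_cost(3, 512, 512, 14)
thin_macs, thin_params = separable_cost(3, 512, 512, 14, alpha=0.5)
small_macs, small_params = separable_cost(3, 512, 512, 14, rho=192 / 224)

print(f"alpha=0.5:   macs x{thin_macs / base_macs:.3f}, params x{thin_params / base_params:.3f}")
print(f"rho=192/224: macs x{small_macs / base_macs:.3f}, params x{small_params / base_params:.3f}")
```

With $\alpha = 0.5$ both ratios land close to $\alpha^2 = 0.25$ (slightly above, because the depthwise term scales only linearly with $\alpha$). With $\rho = 192/224$ the computation scales by $\rho^2$ while the parameter count does not change.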

MobileNet_V1 Network Architecture

MobileNetV2: Inverted Residuals and Linear Bottlenecks

Main Improvements

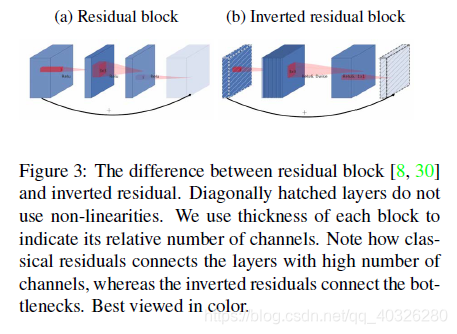

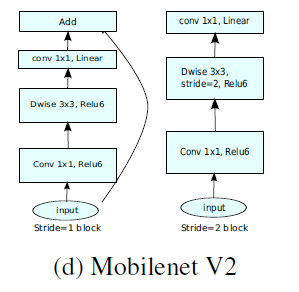

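- Introduces the inverted residual structure, which expands the channel dimension first and then reduces it; this improves gradient propagation and significantly reduces the memory needed during inference (Inverted Residuals).

- Removes the ReLU after the narrow layer (low dimension or depth), which preserves feature diversity and strengthens the network's representational power (Linear Bottlenecks).

- The network is fully convolutional, so the model can handle images of different sizes. It uses the ReLU6 activation (output capped at 6), which makes the model more robust under low-precision arithmetic.

- The MobileNetV2 inverted residual block is shown below. When downsampling is needed, the DW convolution uses stride 2.

- Small networks use a smaller expansion factor and larger networks a somewhat larger one; the recommended range is 5 to 10, and the paper uses $t = 6$.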
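ReLU6, mentioned in the list above, is an ordinary ReLU with its output clipped at 6. A minimal scalar sketch (the function name `relu6` is chosen here for illustration):

```python
def relu6(x):
    """ReLU6: clip the activation to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


values = [-2.0, 0.5, 3.0, 6.0, 10.0]
clipped = [relu6(v) for v in values]
print(clipped)  # [0.0, 0.5, 3.0, 6.0, 6.0]
```

Because the output range is bounded, activations cannot grow arbitrarily large, which is what makes them easier to represent with few bits.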

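Inverted Residual Block

The ResNet bottleneck goes reduce -> convolve -> expand, so it is wide at both ends and narrow in the middle (an hourglass shape).

MobileNetV2 instead goes expand (6x) -> convolve -> reduce, so it is narrow at both ends and wide in the middle (a spindle shape).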
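The difference is easiest to see by listing the channel widths along each block. In this sketch the function names, the reduction factor of 4 for the ResNet bottleneck and the example channel counts are illustrative assumptions.

```python
def resnet_bottleneck_widths(channels, reduction=4):
    """Channel widths: input, after 1x1 reduce, after 3x3 conv, after 1x1 expand."""
    mid = channels // reduction
    return [channels, mid, mid, channels]


def inverted_residual_widths(channels, expand_ratio=6):
    """Channel widths: input, after 1x1 expand, after 3x3 depthwise, after 1x1 project."""
    hidden = channels * expand_ratio
    return [channels, hidden, hidden, channels]


resnet_widths = resnet_bottleneck_widths(256)
mobilenet_widths = inverted_residual_widths(24)
print(resnet_widths)     # [256, 64, 64, 256]   wide -> narrow -> wide
print(mobilenet_widths)  # [24, 144, 144, 24]   narrow -> wide -> narrow
```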

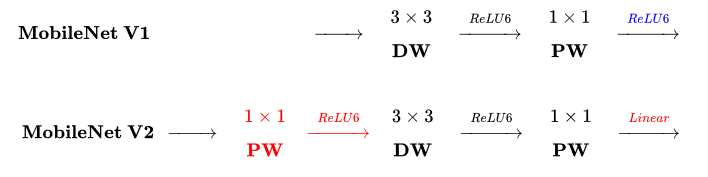

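Unlike MobileNetV1, the convolutional structure of MobileNetV2 is as follows:

A DW convolution does not change the number of channels, so if the previous layer has few channels, the DW convolution can only extract features in a low-dimensional space, which works poorly. V2 therefore adds a PW layer before the DW convolution to expand the channel dimension.

V2 also removes the activation function after the second PW layer and uses a linear activation instead. An activation function adds useful non-linearity in a high-dimensional space but destroys features in a low-dimensional one. Since the main job of the second PW layer is to reduce the dimension, ReLU6 should not follow it.

A shortcut connection is used only when strides=1 and the input and output feature maps have the same shape.

MobileNet_V2 implemented in TensorFlow 2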

    import tensorflow as tf
    import numpy as np
    from tensorflow.keras import layers, Sequential, Model


    class ConvBNReLU(layers.Layer):
        """Conv2D -> BatchNorm -> ReLU6."""

        def __init__(self, out_channel, kernel_size=3, strides=1, **kwargs):
            super(ConvBNReLU, self).__init__(**kwargs)
            self.conv = layers.Conv2D(filters=out_channel,
                                      kernel_size=kernel_size,
                                      strides=strides,
                                      padding='SAME',
                                      use_bias=False,
                                      name='Conv2d')
            self.bn = layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='BatchNorm')
            self.activation = layers.ReLU(max_value=6.0)  # ReLU6

        def call(self, inputs, training=False, **kwargs):
            x = self.conv(inputs)
            x = self.bn(x, training=training)
            x = self.activation(x)
            return x

    class InvertedResidualBlock(layers.Layer):
        """1x1 expand -> 3x3 depthwise -> 1x1 linear projection, with optional shortcut."""

        def __init__(self, in_channel, out_channel, strides, expand_ratio, **kwargs):
            super(InvertedResidualBlock, self).__init__(**kwargs)
            self.hidden_channel = in_channel * expand_ratio
            # shortcut only when the block keeps both resolution and channel count
            self.use_shortcut = (strides == 1) and (in_channel == out_channel)

            layer_list = []
            # first bottleneck does not need 1*1 conv
            if expand_ratio != 1:
                # 1x1 pointwise conv
                layer_list.append(ConvBNReLU(out_channel=self.hidden_channel, kernel_size=1, name='expand'))
            layer_list.extend([
                # 3x3 depthwise conv
                layers.DepthwiseConv2D(kernel_size=3, padding='SAME', strides=strides, use_bias=False, name='depthwise'),
                layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='depthwise/BatchNorm'),
                layers.ReLU(max_value=6.0),
                # 1x1 pointwise conv (linear)
                # linear activation y = x -> no activation function
                layers.Conv2D(filters=out_channel, kernel_size=1, strides=1, padding='SAME', use_bias=False, name='project'),
                layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='project/BatchNorm')
            ])
            self.main_branch = Sequential(layer_list, name='expanded_conv')

        def call(self, inputs, training=False, **kwargs):
            # pass the training flag on so BatchNorm behaves correctly in both modes
            if self.use_shortcut:
                return inputs + self.main_branch(inputs, training=training)
            else:
                return self.main_branch(inputs, training=training)

MobileNet_V2 Network Architecture

    def _make_divisible(ch, divisor=8, min_ch=None):
        """
        This function is taken from the original tf repo.
        It ensures that all layers have a channel number that is divisible by 8
        It can be seen here:
        https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
        """
        if min_ch is None:
            min_ch = divisor
        # round to the nearest multiple of divisor, but never below min_ch
        new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
        # Make sure that round down does not go down by more than 10%.
        if new_ch < 0.9 * ch:
            new_ch += divisor
        return new_ch
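To see what this rounding does in practice, the sketch below applies it to the base channel counts used by the network with `alpha=0.75`. The function is repeated under the name `make_divisible` only so that the example runs on its own.

```python
def make_divisible(ch, divisor=8, min_ch=None):
    """Standalone copy of the rounding rule, repeated so the example is self-contained."""
    if min_ch is None:
        min_ch = divisor
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch


alpha = 0.75
base_channels = [32, 16, 24, 32, 64, 96, 160, 320, 1280]
scaled = [make_divisible(c * alpha) for c in base_channels]
print(scaled)  # [24, 16, 24, 24, 48, 72, 120, 240, 960]
```

Two cases show the rule at work: 16 * 0.75 = 12 is rounded up to 16, and 24 * 0.75 = 18 would round down to 16, but that is more than 10% below 18, so it is bumped to 24. These values match the channel counts in the model summary at the end of this article.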

    def MobileNet_V2(im_height=224,
                     im_width=224,
                     num_classes=1000,
                     alpha=1.0,
                     round_nearest=8,
                     include_top=True):
        block = InvertedResidualBlock
        input_channel = _make_divisible(32 * alpha, round_nearest)
        last_channel = _make_divisible(1280 * alpha, round_nearest)

        # t: expansion factor, c: output channels, n: repeats, s: stride of the first repeat
        inverted_residual_setting = [
            [1, 16, 1, 1],
            [6, 24, 2, 2],
            [6, 32, 3, 2],
            [6, 64, 4, 2],
            [6, 96, 3, 1],
            [6, 160, 3, 2],
            [6, 320, 1, 1]
        ]

        input_image = layers.Input(shape=(im_height, im_width, 3), dtype=tf.float32)
        # conv1
        x = ConvBNReLU(input_channel, strides=2, name='Conv')(input_image)
        # building inverted residual blocks
        for idx, (t, c, n, s) in enumerate(inverted_residual_setting):
            output_channel = _make_divisible(c * alpha, round_nearest)
            for i in range(n):
                # only the first block of each stage may downsample
                strides = s if i == 0 else 1
                x = block(x.shape[-1],  # tensors are NHWC, so the last axis is channels
                          output_channel,
                          strides,
                          expand_ratio=t)(x)
        # building last several layers
        x = ConvBNReLU(last_channel, kernel_size=1, name='Conv_1')(x)

        if include_top:
            # building classifier
            x = layers.GlobalAveragePooling2D()(x)  # pool + flatten
            x = layers.Dropout(0.2)(x)
            output = layers.Dense(num_classes, name='Logits')(x)
        else:
            output = x

        model = Model(inputs=input_image, outputs=output)
        return model

    def main():
        # let TensorFlow grow GPU memory on demand instead of reserving it all up front
        gpus = tf.config.experimental.list_physical_devices("GPU")
        if gpus:
            try:
                for gpu in gpus:
                    tf.config.experimental.set_memory_growth(gpu, True)
                    print(gpu)
            except RuntimeError as e:
                print(e)
                raise SystemExit(-1)

        model = MobileNet_V2(im_height=224,
                             im_width=224,
                             num_classes=1000,
                             alpha=0.75,
                             round_nearest=8,
                             include_top=True)
        model.build((None, 224, 224, 3))
        model.summary()

        # run a random batch through the network to check the output shape
        data = tf.random.normal([4, 224, 224, 3])
        pred = model.predict(data)
        print("input shape:\n", data.shape)
        print("output shape:\n", pred.shape)


    if __name__ == '__main__':
        main()

    PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')
    Model: "model_2"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_5 (InputLayer)         [(None, 224, 224, 3)]     0
    _________________________________________________________________
    Conv (ConvBNReLU)            (None, 112, 112, 24)      744
    _________________________________________________________________
    inverted_residual_block_52 ( (None, 112, 112, 16)      760
    _________________________________________________________________
    inverted_residual_block_53 ( (None, 56, 56, 24)        5568
    _________________________________________________________________
    inverted_residual_block_54 ( (None, 56, 56, 24)        9456
    _________________________________________________________________
    inverted_residual_block_55 ( (None, 28, 28, 24)        9456
    _________________________________________________________________
    inverted_residual_block_56 ( (None, 28, 28, 24)        9456
    _________________________________________________________________
    inverted_residual_block_57 ( (None, 28, 28, 24)        9456
    _________________________________________________________________
    inverted_residual_block_58 ( (None, 14, 14, 48)        13008
    _________________________________________________________________
    inverted_residual_block_59 ( (None, 14, 14, 48)        32736
    _________________________________________________________________
    inverted_residual_block_60 ( (None, 14, 14, 48)        32736
    _________________________________________________________________
    inverted_residual_block_61 ( (None, 14, 14, 48)        32736
    _________________________________________________________________
    inverted_residual_block_62 ( (None, 14, 14, 72)        39744
    _________________________________________________________________
    inverted_residual_block_63 ( (None, 14, 14, 72)        69840
    _________________________________________________________________
    inverted_residual_block_64 ( (None, 14, 14, 72)        69840
    _________________________________________________________________
    inverted_residual_block_65 ( (None, 7, 7, 120)         90768
    _________________________________________________________________
    inverted_residual_block_66 ( (None, 7, 7, 120)         185520
    _________________________________________________________________
    inverted_residual_block_67 ( (None, 7, 7, 120)         185520
    _________________________________________________________________
    inverted_residual_block_68 ( (None, 7, 7, 240)         272400
    _________________________________________________________________
    Conv_1 (ConvBNReLU)          (None, 7, 7, 960)         234240
    _________________________________________________________________
    global_average_pooling2d_3 ( (None, 960)               0
    _________________________________________________________________
    dropout_3 (Dropout)          (None, 960)               0
    _________________________________________________________________
    Logits (Dense)               (None, 1000)              961000
    =================================================================
    Total params: 2,264,984
    Trainable params: 2,238,984
    Non-trainable params: 26,000
    _________________________________________________________________
    input shape:
    (4, 224, 224, 3)
    output shape:
    (4, 1000)
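The parameter counts in this summary can be reproduced by hand, which is a useful sanity check on the architecture. The sketch below is plain Python with no TensorFlow dependency; the helper names are chosen for illustration. It assumes bias-free convolutions, four BatchNorm values per channel (scale, offset, moving mean, moving variance) and a Dense layer with bias, matching the code above.

```python
def make_divisible(ch, divisor=8, min_ch=None):
    """Standalone copy of the channel rounding rule used by the model."""
    if min_ch is None:
        min_ch = divisor
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch


def conv_bn_params(kernel, c_in, c_out):
    """Bias-free conv weights plus 4 BatchNorm values per output channel."""
    return kernel * kernel * c_in * c_out + 4 * c_out


def depthwise_bn_params(kernel, channels):
    """One k x k kernel per channel plus 4 BatchNorm values per channel."""
    return kernel * kernel * channels + 4 * channels


def inverted_residual_params(c_in, c_out, t):
    hidden = c_in * t
    total = 0
    if t != 1:
        total += conv_bn_params(1, c_in, hidden)  # 1x1 expand
    total += depthwise_bn_params(3, hidden)       # 3x3 depthwise
    total += conv_bn_params(1, hidden, c_out)     # 1x1 linear projection
    return total


def mobilenet_v2_params(alpha=1.0, num_classes=1000):
    """Per-layer parameter counts, in the order of the Keras summary."""
    settings = [(1, 16, 1, 1), (6, 24, 2, 2), (6, 32, 3, 2), (6, 64, 4, 2),
                (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]
    c_in = make_divisible(32 * alpha)
    last = make_divisible(1280 * alpha)
    counts = [conv_bn_params(3, 3, c_in)]          # first 3x3 conv on RGB input
    for t, c, n, _stride in settings:
        c_out = make_divisible(c * alpha)
        for _ in range(n):
            counts.append(inverted_residual_params(c_in, c_out, t))
            c_in = c_out
    counts.append(conv_bn_params(1, c_in, last))   # final 1x1 conv
    counts.append(last * num_classes + num_classes)  # Dense weights + bias
    return counts


per_layer = mobilenet_v2_params(alpha=0.75)
print(per_layer[:4])   # [744, 760, 5568, 9456]
print(sum(per_layer))  # 2264984
```

The total matches the `Total params: 2,264,984` line of the summary, and the first entries match the `Conv` layer and the first three inverted residual blocks.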