I. Problem

Building the cube for data in the time range 20210114000000 to 20210115000000.

#7 Step Name: Build Base Cuboid

Step 7 fails with: BufferOverflow! Please use one higher cardinality column for dimension column when build RAW cube!

#10 Step Name: Build N-Dimension Cuboid : level 3

Step 10 fails with the same error: BufferOverflow! Please use one higher cardinality column for dimension column when build RAW cube!

Kylin version:

apache-kylin-3.0.0-bin-cdh60

Error log:

2021-01-25 19:00:47,570 ERROR [IPC Server handler 6 on 23018] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Task: attempt_1611538815675_0565_m_000002_0 - exited : java.io.IOException: Spill failed

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.checkSpillException(MapTask.java:1585)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.access$300(MapTask.java:888)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer$Buffer.write(MapTask.java:1388)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer$Buffer.write(MapTask.java:1365)

at java.io.DataOutputStream.writeByte(DataOutputStream.java:153)

at org.apache.hadoop.io.WritableUtils.writeVLong(WritableUtils.java:273)

at org.apache.hadoop.io.WritableUtils.writeVInt(WritableUtils.java:253)

at org.apache.hadoop.io.Text.write(Text.java:330)

at org.apache.hadoop.io.serializer.WritableSerialization$WritableSerializer.serialize(WritableSerialization.java:98)

at org.apache.hadoop.io.serializer.WritableSerialization$WritableSerializer.serialize(WritableSerialization.java:82)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.collect(MapTask.java:1163)

at org.apache.hadoop.mapred.MapTask$NewOutputCollector.write(MapTask.java:727)

at org.apache.hadoop.mapreduce.task.TaskInputOutputContextImpl.write(TaskInputOutputContextImpl.java:89)

at org.apache.hadoop.mapreduce.lib.map.WrappedMapper$Context.write(WrappedMapper.java:112)

at org.apache.kylin.engine.mr.steps.BaseCuboidMapperBase.outputKV(BaseCuboidMapperBase.java:88)

at org.apache.kylin.engine.mr.steps.HiveToBaseCuboidMapper.doMap(HiveToBaseCuboidMapper.java:45)

at org.apache.kylin.engine.mr.KylinMapper.map(KylinMapper.java:77)

at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:146)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:799)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.RuntimeException: BufferOverflow! Please use one higher cardinality column for dimension column when build RAW cube!

at org.apache.kylin.measure.raw.RawSerializer.serialize(RawSerializer.java:84)

at org.apache.kylin.measure.raw.RawSerializer.serialize(RawSerializer.java:30)

at org.apache.kylin.measure.MeasureCodec.encode(MeasureCodec.java:76)

at org.apache.kylin.measure.BufferedMeasureCodec.encode(BufferedMeasureCodec.java:93)

at org.apache.kylin.engine.mr.steps.CuboidReducer.doReduce(CuboidReducer.java:112)

at org.apache.kylin.engine.mr.steps.CuboidReducer.doReduce(CuboidReducer.java:44)

at org.apache.kylin.engine.mr.KylinReducer.reduce(KylinReducer.java:77)

at org.apache.hadoop.mapreduce.Reducer.run(Reducer.java:171)

at org.apache.hadoop.mapred.Task$NewCombinerRunner.combine(Task.java:1831)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.sortAndSpill(MapTask.java:1661)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.access$1000(MapTask.java:888)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer$SpillThread.run(MapTask.java:1555)

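II. Troubleshooting approach

0 Search for the error message with a search engine

I went through the first 10 results and found nothing useful.

1 Check the FAQ on the official Kylin website

On the official Kylin website I found a blog post: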

http://kylin.apache.org/blog/2016/05/29/raw-measure-in-kylin/

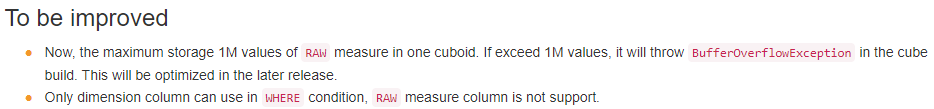

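The buffer is currently 1M in size. If the data exceeds 1M, building a cube that uses a RAW measure throws a BufferOverflowException.

2 Check Kylin's JIRA

Search keyword: BufferOverflow

This turned up two related issues: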

https://issues.apache.org/jira/browse/KYLIN-1737

https://issues.apache.org/jira/browse/KYLIN-1718

However, both were already fixed in v1.5.3.

3 Check the Kylin mailing list

Search keyword: BufferOverflow

This turned up one thread:

http://apache-kylin.74782.x6.nabble.com/Discuss-Disable-hide-quot-RAW-quot-measure-in-Kylin-web-GUI-td6636.html

the raw messages for a dimension combination are persisted in one big cell; When too many rows dumped in one cell, will get BufferOverflow error. This couldn’t be predicted, so for an modeler or analyst he doesn’t know whether this feature could work when he creates the cube.

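In short: all the raw records for one dimension combination are kept in a single large cell, and when too many rows are dumped into one cell, a buffer overflow occurs. Because this cannot be predicted, a modeler or analyst has no way of knowing at cube-creation time whether the feature will work.

1 Based on this, my guess was that a single cell contained too much data.

In HBase, the RowKey is composed of the cuboid id and the dictionary-encoded values of the dimension combination, and each measure corresponds to one column.

2 Judging by the error message itself, a dimension with higher cardinality needs to be added.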
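The effect of a higher-cardinality dimension can be illustrated with a toy model. The sketch below is not Kylin code: it only assumes that every source row sharing the same dimension values lands in the same cell, and the column names `event_date` and `order_id` as well as the sample rows are made up for illustration.

```python
from collections import defaultdict

def max_rows_per_cell(rows, dimension_columns):
    """Group rows by the chosen dimension columns and return the largest group size.

    Each group stands for one cell: with a RAW measure, every row in the
    group contributes one value to that cell.
    """
    cells = defaultdict(int)
    for row in rows:
        key = tuple(row[col] for col in dimension_columns)
        cells[key] += 1
    return max(cells.values()) if cells else 0

# Hypothetical sample: 1000 rows, 2 distinct dates, 1000 distinct order ids.
rows = [{"event_date": f"2021-01-{12 + i % 2}", "order_id": i} for i in range(1000)]

low = max_rows_per_cell(rows, ["event_date"])               # low-cardinality key only
high = max_rows_per_cell(rows, ["event_date", "order_id"])  # add a high-cardinality column
print(low, high)
```

With only the date as a dimension, 500 rows pile up in one cell; adding the unique column brings that down to 1. Note that this only helps when the added column really separates the rows; exact duplicate rows still share a cell.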

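3.1 Create a new cube (a modified copy of the original cube)

Following the hint in the error message, add a high-cardinality dimension in the Dimensions step.

1 Add a dimension with higher cardinality when selecting dimensions

In the Advanced Setting step, under Advanced ColumnFamily, all measures are in the same ColumnFamily by default. This can make a single HBase cell too large.

2 Split the measures into 2 ColumnFamilies, with half of the measures in each.

After the new cube was created, I rebuilt it. The data from 2019 to 2020 built successfully, but building the data from 2020 to 2021 produced the same error again. So the cube structure does not appear to be the main cause. Since builds of arbitrary day ranges had worked before, I suspected that some memory-related configuration differed from the earlier setup.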

4 Check the configuration file

vim setenv-tool.sh

It contained the following setting:

export KYLIN_JVM_SETTINGS="-Xms32g -Xmx32g -XX:MaxPermSize=1024m -XX:NewSize=6g -XX:MaxNewSize=6g -XX:SurvivorRatio=4 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:+UseConcMarkSweepGC -XX:+CMSIncrementalMode -XX:CMSInitiatingOccupancyFraction=70 -XX:+UseCMSInitiatingOccupancyOnly -XX:+DisableExplicitGC -XX:+HeapDumpOnOutOfMemoryError -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:$KYLIN_HOME/logs/kylin.gc.$$ -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=64M"

The official distribution ships two sets of parameters. The default is the low-spec one, as shown below:

export KYLIN_JVM_SETTINGS="-Xms1024M -Xmx4096M -Xss1024K -XX:MaxPermSize=512M -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:$KYLIN_HOME/logs/kylin.gc.$$ -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=64M"

# export KYLIN_JVM_SETTINGS="-Xms16g -Xmx16g -XX:MaxPermSize=512m -XX:NewSize=3g -XX:MaxNewSize=3g -XX:SurvivorRatio=4 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:+UseConcMarkSweepGC -XX:+CMSIncrementalMode -XX:CMSInitiatingOccupancyFraction=70 -XX:+UseCMSInitiatingOccupancyOnly -XX:+DisableExplicitGC -XX:+HeapDumpOnOutOfMemoryError -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:$KYLIN_HOME/logs/kylin.gc.$$ -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=64M"

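As far as I remember, the configuration used previously was the officially recommended high-performance one. I backed up the configuration file, restored the parameters to the official high-performance values, and rebuilt. The error persisted.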

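5 Analyze the data: build in segments to find the range that cannot be built

Approach: whenever a time range fails to build, split it in two and build the first half first, narrowing down the range that cannot be built step by step.

- There are 2 years of data in total. Building 2019 ~ 2020 first succeeded; building 2020 ~ 2021 hit BufferOverflow again.

- Building January 2020 ~ July 2020 succeeded. Building July 2020 ~ January 1, 2021 also succeeded.

- Building January 1, 2021 ~ January 25, 2021 failed with BufferOverflow again.

- Building January 1, 2021 ~ January 10, 2021 succeeded.

- Building January 10, 2021 ~ January 25, 2021 failed.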
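The halving strategy above can be sketched as a small helper. This is only an illustration under stated assumptions: `build_ok` is a hypothetical callback that triggers a build for a half-open time range and reports success (the builds in this post were started by hand), and the loop follows a single failing range, so a second bad day elsewhere would need another pass.

```python
from datetime import datetime, timedelta

def find_failing_range(start, end, build_ok, min_span=timedelta(days=1)):
    """Narrow [start, end) down to a span of at most min_span that fails to build.

    build_ok(start, end) is a hypothetical callback that builds the
    half-open range and returns True on success.
    Returns None if the whole range builds.
    """
    if build_ok(start, end):
        return None
    while end - start > min_span:
        mid = start + (end - start) / 2
        # Snap the midpoint to midnight so segments align with whole days.
        mid = datetime(mid.year, mid.month, mid.day)
        if mid <= start or mid >= end:
            break
        if not build_ok(start, mid):
            end = mid      # the first half already fails
        elif not build_ok(mid, end):
            start = mid    # the first half is fine, the failure is in the second half
        else:
            break          # both halves build on their own; stop narrowing
    return start, end

# Simulated build that fails whenever the range covers one bad day.
bad_day = datetime(2021, 1, 12)

def fake_build_ok(range_start, range_end):
    return not (range_start <= bad_day < range_end)

result = find_failing_range(datetime(2021, 1, 1), datetime(2021, 1, 25), fake_build_ok)
print(result)
```

In the simulated run the helper ends on the single day 2021-01-12. In practice each call to `build_ok` is a full cube build, so the number of halving steps matters more than the code itself.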

At this point, the data that cannot be built is confined to the most recent 25 days.

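5.1 Comparing the data from January 10, 2021 ~ January 25, 2021 with data that builds successfully

In the range that cannot be built, more than 60% of the floating-point values have 7 decimal places. In the ranges that build successfully, only 10% of the values have 7 decimal places and 90% have 2 decimal places.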
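A comparison like this can be reproduced on exported values with a few lines of code. The sketch below is one possible way to do it, not the method used in this post; it assumes the values are available as strings exactly as exported (parsing them as floats first would lose trailing zeros), and the sample values are made up.

```python
from decimal import Decimal

def decimal_places(text):
    """Return the number of digits after the decimal point in a numeric string."""
    exponent = Decimal(text).as_tuple().exponent
    return max(0, -exponent)

def share_with_places(values, places=7):
    """Fraction of values that carry at least `places` decimal digits."""
    if not values:
        return 0.0
    hits = sum(1 for v in values if decimal_places(v) >= places)
    return hits / len(values)

sample = ["12.50", "3.1415927", "7.00", "0.1234567"]  # made-up values for illustration
print(share_with_places(sample))  # 0.5
```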

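Sample data from January 10, 2021 ~ January 25, 2021:

Sample data from the other time ranges, which build successfully:

The business side confirmed that from January 1, 2021 the customer indeed asked for values not to be rounded to 2 decimal places; they are stored with however many digits they have. However, the further narrowing described below shows that precision is not the main cause.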

5.2 Narrowing further: builds for 2021-01-12 and 2021-01-14 fail

Only the data in the range 20210112000000 ~ 20210114000000 cannot be built.

The error is still:

BufferOverflow! Please use one higher cardinality column for dimension column when build RAW cube!

Download the data for 2021-01-12 and 2021-01-14:

hive -e "insert overwrite local directory '/var/lib/hadoop-hdfs/tmp/2021-01-12' row format delimited fields terminated by ',' select * from db.tablename where flight_date ='2021-01-12'";

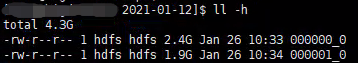

2021-01-12日数据量

2021-01-12数据节选

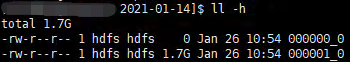

2021-01-14日数据量

2021-01-14日数据量

2021-01-14数据节选

发现2021-01-12和2021-01-14两天存在重复数据

hive -e "select count(*) from db.tablename where event_date='2021-01-12' group by pk";
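The same check can also be run offline against the files exported to the local directory earlier. A minimal sketch, assuming the files are comma-delimited as in the export command and that the primary key sits in the first column; `key_index` is an assumption and has to be adjusted to the real table layout.

```python
import csv
from collections import Counter
from pathlib import Path

def count_duplicate_keys(export_dir, key_index=0):
    """Count how often each key occurs in the comma-delimited files under export_dir.

    key_index is the position of the primary-key column; 0 is an assumption.
    Returns a Counter restricted to keys that occur more than once.
    """
    counts = Counter()
    for path in sorted(Path(export_dir).iterdir()):
        if not path.is_file() or path.name.startswith("."):
            continue  # skip sub-directories and hidden files such as .crc checksums
        with path.open(newline="", encoding="utf-8") as handle:
            for row in csv.reader(handle):
                if row:
                    counts[row[key_index]] += 1
    return Counter({key: n for key, n in counts.items() if n > 1})
```

For example, `count_duplicate_keys('/var/lib/hadoop-hdfs/tmp/2021-01-12').most_common(10)` lists the ten most frequently repeated keys together with their repeat counts.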

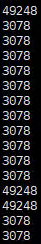

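SQL query result:

Root cause, finally located: the table contains a large amount of duplicate data, with the most repeated key occurring 49248 times.

As a result, when the base cuboid is built, the value for a single key becomes far larger than 1 MB. The suggestion in the error message to add a dimension with higher cardinality is likewise aimed at making the value per key smaller.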
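A quick back-of-the-envelope check shows how little room each row has. The sketch assumes that the 1M buffer means 1 MiB (1024 * 1024 bytes) and that all 49248 duplicates of a key end up in the same cell; how many bytes one row really takes depends on the measures and their encoding, which are not covered here.

```python
RAW_BUFFER_BYTES = 1024 * 1024  # the 1M buffer, assumed to be 1 MiB
MAX_DUPLICATES = 49248          # highest repeat count found in the Hive table

def bytes_budget_per_row(buffer_bytes, rows_in_cell):
    """Largest whole number of bytes each row may use before the cell overflows."""
    return buffer_bytes // rows_in_cell

budget = bytes_budget_per_row(RAW_BUFFER_BYTES, MAX_DUPLICATES)
print(budget)  # 21
```

Under these assumptions, the buffer overflows as soon as each duplicated row contributes more than about 21 bytes to the cell.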

Fix:

Truncate the Hive table and re-import the data.

The cube now builds normally.

5.3 Building 2021-01-13 fails

Failing step:

#10 Step Name: Build Cube In-Mem

Log:

Log Type: syslog

Log Upload Time: Tue Jan 26 10:57:09 +0800 2021

Log Length: 86830

2021-01-26 10:56:11,468 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Created MRAppMaster for application appattempt_1611538815675_2243_000001

2021-01-26 10:56:11,568 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster:

/************************************************************

[system properties]

os.name: Linux

os.version: 3.10.0-862.el7.x86_64

java.home: /usr/java/jdk1.8.0_131/jre

java.runtime.version: 1.8.0_131-b11

java.vendor: Oracle Corporation

java.version: 1.8.0_131

java.vm.name: Java HotSpot(TM) 64-Bit Server VM

java.class.path: /opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001:/etc/hadoop/conf.cloudera.yarn:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/bcpkix-jdk15on-1.60.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-mapper-asl-1.9.13-cloudera.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-format-javadoc.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/metrics-core-3.0.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-handler-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-servlet-1.19.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/guice-4.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-thrift.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/spark-2.4.0-cdh6.2.0-yarn-shuffle.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-client-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-protobuf.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/applicatio
n_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/okhttp-2.7.5.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/paranamer-2.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-gridmix-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-xml-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-compress-1.4.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/aliyun-sdk-oss-2.8.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/fst-2.50.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jsp-api-2.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/guava-11.0.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/zookeeper.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-native-client-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jsr311-api-1.1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-json-1.19.jar:/opt/data/sdk1/y
arn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-cli-1.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jaxb-impl-2.2.3-1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-registry-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/asm-5.0.4.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-nativetask-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-core-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-archives-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-util-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-server-common-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/bcprov-jdk15on-1.60.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-annotations-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/log4j-core-2.8.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/a
pplication_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/avro.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/json-smart-2.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-common-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/wildfly-openssl-1.0.4.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-server-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-pig-bundle.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-http-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-applications-unmanaged-am-launcher-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jaxb-api-2.2.11.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-native-client-3.0.0-cdh6.2.0-tests.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerby-xdr-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_161153
8815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-guice-1.19.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-core-asl-1.9.13.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-core-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-encoding.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-archive-logs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/gson-2.2.4.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-client-3.0.0-cdh6.2.0-tests.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-server-web-proxy-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-distcp-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/json-simple-1.1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/re2j-1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-common-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_16
11538815675_2243_01_000001/mr-framework/kerb-simplekdc-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-math3-3.1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/json-io-2.5.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/log4j-api-2.8.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-jaxrs-1.9.13.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/snappy-java-1.1.4.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-auth-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-app-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/HikariCP-java7-2.4.12.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-format.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-cascading3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-collections-3.2.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jdom-1.1.jar:/opt/data/sdk1/yarn/nm/userca
che/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-beanutils-1.9.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-module-jaxb-annotations-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-api-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-streaming-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/slf4j-log4j12.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/leveldbjni-all-1.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/audience-annotations-0.5.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jsch-0.1.54.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-azure-datalake-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/htrace-core4-4.1.0-incubating.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/stax2-api-3.1.4.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-openstack-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_16
11538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/zookeeper-3.4.5-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/curator-recipes-2.12.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-jobclient-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-databind-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/accessors-smart-1.2.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-examples-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-security-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/tt-instrumentation-6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/httpcore-4.4.6.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-daemon-1.0.13.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-common-3.0.0-cdh6.2.0-tests.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/zstd-jni-1.3.5-4.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/
container_e25_1611538815675_2243_01_000001/mr-framework/jackson-jaxrs-json-provider-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-uploader-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerby-config-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-common-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-azure-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/okio-1.6.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/lz4-java-1.5.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-jaxrs-base-2.9.8.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-configuration2-2.1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerby-util-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-datajoin-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/xz-1.6.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-frame
work/parquet-jackson.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-hs-plugins-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-httpfs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-aws-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-kafka-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-codec-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-avro.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/httpclient-4.5.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/objenesis-1.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-hs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/log4j-1.2.17.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-3.10.6.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-server-tests-3.0.0-cdh6.2.0.ja
r:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-server-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/woodstox-core-5.0.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-admin-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-io-2.6.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/curator-client-2.12.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/aws-java-sdk-bundle-1.11.271.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-column.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-format-sources.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-net-3.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-nfs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-server-1.19.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/protobuf-java-2.5.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/contain
er_e25_1611538815675_2243_01_000001/mr-framework/parquet-generator.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-applications-distributedshell-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-kms-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-common-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-identity-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-sls-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-util-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-hadoop-bundle.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/azure-data-lake-store-sdk-2.2.9.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jcip-annotations-1.0-1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-buffer-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/javax.servlet-api-3.1.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/
mr-framework/parquet-common.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/slf4j-api-1.7.25.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/ehcache-3.3.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jackson-xc-1.9.13.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-io-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-jobclient-3.0.0-cdh6.2.0-tests.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kafka-clients-2.1.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-servlet-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-scala_2.11.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-lang-2.6.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jul-to-slf4j-1.7.25.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jettison-1.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-codec-1.11.jar:/opt/data/sdk1/yarn/nm/usercache/h
dfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jsr305-3.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-util-ajax-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-rumen-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/avro-1.8.2-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-extras-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-aliyun-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/aopalliance-1.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/javax.inject-1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/curator-framework-2.12.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jetty-webapp-9.3.25.v20180904.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-core-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-hdfs-3.0.0-cdh6.2.0-tests.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25
_1611538815675_2243_01_000001/mr-framework/azure-keyvault-core-0.8.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-common-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-pig.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-client-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-core-1.19.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/logredactor-2.0.7.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-logging-1.1.3.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerb-crypto-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-cascading.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-nfs-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-mapreduce-client-shuffle-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerby-pk
ix-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-resourceestimator-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/guice-servlet-4.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/nimbus-jose-jwt-4.41.1.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/gcs-connector-hadoop3-1.9.10-cdh6.2.0-shaded.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-annotations-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-transport-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/ojalgo-43.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/kerby-asn1-1.0.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/azure-storage-5.4.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/commons-lang3-3.7.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-client-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/jersey-client-1.19.jar:/opt/data/sdk1/yarn/nm
/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/parquet-hadoop.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/mssql-jdbc-6.2.1.jre7.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/java-util-1.9.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-resolver-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/hadoop-yarn-server-nodemanager-3.0.0-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/netty-codec-http-4.1.17.Final.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/mr-framework/event-publish-6.2.0-shaded.jar::job.jar/job.jar:job.jar/classes/:job.jar/lib/*:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/hive-metastore-2.1.1-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/hive-metastore.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/hive-exec-2.1.1-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/hive-hcatalog-core-2.1.1-cdh6.2.0.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/hive-exec.jar:/opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/contain
er_e25_1611538815675_2243_01_000001/job.jar

java.io.tmpdir: /opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/tmp

user.dir: /opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001

user.name: yarn

************************************************************/

2021-01-26 10:56:11,616 INFO [main] org.apache.hadoop.security.SecurityUtil: Updating Configuration

2021-01-26 10:56:11,735 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Executing with tokens: [Kind: YARN_AM_RM_TOKEN, Service: , Ident: (appAttemptId { application_id { id: 2243 cluster_timestamp: 1611538815675 } attemptId: 1 } keyId: 1810587767)]

2021-01-26 10:56:11,766 INFO [main] org.apache.hadoop.conf.Configuration: resource-types.xml not found

2021-01-26 10:56:11,766 INFO [main] org.apache.hadoop.yarn.util.resource.ResourceUtils: Unable to find 'resource-types.xml'.

2021-01-26 10:56:11,778 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Using mapred newApiCommitter.

2021-01-26 10:56:11,779 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: OutputCommitter set in config null

2021-01-26 10:56:11,806 INFO [main] org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter: File Output Committer Algorithm version is 2

2021-01-26 10:56:11,806 INFO [main] org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2021-01-26 10:56:12,448 WARN [main] org.apache.hadoop.util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2021-01-26 10:56:12,482 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2021-01-26 10:56:12,650 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.jobhistory.EventType for class org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler

2021-01-26 10:56:12,650 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.JobEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$JobEventDispatcher

2021-01-26 10:56:12,651 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.TaskEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$TaskEventDispatcher

2021-01-26 10:56:12,652 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.TaskAttemptEventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$TaskAttemptEventDispatcher

2021-01-26 10:56:12,652 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventType for class org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventHandler

2021-01-26 10:56:12,653 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.speculate.Speculator$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$SpeculatorEventDispatcher

2021-01-26 10:56:12,653 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.rm.ContainerAllocator$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$ContainerAllocatorRouter

2021-01-26 10:56:12,654 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncher$EventType for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$ContainerLauncherRouter

2021-01-26 10:56:12,687 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://Aero:8020]

2021-01-26 10:56:12,703 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://Aero:8020]

2021-01-26 10:56:12,716 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://Aero:8020]

2021-01-26 10:56:12,736 INFO [main] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Emitting job history data to the timeline server is not enabled

2021-01-26 10:56:12,777 INFO [main] org.apache.hadoop.yarn.event.AsyncDispatcher: Registering class org.apache.hadoop.mapreduce.v2.app.job.event.JobFinishEvent$Type for class org.apache.hadoop.mapreduce.v2.app.MRAppMaster$JobFinishEventHandler

2021-01-26 10:56:13,019 WARN [main] org.apache.hadoop.metrics2.impl.MetricsConfig: Cannot locate configuration: tried hadoop-metrics2-mrappmaster.properties,hadoop-metrics2.properties

2021-01-26 10:56:13,070 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2021-01-26 10:56:13,070 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MRAppMaster metrics system started

2021-01-26 10:56:13,078 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Adding job token for job_1611538815675_2243 to jobTokenSecretManager

2021-01-26 10:56:13,176 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Not uberizing job_1611538815675_2243 because: not enabled; too much RAM;

2021-01-26 10:56:13,190 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Input size for job job_1611538815675_2243 = 130. Number of splits = 1

2021-01-26 10:56:13,191 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Number of reduces for job job_1611538815675_2243 = 1

2021-01-26 10:56:13,191 INFO [main] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1611538815675_2243Job Transitioned from NEW to INITED

2021-01-26 10:56:13,192 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: MRAppMaster launching normal, non-uberized, multi-container job job_1611538815675_2243.

2021-01-26 10:56:13,212 INFO [main] org.apache.hadoop.ipc.CallQueueManager: Using callQueue: class java.util.concurrent.LinkedBlockingQueue queueCapacity: 100 scheduler: class org.apache.hadoop.ipc.DefaultRpcScheduler

2021-01-26 10:56:13,220 INFO [Socket Reader #1 for port 14786] org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 14786

2021-01-26 10:56:13,399 INFO [main] org.apache.hadoop.yarn.factories.impl.pb.RpcServerFactoryPBImpl: Adding protocol org.apache.hadoop.mapreduce.v2.api.MRClientProtocolPB to the server

2021-01-26 10:56:13,400 INFO [IPC Server listener on 14786] org.apache.hadoop.ipc.Server: IPC Server listener on 14786: starting

2021-01-26 10:56:13,400 INFO [IPC Server Responder] org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2021-01-26 10:56:13,400 INFO [main] org.apache.hadoop.mapreduce.v2.app.client.MRClientService: Instantiated MRClientService at bigdata002/192.168.212.107:14786

2021-01-26 10:56:13,430 INFO [main] org.eclipse.jetty.util.log: Logging initialized @2841ms

2021-01-26 10:56:13,593 INFO [main] org.apache.hadoop.security.authentication.server.AuthenticationFilter: Unable to initialize FileSignerSecretProvider, falling back to use random secrets.

2021-01-26 10:56:13,596 INFO [main] org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.mapreduce is not defined

2021-01-26 10:56:13,602 INFO [main] org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2021-01-26 10:56:13,652 INFO [main] org.apache.hadoop.http.HttpServer2: Added filter AM_PROXY_FILTER (class=org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter) to context mapreduce

2021-01-26 10:56:13,652 INFO [main] org.apache.hadoop.http.HttpServer2: Added filter AM_PROXY_FILTER (class=org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter) to context static

2021-01-26 10:56:13,655 INFO [main] org.apache.hadoop.http.HttpServer2: adding path spec: /mapreduce/*

2021-01-26 10:56:13,655 INFO [main] org.apache.hadoop.http.HttpServer2: adding path spec: /ws/*

2021-01-26 10:56:13,955 INFO [main] org.apache.hadoop.yarn.webapp.WebApps: Registered webapp guice modules

2021-01-26 10:56:13,956 INFO [main] org.apache.hadoop.http.HttpServer2: Jetty bound to port 24331

2021-01-26 10:56:13,958 INFO [main] org.eclipse.jetty.server.Server: jetty-9.3.25.v20180904, build timestamp: 2018-09-05T05:11:46+08:00, git hash: 3ce520221d0240229c862b122d2b06c12a625732

2021-01-26 10:56:13,994 INFO [main] org.eclipse.jetty.server.handler.ContextHandler: Started o.e.j.s.ServletContextHandler@771d1ffb{/static,jar:file:/opt/data/sdu1/yarn/nm/filecache/139190/3.0.0-cdh6.2.0-mr-framework.tar.gz/hadoop-yarn-common-3.0.0-cdh6.2.0.jar!/webapps/static,AVAILABLE}

2021-01-26 10:56:14,798 INFO [main] org.eclipse.jetty.server.handler.ContextHandler: Started o.e.j.w.WebAppContext@4d7a64ca{/,file:///opt/data/sdk1/yarn/nm/usercache/hdfs/appcache/application_1611538815675_2243/container_e25_1611538815675_2243_01_000001/tmp/jetty-0.0.0.0-24331-mapreduce-_-any-543770875093476617.dir/webapp/,AVAILABLE}{/mapreduce}

2021-01-26 10:56:14,815 INFO [main] org.eclipse.jetty.server.AbstractConnector: Started ServerConnector@6393bf8b{HTTP/1.1,[http/1.1]}{0.0.0.0:24331}

2021-01-26 10:56:14,815 INFO [main] org.eclipse.jetty.server.Server: Started @4225ms

2021-01-26 10:56:14,815 INFO [main] org.apache.hadoop.yarn.webapp.WebApps: Web app mapreduce started at 24331

2021-01-26 10:56:14,819 INFO [main] org.apache.hadoop.ipc.CallQueueManager: Using callQueue: class java.util.concurrent.LinkedBlockingQueue queueCapacity: 3000 scheduler: class org.apache.hadoop.ipc.DefaultRpcScheduler

2021-01-26 10:56:14,819 INFO [Socket Reader #1 for port 29851] org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 29851

2021-01-26 10:56:14,823 INFO [IPC Server Responder] org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2021-01-26 10:56:14,823 INFO [IPC Server listener on 29851] org.apache.hadoop.ipc.Server: IPC Server listener on 29851: starting

2021-01-26 10:56:14,839 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: nodeBlacklistingEnabled:true

2021-01-26 10:56:14,839 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: maxTaskFailuresPerNode is 3

2021-01-26 10:56:14,839 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: blacklistDisablePercent is 33

2021-01-26 10:56:14,842 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: 0% of the mappers will be scheduled using OPPORTUNISTIC containers

2021-01-26 10:56:14,897 INFO [main] org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider: Failing over to rm98

2021-01-26 10:56:14,940 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMCommunicator: maxContainerCapability: <memory:102400, vCores:56>

2021-01-26 10:56:14,940 INFO [main] org.apache.hadoop.mapreduce.v2.app.rm.RMCommunicator: queue: root.users.hdfs

2021-01-26 10:56:14,944 INFO [main] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Upper limit on the thread pool size is 500

2021-01-26 10:56:14,944 INFO [main] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: The thread pool initial size is 10

2021-01-26 10:56:14,956 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1611538815675_2243Job Transitioned from INITED to SETUP

2021-01-26 10:56:14,958 INFO [CommitterEvent Processor #0] org.apache.hadoop.mapreduce.v2.app.commit.CommitterEventHandler: Processing the event EventType: JOB_SETUP

2021-01-26 10:56:14,966 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: job_1611538815675_2243Job Transitioned from SETUP to RUNNING

2021-01-26 10:56:15,065 INFO [eventHandlingThread] org.apache.hadoop.mapreduce.jobhistory.JobHistoryEventHandler: Event Writer setup for JobId: job_1611538815675_2243, File: hdfs://Aero:8020/user/hdfs/.staging/job_1611538815675_2243/job_1611538815675_2243_1.jhist

2021-01-26 10:56:15,072 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1611538815675_2243_m_000000 Task Transitioned from NEW to SCHEDULED

2021-01-26 10:56:15,074 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1611538815675_2243_r_000000 Task Transitioned from NEW to SCHEDULED

2021-01-26 10:56:15,075 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_0 TaskAttempt Transitioned from NEW to UNASSIGNED

2021-01-26 10:56:15,076 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_r_000000_0 TaskAttempt Transitioned from NEW to UNASSIGNED

2021-01-26 10:56:15,077 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: mapResourceRequest:<memory:3072, vCores:1>

2021-01-26 10:56:15,094 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: reduceResourceRequest:<memory:16384, vCores:1>

2021-01-26 10:56:15,944 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:1 ScheduledMaps:1 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:0 ContRel:0 HostLocal:0 RackLocal:0

2021-01-26 10:56:15,975 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=4 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:63488, vCores:144> knownNMs=3

2021-01-26 10:56:15,979 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:63488, vCores:144>

2021-01-26 10:56:15,979 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:16,993 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Got allocated containers 1

2021-01-26 10:56:16,996 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e25_1611538815675_2243_01_000002 to attempt_1611538815675_2243_m_000000_0

2021-01-26 10:56:16,997 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:60416, vCores:143>

2021-01-26 10:56:16,998 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:16,998 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:1 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:17,043 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: The job-jar file on the remote FS is hdfs://Aero/user/hdfs/.staging/job_1611538815675_2243/job.jar

2021-01-26 10:56:17,046 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: The job-conf file on the remote FS is /user/hdfs/.staging/job_1611538815675_2243/job.xml

2021-01-26 10:56:17,053 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Adding #0 tokens and #1 secret keys for NM use for launching container

2021-01-26 10:56:17,053 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Size of containertokens_dob is 1

2021-01-26 10:56:17,053 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Putting shuffle token in serviceData

2021-01-26 10:56:17,089 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_0 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2021-01-26 10:56:17,093 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e25_1611538815675_2243_01_000002 taskAttempt attempt_1611538815675_2243_m_000000_0

2021-01-26 10:56:17,096 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1611538815675_2243_m_000000_0

2021-01-26 10:56:17,192 INFO [ContainerLauncher #0] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1611538815675_2243_m_000000_0 : 13562

2021-01-26 10:56:17,193 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1611538815675_2243_m_000000_0] using containerId: [container_e25_1611538815675_2243_01_000002 on NM: [bigdata003:8041]

2021-01-26 10:56:17,197 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_0 TaskAttempt Transitioned from ASSIGNED to RUNNING

2021-01-26 10:56:17,197 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1611538815675_2243_m_000000 Task Transitioned from SCHEDULED to RUNNING

2021-01-26 10:56:18,001 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=4 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:60416, vCores:143> knownNMs=3

2021-01-26 10:56:21,008 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:84992, vCores:145>

2021-01-26 10:56:21,008 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:21,274 INFO [Socket Reader #1 for port 29851] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1611538815675_2243 (auth:SIMPLE)

2021-01-26 10:56:21,308 INFO [IPC Server handler 2 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1611538815675_2243_m_27487790694402 asked for a task

2021-01-26 10:56:21,308 INFO [IPC Server handler 2 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1611538815675_2243_m_27487790694402 given task: attempt_1611538815675_2243_m_000000_0

2021-01-26 10:56:27,021 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:121856, vCores:148>

2021-01-26 10:56:27,021 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:28,031 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e25_1611538815675_2243_01_000002

2021-01-26 10:56:28,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:137216, vCores:150>

2021-01-26 10:56:28,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:28,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:1 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:28,032 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1611538815675_2243_m_000000_0: [2021-01-26 10:56:27.156]Container killed on request. Exit code is 137

[2021-01-26 10:56:27.156]Container exited with a non-zero exit code 137.

[2021-01-26 10:56:27.158]Killed by external signal

2021-01-26 10:56:28,038 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_0 transitioned from state RUNNING to FAILED, event type is TA_CONTAINER_COMPLETED and nodeId=bigdata003:8041

2021-01-26 10:56:28,039 INFO [ContainerLauncher #1] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_COMPLETED for container container_e25_1611538815675_2243_01_000002 taskAttempt attempt_1611538815675_2243_m_000000_0

2021-01-26 10:56:28,049 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: 1 failures on node bigdata003

2021-01-26 10:56:28,052 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_1 TaskAttempt Transitioned from NEW to UNASSIGNED

2021-01-26 10:56:28,052 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Added attempt_1611538815675_2243_m_000000_1 to list of failed maps

2021-01-26 10:56:29,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:1 ScheduledMaps:1 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:1 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:29,036 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=1 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:147968, vCores:152> knownNMs=3

2021-01-26 10:56:29,036 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:147968, vCores:152>

2021-01-26 10:56:29,036 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:30,039 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Got allocated containers 1

2021-01-26 10:56:30,039 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigning container Container: [ContainerId: container_e25_1611538815675_2243_01_000003, AllocationRequestId: -1, Version: 0, NodeId: bigdata002:8041, NodeHttpAddress: bigdata002:8042, Resource: <memory:3072, vCores:1>, Priority: 5, Token: Token { kind: ContainerToken, service: 192.168.212.107:8041 }, ExecutionType: GUARANTEED, ] to fast fail map

2021-01-26 10:56:30,040 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned from earlierFailedMaps

2021-01-26 10:56:30,040 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e25_1611538815675_2243_01_000003 to attempt_1611538815675_2243_m_000000_1

2021-01-26 10:56:30,040 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:144896, vCores:151>

2021-01-26 10:56:30,040 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:30,040 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:30,063 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_1 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2021-01-26 10:56:30,064 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e25_1611538815675_2243_01_000003 taskAttempt attempt_1611538815675_2243_m_000000_1

2021-01-26 10:56:30,064 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1611538815675_2243_m_000000_1

2021-01-26 10:56:30,076 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1611538815675_2243_m_000000_1 : 13562

2021-01-26 10:56:30,076 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1611538815675_2243_m_000000_1] using containerId: [container_e25_1611538815675_2243_01_000003 on NM: [bigdata002:8041]

2021-01-26 10:56:30,077 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_1 TaskAttempt Transitioned from ASSIGNED to RUNNING

2021-01-26 10:56:31,044 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=1 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:144896, vCores:151> knownNMs=3

2021-01-26 10:56:33,048 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:153088, vCores:152>

2021-01-26 10:56:33,048 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:33,141 INFO [Socket Reader #1 for port 29851] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1611538815675_2243 (auth:SIMPLE)

2021-01-26 10:56:33,153 INFO [IPC Server handler 2 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1611538815675_2243_m_27487790694403 asked for a task

2021-01-26 10:56:33,154 INFO [IPC Server handler 2 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1611538815675_2243_m_27487790694403 given task: attempt_1611538815675_2243_m_000000_1

2021-01-26 10:56:34,050 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:144896, vCores:151>

2021-01-26 10:56:34,050 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:39,059 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e25_1611538815675_2243_01_000003

2021-01-26 10:56:39,060 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:139776, vCores:151>

2021-01-26 10:56:39,060 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:39,060 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1611538815675_2243_m_000000_1: [2021-01-26 10:56:38.735]Container killed on request. Exit code is 137

[2021-01-26 10:56:38.735]Container exited with a non-zero exit code 137.

[2021-01-26 10:56:38.737]Killed by external signal

2021-01-26 10:56:39,060 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:39,061 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_1 transitioned from state RUNNING to FAILED, event type is TA_CONTAINER_COMPLETED and nodeId=bigdata002:8041

2021-01-26 10:56:39,062 INFO [ContainerLauncher #3] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_COMPLETED for container container_e25_1611538815675_2243_01_000003 taskAttempt attempt_1611538815675_2243_m_000000_1

2021-01-26 10:56:39,063 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: 1 failures on node bigdata002

2021-01-26 10:56:39,064 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_2 TaskAttempt Transitioned from NEW to UNASSIGNED

2021-01-26 10:56:39,064 INFO [Thread-60] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Added attempt_1611538815675_2243_m_000000_2 to list of failed maps

2021-01-26 10:56:40,061 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:1 ScheduledMaps:1 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:2 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:40,063 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=1 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:139776, vCores:151> knownNMs=3

2021-01-26 10:56:40,063 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:139776, vCores:151>

2021-01-26 10:56:40,063 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:41,066 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Got allocated containers 1

2021-01-26 10:56:41,066 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigning container Container: [ContainerId: container_e25_1611538815675_2243_01_000004, AllocationRequestId: -1, Version: 0, NodeId: bigdata003:8041, NodeHttpAddress: bigdata003:8042, Resource: <memory:3072, vCores:1>, Priority: 5, Token: Token { kind: ContainerToken, service: 192.168.212.108:8041 }, ExecutionType: GUARANTEED, ] to fast fail map

2021-01-26 10:56:41,066 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned from earlierFailedMaps

2021-01-26 10:56:41,067 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Assigned container container_e25_1611538815675_2243_01_000004 to attempt_1611538815675_2243_m_000000_2

2021-01-26 10:56:41,067 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:136704, vCores:150>

2021-01-26 10:56:41,067 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:41,067 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:3 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:41,068 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_2 TaskAttempt Transitioned from UNASSIGNED to ASSIGNED

2021-01-26 10:56:41,069 INFO [ContainerLauncher #4] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_LAUNCH for container container_e25_1611538815675_2243_01_000004 taskAttempt attempt_1611538815675_2243_m_000000_2

2021-01-26 10:56:41,069 INFO [ContainerLauncher #4] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Launching attempt_1611538815675_2243_m_000000_2

2021-01-26 10:56:41,082 INFO [ContainerLauncher #4] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Shuffle port returned by ContainerManager for attempt_1611538815675_2243_m_000000_2 : 13562

2021-01-26 10:56:41,083 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: TaskAttempt: [attempt_1611538815675_2243_m_000000_2] using containerId: [container_e25_1611538815675_2243_01_000004 on NM: [bigdata003:8041]

2021-01-26 10:56:41,083 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_2 TaskAttempt Transitioned from ASSIGNED to RUNNING

2021-01-26 10:56:42,069 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerRequestor: getResources() for application_1611538815675_2243: ask=1 release= 0 newContainers=0 finishedContainers=0 resourcelimit=<memory:136704, vCores:150> knownNMs=3

2021-01-26 10:56:42,442 INFO [Socket Reader #1 for port 29851] SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for job_1611538815675_2243 (auth:SIMPLE)

2021-01-26 10:56:42,459 INFO [IPC Server handler 0 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1611538815675_2243_m_27487790694404 asked for a task

2021-01-26 10:56:42,459 INFO [IPC Server handler 0 on 29851] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1611538815675_2243_m_27487790694404 given task: attempt_1611538815675_2243_m_000000_2

2021-01-26 10:56:49,082 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e25_1611538815675_2243_01_000004

2021-01-26 10:56:49,082 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=<memory:139776, vCores:151>

2021-01-26 10:56:49,082 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 1

2021-01-26 10:56:49,082 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:1 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:0 CompletedReds:0 ContAlloc:3 ContRel:0 HostLocal:1 RackLocal:0

2021-01-26 10:56:49,082 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1611538815675_2243_m_000000_2: [2021-01-26 10:56:48.152]Container killed on request. Exit code is 137

[2021-01-26 10:56:48.152]Container exited with a non-zero exit code 137.

[2021-01-26 10:56:48.153]Killed by external signal

2021-01-26 10:56:49,083 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1611538815675_2243_m_000000_2 transitioned from state RUNNING to FAILED, event type is TA_CONTAINER_COMPLETED and nodeId=bigdata003:8041
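
Note on the log above: all three attempts of the same map task (`_m_000000_0`, `_1`, `_2`) end with "Exit code is 137" and "Killed by external signal". By the usual shell convention, an exit code above 128 means the process was terminated by signal number (code - 128), so 137 corresponds to signal 9 (SIGKILL on Linux). This excerpt does not say who sent the signal; a container that exceeds its memory allocation (3072 MB here) is a common cause, but that is an assumption to verify against the NodeManager logs. The following is a minimal sketch for pulling the failed attempts and their exit codes out of an ApplicationMaster log. The function name `killed_attempts` and the regular expression are illustrative, not part of Hadoop or Kylin.

```python
import re

# Matches the "Diagnostics report" lines shown in the log above.
DIAG = re.compile(r"Diagnostics report from (attempt_\w+): .*Exit code is (\d+)")


def killed_attempts(log_text):
    """Return (attempt_id, exit_code, signal_number) for each diagnostics line."""
    results = []
    for match in DIAG.finditer(log_text):
        attempt, code = match.group(1), int(match.group(2))
        # Exit codes above 128 conventionally mean "killed by signal (code - 128)".
        signal_number = code - 128 if code > 128 else None
        results.append((attempt, code, signal_number))
    return results


sample = (
    "2021-01-26 10:56:39,060 INFO [AsyncDispatcher event handler] "
    "org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: "
    "Diagnostics report from attempt_1611538815675_2243_m_000000_1: "
    "[2021-01-26 10:56:38.735]Container killed on request. Exit code is 137"
)
print(killed_attempts(sample))
```

Running this over the full log groups the failures by attempt, which makes it easy to see that every retry of the task was killed the same way within a few seconds of starting.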