
Official tutorial:

http://docs.saltstack.cn/topics/tutorials/walkthrough.html

server1: 192.168.1.11 (master)

server2: 192.168.1.12 (minion)

server3: 192.168.1.13 (minion)

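SaltStack overview

- SaltStack is a configuration management system that maintains remote nodes in predefined states.

- SaltStack is a distributed remote execution system used to run commands and query data on remote nodes.

- SaltStack is a powerful tool that helps operations staff work more efficiently and standardize service configuration and operations.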

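Core features of Salt

- Commands are sent to remote systems in parallel rather than serially.

- It uses a secure, encrypted protocol.

- It uses the smallest and fastest network payloads possible.

- It provides a simple programming interface.

- Salt also provides a finer-grained targeting system for remote execution, so systems can be targeted not only by hostname but also by system properties.

Difference from Ansible:

The biggest difference between Salt and Ansible is that Salt has a client-side agent (the minion) on each managed host, while Ansible is agentless. The minion runs as root by default, so Salt accesses the managed hosts directly with root privileges.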

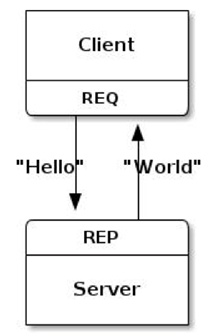

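SaltStack communication

- SaltStack uses a client/server model. Minions and the master communicate through the ZeroMQ (lightweight) message queue, with the publisher listening on port 4505 by default. ZeroMQ is a key reason SaltStack generally runs much faster than Ansible.

- The second network service run by the Salt Master is a ZeroMQ REP system, listening on port 4506 by default. It receives the replies (return data) sent back by the minions.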
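A plain TCP connection test is a quick way to check these two ports from a minion. The Python sketch below assumes the master address 192.168.1.11 from the host list above. `port_open` is a hypothetical helper written for this walkthrough and is not part of Salt. It only proves that something accepts TCP connections on the port.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # connection refused, timed out, unreachable, or name resolution failed
        return False


# Assumption: the master from this walkthrough; 4505 = publish, 4506 = return
SALT_MASTER = "192.168.1.11"
SALT_PORTS = {4505: "publish", 4506: "return"}
```

On a minion, `[port_open(SALT_MASTER, p) for p in SALT_PORTS]` should give `[True, True]` once salt-master is running and no firewall blocks either port.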

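Installation and configuration

- Download the repository package from the official site, or from a mirror in China (such as the Tsinghua University, USTC, or Aliyun mirrors):

yum install https://mirrors.aliyun.com/saltstack/yum/redhat/salt-repo-latest-2.el7.noarch.rpm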

Set up the official yum repository

The command above creates a new yum repository file. Its default mirror is not very fast, so point it at the Aliyun mirror instead:

[root@rhel7 yum.repos.d]# ls

redhat.repo salt-latest.repo

[root@rhel7 yum.repos.d]# cat salt-latest.repo

[salt-latest]

name=SaltStack Latest Release Channel for RHEL/Centos $releasever

baseurl=https://mirrors.aliyun.com/saltstack/yum/redhat/7/$basearch/latest ##Aliyun mirror address

failovermethod=priority

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/saltstack-signing-key

After the change, check that the repository is configured:

[root@rhel7 yum.repos.d]# yum repolist

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

repo id repo name status

salt-latest/x86_64 SaltStack Latest Release Channel for RHEL/Centos 7Serv 83

repolist: 83

Do the same configuration on server2.

Master node configuration: server1

[root@rhel7 yum.repos.d]# yum install -y salt-master

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Resolving Dependencies

--> Running transaction check

---> Package salt-master.noarch 0:3000.3-1.el7 will be installed

......(output omitted)

--> Finished Dependency Resolution

Error: Package: salt-3000.3-1.el7.noarch (salt-latest) ##note that the dependencies are not resolved here

Requires: python-markupsafe

Error: Package: salt-3000.3-1.el7.noarch (salt-latest)

Requires: python-jinja2

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest

Once these dependencies (python-markupsafe and python-jinja2) are resolved, the installation succeeds.

Enable it at boot:

[root@server1 Downloads]# systemctl enable salt-master

Created symlink from /etc/systemd/system/multi-user.target.wants/salt-master.service to /usr/lib/systemd/system/salt-master.service.

Start it:

[root@server1 Downloads]# systemctl start salt-master

Install lsof to see which ports are open:

[root@server1 Downloads]# yum install -y lsof

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Nothing to do

Port that accepts requests:

[root@server1 Downloads]# lsof -i :4506

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 11417 root 23u IPv4 82603 0t0 TCP *:4506 (LISTEN)

Publish port (message queue):

[root@server1 Downloads]# lsof -i :4505

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 11411 root 15u IPv4 82565 0t0 TCP *:4505 (LISTEN)

Minion node configuration: server2, server3

yum install -y salt-minion #install the minion

vim /etc/salt/minion #edit the minion configuration file

master: 192.168.1.11 #point the minion at the master

systemctl enable salt-minion #enable the minion at boot

systemctl start salt-minion #start the minion

After configuring the minions, check the master:

[root@server1 Downloads]# lsof -i :4506 #request port

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 14705 root 23u IPv4 104656 0t0 TCP *:4506 (LISTEN)

salt-mast 14705 root 30u IPv4 115223 0t0 TCP server1:4506->192.168.1.12:51154 (ESTABLISHED) ##connection from server2 to server1

salt-mast 14705 root 31u IPv4 115229 0t0 TCP server1:4506->192.168.1.13:45888 (ESTABLISHED) ##connection from server3 to server1

[root@server1 Downloads]# lsof -i :4505 #publish port (message queue)

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 14699 root 15u IPv4 104627 0t0 TCP *:4505 (LISTEN)

At this point the master has to accept the minions' keys before it can manage them:

[root@server1 Downloads]# salt-key -L

Accepted Keys:

Denied Keys:

Unaccepted Keys:

server2

server3

Rejected Keys:

Accept the keys (-A accepts all pending keys):

[root@server1 Downloads]# salt-key -A

The following keys are going to be accepted:

Unaccepted Keys:

server2

server3

Proceed? [n/Y] y

Key for minion server2 accepted.

Key for minion server3 accepted.

Check again:

[root@server1 Downloads]# salt-key -L

Accepted Keys:

server2

server3

Denied Keys:

Unaccepted Keys:

Rejected Keys:

[root@server1 Downloads]# lsof -i :4506 #request port

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 14705 root 23u IPv4 104656 0t0 TCP *:4506 (LISTEN)

[root@server1 Downloads]# lsof -i :4505 #publish port: persistent connections are now established, so the minions receive whatever the master publishes

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

salt-mast 14699 root 15u IPv4 104627 0t0 TCP *:4505 (LISTEN)

salt-mast 14699 root 17u IPv4 118817 0t0 TCP server1:4505->192.168.1.12:53022 (ESTABLISHED)

salt-mast 14699 root 18u IPv4 118822 0t0 TCP server1:4505->192.168.1.13:41772 (ESTABLISHED)

Note: if you change a minion's hostname, promptly update /etc/salt/minion_id (or delete it) and restart the minion. Salt reads the minion ID from this file:

[root@server2 salt]# cat minion_id

server2

[root@server2 salt]# pwd

/etc/salt

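View more detailed process information:

Install python-setproctitle so that the salt-master processes show descriptive names: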

[root@server1]# yum install -y python-setproctitle.x86_64

Restart Salt:

[root@server1]# systemctl restart salt-master.service

[root@server1]# ps ax

...

19546 ? S 0:00 /usr/bin/python /usr/share/PackageKit/helpers/yum/yu

19554 ? Ss 0:00 /usr/bin/python /usr/bin/salt-master ProcessManager

19565 ? S 0:00 /usr/bin/python /usr/bin/salt-master Multiprocessing

Publisher (message queue):

19578 ? Sl 0:00 /usr/bin/python /usr/bin/salt-master ZeroMQPubServer

19579 ? S 0:00 /usr/bin/python /usr/bin/salt-master EventPublisher

19582 ? S 0:00 /usr/bin/python /usr/bin/salt-master Maintenance

Request server:

19583 ? S 0:00 /usr/bin/python /usr/bin/salt-master ReqServer_Proce

Worker queue and worker processes:

19584 ? Sl 0:00 /usr/bin/python /usr/bin/salt-master MWorkerQueue

19585 ? R 0:00 /usr/bin/python /usr/bin/salt-master MWorker-0

19586 ? R 0:00 /usr/bin/python /usr/bin/salt-master MWorker-1

19593 ? Sl 0:00 /usr/bin/python /usr/bin/salt-master FileserverUpdat

19594 ? R 0:00 /usr/bin/python /usr/bin/salt-master MWorker-2

19595 ? R 0:00 /usr/bin/python /usr/bin/salt-master MWorker-3

19598 ? R 0:00 /usr/bin/python /usr/bin/salt-master MWorker-4

19897 pts/1 R+ 0:00 ps ax

From the master, test whether the minions respond to test.ping:

[root@server1 ~]# salt '*' test.ping #* matches every minion whose key has been accepted

server3:

True

server2:

True

Test a specific minion by its ID:

[root@server1 ~]# salt server2 test.ping

server2:

True

Remote commands currently run as the superuser (root):

[root@server1 ~]# salt server2 cmd.run pwd

server2:

/root

[root@server1 ~]# salt server2 cmd.run 'touch /tmp/file'

server2:

[root@server2 yum.repos.d]# ls /tmp/file

/tmp/file

SaltStack remote execution

- Test connectivity

- Execute commands remotely

The salt command

A salt command consists of three main parts:

salt '<target>' <function> [arguments]

- target: which minions to run on. By default the target is a glob matched against the minion ID:

salt '*' test.ping

A target can also be a regular expression:

salt -E 'server[1-3]' test.ping

or an explicit list:

salt -L 'server2,server3' test.ping

- function: a function provided by a module. Salt ships with a large number of built-in functions. The Chinese manual is at http://docs.saltstack.cn/

[root@server1 ~]# salt '*' cmd.run 'uname -a'

server3:

Linux server3 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

server2:

Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

- arguments: the arguments passed to the function, separated by spaces.

salt 'server2' sys.doc pkg #show the module documentation

salt 'server2' pkg.install httpd

salt 'server2' pkg.remove httpd #remote installation is this simple

[root@server1 ~]# salt 'server2' pkg.remove httpd

server2:

----------

httpd:

----------

new:

old:

2.4.6-88.el7

[root@server1 ~]# salt 'server2' pkg.install httpd

server2:

----------

httpd:

----------

new:

2.4.6-88.el7

old:
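The three target types above can be imitated in a few lines of Python to see which minion IDs each expression selects. This is an illustrative sketch, not Salt's matcher code. It assumes glob targets behave like `fnmatch`, `-E` like a regular expression applied from the start of the ID (`re.match`), and `-L` like an exact comma-separated list.

```python
import fnmatch
import re

MINIONS = ["server2", "server3"]  # accepted minion IDs in this walkthrough


def match_glob(pattern, minions):
    """Default target type: shell-style glob against the minion ID."""
    return [m for m in minions if fnmatch.fnmatch(m, pattern)]


def match_pcre(pattern, minions):
    """salt -E: regular expression matched from the start of the minion ID."""
    return [m for m in minions if re.match(pattern, m)]


def match_list(spec, minions):
    """salt -L: comma-separated list of exact minion IDs."""
    wanted = {item.strip() for item in spec.split(",")}
    return [m for m in minions if m in wanted]
```

For example, `match_pcre('server[1-3]', MINIONS)` selects both minions, just as `salt -E 'server[1-3]' test.ping` did.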

Commands are published to the minions through port 4505, and the results are returned through port 4506.

Salt's built-in execution modules (a useful reference when writing your own files):

http://docs.saltstack.cn/ref/modules/all/index.html

Salt stores its cached data in the /var/cache/salt directory:

[root@server2 yum.repos.d]# cd /var/cache/salt

[root@server2 salt]# tree .

.

└── minion

├── extmods

└── proc

3 directories, 0 files

Writing a custom execution module

Besides Salt's built-in execution modules, you can also write your own.

[root@server1 ~]# vim /etc/salt/master

......

file_roots:

base:

- /srv/salt ##Salt's base directory

Remember to restart the service:

[root@server1 ~]# systemctl restart salt-master

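Create the directory:

[root@server1 ~]# mkdir -p /srv/salt/_modules ##fixed location for custom execution modules

[root@server1 ~]# cd /srv/salt/_modules ##/srv/salt is Salt's base directory, where all state data is defined

Write the module and run it:

[root@server1 _modules]# vim mydisk.py

def df():

    return __salt__['cmd.run']('df -h') ##note the double underscores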

[root@server1 _modules]# salt server2 saltutil.sync_modules ##sync to server2

server2:

- modules.mydisk

Check on server2:

[root@server2 salt]# tree .

.

└── minion

├── extmods

│ └── modules

│ └── mydisk.py

├── files

│ └── base ##the base directory, i.e. /srv/salt

│ └── _modules

│ └── mydisk.py

├── module_refresh

└── proc

7 directories, 3 files

[root@server2 salt]# pwd

/var/cache/salt

The module file has been stored on server2 under /var/cache/salt/. Now call the df function of the mydisk module remotely:

[root@server1 _modules]# salt server2 mydisk.df

server2:

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 17G 3.7G 14G 22% /

devtmpfs 894M 0 894M 0% /dev

tmpfs 910M 140K 910M 1% /dev/shm

tmpfs 910M 11

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/nvme0n1p1 1014M 179M 836M 18% /boot

tmpfs 182M 12K 182M 1% /run/user/42

tmpfs 182M 0 182M 0% /run/user/0

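Configuration management

- The core of the Salt state system is the SLS, or SaLt State file.

- An SLS file describes the state a system should be in, and it expresses this data in a simple format. This is usually called configuration management.

Two ways to write an SLS file: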

[root@server1 _modules]# cd /srv/salt ##where the SLS files live

[root@server1 salt]# vim apache.sls

[root@server1 salt]# cat apache.sls

httpd: ##ID declaration (here it is also the package name)

pkg: ##state module declaration

- installed ##function declaration

Or, written in a single line:

[root@server1 salt]# cat apache.sls

httpd:

pkg.installed

Push it to server2. Note how the state is named in the command (the SLS name without the .sls suffix); don't get it wrong:

[root@server1 salt]# salt server2 state.sls apache

server2:

----------

ID: httpd

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: httpd

Started: 16:53:51.502130

Duration: 22266.378 ms

Changes:

----------

httpd:

----------

new:

2.4.6-88.el7

old:

Summary for server2

------------

Succeeded: 1 (changed=1)

Failed: 0

------------

server2 first receives the pushed SLS file and then performs the installation:

[root@server2 salt]# tree .

.

└── minion

├── accumulator

├── extmods

│ └── modules

│ ├── mydisk.py

│ └── mydisk.pyc

├── files

│ └── base

│ ├── apache.sls ##here it is

│ └── _modules

│ └── mydisk.py

├── highstate.cache.p

├── module_refresh

├── proc

└── sls.p

8 directories, 7 files

Installing multiple packages with one state:

Write the file:

[root@server1 salt]# cat web.sls

web:

pkg.installed:

- pkgs:

- httpd

- php

Push it:

[root@server1 salt]# salt server3 state.sls web

server3:

----------

ID: web

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: php

The following packages were already installed: httpd

Started: 17:17:57.767480

Duration: 23622.096 ms

Changes:

----------

libzip:

----------

new:

0.10.1-8.el7

old:

php:

----------

new:

5.4.16-46.el7

old:

php-cli:

----------

new:

5.4.16-46.el7

old:

php-common:

----------

new:

5.4.16-46.el7

old:

Summary for server3

------------

Succeeded: 1 (changed=1)

Failed: 0

------------

Total states run: 1

Total run time: 23.622 s

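- SLS file naming:

1. SLS files end with the ".sls" suffix, but the suffix is omitted when they are called.

2. Subdirectories are a good way to organize SLS files.

3. An init.sls file inside a subdirectory is the entry file and represents the subdirectory itself, so apache/init.sls represents apache.

4. If both "apache/init.sls" and "apache.sls" exist, "apache/init.sls" is ignored and "apache.sls" is used to represent apache.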
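Rules 1, 3, and 4 add up to a small lookup procedure. The Python sketch below models it under stated assumptions and is not Salt's fileserver code. Dots in the SLS name become directory separators. `<name>.sls` is tried first, and `<name>/init.sls` only if that file does not exist. `resolve_sls` and the sample tree are hypothetical.

```python
def resolve_sls(name: str, existing: set):
    """Map an SLS name such as 'apache.web' to a path relative to /srv/salt.

    `existing` holds the relative paths present in the state tree.
    Returns None when neither candidate file exists.
    """
    base = "/".join(name.split("."))  # 'apache.web' -> 'apache/web'
    for candidate in (base + ".sls", base + "/init.sls"):
        if candidate in existing:  # '<name>.sls' wins over '<name>/init.sls' (rule 4)
            return candidate
    return None


# Hypothetical state tree for illustration
TREE = {"apache.sls", "apache/init.sls", "apache/web.sls", "nginx/init.sls"}
```

With this tree, `apache` resolves to `apache.sls` and not to `apache/init.sls`. That is why the next step moves `apache.sls` into the `apache` directory.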

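As the number of SLS files grows, organize them like this:

[root@server1 salt]# mkdir apache ##create a directory under the base directory

[root@server1 salt]# mv apache.sls apache

[root@server1 salt]# mv web.sls apache ##move the SLS files into it

[root@server1 salt]# salt server2 state.sls apache.web ##separate path components with . when pushing

Alternatively, rename the file to init.sls so that the directory name alone refers to it (the salt command can then be run from any directory):

[root@server1 apache]# mv web.sls init.sls ##entry file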

[root@server1 apache]# cd

[root@server1 ~]# salt server2 state.sls apache

server2:

----------

ID: web

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 17:34:22.339829

Duration: 4003.125 ms

Changes:

Summary for server2

------------

Succeeded: 1

Failed: 0

------------

Total states run: 1

Total run time: 4.003 s

Use init.sls to start httpd and enable it at boot:

[root@server1 apache]# vim init.sls

web:

pkg.installed:

- pkgs:

- httpd

- php

service.running:

- name: httpd

- enable: True

[root@server1 apache]# salt server2 state.sls apache

server2:

----------

ID: web

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 18:14:24.639825

Duration: 3726.521 ms

Changes:

----------

ID: web

Function: service.running

Name: httpd

Result: True

Comment: Service httpd has been enabled, and is in the desired state

Started: 18:14:28.368555

Duration: 546.061 ms

Changes:

----------

httpd:

True

Summary for server2

------------

Succeeded: 2 (changed=1)

Failed: 0

------------

Total states run: 2

Total run time: 4.273 s

Check that httpd is enabled at boot:

[root@server2 salt]# systemctl is-enabled httpd

enabled

[root@server2 salt]# tree .

.

└── minion

├── accumulator

├── extmods

│ └── modules

│ ├── mydisk.py

│ └── mydisk.pyc

├── files

│ └── base

│ ├── apache

│ │ └── init.sls

│ ├── apache.sls

│ └── _modules

│ └── mydisk.py

├── highstate.cache.p

├── module_refresh

├── pkg_refresh

├── proc

└── sls.p

9 directories, 9 files

Manage the configuration file:

[root@server1 apache]# vim init.sls

web:

pkg.installed:

- pkgs:

- httpd

- php

file.managed:

- source: salt://apache/files/httpd.conf ##source: /srv/salt/apache/files/httpd.conf

- name: /etc/httpd/conf/httpd.conf ##destination

service.running:

- name: httpd

- enable: True

Create the directory that the source path points to:

[root@server1 apache]# mkdir files

Copy the httpd configuration file from server2 into that directory:

[root@server1 apache]# scp 192.168.1.12:/etc/httpd/conf/httpd.conf ./files

root@192.168.1.12's password:

httpd.conf 100% 11KB 5.2MB/s 00:00

Change the listening port:

[root@server1 files]# vim httpd.conf

#Listen 12.34.56.78:80

Listen 8080 ##change the default port 80 to 8080

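Add a watch requisite so that httpd is restarted whenever the managed configuration file in the web declaration changes.

Note: each state module can be used only once under a given ID declaration, otherwise the states conflict. For example, web can contain only one file state. To manage another file, write another ID declaration.

With watch added: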

[root@server1 apache]# cat init.sls

web:

pkg.installed:

- pkgs:

- httpd

- php

file.managed:

- source: salt://apache/files/httpd.conf

- name: /etc/httpd/conf/httpd.conf

service.running:

- name: httpd

- enable: True

- watch: ##added here

- file: web
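The watch requisite works like this: the watched state runs first, and if it reports changes, the watching service reacts. The Python sketch below is a toy model of that behaviour for this example and is not Salt's implementation. `file_managed` and `service_running` are hypothetical functions. The real `service.running` also starts a stopped service and supports more options.

```python
def file_managed(current: bytes, desired: bytes) -> dict:
    """Toy file.managed: report a change only when the content would differ."""
    changes = {"diff": "updated"} if current != desired else {}
    return {"result": True, "changes": changes}


def service_running(watched: list, reload: bool = False) -> str:
    """Toy service.running with a watch requisite on the `watched` state results."""
    if any(state["changes"] for state in watched):
        # a watched state changed: reload if requested, otherwise restart
        return "reload" if reload else "restart"
    return "no action"  # nothing watched changed; the running service is left alone
```

This also explains the `reload: True` option used later in the nginx state. With it, a changed configuration file triggers a reload instead of a full restart.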

Run:

[root@server1 apache]# salt server2 state.sls apache

server2:

----------

ID: web

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 18:36:48.026370

Duration: 3586.607 ms

Changes:

----------

ID: web

Function: file.managed

Name: /etc/httpd/conf/httpd.conf

Result: True

Comment: File /etc/httpd/conf/httpd.conf updated

Started: 18:36:51.618049

Duration: 60.599 ms

Changes:

----------

diff:

---

+++

@@ -39,7 +39,7 @@

# prevent Apache from glomming onto all bound IP addresses.

#

#Listen 12.34.56.78:80

-Listen 80 ##original port

+Listen 8080 ##new port

#

# Dynamic Shared Object (DSO) Support

----------

ID: web

Function: service.running

Name: httpd

Result: True

Comment: Service restarted

Started: 18:36:51.796104

Duration: 1707.625 ms

Changes:

----------

httpd:

True

Summary for server2

------------

Succeeded: 3 (changed=2)

Failed: 0

------------

Total states run: 3

Total run time: 5.355 s

On server2, confirm that the port has changed:

[root@server2 salt]# netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp6 0 0 :::8080 :::* LISTEN 12090/httpd

It can also be written another way:

[root@server1 apache]# cat init.sls

web:

pkg.installed:

- pkgs:

- httpd

- php

service.running:

- name: httpd

- enable: True

- watch:

- file: /etc/httpd/conf/httpd.conf

/etc/httpd/conf/httpd.conf: ##the file path (formerly the name) is now the ID declaration

file.managed: ##the file state is split out into its own declaration

- source: salt://apache/files/httpd.conf

Configuring Nginx (server3):

[root@server1 salt]# mkdir nginx

[root@server1 salt]# cd nginx/

[root@server1 nginx]# vim init.sls

nginx-install: ##install

pkg.installed: ##install the build dependencies

- pkgs:

- openssl-devel

- pcre-devel

- gcc

file.managed: ##push the source tarball

- name: /mnt/nginx-1.18.0.tar.gz

- source: salt://nginx/files/nginx-1.18.0.tar.gz

cmd.run: ##compile and install

- name: cd /mnt/ && tar zxf nginx-1.18.0.tar.gz && cd nginx-1.18.0 && sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --prefix=/usr/local/nginx --with-http_ssl_module &> /dev/null && make &> /dev/null && make install &> /dev/null

- creates: /usr/local/nginx ##if this path already exists, skip the build

nginx-service:

file.managed: ##push the systemd unit file

- name: /usr/lib/systemd/system/nginx.service

- source: salt://nginx/files/nginx.service

service.running: ##start the service

- name: nginx
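The `creates` argument is what makes the build step safe to re-run: the command is skipped once the given path exists. The Python sketch below is a toy model of that check. `cmd_run_creates` is hypothetical, and a temporary directory stands in for `/usr/local/nginx`.

```python
import os
import shlex
import subprocess
import tempfile


def cmd_run_creates(command: str, creates: str) -> str:
    """Toy cmd.run with `creates`: run `command` only if `creates` does not exist."""
    if os.path.exists(creates):
        return "skipped"  # already built; nothing to do, so the state stays idempotent
    subprocess.run(command, shell=True, check=True)
    return "ran"


if __name__ == "__main__":
    # Hypothetical demo: a temporary directory stands in for /usr/local/nginx
    target = os.path.join(tempfile.mkdtemp(), "nginx")
    build = "mkdir " + shlex.quote(target)
    print(cmd_run_creates(build, target))  # first run executes the command
    print(cmd_run_creates(build, target))  # second run is skipped
```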

Download the source tarball, write the systemd unit file, and place both in the expected directory:

[root@server1 nginx]# mkdir files

[root@server1 Downloads]# mv nginx-1.18.0.tar.gz /srv/salt/nginx/files/

[root@server1 files]# vim nginx.service

[Unit]

Description=The NGINX HTTP and reverse proxy server

After=syslog.target network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=forking

PIDFile=/usr/local/nginx/logs/nginx.pid

ExecStartPre=/usr/local/nginx/sbin/nginx -t

ExecStart=/usr/local/nginx/sbin/nginx

ExecReload=/usr/local/nginx/sbin/nginx -s reload

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Run:

[root@server1 nginx]# salt server3 state.sls nginx

server3:

----------

ID: nginx-install

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 19:21:21.533896

Duration: 3654.499 ms

Changes:

----------

ID: nginx-install

Function: file.managed

Name: /mnt/nginx-1.18.0.tar.gz

Result: True

Comment: File /mnt/nginx-1.18.0.tar.gz updated

Started: 19:21:25.194941

Duration: 190.119 ms

Changes:

----------

diff:

New file

mode:

0644

...

Summary for server3

------------

Succeeded: 5 (changed=3)

Failed: 0

------------

Total states run: 5

Total run time: 60.242 s

Verify on server3:

14314 ? Ss 0:00 nginx: master process /usr/local/nginx/sbin/nginx

14315 ? S 0:00 nginx: worker process

Add the configuration file:

[root@server1 nginx]# cat init.sls

nginx-install:

pkg.installed:

- pkgs:

- openssl-devel

- pcre-devel

- gcc

file.managed:

- name: /mnt/nginx-1.18.0.tar.gz

- source: salt://nginx/files/nginx-1.18.0.tar.gz

cmd.run:

- name: cd /mnt/ && tar zxf nginx-1.18.0.tar.gz && cd nginx-1.18.0 && sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --prefix=/usr/local/nginx --with-http_ssl_module &> /dev/null && make &> /dev/null && make install &> /dev/null

- creates: /usr/local/nginx

/usr/local/nginx/conf/nginx.conf: ##push the configuration file

file.managed:

- source: salt://nginx/files/nginx.conf

nginx-service:

file.managed:

- name: /usr/lib/systemd/system/nginx.service

- source: salt://nginx/files/nginx.service

service.running:

- name: nginx

- enable: True ##enable at boot

- reload: True ##reload (instead of restart) when a watched file changes

- watch: ##watch the configuration file

- file: /usr/local/nginx/conf/nginx.conf

[root@server1 files]# vim nginx.conf

worker_processes 2; ##set two worker processes in the configuration file

[root@server1 files]# salt server3 state.sls nginx

Verify on server3:

14314 ? Ss 0:00 nginx: master process /usr/local/nginx/sbin/nginx

14315 ? S 0:00 nginx: worker process

14910 ? S 0:00 [kworker/0:2]

14984 ? S 0:00 [kworker/0:0]

15037 ? R 0:00 [kworker/0:1]

15083 ? S 0:00 sleep 60

15129 pts/0 R+ 0:00 ps ax

Using include:

nginx-install:

pkg.installed:

- pkgs:

- openssl-devel

- pcre-devel

- gcc

file.managed:

- name: /mnt/nginx-1.18.0.tar.gz

- source: salt://nginx/files/nginx-1.18.0.tar.gz

cmd.run:

- name: cd /mnt/ && tar zxf nginx-1.18.0.tar.gz && cd nginx-1.18.0 && sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --prefix=/usr/local/nginx --with-http_ssl_module &> /dev/null && make &> /dev/null && make install &> /dev/null

- creates: /usr/local/nginx

include: ##include pulls in another SLS file; move the remaining states into that file

- nginx.service ##i.e. the nginx/service.sls file

Create the included file:

[root@server1 nginx]# vim service.sls

/usr/local/nginx/conf/nginx.conf:

file.managed:

- source: salt://nginx/files/nginx.conf

nginx-service:

file.managed:

- name: /usr/lib/systemd/system/nginx.service

- source: salt://nginx/files/nginx.service

service.running:

- name: nginx

- enable: True

- reload: True

- watch:

- file: /usr/local/nginx/conf/nginx.conf

Run it. As the output shows, the states from the included file run first:

[root@server1 nginx]# salt server3 state.sls nginx

server3:

----------

ID: /usr/local/nginx/conf/nginx.conf

Function: file.managed

Result: True

Comment: File /usr/local/nginx/conf/nginx.conf is in the correct state

Started: 21:19:07.209442

Duration: 65.347 ms

Changes:

----------

ID: nginx-service

Function: file.managed

Name: /usr/lib/systemd/system/nginx.service

Result: True

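YAML syntax

- Rule 1: indentation

Salt expects each indentation level to consist of two spaces. Do not use tabs.

- Rule 2: colons

In YAML, a dictionary key is written as a string ending with a colon, followed by a space and then the value:

my_key: my_value

- Rule 3: dashes

To write a list item, use a single dash followed by a space:

- list_value_one

- list_value_two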
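Mistakes against rules 1 and 3 are easy to miss in a terminal. The Python sketch below is a hypothetical lint that checks only leading whitespace and list dashes. It is not a YAML parser and is not part of Salt.

```python
import re

# A dash at the start of the content that is not followed by whitespace or another dash
_BAD_DASH = re.compile(r"-[^\s-]")


def check_sls_indentation(text: str) -> list:
    """Return (line_number, problem) pairs for the indentation and dash rules."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        content = line.lstrip()
        leading = line[: len(line) - len(content)]
        if not content:
            continue  # blank lines are fine
        if "\t" in leading:
            problems.append((lineno, "tab in indentation"))
        elif len(leading) % 2:
            problems.append((lineno, "indentation is not a multiple of two spaces"))
        if _BAD_DASH.match(content):
            problems.append((lineno, "dash must be followed by a space"))
    return problems
```

Running it on the list above flags the second item if it is written as `-list_value_two` without the space.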

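The top file

In Salt, the file that maps groups of machines on the network to the configuration roles applied to them is called the top file. By default it is named top.sls, because it always sits at the "top" of the directory hierarchy that contains the state files. That directory hierarchy is called the state tree.

Create the top.sls file: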

[root@server1 salt]# vim top.sls

base:

'server2': ##apply the apache state to server2

- apache

'server3': ##apply the nginx state to server3

- nginx

[root@server1 salt]# pwd ##in the base directory

/srv/salt

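Salt then looks in the base directory for apache.sls (or apache/init.sls) and nginx.sls (or nginx/init.sls).

The base directory is set by file_roots in the master configuration file.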
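During a highstate, each minion in effect checks which top-file entries match its ID and then applies the SLS files listed under them. The sketch below models only the default glob matching, using a Python dict copied from the top.sls above. `states_for` is hypothetical and ignores other matchers (such as `match: grain`) and multiple environments.

```python
import fnmatch

# Mirrors the top.sls above: environment -> {glob target: [SLS names]}
TOP = {
    "base": {
        "server2": ["apache"],
        "server3": ["nginx"],
    }
}


def states_for(minion_id: str, top: dict, env: str = "base") -> list:
    """Return the SLS names a minion receives from a glob-targeted top file (sketch)."""
    states = []
    for target, sls_names in top.get(env, {}).items():
        if fnmatch.fnmatch(minion_id, target):
            for sls in sls_names:
                if sls not in states:  # apply each SLS at most once
                    states.append(sls)
    return states
```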

Apply the top file with a highstate:

[root@server1 salt]# salt '*' state.highstate

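Grains and pillar in detail

Grains

- Grains are a SaltStack component stored on the minion.

- When salt-minion starts, it collects this data and stores it statically in grains. The data is updated only when the minion restarts or the grains are explicitly refreshed.

- Because grains are static data, changing them often is not recommended.

- Use cases:

Information queries, for example as a data source for a CMDB

Targeting: matching minions

Configuration management: use in state files

Information queries

Grains can be used to query a minion's IP addresses, FQDN, and similar information.

Grains available by default: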

[root@server1 salt]# salt '*' grains.ls

server2:

- SSDs

- biosreleasedate

- biosversion

- cpu_flags

- cpu_model

- cpuarch

- cwd

- disks

- dns

- domain

- fqdn

- fqdn_ip4

- fqdn_ip6

Show the values of all grains:

[root@server1 salt]# salt server3 grains.items

server3:

----------

SSDs:

- dm-0

- dm-1

- nvme0n1

biosreleasedate:

07/29/2019

biosversion:

6.00

Get a single value (use grains.item instead of grains.items):

[root@server1 salt]# salt server3 grains.item dns

server3:

----------

dns:

----------

domain:

ip4_nameservers:

- 192.168.1.1

- 114.114.114.114

ip6_nameservers:

nameservers:

- 192.168.1.1

- 114.114.114.114

options:

search:

sortlist:

Custom grains

Grains can be set statically in the minion configuration file (/etc/salt/minion):

grains:

roles: apache

#- webserver

#- memcache

Restart the minion so the change takes effect:

[root@server2 salt]# systemctl restart salt-minion

Query it from server1:

[root@server1 salt]# salt server2 grains.item roles

server2:

----------

roles:

apache

Grains can also be defined in /etc/salt/grains, which avoids restarting the service:

[root@server3 ~]# cat /etc/salt/grains

roles: nginx

[root@server1 salt]# salt server3 saltutil.sync_grains ##sync

server3:

[root@server1 salt]# salt server3 grains.item roles ##check

server3:

----------

roles:

nginx

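Writing a grains module

Create a _grains directory under the base directory on the master and write the module there (the sync output below shows it was saved as _grains/my_grain.py):

[root@server1 salt]# mkdir _grains

def my_grains():

    grains = {}

    grains['salt'] = 'stack'

    grains['hello'] = 'world'

    return grains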
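When the minion loads this module, Salt calls the public functions in it and merges each returned dictionary into the minion's grains. The Python sketch below imitates that merge so the function can be tried without a minion. `merge_custom_grains` is a hypothetical helper. It deliberately ignores Salt's precedence rules between core grains, custom modules, /etc/salt/grains, and the minion configuration.

```python
def my_grains():
    """Same logic as _grains/my_grain.py: return a dict of extra grains."""
    grains = {}
    grains['salt'] = 'stack'
    grains['hello'] = 'world'
    return grains


def merge_custom_grains(core: dict, *grain_funcs) -> dict:
    """Sketch: update a copy of `core` with the dict returned by each grain function."""
    merged = dict(core)
    for func in grain_funcs:
        merged.update(func())  # in this sketch, later keys overwrite earlier ones
    return merged
```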

Sync it to all minions:

[root@server1 _grains]# salt '*' saltutil.sync_grains

server3:

- grains.my_grain

server2:

- grains.my_grain

Query the new grains:

[root@server1 _grains]# salt 'server2' grains.item hello

server2:

----------

hello:

world

[root@server1 _grains]# salt 'server2' grains.item salt

server2:

----------

salt:

stack

Check on server2 that the module was synced:

[root@server2 salt]# pwd

/var/cache/salt

[root@server2 salt]# tree .

.

└── minion

├── accumulator

├── extmods

│ ├── grains

│ │ ├── my_grain.py

│ │ └── my_grain.pyc

│ └── modules

│ ├── mydisk.py

│ └── mydisk.pyc

├── files

│ └── base

│ ├── apache

│ │ ├── files

│ │ │ └── httpd.conf

│ │ └── init.sls

│ ├── apache.sls

│ ├── _grains

│ │ └── my_grain.py ##the synced file is here

│ ├── _modules

│ │ └── mydisk.py

│ └── top.sls

├── highstate.cache.p

├── module_refresh

├── pkg_refresh

├── proc

└── sls.p

12 directories, 14 files

Targeting with grains

[root@server1 _grains]# salt -G roles:apache cmd.run hostname

server2:

server2

[root@server1 _grains]# salt -G roles:nginx cmd.run hostname

server3:

server3

[root@server1 _grains]# salt -G hello:world cmd.run hostname

server2:

server2

server3:

server3

[root@server1 _grains]# salt -G salt:stack cmd.run hostname

server2:

server2

server3:

server3
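`salt -G` compares a `key:value` expression with each minion's grains. The Python sketch below approximates that for flat grains. The value part is treated as a glob, and a list-valued grain matches if any element does. `grain_match`, `target_by_grain`, and the abbreviated `GRAINS` sample (taken from the outputs above) are illustrations. Salt's own matcher also handles nested keys.

```python
import fnmatch

# Grains seen in the outputs above (abbreviated)
GRAINS = {
    "server2": {"roles": "apache", "hello": "world", "salt": "stack"},
    "server3": {"roles": "nginx", "hello": "world", "salt": "stack"},
}


def grain_match(grains: dict, expr: str, delimiter: str = ":") -> bool:
    """Sketch of `salt -G key:value` for flat grains; the value is a glob pattern."""
    key, sep, pattern = expr.partition(delimiter)
    if not sep or key not in grains:
        return False
    value = grains[key]
    values = value if isinstance(value, list) else [value]
    return any(fnmatch.fnmatch(str(v), pattern) for v in values)


def target_by_grain(expr: str) -> list:
    """Return the minion IDs whose grains match `expr`."""
    return [mid for mid, g in GRAINS.items() if grain_match(g, expr)]
```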

Matching in the top file:

[root@server1 salt]# vim top.sls

base:

'roles:apache':

- match: grain

- apache

'roles:nginx':

- match: grain

- nginx

Note how the top file is applied:

[root@server1 salt]# salt '*' state.highstate

server2:

......

------------

Total states run: 6

Total run time: 6.014 s

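Pillar

- Like grains, pillar is a data system, but it serves different use cases.

- Pillar data is stored on the master and generated dynamically. It is mainly used for private or sensitive information (such as usernames and passwords), and a given piece of data can be made visible only to specific minions.

- Pillar is better suited for use in configuration management.

Declaring pillar

Pillar files must not be placed under /srv/salt, because any minion can fetch the files there.

Define the pillar base directory in the master configuration file: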

[root@server1 salt]# vim /etc/salt/master

##### Pillar settings #####

##########################################

# Salt Pillars allow for the building of global data that can be made selectively

# available to different minions based on minion grain filtering. The Salt

# Pillar is laid out in the same fashion as the file server, with environments,

# a top file and sls files. However, pillar data does not need to be in the

# highstate format, and is generally just key/value pairs.

pillar_roots:

base:

- /srv/pillar

#ext_pillar:

# - hiera: /etc/hiera.yaml

# - cmd_yaml: cat /etc/salt/yaml

Then restart the master service:

[root@server1 pillar]# systemctl restart salt-master.service

Custom pillar data

[root@server1 srv]# mkdir /srv/pillar

##edit top.sls

[root@server1 pillar]# vim top.sls

base:

'*':

- packages

##edit packages.sls

[root@server1 pillar]# vim packages.sls

{% if grains['fqdn'] == 'server3' %}

package: nginx

{% elif grains['fqdn'] == 'server2' %}

package: httpd

{% endif %}
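The master renders the Jinja `if/elif` above once per minion, using that minion's own grains. That is why each minion ends up with only its own `package` value. The Python sketch below restates the logic in plain Python to make the per-minion rendering explicit. `render_packages_pillar` and `compile_pillar` are hypothetical names; Salt itself renders the SLS with Jinja, not with code like this.

```python
def render_packages_pillar(fqdn: str) -> dict:
    """Plain-Python equivalent of the if/elif in /srv/pillar/packages.sls."""
    if fqdn == "server3":
        return {"package": "nginx"}
    elif fqdn == "server2":
        return {"package": "httpd"}
    return {}  # other minions get no package key


def compile_pillar(minion_grains: dict) -> dict:
    """Sketch: render the pillar separately for each minion from its own grains."""
    return {mid: render_packages_pillar(g["fqdn"]) for mid, g in minion_grains.items()}
```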

##refresh the pillar data:

[root@server1 pillar]# salt '*' saltutil.refresh_pillar

server2:

True

server3:

True

##query the pillar data:

[root@server1 pillar]# salt '*' pillar.item package

server2:

----------

package:

httpd

server3:

----------

package:

nginx

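Comparing pillar.items and pillar.item: pillar.items asks the master to compile fresh pillar data, so it returns the values even without a refresh: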

[root@server1 pillar]# salt '*' pillar.items package

server2:

----------

package:

httpd ##value returned

server3:

----------

package:

nginx

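Before saltutil.refresh_pillar is run, pillar.item (which reads the pillar data already loaded on the minion) returns nothing: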

[root@server1 pillar]# salt '*' pillar.item package

server2:

---------- ##no value returned

package:

server3:

----------

package:

After the refresh, pillar.item returns the value:

[root@server1 pillar]# salt server2 pillar.item package

server2:

----------

package:

httpd ##value returned

Matching on pillar data

- Command-line matching:

[root@server1 pillar]# salt -I package:nginx cmd.run hostname ##-I matches on pillar data: run hostname on the minions whose package pillar is nginx

server3:

server3

- Matching in the state system:

[root@server1 salt]# cd apache/

[root@server1 apache]# vim init.sls

web:

pkg.installed:

- pkgs:

- {{ pillar['package'] }} ##read the package name from pillar

- php

…

Push:

[root@server1 apache]# salt '*' state.highstate ##highstate succeeds

[root@server1 apache]# salt server2 state.sls apache ##targeting server2 succeeds

[root@server1 apache]# salt server3 state.sls nginx ##targeting server3 fails

Modify the nginx state:

[root@server1 salt]# cd nginx/

[root@server1 nginx]# ls

files init.sls service.sls

[root@server1 nginx]# vim service.sls

......

service.running:

- name: {{ pillar['package'] }} ##get the service name (nginx) from pillar

- enable: True

- reload: True

......

Run again:

[root@server1 nginx]# salt server3 state.sls nginx

It succeeds.

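Jinja templates

Overview

- Jinja is a Python-based template engine, and Jinja templates can be used directly in SLS files.

- Jinja templates let you define different variables for different servers.

- There are two kinds of delimiters: {% ... %} and {{ ... }}. The former executes statements such as for loops or assignments; the latter prints the result of an expression into the template.

Usage

1. Wrap conditions in control structures: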

[root@server1 salt]# pwd

/srv/salt

[root@server1 salt]# vim test.sls

/mnt/testfile:


file.append:

{% if grains['fqdn'] == 'server2'%}

- text: server2

{% elif grains['fqdn'] == 'server3' %}

- text: server3

{% endif %}

Push:

[root@server1 salt]# salt '*' state.sls test

Check:

[root@server2 salt]# cat /mnt/testfile

server2

[root@server3 ~]# cat /mnt/testfile

server3

2. Using Jinja in ordinary (managed) files:

[root@server1 salt]# cd apache/

[root@server1 apache]# ls

files init2.sls init.sls

[root@server1 apache]# vim init.sls

web:

pkg.installed:

- pkgs:

- {{ pillar['package'] }}

- php

service.running:

- name: httpd

- enable: True

- watch:

- file: /etc/httpd/conf/httpd.conf

/etc/httpd/conf/httpd.conf:

file.managed:

- source: salt://apache/files/httpd.conf

- template: jinja ##render the file with Jinja

- context: ##variables passed to the template

bind: 192.168.1.12

[root@server1 apache]# vim files/httpd.conf

Listen {{ bind }}:80 ##listen on port 80 of server2's address

[root@server1 apache]# salt server2 state.sls apache ##push

@@ -39,7 +39,7 @@

# prevent Apache from glomming onto all bound IP addresses.

#

#Listen 12.34.56.78:80

-Listen 8080

+Listen 192.168.1.12:80 ##the new Listen line

3. Reading pillar values directly

[root@server1 pillar]# pwd

/srv/pillar

[root@server1 pillar]# cat packages.sls

{% if grains['fqdn'] == 'server3' %}

package: nginx

{% elif grains['fqdn'] == 'server2' %}

package: httpd

port: 8080

{% endif %}

[root@server1 files]# pwd

/srv/salt/apache/files

[root@server1 files]# vim httpd.conf

Listen {{ bind }}:{{ pillar['port'] }} ##pillar can be referenced here only because the file is rendered with Jinja

Run:

[root@server1 apache]# salt server2 state.sls apache

...

#Listen 12.34.56.78:80 ##the port changes below

-Listen 192.168.1.12:80

+Listen 192.168.1.12:8080

...

Referencing grains values

[root@server1 files]# vim httpd.conf

Listen {{ grains['ipv4'][-1] }}:{{ pillar['port'] }}

[root@server1 files]# salt server2 state.sls apache

Referencing them in the state file:

[root@server1 files]# vim httpd.conf

Listen {{ bind }}:{{ port }}

Use plain variables in the template, and set their values in the SLS file:

[root@server1 apache]# pwd

/srv/salt/apache

[root@server1 apache]# vim init.sls

......

/etc/httpd/conf/httpd.conf:

file.managed:

- source: salt://apache/files/httpd.conf

- template: jinja

- context:

bind: {{ grains['ipv4'][-1] }} ##referenced directly here

port: {{ pillar['port'] }} ##the port from pillar

......

Importing variables from a file:

[root@server1 salt]# vim lib.sls

{%set port = 8000 %} ##the port from the variables file

[root@server1 salt]# vim apache/files/httpd.conf

{% from 'lib.sls' import port %} ##put this at the very top of the file

......

Now both pillar and the variables file specify port. Let's see which value is used:

[root@server1 salt]# cat lib.sls

{%set port = 8000 %}

[root@server1 salt]# cat ../pillar/packages.sls

{% if grains['fqdn'] == 'server3' %}

package: nginx

{% elif grains['fqdn'] == 'server2' %}

package: httpd

port: 8080

{% endif %}

Push and see which one takes effect:

[root@server1 salt]# salt server2 state.sls apache

#Listen 12.34.56.78:80

-Listen 192.168.1.12:8080

+Listen 192.168.122.1:8000

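Port 8000 takes effect. The {% from 'lib.sls' import port %} statement runs inside the template at render time, so it overrides the port value passed in through context.

In the file server2 receives, the first line is empty. Jinja consumes the {% ... %} statement during rendering, so the import never reaches the rendered httpd.conf.

[root@server2 salt]# vim /etc/httpd/conf/httpd.conf ##the import line is gone

[root@server2 salt]# vim /var/cache/salt/minion/files/base/apache/files/httpd. ##the import line is still there, because this is the unrendered copy cached from the master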

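High availability with Jinja templates (keepalived)

Make server2 the MASTER and server3 the BACKUP:

[root@server1 salt]# mkdir keepalived

[root@server1 keepalived]# mkdir files

[root@server1 files]# scp server2:/etc/keepalived/keepalived.conf . #copy the configuration file from server2 into the current directory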

[root@server1 files]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict ##commented out

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state {{ STATE }} ##changed to reference a variable

interface eth0

virtual_router_id 51

priority {{ PRI }} ##variable

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.100 ##virtual IP address on the same subnet

}

}

Set the variables in pillar:

[root@server1 files]# cat /srv/pillar/packages.sls

{% if grains['fqdn'] == 'server3' %}

package: nginx

state: BACKUP ##server3 is the backup node

pri: 50

{% elif grains['fqdn'] == 'server2' %}

package: httpd

port: 8080

state: MASTER ##server2 is the master node

pri: 100

{% endif %}
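To preview what each node receives, the sketch below renders the `vrrp_instance` block for both hosts from the pillar values above. It uses Python's `string.Template` (`$STATE`, `$PRI`) in place of Jinja's `{{ STATE }}`/`{{ PRI }}` so that only the standard library is needed, and it omits the authentication block. The template text and `render_vrrp` are illustrations, not the exact file Salt renders.

```python
from string import Template

# Stand-in for keepalived/files/keepalived.conf; $NAME replaces Jinja's {{ NAME }}
VRRP_TEMPLATE = Template("""vrrp_instance VI_1 {
    state $STATE
    interface eth0
    virtual_router_id 51
    priority $PRI
    advert_int 1
    virtual_ipaddress {
        192.168.1.100
    }
}
""")

# Pillar values from /srv/pillar/packages.sls above, keyed by fqdn
PILLAR = {
    "server2": {"state": "MASTER", "pri": 100},
    "server3": {"state": "BACKUP", "pri": 50},
}


def render_vrrp(fqdn: str) -> str:
    """Fill the template the way `context: STATE/PRI` does in init.sls (sketch)."""
    values = PILLAR[fqdn]
    return VRRP_TEMPLATE.substitute(STATE=values["state"], PRI=values["pri"])
```

In VRRP the node with the higher priority holds the virtual IP. With these values, server2 (100) is the MASTER, and server3 (50) takes over only if server2 stops advertising.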

Write the entry file:

[root@server1 keepalived]# cat init.sls

kp-install: ##install the keepalived package

pkg.installed:

- pkgs:

- keepalived

file.managed: ##manage the configuration file

- name: /etc/keepalived/keepalived.conf

- source: salt://keepalived/files/keepalived.conf

- template: jinja

- context:

STATE: {{ pillar['state'] }} ##read the values from pillar

PRI: {{ pillar['pri'] }}

service.running: ##start the service

- name: keepalived

- enable: True

- reload: True

- watch:

- file: kp-install

Push:

[root@server1 keepalived]# salt '*' state.sls keepalived

server2:

[root@server2 salt]# cat /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER ##master node

interface eth0

virtual_router_id 51

priority 100 ##priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

server3:

[root@server3 ~]# cat /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP ##BACKUP node

interface eth0

virtual_router_id 51

priority 50 ##priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.100

}

}

Using a top file for a highstate push (server2 as MASTER, server3 as BACKUP):

[root@server1 salt]# mkdir keepalived

[root@server1 keepalived]# mkdir files

Copy the keepalived configuration file into the current directory:

[root@server1 files]# scp server2:/etc/keepalived/keepalived.conf .

[root@server1 files]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

<recipient addresses, obfuscated in the source>

}

notification_email_from <sender address, obfuscated in the source>

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict ##commented out, not used

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state {{ STATE }} ##changed to reference a variable

interface eth0

virtual_router_id 51

priority {{ PRI }} ##same as above

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.25.9.100 ##VIP, an address in the same subnet

}

}

Edit the pillar file to set the variable values:

[root@server1 pillar]# pwd

/srv/pillar

[root@server1 pillar]# vim packages.sls

{% if grains['fqdn'] == 'server3' %}

package: nginx

state: BACKUP

pri: 50

{% elif grains['fqdn'] == 'server2' %}

package: httpd

port: 80

state: MASTER

pri: 100

{% endif %}

Write the keepalived entry file (init.sls):

[root@server1 keepalived]# vim init.sls

kp-install:                     ##install keepalived
  pkg.installed:
    - pkgs:
      - keepalived
  file.managed:
    - name: /etc/keepalived/keepalived.conf    ##manage the configuration file
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}    ##use the variables
        PRI: {{ pillar['pri'] }}
  service.running:              ##start the service
    - name: keepalived
    - enable: True
    - reload: True
    - watch:
      - file: kp-install

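Push and test:

[root@server1 keepalived]# salt '*' state.sls keepalived

Success! The keepalived configuration files on server2 and server3 are both in place, and both use the virtual IP 172.25.9.100.

Highstate push with the top file: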

[root@server1 salt]# vim top.sls

base:
  'server2':                    ##run apache and keepalived (HA) on server2
    - apache
    - keepalived
  'server3':                    ##run nginx and keepalived (HA) on server3
    - nginx
    - keepalived
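A top file is essentially a map from target patterns to state lists. The sketch below assumes plain glob targets in `base` only (Salt also supports grain, pillar, list and other matchers, which are not modelled) and shows which states each minion would get; `states_for` is a hypothetical helper.

```python
# Sketch: how a glob-based top file maps minion IDs to state lists.
# Simplification: only glob targets of the "base" environment are handled.
from fnmatch import fnmatch
from typing import Dict, List

TOP: Dict[str, List[str]] = {
    "server2": ["apache", "keepalived"],
    "server3": ["nginx", "keepalived"],
}


def states_for(minion_id: str, top: Dict[str, List[str]]) -> List[str]:
    """Collect states from every matching target, keeping first-seen order."""
    result: List[str] = []
    for target, states in top.items():
        if fnmatch(minion_id, target):
            for state in states:
                if state not in result:  # a state is applied once per run
                    result.append(state)
    return result


if __name__ == "__main__":
    for minion in ("server2", "server3"):
        print(minion, states_for(minion, TOP))
```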

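For high availability, Apache must not be bound to a specific IP address: remove the address from its Listen directive so it also answers on the VIP.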

[root@server1 apache]# vim init.sls

......

/etc/httpd/conf/httpd.conf:
  file.managed:
    - source: salt://apache/files/httpd.conf
    - template: jinja
    - context:
        port: {{ pillar['port'] }}

......

[root@server1 files]# vim httpd.conf

Listen {{ port }}

Run the highstate:

[root@server1 apache]# salt '*' state.highstate

[root@server1 files]# curl 172.25.9.100

<!DOCTYPE html>

<html>

<head>

<title>Welcome to server2!</title>

Stop keepalived on server2:

[root@server2 html]# systemctl stop keepalived.service

[root@server1 files]# curl 172.25.9.100

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title> ##the VIP has failed over to nginx on server3

Start keepalived on server2 again, then request the VIP:

[root@server1 files]# curl 172.25.9.100

<!DOCTYPE html>

<html>

<head>

<title>Welcome to server2!</title>

The VIP has switched back to server2!
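The failover above follows from the two priorities. Here is a toy model, assuming VRRP is reduced to "the highest-priority node whose keepalived is running holds the VIP"; real VRRP also involves advertisement timers and preemption settings, and `vip_owner` is a hypothetical helper.

```python
# Toy model of the VRRP election used above (not keepalived's implementation).
from typing import Dict, Optional, Set

# Priorities from the pillar data: server2 MASTER (100), server3 BACKUP (50)
PRIORITIES: Dict[str, int] = {"server2": 100, "server3": 50}


def vip_owner(priorities: Dict[str, int], running: Set[str]) -> Optional[str]:
    """Highest-priority node with keepalived running, or None if none is up."""
    alive = [node for node in priorities if node in running]
    if not alive:
        return None
    return max(alive, key=lambda node: priorities[node])


if __name__ == "__main__":
    print(vip_owner(PRIORITIES, {"server2", "server3"}))  # both up
    print(vip_owner(PRIORITIES, {"server3"}))             # server2 stopped
    print(vip_owner(PRIORITIES, {"server2", "server3"}))  # server2 back
```

The move back to server2 relies on keepalived preempting by default; with `nopreempt` configured, the VIP would stay on server3 after server2 returns.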

Job management

Overview

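- When the master publishes a command, it attaches the generated jid (job ID).

- When a minion starts executing the command, it creates a file named after the jid in its local /var/cache/salt/minion/proc directory, so the master can check the job's status while it runs.

- Once the command has finished and the result has been sent to the master, the minion deletes this temporary file.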

By default the job cache is kept for 24 hours (keep_jobs in /etc/salt/master):

# Set the number of hours to keep old job information in the job cache:

#keep_jobs: 24
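Salt jids are timestamps (`YYYYMMDDhhmmss` followed by microseconds), which the StartTime values in the `jobs.list_jobs` output confirm. The sketch parses a jid and checks it against a `keep_jobs`-style retention window. It is a simplification: when the master actually purges cached jobs is decided by its own cleanup routine, not by this function; `jid_to_datetime`/`is_expired` are hypothetical helpers.

```python
# Sketch: read the start time out of a jid and compare it with keep_jobs.
from datetime import datetime, timedelta


def jid_to_datetime(jid: str) -> datetime:
    """Parse a 20-digit jid such as 20200617210638243013."""
    if len(jid) != 20 or not jid.isdigit():
        raise ValueError(f"unexpected jid format: {jid!r}")
    return datetime.strptime(jid, "%Y%m%d%H%M%S%f")


def is_expired(jid: str, now: datetime, keep_jobs_hours: int = 24) -> bool:
    """True if the job started more than keep_jobs_hours before `now`."""
    return now - jid_to_datetime(jid) > timedelta(hours=keep_jobs_hours)


if __name__ == "__main__":
    jid = "20200617210638243013"                     # the cmd.run 'uname -a' job
    print(jid_to_datetime(jid))                      # 2020-06-17 21:06:38.243013
    print(is_expired(jid, datetime(2020, 6, 18, 22, 0)))  # True: more than 24 h later
```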

Job cache directory on the master:

[root@server1 files]# cd /var/cache/salt/master/jobs

[root@server1 jobs]# ls

04 12 1e 32 3e 4e 5e 6c 73 81 8d 97 a6 af bc c8 d3 de f1

05 15 25 33 3f 56 61 6d 78 82 90 99 a9 b0 bf c9 d4 e1 f2

07 19 26 34 40 5b 63 6f 7c 88 94 9d ab b1 c3 cb d9 e6 f4

0c 1c 2a 39 4a 5c 64 70 7d 8a 95 a0 ad b5 c5 cc da e8 fa

11 1d 2c 3d 4d 5d 66 71 7f 8c 96 a5 ae bb c6 cd dc ed fb

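Managing job results

Method 1:

The minion sends one copy of each result to the master and another directly to the database.

Install the database server: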

[root@server1 ~]# yum install -y mariadb-server

[root@server1 ~]# systemctl start mariadb

Install the Python MySQL module (MySQL-python) on the minion so that it can write its results to the MySQL database:

[root@server2 ~]# yum install MySQL-python.x86_64 -y

Edit the minion configuration file:

[root@server2 ~]# vim /etc/salt/minion

###### Returner settings ######

############################################

# Default Minion returners. Can be a comma delimited string or a list:

#

return: mysql ##send returns to MySQL

mysql.host: '192.168.1.11' ##IP of the database host

mysql.user: 'salt' ##MySQL user

mysql.pass: 'salt' ##password

mysql.db: 'salt'

mysql.port: 3306

Restart the minion service:

[root@server2 ~]# systemctl restart salt-minion

Create the salt database and its tables in MySQL:

[root@server1 ~]# vim hello.sql

CREATE DATABASE `salt`

DEFAULT CHARACTER SET utf8

DEFAULT COLLATE utf8_general_ci;

USE `salt`;

--

-- Table structure for table `jids`

--

DROP TABLE IF EXISTS `jids`;

CREATE TABLE `jids` (

`jid` varchar(255) NOT NULL,

`load` mediumtext NOT NULL,

UNIQUE KEY `jid` (`jid`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- CREATE INDEX jid ON jids(jid) USING BTREE;

--

-- Table structure for table `salt_returns`

--

DROP TABLE IF EXISTS `salt_returns`;

CREATE TABLE `salt_returns` (

`fun` varchar(50) NOT NULL,

`jid` varchar(255) NOT NULL,

`return` mediumtext NOT NULL,

`id` varchar(255) NOT NULL,

`success` varchar(10) NOT NULL,

`full_ret` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

KEY `id` (`id`),

KEY `jid` (`jid`),

KEY `fun` (`fun`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

--

-- Table structure for table `salt_events`

--

DROP TABLE IF EXISTS `salt_events`;

CREATE TABLE `salt_events` (

`id` BIGINT NOT NULL AUTO_INCREMENT,

`tag` varchar(255) NOT NULL,

`data` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

`master_id` varchar(255) NOT NULL,

PRIMARY KEY (`id`),

KEY `tag` (`tag`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;
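To experiment with the `salt_returns` layout without a MySQL server, the sketch below recreates that table in SQLite (Python's built-in `sqlite3`) with the MySQL-specific clauses dropped. `store_return` and `returns_for_jid` are hypothetical helpers that only loosely mirror what the mysql returner writes.

```python
# SQLite stand-in for the MySQL salt_returns table (ENGINE/CHARSET removed).
import json
import sqlite3
from typing import Any, Dict

SCHEMA = """
CREATE TABLE salt_returns (
    fun        VARCHAR(50)  NOT NULL,
    jid        VARCHAR(255) NOT NULL,
    "return"   TEXT         NOT NULL,
    id         VARCHAR(255) NOT NULL,
    success    VARCHAR(10)  NOT NULL,
    full_ret   TEXT         NOT NULL,
    alter_time TIMESTAMP    DEFAULT CURRENT_TIMESTAMP
);
CREATE INDEX salt_returns_jid ON salt_returns (jid);
"""


def store_return(conn: sqlite3.Connection, ret: Dict[str, Any]) -> None:
    """Insert one minion return; return/full_ret are stored as JSON text."""
    conn.execute(
        'INSERT INTO salt_returns (fun, jid, "return", id, success, full_ret) '
        "VALUES (?, ?, ?, ?, ?, ?)",
        (ret["fun"], ret["jid"], json.dumps(ret["return"]), ret["id"],
         str(int(ret["success"])), json.dumps(ret)),
    )


def returns_for_jid(conn: sqlite3.Connection, jid: str) -> Dict[str, Any]:
    """Map minion id -> decoded return value for one job."""
    rows = conn.execute(
        'SELECT id, "return" FROM salt_returns WHERE jid = ? ORDER BY id', (jid,))
    return {minion: json.loads(value) for minion, value in rows}


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.executescript(SCHEMA)
    store_return(db, {"fun": "test.ping", "jid": "20200617212856955861",
                      "return": True, "id": "server2", "success": True})
    print(returns_for_jid(db, "20200617212856955861"))
```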

Import it:

[root@server1 Downloads]# mysql < hello.sql

[root@server1 Downloads]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 12

Server version: 5.5.60-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| salt |

| test |

+--------------------+

5 rows in set (0.00 sec)

Create the salt user:

MariaDB [(none)]> grant all on salt.* to salt@localhost identified by 'salt';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> grant all on salt.* to salt@'%' identified by 'salt'; ##grant access from any host

Run a command:

[root@server1 ~]# salt server2 cmd.run 'uname -a'

server2:

Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

Log in to the database as the salt user and check:

[root@server1 ~]# mysql -usalt -psalt

MariaDB [(none)]> use salt;

MariaDB [salt]> select * from salt_returns;

+---------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| fun | jid | return | id | success | full_ret | alter_time |

+---------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| cmd.run | 20200617210638243013 | "Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux" | server2 | 1 | {"fun_args": ["uname -a"], "jid": "20200617210638243013", "return": "Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux", "retcode": 0, "success": true, "fun": "cmd.run", "id": "server2"} | 2020-06-18 05:06:38 |

+---------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

2 rows in set (0.00 sec)

The data has arrived.
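The `full_ret` column holds the complete return as JSON, so it can be inspected programmatically. A minimal sketch using the row shown above; `summarize` is a hypothetical helper.

```python
# Sketch: decode a full_ret value from salt_returns and summarize it.
import json

# full_ret value copied from the salt_returns row above
FULL_RET = (
    '{"fun_args": ["uname -a"], "jid": "20200617210638243013", '
    '"return": "Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 '
    'UTC 2018 x86_64 x86_64 x86_64 GNU/Linux", "retcode": 0, "success": true, '
    '"fun": "cmd.run", "id": "server2"}'
)


def summarize(full_ret: str) -> str:
    """One-line summary: minion, function, arguments and ok/failed."""
    ret = json.loads(full_ret)
    ok = bool(ret.get("success")) and ret.get("retcode", 0) == 0
    args = " ".join(str(a) for a in ret.get("fun_args", []))
    return f"{ret['id']} {ret['fun']} {args}: {'ok' if ok else 'failed'}"


if __name__ == "__main__":
    print(summarize(FULL_RET))  # server2 cmd.run uname -a: ok
```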

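Method 2:

The minion returns results to the master, and the master writes them to the database.

Comment out the returner parameters in /etc/salt/minion on the minion, and configure the master instead: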


[root@server1 ~]# yum install MySQL-python.x86_64 -y

[root@server1 ~]# vim /etc/salt/master

master_job_cache: mysql

mysql.host: 'localhost'

mysql.user: 'salt'

mysql.pass: 'salt'

mysql.db: 'salt'

mysql.port: 3306

[root@server1 ~]# systemctl restart salt-master.service

[root@server1 ~]# salt '*' test.ping

Check the database:

MariaDB [salt]> select * from salt_returns;

+-----------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| fun | jid | return | id | success | full_ret | alter_time |

+-----------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| cmd.run | 20200617204703728990 | "server2" | server2 | 1 | {"fun_args": ["hostname"], "jid": "20200617204703728990", "return": "server2", "retcode": 0, "success": true, "fun": "cmd.run", "id": "server2"} | 2020-06-18 04:47:03 |

| cmd.run | 20200617210638243013 | "Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux" | server2 | 1 | {"fun_args": ["uname -a"], "jid": "20200617210638243013", "return": "Linux server2 3.10.0-957.el7.x86_64 #1 SMP Thu Oct 4 20:48:51 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux", "retcode": 0, "success": true, "fun": "cmd.run", "id": "server2"} | 2020-06-18 05:06:38 |

| test.ping | 20200617212856955861 | true | server2 | 1 | {"fun_args": [], "jid": "20200617212856955861", "return": true, "retcode": 0, "success": true, "fun": "test.ping", "id": "server2"} | 2020-06-18 05:28:57 |

+-----------+----------------------+---------------------------------------------------------------------------------------------------------+---------+---------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+---------------------+

3 rows in set (0.00 sec)

List all jobs:

[root@server1 ~]# salt-run jobs.list_jobs

20200616214901854370:

----------

Arguments:

Function:

state.highstate

StartTime:

2020, Jun 16 21:49:01.854370

Target:

*

Target-type:

glob

User:

root

20200616214906895503:

----------

Arguments:

- 20200616214901854370

Function:

saltutil.find_job

StartTime:

2020, Jun 16 21:49:06.895503

Target:

- server2

Target-type:

list

User:

root

......
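In this listing the `saltutil.find_job` entry was not typed by hand: its argument is the jid of the `state.highstate` job, because the `salt` command polls minions with `saltutil.find_job` while it waits for results. The sketch below separates such polling entries from real jobs in data shaped like the listing; `user_jobs`, `polled_jobs` and the dict layout are hypothetical.

```python
# Sketch: filter status-polling entries out of jobs.list_jobs-shaped data.
from typing import Dict, List

# Two entries copied from the `salt-run jobs.list_jobs` output above
JOBS: Dict[str, Dict[str, object]] = {
    "20200616214901854370": {
        "Function": "state.highstate", "Arguments": [],
        "Target": "*", "Target-type": "glob", "User": "root",
    },
    "20200616214906895503": {
        "Function": "saltutil.find_job", "Arguments": ["20200616214901854370"],
        "Target": ["server2"], "Target-type": "list", "User": "root",
    },
}

POLLING_FUNCTIONS = {"saltutil.find_job"}


def user_jobs(jobs: Dict[str, Dict[str, object]]) -> List[str]:
    """Sorted jids, excluding status-polling jobs."""
    return sorted(jid for jid, info in jobs.items()
                  if info["Function"] not in POLLING_FUNCTIONS)


def polled_jobs(jobs: Dict[str, Dict[str, object]]) -> Dict[str, str]:
    """Map each polling job's jid to the jid it was checking on."""
    return {jid: info["Arguments"][0] for jid, info in jobs.items()
            if info["Function"] in POLLING_FUNCTIONS and info["Arguments"]}


if __name__ == "__main__":
    print(user_jobs(JOBS))
    print(polled_jobs(JOBS))
```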

[root@server1 ~]# salt-run jobs.active ##show jobs currently running on all minions

[root@server1 ~]# salt-run jobs.lookup_jid 20200617212856955861 ##the jid of the test.ping command above

server2:

True

server3:

True

salt-ssh and salt-syndic

salt-ssh

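- salt-ssh can run on its own and does not need a minion on the target.

- salt-ssh uses sshpass to handle password prompts.

- It is slower than the minion-based (ZeroMQ) transport, since every call goes over an SSH connection.

- salt-ssh is useful on hosts where installing a minion is not allowed.

Installation: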

[root@server1 ~]# yum install -y salt-ssh

[root@server1 ~]# cd /etc/salt

[root@server1 salt]# ls

cloud cloud.deploy.d cloud.profiles.d master minion pki proxy.d

cloud.conf.d cloud.maps.d cloud.providers.d master.d minion.d proxy roster

[root@server1 salt]# vim roster

server3:
  host: 192.168.1.13
  user: root
  passwd: redhat
server2:
  host: 192.168.1.12
  user: root
  passwd: redhat

Stop the minions on server2 and server3:

[root@server2 ~]# systemctl stop salt-minion

[root@server3 ~]# systemctl stop salt-minion

Test:

[root@server1 salt]# salt-ssh '*' test.ping -i

server2:

True

server3:

True

[root@server1 salt]# salt-ssh server2 cmd.run hostname

server2:

server2

salt-syndic

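- If you know zabbix proxy, syndic is easy to understand: it is essentially a proxy that separates the master from the minions.

- Syndic must run on a master, which in turn connects to a higher-level topmaster.

- States issued by the topmaster are passed by the syndic to the lower-level master, and data that minions return to the master is passed back to the topmaster through the syndic.

- The topmaster does not know how many minions there are.

- The file_roots and pillar_roots directories of the syndic and the topmaster must be kept consistent.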

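As shown in the figure, as the number of servers grows, the number of masters inevitably grows too, and the masters become hard to manage; this is what the topmaster is for. We install syndic on each master, and the central topmaster manages the masters through it. The central node does not store minion information; it only keeps information about the masters beneath it.

Install salt and salt-master on server4, then configure it as follows: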

[root@server4 ~]# vim /etc/salt/master

# masters' syndic interfaces.

order_masters: True

On server1, install syndic:

[root@server1 ~]# yum install salt-syndic -y

Configure it:

[root@server1 ~]# vim /etc/salt/master

# this master where to receive commands from.

syndic_master: 172.25.9.4

Start the services:

[root@server1 ~]# systemctl restart salt-master

[root@server1 ~]# systemctl start salt-syndic

Check on server4:

[root@server4 ~]# salt-key -L

Accepted Keys:

Denied Keys:

Unaccepted Keys:

server1

Rejected Keys:

[root@server4 ~]# salt-key -A

The following keys are going to be accepted:

Unaccepted Keys:

server1

Test:

[root@server4 ~]# salt '*' test.ping

server3:

True

server2:

True

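Data flow:

The central node (topmaster) passes the command to the syndic node, the syndic passes it to its master, and the master sends it to the minions; the results travel back along the same path.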
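The hierarchy can be pictured with a toy model, assuming one syndic (server1) in front of server2 and server3 and a minion that only implements `test.ping`. The class and function names are hypothetical, and the model ignores keys, transport and timeouts; it only shows who knows whom and how results flow back.

```python
# Toy model of topmaster -> syndic/master -> minions (not Salt's implementation).
from dataclasses import dataclass, field
from typing import Dict, List


def run_on_minion(minion_id: str, fun: str) -> object:
    """Hypothetical minion that only implements test.ping."""
    if fun == "test.ping":
        return True
    raise NotImplementedError(fun)


@dataclass
class SyndicMaster:
    """A lower-level master running salt-syndic; it holds the minion keys."""
    name: str
    minions: List[str] = field(default_factory=list)

    def publish(self, fun: str) -> Dict[str, object]:
        return {m: run_on_minion(m, fun) for m in self.minions}


@dataclass
class TopMaster:
    """Knows only its syndics (their keys), never the minions behind them."""
    syndics: List[SyndicMaster] = field(default_factory=list)

    def accepted_keys(self) -> List[str]:
        return [s.name for s in self.syndics]

    def publish(self, fun: str) -> Dict[str, object]:
        results: Dict[str, object] = {}
        for syndic in self.syndics:              # down: topmaster -> syndic -> minions
            results.update(syndic.publish(fun))  # up: returns come back the same way
        return results


if __name__ == "__main__":
    top = TopMaster([SyndicMaster("server1", ["server2", "server3"])])
    print(top.accepted_keys())        # like `salt-key -L` on server4
    print(top.publish("test.ping"))   # like `salt '*' test.ping` on server4
```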

salt-api overview

SaltStack officially provides salt-api, a REST API that makes integrating Salt with third-party systems particularly easy.

- Three API modules are officially provided:
  - rest_cherrypy
  - rest_tornado
  - rest_wsgi

- Official documentation: https://docs.saltstack.com/en/latest/ref/netapi/all/index.html#all-netapi-modules

Configuration

Install:

[root@server1 ~]# yum install salt-api.noarch -y

Generate a certificate:

[root@server1 ~]# cd /etc/pki/tls/private

[root@server1 private]# openssl genrsa 1024 > localhost.key

[root@server1 certs]# pwd

/etc/pki/tls/certs

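[root@server1 certs]# make testcert ##uses the Makefile in this directory to create localhost.crt from ../private/localhost.key

Edit the configuration file: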

[root@server1 certs]# cd /etc/salt/master.d

[root@server1 master.d]# vim auth.conf

external_auth:
  pam:
    saltdev:          ##the user being granted access
      - .*            ##allow all functions
      - '@wheel'
      - '@runner'
      - '@jobs'
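To reason about what an entry list like the one for `saltdev` permits, here is a deliberately simplified model. It assumes that a plain entry is a regular expression matched against the whole function name for the `local` client, and that an `@name` entry grants everything for the client or group called `name`. Salt's real eauth matching supports more forms (per-minion targets, argument matching); `is_allowed` is a hypothetical helper.

```python
# Simplified model of an external_auth permission list (not Salt's full rules).
import re
from typing import Iterable

# Entries as listed for saltdev in auth.conf above
SALTDEV_PERMS = [".*", "@wheel", "@runner", "@jobs"]


def is_allowed(perms: Iterable[str], fun: str, client: str = "local") -> bool:
    """Check whether `fun`, called through `client`, is covered by `perms`."""
    for perm in perms:
        if perm.startswith("@"):
            # '@runner' etc.: everything for that client/group (assumption)
            if perm[1:] == client:
                return True
        elif client == "local" and re.fullmatch(perm, fun):
            # plain entry: regex over the execution-module function name
            return True
    return False


if __name__ == "__main__":
    print(is_allowed(SALTDEV_PERMS, "test.ping"))
    print(is_allowed(SALTDEV_PERMS, "manage.status", client="runner"))
    print(is_allowed(["test\\..*"], "cmd.run"))
```

A narrower list such as `['test\\..*']` instead of `.*` would restrict the API user to the test module, which is worth considering once the API is reachable from other hosts.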

Add the user:

[root@server1 master.d]# useradd saltdev

[root@server1 master.d]# passwd saltdev

Enable the rest_cherrypy module:

[root@server1 master.d]# vim cert.conf

rest_cherrypy:
  host: 172.25.9.1
  port: 8000
  ssl_crt: /etc/pki/tls/certs/localhost.crt
  ssl_key: /etc/pki/tls/private/localhost.key

Restart the services:

[root@server1 master.d]# systemctl restart salt-master

[root@server1 master.d]# systemctl restart salt-api

[root@server1 master.d]# netstat -antlp|grep :8000

tcp 0 0 172.25.9.1:8000 0.0.0.0:* LISTEN 18681/salt-api

Usage

Obtain an authentication token:

[root@server1 master.d]# curl -sSk https://172.25.9.1:8000/login -H 'Accept: application/x-yaml' -d username=saltdev -d password=redhat -d eauth=pam

return:
- eauth: pam
  expire: 1592493252.951613
  perms:
  - .*
  - '@wheel'
  - '@runner'
  - '@jobs'
  start: 1592450052.951612
  token: d83811a387b63eaf155c012de28db1adce35a01b
  user: saltdev

Run a command through the API:

[root@server1 master.d]# curl -sSk https://172.25.9.1:8000 -H 'Accept: application/x-yaml' -H 'X-Auth-Token: d83811a387b63eaf155c012de28db1adce35a01b' -d client=local -d tgt='*' -d fun=test.ping

return:
- server2: true
  server3: true
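The two curl calls can also be made from a program. A minimal sketch with Python's standard `urllib`, assuming the host/port from cert.conf above and JSON output (`Accept: application/json`) instead of YAML; `login_request`, `run_request` and `send` are hypothetical helpers, and building the requests is kept separate from sending them so the form fields can be checked offline.

```python
# Sketch of the /login and / calls from the curl examples, using urllib.
import json
import ssl
from urllib.parse import urlencode
from urllib.request import Request, urlopen

BASE_URL = "https://172.25.9.1:8000"  # host/port from cert.conf above


def login_request(base_url: str, username: str, password: str,
                  eauth: str = "pam") -> Request:
    """POST /login with form fields, like `curl -d username=... -d eauth=pam`."""
    body = urlencode({"username": username, "password": password,
                      "eauth": eauth}).encode()
    return Request(f"{base_url}/login", data=body,
                   headers={"Accept": "application/json"}, method="POST")


def run_request(base_url: str, token: str, tgt: str, fun: str,
                client: str = "local") -> Request:
    """POST / with the X-Auth-Token header, like the client/tgt/fun curl call."""
    body = urlencode({"client": client, "tgt": tgt, "fun": fun}).encode()
    return Request(base_url, data=body,
                   headers={"Accept": "application/json", "X-Auth-Token": token},
                   method="POST")


def send(req: Request) -> dict:
    """Send a request, skipping certificate checks like `curl -k` (test cert only)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urlopen(req, context=ctx) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Against a running salt-api, the token would be read with `send(login_request(BASE_URL, "saltdev", "redhat"))["return"][0]["token"]` (the same structure as the YAML login output above) and then passed to `send(run_request(BASE_URL, token, "*", "test.ping"))`. Disabling certificate verification is only reasonable for this self-signed test certificate.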

Success!