Script features: one-click installation of MySQL + passwordless SSH + Hadoop + Hive + ZooKeeper + HBase + Sqoop

Preparation:

- A machine running a CentOS 7 image that has internet access and can be reached from Xshell

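- Run mkdir /opt/download and upload the following packages (these exact versions) into the download directory:

  - apache-flume-1.8.0-bin.tar.gz
  - jdk-8u111-linux-x64.tar.gz
  - hadoop-2.6.0-cdh5.14.2.tar.gz
  - kafka_2.11-0.11.0.2.gz
  - hadoop-native-64-2.6.0.tar
  - mysql-connector-java-5.1.32.jar
  - hbase-1.2.0-cdh5.14.2.tar.gz
  - sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz
  - hive-1.1.0-cdh5.14.2.tar.gz
  - zookeeper-3.4.5-cdh5.14.2.tar.gz

- Create a new .sh script and give it execute permission (chmod +x). In the code below, change every IP address and the hostname mapping (single11) to match your machine, then run it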

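- The first time HDFS is started, "yes" has to be answered three times unless the prompts are automated. start-dfs.sh connects over SSH to three addresses: the NameNode address, localhost, and 0.0.0.0 (for the SecondaryNameNode). Each connection asks you to confirm the host key. These prompts come from start-dfs.sh, not from formatting the NameNode.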

#!/bin/bash

mkdir /opt/software

#mysql

RST=`rpm -qa | grep mariadb`

if [ -n "$RST" ]; then

yum -y remove $RST

fi

yum -y install wget

wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

rpm -ivh mysql-community-release-el7-5.noarch.rpm

yum -y install mysql-server

cat >/etc/my.cnf<<EOF

[client]

default-character-set=utf8

[mysqld]

default-storage-engine=INNODB

character-set-server=utf8

collation-server=utf8_general_ci

[mysql]

default-character-set=utf8

EOF

systemctl restart mysql

systemctl status mysql

#skip MySQL's privilege check via the config file; this setting must be removed afterwards

sed -i '/^\[mysqld\]/a skip-grant-tables' /etc/my.cnf

systemctl restart mysql

#modify password

mysql -uroot -e "use mysql;update user set password=password('root') where user='root';flush privileges;grant all on *.* to root@'%' identified by 'root';"

sed -i '/skip-grant-tables/d' /etc/my.cnf

#restart again so the server enforces the privilege check

systemctl restart mysql
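mysqld only honours skip-grant-tables when the option sits in a section it reads, such as [mysqld]. The my.cnf generated above has no other line (for example sql_mode=) that an insert could anchor on. Below is an optional, standalone sketch (not part of the script) of the add and remove steps as two small functions. The function names are made up for illustration, and GNU sed (the CentOS 7 default) is assumed.

```bash
#!/usr/bin/env bash
# Sketch with hypothetical helper names: toggle skip-grant-tables in a my.cnf.
# Assumes GNU sed, as shipped with CentOS 7.

# Insert "skip-grant-tables" right after the [mysqld] header, only once.
enable_skip_grant() {
  local cnf=$1
  grep -qx 'skip-grant-tables' "$cnf" && return 0   # already present
  sed -i '/^\[mysqld\]/a skip-grant-tables' "$cnf"
}

# Remove the option again.
disable_skip_grant() {
  sed -i '/^skip-grant-tables$/d' "$1"
}

# Usage:
#   enable_skip_grant /etc/my.cnf && systemctl restart mysql
#   ... change the root password ...
#   disable_skip_grant /etc/my.cnf && systemctl restart mysql
```

Each toggle only takes effect after MySQL is restarted.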

#jdk

cd /opt/software

tar -zxf /opt/download/jdk-8u111-linux-x64.tar.gz

mv jdk1.8.0_111 jdk180

echo '#java'>>/etc/profile.d/my.sh

echo "export JAVA_HOME=/opt/software/jdk180">>/etc/profile.d/my.sh

echo 'export PATH=$JAVA_HOME/bin:$PATH'>>/etc/profile.d/my.sh

echo 'export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar'>>/etc/profile.d/my.sh

source /etc/profile

echo $JAVA_HOME

java -version
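Every section appends to /etc/profile.d/my.sh with >>, so running the script a second time duplicates the exports. Below is an optional, standalone sketch of an append-only-if-missing helper. The name append_once is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): append a line to a profile script only if
# that exact line is not already there, so re-running the installer does
# not pile up duplicate exports.
append_once() {
  local line=$1 file=$2
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), --: end of options
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (single quotes keep $JAVA_HOME and $PATH literal, as in the script):
#   append_once 'export JAVA_HOME=/opt/software/jdk180' /etc/profile.d/my.sh
#   append_once 'export PATH=$JAVA_HOME/bin:$PATH' /etc/profile.d/my.sh
```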

#passwordless SSH

echo "192.168.221.205 single11">>/etc/hosts

yum -y install expect

expect << EOF

spawn ssh-keygen -t rsa

expect {

"save the key" {send "\r";exp_continue}

"Overwrite" {send "y\r";exp_continue}

"Enter passphrase" {send "\r";exp_continue}

"same passphrase" {send "\r"}

}

expect eof

EOF

cd /root/.ssh

cat id_rsa.pub >> authorized_keys

#hadoop

#configure time synchronization

yum -y install ntp

echo '*/1 * * * * /usr/sbin/ntpdate -u pool.ntp.org;clock -w'>/var/spool/cron/root

systemctl stop ntpd

systemctl start ntpd

systemctl status ntpd

systemctl enable ntpd.service

#install hadoop

cd /opt/software

tar -zxf /opt/download/hadoop-2.6.0-cdh5.14.2.tar.gz

mv hadoop-2.6.0-cdh5.14.2 hadoop260

cd /opt/software/hadoop260/lib/native/

tar -xf /opt/download/hadoop-native-64-2.6.0.tar

cd /opt/software

chown -R root:root hadoop260

#configure environment variables

echo '#hadoop'>>/etc/profile.d/my.sh

echo "export HADOOP_HOME=/opt/software/hadoop260">>/etc/profile.d/my.sh

echo 'export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib:$PATH'>>/etc/profile.d/my.sh

source /etc/profile

echo $HADOOP_HOME

#configure hadoop-env.sh

cd /opt/software/hadoop260/etc/hadoop

sed -i '/export JAVA_HOME=${JAVA_HOME}/ s/${JAVA_HOME}/\/opt\/software\/jdk180/' /opt/software/hadoop260/etc/hadoop/hadoop-env.sh

#configure core-site.xml

coresitetxt="

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/software/hadoop260</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.221.205:9000</value>

</property>

<property>

<!--the proxy user root may impersonate users of any group-->

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<!--the proxy user root may connect from any host to access HDFS-->

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

"

for i in $coresitetxt

do

sed -i "/<\/configuration>/i $i" core-site.xml

done
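The loop above splits the XML text on whitespace and inserts each fragment as its own line before </configuration>. This works, but it also subjects every fragment to filename globbing: a bare * word would be expanded to file names. Below is an optional, standalone sketch of an alternative that inserts one complete property per call. add_property is a hypothetical helper name, GNU sed is assumed, and names and values must not contain backslashes or newlines.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): insert one <property> element before the
# closing </configuration> tag of a Hadoop-style XML file.
# Assumes GNU sed; name/value must not contain backslashes or newlines.
add_property() {
  local file=$1 name=$2 value=$3
  local xml="<property><name>${name}</name><value>${value}</value></property>"
  # GNU sed one-line "i text" form; "/" in the text needs no escaping.
  sed -i "/<\/configuration>/i ${xml}" "$file"
}

# Usage, mirroring the core-site.xml settings above:
#   cd /opt/software/hadoop260/etc/hadoop
#   add_property core-site.xml fs.defaultFS hdfs://192.168.221.205:9000
#   add_property core-site.xml hadoop.proxyuser.root.hosts '*'
```

Because each call inserts just before </configuration>, properties end up in the order the calls are made.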

#configure hdfs-site.xml

hdfssitetxt="

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/software/hadoop260/tmp/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/software/hadoop260/tmp/data</value>

</property>

"

for i in $hdfssitetxt

do

sed -i "/<\/configuration>/i $i" hdfs-site.xml

done
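The configuration is assembled fragment by fragment, so a missing closing tag may only surface when Hadoop fails to parse the file. Below is an optional, standalone sketch of a quick line-based sanity check. It is a rough check, not an XML parser, and check_properties is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): check that every <property> has a closing
# </property> and exactly one <name>. Line/tag counting only, not XML parsing.
check_properties() {
  local file=$1 open close names
  open=$(grep -o '<property>' "$file" | wc -l)
  close=$(grep -o '</property>' "$file" | wc -l)
  names=$(grep -o '<name>[^<]*</name>' "$file" | wc -l)
  if [ "$open" -ne "$close" ] || [ "$open" -ne "$names" ]; then
    echo "$file: $open <property>, $close </property>, $names <name>" >&2
    return 1
  fi
  echo "$file: $open properties"
}

# Usage:
#   check_properties /opt/software/hadoop260/etc/hadoop/hdfs-site.xml
```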

#configure mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

mapredsitetxt="

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

"

for i in $mapredsitetxt

do

sed -i "/<\/configuration>/i $i" mapred-site.xml

done

#configure yarn-site.xml

yarnsitetxt="

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

"

for i in $yarnsitetxt

do

sed -i "/<\/configuration>/i $i" yarn-site.xml

done
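A property written to the wrong file fails silently. For example, if yarn.nodemanager.aux-services lands in mapred-site.xml, the NodeManager never reads it. MapReduce jobs then fail because the mapreduce_shuffle auxiliary service is missing. Below is an optional, standalone sketch that reports which files define a given property. where_defined is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print each of the given files that defines
# the property name passed as the first argument.
where_defined() {
  local name=$1 f
  shift
  for f in "$@"; do
    # -F: match literally, since property names contain dots
    grep -qF "<name>${name}</name>" "$f" && echo "$f"
  done
  return 0
}

# Usage (in /opt/software/hadoop260/etc/hadoop):
#   where_defined yarn.nodemanager.aux-services core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml
```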

cd /opt/software/hadoop260/sbin/

hdfs namenode -format

source /etc/profile

#the first start-dfs.sh asks three times to confirm an SSH host key (NameNode address, localhost, 0.0.0.0), so answer yes automatically

expect << EOF

set timeout -1

spawn start-dfs.sh

expect {

"continue connecting" {send "yes\r";exp_continue}

eof

}

EOF

start-yarn.sh

jps
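jps prints one line per running JVM: a process id followed by the main class name. Below is an optional, standalone sketch that compares that output with the daemons a single-node HDFS + YARN setup is expected to run. missing_daemons is a hypothetical helper, and the daemon list in the usage comment is an assumption for this layout.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): given `jps` output, print each expected
# daemon that is not running; return non-zero if any is missing.
missing_daemons() {
  local jps_out=$1 d rc=0
  shift
  for d in "$@"; do
    # Compare the class-name column exactly, so "NameNode" does not
    # match "SecondaryNameNode".
    if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d {found=1} END {exit !found}'; then
      echo "$d"
      rc=1
    fi
  done
  return "$rc"
}

# Usage after start-dfs.sh and start-yarn.sh:
#   missing_daemons "$(jps)" NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```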

cd /opt/software

tar -zxf /opt/download/hive-1.1.0-cdh5.14.2.tar.gz

mv hive-1.1.0-cdh5.14.2 hive110

cd /opt/software/hive110/lib/

cp /opt/download/mysql-connector-java-5.1.32.jar ./

touch /opt/software/hive110/conf/hive-site.xml

cat >/opt/software/hive110/conf/hive-site.xml<<EOF

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/opt/software/hive110/warehouse</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.221.205:3306/hive110?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>hive.server2.authentication</name>

<value>NONE</value>

<description>

Expects one of [nosasl, none, ldap, kerberos, pam, custom].

Client authentication types.

NONE: no authentication check

LDAP: LDAP/AD based authentication

KERBEROS: Kerberos/GSSAPI authentication

CUSTOM: Custom authentication provider

(Use with property hive.server2.custom.authentication.class)

PAM: Pluggable authentication module

NOSASL: Raw transport

</description>

</property>

<property>

<name>hive.server2.thrift.client.user</name>

<value>root</value>

<description>Username to use against thrift client</description>

</property>

<property>

<name>hive.server2.thrift.client.password</name>

<value>root</value>

<description>Password to use against thrift client</description>

</property>

</configuration>

EOF

echo '#hive'>>/etc/profile.d/my.sh

echo "export HIVE_HOME=/opt/software/hive110">>/etc/profile.d/my.sh

echo 'export PATH=$HIVE_HOME/bin:$PATH'>>/etc/profile.d/my.sh

source /etc/profile

echo $HIVE_HOME

cd /opt/software/hive110/bin/

sleep 6s

./schematool -dbType mysql -initSchema

sleep 6s

nohup hive --service metastore>/dev/null 2>&1 &

sleep 6s

nohup hive --service hiveserver2>/dev/null 2>&1 &

sleep 6s

jps
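The fixed sleep 6s pauses only guess how long the metastore and HiveServer2 need to start. Below is an optional, standalone sketch that instead polls a TCP port until it accepts connections. Its assumptions:

- wait_for_port is a hypothetical name.
- It relies on bash's /dev/tcp redirection, so it must run under bash.
- 9083 and 10000 are Hive's default metastore and HiveServer2 ports, which the hive-site.xml above does not override.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): poll until host:port accepts a TCP
# connection, or give up after `timeout` seconds (default 60).
# Requires bash (/dev/tcp is a bash feature, not a real file).
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-60} waited=0
  # The subshell opens fd 3 and closes it on exit; it fails if the
  # connection is refused.
  until (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for ${host}:${port}" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

# Usage:
#   nohup hive --service metastore >/dev/null 2>&1 &
#   wait_for_port 127.0.0.1 9083 120
#   nohup hive --service hiveserver2 >/dev/null 2>&1 &
#   wait_for_port 127.0.0.1 10000 120
```

Against localhost a closed port is refused immediately. A remote host that silently drops packets could make each attempt block until the TCP connect timeout.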

cd /opt/software

tar -zxf /opt/download/zookeeper-3.4.5-cdh5.14.2.tar.gz

mv zookeeper-3.4.5-cdh5.14.2/ zookeeper345

cd zookeeper345/conf/

cp zoo_sample.cfg zoo.cfg

sed -i '/dataDir=\/tmp\/zookeeper/ s/\/tmp\/zookeeper/\/opt\/software\/zookeeper345\/mydata/' /opt/software/zookeeper345/conf/zoo.cfg

sed -i '/# the port at which the clients will connect/i\server.1=single11:2888:3888' /opt/software/zookeeper345/conf/zoo.cfg

mkdir /opt/software/zookeeper345/mydata

touch /opt/software/zookeeper345/mydata/myid

echo "1">/opt/software/zookeeper345/mydata/myid

echo "export ZK_HOME=/opt/software/zookeeper345">>/etc/profile.d/my.sh

echo 'export PATH=$ZK_HOME/bin:$PATH'>>/etc/profile.d/my.sh

source /etc/profile

zkServer.sh start

zkServer.sh status

jps
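Besides zkServer.sh status, ZooKeeper 3.4.5 answers the ruok four-letter command on its client port with imok when it is running. Below is an optional, standalone sketch of that probe using bash's /dev/tcp. zk_ruok is a hypothetical name, and 2181 is the default clientPort, which the zoo.cfg above leaves unchanged.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): send "ruok" to a ZooKeeper client port and
# succeed only if the reply is "imok". Requires bash (/dev/tcp).
zk_ruok() {
  local host=${1:-127.0.0.1} port=${2:-2181} reply=""
  # stderr is redirected first so a refused connection prints nothing;
  # if opening fd 3 fails, the group is skipped and reply stays empty.
  {
    printf 'ruok' >&3
    # The reply has no trailing newline; read still stores it in $reply.
    read -r -t 5 reply <&3
  } 2>/dev/null 3<>"/dev/tcp/${host}/${port}"
  [ "$reply" = "imok" ]
}

# Usage:
#   zk_ruok 127.0.0.1 2181 && echo "zookeeper is up"
```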

cd /opt/software/

tar -zxf /opt/download/hbase-1.2.0-cdh5.14.2.tar.gz

mv hbase-1.2.0-cdh5.14.2/ hbase120

chown -R root:root /opt/software/hbase120

cd /opt/software/hbase120/conf/

echo 'export JAVA_HOME=/opt/software/jdk180'>>/opt/software/hbase120/conf/hbase-env.sh

echo 'export HBASE_MANAGES_ZK=false'>>/opt/software/hbase120/conf/hbase-env.sh

hbasesitetxt="

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.221.205:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/opt/software/zookeeper345/mydata</value>

</property>

"

for i in $hbasesitetxt

do

sed -i "/<\/configuration>/i $i" hbase-site.xml

done

echo "export HBASE_HOME=/opt/software/hbase120">>/etc/profile.d/my.sh

echo 'export PATH=$HBASE_HOME/bin:$PATH'>>/etc/profile.d/my.sh

source /etc/profile

start-hbase.sh

jps
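Edits to hbase-env.sh can be made repeatable, whatever the template's layout, by replacing an existing export line or appending one. Below is an optional, standalone sketch. set_env_var is a hypothetical helper, GNU sed is assumed, and the value must not contain "|", "&" or backslashes.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): ensure "export NAME=value" is set in an env
# script. An existing uncommented "export NAME=" line is replaced;
# otherwise the line is appended. Commented-out template lines are left alone.
set_env_var() {
  local file=$1 name=$2 value=$3
  if grep -q "^export ${name}=" "$file"; then
    sed -i "s|^export ${name}=.*|export ${name}=${value}|" "$file"
  else
    printf 'export %s=%s\n' "$name" "$value" >> "$file"
  fi
}

# Usage:
#   set_env_var /opt/software/hbase120/conf/hbase-env.sh JAVA_HOME /opt/software/jdk180
#   set_env_var /opt/software/hbase120/conf/hbase-env.sh HBASE_MANAGES_ZK false
```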

#sqoop

cd /opt/software

tar -zxf /opt/download/sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha/ sqoop146

cd /opt/software/sqoop146/lib/

cp /opt/download/mysql-connector-java-5.1.32.jar ./

cp /opt/software/hadoop260/share/hadoop/common/hadoop-common-2.6.0-cdh5.14.2.jar ./

cp /opt/software/hadoop260/share/hadoop/hdfs/hadoop-hdfs-2.6.0-cdh5.14.2.jar ./

cp /opt/software/hadoop260/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0-cdh5.14.2.jar ./

cd /opt/software/sqoop146/conf/

cp sqoop-env-template.sh sqoop-env.sh

cat >>sqoop-env.sh <<EOF

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/opt/software/hadoop260

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/opt/software/hadoop260/share/hadoop/mapreduce

#set the path to where bin/hbase is available

export HBASE_HOME=/opt/software/hbase120

#Set the path to where bin/hive is available

export HIVE_HOME=/opt/software/hive110

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/opt/software/zookeeper345/conf

EOF

cd /opt/software/sqoop146

mkdir mylog

echo "#sqoop">>/etc/profile.d/my.sh

echo "export SQ_HOME=/opt/software/sqoop146">>/etc/profile.d/my.sh

echo 'export PATH=$SQ_HOME/bin:$PATH'>>/etc/profile.d/my.sh

echo 'export LOGDIR=$SQ_HOME/mylog/'>>/etc/profile.d/my.sh

source /etc/profile

sqoop list-databases --connect jdbc:mysql://192.168.221.205:3306 --username root --password root
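The machine's address is repeated in several places: core-site.xml, hive-site.xml, hbase-site.xml and the sqoop command. If they disagree, one component points at a different machine. Below is an optional, standalone sketch that lists every distinct IPv4 address in the given files, so a leftover address stands out. distinct_ips is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the distinct IPv4-looking addresses
# found in the given files, one per line, sorted.
distinct_ips() {
  # -o: only the match, -h: no file names, -E: extended regex
  grep -ohE '([0-9]{1,3}\.){3}[0-9]{1,3}' "$@" | sort -u
}

# Usage (on a single-node install, expect exactly one address):
#   distinct_ips /opt/software/hadoop260/etc/hadoop/core-site.xml \
#     /opt/software/hive110/conf/hive-site.xml \
#     /opt/software/hbase120/conf/hbase-site.xml
```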

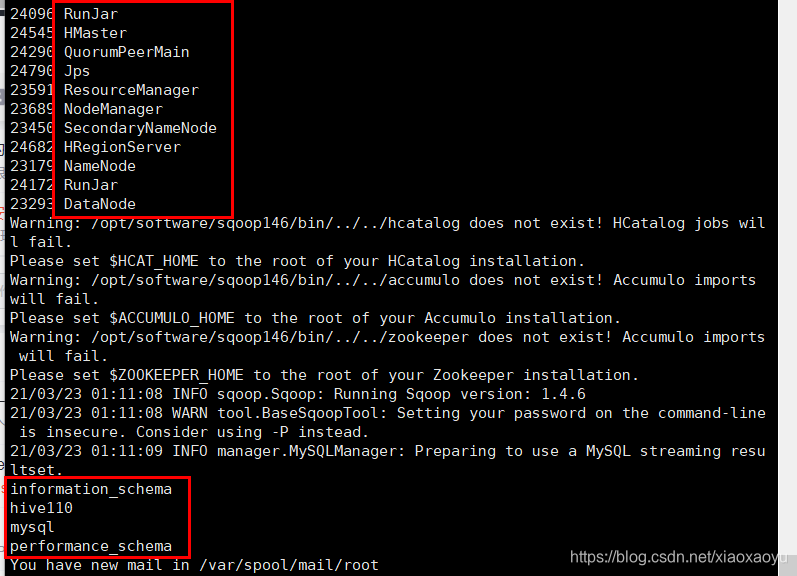

As the screenshot shows, all services are running and Sqoop can connect to the database.