WeChat official account: 运维开发故事; author: 姜总

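Background

Using NFS as volume storage in k8s is nothing new; it is the simplest file storage option and the easiest to get started with. I recently needed NFS storage in k8s, so this post records the setup, along with a few tricks and the pitfalls I ran into along the way.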

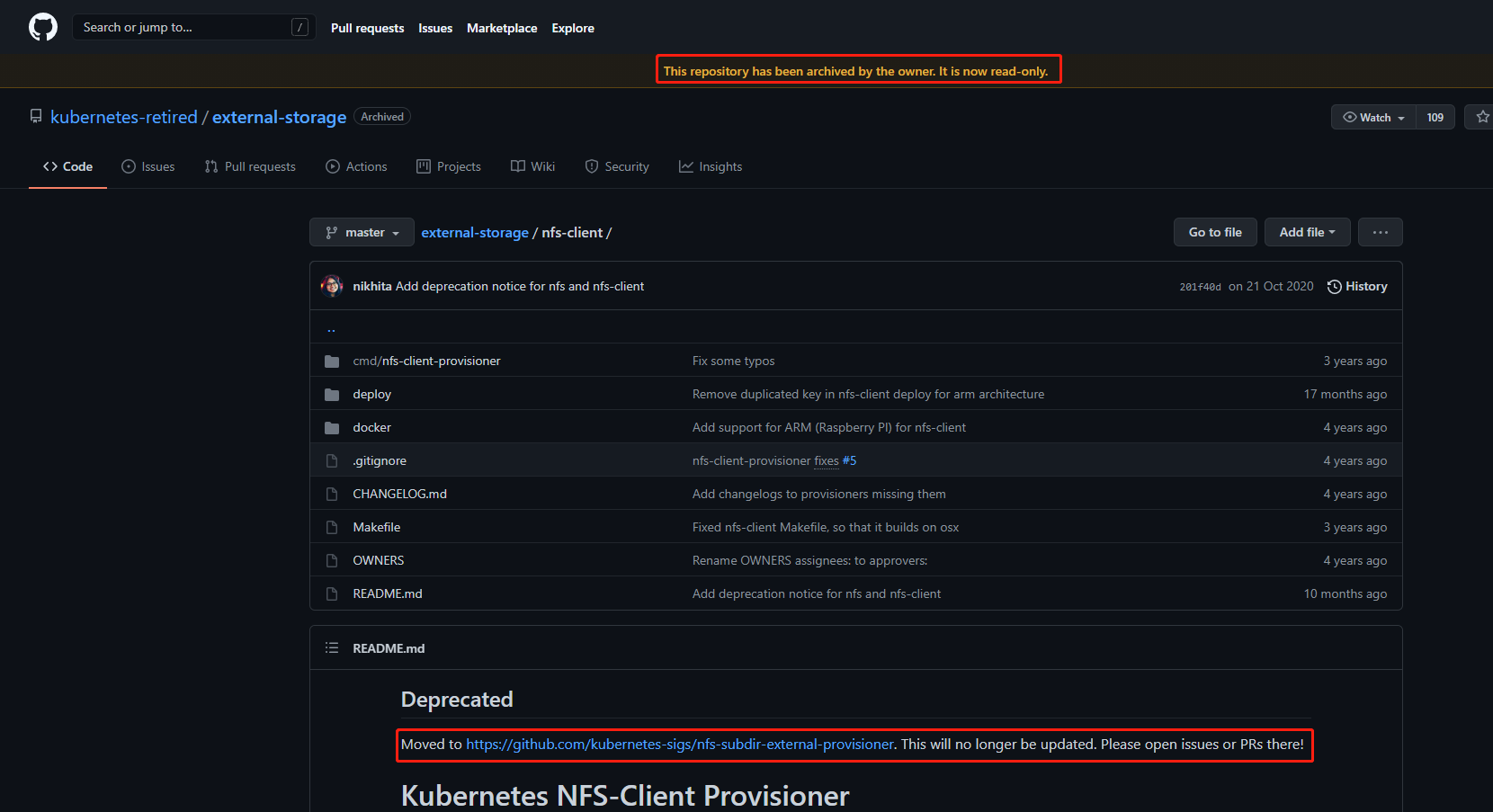

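If you open the GitHub repository of the NFS provisioner, you will see a notice that the repository has been archived by its owner and is now read-only.

Old NFS repository: https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client

The Deprecated notice below it says the project has moved to another GitHub repository. In other words, this repository is no longer updated, and ongoing development happens in the repository named after "Moved to".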

New repository: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

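Compared with the old repository, the new one adds a feature: a pathPattern parameter, which lets you configure the subdirectory used for each PV.

Out of curiosity, let's deploy the new NFS provisioner. The YAML files below can be found in the project's deploy directory. I adjusted them slightly for my environment, for example the IP address of the NFS server; change the NFS server address and path to match your own setup. This walkthrough was done on k8s 1.19.0.

Deploying nfs-client-provisioner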

class.yaml

$ cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.16.33.4
            - name: NFS_PATH
              value: /
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.16.33.4
            path: /

rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: kube-system
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

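Notes:

If the image cannot be pulled, pull it on a machine that can reach the registry (for example one outside mainland China), then import it onto the cluster nodes.

The RBAC manifest generally needs no changes. Configuring subdirectories requires a change to class.yaml, which is covered later.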
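The image workaround from the first note could look roughly like the sketch below. It assumes Docker is the container tool on both machines; the function names and the tarball path are made up for illustration, and on containerd-only nodes the import step would be different.

```shell
# Sketch only: function names are hypothetical helpers, not part of any tool.

# Run on a machine that can reach the registry:
# pull the image, then save it to a tarball.
export_image() {
  docker pull "$1" && docker save -o "$2" "$1"
}

# Run on each cluster node after copying the tarball over (for example with scp):
# load the image into the local Docker image store.
import_image() {
  docker load -i "$1"
}
```

For example, `export_image k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 nfs-provisioner.tar` on the outside machine, then `import_image nfs-provisioner.tar` on every node that may run the provisioner pod.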

Create all the resources

kubectl apply -f class.yaml -f deployment.yaml -f rbac.yaml
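After applying the manifests it is worth confirming that the StorageClass exists and the provisioner has rolled out. A minimal sketch, assuming the names used in the manifests above; the helper name `check_provisioner` is hypothetical:

```shell
# Sketch: verify the StorageClass and wait for the provisioner Deployment.
# The namespace defaults to kube-system, as in deployment.yaml above.
check_provisioner() {
  ns="${1:-kube-system}"
  kubectl get storageclass managed-nfs-storage &&
    kubectl -n "$ns" rollout status deployment/nfs-client-provisioner --timeout=120s
}
```

Calling `check_provisioner` returns non-zero if the StorageClass is missing or the Deployment does not become ready within the timeout.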

Create a PVC with a simple example

$ cat test-pvc-2.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc-2
  namespace: nacos
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi

$ cat test-nacos-pod-2.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos-c1-sit-tmp-1
  labels:
    appEnv: sit
    appName: nacos-c1-sit-tmp-1
  namespace: nacos
spec:
  serviceName: nacos-c1-sit-tmp-1
  replicas: 3
  selector:
    matchLabels:
      appEnv: sit
      appName: nacos-c1-sit-tmp-1
  template:
    metadata:
      labels:
        appEnv: sit
        appName: nacos-c1-sit-tmp-1
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: nacos
          image: www.ayunw.cn/library/nacos/nacos-server:1.4.1
          ports:
            - containerPort: 8848
          env:
            - name: NACOS_REPLICAS
              value: "1"
            - name: MYSQL_SERVICE_HOST
              value: mysql.ayunw.cn
            - name: MYSQL_SERVICE_DB_NAME
              value: nacos_c1_sit
            - name: MYSQL_SERVICE_PORT
              value: "3306"
            - name: MYSQL_SERVICE_USER
              value: nacos
            - name: MYSQL_SERVICE_PASSWORD
              value: xxxxxxxxx
            - name: MODE
              value: cluster
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: PREFER_HOST_MODE
              value: hostname
            - name: SPRING_DATASOURCE_PLATFORM
              value: mysql
            - name: TOMCAT_ACCESSLOG_ENABLED
              value: "true"
            - name: NACOS_AUTH_ENABLE
              value: "true"
            # StatefulSet pods are named <statefulset-name>-<ordinal>, so the hostnames start with nacos-c1-sit-tmp-1-
            - name: NACOS_SERVERS
              value: "nacos-c1-sit-tmp-1-0.nacos-c1-sit-tmp-1.nacos.svc.cluster.local:8848 nacos-c1-sit-tmp-1-1.nacos-c1-sit-tmp-1.nacos.svc.cluster.local:8848 nacos-c1-sit-tmp-1-2.nacos-c1-sit-tmp-1.nacos.svc.cluster.local:8848"
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 500m
              memory: 5Gi
            requests:
              cpu: 100m
              memory: 512Mi
          volumeMounts:
            - name: data
              mountPath: /home/nacos/plugins/peer-finder
              subPath: peer-finder
            - name: data
              mountPath: /home/nacos/data
              subPath: data
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        storageClassName: "managed-nfs-storage"
        accessModes:
          - "ReadWriteMany"
        resources:
          requests:
            storage: 10Gi

Inspect the PVCs and the data on the NFS share

# ll
total 12
drwxr-xr-x 4 root root 4096 Aug 3 13:30 nacos-data-nacos-c1-sit-tmp-1-0-pvc-90d74547-0c71-4799-9b1c-58d80da51973
drwxr-xr-x 4 root root 4096 Aug 3 13:30 nacos-data-nacos-c1-sit-tmp-1-1-pvc-18b3e220-d7e5-4129-89c4-159d9d9f243b
drwxr-xr-x 4 root root 4096 Aug 3 13:31 nacos-data-nacos-c1-sit-tmp-1-2-pvc-26737f88-35cd-42dc-87b6-3b3c78d823da
# ll nacos-data-nacos-c1-sit-tmp-1-0-pvc-90d74547-0c71-4799-9b1c-58d80da51973
total 8
drwxr-xr-x 2 root root 4096 Aug 3 13:30 data
drwxr-xr-x 2 root root 4096 Aug 3 13:30 peer-finder
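The directory names in this listing can be read as `<namespace>-<pvcName>-<pvName>`, which is how the provisioner names directories when no pathPattern is set, and a PVC created from volumeClaimTemplates is named `<template>-<statefulset>-<ordinal>`. The sketch below only rebuilds the first name from the listing to make that rule visible; both function names are hypothetical.

```shell
# PVCs created from volumeClaimTemplates are named <template>-<statefulset>-<ordinal>.
statefulset_pvc_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Without pathPattern, the directory on the share is <namespace>-<pvcName>-<pvName>.
default_nfs_dir() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

pvc="$(statefulset_pvc_name data nacos-c1-sit-tmp-1 0)"
default_nfs_dir nacos "$pvc" pvc-90d74547-0c71-4799-9b1c-58d80da51973
# prints nacos-data-nacos-c1-sit-tmp-1-0-pvc-90d74547-0c71-4799-9b1c-58d80da51973
```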

Configuring subdirectories

Delete the StorageClass created earlier from class.yaml, add the pathPattern parameter, then recreate the sc.

$ kubectl delete -f class.yaml
$ vi class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"
  # add the following parameter
  pathPattern: "${.PVC.namespace}/${.PVC.annotations.nfs.io/storage-path}"

Create a PVC to check whether the PV is placed in a subdirectory

$ cat test-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc-2
  namespace: nacos
  annotations:
    nfs.io/storage-path: "test-path-two" # not required, depending on whether this annotation was shown in the storage class description
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

Create the resources

kubectl apply -f class.yaml -f test-pvc.yaml
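Before looking at the NFS share, it helps to confirm that the claim was actually provisioned. A small sketch, assuming the PVC name and namespace from test-pvc.yaml; the helper names and the 2-second polling interval are arbitrary choices for illustration:

```shell
# Print the phase of a PVC (for example Pending or Bound).
pvc_phase() {
  kubectl -n "$1" get pvc "$2" -o jsonpath='{.status.phase}'
}

# Poll until the PVC is Bound; give up after the given number of tries (default 30).
wait_for_bound() {
  ns="$1"; name="$2"; tries="${3:-30}"
  while [ "$tries" -gt 0 ]; do
    [ "$(pvc_phase "$ns" "$name")" = "Bound" ] && return 0
    tries=$((tries - 1))
    sleep 2
  done
  return 1
}
```

Usage: `wait_for_bound nacos test-pvc-2`. If it times out, `kubectl -n nacos describe pvc test-pvc-2` and the provisioner pod logs are the first places to look.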

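Check the result

On a machine that has the NFS export mounted, look at the generated directories. The subdirectory has indeed been created, and its path follows the rule "namespace/annotation value", which matches the pathPattern defined in the StorageClass above. Note that the listing below appears to come from a run in which nfs.io/storage-path was set to nacos-pvc-c1-pro; with the test-pvc.yaml shown above, the directory would be nacos/test-path-two.

# pwd
/data/nfs
# ll nacos/
total 4
drwxr-xr-x 2 root root 4096 Aug 3 10:21 nacos-pvc-c1-pro
# tree -L 2 .
.
└── nacos
    └── nacos-pvc-c1-pro

2 directories, 0 files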
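To make the mapping from pathPattern to directory concrete, the sketch below expands the pattern from class.yaml for a given namespace and annotation value. It only imitates the substitution with sed for these two placeholders; it is not the provisioner's own code, and `render_path` is a hypothetical name.

```shell
# Expand the pathPattern from class.yaml for one namespace and one
# nfs.io/storage-path annotation value (simulation only).
render_path() {
  ns="$1"; storage_path="$2"
  pattern='${.PVC.namespace}/${.PVC.annotations.nfs.io/storage-path}'
  printf '%s\n' "$pattern" |
    sed -e "s|\${.PVC.namespace}|$ns|" \
        -e "s|\${.PVC.annotations.nfs.io/storage-path}|$storage_path|"
}

render_path nacos test-path-two      # prints nacos/test-path-two
render_path nacos nacos-pvc-c1-pro   # prints nacos/nacos-pvc-c1-pro
```

The resulting path is relative to the NFS export configured in NFS_PATH, which is why the directory shows up as nacos/... under the mount point.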

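Provisioner high availability

In production, single points of failure should be avoided as far as possible, so here we consider a highly available setup for the provisioner.

The updated provisioner configuration is as follows: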

$ cat nfs-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
spec:
  # run 3 pod replicas for high availability
  replicas: 3
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      imagePullSecrets:
        - name: registry-auth-paas
      containers:
        - name: nfs-client-provisioner
          image: www.ayunw.cn/nfs-subdir-external-provisioner:v4.0.2-31-gcb203b4
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            # enable leader election so that only one replica is active at a time
            - name: ENABLE_LEADER_ELECTION
              value: "True"
            - name: NFS_SERVER
              value: 172.16.33.4
            - name: NFS_PATH
              value: /
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.16.33.4
            path: /

Recreate the resources

kubectl delete -f nfs-class.yaml -f nfs-deployment.yaml

kubectl apply -f nfs-class.yaml -f nfs-deployment.yaml

Check whether provisioner high availability is working

# kubectl get po -n kube-system | grep nfs
nfs-client-provisioner-666df4d979-fdl8l 1/1 Running 0 20s
nfs-client-provisioner-666df4d979-n54ps 1/1 Running 0 20s
nfs-client-provisioner-666df4d979-s4cql 1/1 Running 0 20s
# kubectl logs -f --tail=20 nfs-client-provisioner-666df4d979-fdl8l -n kube-system
I0803 06:04:41.406441 1 leaderelection.go:242] attempting to acquire leader lease kube-system/nfs-provisioner-baiducfs...
^C
# kubectl logs -f --tail=20 -n kube-system nfs-client-provisioner-666df4d979-n54ps
I0803 06:04:41.961617 1 leaderelection.go:242] attempting to acquire leader lease kube-system/nfs-provisioner-baiducfs...
^C
[root@qing-core-kube-master-srv1 nfs-storage]# kubectl logs -f --tail=20 -n kube-system nfs-client-provisioner-666df4d979-s4cql
I0803 06:04:39.574258 1 leaderelection.go:242] attempting to acquire leader lease kube-system/nfs-provisioner-baiducfs...
I0803 06:04:39.593388 1 leaderelection.go:252] successfully acquired lease kube-system/nfs-provisioner-baiducfs
I0803 06:04:39.593519 1 event.go:278] Event(v1.ObjectReference{Kind:"Endpoints", Namespace:"kube-system", Name:"nfs-provisioner-baiducfs", UID:"3d5cdef6-57da-445e-bcd4-b82d0181fee4", APIVersion:"v1", ResourceVersion:"1471379708", FieldPath:""}): type: 'Normal' reason: 'LeaderElection' nfs-client-provisioner-666df4d979-s4cql_590ac6eb-ccfd-4653-9de5-57015f820b84 became leader
I0803 06:04:39.593559 1 controller.go:820] Starting provisioner controller nfs-provisioner-baiducfs_nfs-client-provisioner-666df4d979-s4cql_590ac6eb-ccfd-4653-9de5-57015f820b84!
I0803 06:04:39.694505 1 controller.go:869] Started provisioner controller nfs-provisioner-baiducfs_nfs-client-provisioner-666df4d979-s4cql_590ac6eb-ccfd-4653-9de5-57015f820b84!

The line "successfully acquired lease kube-system/nfs-provisioner-baiducfs" shows that the third pod was elected leader, while the other two keep waiting to acquire the lease. High availability is working.
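Instead of tailing each pod's log, the current leader can also be read from the lock object itself. The log above shows the lock is an Endpoints object, and Endpoints-based leader election stores its record in the `control-plane.alpha.kubernetes.io/leader` annotation. A sketch under that assumption; `current_leader` is a hypothetical helper name:

```shell
# Print the leader-election record stored on the lock Endpoints object.
# $1 = namespace, $2 = name of the lock (the lease name shown in the logs).
current_leader() {
  ns="$1"; lock="$2"
  kubectl -n "$ns" get endpoints "$lock" \
    -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}'
}
```

With the names from the log above this would be `current_leader kube-system nfs-provisioner-baiducfs`; the output is a small JSON document whose holderIdentity field names the pod that currently holds the lease.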

Errors

During the process, describe pod showed the following error:

Warning FailedMount 10s kubelet, node1.ayunw.cn MountVolume.SetUp failed for volume "nfs-client-root" : mount failed: exit status 32
Mounting command: systemd-run
Mounting arguments: --description=Kubernetes transient mount for /data/kubernetes/kubelet/pods/2ca70aa9-433c-4d10-8f87-154ec9569504/volumes/kubernetes.io~nfs/nfs-client-root --scope -- mount -t nfs 172.16.41.7:/data/nfs_storage /data/kubernetes/kubelet/pods/2ca70aa9-433c-4d10-8f87-154ec9569504/volumes/kubernetes.io~nfs/nfs-client-root
Output: Running scope as unit: run-rdcc7cfa6560845969628fc551606e69d.scope
mount: /data/kubernetes/kubelet/pods/2ca70aa9-433c-4d10-8f87-154ec9569504/volumes/kubernetes.io~nfs/nfs-client-root: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.

Solution:

Investigation showed that the node the pod was scheduled onto had no NFS client installed, so the mount.nfs helper was missing. Installing the NFS client package nfs-utils on that node fixes it.
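Since any node may end up running a pod that mounts NFS, the client is best installed on every node. The sketch below picks the package by package manager. The article only mentions nfs-utils (RHEL/CentOS family); nfs-common for Debian/Ubuntu is an addition here, and both function names are hypothetical.

```shell
# Map a package manager to the package that provides the NFS mount helper.
nfs_client_package() {
  case "$1" in
    yum|dnf) echo nfs-utils ;;
    apt|apt-get) echo nfs-common ;;
    *) echo "unsupported package manager: $1" >&2; return 1 ;;
  esac
}

# Install the NFS client with whichever supported package manager is present.
# Run as root on each node.
install_nfs_client() {
  for pm in dnf yum apt-get; do
    if command -v "$pm" >/dev/null 2>&1; then
      "$pm" install -y "$(nfs_client_package "$pm")"
      return
    fi
  done
  echo "no supported package manager found" >&2
  return 1
}
```

After installation, the pod that failed with FailedMount should mount the volume on its next retry; deleting the pod forces an immediate retry.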