整体框架

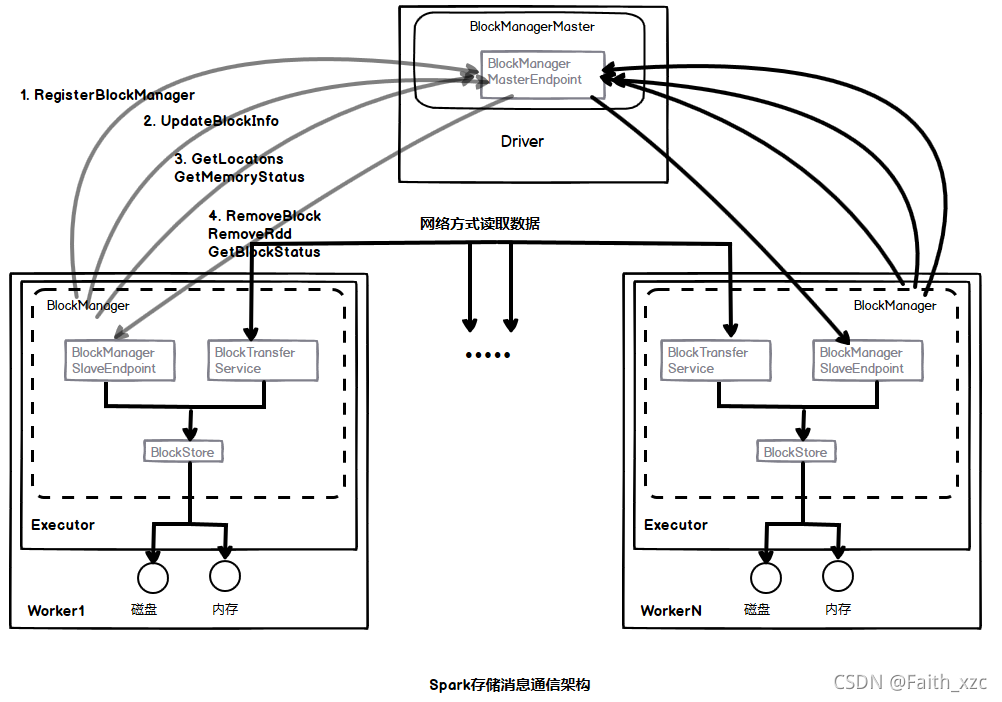

Spark的存储采取了主从模式,即Master / Slave模式,整个存储模块使用RPC的消息通信方式。其中:

- Master负责整个应用程序运行期间的数据块元数据的管理和维护

- Slave一方面负责将本地数据块的状态信息上报给Master,另一方面接受从Master传过来的执行命令。如获取数据块状态、删除RDD/数据块等命令。每个Slave存在数据传输通道,根据需要在Slave之间进行远程数据的读取和写入。

Spark的存储整体框架图如下:

根据Spark存储整体框架图,下面将根据数据生命周期中的消息进行通信过程分析。

(1)在应用程序启动时,SparkContext会创建Driver端的SparkEnv,在该SparkEnv中实例化BlockManager和BlockManagerMaster,在BlockManagerMaster内部创建消息通信的终端点BlockManagerMasterEndpoint。

在Executor启动时也会创建其SparkEnv, 在该SparkEnv中实例化BlockManager和负责网络数据传输服务的BlockTransferService。在BlockManager初始化过程中,一方面会加入BlockManagerMasterEndpoint 终端点的引用, 另一方面会创建Executor 消息通信的 BlockManagerSlaveEndpoint终端点, 并把该终端点的引用注册到Driver中, 这样Driver和 Executor相互持有通信终端点的引用, 可以在应用桯序执行过程中进行消息通信。

具体实现代码如下:

def registerOrLookupEndpoint(name: String, endpointCreator: => RpcEndpoint): RpcEndpointRef = {

...

//创建远程数据传输服务,使用Netty方式

val blockTransferService =

new NettyBlockTransferService(conf, securityManager, bindAddress, advertiseAddress,

blockManagerPort, numUsableCores)

//创建blockManagerMaster,如果是Driver端在blockManagerMaster内部则创建终端点BlockManagerMasterEndpoint

//如果是Executor,则创建BlockManagerMasterEndpoint的引用

val blockManagerMaster = new BlockManagerMaster(registerOrLookupEndpoint(

BlockManagerMaster.DRIVER_ENDPOINT_NAME,

new BlockManagerMasterEndpoint(rpcEnv, isLocal, conf, listenerBus)),

conf, isDriver)

//创建blockManager,如果是Driver端包含blockManagerMaster,如果是executor包含的是blockManagerMaster

//的引用,另外blockManager包含了远程数据传输服务,当BlockManager调用initialize()方法才生效

val blockManager = new BlockManager(executorId, rpcEnv, blockManagerMaster,

serializerManager, conf, memoryManager, mapOutputTracker, shuffleManager,

blockTransferService, securityManager, numUsableCores)

...

}

其中BlockManager调用initialize()方法的初始化如下:

def initialize(appId: String): Unit = {

//在Executor中启动远程数据传输服务,根据配置启动传输服务器blockTransferService,

//该服务器启动后等待其他节点发送请求消息

blockTransferService.init(this)

shuffleClient.init(appId)

blockReplicationPolicy = {

val priorityClass = conf.get(

"spark.storage.replication.policy", classOf[RandomBlockReplicationPolicy].getName)

val clazz = Utils.classForName(priorityClass)

val ret = clazz.newInstance.asInstanceOf[BlockReplicationPolicy]

logInfo(s"Using $priorityClass for block replication policy")

ret

}

//获取blockManager编号

val id =

BlockManagerId(executorId, blockTransferService.hostName, blockTransferService.port, None)

val idFromMaster = master.registerBlockManager(

id,

maxOnHeapMemory,

maxOffHeapMemory,

slaveEndpoint)

blockManagerId = if (idFromMaster != null) idFromMaster else id

//获取shuffle服务编号,如果启动外部shuffle服务,则加入外部Shuffle服务端口信息,

//否则使用使用blockManager编号

shuffleServerId = if (externalShuffleServiceEnabled) {

logInfo(s"external shuffle service port = $externalShuffleServicePort")

BlockManagerId(executorId, blockTransferService.hostName, externalShuffleServicePort)

} else {

blockManagerId

}

//如果外部shuffle服务启动并且为executor节点,则注册为外部shuffle服务

if (externalShuffleServiceEnabled && !blockManagerId.isDriver) {

registerWithExternalShuffleServer()

}

logInfo(s"Initialized BlockManager: $blockManagerId")

}

(2)写入、 更新或删除数据完毕后, 发送数据块的最新状态消息UpdateBlockinfo给BlockManagerMasterEndpoint终端点, 由其更新数据块的元数据。 该终端点的元数据存放在BlockManagerMasterEndpoint的3个HashMap中, 分别如下:

class BlockManagerMasterEndpoint(override val rpcEnv: RpcEnv,val isLocal: Boolean,conf: SparkConf,listenerBus: LiveListenerBus)

extends ThreadSafeRpcEndpoint with Logging {

...

//该HashMap中存放了BlockManagerId与BlockManagerInfo的对应,其中BlockManagerInfo

//包含了executor的内存使用情况、数据块的使用情况、已被缓存的数据块和executor终端点的引用

private val blockManagerInfo = new mutable.HashMap[BlockManagerId, BlockManagerInfo]

//该HashMap存放了BlockManagerId和executorId对应列表

private val blockManagerIdByExecutor = new mutable.HashMap[String, BlockManagerId]

//该HashMap存放了BlockManagerId和BlockId序列所对应的列表,原因在于一个数据块可能存储

//多个副本,保存在多个executor中

private val blockLocations = new JHashMap[BlockId, mutable.HashSet[BlockManagerId]]

...

}

(3)应用程序数据存储后, 在获取远程节点数据、 获取RDD执行的首选位置等操作时需要根据数据块的编号查询数据块所处的位置, 此时发送 GetLocations 或GetLocationsMultipleBlocklds等消息给BlockManagerMasterEndpoint终端点,通过对元数据的查询获取数据块的位置信息。

代码实现如下:

private def getLocations(blockId: BlockId): Seq[BlockManagerId] = {

//根据blockId判断是否包含数据块,如果包含,则返回其对应的BlockManagerId序列

if (blockLocations.containsKey(blockId)) blockLocations.get(blockId).toSeq

else Seq.empty

}

(4)Spark提供删除RDD、 数据块和广播变量等方式。 当数据需要删除时, 提交删除消息给BlockManagerSlaveEndpoint 终端点, 在该终端点发起删除操作, 删除操作一方面需要删除Driver端元数据信息,另一方面需要发送消息通知Executor,删除对应的物理数据。下面以RDD的unpersistRDD方法描述其删除过程。类调用关系图如下:

首先, 在SparkConext中调用unpersistRDD方法, 在该方法中发送removeRdd 消息给 BlockManagerMasterEndpoint终端点;然后, 该终端点接收到消息时, 从blockLocations列表中找出该ROD对应的数据存在BlockManagerld 列表, 查询完毕后, 更新blockLocations和 blockManagerlnfo两个数据块元数据列表; 然后, 把获取的BlockManagerld列表, 发送消息给所在BlockManagerSlaveEndpoint 终端点, 通知其删除该 Executor上的RDD, 删除时调用 BlockManager的removeRdd方法, 删除在Executor上RDD所对应的数据块。 其中在 BlockManagerMasterEndpoint终端点的removeRdd代码如下:

private def removeRdd(rddId: Int): Future[Seq[Int]] = {

//在blockLocations和blockManagerInfo中删除该RDD的数据元消息

//首先,根据RDD编号获取该RDD存储的数据块信息

val blocks = blockLocations.asScala.keys.flatMap(_.asRDDId).filter(_.rddId == rddId)

blocks.foreach {

blockId =>

//根据数据块信息找出这些数据块所在BlockManagerId列表,遍历这些列表并删除

//BlockManager包含该数据块的元数据,同时删除blockLocations对应数据块的元数据

val bms: mutable.HashSet[BlockManagerId] = blockLocations.get(blockId)

bms.foreach(bm => blockManagerInfo.get(bm).foreach(_.removeBlock(blockId)))

blockLocations.remove(blockId)

}

//最后发送RemoveRdd消息给executor,通知其删除RDD

val removeMsg = RemoveRdd(rddId)

val futures = blockManagerInfo.values.map {

bm =>

bm.slaveEndpoint.ask[Int](removeMsg).recover {

case e: IOException =>

logWarning(s"Error trying to remove RDD $rddId from block manager ${bm.blockManagerId}",

e)

0 // zero blocks were removed

}

}.toSeq

Future.sequence(futures)

}

存储级别

Spark虽是基于内存的计算,但RDD的数据集不仅可以存储在内存中,还可以使用persist方法或cache方法显示地将RDD的数据集缓存到内存或者磁盘中。persist的代码实现如下:

private def persist(newLevel: StorageLevel, allowOverride: Boolean): this.type = {

// TODO: Handle changes of StorageLevel

//如果RDD指定了非NONE的存储级别,该存储级别则不能进行修改

if (storageLevel != StorageLevel.NONE && newLevel != storageLevel && !allowOverride) {

throw new UnsupportedOperationException(

"Cannot change storage level of an RDD after it was already assigned a level")

}

//当RDD原来的存储级别为NONE时,可以对RDD进行持久化处理,在处理前需要先清除SparkContext

//中原来RDD相关的存储元数据,然后加入RDD的持久化信息

if (storageLevel == StorageLevel.NONE) {

sc.cleaner.foreach(_.registerRDDForCleanup(this))

sc.persistRDD(this)

}

//当RDD原来的存储级别为NONE时,把RDD存储级别修改为传入新值

storageLevel = newLevel

this

}

RDD第一次被计算时, persist方法会根据参数StorageLevel的设置采取特定的缓存策略,当RDD原本存储级别为NONE或者新传递进来的存储级别值与原来 的存储级别相等时才进行 操作,由于persist操作是控制操作的一种, 它只是改变了原RDD的元数据信息, 并没有进行数据的存储操作, 真正进行是RDD的iterator方法中。 对于cache方法而言, 它只是persist 方法的一个特例, 即persist方法的参数为MEMORY_ONLY的情况。

在StorageLevel类中, 根据useDisk、 useMemory、 useOffHeap、 deserialized 、replication5 个参数的组合, Spark提供了12种存储级别的缓存策略, 这可以将RDD持久化到内存、磁盘 和外部存储统, 或者是以序列化的方式持久化到内存中, 从至可以在集群的不同节点之间存储多份副本。代码实现如下:

class StorageLevel private(

private var _useDisk: Boolean,

private var _useMemory: Boolean,

private var _useOffHeap: Boolean,

private var _deserialized: Boolean,

private var _replication: Int = 1)

extends Externalizable {

...

object StorageLevel {

val NONE = new StorageLevel(false, false, false, false)

val DISK_ONLY = new StorageLevel(true, false, false, false)

val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)

val MEMORY_ONLY = new StorageLevel(false, true, false, true)

val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)

val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)

val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)

val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)

val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)

val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)

val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)

val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

...

}

RDD存储调用

RDD与数据块Block之间的关系

RDD 包含多个 Partition, 每个 Partition 对应一个数据块 Block, 那么每个RDD 中包含一个或多个数据块 Block, 每个 Block 拥有唯一的编号BlockId,对应数据块编号规则:“rdd_” + rddId + ‘’_" + splitIndex。其中splitIndex为该数据块对应Partition的序列号。

在 persist 方法中并没有发生数据存储操作动作, 实际发生数据操作是任务运行过程中, RDD 调用 iterator 方法时发生的。 在调用过程中, 先根据数据块 Block 编号在 判断是否已经按照指定的存储级别进行存储, 如果存在该数据块 Block, 则从本地或远程节点读取数据; 如果不存在该数据块 Block, 则调用 RDD 的计算方法得出结果, 并把结果按照指定 的存储级别进行存储。 RDD 的 iterator 方法代码如下:

final def iterator(split: Partition, context: TaskContext): Iterator[T] = {

if (storageLevel != StorageLevel.NONE) {

//如果存在存储级别,尝试读取内存的数据进行迭代计算

getOrCompute(split, context)

} else {

//如果不存在存储级别,则直接读取数据进行迭代计算或者读取检查点结果进行迭代计算

computeOrReadCheckpoint(split, context)

}

}

其中调用的getOrCompute 方法是存储逻辑的核心, 代码如下:

private[spark] def getOrCompute(partition: Partition, context: TaskContext): Iterator[T] = {

//通过RDD的编号和partition序号获取数据块block的编号

val blockId = RDDBlockId(id, partition.index)

var readCachedBlock = true

// This method is called on executors, so we need call SparkEnv.get instead of sc.env.

//由于该方法由executor调用,可使用sparkEnv代替sc.env

//根据数据块block编号先读取数据,然后再更新数据,这里是读写数据的入口点(getOrElseUpdate)

SparkEnv.get.blockManager.getOrElseUpdate(blockId, storageLevel, elementClassTag, () => {

//如果数据块不在内存,则尝试读取检查点结果进行迭代计算

readCachedBlock = false

computeOrReadCheckpoint(partition, context)

}) match {

//对getOrElseUpdate返回结果进行处理,该结果表示处理成功,记录结果度量信息

case Left(blockResult) =>

if (readCachedBlock) {

val existingMetrics = context.taskMetrics().inputMetrics

existingMetrics.incBytesRead(blockResult.bytes)

new InterruptibleIterator[T](context, blockResult.data.asInstanceOf[Iterator[T]]) {

override def next(): T = {

existingMetrics.incRecordsRead(1)

delegate.next()

}

}

} else {

new InterruptibleIterator(context, blockResult.data.asInstanceOf[Iterator[T]])

}

//对getOrElseUpdate返回结果进行处理,该结果表示处理失败,把该结果返回给调用者,由其决定如何处理

case Right(iter) =>

new InterruptibleIterator(context, iter.asInstanceOf[Iterator[T]])

}

}

在getOrCompute调用getOrElseUpdate方法, 该方法是存储读写数据的入口点:

//该方法是存储读写数据的入口点

def getOrElseUpdate[T](

blockId: BlockId,

level: StorageLevel,

classTag: ClassTag[T],

makeIterator: () => Iterator[T]): Either[BlockResult, Iterator[T]] = {

// Attempt to read the block from local or remote storage. If it's present, then we don't need

// to go through the local-get-or-put path.

//读取数据块入口,尝试从本地数据或者远程读取数据

get[T](blockId)(classTag) match {

case Some(block) =>

return Left(block)

case _ =>

// Need to compute the block.

}

// Initially we hold no locks on this block.

//写输入入口

doPutIterator(blockId, makeIterator, level, classTag, keepReadLock = true) match {

case None =>

val blockResult = getLocalValues(blockId).getOrElse {

releaseLock(blockId)

throw new SparkException(s"get() failed for block $blockId even though we held a lock")

}

releaseLock(blockId)

Left(blockResult)

case Some(iter) =>

Right(iter)

}

}

读数据过程

BlockManager的get方法是读数据的入口点, 在读取时分为本地读取和远程节点读取两个步骤。本地读取使用getLocalValues方法,在该方法中根据不同的存储级别直接调用不同存储实现的方法:而远程节点读取使用getRemote Values方法,在getRemoteValues 方法中调用了 getRemoteBytes方法,在方法中调用远程数据传输服务类BlockTransferService的fetchBlockSync 进行处理,使用Netty的fetchBlocks方法获取数据。整个数据读取类调用如下:

本地读取

本地读取根据不同的存储级别分为内存和磁盘两种读取方式。其介绍分别如下:

1. 内存读取

在getLocalValues方法中,读取内存中的数据根据返回的是封装成BlockResult类型还是数据流,分别调用MemoryStore的getValues和getBytes两种方法,代码如下:

def getLocalValues(blockId: BlockId): Option[BlockResult] = {

...

//使用内存存储级别,并且数据存储在内存情况

if (level.useMemory && memoryStore.contains(blockId)) {

val iter: Iterator[Any] = if (level.deserialized) {

//如果存储时使用反序列化,则直接读取内存中的数据

memoryStore.getValues(blockId).get

} else {

//如果存储时未使用反序列化,则内存中的数据后做反序列化处理

serializerManager.dataDeserializeStream(

blockId, memoryStore.getBytes(blockId).get.toInputStream())(info.classTag)

}

// We need to capture the current taskId in case the iterator completion is triggered

// from a different thread which does not have TaskContext set; see SPARK-18406 for

// discussion.

//数据读取完毕后,返回数据及数据块大小、读取方法等信息

val ci = CompletionIterator[Any, Iterator[Any]](iter, {

releaseLock(blockId, taskAttemptId)

})

Some(new BlockResult(ci, DataReadMethod.Memory, info.size))

}

...

}

在MemoryStore的getValues和getBytes方法中,最终都是通过数据块编号获取内存中的数据, 其代码为:

val entry = en七ries.synchronized {

entries.get(blockld) }

2.磁盘读取

磁盘读取在getLocalValues方法中, 调用的是DiskStore的getBytes方法, 在读取磁盘中的数据后需要把这些数据缓存到内存中, 代码实现如下:

def getLocalValues(blockId: BlockId): Option[BlockResult] = {

...

else if (level.useDisk && diskStore.contains(blockId)) {

//从磁盘获取数据,由于保存到磁盘中的数据是序列化的,读取到的数据也是序列化的

val diskData = diskStore.getBytes(blockId)

val iterToReturn: Iterator[Any] = {

if (level.deserialized) {

//如果存储级别需要反序列化,则把读取数据反序列化,然后存储到内存中去

val diskValues = serializerManager.dataDeserializeStream(

blockId,

diskData.toInputStream())(info.classTag)

maybeCacheDiskValuesInMemory(info, blockId, level, diskValues)

} else {

//如果存储级别不需要反序列化,则直接把这些序列化数据存储到内存中

val stream = maybeCacheDiskBytesInMemory(info, blockId, level, diskData)

.map {

_.toInputStream(dispose = false) }

.getOrElse {

diskData.toInputStream() }

//返回的数据需进行反序列化处理

serializerManager.dataDeserializeStream(blockId, stream)(info.classTag)

}

}

//数据读取完毕后,返回数据及数据块大小,读取方法等信息

val ci = CompletionIterator[Any, Iterator[Any]](iterToReturn, {

releaseLockAndDispose(blockId, diskData, taskAttemptId)

})

Some(new BlockResult(ci, DataReadMethod.Disk, info.size))

...

}

在DiskStore中的getBytes方法中, 调用DiskBlockManager的getfile方法获取数据块所在文件的句柄。该文件名为数据块的文件名,文件所在一级目录和二级子目录索引值通过文件名的哈希值取模获取,其代码实现如下:

def getFile(filename: String): File = {

//根据文件名的哈希值获取一级目录和二级目录索引值,其中一级目录索引值为哈希值与一级目录个数的模,

// 而二级目录索引值为哈希值与二级子目录个数的模

val hash = Utils.nonNegativeHash(filename)

val dirId = hash % localDirs.length

val subDirId = (hash / localDirs.length) % subDirsPerLocalDir

//先通过一级目录和二级目录索引值获取该目录,然后判断该目录是否存在

val subDir = subDirs(dirId).synchronized {

val old = subDirs(dirId)(subDirId)

if (old != null) {

old

} else {

//如果该目录不存在则创建该目录,范围为00-63

val newDir = new File(localDirs(dirId), "%02x".format(subDirId))

if (!newDir.exists() && !newDir.mkdir()) {

throw new IOException(s"Failed to create local dir in $newDir.")

}

//判断该文件是否存在,如果不存在,则创建

subDirs(dirId)(subDirId) = newDir

newDir

}

}

//通过文件的路径获取文件的句柄并返回

new File(subDir, filename)

}

获取文件句柄后, 读取整个文件内容。其代码如下:

def getBytes(blockId: BlockId): BlockData = {

//获取数据块所在文件的句柄

val file = diskManager.getFile(blockId.name)

val blockSize = getSize(blockId)

securityManager.getIOEncryptionKey() match {

case Some(key) =>

new EncryptedBlockData(file, blockSize, conf, key)

case _ =>

new DiskBlockData(minMemoryMapBytes, maxMemoryMapBytes, file, blockSize)

}

}

远程读取

在远程节点读取数据的时候, Spark只提供了Netty远程读取方式,下面分析Netty远程数据读取过程。 在Spark中主要由下而两个类处理Netty远程数据读取:

- NettyBlockTransferService: 该类向Shuffle、 存储模块提供了数据存取的接口, 接收到数据存取的命令时, 通过Netty的RPC架构发送消息给指定节点, 请求进行数据存取操作。

- NettyBlockRpcServer: 当Executor启动时, 同时会启动RCP监听器, 当监听到消息 把消息传递到该类进行处理, 消息内容包括读取数据OpenBlocks 和写入数据Upload Block两种。

使用Netty处理远程数据读取流程如下:

(1)Spark远程读取数据入口为getRemoteValues, 然后调用getRemoteBytes方法, 在该方法中调用sortLocations方法先向BlockManagerMasterEndpoint 终端点发送SortLocations消息,请求据数据块所在的位置信息。 当Driver的终端点接收到请求消息时, 根据数据块的编号获取该数据块所在的位置列表, 根据是否是本地节点数据对位置列表进行排序。 其中BlockManager 类中的sortLocations方法代码片段如下:

private def sortLocations(locations: Seq[BlockManagerId]): Seq[BlockManagerId] = {

//获取数据块节点所在节点的信息

val locs = Random.shuffle(locations)

//从获取的节点信息中,优先读取本地节点数据

val (preferredLocs, otherLocs) = locs.partition {

loc => blockManagerId.host == loc.host }

blockManagerId.topologyInfo match {

case None => preferredLocs ++ otherLocs

case Some(_) =>

val (sameRackLocs, differentRackLocs) = otherLocs.partition {

loc => blockManagerId.topologyInfo == loc.topologyInfo

}

preferredLocs ++ sameRackLocs ++ differentRackLocs

}

}

获取数据块的位置列表后,在BlockManager.getRemoteBytes方法中调用BlockTransferService提供的fetchBlockSync方法进行读取远程数据。代码实现如下:

def getRemoteBytes(blockId: BlockId): Option[ChunkedByteBuffer] = {

...

var runningFailureCount = 0

var totalFailureCount = 0

//获取数据块的位置

val locations = sortLocations(blockLocations)

val maxFetchFailures = locations.size

var locationIterator = locations.iterator

while (locationIterator.hasNext) {

val loc = locationIterator.next()

logDebug(s"Getting remote block $blockId from $loc")

//通过blockTransferService提供的fetchBlockSync方法远程获取数据

val data = try {

blockTransferService.fetchBlockSync(

loc.host, loc.port, loc.executorId, blockId.toString, tempFileManager)

} catch {

...

}

//获取到数据后,返回该数据块

if (data != null) {

if (remoteReadNioBufferConversion) {

return Some(new ChunkedByteBuffer(data.nioByteBuffer()))

} else {

return Some(ChunkedByteBuffer.fromManagedBuffer(data))

}

}

logDebug(s"The value of block $blockId is null")

}

logDebug(s"Block $blockId not found")

None

}

(2)调用远程数据传输服务BlockTransferService的fetchBlockSync方法后,在该方法继续调用fetchBlocks方法。

override def fetchBlocks(

host: String,

port: Int,

execId: String,

blockIds: Array[String],

listener: BlockFetchingListener,

tempFileManager: DownloadFileManager): Unit = {

logTrace(s"Fetch blocks from $host:$port (executor id $execId)")

try {

val blockFetchStarter = new RetryingBlockFetcher.BlockFetchStarter {

override def createAndStart(blockIds: Array[String], listener: BlockFetchingListener) {

//根据远程节点的节点和端口创建通信客户端

val client = clientFactory.createClient(host, port)

//通过该客户端向指定节点发送获取数据消息

new OneForOneBlockFetcher(client, appId, execId, blockIds, listener,

transportConf, tempFileManager).start()

}

}

...

}

其中发送读取消息是在OneForOneBlockFetcher类中实现,在该类中的构造函数定义了该消息this.openMessage = new OpenBlocks(appld, execld, blocklds),然后在该类的start方法中向RPC客户端发送消息:

public void start() {

if (blockIds.length == 0) {

throw new IllegalArgumentException("Zero-sized blockIds array");

}

//通过客户端发送读取数据块的消息

client.sendRpc(openMessage.toByteBuffer(), new RpcResponseCallback() {

@Override

public void onSuccess(ByteBuffer response) {

try {

...

}

});

}

(3)远程节点的RPC服务端接收到客户端发送消息时, 在NettyBI ockRpcServer类中对悄息进行匹配。 如果是诮求读取悄息时, 则调用BlockManager的getBlockData方法议取该节点 卜的数据,读取的数据块封装为ManagedBuffer序列缓存在内存中,然后使用Netty提供的传输心迫, 把数据传递到谓求节点上, 完成远程传输任务。

override def receive(

client: TransportClient,

rpcMessage: ByteBuffer,

responseContext: RpcResponseCallback): Unit = {

val message = BlockTransferMessage.Decoder.fromByteBuffer(rpcMessage)

logTrace(s"Received request: $message")

message match {

case openBlocks: OpenBlocks =>

val blocksNum = openBlocks.blockIds.length

//调用blockManager的getBlockData读取该节点上的数据

val blocks = for (i <- (0 until blocksNum).view)

yield blockManager.getBlockData(BlockId.apply(openBlocks.blockIds(i)))

//注册ManagedBuffer序列,利用Netty传输通道进行传输数据

val streamId = streamManager.registerStream(appId, blocks.iterator.asJava,

client.getChannel)

logTrace(s"Registered streamId $streamId with $blocksNum buffers")

responseContext.onSuccess(new StreamHandle(streamId, blocksNum).toByteBuffer)

...

}

}

写数据过程

BlockManager的doPutlterator方法是写数据的入口点。 在该方法中, 根据数据是否缓存到内存中进行处理。 如果不缓存到内存中, 则调用BlockManager 的putIterator方法直接存储磁盘: 如果缓存到内存中, 则先判断数据存储级别是否进行了反序列化。如果设置反序列化, 则说明获取的数据为值类型, 调用putlteratorAsValues方法把数据存入内存;如果没有设置反序列化,则获取的数据为字节类型,调用putIteratorAsBytes方法把数据存入内存中。在把数据存入内存过程中,要判断在内存中展开该数据大小是否足够,当足够时调用BlockManager的putArray方法写入内存,否则把数据写入到磁盘。

在写入数据完成时,一方面把数据块的元数据发送给Driver端的BockManagerMasterEndpoint终端点,请求其更新数据元数据,另一方面判断是否需要创建数据副本,如果需要则调用replicate方法,把数据写到远程节点上,类似于读取远程节点数据,Spark提供Netty方式写输入。数据写入类调用关系如下:

通过上面的方法调用图, 可以知道在BlockManager 的 doPutlterator 方法中根据存储级别和 数据类型确定调用的方法, 当存储级别为内存时, 调用 MemoryStore的写入方法; 当存储级别为硬盘时, 调用 DiskStore的写入方法。 BlockManager.doPutlterator 代码如下所示:

private def doPutIterator[T](

blockId: BlockId,

iterator: () => Iterator[T],

level: StorageLevel,

classTag: ClassTag[T],

tellMaster: Boolean = true,

keepReadLock: Boolean = false): Option[PartiallyUnrolledIterator[T]] = {

//辅助类,用于获取数据块信息,并对写数据结果进行处理

doPut(blockId, level, classTag, tellMaster = tellMaster, keepReadLock = keepReadLock) {

info =>

val startTimeMs = System.currentTimeMillis

var iteratorFromFailedMemoryStorePut: Option[PartiallyUnrolledIterator[T]] = None

var size = 0L

//把数据写到内存中

if (level.useMemory) {

//如果设置反序列化,则说明获取的数据为数值类型,调用putIteratorAsValues方法

//把数据存入内存

if (level.deserialized) {

memoryStore.putIteratorAsValues(blockId, iterator(), classTag) match {

//写入数据成功,返回数据块的大小

case Right(s) =>

size = s

//数据写入内存失败,如果存储级别设置写入磁盘,则写到磁盘中,否则返回结果

case Left(iter) =>

// Not enough space to unroll this block; drop to disk if applicable

if (level.useDisk) {

logWarning(s"Persisting block $blockId to disk instead.")

diskStore.put(blockId) {

channel =>

val out = Channels.newOutputStream(channel)

serializerManager.dataSerializeStream(blockId, out, iter)(classTag)

}

size = diskStore.getSize(blockId)

} else {

iteratorFromFailedMemoryStorePut = Some(iter)

}

}

} else {

// !level.deserialized

//如果没有设置反序列化,则获取的数据类型为字节类型,调用putIteratorAsBytes方法

//把数据存到内存中

memoryStore.putIteratorAsBytes(blockId, iterator(), classTag, level.memoryMode) match {

//写入数据成功,返回数据块的大小

case Right(s) =>

size = s

//数据写入内存失败,如果存储级别设置写入磁盘,则写到磁盘中,否则返回结果

case Left(partiallySerializedValues) =>

if (level.useDisk) {

logWarning(s"Persisting block $blockId to disk instead.")

diskStore.put(blockId) {

channel =>

val out = Channels.newOutputStream(channel)

partiallySerializedValues.finishWritingToStream(out)

}

size = diskStore.getSize(blockId)

} else {

iteratorFromFailedMemoryStorePut = Some(partiallySerializedValues.valuesIterator)

}

}

}

}

//调用diskStore的put方法把数据写到磁盘中

else if (level.useDisk) {

diskStore.put(blockId) {

channel =>

val out = Channels.newOutputStream(channel)

serializerManager.dataSerializeStream(blockId, out, iterator())(classTag)

}

size = diskStore.getSize(blockId)

}

val putBlockStatus = getCurrentBlockStatus(blockId, info)

val blockWasSuccessfullyStored = putBlockStatus.storageLevel.isValid

if (blockWasSuccessfullyStored) {

// Now that the block is in either the memory or disk store, tell the master about it.

//如果成功写入,则把该数据块的元数据发送给Driver端

info.size = size

if (tellMaster && info.tellMaster) {

reportBlockStatus(blockId, putBlockStatus)

}

addUpdatedBlockStatusToTaskMetrics(blockId, putBlockStatus)

logDebug("Put block %s locally took %s".format(blockId, Utils.getUsedTimeMs(startTimeMs)))

//如果需要创建副本,则根据数据块编号获取数据复制到其他节点上

if (level.replication > 1) {

val remoteStartTime = System.currentTimeMillis

val bytesToReplicate = doGetLocalBytes(blockId, info)

// [SPARK-16550] Erase the typed classTag when using default serialization, since

// NettyBlockRpcServer crashes when deserializing repl-defined classes.

// TODO(ekl) remove this once the classloader issue on the remote end is fixed.

val remoteClassTag = if (!serializerManager.canUseKryo(classTag)) {

scala.reflect.classTag[Any]

} else {

classTag

}

try {

replicate(blockId, bytesToReplicate, level, remoteClassTag)

} finally {

bytesToReplicate.dispose()

}

logDebug("Put block %s remotely took %s"

.format(blockId, Utils.getUsedTimeMs(remoteStartTime)))

}

}

assert(blockWasSuccessfullyStored == iteratorFromFailedMemoryStorePut.isEmpty)

iteratorFromFailedMemoryStorePut

}

}

在Spark中写入数据分别内存和磁盘两种方式,其对应写入过程如下:

写入内存

在内存处理类 MemoryStore 中, 存在两种写入方法, 分别为 putlteratorAsValues 和putlteratorAsBytes。这两个方法区别在于写入内存的数据类型不同, putfteratorAsValues 针对的是值类型的数据写入, 而 putiteratorAsBytes 针对的是字节码数据的写入。 这两个方法写入内存过程基本类似, 下面以 putlteratorAsValues 讲解写入过程。

- (1)在数据块展升前,为该展开线程获取初始化内存,该内存大小为 unrollMemoryThreshold,获取完毕后返回是否成功的结果keepUnrolling 。

- (2)如果lterator[T]存在元素且keepUnrolling为真, 则继续向前遍历lterator[T], 内存展开元素的数量elementsUnrolled自增1。 如果遍历Iterator[T]到头或者keepUnrolling为假, 则跳到 步骤(4)。

- (3)当每memoryCheckPeriod即16次展升动作后,进行yi 次检查展开的内存大小是否超过当前分配的内存。 如果没有超过则继续展开, 如果不足则根据增长因子计算需要增加的内存大小, 然后根据该大小申请, 申请增加的内存大小: 当前展开大小*内存增长因子-当前分配的内 存大小。如果申请成功,则把内存大小加入到已使用内存中,而该展开线桯获取的内存大小为: 当前展开大小*内存增长因子。

- (4)判断数据块是否在内存中成功展开,如果展开失败,则记录内存不足并退出: 如果展开成功,则继续下一步骤。

- (5)先估算该数据块在内存中存储的大小, 然后比较数据块展开的内存和数据块在内存中存储的大小, 如果数据块展开的内存<=数据块存储的大小, 说明屈开内存的大小不足以存储数 据块, 需要申请它们之间的差值, 如果申请成功, 则调用transferUnrollToStorage方法处理:数据块展开的内存>数据块存储的大小,说明展开内存的大小足以存储数据块,那么先释放多余的内存, 然后调用transferUnrollToStorage方法处理。

- (6)在transferUnrollTotorage方法中释放该数据块在内存展开的空间,然后判断内存是否足够用于写入数据。如果有足够的内存,则把数据块放到内存的entries中,否则返回内存不足, 写入内存失败的消息。

private[storage] def putIteratorAsValues[T](

blockId: BlockId,

values: Iterator[T],

classTag: ClassTag[T]): Either[PartiallyUnrolledIterator[T], Long] = {

val valuesHolder = new DeserializedValuesHolder[T](classTag)

putIterator(blockId, values, classTag, MemoryMode.ON_HEAP, valuesHolder) match {

case Right(storedSize) => Right(storedSize)

case Left(unrollMemoryUsedByThisBlock) =>

val unrolledIterator = if (valuesHolder.vector != null) {

valuesHolder.vector.iterator

} else {

valuesHolder.arrayValues.toIterator

}

Left(new PartiallyUnrolledIterator(

this,

MemoryMode.ON_HEAP,

unrollMemoryUsedByThisBlock,

unrolled = unrolledIterator,

rest = values))

}

}

private def putIterator[T](

blockId: BlockId,

values: Iterator[T],

classTag: ClassTag[T],

memoryMode: MemoryMode,

valuesHolder: ValuesHolder[T]): Either[Long, Long] = {

require(!contains(blockId), s"Block $blockId is already present in the MemoryStore")

// Number of elements unrolled so far

var elementsUnrolled = 0

// Whether there is still enough memory for us to continue unrolling this block

var keepUnrolling = true

// Initial per-task memory to request for unrolling blocks (bytes).

val initialMemoryThreshold = unrollMemoryThreshold

// How often to check whether we need to request more memory

val memoryCheckPeriod = conf.get(UNROLL_MEMORY_CHECK_PERIOD)

// Memory currently reserved by this task for this particular unrolling operation

var memoryThreshold = initialMemoryThreshold

// Memory to request as a multiple of current vector size

val memoryGrowthFactor = conf.get(UNROLL_MEMORY_GROWTH_FACTOR)

// Keep track of unroll memory used by this particular block / putIterator() operation

var unrollMemoryUsedByThisBlock = 0L

// Request enough memory to begin unrolling

keepUnrolling =

reserveUnrollMemoryForThisTask(blockId, initialMemoryThreshold, memoryMode)

if (!keepUnrolling) {

logWarning(s"Failed to reserve initial memory threshold of " +

s"${Utils.bytesToString(initialMemoryThreshold)} for computing block $blockId in memory.")

} else {

unrollMemoryUsedByThisBlock += initialMemoryThreshold

}

// Unroll this block safely, checking whether we have exceeded our threshold periodically

while (values.hasNext && keepUnrolling) {

valuesHolder.storeValue(values.next())

if (elementsUnrolled % memoryCheckPeriod == 0) {

val currentSize = valuesHolder.estimatedSize()

// If our vector's size has exceeded the threshold, request more memory

if (currentSize >= memoryThreshold) {

val amountToRequest = (currentSize * memoryGrowthFactor - memoryThreshold).toLong

keepUnrolling =

reserveUnrollMemoryForThisTask(blockId, amountToRequest, memoryMode)

if (keepUnrolling) {

unrollMemoryUsedByThisBlock += amountToRequest

}

// New threshold is currentSize * memoryGrowthFactor

memoryThreshold += amountToRequest

}

}

elementsUnrolled += 1

}

// Make sure that we have enough memory to store the block. By this point, it is possible that

// the block's actual memory usage has exceeded the unroll memory by a small amount, so we

// perform one final call to attempt to allocate additional memory if necessary.

if (keepUnrolling) {

val entryBuilder = valuesHolder.getBuilder()

val size = entryBuilder.preciseSize

if (size > unrollMemoryUsedByThisBlock) {

val amountToRequest = size - unrollMemoryUsedByThisBlock

keepUnrolling = reserveUnrollMemoryForThisTask(blockId, amountToRequest, memoryMode)

if (keepUnrolling) {

unrollMemoryUsedByThisBlock += amountToRequest

}

}

if (keepUnrolling) {

val entry = entryBuilder.build()

// Synchronize so that transfer is atomic

memoryManager.synchronized {

releaseUnrollMemoryForThisTask(memoryMode, unrollMemoryUsedByThisBlock)

val success = memoryManager.acquireStorageMemory(blockId, entry.size, memoryMode)

assert(success, "transferring unroll memory to storage memory failed")

}

entries.synchronized {

entries.put(blockId, entry)

}

logInfo("Block %s stored as values in memory (estimated size %s, free %s)".format(blockId,

Utils.bytesToString(entry.size), Utils.bytesToString(maxMemory - blocksMemoryUsed)))

Right(entry.size)

} else {

// We ran out of space while unrolling the values for this block

logUnrollFailureMessage(blockId, entryBuilder.preciseSize)

Left(unrollMemoryUsedByThisBlock)

}

} else {

// We ran out of space while unrolling the values for this block

logUnrollFailureMessage(blockId, valuesHolder.estimatedSize())

Left(unrollMemoryUsedByThisBlock)

}

}

写入磁盘

Spark写入磁盘的方法调用了DiskStore的put方法, 该方法提供了写入文件的回调方法 writefunc。 在该方法中先获取写入文件句柄, 然后把数据序列化为数据流, 最后根据回调方法 把数据写入文件中。 其处理代码如下:

def put(blockId: BlockId)(writeFunc: WritableByteChannel => Unit): Unit = {

if (contains(blockId)) {

throw new IllegalStateException(s"Block $blockId is already present in the disk store")

}

logDebug(s"Attempting to put block $blockId")

val startTime = System.currentTimeMillis

//获取需要写入文件句柄,参见外部存储系统的读过程

val file = diskManager.getFile(blockId)

val out = new CountingWritableChannel(openForWrite(file))

var threwException: Boolean = true

try {

//使用回调方法,写入前需要把值类型数据序列化成数据流

writeFunc(out)

blockSizes.put(blockId, out.getCount)

threwException = false

} finally {

try {

out.close()

} catch {

case ioe: IOException =>

if (!threwException) {

threwException = true

throw ioe

}

} finally {

if (threwException) {

remove(blockId)

}

}

}

val finishTime = System.currentTimeMillis

logDebug("Block %s stored as %s file on disk in %d ms".format(

file.getName,

Utils.bytesToString(file.length()),

finishTime - startTime))

}