A summary of some PyTorch functions and tricks

- 1.torch.clamp(input, min, max, out=None) → Tensor

- 2.torch.gather(input, dim, index, out=None, sparse_grad=False) → Tensor

- 3.torch.gt(input, other, out=None) → Tensor

- 4.torch.masked_select(input, mask, out=None) → Tensor

- 5.torch.tensor type conversion

- 6.Optimizer parameters

- 7.Loading partial pretrained weights

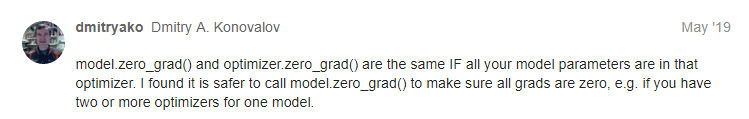

- 8.Model.zero_grad() or optimizer.zero_grad()

- 9.nn.functional.conv_transpose1d与nn.ConvTranspose1d

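- 10.Two ways to display model parameters

- 11.Using different optimizer settings for different kinds of parameters

- 12.How to save and reload the best metric from a previous training run

- 13.Loading models from torchvision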

- 14. torch.bmm

- References

1.torch.clamp(input, min, max, out=None) → Tensor

Clamp all elements in input into the range [ min, max ] and return a resulting tensor:

Example:

a = torch.randn(4)

a

#tensor([-1.7718, 1.6657, -0.6174, 0.7067])

torch.clamp(a, min=-0.5, max=0.5)

#tensor([-0.5000, 0.5000, -0.5000, 0.5000])
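The example above uses random input, so here is a small deterministic sketch of the same call. It also shows that only one of the two bounds may be given; the variable names are my own.

```python
import torch

a = torch.tensor([-1.7718, 1.6657, -0.6174, 0.7067])

# Limit every element to the range [-0.5, 0.5]
both = torch.clamp(a, min=-0.5, max=0.5)

# Only a lower bound: with min=0 this behaves like ReLU
lower_only = torch.clamp(a, min=0.0)

# Method form with only an upper bound
upper_only = a.clamp(max=0.5)

print(both)
print(lower_only)
print(upper_only)
```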

2.torch.gather(input, dim, index, out=None, sparse_grad=False) → Tensor

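Once you understand the index mapping, this one is not that hard.

Take this example from the official documentation:

for a 3-D tensor, it shows how the new tensor is built for dim=0, 1 and 2.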

out[i][j][k] = input[index[i][j][k]][j][k] # if dim == 0

out[i][j][k] = input[i][index[i][j][k]][k] # if dim == 1

out[i][j][k] = input[i][j][index[i][j][k]] # if dim == 2

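A concrete example:

>>> t = torch.tensor([[1,2],[3,4]])

>>> index = torch.tensor([[0,0],[1,0]])

>>> torch.gather(t, 1, index)

tensor([[ 1, 1],

        [ 4, 3]])

For a 2-D tensor gathered along dim=1,

the resulting tensor is:

[[t[0][index[0][0]], t[0][index[0][1]]],

 [t[1][index[1][0]], t[1][index[1][1]]]]

This does not mean that the input tensor and index must have the same shape; see the next example.

>>> t = torch.tensor([[1,2],[3,4]])

>>> index = torch.tensor([[0],[1]])

>>> torch.gather(t, 1, index)

Output: tensor([[1],[4]])

So for a 2-D input gathered along dim=1, index needs the same number of dimensions as the input, and its size along dimension 0 must not exceed the input's (here the two are equal). Also note that the output has the same size as index.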
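A common use of this mapping is picking one score per row, for example the score of each sample's label. The sketch below uses made-up scores and labels; it is only an illustration of the dim=1 rule above.

```python
import torch

# 3 samples, 3 classes (made-up values)
scores = torch.tensor([[0.1, 0.7, 0.2],
                       [0.8, 0.1, 0.1],
                       [0.3, 0.3, 0.4]])
labels = torch.tensor([1, 0, 2])  # shape (3,)

# index must have the same number of dimensions as the input,
# so labels is reshaped from (3,) to (3, 1)
picked = torch.gather(scores, 1, labels.unsqueeze(1))  # shape (3, 1)
picked = picked.squeeze(1)                             # shape (3,)

# The same selection written with advanced indexing
same = scores[torch.arange(scores.size(0)), labels]

print(picked)
```

The output has the size of the index, (3, 1), which is why the final squeeze is needed.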

3.torch.gt(input, other, out=None) → Tensor

expand(*sizes) → Tensor

gt: greater than

>>> torch.gt(torch.tensor([[1, 2], [3, 4]]), torch.tensor([[1, 1], [4, 4]]))

#tensor([[False, True], [False, False]])

>>> x = torch.tensor([[1], [2], [3]])

>>> x.expand(3, 4)

tensor([[ 1, 1, 1, 1],

[ 2, 2, 2, 2],

[ 3, 3, 3, 3]])
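The two functions fit together naturally: expand can bring two tensors to a common shape before an element-wise comparison. A minimal sketch with my own example values follows; broadcasting gives the same result without the explicit expand.

```python
import torch

x = torch.tensor([[1], [2], [3]])   # shape (3, 1)
y = torch.tensor([[0, 1, 2, 3]])    # shape (1, 4)

# Expand both operands to (3, 4) explicitly, then compare element-wise.
# expand returns a view, so no data is copied.
explicit = torch.gt(x.expand(3, 4), y.expand(3, 4))

# torch.gt also broadcasts its arguments, so this is equivalent
implicit = torch.gt(x, y)

print(explicit)
```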

4.torch.masked_select(input, mask, out=None) → Tensor

- input (Tensor) – the input tensor.

- mask (BoolTensor) – the tensor containing the binary mask to index with

- out (Tensor, optional) – the output tensor.

Returns a new ***1-D tensor*** which indexes the input tensor according to the boolean mask mask which is a BoolTensor.

>>> x = torch.randn(3, 4)

>>> x

tensor([[ 0.3552, -2.3825, -0.8297, 0.3477],

[-1.2035, 1.2252, 0.5002, 0.6248],

[ 0.1307, -2.0608, 0.1244, 2.0139]])

>>> mask = x.ge(0.5)

>>> mask

tensor([[False, False, False, False],

[False, True, True, True],

[False, False, False, True]])

>>> torch.masked_select(x, mask)

tensor([ 1.2252, 0.5002, 0.6248, 2.0139])
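For reference, a deterministic version of the example above, reusing the same numbers. It also checks that boolean indexing with x[mask] gives the same 1-D result.

```python
import torch

x = torch.tensor([[0.3552, -2.3825, -0.8297, 0.3477],
                  [-1.2035, 1.2252, 0.5002, 0.6248],
                  [0.1307, -2.0608, 0.1244, 2.0139]])

mask = x.ge(0.5)                         # BoolTensor with the shape of x
selected = torch.masked_select(x, mask)  # always 1-D
indexed = x[mask]                        # equivalent boolean indexing

print(selected)
```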

5.torch.tensor type conversion

tensor_name.type(dtype=torch.int8)
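A few equivalent spellings, as a small sketch with example values of my own. Converting float to an integer type truncates toward zero, and the original tensor is left unchanged.

```python
import torch

t = torch.tensor([1.7, -2.3, 3.0])  # float32 by default

a = t.type(torch.int8)    # the form shown above
b = t.to(torch.int8)      # same conversion via .to()
c = t.int()               # shorthand for int32
d = t.to(torch.float64)   # widen to double precision

print(a, a.dtype)
print(c.dtype, d.dtype)
```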

6.Optimizer parameters

Take the optimizer below, RMSprop: how do you access its parameters in PyTorch?

RMSprop (

Parameter Group 0

alpha: 0.99

centered: False

eps: 1e-08

lr: 0.01

momentum: 0

weight_decay: 0

)

Taking the learning rate lr as an example:

optimizer.state_dict()['param_groups'][0]['lr']
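A runnable sketch of reading the learning rate, assuming a small stand-in model (nn.Linear) that is not part of the original. It also reads the value from optimizer.param_groups, which is the live list: state_dict() returns a packed copy, so to change the learning rate you edit param_groups.

```python
import torch.nn as nn
import torch.optim as optim

model = nn.Linear(4, 2)  # stand-in model for illustration
optimizer = optim.RMSprop(model.parameters(), lr=0.01)

# Reading: both give the same value
lr_from_state = optimizer.state_dict()['param_groups'][0]['lr']
lr_direct = optimizer.param_groups[0]['lr']

# Writing: modify the live param_groups, e.g. decay lr by 10x
for group in optimizer.param_groups:
    group['lr'] = group['lr'] * 0.1

print(lr_from_state, lr_direct, optimizer.param_groups[0]['lr'])
```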

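7.Loading partial pretrained weights

If you have modified a standard model, how do you load its pretrained weights?

One approach is to match keys in the weight file's dictionary (see reference 2, on loading partial pretrained weights for transfer learning in PyTorch):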

##################### weight initialization #######################
import os
import torch
import torch.nn as nn

# `models` is the modified network; `path` and `pth_pre` locate the pretrained weight file

def model_init(model):
    for m in model.modules():
        if isinstance(m, nn.Conv2d):
            nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
        elif isinstance(m, nn.BatchNorm2d):
            nn.init.constant_(m.weight, 1)
            nn.init.constant_(m.bias, 0)

model_init(models)

if os.path.isfile(path + pth_pre):  # load partial pretrained weights by key
    model_dict = models.state_dict()
    pretrained_dict = torch.load(path + pth_pre, map_location='cpu')
    # keep only the entries whose keys also exist in the new model
    pretrained_dict = {k: v for k, v in pretrained_dict.items() if k in model_dict}
    model_dict.update(pretrained_dict)
    models.load_state_dict(model_dict)
    print("weight initialization and pretrained weight loading!\n")
else:
    print("weight initialization and no pretrained weight!\n")

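The new model is initialized first; then the weights of the parameters whose keys match are loaded from the pretrained file and overwrite the initial values.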
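A self-contained sketch of the same idea, under some assumptions of mine: a toy Net class stands in for the real model, and the "pretrained" dictionary comes from a second instance rather than from torch.load. It adds a shape check on top of the key match, because a layer that keeps its name but changes size (here the head) would otherwise make load_state_dict fail.

```python
import torch
import torch.nn as nn

class Net(nn.Module):
    """Toy model (hypothetical): a shared backbone plus a classification head."""
    def __init__(self, num_classes):
        super().__init__()
        self.backbone = nn.Linear(4, 8)
        self.head = nn.Linear(8, num_classes)

    def forward(self, x):
        return self.head(torch.relu(self.backbone(x)))

# Stands in for torch.load(...) of a 10-class pretrained checkpoint
pretrained_dict = Net(num_classes=10).state_dict()

model = Net(num_classes=3)          # modified model with a new head
model_dict = model.state_dict()

# Keep entries whose key exists in the new model AND whose shape matches
filtered = {k: v for k, v in pretrained_dict.items()
            if k in model_dict and v.shape == model_dict[k].shape}
model_dict.update(filtered)
model.load_state_dict(model_dict)

skipped = sorted(set(pretrained_dict) - set(filtered))
print("loaded:", sorted(filtered))
print("skipped:", skipped)
```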

8.Model.zero_grad() or optimizer.zero_grad()

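See footnote 1.

When optimizer = optim.Optimizer(model.parameters()), that is, when the optimizer was built from all of the model's parameters,

model.zero_grad()

and

optimizer.zero_grad()

are equivalent.

See also: PyTorch Automatic Differentiation Package - torch.autograd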
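A small check of this equivalence, using a stand-in nn.Linear model and SGD (both my choices). Depending on the PyTorch version, cleared gradients are either None or zero tensors, so the hypothetical helper grads_cleared accepts both.

```python
import torch
import torch.nn as nn
import torch.optim as optim

def grads_cleared(model):
    # Cleared gradients are None or all-zero, depending on the PyTorch version
    return all(p.grad is None or torch.count_nonzero(p.grad).item() == 0
               for p in model.parameters())

model = nn.Linear(3, 1)
optimizer = optim.SGD(model.parameters(), lr=0.1)  # built from ALL parameters
x = torch.ones(2, 3)

model(x).sum().backward()
had_grads = not grads_cleared(model)
optimizer.zero_grad()
cleared_by_optimizer = grads_cleared(model)

model(x).sum().backward()
model.zero_grad()
cleared_by_model = grads_cleared(model)

print(had_grads, cleared_by_optimizer, cleared_by_model)
```

The two calls stop being interchangeable when the optimizer holds only a subset of the model's parameters: optimizer.zero_grad() then clears only that subset.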

9.nn.functional.conv_transpose1d与nn.ConvTranspose1d

import torch

import torch.nn as nn

inputs = torch.tensor([[[1.,2.,3.]]])

weights = torch.tensor([[[1.,2.,3.]]])

print(nn.functional.conv_transpose1d(inputs, weights))

m = nn.ConvTranspose1d(1, 1, 3, stride=1, padding=0, output_padding=0,)

m.weight.data=weights

print(m(inputs))

The output is:

tensor([[[ 1., 4., 10., 12., 9.]]])

tensor([[[ 1.2662, 4.2662, 10.2662, 12.2662, 9.2662]]],

grad_fn=<SqueezeBackward1>)

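The two results differ by a constant offset (0.2662 here). The offset is the bias term: nn.ConvTranspose1d creates a randomly initialized bias by default (bias=True), while the functional call above is given no bias.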
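A sketch that checks this: with bias=False the module reproduces the functional result, and with the default bias the difference equals the module's bias. The variable names are mine.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

inputs = torch.tensor([[[1., 2., 3.]]])
weights = torch.tensor([[[1., 2., 3.]]])

functional_out = F.conv_transpose1d(inputs, weights)

# Module without bias: should match the functional call
m = nn.ConvTranspose1d(1, 1, 3, stride=1, padding=0, output_padding=0, bias=False)
with torch.no_grad():
    m.weight.copy_(weights)
module_out = m(inputs)

# Module with the default (random) bias: shifted by exactly that bias
m_bias = nn.ConvTranspose1d(1, 1, 3)
with torch.no_grad():
    m_bias.weight.copy_(weights)
offset = m_bias(inputs) - functional_out

print(functional_out)
print(module_out)
print(offset, m_bias.bias)
```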

10.Two ways to display model parameters

A module's parameters can be displayed with m.state_dict() or m.parameters().

The difference is that m.state_dict() returns a collections.OrderedDict, while m.parameters() returns a generator.

import torch

x=torch.rand((1,2,10,10))

m=torch.nn.Conv2d(2, 3, 1,1,0)

y=m(x)

print(m.state_dict())

# OrderedDict([('weight', tensor([[[[-0.0010]],

# [[-0.3202]]],

# [[[ 0.0547]],

# [[-0.3170]]],

# [[[ 0.4429]],

# [[-0.3821]]]])),

# ('bias', tensor([-0.5540, 0.5680, -0.1624]))])

for p in m.parameters():

print(p)

# Parameter containing:

# tensor([[[[-0.0010]],

# [[-0.3202]]],

# [[[ 0.0547]],

# [[-0.3170]]],

# [[[ 0.4429]],

# [[-0.3821]]]], requires_grad=True)

# Parameter containing:

# tensor([-0.5540, 0.5680, -0.1624], requires_grad=True)
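Two further differences are worth a quick sketch: named_parameters() gives the names along with the tensors, and state_dict() also contains buffers, which parameters() does not. The BatchNorm2d layer is my own addition for illustration.

```python
import torch

m = torch.nn.Conv2d(2, 3, 1, 1, 0)

# Names and shapes of the learnable parameters
shapes = {name: tuple(p.shape) for name, p in m.named_parameters()}
n_params = sum(p.numel() for p in m.parameters())
print(shapes, n_params)

# state_dict() tensors are detached; parameters() yields nn.Parameter objects
detached = all(not v.requires_grad for v in m.state_dict().values())

# For a layer with buffers, state_dict() has more entries than parameters()
bn = torch.nn.BatchNorm2d(3)
n_state = len(bn.state_dict())        # weight, bias, running stats, counter
n_learnable = len(list(bn.parameters()))
print(n_state, n_learnable)
```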

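11.Using different optimizer settings for different kinds of parameters

The snippet below is adapted from https://github.com/WongKinYiu/PyTorch_YOLOv4/blob/master/train.py. It applies different optimizer settings to biases, convolution weights, and all other parameters.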

import torch.optim as optim

# `model` and the hyperparameter dict `hyp` are defined elsewhere in the training script
pg0, pg1, pg2 = [], [], []  # optimizer parameter groups
for k, v in dict(model.named_parameters()).items():
    if '.bias' in k:
        pg2.append(v)  # biases
    elif 'Conv2d.weight' in k:
        pg1.append(v)  # apply weight_decay
    else:
        pg0.append(v)  # all else

# use adam:
optimizer = optim.Adam(pg0, lr=hyp['lr0'], betas=(hyp['momentum'], 0.999))  # adjust beta1 to momentum
# add pg1 with weight_decay
optimizer.add_param_group({'params': pg1, 'weight_decay': hyp['weight_decay']})
# add pg2 (biases)
optimizer.add_param_group({'params': pg2})
# print('Optimizer groups: %g .bias, %g conv.weight, %g other' %
#       (len(pg2), len(pg1), len(pg0)))
del pg0, pg1, pg2
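The name test 'Conv2d.weight' depends on how that repository names its layers, so it will not match in an arbitrary model. Below is a sketch of the same grouping for a generic model, under my own assumptions: a small stand-in network, made-up hyperparameter values, grouping by module type instead of by name, and the groups passed as a list of dicts instead of add_param_group.

```python
import torch.nn as nn
import torch.optim as optim

# Stand-in model for illustration
model = nn.Sequential(
    nn.Conv2d(3, 8, 3), nn.BatchNorm2d(8), nn.ReLU(), nn.Conv2d(8, 4, 1)
)

conv_weights, biases, others = [], [], []
for module in model.modules():
    # recurse=False: only the parameters owned directly by this module
    for name, p in module.named_parameters(recurse=False):
        if name == 'bias':
            biases.append(p)
        elif isinstance(module, nn.Conv2d) and name == 'weight':
            conv_weights.append(p)   # these get weight decay
        else:
            others.append(p)         # e.g. BatchNorm weights

optimizer = optim.Adam(
    [
        {'params': others},
        {'params': conv_weights, 'weight_decay': 5e-4},  # made-up value
        {'params': biases},
    ],
    lr=1e-3,             # made-up value
    betas=(0.9, 0.999),
)

print(len(biases), len(conv_weights), len(others))
```

Options not set in a group fall back to the defaults passed to the optimizer, so only the convolution weights are decayed here.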

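12.How to save and reload the best metric from a previous training run

When training in PyTorch, you often need the best metric from the previous run so that you can compare it with the metric from the new run and decide whether to save the current weights.

A clumsy way is to load the previously saved weight file and run eval once to recompute the metric.

Here is another way:

store the metric from the previous run in the weight file itself, and read it back directly when training resumes.

Taking precision as the metric, the process has two steps.

12.1 During normal training, when a new best metric appears, save the metric together with the weights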

if val_pre > val_precision:
    val_precision = val_pre
    ckpt = {'best_precision': val_precision,
            'model': model.state_dict()}
    torch.save(ckpt, weight_file)
    print("weight is updated")

12.2 On the next run, read the metric and the weights from the weight file

val_precision = 0
if os.path.isfile(weight_file):
    ckpt = torch.load(weight_file, map_location=device)  # load checkpoint
    model_state = model.state_dict()
    # keep only entries whose key exists in the model and whose element count matches
    state_dict = {k: v for k, v in ckpt['model'].items()
                  if k in model_state and model_state[k].numel() == v.numel()}
    model.load_state_dict(state_dict, strict=False)
    if ckpt.get('best_precision') is not None:
        val_precision = ckpt['best_precision']
    print(f"current best precision: {val_precision:.5f}")
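The two steps can be tried end to end with the sketch below. The model, the temporary file path, and the list of validation precisions are all made up for illustration; no real training happens.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)                                     # stand-in model
weight_file = os.path.join(tempfile.mkdtemp(), "best.pt")  # hypothetical path

# Step 1: save the weights together with the metric whenever it improves
best = 0.0
for val_pre in [0.61, 0.58, 0.74]:   # made-up validation precisions
    if val_pre > best:
        best = val_pre
        torch.save({'best_precision': best, 'model': model.state_dict()},
                   weight_file)

# Step 2: a later run restores both the weights and the metric
restored = nn.Linear(4, 2)
val_precision = 0.0
if os.path.isfile(weight_file):
    ckpt = torch.load(weight_file, map_location='cpu')
    restored.load_state_dict(ckpt['model'])
    val_precision = ckpt.get('best_precision', 0.0)

print(f"current best precision: {val_precision:.5f}")
```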

13.Loading models from torchvision

13.1 How to reference each layer

Taking vgg13 as an example:

import torchvision.models as models

vgg13=models.vgg13(pretrained=True)

Printing the model gives:

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(15): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(16): ReLU(inplace=True)

(17): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(20): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(21): ReLU(inplace=True)

(22): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(23): ReLU(inplace=True)

(24): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)

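How do you reference a layer and then modify the model?

Taking a change in the number of classes of a classification model as an example (see reference 5 for details):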

vgg13.classifier._modules['6']

#(6): Linear(in_features=4096, out_features=1000, bias=True)

You can also use:

list(vgg13.children())[-1][-1]

#Linear(in_features=4096, out_features=1000, bias=True)

To change the number of classes to 10:

vgg13.classifier._modules['6']=nn.Linear(in_features=4096, out_features=10, bias=True)

Saving this here in case I cannot find it later.
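To try the head replacement without downloading VGG weights, here is a sketch on a tiny stand-in network. TinyNet and its layer sizes are my own; only the layout of `classifier` (seven layers, the last one a Linear) mirrors the printout above. nn.Sequential also supports integer indexing, which avoids touching the private `_modules` dict.

```python
import torch
import torch.nn as nn

class TinyNet(nn.Module):
    """Hypothetical stand-in whose classifier mirrors VGG's layout."""
    def __init__(self):
        super().__init__()
        self.classifier = nn.Sequential(
            nn.Linear(32, 16), nn.ReLU(inplace=True), nn.Dropout(0.5),
            nn.Linear(16, 16), nn.ReLU(inplace=True), nn.Dropout(0.5),
            nn.Linear(16, 1000),
        )

    def forward(self, x):
        return self.classifier(x)

net = TinyNet()

# Read in_features from the old head instead of hard-coding it
old_head = net.classifier[6]
net.classifier[6] = nn.Linear(old_head.in_features, 10)

net.eval()
out = net(torch.randn(2, 32))
print(out.shape)
```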

14. torch.bmm

torch.bmm(input, mat2, *, deterministic=False, out=None) → Tensor

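Multiplies the corresponding sub-matrices of each batch in input and mat2 (the number of batches stays the same): for input of shape (b, n, m) and mat2 of shape (b, m, p), the result has shape (b, n, p).

For example:

bmm on matrices with batch size 1: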

a=torch.tensor([[[1,2],[2,2]]]).type(torch.float)

#tensor([[[1., 2.],

# [2., 2.]]])

b=torch.tensor([[[2., 1., 1.],[1., 1., 3.]]])

#tensor([[[2., 1., 1.],

# [1., 1., 3.]]])

torch.bmm(a,b)

#tensor([[[4., 3., 7.],

# [6., 4., 8.]]])

bmm on matrices with batch size greater than 1:

c=torch.stack((a,a),dim=1).view(2,2,2)

#tensor([[[1., 2.],

# [2., 2.]],

#

# [[1., 2.],

# [2., 2.]]])

d=torch.stack((b,b),dim=0).view(-1,2,3)

#tensor([[[2., 1., 1.],

# [1., 1., 3.]],

#

# [[2., 1., 1.],

# [1., 1., 3.]]])

torch.bmm(c,d)

#tensor([[[4., 3., 7.],

# [6., 4., 8.]],

# [[4., 3., 7.],

# [6., 4., 8.]]])

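a and b are each duplicated along the batch dimension to produce c and d, so that batch=2.

bmm is then applied. The result confirms that the matrix multiplication is performed separately within each batch.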
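Since both batches above are identical, the per-batch behaviour is easier to see when they differ. In this sketch I scale the second batch of d by 10 (my own change) and compare bmm against an explicit loop of 2-D matrix products.

```python
import torch

a = torch.tensor([[[1., 2.], [2., 2.]]])            # shape (1, 2, 2)
b = torch.tensor([[[2., 1., 1.], [1., 1., 3.]]])    # shape (1, 2, 3)

c = a.repeat(2, 1, 1)    # shape (2, 2, 2)
d = b.repeat(2, 1, 1)    # shape (2, 2, 3)
d[1] = d[1] * 10         # make the second batch different

out = torch.bmm(c, d)    # shape (2, 2, 3)

# Reference: multiply each batch separately with the 2-D matrix product
per_batch = torch.stack([c[i] @ d[i] for i in range(c.size(0))])

print(out)
```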

References

1. https://pytorch.org/

2. https://www.it610.com/article/1281460426923589632.htm

3. https://github.com/WongKinYiu/PyTorch_YOLOv4/blob/master/train.py

4. https://blog.csdn.net/SHU15121856/article/details/89707105

5. https://blog.csdn.net/andyL_05/article/details/108930240