目录

1. 使用sklearn计算accuracy(accuracy_score)、混淆矩阵(confusion_matrix)

2. 使用sklearn计算精确率precision、召回率recall、F1 score

参考:

https://blog.csdn.net/kan2281123066/article/details/103237273 代码:利用sklearn 计算 precision、recall、F1 score

https://blog.csdn.net/blythe0107/article/details/75003890 代码:sklearn的precision_score, recall_score, f1_score使用

https://blog.csdn.net/Urbanears/article/details/105033731 二分类和多分类问题下的评价指标详析(Precision, Recall, F1,Micro,Macro)

https://blog.csdn.net/wf592523813/article/details/95202448 二分类和多分类问题的评价指标总结

https://zhuanlan.zhihu.com/p/147663370 知乎:多分类模型Accuracy, Precision, Recall和F1-score的超级无敌深入探讨

https://zhuanlan.zhihu.com/p/59862986 知乎:详解sklearn的多分类模型评价指标

https://www.zhihu.com/question/51470349 知乎:对多分类数据的模型比较选择,应该参考什么指标?

0. 评估指标

- 精确度:precision,正确预测为正的,占全部预测为正的比例,TP / (TP+FP)

- 召回率:recall,正确预测为正的,占全部实际为正的比例,TP / (TP+FN)

- F1-score:精确率和召回率的调和平均数,2 * precision*recall / (precision+recall)

1. 使用sklearn计算accuracy(accuracy_score)、混淆矩阵(confusion_matrix)

举的这个例子,标签label为5类,是个多分类问题

- accuracy_score

- confusion_matrix

from sklearn.metrics import confusion_matrix

from sklearn.metrics import accuracy_score

from sklearn.metrics import precision_score, recall_score, f1_score

actual = data3['label'] #真实的类别标签(data3是个dataframe,label是其中的一列)

predicted = data3['Label'] #预测的类别标签

# 计算总的精度

acc = accuracy_score(actual, predicted)

print(acc)

# 计算混淆矩阵

confusion_matrix(actual, predicted)

'''

输出结果:

0.8969907407407407

array([[1032, 1, 0, 0, 0],

[ 3, 965, 17, 0, 15],

[ 0, 10, 42, 0, 6],

[ 0, 11, 3, 0, 0],

[ 0, 98, 103, 0, 286]])

'''2. 使用sklearn计算精确率precision、召回率recall、F1 score

- precision:precision_score https://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html

- recall:recall_score https://scikit-learn.org/stable/modules/generated/sklearn.metrics.recall_score.html

- F1 score:f1_score https://scikit-learn.org/stable/modules/generated/sklearn.metrics.f1_score.html

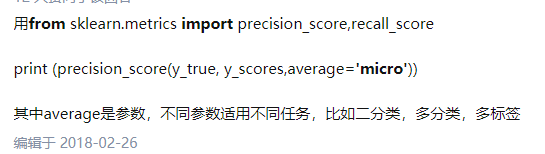

参数average有5个选项:{‘micro’微平均, ‘macro’宏平均, ‘samples’, ‘weighted’, ‘binary’},默认是default=’binary’二分类

p = precision_score(actual, predicted, average='micro')

p2 = precision_score(actual, predicted, average='macro')

p3 = precision_score(actual, predicted, average='weighted')

#p4 = precision_score(actual, predicted, average='samples')

r = recall_score(actual, predicted, average='micro')

r2 = recall_score(actual, predicted, average='macro')

r3 = recall_score(actual, predicted, average='weighted')

f1score = f1_score(actual, predicted, average='micro')

f1score2 = f1_score(actual, predicted, average='macro')

f1score3 = f1_score(actual, predicted, average='weighted')

#f1score4 = f1_score(actual, predicted, average='samples')

print(p,p2,p3)

print(r,r2,r3)

print(f1score,f1score2,f1score3)

'''

输出结果:

0.8969907407407407 0.614528783337003 0.9212413779093402

0.8969907407407407 0.6550877741326565 0.8969907407407407

0.8969907407407407 0.6041619746930149 0.8986671343868164

'''