Computing the Fundamental Matrix of an Image Pair


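This section examines the projective relationship between two images of the same scene. The two images can be obtained by moving a single camera and photographing from two viewpoints, or by using two cameras that each photograph the same scene. When the two cameras are separated by a rigid baseline, the setup is called stereo vision.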

Epipolar constraint

Derivation

In the coordinate frame of the first camera:

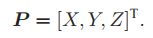

Let the spatial position of the point P be

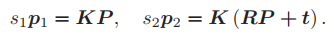

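By the pinhole camera model, the pixel positions of the two image points p1 and p2 are

Here K is the camera intrinsic matrix, and R, t describe the camera motion between the two coordinate frames. More precisely, they are R21 and t21, because they transform coordinates expressed in the first frame into the second frame.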

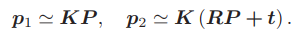
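As a minimal sketch of the projection step described above, the following C++ snippet moves a point from the first camera frame into the second with R and t, then projects it with K. The type aliases and the function names `mul`, `transform` and `project` are assumptions made for illustration; they are not part of the example program in this article.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Matrix-vector product M * v.
Vec3 mul(const Mat3& M, const Vec3& v) {
    Vec3 r{0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r[i] += M[i][j] * v[j];
    return r;
}

// Express a point given in frame 1 in frame 2: P2 = R * P1 + t
// (R and t play the role of R21 and t21).
Vec3 transform(const Mat3& R, const Vec3& t, const Vec3& P1) {
    Vec3 P2 = mul(R, P1);
    for (int i = 0; i < 3; ++i) P2[i] += t[i];
    return P2;
}

// Pinhole projection: K * P divided by its third component,
// returned as a homogeneous pixel (u, v, 1).
Vec3 project(const Mat3& K, const Vec3& P) {
    const Vec3 p = mul(K, P);
    return {p[0] / p[2], p[1] / p[2], 1.0};
}
```

With these helpers, p1 would be `project(K, P)` and p2 would be `project(K, transform(R, t, P))`, assuming the point lies in front of both cameras (positive depth).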

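In homogeneous coordinates, a vector is equal to itself multiplied by any nonzero constant. This kind of equality is called equality up to scale.

The projection relations above can therefore be written as

Now let

Here x1 and x2 are the coordinates of the two pixel points on the normalized image plane. Substituting them into the projection equations gives

so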

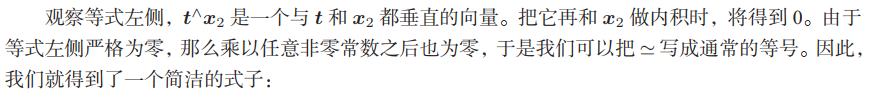

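Left-multiply both sides by t^, the skew-symmetric matrix of t. This is equivalent to taking the cross product of t with both sides of the equation.

Then left-multiply both sides by x2^T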
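The equivalence between multiplying by t^ and taking the cross product with t can be checked numerically. The sketch below is only an illustration; the names `skew`, `cross`, `mul` and `dot` are assumed helpers, not OpenCV functions.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Skew-symmetric matrix t^ built from t, so that t^ * x equals cross(t, x).
Mat3 skew(const Vec3& t) {
    return {{{0.0, -t[2], t[1]},
             {t[2], 0.0, -t[0]},
             {-t[1], t[0], 0.0}}};
}

// Cross product a x b.
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]};
}

// Matrix-vector product M * v.
Vec3 mul(const Mat3& M, const Vec3& v) {
    Vec3 r{0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r[i] += M[i][j] * v[j];
    return r;
}

// Dot product a . b.
double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}
```

For any t and x, `mul(skew(t), x)` and `cross(t, x)` give the same vector, and `dot(x, cross(t, x))` is zero because the cross product is perpendicular to x.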
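Written out under the standard pinhole model, the chain of steps described above can be summarised as follows. This is a reconstruction under stated assumptions: the depth factors s_1 and s_2, the symbol ≃ for equality up to scale, and the names E (essential matrix) and F (fundamental matrix) follow common convention and are not defined in the text itself.

```latex
\begin{align*}
P &= [X, Y, Z]^T \\
s_1 p_1 &= K P, & s_2 p_2 &= K (R P + t) \\
p_1 &\simeq K P, & p_2 &\simeq K (R P + t) \\
x_1 &= K^{-1} p_1, & x_2 &= K^{-1} p_2 \\
x_2 &\simeq R x_1 + t \\
t^{\wedge} x_2 &\simeq t^{\wedge} R x_1 \\
x_2^T t^{\wedge} x_2 &\simeq x_2^T t^{\wedge} R x_1 \\
x_2^T t^{\wedge} R x_1 &= 0 \\
p_2^T K^{-T} t^{\wedge} R K^{-1} p_1 &= 0
\end{align*}
```

The term in t disappears after multiplying by t^ because t^ t = 0, and the left-hand side x_2^T t^ x_2 is zero because t^ x_2 is perpendicular to x_2. With E = t^ R and F = K^{-T} E K^{-1}, the last two lines read x_2^T E x_1 = 0 and p_2^T F p_1 = 0.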

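- If a sufficient number of matched points between the two images are known, the fundamental matrix of the image pair can be computed by solving the resulting system of equations. At least 7 such matches are required.

Example program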
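To make the "system of equations" concrete: each match (p1, p2) in homogeneous pixel coordinates contributes one equation p2^T F p1 = 0, which is linear in the nine entries of F. The sketch below builds the coefficient row for one match and evaluates the residual for a candidate F. The names `constraintRow` and `residual` and the row-major storage of F are assumptions for illustration, not OpenCV API.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;  // homogeneous pixel (u, v, 1)
using Row9 = std::array<double, 9>;  // nine coefficients, or F stored row by row

// One match (p1, p2) gives one linear equation row . f = 0, where
// f = (F11, F12, F13, F21, ..., F33) stacks the entries of F row by row.
Row9 constraintRow(const Vec3& p1, const Vec3& p2) {
    Row9 row{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            row[3 * i + j] = p2[i] * p1[j];
    return row;
}

// Residual p2^T F p1 for a candidate F stored row-major in f.
// It is zero when the match satisfies the epipolar constraint exactly.
double residual(const Row9& f, const Vec3& p1, const Vec3& p2) {
    const Row9 row = constraintRow(p1, p2);
    double r = 0.0;
    for (int k = 0; k < 9; ++k) r += row[k] * f[k];
    return r;
}
```

F has nine entries but is only defined up to scale, and it must additionally have rank 2 (det F = 0), which leaves seven degrees of freedom. That is why seven matches are the minimum.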

#include <iostream>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/xfeatures2d.hpp>  // SIFT from the opencv_contrib xfeatures2d module
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/calib3d/calib3d.hpp>

using namespace std;

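int main(int argc, char** argv) {
    // Read both views as grayscale images
    cv::Mat image1 = cv::imread("church01.jpg", cv::IMREAD_GRAYSCALE);
    cv::Mat image2 = cv::imread("church03.jpg", cv::IMREAD_GRAYSCALE);
    if (image1.empty() || image2.empty()) {
        cerr << "Could not read the input images" << endl;
        return 1;
    }

    cv::imshow("Right Image", image1);
    cv::imshow("Left Image", image2);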

    // Detect SIFT keypoints and compute their descriptors (at most 74 features per image)
    vector<cv::KeyPoint> keypoints1;
    vector<cv::KeyPoint> keypoints2;
    cv::Mat descriptors1, descriptors2;
    cv::Ptr<cv::Feature2D> ptrFeature2D = cv::xfeatures2d::SIFT::create(74);
    ptrFeature2D->detectAndCompute(image1, cv::noArray(), keypoints1, descriptors1);
    ptrFeature2D->detectAndCompute(image2, cv::noArray(), keypoints2, descriptors2);

    cout << "Number of SIFT points(1): " << keypoints1.size() << endl;
    cout << "Number of SIFT points(2): " << keypoints2.size() << endl;

    // Draw the keypoints of each image
    cv::Mat imageKP;
    cv::drawKeypoints(image1, keypoints1, imageKP, cv::Scalar(255, 255, 255),
                      cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);
    cv::imshow("Right SIFT Features", imageKP);
    cv::drawKeypoints(image2, keypoints2, imageKP, cv::Scalar(255, 255, 255),
                      cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);
    cv::imshow("Left SIFT Features", imageKP);

    // Brute-force matching with the L2 norm; true enables cross-checking
    cv::BFMatcher matcher(cv::NORM_L2, true);
    vector<cv::DMatch> matches;
    matcher.match(descriptors1, descriptors2, matches);

    cout << "Number of matched points: " << matches.size() << endl;

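    // Manually select seven matches (the 7-point method needs exactly seven).
    // The indices are hard-coded, so check that they are all valid first.
    if (matches.size() < 35) {
        cerr << "Not enough matches for the hard-coded selection" << endl;
        return 1;
    }
    vector<cv::DMatch> selMatches;
    selMatches.push_back(matches[2]);
    selMatches.push_back(matches[5]);
    selMatches.push_back(matches[16]);
    selMatches.push_back(matches[19]);
    selMatches.push_back(matches[14]);
    selMatches.push_back(matches[34]);
    selMatches.push_back(matches[29]);

    // Draw the selected matches
    cv::Mat imageMatches;
    cv::drawMatches(image1, keypoints1,         // first image and its keypoints
                    image2, keypoints2,         // second image and its keypoints
                    selMatches,                 // the selected matches
                    imageMatches,               // the image produced
                    cv::Scalar(255, 255, 255),  // colour of the match lines
                    cv::Scalar(255, 255, 255),  // colour of the keypoints
                    vector<char>(),             // no mask
                    cv::DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);
    cv::imshow("Matches", imageMatches);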

    // Collect the keypoint indices of the selected matches.
    // With empty index vectors, KeyPoint::convert would return every keypoint.
    vector<int> pointIndexes1;
    vector<int> pointIndexes2;
    for (vector<cv::DMatch>::const_iterator it = selMatches.begin();
         it != selMatches.end(); ++it) {
        pointIndexes1.push_back(it->queryIdx);
        pointIndexes2.push_back(it->trainIdx);
    }

    // Convert the selected keypoints into Point2f
    vector<cv::Point2f> selPoints1, selPoints2;
    cv::KeyPoint::convert(keypoints1, selPoints1, pointIndexes1);
    cv::KeyPoint::convert(keypoints2, selPoints2, pointIndexes2);

    // check by drawing the points
    vector<cv::Point2f>::const_iterator it = selPoints1.begin();
    while (it != selPoints1.end()) {
        cv::circle(image1, *it, 3, cv::Scalar(255, 255, 255), 2);
        ++it;
    }
    it = selPoints2.begin();
    while (it != selPoints2.end()) {
        cv::circle(image2, *it, 3, cv::Scalar(255, 255, 255), 2);
        ++it;
    }

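    // Compute the F matrix from the seven matches
    cv::Mat fundamental = cv::findFundamentalMat(selPoints1,      // points in first image
                                                 selPoints2,      // points in second image
                                                 cv::FM_7POINT);  // 7-point method
    if (fundamental.empty()) {
        cerr << "Fundamental matrix estimation failed" << endl;
        return 1;
    }
    // The 7-point method may return up to three solutions stacked as a 9x3 matrix
    cout << "F-Matrix size= " << fundamental.rows << "," << fundamental.cols << endl;
    // Keep the first 3x3 solution
    cv::Mat fund(fundamental, cv::Rect(0, 0, 3, 3));

    // Epipolar lines in the second image for the points of the first image
    vector<cv::Vec3f> lines1;
    cv::computeCorrespondEpilines(selPoints1,  // image points
                                  1,           // points are in image 1 (can also be 2)
                                  fund,        // F matrix
                                  lines1);     // vector of epipolar lines
    cout << "size of F matrix: " << fund.rows << "x" << fund.cols << endl;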

    // for all epipolar lines
    for (std::vector<cv::Vec3f>::const_iterator it = lines1.begin();
         it != lines1.end(); ++it) {
        // draw the epipolar line between first and last column
        cv::line(image2, cv::Point(0, -(*it)[2] / (*it)[1]),
                 cv::Point(image2.cols, -((*it)[2] + (*it)[0] * image2.cols) / (*it)[1]),
                 cv::Scalar(255, 255, 255));
    }

    // draw the epipolar lines of the left image points in the right image
    vector<cv::Vec3f> lines2;
    cv::computeCorrespondEpilines(cv::Mat(selPoints2), 2, fund, lines2);
    for (auto it = lines2.begin(); it != lines2.end(); ++it) {
        // draw the epipolar line between first and last column
        cv::line(image1, cv::Point(0, -(*it)[2] / (*it)[1]),
                 cv::Point(image1.cols, -((*it)[2] + (*it)[0] * image1.cols) / (*it)[1]),
                 cv::Scalar(255, 255, 255));
    }

    // combine both images
    cv::Mat both(image1.rows, image1.cols + image2.cols, CV_8U);
    image1.copyTo(both.colRange(0, image1.cols));
    image2.copyTo(both.colRange(image1.cols, image1.cols + image2.cols));
    cv::imshow("Epilines", both);

    cv::waitKey();
    return 0;
}
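The line-drawing loops in the program rely on each epipolar line being a triple (a, b, c) with a*x + b*y + c = 0, so its intersections with the first and last image columns are y = -c/b and y = -(c + a*width)/b. The helper below is a hedged sketch of that computation with a guard for nearly vertical lines, where b is close to zero and the division would break down. The name `lineEndpoints`, the type aliases and the threshold 1e-12 are illustrative assumptions.

```cpp
#include <array>
#include <cmath>
#include <utility>

using Line = std::array<double, 3>;       // (a, b, c) with a*x + b*y + c = 0
using Point = std::pair<double, double>;  // (x, y)

// Computes where the line crosses the columns x = 0 and x = width.
// Returns false for a (nearly) vertical line, which never crosses both columns.
bool lineEndpoints(const Line& l, double width, Point& left, Point& right) {
    if (std::abs(l[1]) < 1e-12) return false;  // b ~ 0: vertical line
    left = Point(0.0, -l[2] / l[1]);
    right = Point(width, -(l[2] + l[0] * width) / l[1]);
    return true;
}
```

For example, the line x - 2y + 4 = 0 crosses x = 0 at y = 2 and x = 10 at y = 7; a caller could skip drawing whenever the function returns false.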