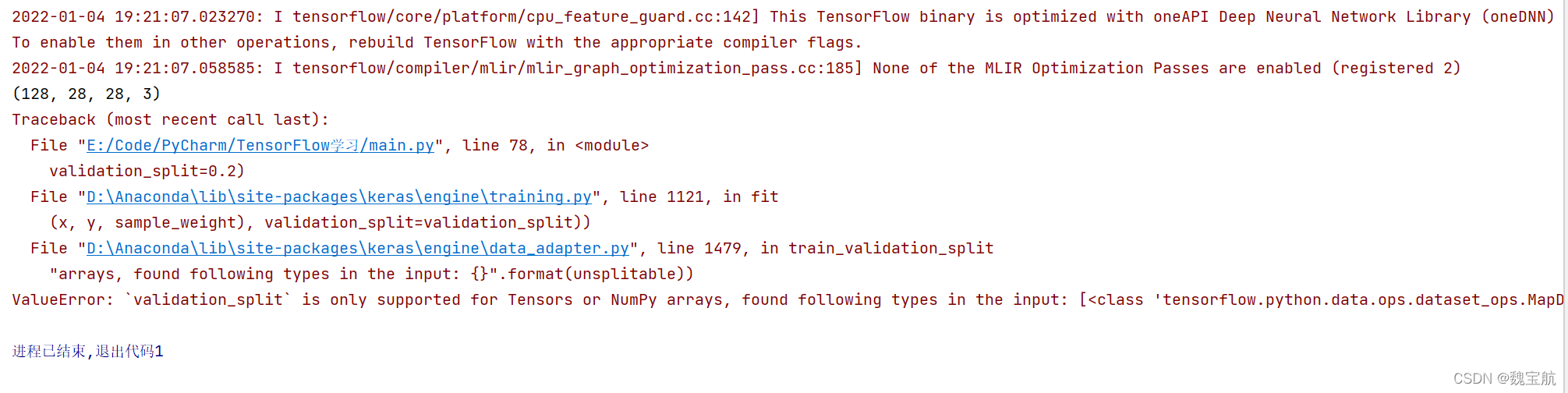

2022-01-04 19:21:07.023270: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-01-04 19:21:07.058585: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

Traceback (most recent call last):

File "E:/Code/PyCharm/TensorFlow学习/main.py", line 78, in <module>

validation_split=0.2)

File "D:\Anaconda\lib\site-packages\keras\engine\training.py", line 1121, in fit

(x, y, sample_weight), validation_split=validation_split))

File "D:\Anaconda\lib\site-packages\keras\engine\data_adapter.py", line 1479, in train_validation_split

"arrays, found following types in the input: {}".format(unsplitable))

ValueError: `validation_split` is only supported for Tensors or NumPy arrays, found following types in the input: [<class 'tensorflow.python.data.ops.dataset_ops.MapDataset'>]

问题原因:

如果使用validation_split分割验证集,那么输入的训练集必须是Tensor或者Numpy数据类型,不可以是Dataset迭代器类型

解决办法:

方案一:添加验证集

添加验证集,而不是使用validation_split进行分割

val_batches = tf.data.experimental.cardinality(train_dataset)

val_dataset = train_dataset.skip(val_batches // 5)

train_dataset = train_dataset.take(val_batches // 5)

使用训练集一部分作为验证集使用

方案二:将Dataset类型变成Numpy或者Tensor

这种方式只适合数据量不是特别大

# 前提要将所有数据作为一个批次加入的Dataset中

train_data, train_label = next(iter(train_datasets))