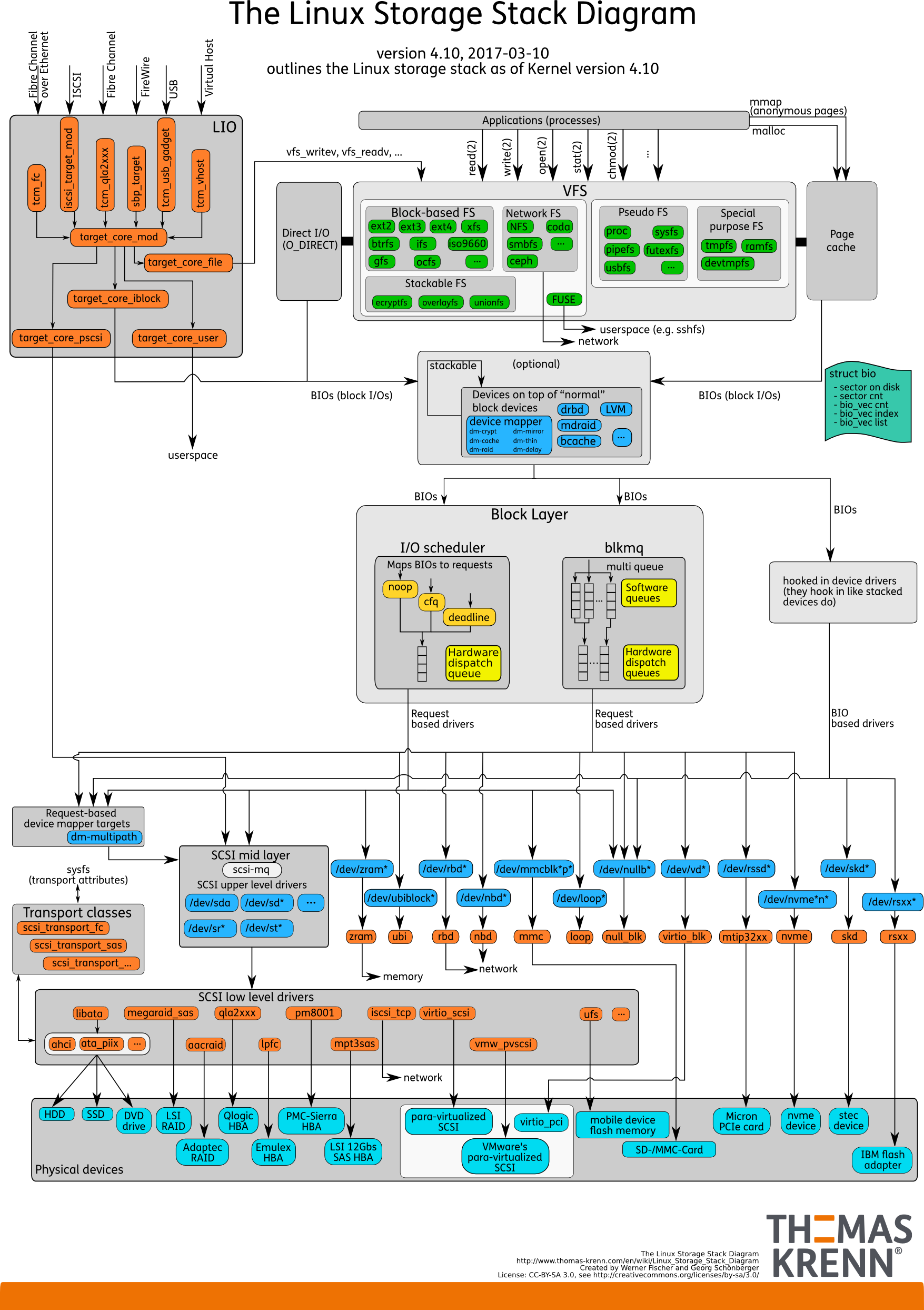

直接先上重点,linux中IO栈的完全图如下:

系统中能够随机访问固定大小数据片的硬件设备称作块设备。固定大小的数据片称为块。常见的块设备就是硬盘了。不能随机访问的就是字符设备了,管理块设备比字符设备要复杂很多。

块设备中最小的可寻址单元是扇区,一般是2的整数倍,最常见的是512字节。不过很多CD-ROM盘的扇区都是2KB大小。

内核执行磁盘操作都是按照块的,但是物理磁盘寻址是按照扇区级进行的。块不能比扇区小,只能数倍于扇区大小,而且不能超过一个页的长度。

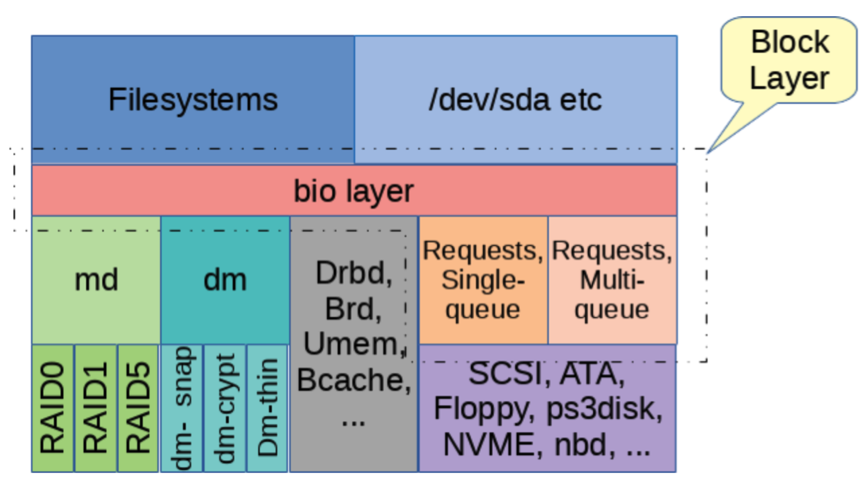

块层是内核中实现的接口,用于在应用和文件系统中来访问不同的存储设备,其代码位于block文件夹中,其拓扑逻辑如下

1.1.1 缓存 I/O (Buffered I/O)

缓存I/O又被称作标准I/O,大多数文件系统的默认 I/O都是缓存 I/O。

操作系统会将 I/O 的数据缓存在文件系统的页缓存( page cache )中,数据会先被拷贝到操作系统内核的缓冲区中,然后才会从操作系统内核的缓冲区拷贝到应用程序的地址空间。

在缓存 I/O 机制中,数据在传输过程中需要在应用程序地址空间和页缓存之间进行多次数据拷贝操作, CPU 以及内存开销是比较大。

1.1.2 直接I/O(Direct I/O)

某些应用程序,会有它自己的数据缓存机制,比如oracle数据库。它会将数据缓存在应用程序地址空间,不需要使用操作系统内核的高速缓冲存储器,这类应用程序就被称作是自缓存应用程序( self-caching applications )。

自缓存应用程序来说,使用直接 I/O(DIO) 技术。省略掉缓存 I/O 技术中操作系统内核缓冲区的使用,数据直接在应用程序地址空间和磁盘之间进行传输,从而省略掉复杂的系统级别的缓存结构,而执行应用自己定义的数据读写管理。

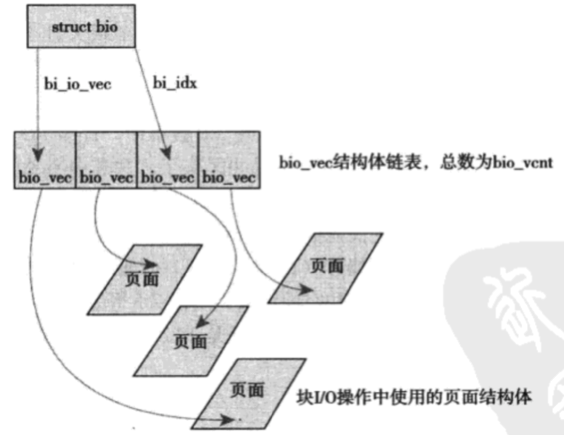

1.1.3 bio结构体

在2.4内核以前使用缓冲头的方式,该方式下会将每个I/O请求分解成512字节的块,所以不能创建高性能IO子系统。2.5中一个重要的工作就是支持高性能I/O,于是有了现在的BIO结构体。

bio结构体是request结构体的实际数据,一个request结构体中包含一个或者多个bio结构体,在底层实际是按bio来对设备进行操作的,传递给驱动。

代码会把它合并到一个已经存在的request结构体中,或者需要的话会再创建一个新的request结构体;bio结构体包含了驱动程序执行请求的全部信息。

一个bio包含多个page,这些page对应磁盘上一段连续的空间。由于文件在磁盘上并不连续存放,文件I/O提交到块设备之前,极有可能被拆成多个bio结构;

该结构体定义在include/linux/blk_types.h文件中,不幸的是该结构和以往发生了一些较大变化,特别是与ldd一书中不匹配了。

/*

* main unit of I/O for the block layer and lower layers (ie drivers and

* stacking drivers)

*/

struct bio {

struct bio *bi_next; /* request queue link */

struct gendisk *bi_disk;

unsigned int bi_opf; /* bottom bits req flags,

* top bits REQ_OP. Use

* accessors.

*/

unsigned short bi_flags; /* status, etc and bvec pool number */

unsigned short bi_ioprio;

unsigned short bi_write_hint;

blk_status_t bi_status;

u8 bi_partno;

/* Number of segments in this BIO after

* physical address coalescing is performed.

*/

unsigned int bi_phys_segments;

/*

* To keep track of the max segment size, we account for the

* sizes of the first and last mergeable segments in this bio.

*/

unsigned int bi_seg_front_size;

unsigned int bi_seg_back_size;

struct bvec_iter bi_iter;

atomic_t __bi_remaining;

bio_end_io_t *bi_end_io;

void *bi_private;

#ifdef CONFIG_BLK_CGROUP

/*

* Optional ioc and css associated with this bio. Put on bio

* release. Read comment on top of bio_associate_current().

*/

struct io_context *bi_ioc;

struct cgroup_subsys_state *bi_css;

#ifdef CONFIG_BLK_DEV_THROTTLING_LOW

void *bi_cg_private;

struct blk_issue_stat bi_issue_stat;

#endif

#endif

union {

#if defined(CONFIG_BLK_DEV_INTEGRITY)

struct bio_integrity_payload *bi_integrity; /* data integrity */

#endif

};

unsigned short bi_vcnt; /* how many bio_vec's */

/*

* Everything starting with bi_max_vecs will be preserved by bio_reset()

*/

unsigned short bi_max_vecs; /* max bvl_vecs we can hold */

atomic_t __bi_cnt; /* pin count */

struct bio_vec *bi_io_vec; /* the actual vec list */

struct bio_set *bi_pool;

/*

* We can inline a number of vecs at the end of the bio, to avoid

* double allocations for a small number of bio_vecs. This member

* MUST obviously be kept at the very end of the bio.

*/

struct bio_vec bi_inline_vecs[0];

};

其中bio_vec结构体位于文件include/linux/bvec.h中:

struct bio_vec {

struct page *bv_page; //指向整个缓冲区所驻留的物理页面

unsigned int bv_len; //以字节为单位的大小

unsigned int bv_offset;//以字节为单位的偏移量

};

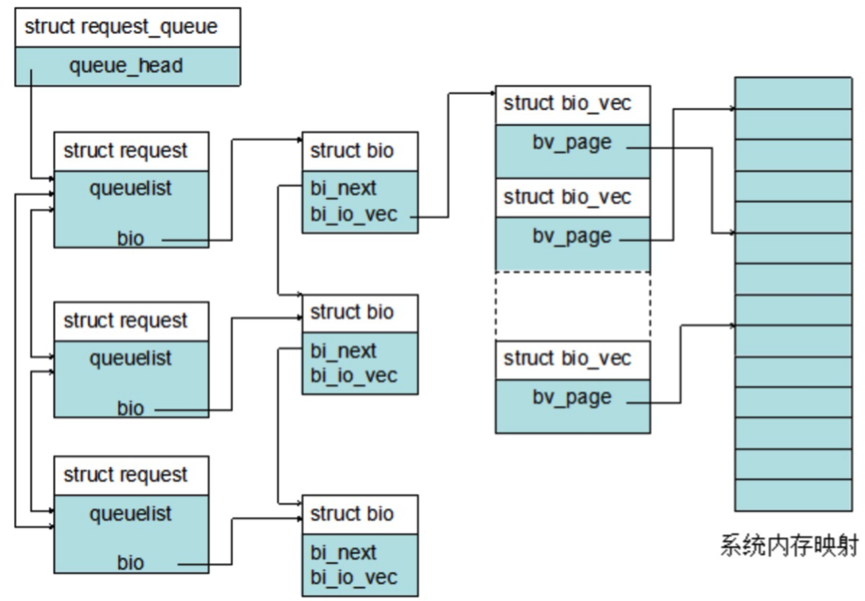

1.1.4 request结构体

request结构体就是请求操作块设备的请求结构体,该结构体被放到request_queue队列中,等到合适的时候再处理。

该结构体定义在include/linux/blkdev.h文件中:

struct request {

struct request_queue *q; //所在队列

struct blk_mq_ctx *mq_ctx;

int cpu;

unsigned int cmd_flags; /* op and common flags */

req_flags_t rq_flags;

int internal_tag;

/* the following two fields are internal, NEVER access directly */

unsigned int __data_len; /* total data len */

int tag;

sector_t __sector; /* sector cursor */

struct bio *bio;

struct bio *biotail;

struct list_head queuelist; //请求结构体队列链表

/*

* The hash is used inside the scheduler, and killed once the

* request reaches the dispatch list. The ipi_list is only used

* to queue the request for softirq completion, which is long

* after the request has been unhashed (and even removed from

* the dispatch list).

*/

union {

struct hlist_node hash; /* merge hash */

struct list_head ipi_list;

};

/*

* The rb_node is only used inside the io scheduler, requests

* are pruned when moved to the dispatch queue. So let the

* completion_data share space with the rb_node.

*/

union {

struct rb_node rb_node; /* sort/lookup */

struct bio_vec special_vec;

void *completion_data;

int error_count; /* for legacy drivers, don't use */

};

/*

* Three pointers are available for the IO schedulers, if they need

* more they have to dynamically allocate it. Flush requests are

* never put on the IO scheduler. So let the flush fields share

* space with the elevator data.

*/

union {

struct {

struct io_cq *icq;

void *priv[2];

} elv;

struct {

unsigned int seq;

struct list_head list;

rq_end_io_fn *saved_end_io;

} flush;

};

struct gendisk *rq_disk;

struct hd_struct *part;

unsigned long start_time;

struct blk_issue_stat issue_stat;

/* Number of scatter-gather DMA addr+len pairs after

* physical address coalescing is performed.

*/

unsigned short nr_phys_segments;

#if defined(CONFIG_BLK_DEV_INTEGRITY)

unsigned short nr_integrity_segments;

#endif

unsigned short write_hint;

unsigned short ioprio;

unsigned int timeout;

void *special; /* opaque pointer available for LLD use */

unsigned int extra_len; /* length of alignment and padding */

/*

* On blk-mq, the lower bits of ->gstate (generation number and

* state) carry the MQ_RQ_* state value and the upper bits the

* generation number which is monotonically incremented and used to

* distinguish the reuse instances.

*

* ->gstate_seq allows updates to ->gstate and other fields

* (currently ->deadline) during request start to be read

* atomically from the timeout path, so that it can operate on a

* coherent set of information.

*/

seqcount_t gstate_seq;

u64 gstate;

/*

* ->aborted_gstate is used by the timeout to claim a specific

* recycle instance of this request. See blk_mq_timeout_work().

*/

struct u64_stats_sync aborted_gstate_sync;

u64 aborted_gstate;

/* access through blk_rq_set_deadline, blk_rq_deadline */

unsigned long __deadline;

struct list_head timeout_list;

union {

struct __call_single_data csd;

u64 fifo_time;

};

/*

* completion callback.

*/

rq_end_io_fn *end_io;

void *end_io_data;

/* for bidi */

struct request *next_rq;

#ifdef CONFIG_BLK_CGROUP

struct request_list *rl; /* rl this rq is alloced from */

unsigned long long start_time_ns;

unsigned long long io_start_time_ns; /* when passed to hardware */

#endif

};

表示块设备驱动层I/O请求,经由I/O调度层转换后的I/O请求,将会发到块设备驱动层进行处理;

1.1.5 request_queue结构体

每一块设备都会有一个队列,当需要对设备操作时,把请求放在队列中。因为对块设备的操作 I/O访问不能及时调用完成,I/O操作比较慢,所以把所有的请求放在队列中,等到合适的时候再处理这些请求;

该结构体定义在include/linux/blkdev.h文件中:

struct request_queue {

/*

* Together with queue_head for cacheline sharing

*/

struct list_head queue_head;

struct request *last_merge;

struct elevator_queue *elevator;

int nr_rqs[2]; /* # allocated [a]sync rqs */

int nr_rqs_elvpriv; /* # allocated rqs w/ elvpriv */

atomic_t shared_hctx_restart;

struct blk_queue_stats *stats;

struct rq_wb *rq_wb;

/*

* If blkcg is not used, @q->root_rl serves all requests. If blkcg

* is used, root blkg allocates from @q->root_rl and all other

* blkgs from their own blkg->rl. Which one to use should be

* determined using bio_request_list().

*/

struct request_list root_rl;

request_fn_proc *request_fn;

make_request_fn *make_request_fn;

poll_q_fn *poll_fn;

prep_rq_fn *prep_rq_fn;

unprep_rq_fn *unprep_rq_fn;

softirq_done_fn *softirq_done_fn;

rq_timed_out_fn *rq_timed_out_fn;

dma_drain_needed_fn *dma_drain_needed;

lld_busy_fn *lld_busy_fn;

/* Called just after a request is allocated */

init_rq_fn *init_rq_fn;

/* Called just before a request is freed */

exit_rq_fn *exit_rq_fn;

/* Called from inside blk_get_request() */

void (*initialize_rq_fn)(struct request *rq);

const struct blk_mq_ops *mq_ops;

unsigned int *mq_map;

/* sw queues */

struct blk_mq_ctx __percpu *queue_ctx;

unsigned int nr_queues;

unsigned int queue_depth;

/* hw dispatch queues */

struct blk_mq_hw_ctx **queue_hw_ctx;

unsigned int nr_hw_queues;

/*

* Dispatch queue sorting

*/

sector_t end_sector;

struct request *boundary_rq;

/*

* Delayed queue handling

*/

struct delayed_work delay_work;

struct backing_dev_info *backing_dev_info;

/*

* The queue owner gets to use this for whatever they like.

* ll_rw_blk doesn't touch it.

*/

void *queuedata;

/*

* various queue flags, see QUEUE_* below

*/

unsigned long queue_flags;

/*

* ida allocated id for this queue. Used to index queues from

* ioctx.

*/

int id;

/*

* queue needs bounce pages for pages above this limit

*/

gfp_t bounce_gfp;

/*

* protects queue structures from reentrancy. ->__queue_lock should

* _never_ be used directly, it is queue private. always use

* ->queue_lock.

*/

spinlock_t __queue_lock;

spinlock_t *queue_lock;

/*

* queue kobject

*/

struct kobject kobj;

/*

* mq queue kobject

*/

struct kobject mq_kobj;

#ifdef CONFIG_BLK_DEV_INTEGRITY

struct blk_integrity integrity;

#endif /* CONFIG_BLK_DEV_INTEGRITY */

#ifdef CONFIG_PM

struct device *dev;

int rpm_status;

unsigned int nr_pending;

#endif

/*

* queue settings

*/

unsigned long nr_requests; /* Max # of requests */

unsigned int nr_congestion_on;

unsigned int nr_congestion_off;

unsigned int nr_batching;

unsigned int dma_drain_size;

void *dma_drain_buffer;

unsigned int dma_pad_mask;

unsigned int dma_alignment;

struct blk_queue_tag *queue_tags;

struct list_head tag_busy_list;

unsigned int nr_sorted;

unsigned int in_flight[2];

/*

* Number of active block driver functions for which blk_drain_queue()

* must wait. Must be incremented around functions that unlock the

* queue_lock internally, e.g. scsi_request_fn().

*/

unsigned int request_fn_active;

unsigned int rq_timeout;

int poll_nsec;

struct blk_stat_callback *poll_cb;

struct blk_rq_stat poll_stat[BLK_MQ_POLL_STATS_BKTS];

struct timer_list timeout;

struct work_struct timeout_work;

struct list_head timeout_list;

struct list_head icq_list;

#ifdef CONFIG_BLK_CGROUP

DECLARE_BITMAP (blkcg_pols, BLKCG_MAX_POLS);

struct blkcg_gq *root_blkg;

struct list_head blkg_list;

#endif

struct queue_limits limits;

/*

* Zoned block device information for request dispatch control.

* nr_zones is the total number of zones of the device. This is always

* 0 for regular block devices. seq_zones_bitmap is a bitmap of nr_zones

* bits which indicates if a zone is conventional (bit clear) or

* sequential (bit set). seq_zones_wlock is a bitmap of nr_zones

* bits which indicates if a zone is write locked, that is, if a write

* request targeting the zone was dispatched. All three fields are

* initialized by the low level device driver (e.g. scsi/sd.c).

* Stacking drivers (device mappers) may or may not initialize

* these fields.

*/

unsigned int nr_zones;

unsigned long *seq_zones_bitmap;

unsigned long *seq_zones_wlock;

/*

* sg stuff

*/

unsigned int sg_timeout;

unsigned int sg_reserved_size;

int node;

#ifdef CONFIG_BLK_DEV_IO_TRACE

struct blk_trace *blk_trace;

struct mutex blk_trace_mutex;

#endif

/*

* for flush operations

*/

struct blk_flush_queue *fq;

struct list_head requeue_list;

spinlock_t requeue_lock;

struct delayed_work requeue_work;

struct mutex sysfs_lock;

int bypass_depth;

atomic_t mq_freeze_depth;

#if defined(CONFIG_BLK_DEV_BSG)

bsg_job_fn *bsg_job_fn;

struct bsg_class_device bsg_dev;

#endif

#ifdef CONFIG_BLK_DEV_THROTTLING

/* Throttle data */

struct throtl_data *td;

#endif

struct rcu_head rcu_head;

wait_queue_head_t mq_freeze_wq;

struct percpu_ref q_usage_counter;

struct list_head all_q_node;

struct blk_mq_tag_set *tag_set;

struct list_head tag_set_list;

struct bio_set *bio_split;

#ifdef CONFIG_BLK_DEBUG_FS

struct dentry *debugfs_dir;

struct dentry *sched_debugfs_dir;

#endif

bool mq_sysfs_init_done;

size_t cmd_size;

void *rq_alloc_data;

struct work_struct release_work;

#define BLK_MAX_WRITE_HINTS 5

u64 write_hints[BLK_MAX_WRITE_HINTS];

};

该结构体还是异常庞大的,都快接近sk_buff结构体了。

维护块设备驱动层I/O请求的队列,所有的request都插入到该队列,每个磁盘设备都只有一个queue(多个分区也只有一个);

一个request_queue中包含多个request,每个request可能包含多个bio,请求的合并就是根据各种原则将多个bio加入到同一个request中。

1.1.6 I/O调度层

每个块设备或者其分区,都有对应的请求队列(request_queue),每个请求队列都可以选择一个I/O调度器来协调所递交的request。

传统I/O调度器的基本目的是将请求按照它们对应在块设备上的扇区号进行排列,以减少磁头的移动,提高效率,但是现在很多设备其实并不使用机械硬盘,所以不存在减少磁头移动一说。

此外,每个调度器自身都维护有不同数量的队列,类似每个队列可以有多个请求一样。

1.1.7 I/O写流程

写流程简单描述如下:

1)用户调用系统调用write写一个文件,会调到sys_write函数

2) 经过VFS虚拟文件系统层,调用vfs_write, 如果是缓存写方式,则写入page cache,然后就返回,后续就是刷脏页的流程;如果是Direct I/O的方式,就会走到do_blockdev_direct_IO的流程;

3)如果操作的设备是逻辑设备如LVM,MDRAID设备等,会进入到对应内核模块的处理函数里进行一些处理,否则就直接构造bio请求,调用submit_bio往具体的块设备下发请求,submit_bio函数通过generic_make_request转发bio,generic_make_request中带有循环,其通过每个块设备下注册的q->make_request_fn函数与块设备进行交互;

4)请求下发到底层块设备,调用块设备请求处理函数__make_request进行处理,在这个函数中就会调用blk_queue_bio,这个函数就是合并bio到request中,也就是I/O调度器的具体实现:如果几个bio要读写的区域是连续的,就合并到一个request;否则就创建一个新的request,把自己挂到这个request下。合并bio请求的请求超过阈值(在/sys/block/xxx/queue/max_sectors_kb),超过后就不能再合并成一个request,而会新分配一个request;

5)接下来由相应的块设备驱动程序进行处理,以scsi设备为例,queue队列的处理函数q->request_fn对应的scsi驱动的就是scsi_request_fn(drivers/scsi/scsi_lib.c)函数,其中q是实际的队列,将请求构造成scsi指令下发到scsi设备进行处理,处理完成后就会依次调用各层的回调函数进行完成状态的一些处理,最后返回给上层用户。

1.1.8 小结

块设备的I/O操作方式引入了request_queue、request、bio等一系列数据结构。在整个块设备的I/O操作中。 “请求” 贯穿于始终的就是,块设备的I/O操作会排队和整合。

I/O调度算法对请求的排队和整合,驱动的任务是处理请求,块设备驱动的核心就是请求处理函数。

1.1.9 参考

A block layer introduction part 1: the bio layer

https://www.thomas-krenn.com/en/wiki/Linux_Storage_Stack_Diagram