import tensorflow as tf

from dataset import *

import time

# Mark the start of the timing interval

time.clock()

# Load the data

x_train, y_train, x_validating, y_validating, x_test, y_test = data_set()

# Define the hyperparameters

layer_dimensions = [784, 30, 50, 25, 33, 25, 15, 20, 10]

regularization_rate = 0.001

w_cache, b_cache = initialization(layer_dimensions)

# Input placeholders

x = tf.placeholder(tf.float32, shape=(None,784), name='x_input')

y_ = tf.placeholder(tf.float32, shape=(None,10), name='y_input')

# Forward propagation

y, regularizer_cache = propagation_forward(x, w_cache, b_cache, regularization_rate)

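# Loss: mean softmax cross-entropy plus the L2 regularization terms

cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))  # logits are unnormalized scores

cross_entropy_mean = tf.reduce_mean(cross_entropy)  # average the per-example losses over the batch

loss = cross_entropy_mean + tf.accumulate_n(regularizer_cache)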

# Optimizer

global_step = tf.Variable(0, trainable=False)  # step counter, not a model parameter

learning_rate = tf.train.exponential_decay(0.01, global_step, 100, 0.99, staircase=True)

train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss, global_step=global_step)

# Accuracy

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# Create the session

with tf.Session() as sess:
    # Initialize the variables
    init_op = tf.global_variables_initializer()
    sess.run(init_op)

    STEPS = 50000
    batch_size = 256

    # Accuracies are recorded every 100 steps for the performance plot
    x_axis = [i for i in range(STEPS+1) if (i % 100 == 0)]
    train_line = []
    validating_line = []
    test_line = []

    for i in range(STEPS+1):
        xs, ys = batch(x_train, y_train, batch_size, i)
        sess.run(train_step, feed_dict={x: xs, y_: ys})

        # Compute the accuracies
        if i % 100 == 0:
            train_acc = sess.run(accuracy, feed_dict={x: x_train, y_: y_train})
            validating_acc = sess.run(accuracy, feed_dict={x: x_validating, y_: y_validating})
            test_acc = sess.run(accuracy, feed_dict={x: x_test, y_: y_test})
            train_line.append(train_acc)
            validating_line.append(validating_acc)
            test_line.append(test_acc)

            if i % 1000 == 0:
                print("after %d train steps, train accuracy on model is:%s" % (i, train_acc))
                #print("after %d train steps, validating accuracy on model is:%s" % (i, validating_acc))
                print("after %d train steps, test accuracy on model is:%s" % (i, test_acc))

# Print the elapsed time
print("total_time is:%s" % time.clock())

#性能曲线图

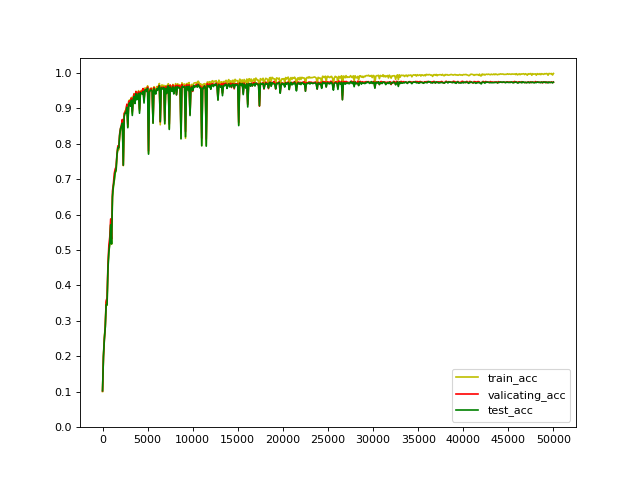

draw(x_axis, train_line, validating_line, test_line)
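The learning-rate schedule in the script is easier to judge with a few numbers. The sketch below reproduces, in plain Python, the documented staircase form of `tf.train.exponential_decay`, that is `initial_rate * decay_rate ** (step // decay_steps)`. The function name `staircase_decay` is made up for this illustration and is not part of TensorFlow.

```python
def staircase_decay(step, initial_rate=0.01, decay_steps=100, decay_rate=0.99):
    """Hypothetical helper mirroring the staircase form of exponential decay."""
    # Integer division makes the rate drop once every `decay_steps` steps
    # instead of shrinking continuously.
    return initial_rate * decay_rate ** (step // decay_steps)


if __name__ == "__main__":
    # Print the rate at a few representative training steps.
    for step in (0, 99, 100, 1000, 50000):
        print("step %5d -> learning rate %.6g" % (step, staircase_decay(step)))
```

With the settings used here the rate falls by 1% every 100 steps, so by the last of the 50000 steps it is well under one percent of its starting value (0.99 ** 500 is roughly 0.0066).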

Helper functions:

from tensorflow.examples.tutorials.mnist import input_data

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

def data_set():
    mnist = input_data.read_data_sets("D:/DL_dataset/TensorFlow/", one_hot=True)

    x_train = mnist.train.images            # (55000, 784): each image has 28*28 = 784 pixels, same below
    y_train = mnist.train.labels            # (55000, 10)
    x_validating = mnist.validation.images  # (5000, 784)
    y_validating = mnist.validation.labels  # (5000, 10)
    x_test = mnist.test.images              # (10000, 784)
    y_test = mnist.test.labels              # (10000, 10)

    return x_train, y_train, x_validating, y_validating, x_test, y_test

def initialization(layer_dimensions):
    w_cache = []
    b_cache = []

    for i in range(1, len(layer_dimensions)):
        w = tf.Variable(tf.random_normal((layer_dimensions[i-1], layer_dimensions[i]), stddev=1, seed=1))
        b = tf.Variable(tf.constant(0., shape=[1, layer_dimensions[i]]))
        w_cache.append(w)
        b_cache.append(b)

    return w_cache, b_cache

# Forward propagation and computation of the regularization terms

def propagation_forward(x, w_cache, b_cache, regularization_rate):
    a = x
    num_layers = len(w_cache)
    regularizer_cache = []
    regularizer = tf.contrib.layers.l2_regularizer(regularization_rate)

    # Hidden layers use tanh activations
    if num_layers > 1:
        for i in range(num_layers-1):
            a = tf.nn.tanh(tf.matmul(a, w_cache[i]) + b_cache[i])
            regularizer_cache.append(regularizer(w_cache[i]))

    # The output layer returns raw logits (no activation)
    a = tf.matmul(a, w_cache[num_layers-1]) + b_cache[num_layers-1]
    regularizer_cache.append(regularizer(w_cache[num_layers-1]))

    return a, regularizer_cache

# Plot the performance curves

def draw(total_iteration, train, validating, test):
    plt.figure(figsize=(8, 6), dpi=80)
    plt.subplot(1, 1, 1)

    xmax = max(total_iteration)

    plt.plot(total_iteration, train, '-y', label='train_acc')
    plt.plot(total_iteration, validating, '-r', label='validating_acc')
    plt.plot(total_iteration, test, '-g', label='test_acc')
    plt.legend(loc='lower right')

    # Set the axis ticks
    plt.xticks(np.linspace(0, xmax, 11, endpoint=True))
    plt.yticks(np.linspace(0, 1.0, 11, endpoint=True))

    plt.show()

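# Select a mini-batch

def batch(x_train, y_train, batch_size, i):
    # Integer division gives the number of full batches
    batch_nums = x_train.shape[0] // batch_size

    # Wrap the step index around the number of batches (not the batch size)
    i = i % batch_nums

    # Samples beyond the last full batch are discarded
    start = batch_size * i
    end = start + batch_size  # the slice end is exclusive, so no "- 1"

    xs = x_train[start:end]
    ys = y_train[start:end]

    return xs, ys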
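To see how a training step index maps to a slice of the training set, here is a small NumPy sketch. It assumes the intent is to cycle through the full batches and discard the leftover samples, as the comment in `batch` suggests. The name `batch_bounds` and the toy sizes are hypothetical.

```python
import numpy as np


def batch_bounds(num_samples, batch_size, step):
    """Hypothetical helper: slice bounds of the mini-batch used at `step`."""
    num_batches = num_samples // batch_size  # full batches only
    k = step % num_batches                   # wrap around after each epoch
    start = k * batch_size
    return start, start + batch_size         # the end index is exclusive


if __name__ == "__main__":
    data = np.arange(10)                     # toy "training set" of 10 samples
    for step in range(5):
        start, end = batch_bounds(len(data), 3, step)
        print(step, data[start:end])
```

With 10 samples and a batch size of 3, sample 9 is never visited. With the 55000 MNIST training images and a batch size of 256 there are 214 full batches, and the last 216 images are left out under this scheme.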

Results: the training-set and test-set accuracies are 99.83% and 97.39%, respectively.
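For readers who want to check what these percentages measure, the sketch below recomputes the accuracy definition used in the script with NumPy: the fraction of rows where the arg-max of the logits equals the arg-max of the one-hot label. The function name `accuracy_from_logits` and the toy arrays are illustrative only and are not taken from the run above.

```python
import numpy as np


def accuracy_from_logits(logits, one_hot_labels):
    """Hypothetical helper: share of rows whose predicted class is correct."""
    predicted = np.argmax(logits, axis=1)         # class with the largest score
    expected = np.argmax(one_hot_labels, axis=1)  # position of the 1 in each label
    return float(np.mean(predicted == expected))


if __name__ == "__main__":
    # Four toy examples with three classes each.
    logits = np.array([[2.0, 0.1, -1.0],
                       [0.3, 0.2, 0.9],
                       [1.5, 1.6, 0.0],
                       [0.0, 3.0, 1.0]])
    labels = np.eye(3)[[0, 2, 0, 1]]              # one-hot rows for classes 0, 2, 0, 1
    print(accuracy_from_logits(logits, labels))
```

In this toy case three of the four rows are classified correctly (the third row predicts class 1 while the label is class 0).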