Jenkins Pipelines

Jenkins Pipeline

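There are several ways to run builds (build jobs) in Jenkins. Here we use the most common one, Pipeline. Simply put, a Pipeline is a workflow framework that runs on Jenkins. It connects tasks that used to run independently on one or more nodes, which makes it possible to orchestrate and visualize complex processes that a single job can hardly handle.

A Jenkins Pipeline has a few core concepts:

1. Node: a Node is a Jenkins node, either the Master or an Agent, and is the concrete environment in which Steps run. For example, the Jenkins Slave we ran dynamically earlier is a Node.

2. Stage: a Pipeline can be divided into several Stages, each representing a group of operations such as Build, Test, or Deploy. A Stage is a logical grouping and can span multiple Nodes.

3. Step: the most basic unit of work. A Step can be as simple as printing a line or as involved as building a Docker image, and Steps are provided by various Jenkins plugins. For example, the step sh 'make' is equivalent to running make in a shell terminal.

So how do we create a Jenkins Pipeline?

1. Pipeline scripts are written in Groovy, but there is no need to learn Groovy separately (though knowing it certainly helps).

2. Pipeline supports two syntaxes: Declarative and Scripted Pipeline.

3. There are also two ways to create a Pipeline: enter the script directly in the Jenkins web UI, or put a Jenkinsfile into the project's source repository.

4. The generally recommended approach is to have Jenkins load the Jenkinsfile directly from source control (SCM).

Let's quickly create a simple Pipeline by entering a script directly in the Jenkins web UI and running it.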

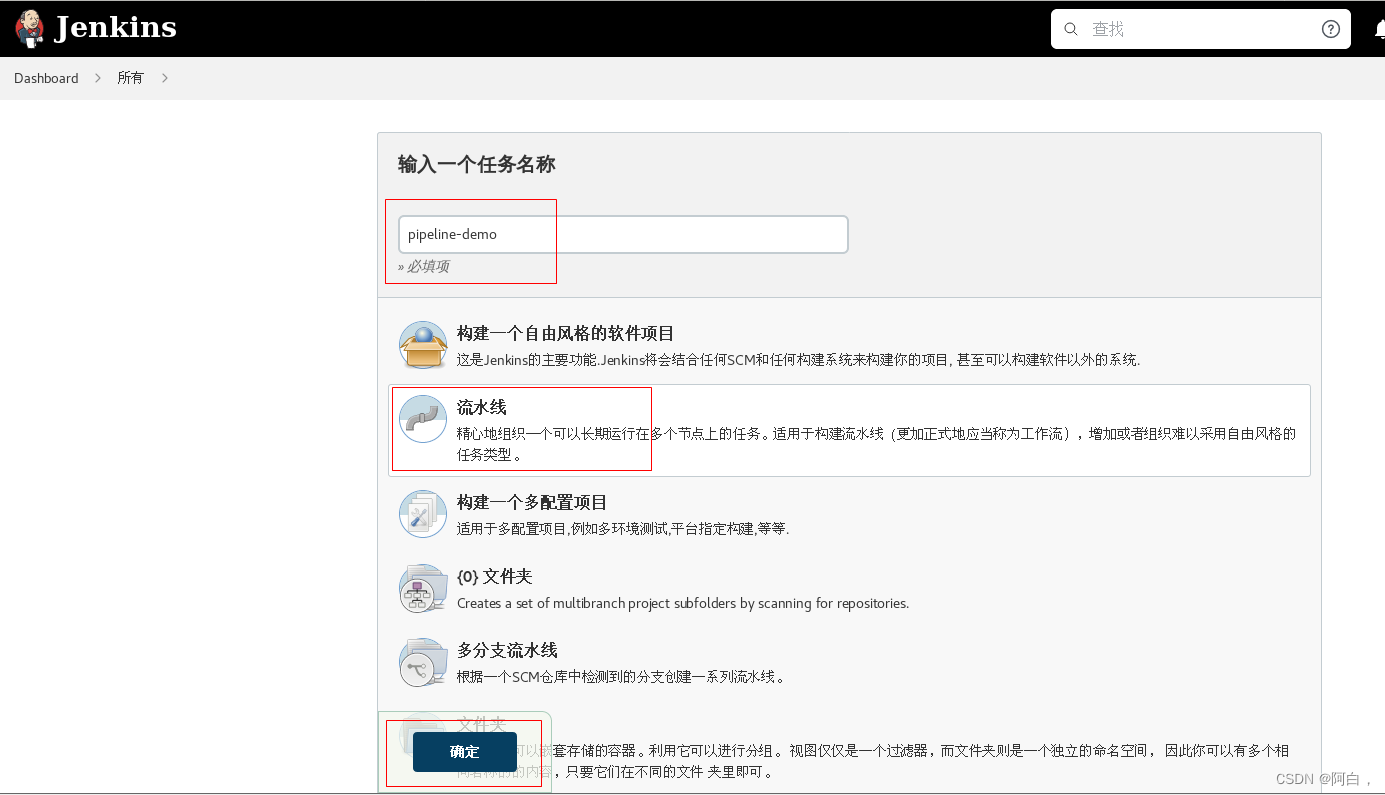

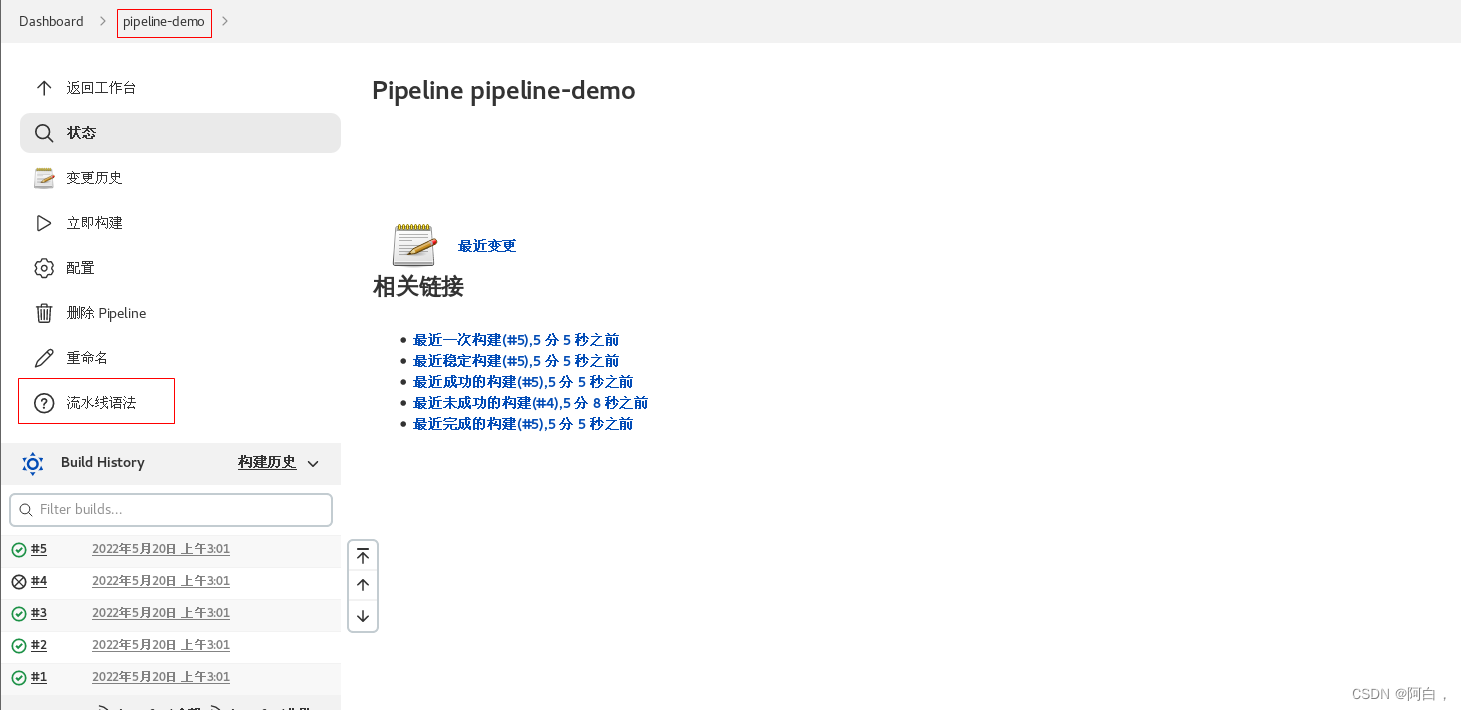

1.新建任务:在 Web UI 中点击 新建任务 -> 输入名称:pipeline-demo -> 选择下面的 流水线 -> 点击 确定

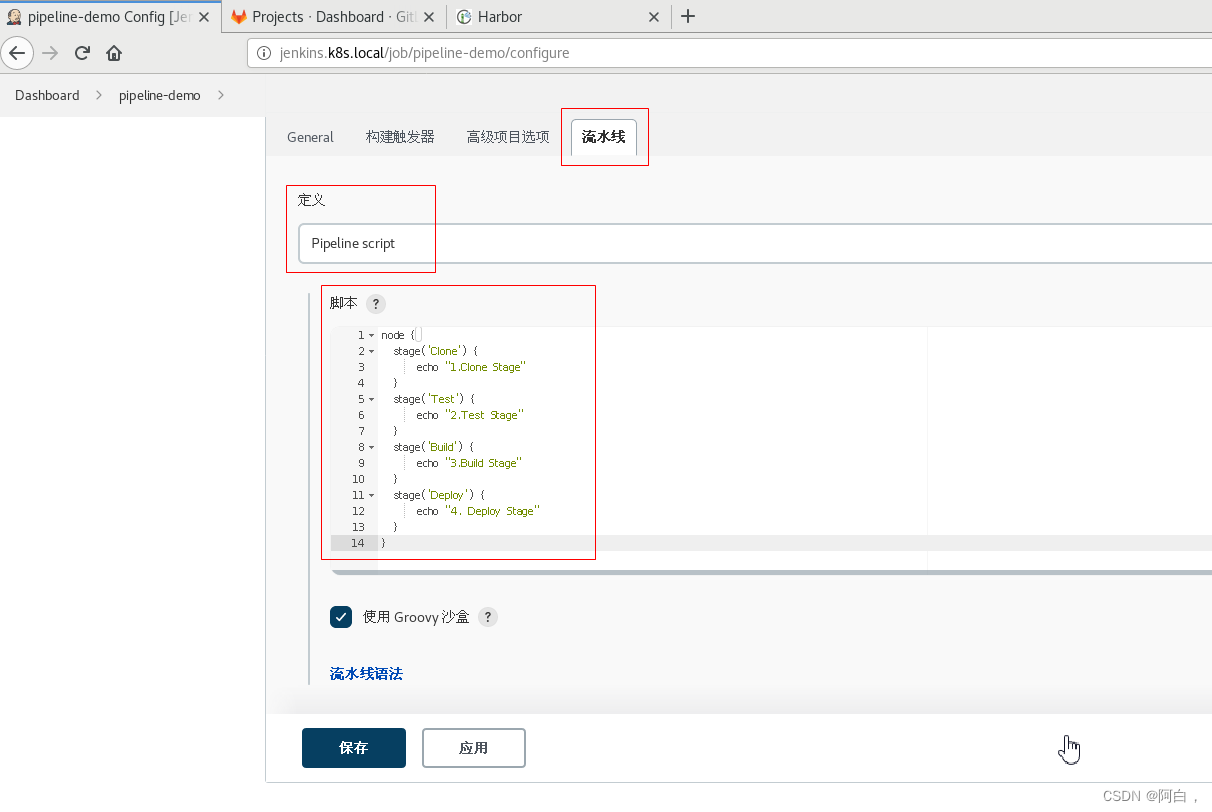

2.配置:在最下方的 Pipeline 区域输入如下 Script 脚本,然后点击保存。

```groovy
node {
    stage('Clone') {
        echo "1.Clone Stage"
    }
    stage('Test') {
        echo "2.Test Stage"
    }
    stage('Build') {
        echo "3.Build Stage"
    }
    stage('Deploy') {
        echo "4.Deploy Stage"
    }
}
```

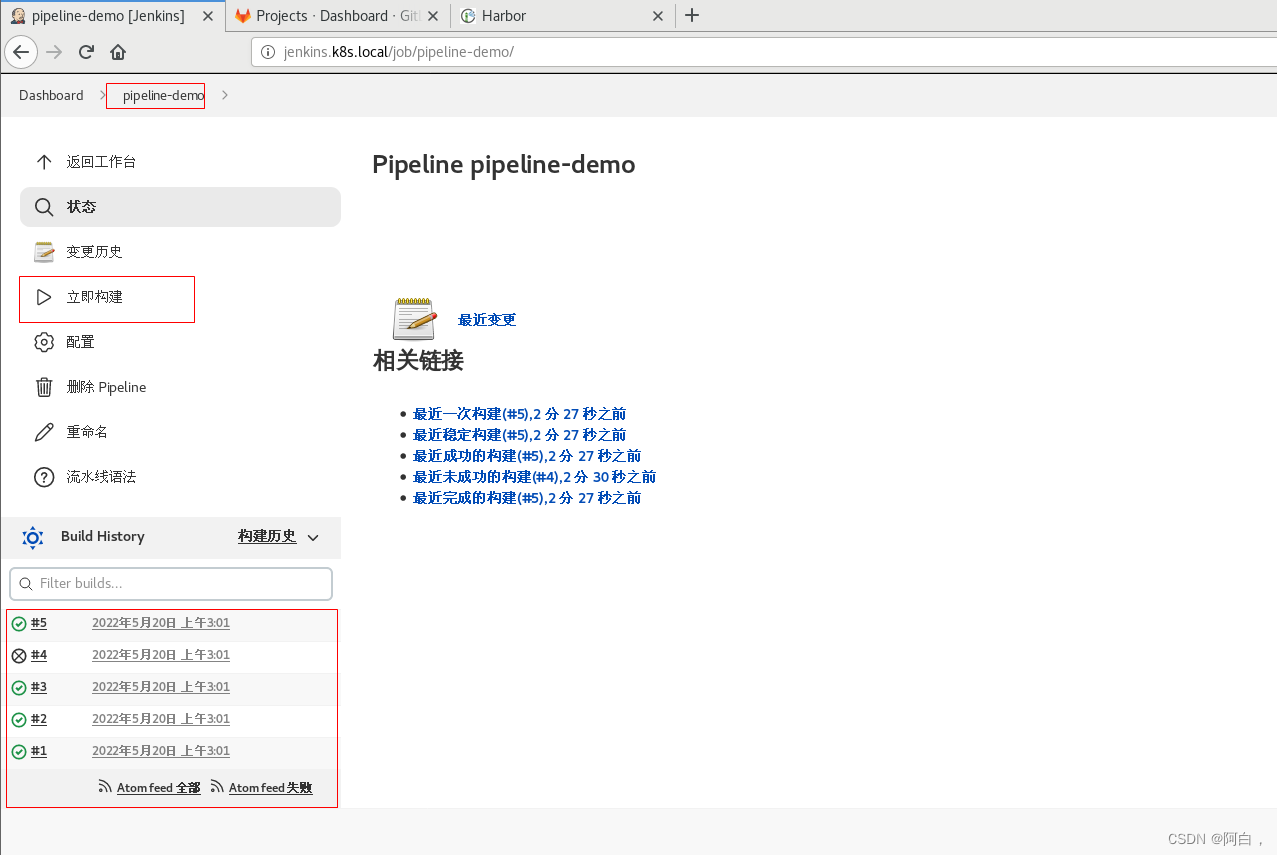

3. Build: click Build Now on the left, and the job starts building.

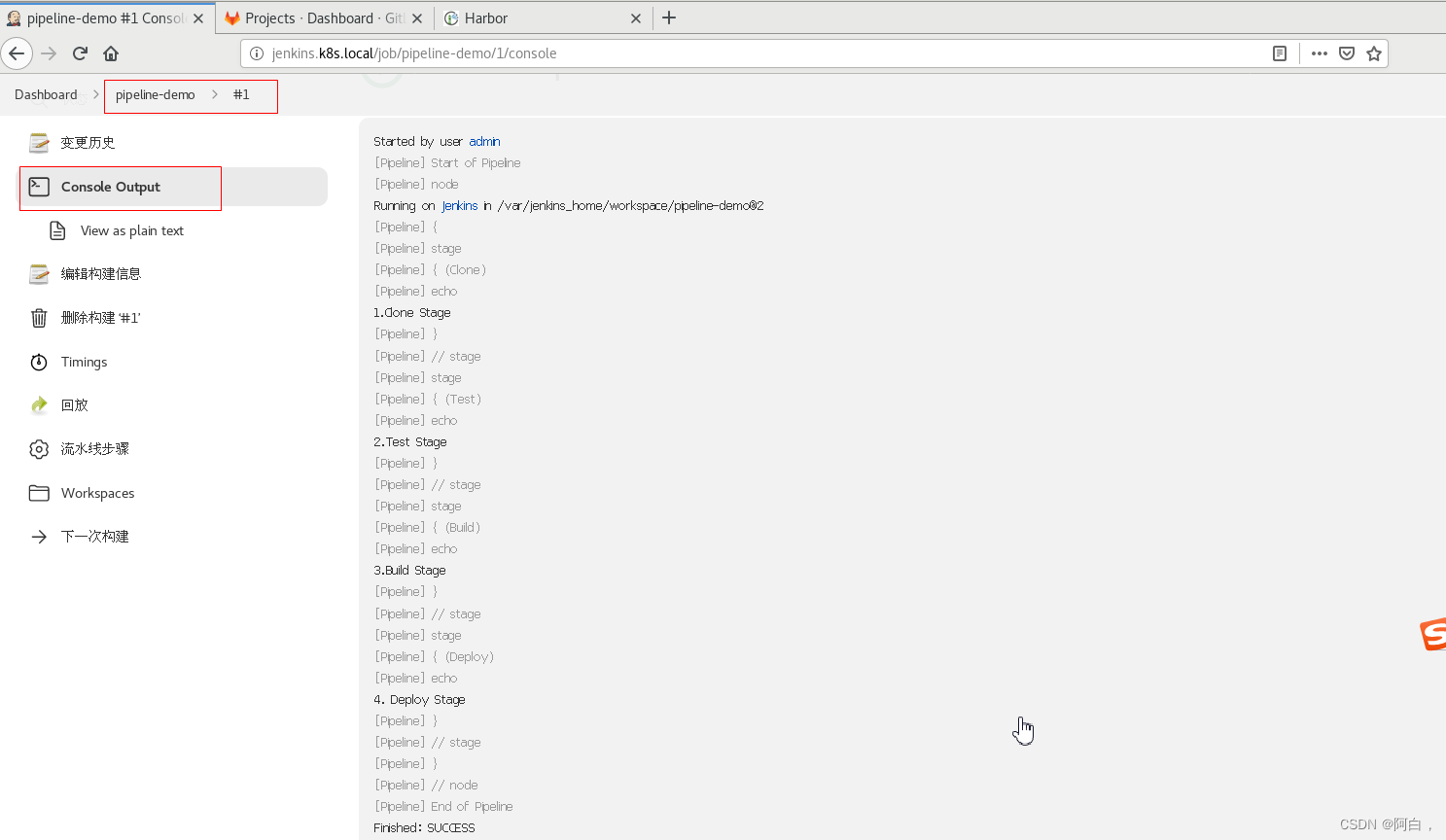

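In the Console Output we can see that all four echo statements from the Pipeline script were printed, which is what we expected.

If you are not very familiar with Pipeline syntax, follow the Pipeline Syntax link below the script input box. It documents a lot of Pipeline syntax and can also generate script snippets for us.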

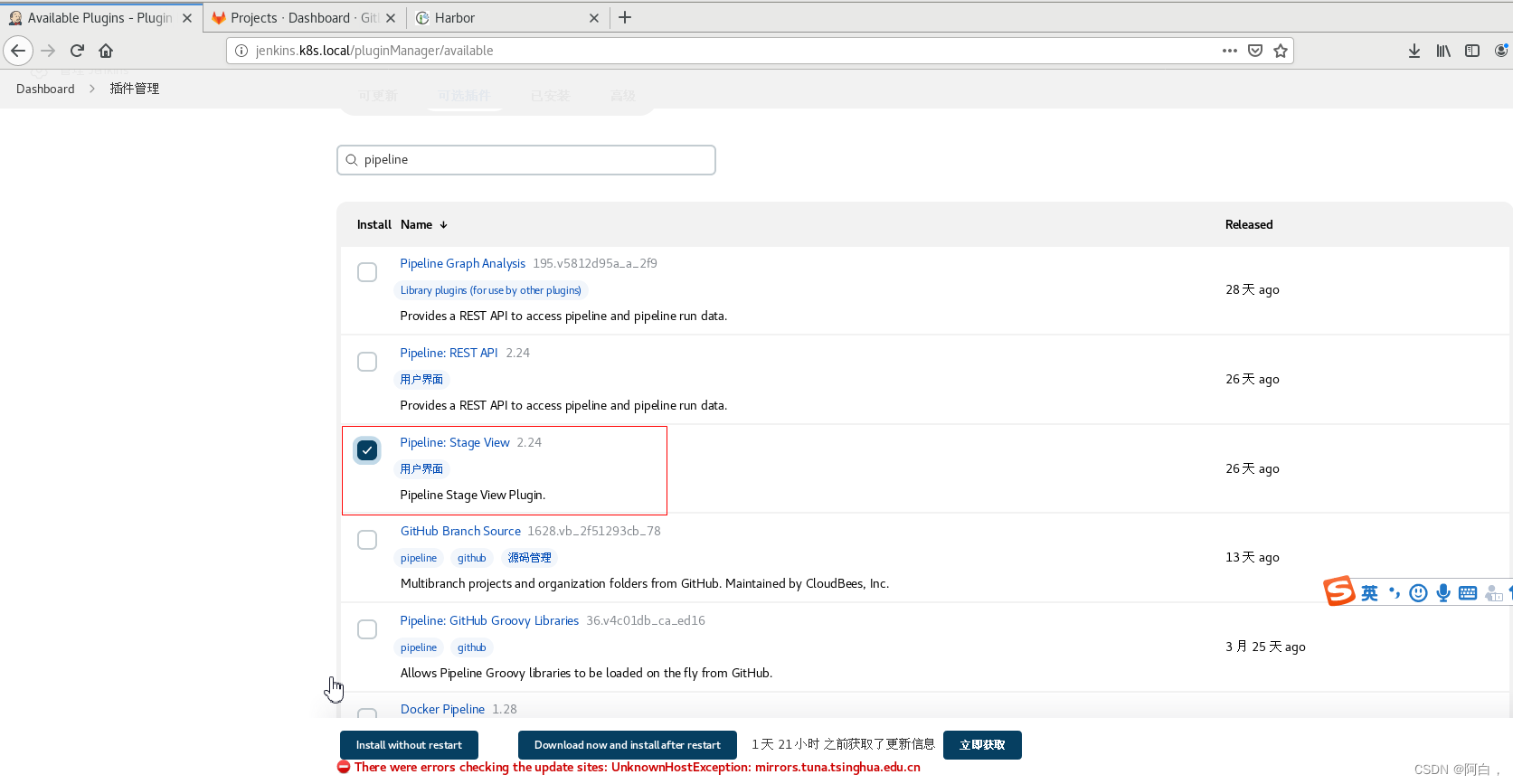

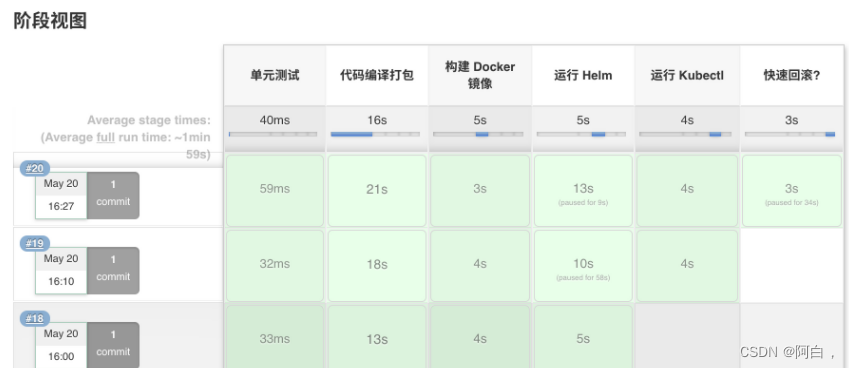

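(If you cannot see the Stage View, install the Pipeline: Stage View plugin.)

Running Builds on a Slave

Above we created a simple Pipeline job, but it did not run on a Jenkins Slave. How do we make it run on a Slave? Remember how, when adding the Slave Pod in the previous lesson, we stressed that you must remember the label you added? That label is exactly what we need now. Edit the Pipeline script created above and give node a label argument (this is the label of the pod template defined earlier; it determines which agent pod runs the job):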

```groovy
node('ydzs-jnlp') {
    stage('Clone') {
        echo "1.Clone Stage"
    }
    stage('Test') {
        echo "2.Test Stage"
    }
    stage('Build') {
        echo "3.Build Stage"
    }
    stage('Deploy') {
        echo "4.Deploy Stage"
    }
}
```

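(The declarative agent {} block can be skipped here: in this scripted form, the label passed to node() is what selects the agent.)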

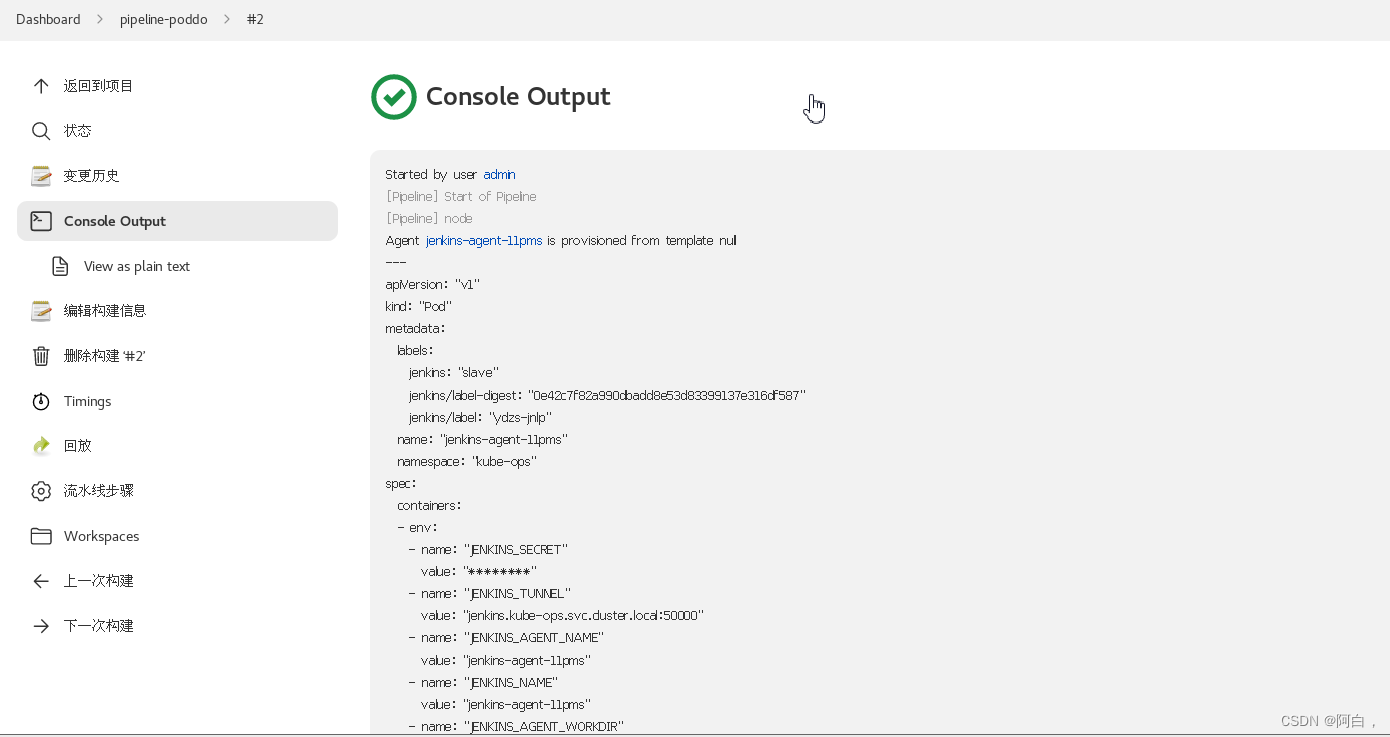

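Here we only gave node the label ydzs-jnlp. Save the job, and before building, check the Pods in the Kubernetes cluster:

```shell
kubectl get pod -n kube-ops
```

We can see an extra Pod named jenkins-agent-6gw0w running (the name is usually jenkins-agent-xxxxx), and after a while it disappears, which also shows that our job has finished. Back in the Jenkins web UI, the Console Output shows the following: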

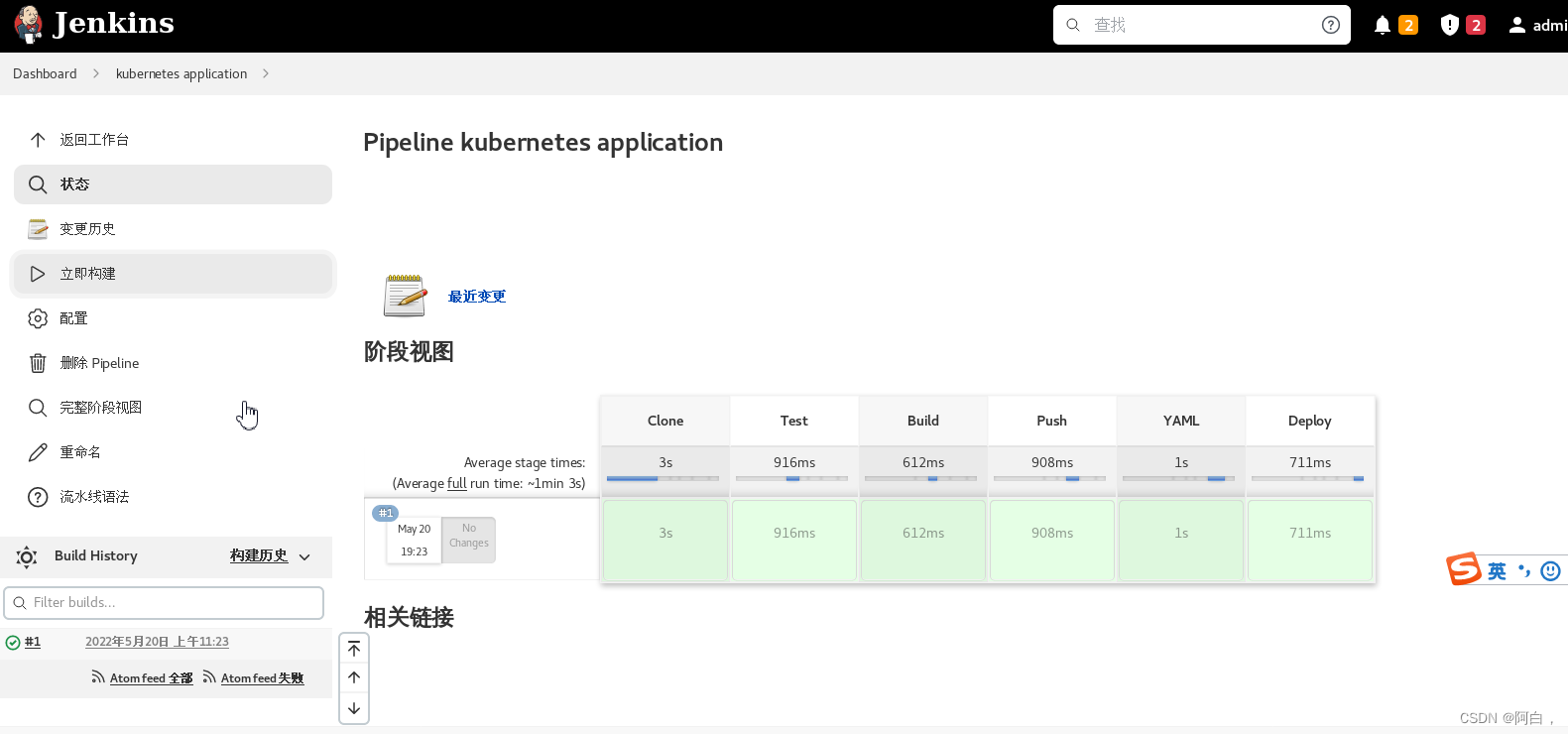

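This shows that the job ran in the dynamically created Pod, as expected. Back on the job's main page, we can also see the familiar Stage View:

To see the Stage View, you need to install the Stage View plugin (Pipeline: Stage View).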
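Agent pods only live for the duration of a build, so they are easy to miss in a plain pod listing. A minimal shell sketch for spotting them is shown below. It assumes the default kubectl get pod column layout (NAME READY STATUS ...) and the jenkins-agent- name prefix mentioned above; the function name list_agent_pods is made up for this example.

```shell
#!/bin/sh
# Print "NAME STATUS" for every pod whose name starts with the given prefix.
# Input: the plain-text output of `kubectl get pod` on stdin (the header row is skipped).
# Assumes the default column order NAME READY STATUS RESTARTS AGE.
list_agent_pods() {
    local prefix="$1"
    awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 { print $1, $3 }'
}
```

Usage: kubectl get pod -n kube-ops | list_agent_pods jenkins-agent-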

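Deploying a Kubernetes Application

Now that we know how to run builds on a Jenkins Slave, how do we deploy a native Kubernetes application? To do that, we need to know our previous deployment process very well. What did it look like?

1. Write the code
2. Test
3. Write the Dockerfile
4. Build the Docker image
5. Push the Docker image to the registry
6. Write the Kubernetes YAML files
7. Change the Docker image tag in the YAML files
8. Deploy the application with kubectl

That is roughly how we deployed a native application on Kubernetes before. Now we want Jenkins to do all of this for us automatically (except writing the code, of course): the steps from testing through updating the YAML file are CI, and the deployment that follows is CD. Following the example above, how would we write a Pipeline script for this?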

```groovy
node('ydzs-jnlp') {
    stage('Clone') {
        echo "1.Clone Stage"
    }
    stage('Test') {
        echo "2.Test Stage"
    }
    stage('Build') {
        echo "3.Build Docker Image Stage"
    }
    stage('Push') {
        echo "4.Push Docker Image Stage"
    }
    stage('YAML') {
        echo "5.Change YAML File Stage"
    }
    stage('Deploy') {
        echo "6.Deploy Stage"
    }
}
```

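Now create a Pipeline job and build it with the script above; again we get the expected result. The flow it models is:

Clone the project code -> write the code and implement the features -> write the Dockerfile -> build the image (package the code, i.e. the application, into an image so that it runs as a container) -> push the image to the registry -> set the image in the YAML, or change its tag -> deploy it as a pod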

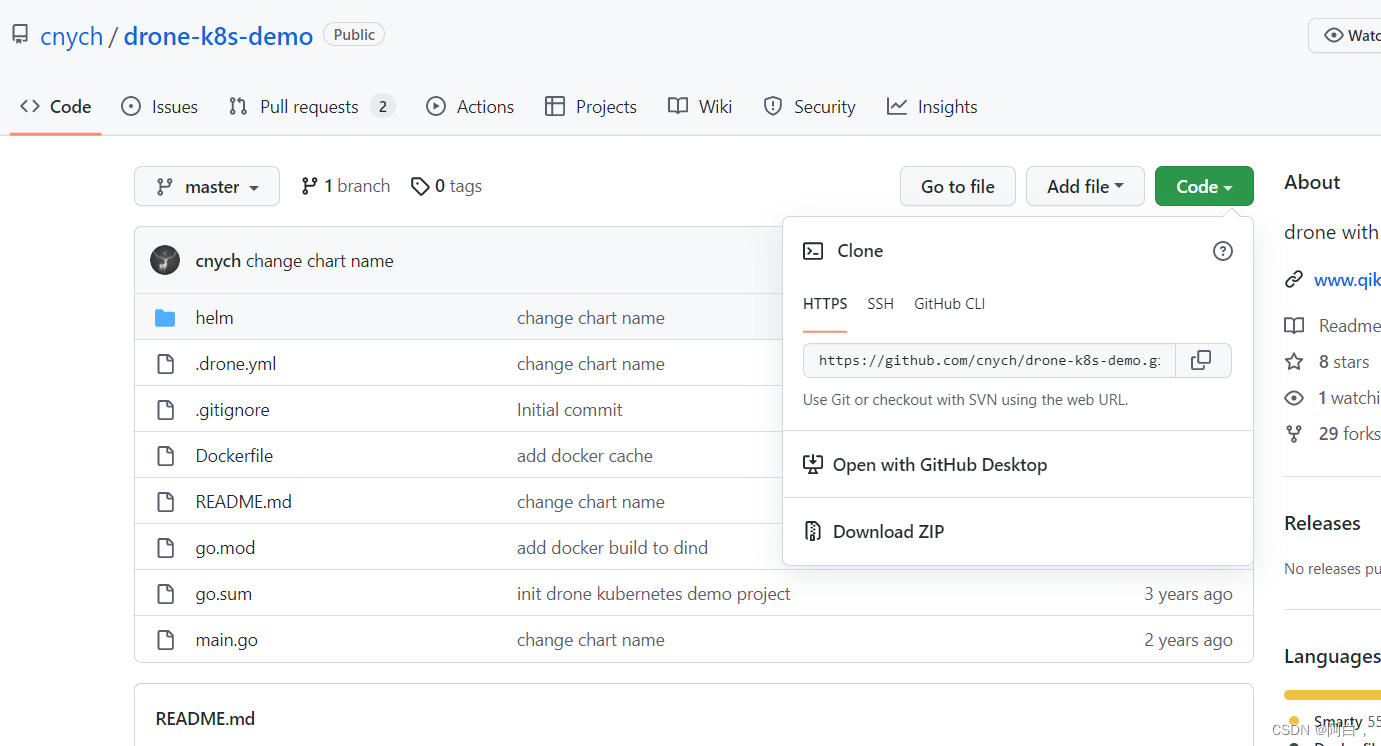

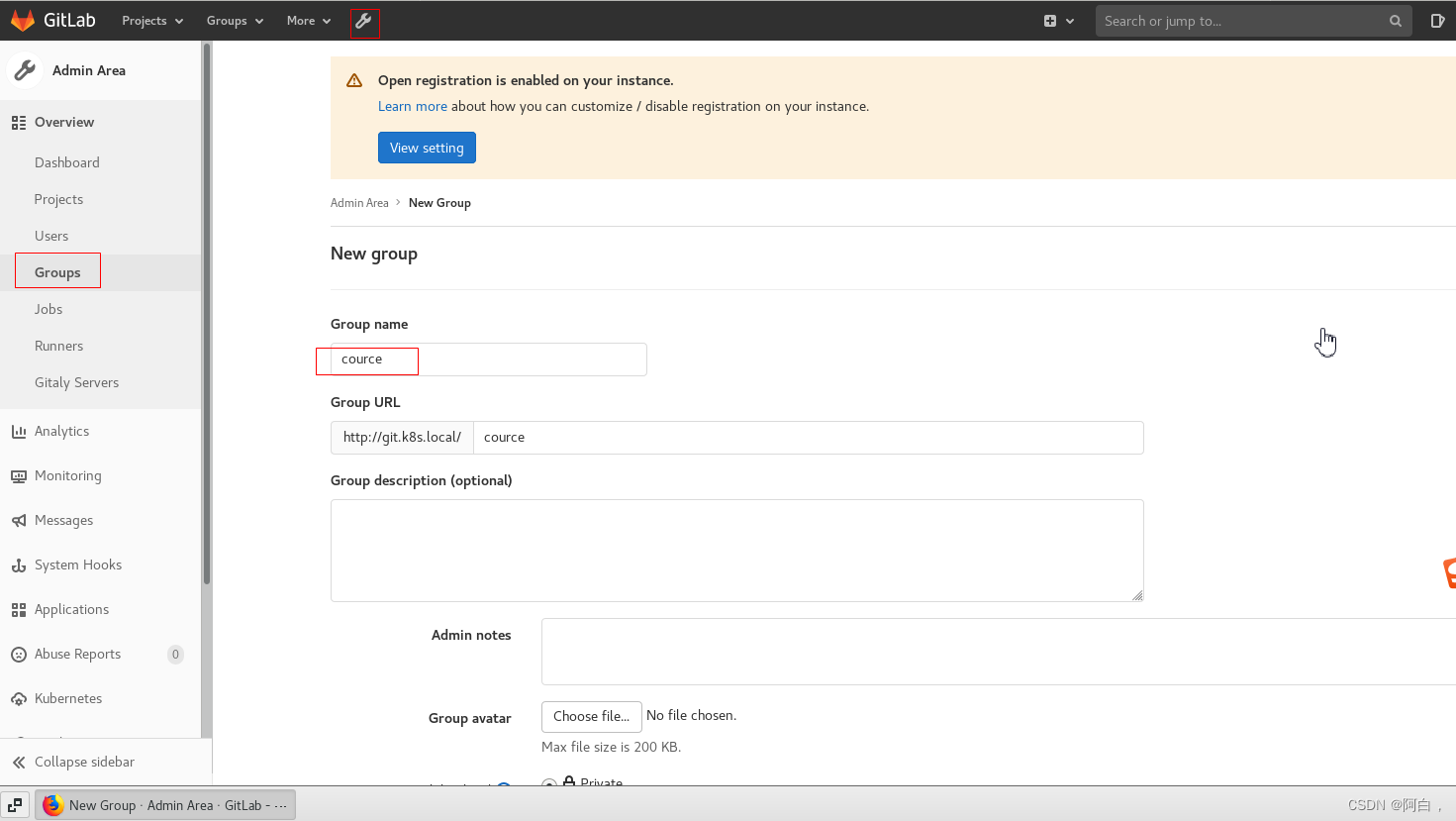

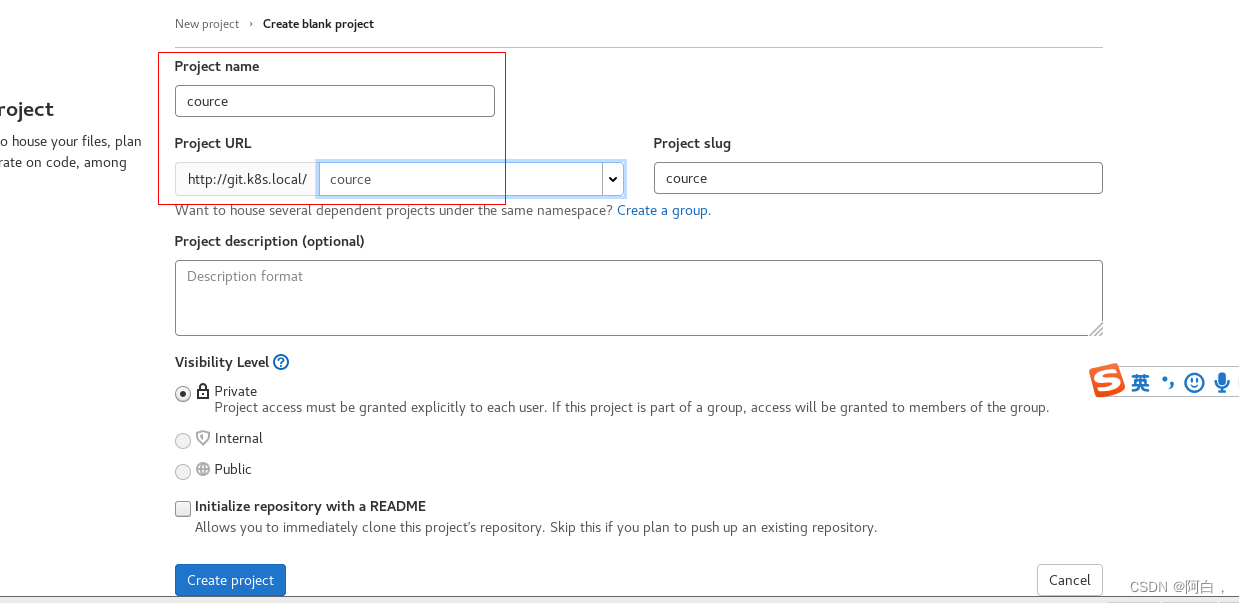

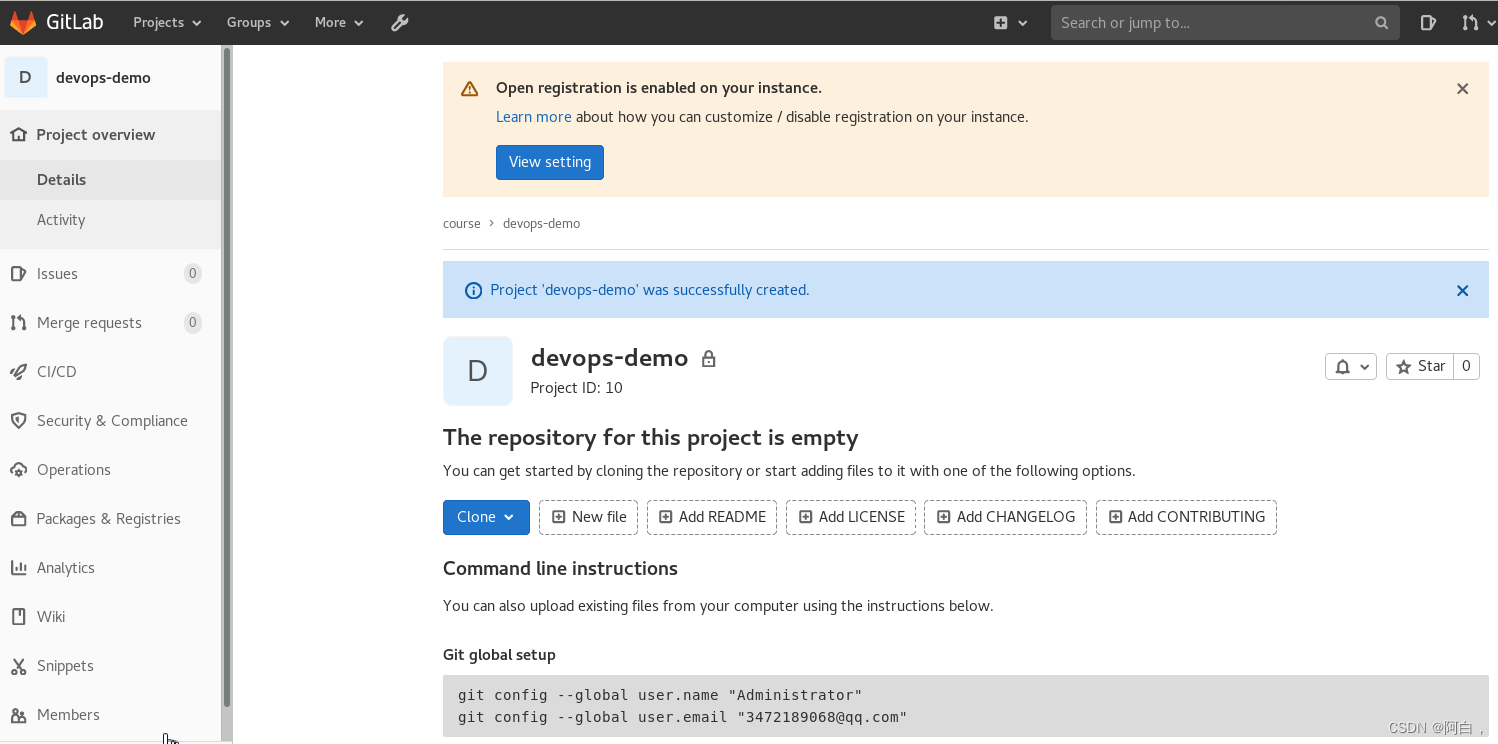

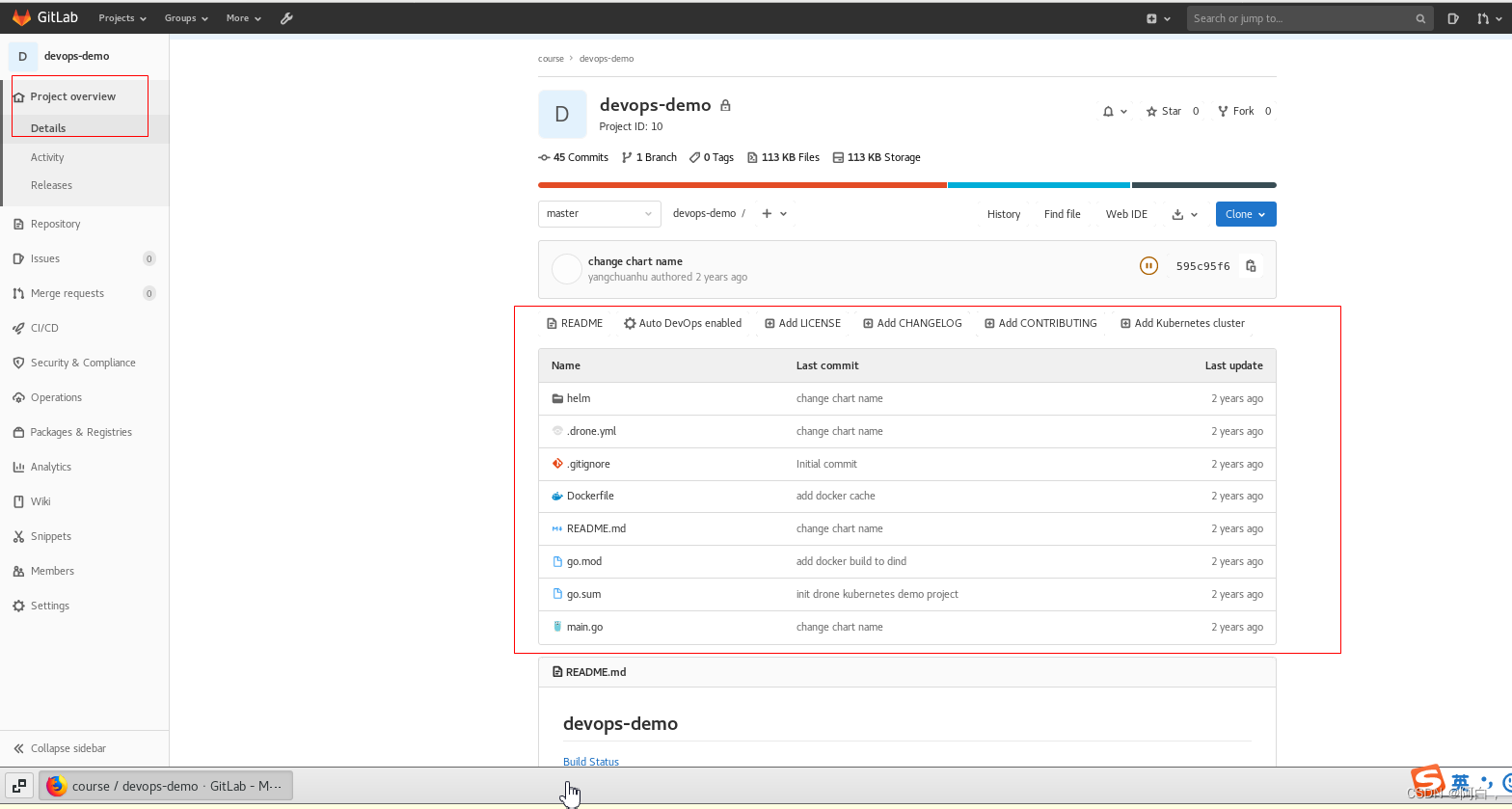

Next we deploy a simple golang program to Kubernetes. The code is at https://github.com/cnych/drone-k8s-demo. We push it to our own GitLab repository at http://git.k8s.local/course/devops-demo so that Jenkins and GitLab can be connected for CI/CD.

```shell
[root@node1 ~]# git clone https://github.com/cnych/drone-k8s-demo.git
Cloning into 'drone-k8s-demo'...
remote: Enumerating objects: 166, done.
remote: Total 166 (delta 0), reused 0 (delta 0), pack-reused 166
Receiving objects: 100% (166/166), 22.18 KiB | 0 bytes/s, done.
Resolving deltas: 100% (95/95), done.
[root@node1 ~]# ls
anaconda-ks.cfg drone-k8s-demo initial-setup-ks.cfg volume.yaml 公共 模板 视频 图片 文档 下载 音乐 桌面
[root@node1 ~]# cd drone-k8s-demo/
[root@node1 drone-k8s-demo]# ls
Dockerfile go.mod go.sum helm main.go README.md
```

Note: the group name should be course, and the project name should be devops-demo. The session below contains two typos (cource and devops.demo); use course/devops-demo in your own commands.

```shell
[root@node2 drone-k8s-demo]# git remote add origin git@git.k8s.local:cource/devops-demo.git
fatal: remote origin already exists.
[root@node2 drone-k8s-demo]# git remote remove origin
[root@node2 drone-k8s-demo]# git remote add origin git@git.k8s.local:cource/devops.demo.git
[root@node2 drone-k8s-demo]# git push -u origin master
Counting objects: 166, done.
Delta compression using up to 4 threads.
Compressing objects: 100% (70/70), done.
Writing objects: 100% (166/166), 22.18 KiB | 0 bytes/s, done.
Total 166 (delta 95), reused 166 (delta 95)
remote: Resolving deltas: 100% (95/95), done.
To git@git.k8s.local:cource/devops.demo.git
 * [new branch] master -> master
Branch master set up to track remote branch master from origin.
```

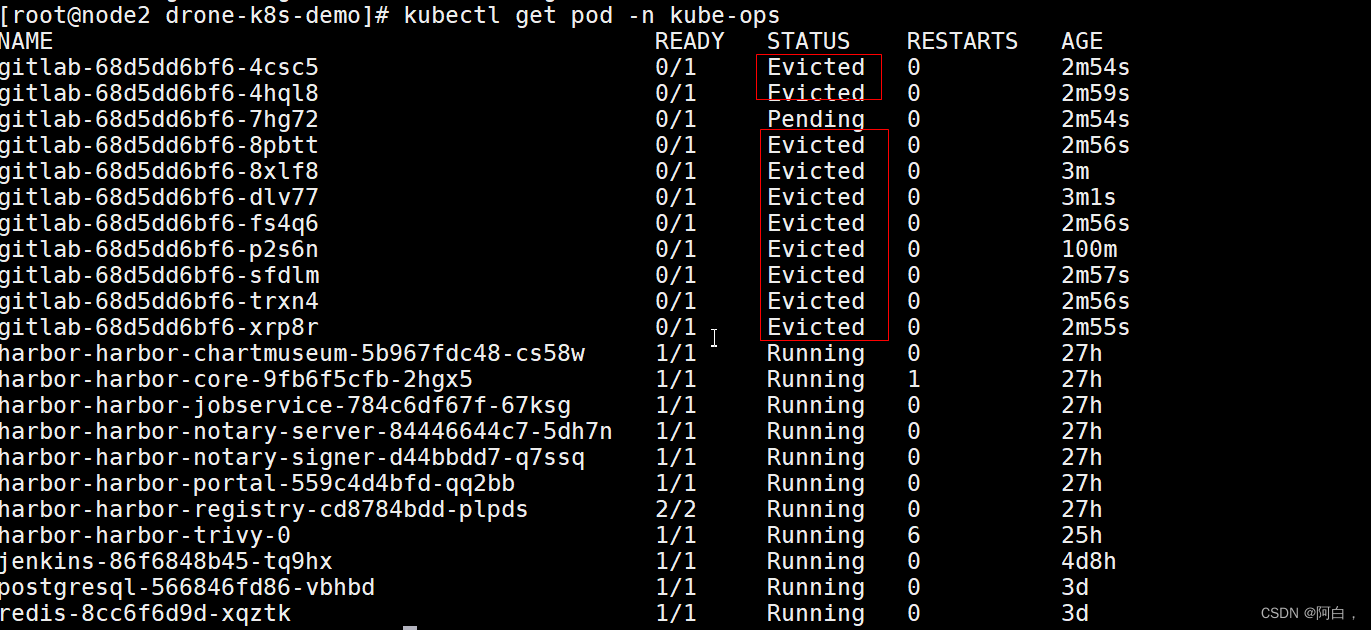

Problems like this are usually caused by resource pressure. In my case the resources actually looked fine, and deleting the evicted pods was enough. Sometimes GitLab may also need a restart, which here means deleting the pending pod. The loop below deletes every gitlab pod except the healthy one whose name ends in p9gpj:

```shell
[root@master1 ~]# for i in $(kubectl get pod -n kube-ops | awk -F " " '{print $1}' | grep '^gitlab' | grep -v 'p9gpj$');do kubectl delete pod -n kube-ops $i ;done
pod "gitlab-68d5dd6bf6-4nktt" deleted
pod "gitlab-68d5dd6bf6-5dtm8" deleted
pod "gitlab-68d5dd6bf6-5l4jh" deleted
pod "gitlab-68d5dd6bf6-897vk" deleted
pod "gitlab-68d5dd6bf6-b2lgl" deleted
pod "gitlab-68d5dd6bf6-brt4k" deleted
pod "gitlab-68d5dd6bf6-j4dfd" deleted
pod "gitlab-68d5dd6bf6-j4rtx" deleted
pod "gitlab-68d5dd6bf6-jkn76" deleted
pod "gitlab-68d5dd6bf6-l2bln" deleted
pod "gitlab-68d5dd6bf6-ld8hd" deleted
pod "gitlab-68d5dd6bf6-lxgp9" deleted
pod "gitlab-68d5dd6bf6-nln4n" deleted
pod "gitlab-68d5dd6bf6-qjtcn" deleted
pod "gitlab-68d5dd6bf6-qvqrp" deleted
pod "gitlab-68d5dd6bf6-rw9st" deleted
pod "gitlab-68d5dd6bf6-sprft" deleted
pod "gitlab-68d5dd6bf6-vgq97" deleted
pod "gitlab-68d5dd6bf6-wrjhf" deleted
pod "gitlab-68d5dd6bf6-xrgt2" deleted
pod "gitlab-68d5dd6bf6-zn7bg" deleted
```
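The loop above deletes every gitlab pod except one hard-coded name, so it would also delete healthy pods after a restart changes that name. A more targeted sketch selects only pods whose STATUS is Evicted. It assumes the default kubectl get pod column layout, and evicted_pods is a hypothetical helper name.

```shell
#!/bin/sh
# Print the names of pods in the Evicted state whose name starts with a prefix.
# Input: plain `kubectl get pod` output on stdin; STATUS is assumed to be the third column.
evicted_pods() {
    local prefix="$1"
    awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 && $3 == "Evicted" { print $1 }'
}
```

Usage: kubectl get pod -n kube-ops | evicted_pods gitlab- | xargs -r kubectl delete pod -n kube-ops (the -r flag is GNU xargs). Evicted pods are in phase Failed, so kubectl delete pod -n kube-ops --field-selector=status.phase=Failed is another option, although it also removes pods that failed for other reasons.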

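Following the earlier example, shouldn't the Pipeline script be written like this:

1. Clone the code.
2. Run the tests, and continue with the following tasks only if they pass.
3. Since the Dockerfile is usually kept with the source code, build the Docker image directly.
4. Once the image is built, push it to the image registry.
5. Once the image is pushed, change the image TAG in the YAML file to this build's tag.
6. With everything in place, the last step is to deploy with the kubectl command-line tool.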
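The key property of this list is that each step runs only if the previous one succeeded. A minimal shell sketch of that fail-fast chaining follows; it is an illustration, not how Jenkins is implemented, and run_stage and demo_pipeline are hypothetical names whose commands are placeholders.

```shell
#!/bin/sh
# Run one stage: print its name, run its command, and report failure on a non-zero exit.
run_stage() {
    local stage_name="$1"
    shift
    echo "==> Stage: $stage_name"
    if ! "$@"; then
        echo "Stage '$stage_name' failed, skipping the remaining stages" >&2
        return 1
    fi
}

# The six steps chained with &&, so a failing stage stops the chain.
# The test command is passed in as arguments; every other command is a placeholder.
demo_pipeline() {
    run_stage "Clone" true &&
    run_stage "Test" "$@" &&
    run_stage "Build image" true &&
    run_stage "Push image" true &&
    run_stage "Update YAML tag" true &&
    run_stage "Deploy" true
}
```

In Jenkins itself, a failing sh step fails the build and the remaining stages are skipped in the same way.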

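At this point the whole CI/CD flow is covered. We could again use the custom jnlp image from before for the entire build, but this project is written in golang and its build needs a matching environment. Customizing the image every time a build needs a specific environment is too cumbersome, so we use a more flexible approach: a custom podTemplate. We can define the container templates the Slave Pod needs directly in the Pipeline, so whatever image we need, we simply declare it in the Slave Pod Template, with no need to maintain one huge Slave image.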

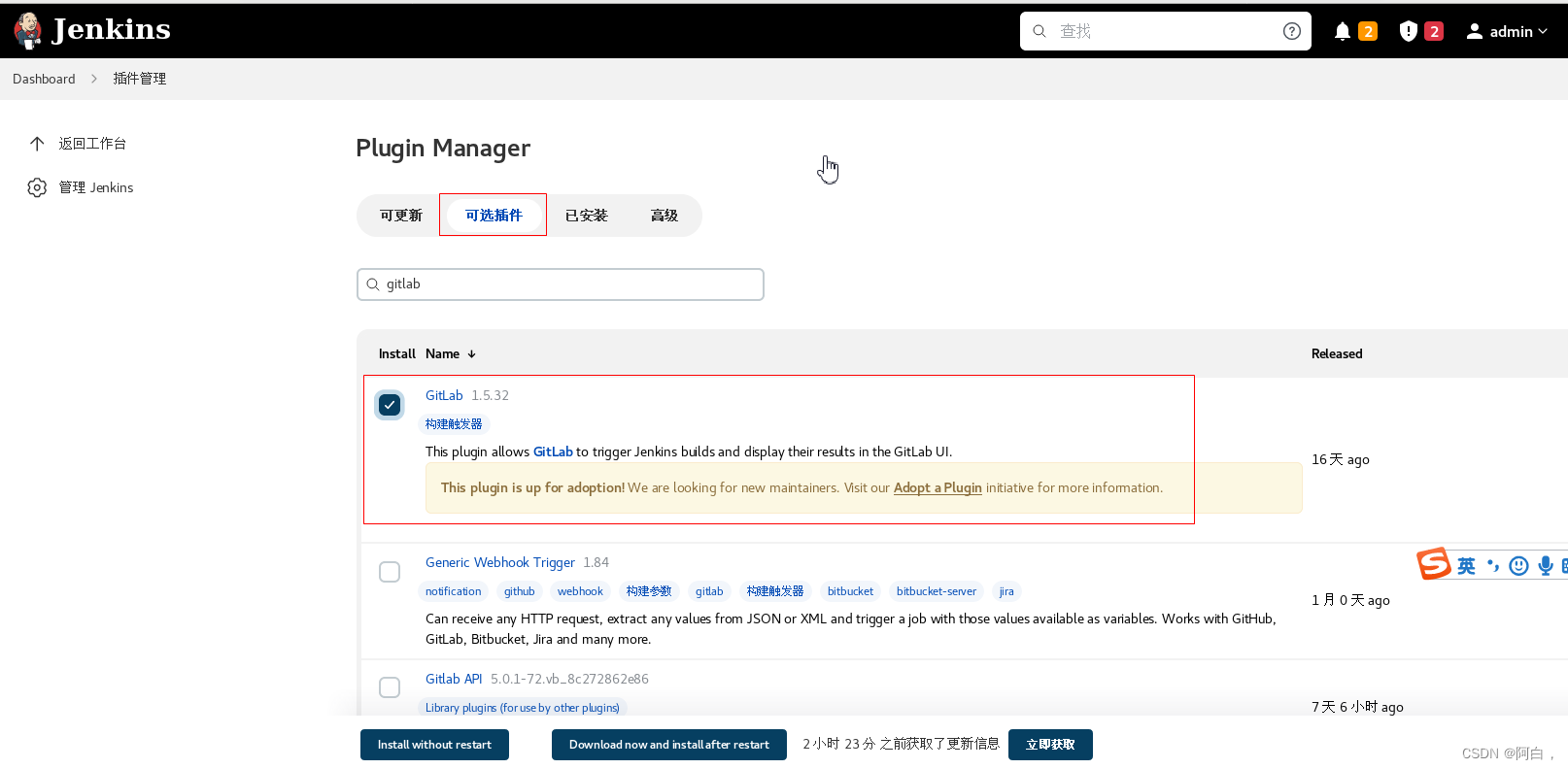

Here we need the GitLab plugin, which triggers Jenkins builds when code changes on the GitLab side:

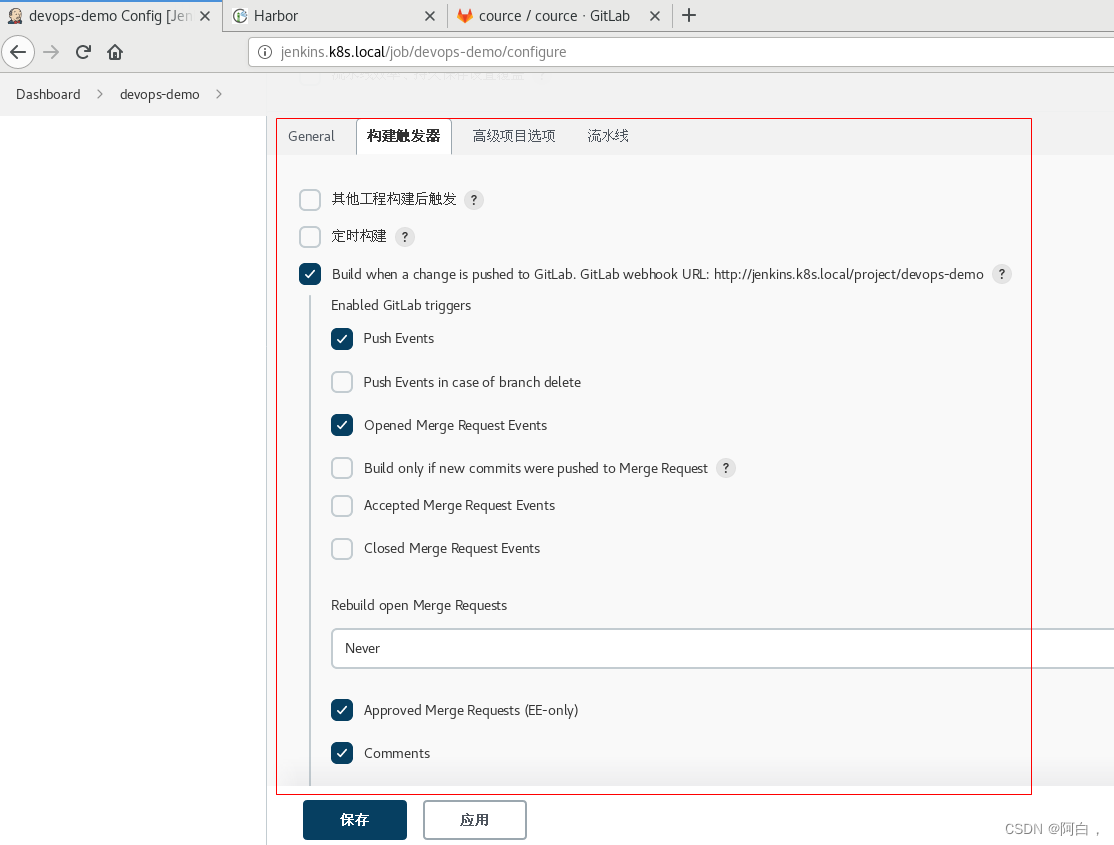

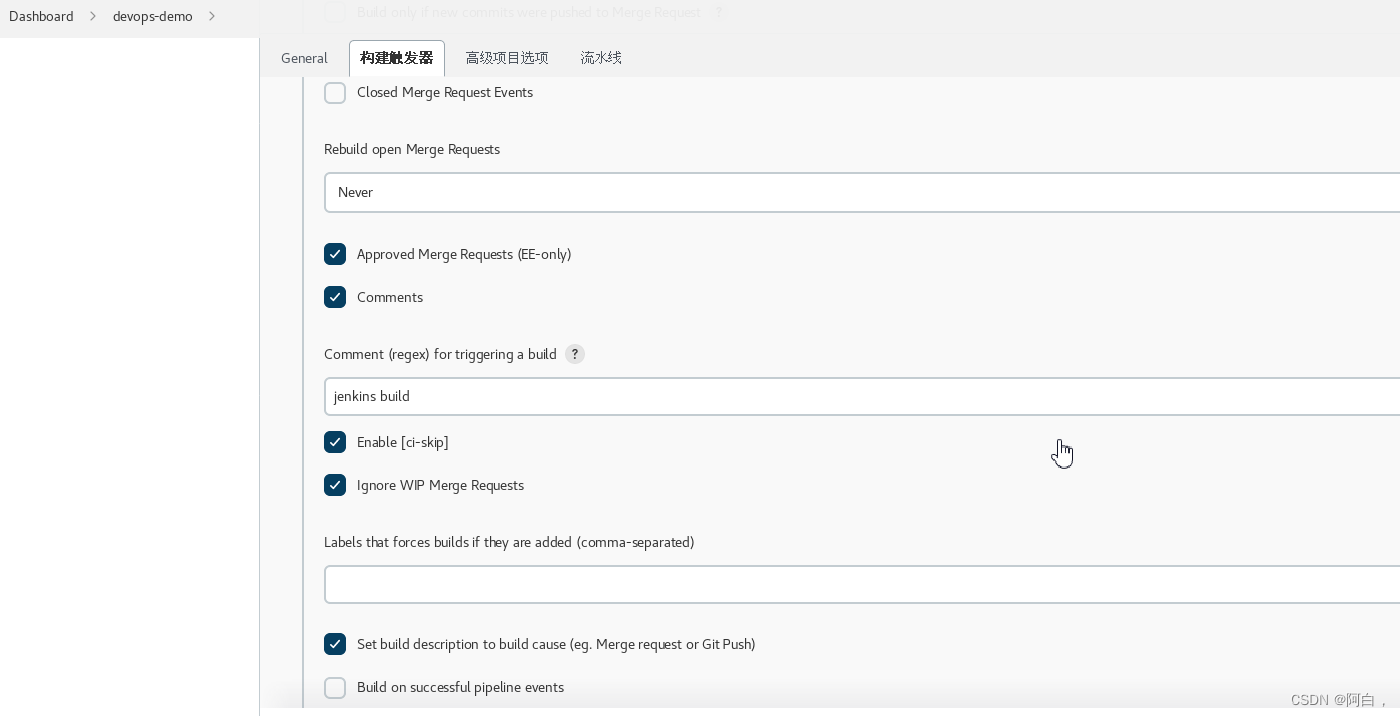

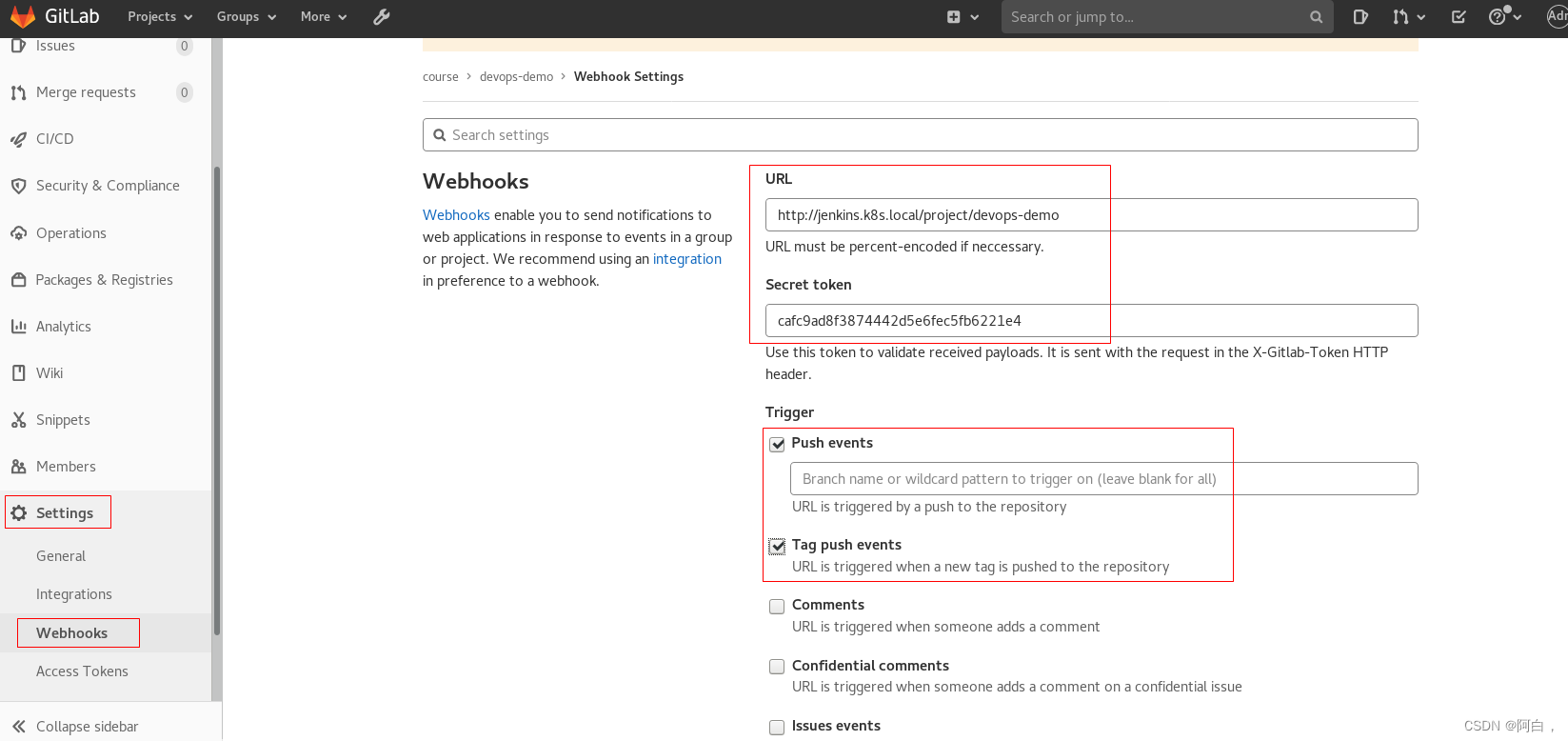

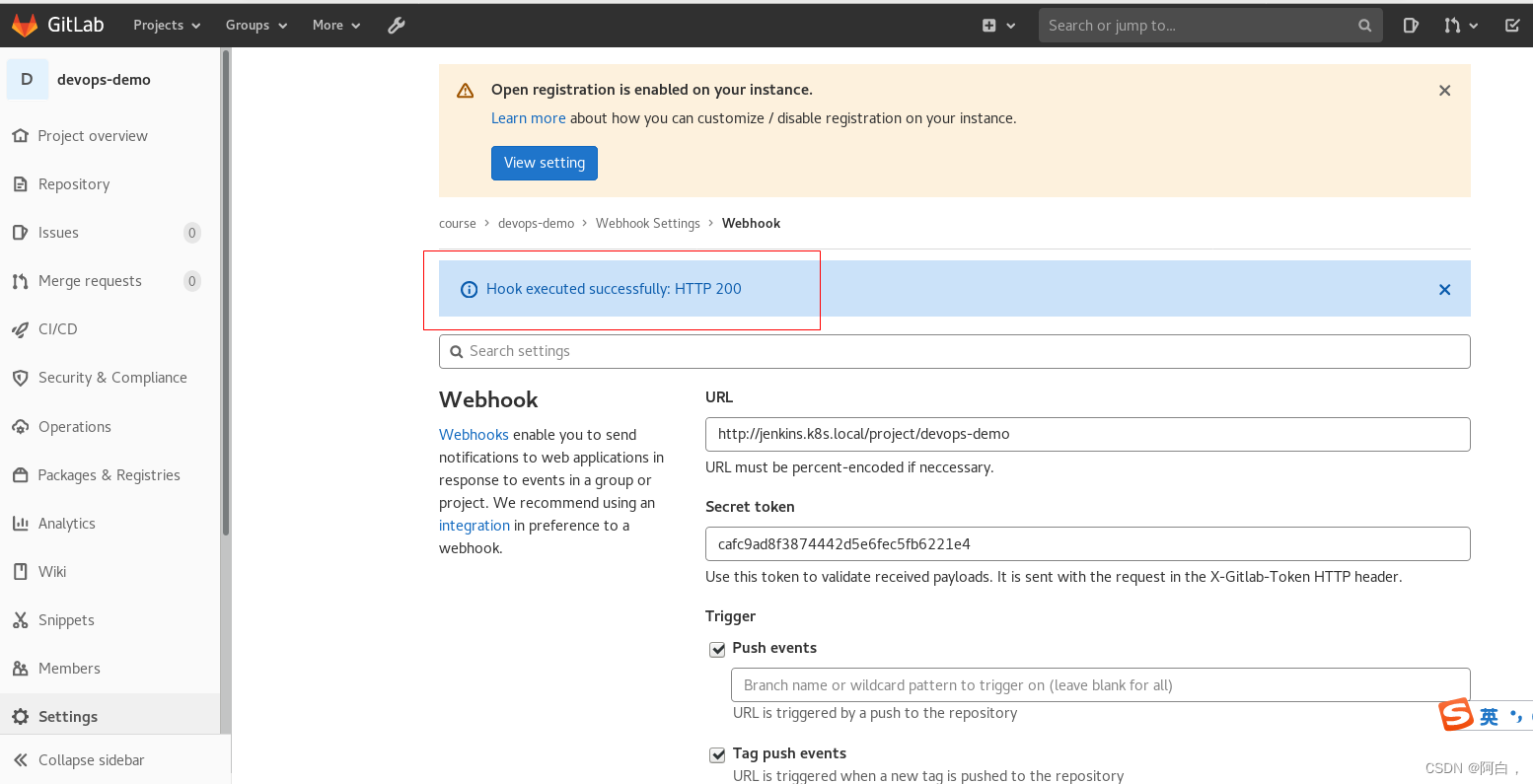

Then create a Pipeline job named devops-demo. In the Build Triggers section, select Build when a change is pushed to GitLab; the http://jenkins.k8s.local/project/devops-demo shown next to it is the Webhook URL we need to configure in GitLab:

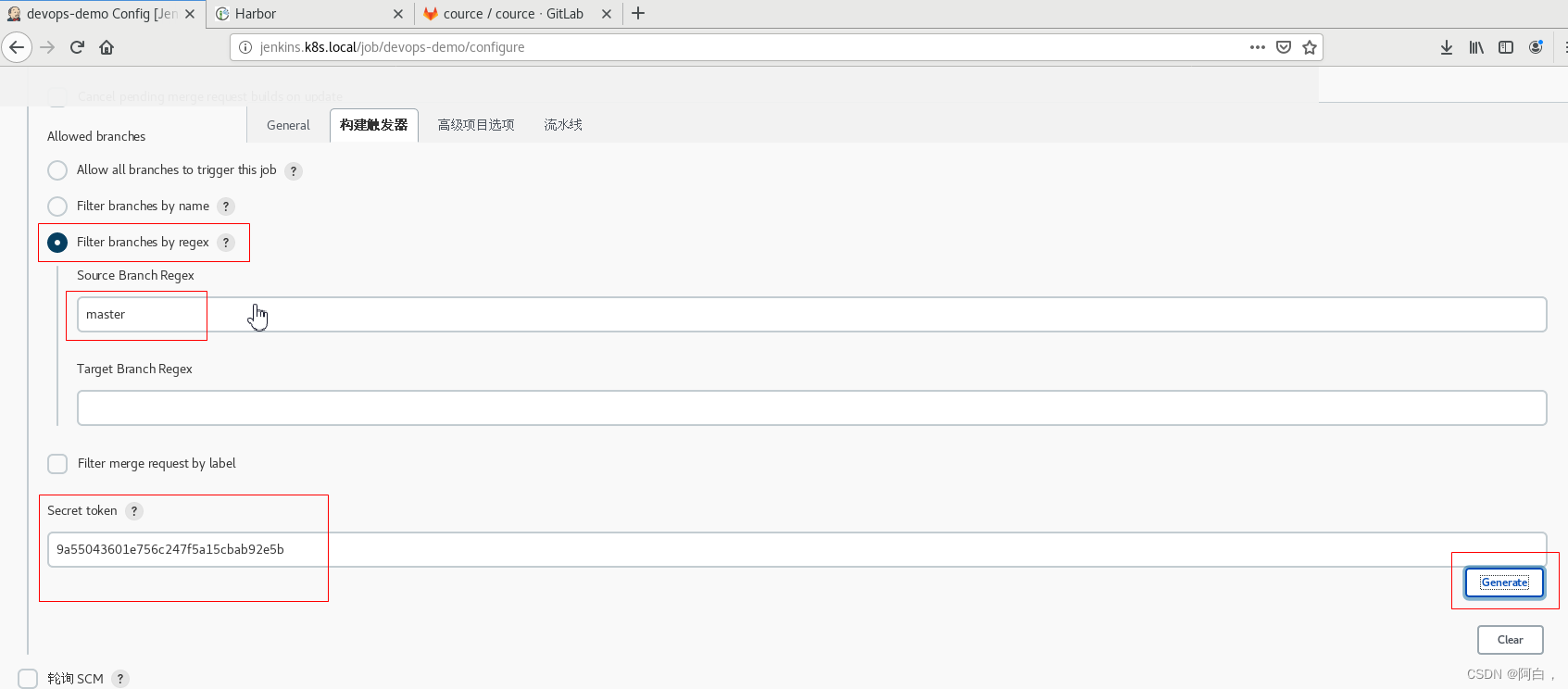

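Comment (regex) for triggering a build means that a comment in the git repository (for example on a merge request) matching this regex, such as one containing jenkins build, triggers this build. Then click Advanced below to generate a token. This URL and token form a Jenkins API endpoint that GitLab can call to tell Jenkins to run a build on operations such as merges, commits, and pushes.

Note: copy the URL and the Token; we will need them later when configuring the GitLab Webhook.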

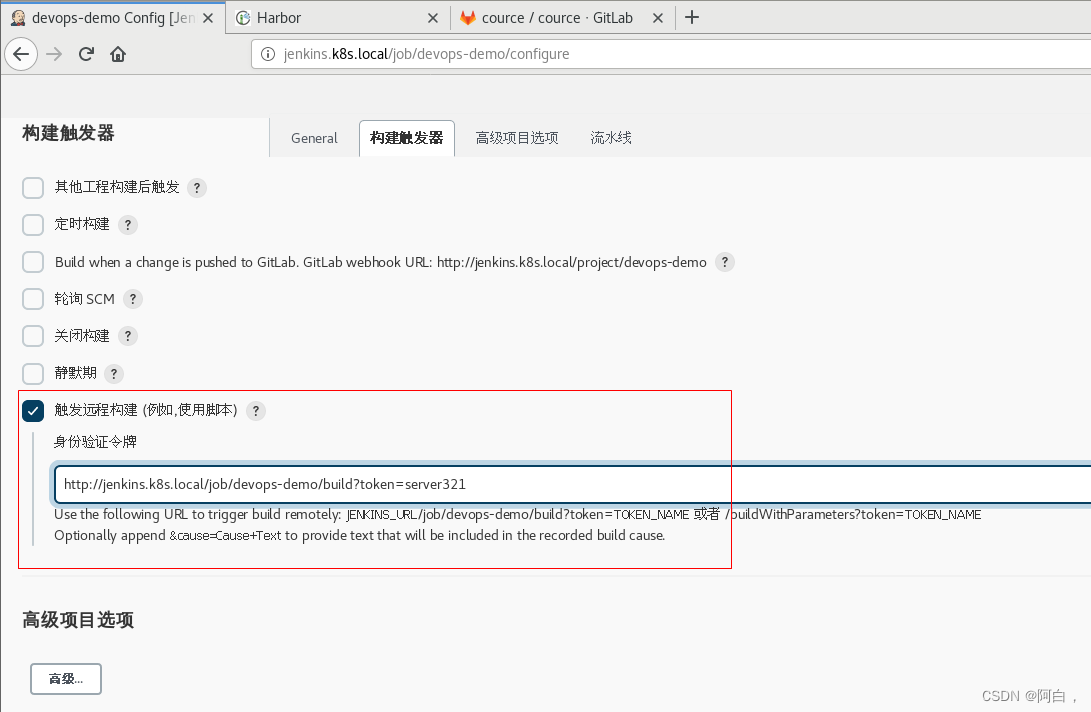

Custom token values used in this setup:

webhook: http://jenkins.k8s.local/project/devops-demo

secret token: cafc9ad8f3874442d5e6fec5fb6221e4

Authentication token (Trigger builds remotely): http://jenkins.k8s.local/job/devops-demo/build?token=server321
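Both URLs follow a fixed pattern built from the Jenkins host, the job name and, for the remote trigger, the token. A small sketch that builds them is shown below; the helper names are hypothetical and the example values are the ones listed above.

```shell
#!/bin/sh
# Webhook URL of the Jenkins GitLab plugin for a job: http://<host>/project/<job>
gitlab_webhook_url() {
    printf 'http://%s/project/%s\n' "$1" "$2"
}

# Remote build trigger of a job (Trigger builds remotely): http://<host>/job/<job>/build?token=<token>
remote_trigger_url() {
    printf 'http://%s/job/%s/build?token=%s\n' "$1" "$2" "$3"
}
```

Usage: curl -X POST "$(remote_trigger_url jenkins.k8s.local devops-demo server321)". Depending on your Jenkins security settings, this request may also need user credentials (for example curl -u user:apitoken).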

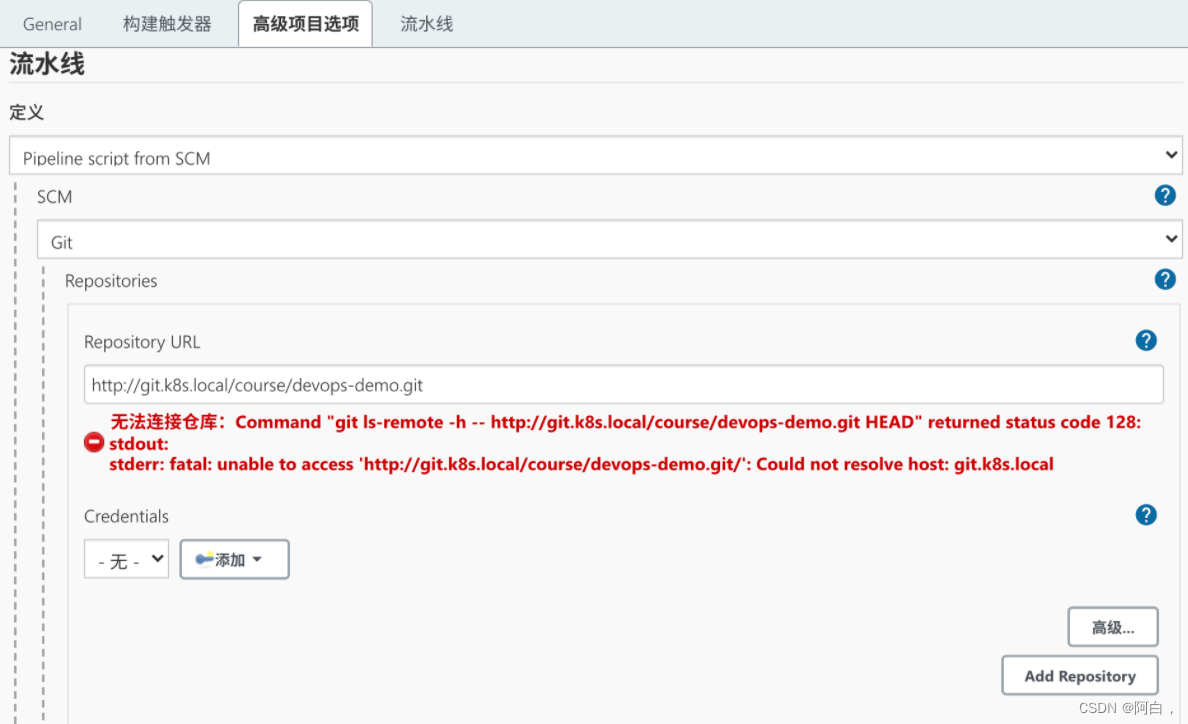

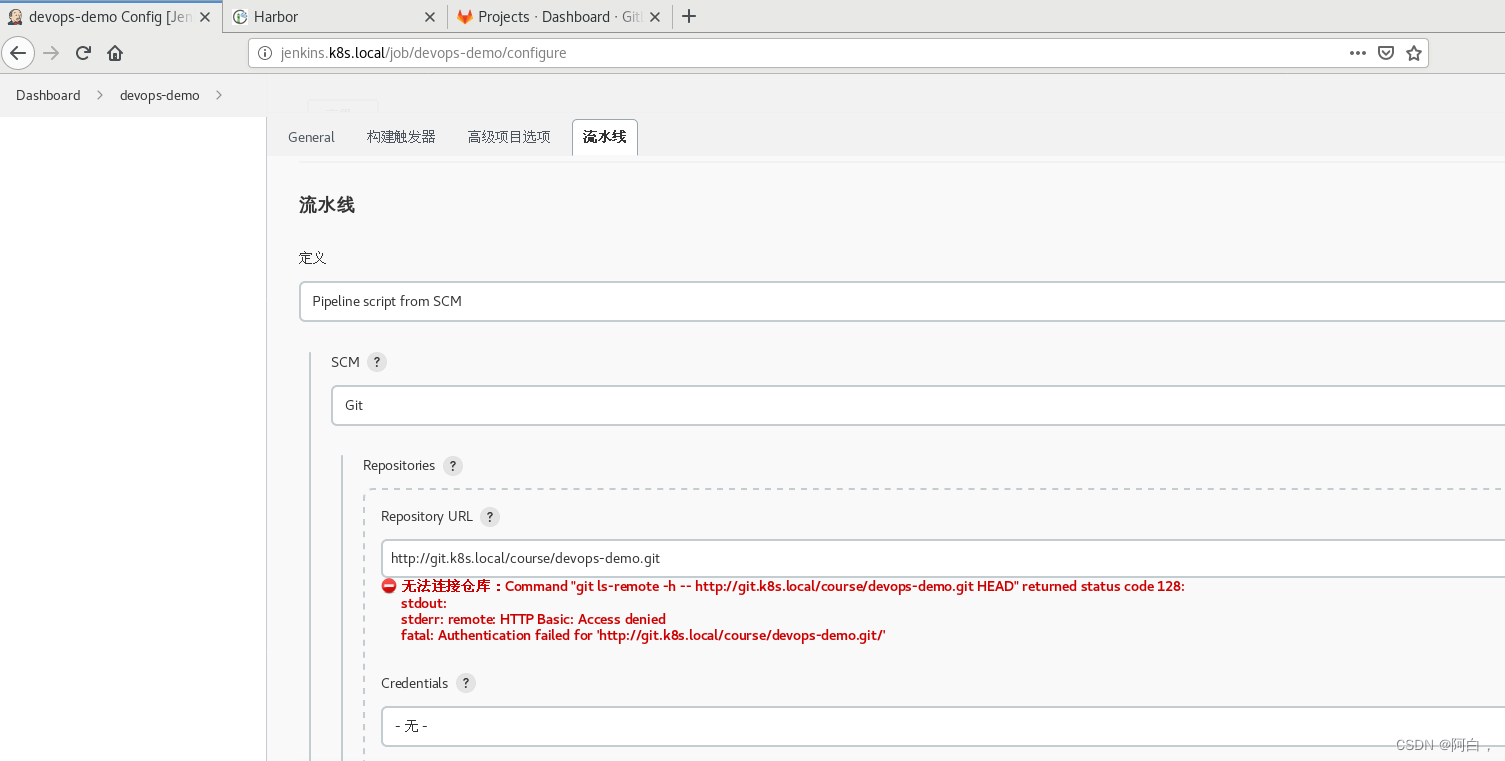

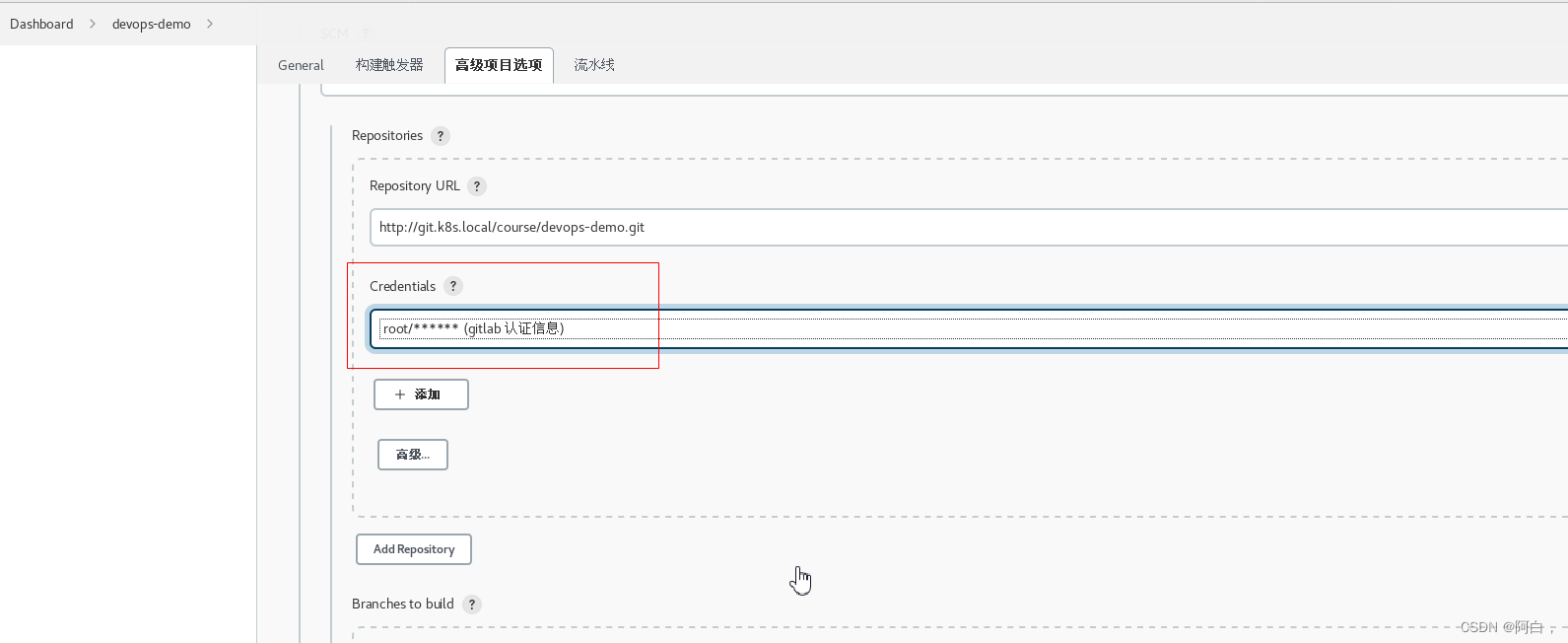

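In the Pipeline section below, we could select Pipeline script and test a pipeline script right there. Here we choose Pipeline script from SCM instead, which means the Pipeline script is read from the Jenkinsfile in the code repository. Select Git as the SCM and enter the repository URL http://git.k8s.local/course/devops-demo.git. Because the build runs in a Slave Pod, accessing the GitLab repository over SSH would require updating the SSH key constantly, so we authenticate with a username and password instead: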

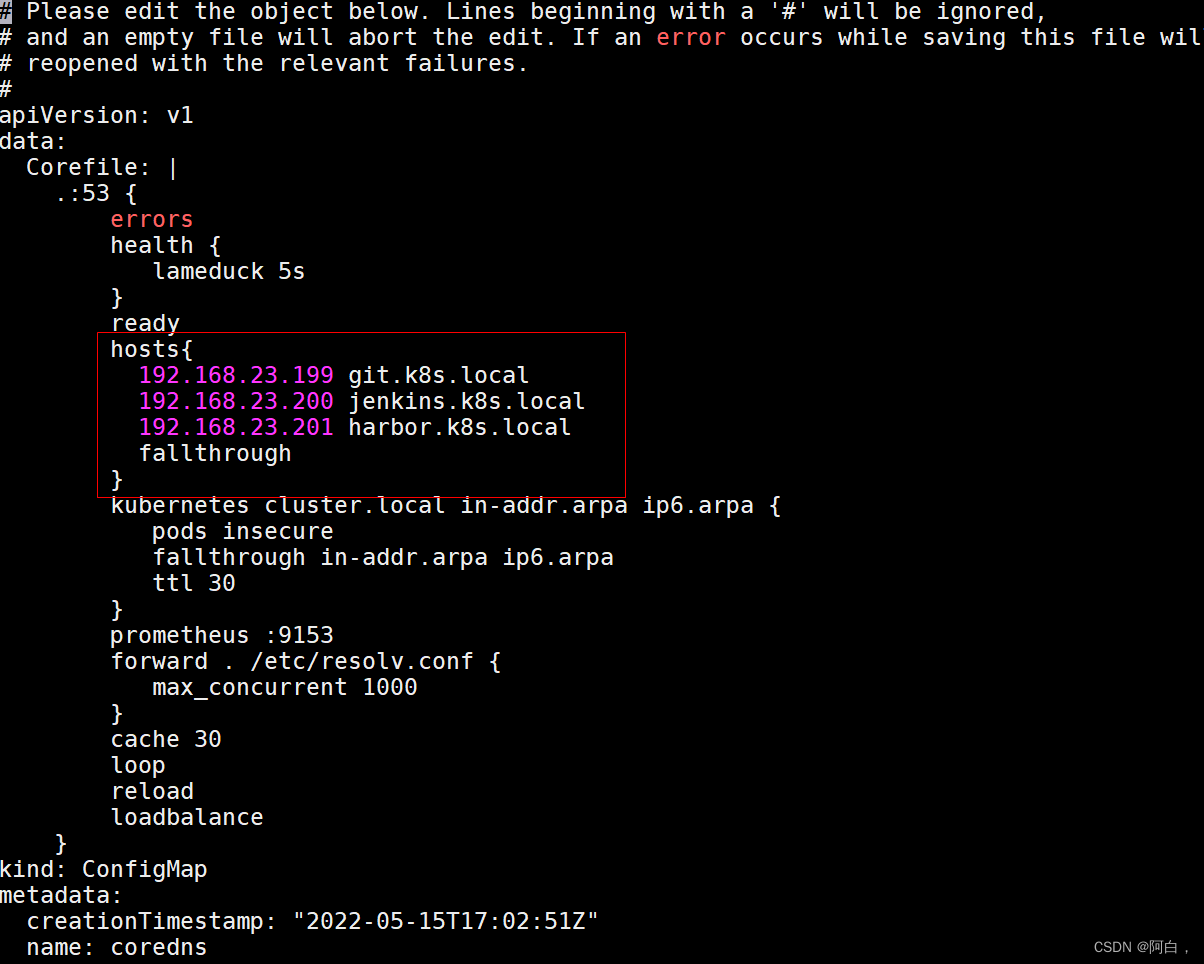

We can see an obvious error, Could not resolve host: git.k8s.local, meaning our GitLab domain cannot be resolved. This is because all our domains are custom ones. We can fix it by adding custom resolution entries to CoreDNS (if your domain resolves normally on the public network, you won't hit this problem):

```shell
kubectl edit cm coredns -n kube-system
```

```yaml
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        hosts {
           192.168.23.199 git.k8s.local
           192.168.23.199 jenkins.k8s.local
           192.168.23.199 harbor.k8s.local
           fallthrough
        }
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  creationTimestamp: "2022-05-15T17:02:51Z"
  name: coredns
```

Note that all three IPs must be 192.168.23.199: that is the IP of the node running the ingress I use to serve these three domains.
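When more domains are added later, the hosts block can be generated instead of typed by hand. The sketch below (coredns_hosts_block is a hypothetical helper) prints the block for one IP and any number of domains, ending with fallthrough so that all other names continue on to the kubernetes and forward plugins.

```shell
#!/bin/sh
# Print a CoreDNS `hosts` plugin block that maps every given domain to one IP.
# `fallthrough` passes names not listed here on to the next plugins.
coredns_hosts_block() {
    local ip="$1"
    shift
    echo "hosts {"
    for domain in "$@"; do
        printf '    %s %s\n' "$ip" "$domain"
    done
    echo "    fallthrough"
    echo "}"
}
```

Usage: coredns_hosts_block 192.168.23.199 git.k8s.local jenkins.k8s.local harbor.k8s.local, then paste the output inside the .:53 { ... } server block of the Corefile.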

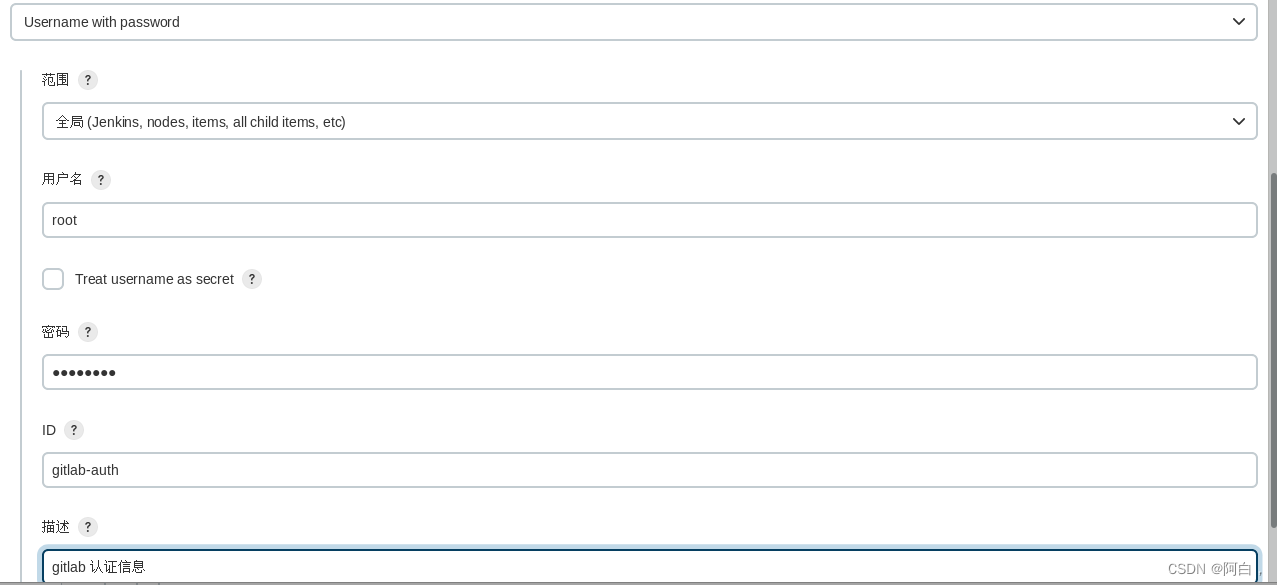

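After the change, CoreDNS reloads the configuration automatically within a short while (the ConfigMap is mounted into the CoreDNS pods as a volume, and the reload plugin picks up the change), and the custom domains then resolve inside the cluster. The checkout will then fail with a permission error, so we need to configure account credentials.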

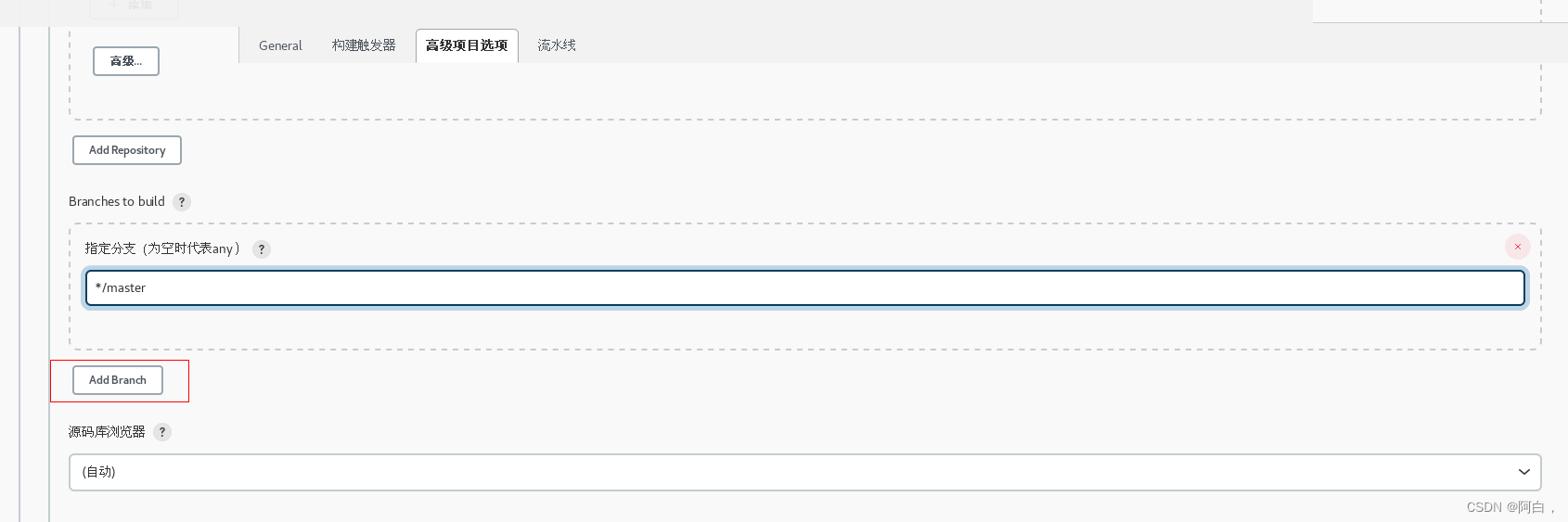

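Next, configure the branches to build. To build every branch, leave the Branch Specifier field empty. Usually, though, only the branches that correspond to environments, such as master, dev, and test, need building; there is no need to build everyday feature or bugfix branches frequently. Here we configure only the master branch.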

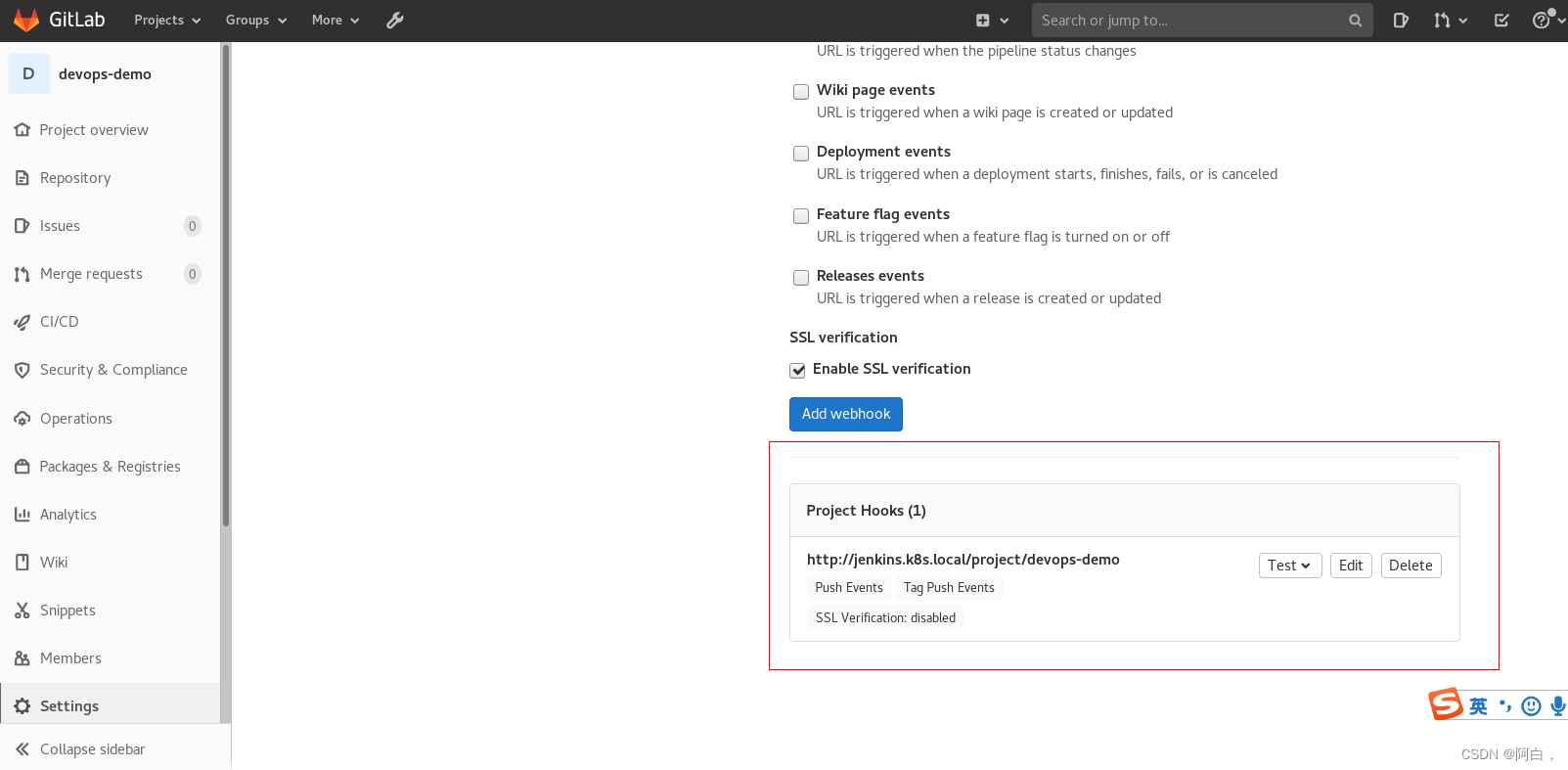

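Finally, click Save. Continuous integration is now configured on the Jenkins side; we still need a GitLab Webhook so that code pushes notify Jenkins. In GitLab, open the devops-demo project's Settings -> Webhooks and fill in the trigger URL obtained above:

(Configure this inside the project itself, i.e. the repository that this pipeline builds.)

Since our domains are all custom and no https service is configured, remember to uncheck Enable SSL verification below.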

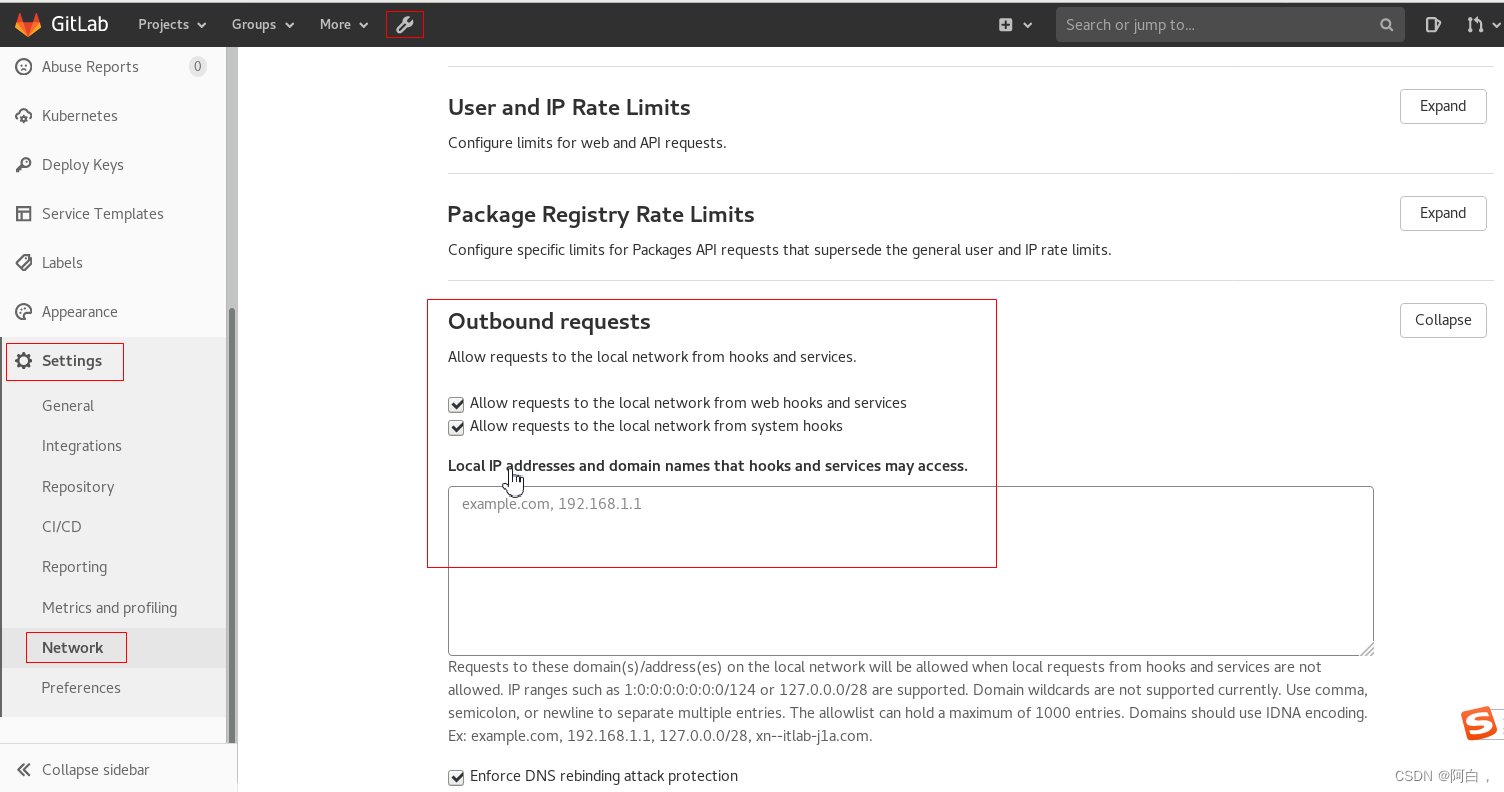

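If saving shows the warning Url is blocked: Requests to the local network are not allowed, go to GitLab Admin Area -> Settings -> Network -> Outbound requests, check the option that allows requests to the local network from web hooks and services, and save the configuration.

Now the Webhook can be saved (it is best to go through the Webhook configuration again). Click Test -> Push events to check whether the Webhook URL is reachable. Hook executed successfully: HTTP 200 means the Webhook is configured correctly; otherwise, check whether Jenkins' security settings are correct.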

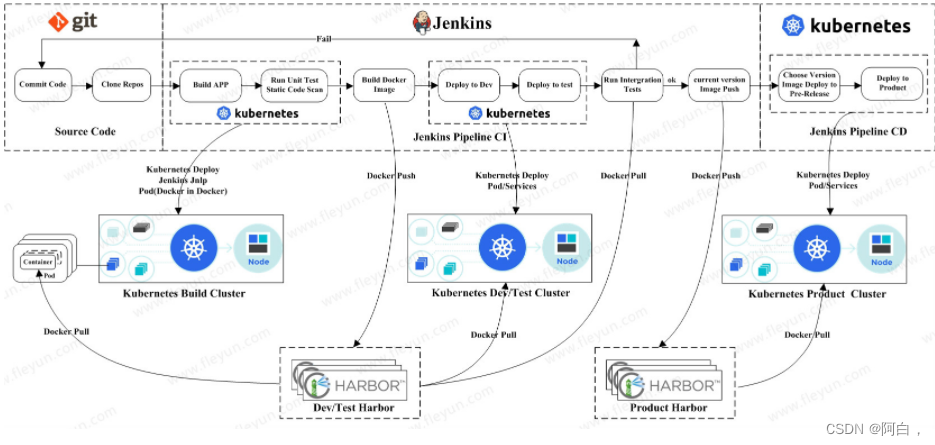

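Because the project has no Jenkinsfile yet, the triggered build fails. Next, add a Jenkinsfile to the root of the code repository (the job's Pipeline setting tells Jenkins to use the Jenkinsfile from the repository) to describe the pipeline. The overall flow is shown in the figure below:

Let's start with the simplest flow. Note how it differs from before: here we use podTemplate to define the containers used in the different stages. Which stages are there?

Clone code -> unit tests -> Golang compile and package -> Docker image build/push -> Kubectl deploy.

Cloning the code can happen in the default Slave container. We skip unit tests here; if you need that stage, add it yourself. Golang compile and package obviously needs a Golang container, Docker image build/push needs a Docker environment, and the final Kubectl update needs a container with kubectl. So we can define podTemplate quite simply, as follows: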

```groovy
def label = "slave-${UUID.randomUUID().toString()}"

podTemplate(label: label, containers: [
    containerTemplate(name: 'golang', image: 'golang:1.14.2-alpine3.11', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'docker', image: 'docker:latest', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'kubectl', image: 'cnych/kubectl', command: 'cat', ttyEnabled: true)
], serviceAccount: 'jenkins', volumes: [
    hostPathVolume(mountPath: '/home/jenkins/.kube', hostPath: '/root/.kube'),
    hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock')
]) {
    node(label) {
        def myRepo = checkout scm
        def gitCommit = myRepo.GIT_COMMIT
        def gitBranch = myRepo.GIT_BRANCH

        stage('Unit Test') {
            echo "Test stage"
        }
        stage('Compile and Package') {
            container('golang') {
                echo "Compile and package stage"
            }
        }
        stage('Build Docker Image') {
            container('docker') {
                echo "Build Docker image stage"
            }
        }
        stage('Run Kubectl') {
            container('kubectl') {
                echo "List the Pods in the K8S cluster"
                sh "kubectl get pods"
            }
        }
    }
}
```

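We define the containers each stage needs directly in podTemplate and mount the docker.sock file we need through volumes. Note that the label is randomly generated; the advantage is that when several jobs arrive, they can build concurrently. Normally we would also copy the kubeconfig file used to access the cluster to ~/.kube/config in the kubectl container so that it can reach the Kubernetes cluster (the hostPath volume mounting the node's /root/.kube above does this). However, the build runs in a Slave Pod that may be scheduled onto a different node each time, so that mount only works if every node has a kubeconfig file. We therefore use a different approach here.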

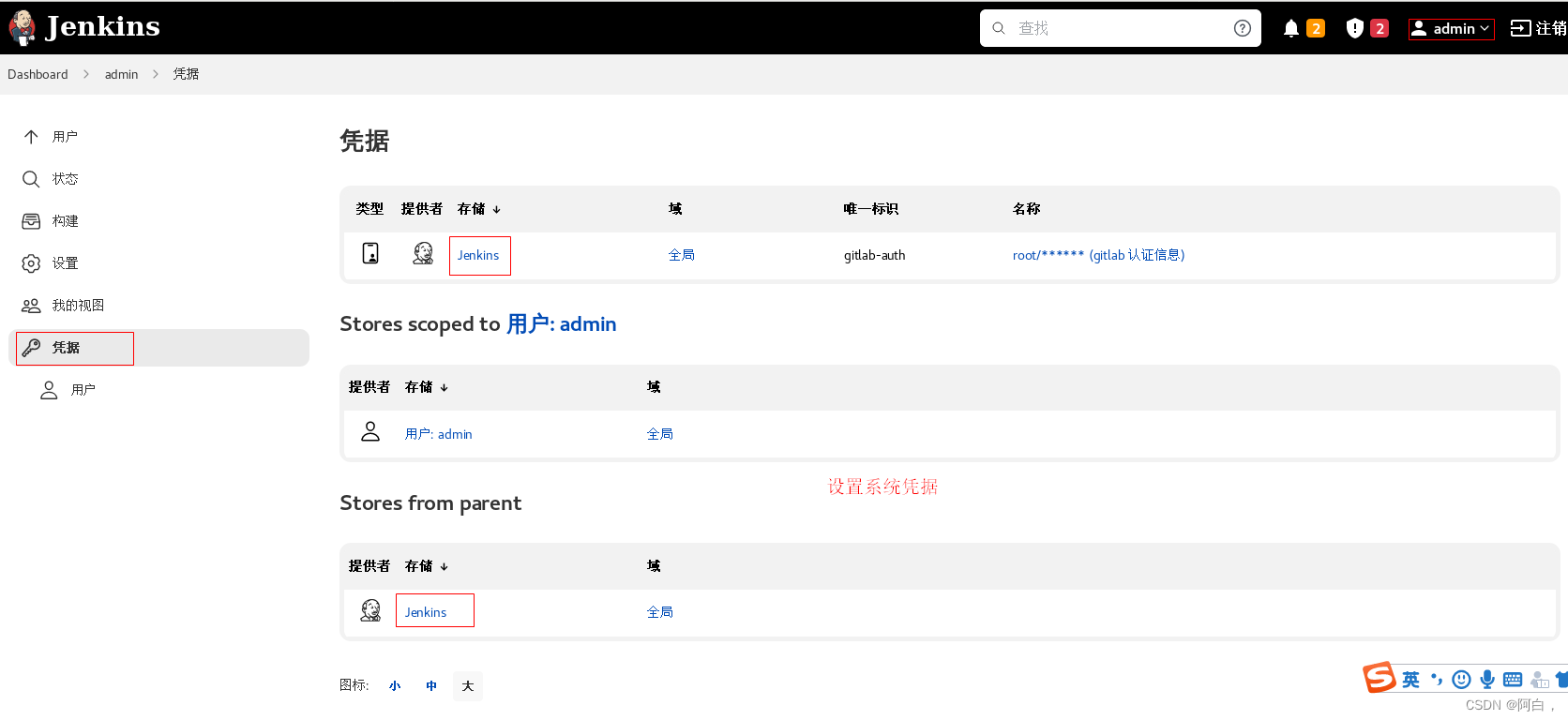

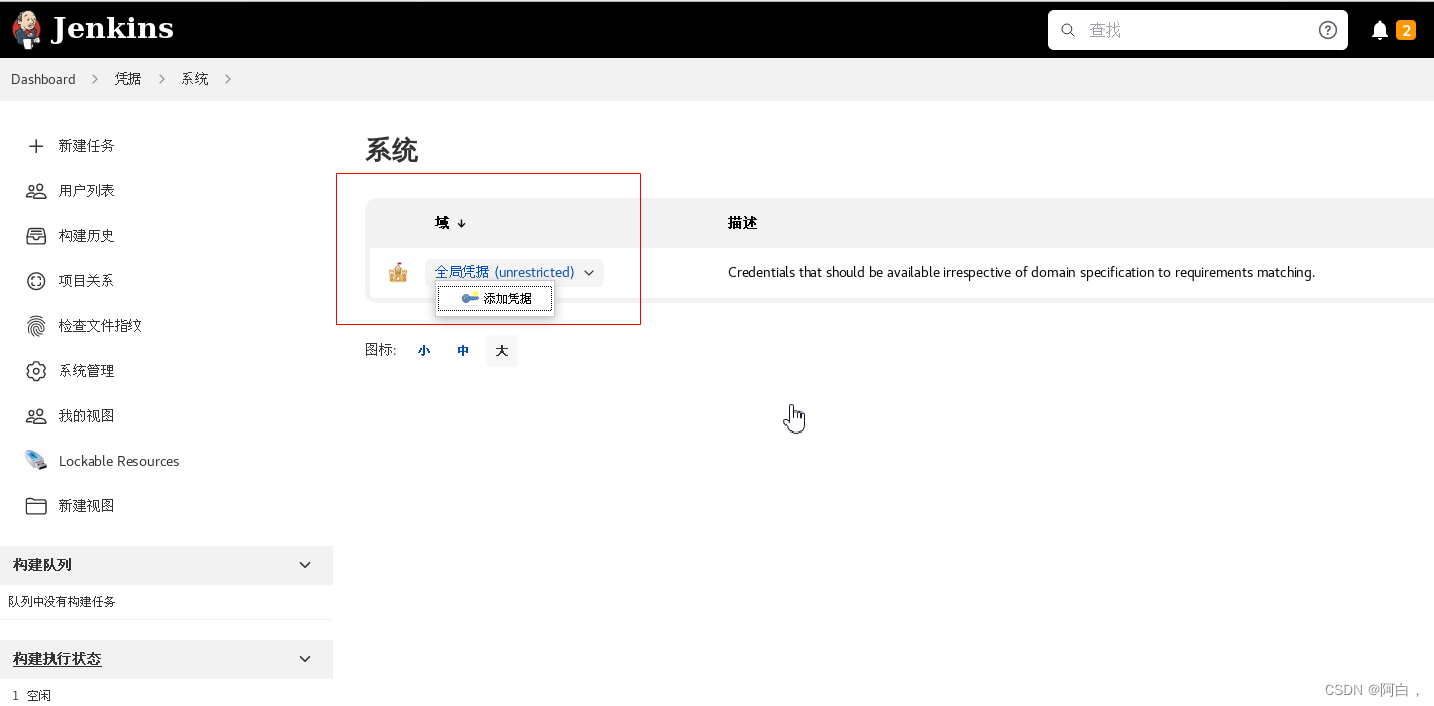

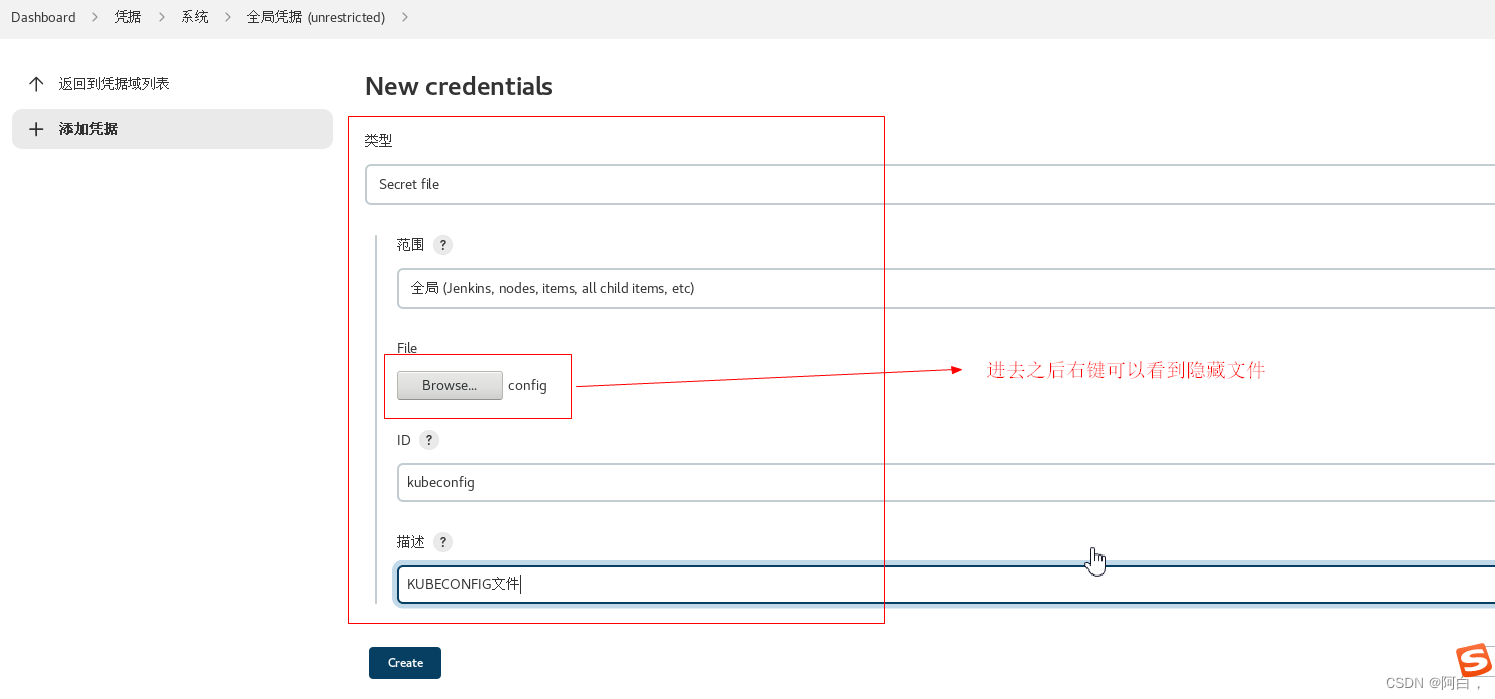

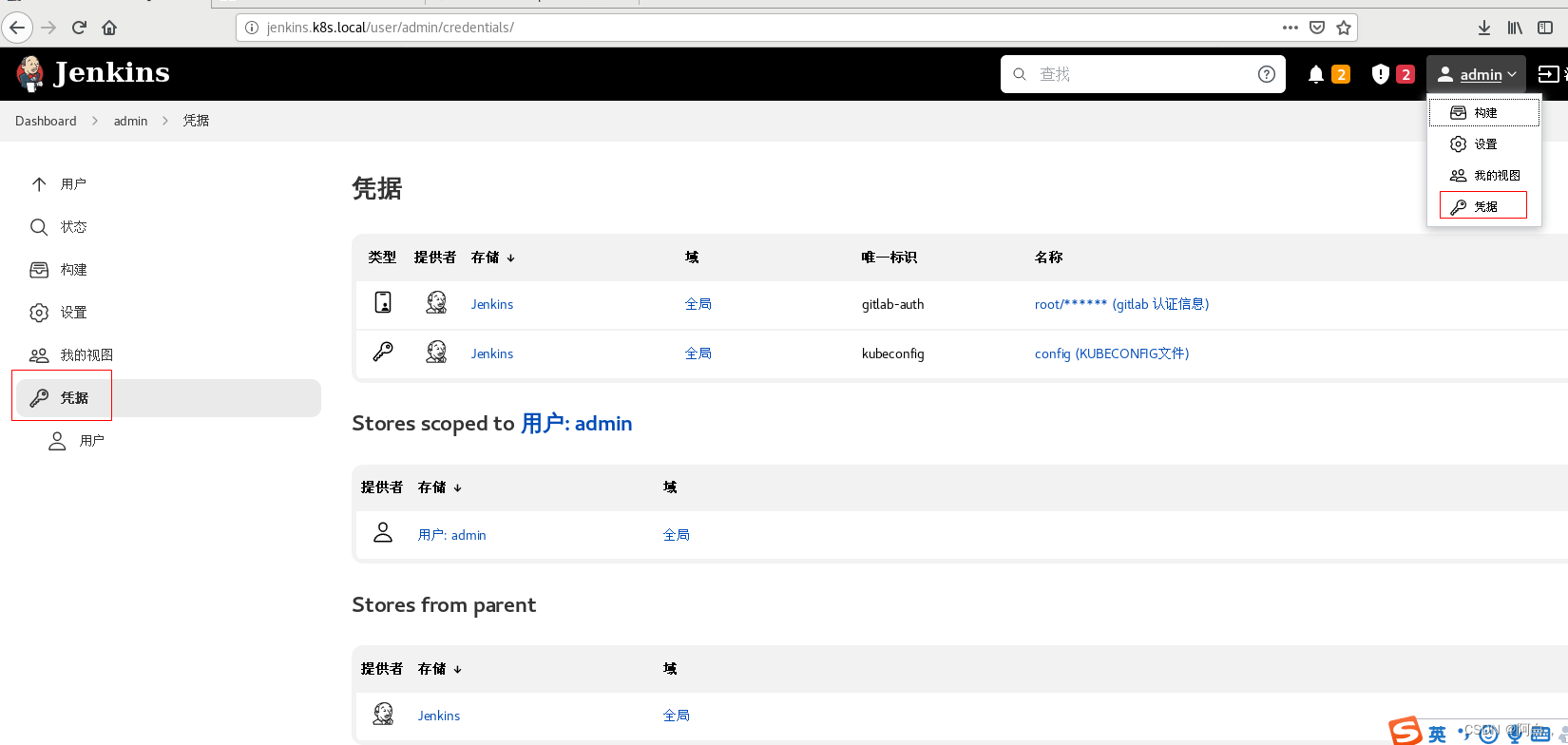

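We upload the kubeconfig file to Jenkins as a credential; the Jenkinsfile reads this file and copies it to ~/.kube/config in the kubectl container, after which kubectl can access the cluster as usual. In the Jenkins UI, add a credential of type Secret file, upload the kubeconfig file, and specify an ID:

The uploaded file's name does not matter, only its content: for example, you can copy the content of ~/.kube/config into any file and upload that.

Then, in the kubectl container in the Jenkinsfile, read the Secret file credential added above and copy it to ~/.kube/config: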

```groovy
stage('Run Kubectl') {
    container('kubectl') {
        withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
            echo "List the Pods in the K8S cluster"
            sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
            sh "kubectl get pods"
        }
    }
}
```
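Inside withCredentials, Jenkins exposes the uploaded Secret file as a temporary file whose path is in $KUBECONFIG. The copy step can be wrapped in a small shell function that also restricts the file to its owner, as sketched below (install_kubeconfig is a hypothetical helper). Since kubectl and Helm 3 also read the KUBECONFIG environment variable directly, relying on that variable without copying should work too, although the pipeline here copies the file.

```shell
#!/bin/sh
# Copy a kubeconfig file to <dir>/config (default dir: ~/.kube), readable by the owner only.
# In the pipeline, the source would be the temporary file path withCredentials puts in $KUBECONFIG.
install_kubeconfig() {
    local src="$1"
    local dir="${2:-$HOME/.kube}"
    mkdir -p "$dir" && install -m 600 "$src" "$dir/config"
}
```

Usage inside an sh step: install_kubeconfig "$KUBECONFIG"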

Jenkinsfile:

```groovy
def label = "slave-${UUID.randomUUID().toString()}"

podTemplate(label: label, containers: [
    containerTemplate(name: 'golang', image: 'golang:1.14.2-alpine3.11', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'docker', image: 'docker:latest', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'kubectl', image: 'cnych/kubectl', command: 'cat', ttyEnabled: true)
], serviceAccount: 'jenkins', volumes: [
    hostPathVolume(mountPath: '/home/jenkins/.kube', hostPath: '/root/.kube'),
    hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock')
]) {
    node(label) {
        def myRepo = checkout scm
        def gitCommit = myRepo.GIT_COMMIT
        def gitBranch = myRepo.GIT_BRANCH

        stage('Unit Test') {
            echo "Test stage"
        }
        stage('Compile and Package') {
            container('golang') {
                echo "Compile and package stage"
            }
        }
        stage('Build Docker Image') {
            container('docker') {
                echo "Build Docker image stage"
            }
        }
        stage('Run Kubectl') {
            container('kubectl') {
                withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
                    echo "List the Pods in the K8S cluster"
                    sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
                    sh "kubectl get pods"
                }
            }
        }
    }
}
```

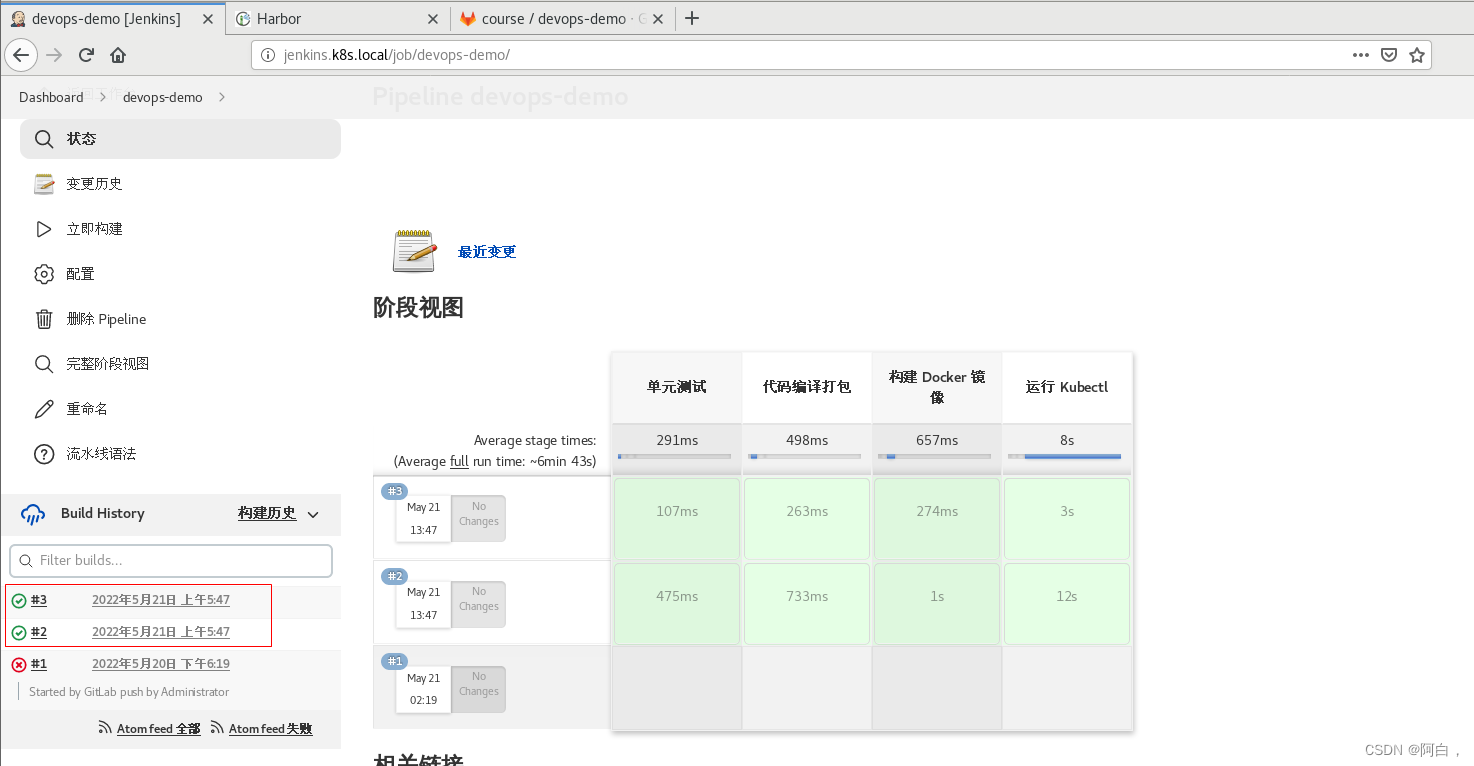

Now commit the Jenkinsfile to the GitLab repository, which should normally trigger a Jenkins build (if no build is triggered, start one manually from the devops-demo job):

```shell
[root@master1 ~]# kubectl get pod -n kube-ops
NAME READY STATUS RESTARTS AGE
gitlab-68d5dd6bf6-mmxmx 1/1 Running 0 12m
harbor-harbor-chartmuseum-5b967fdc48-cs58w 1/1 Running 0 43h
harbor-harbor-core-9fb6f5cfb-2hgx5 1/1 Running 2 43h
harbor-harbor-jobservice-784c6df67f-67ksg 1/1 Running 0 43h
harbor-harbor-notary-server-84446644c7-5dh7n 1/1 Running 0 43h
harbor-harbor-notary-signer-d44bbdd7-q7ssq 1/1 Running 0 43h
harbor-harbor-portal-559c4d4bfd-qq2bb 1/1 Running 0 43h
harbor-harbor-registry-cd8784bdd-plpds 2/2 Running 0 43h
harbor-harbor-trivy-0 1/1 Running 6 41h
jenkins-86f6848b45-tq9hx 1/1 Running 0 5d
postgresql-566846fd86-vbhbd 1/1 Running 0 3d16h
redis-8cc6f6d9d-xqztk 1/1 Running 0 3d16h
slave-7c8f5d23-fcb0-4a75-a209-12b62186f717-47m12-sqdlm 0/4 ContainerCreating 0 1s
slave-83bc6992-b70e-421e-bfa7-9492ad505395-xkhpk-01sp8 0/4 ContainerCreating 0 1s
```

We can see that the generated slave Pod contains 4 containers (4 images): the ones we specified in podTemplate plus the slave (jnlp) image. Once the run finishes, the Pod is destroyed automatically.

So each container here corresponds to one small application, all inside a single pod, and that one pod is a complete application. Following microservice thinking, it would actually be better to split this application into 4 independent application pods. This is the simplest way to deploy a cloud-native application, and the basic Jenkins pipeline + GitLab + Kubernetes workflow.

```shell
[root@master1 ~]# kubectl get pod -n kube-ops
NAME READY STATUS RESTARTS AGE
gitlab-68d5dd6bf6-mmxmx 1/1 Running 0 18m
harbor-harbor-chartmuseum-5b967fdc48-cs58w 1/1 Running 0 43h
harbor-harbor-core-9fb6f5cfb-2hgx5 1/1 Running 2 43h
harbor-harbor-jobservice-784c6df67f-67ksg 1/1 Running 0 43h
harbor-harbor-notary-server-84446644c7-5dh7n 1/1 Running 0 43h
harbor-harbor-notary-signer-d44bbdd7-q7ssq 1/1 Running 0 43h
harbor-harbor-portal-559c4d4bfd-qq2bb 1/1 Running 0 43h
harbor-harbor-registry-cd8784bdd-plpds 2/2 Running 0 43h
harbor-harbor-trivy-0 1/1 Running 6 41h
jenkins-86f6848b45-tq9hx 1/1 Running 0 5d
postgresql-566846fd86-vbhbd 1/1 Running 0 3d16h
redis-8cc6f6d9d-xqztk 1/1 Running 0 3d16h
slave-7c8f5d23-fcb0-4a75-a209-12b62186f717-47m12-sqdlm 4/4 Terminating 0 6m31s
slave-83bc6992-b70e-421e-bfa7-9492ad505395-xkhpk-s0xxs 4/4 Running 0 46s
```

The events of the first slave pod (as shown by kubectl describe pod):

```
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal Scheduled 6m26s default-scheduler Successfully assigned kube-ops/slave-7c8f5d23-fcb0-4a75-a209-12b62186f717-47m12-sqdlm to node2
  Normal Pulling 6m24s kubelet Pulling image "golang:1.14.2-alpine3.11"
  Normal Pulled 4m57s kubelet Successfully pulled image "golang:1.14.2-alpine3.11" in 1m26.792061603s
  Normal Created 4m54s kubelet Created container golang
  Normal Started 4m54s kubelet Started container golang
  Normal Pulling 4m54s kubelet Pulling image "cnych/kubectl"
  Normal Pulled 4m9s kubelet Successfully pulled image "cnych/kubectl" in 44.912803509s
  Normal Created 4m9s kubelet Created container kubectl
  Normal Started 4m8s kubelet Started container kubectl
  Normal Pulling 4m8s kubelet Pulling image "docker:latest"
  Normal Pulled 3m11s kubelet Successfully pulled image "docker:latest" in 57.520470092s
  Normal Created 3m10s kubelet Created container docker
  Normal Started 3m9s kubelet Started container docker
  Normal Pulling 3m9s kubelet Pulling image "jenkins/inbound-agent:4.11-1-jdk11"
  Normal Pulled 77s kubelet Successfully pulled image "jenkins/inbound-agent:4.11-1-jdk11" in 1m52.106352015s
  Normal Created 76s kubelet Created container jnlp
  Normal Started 76s kubelet Started container jnlp
  Normal Killing 11s kubelet Stopping container golang
  Normal Killing 11s kubelet Stopping container jnlp
  Normal Killing 11s kubelet Stopping container docker
  Normal Killing 11s kubelet Stopping container kubectl
```

I triggered two builds here, which is why there are two slave pods.

Pipeline

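Next, let's implement the actual pipeline.

Stage 1: unit tests. This stage can run unit tests or static code analysis scripts; we skip it here.

Stage 2: compile and package. This stage runs in the golang container: we just need the code inside that container and then run the build command in the code directory (go build produces a single executable), as follows: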

```groovy
stage('Compile and Package') {
    try {
        container('golang') {
            echo "2. Compile and package stage"
            sh """
                export GOPROXY=https://goproxy.cn
                GOOS=linux GOARCH=amd64 go build -v -o demo-app
            """
        }
    } catch (exc) {
        println "Build failed - ${currentBuild.fullDisplayName}"
        throw(exc)
    }
}
```

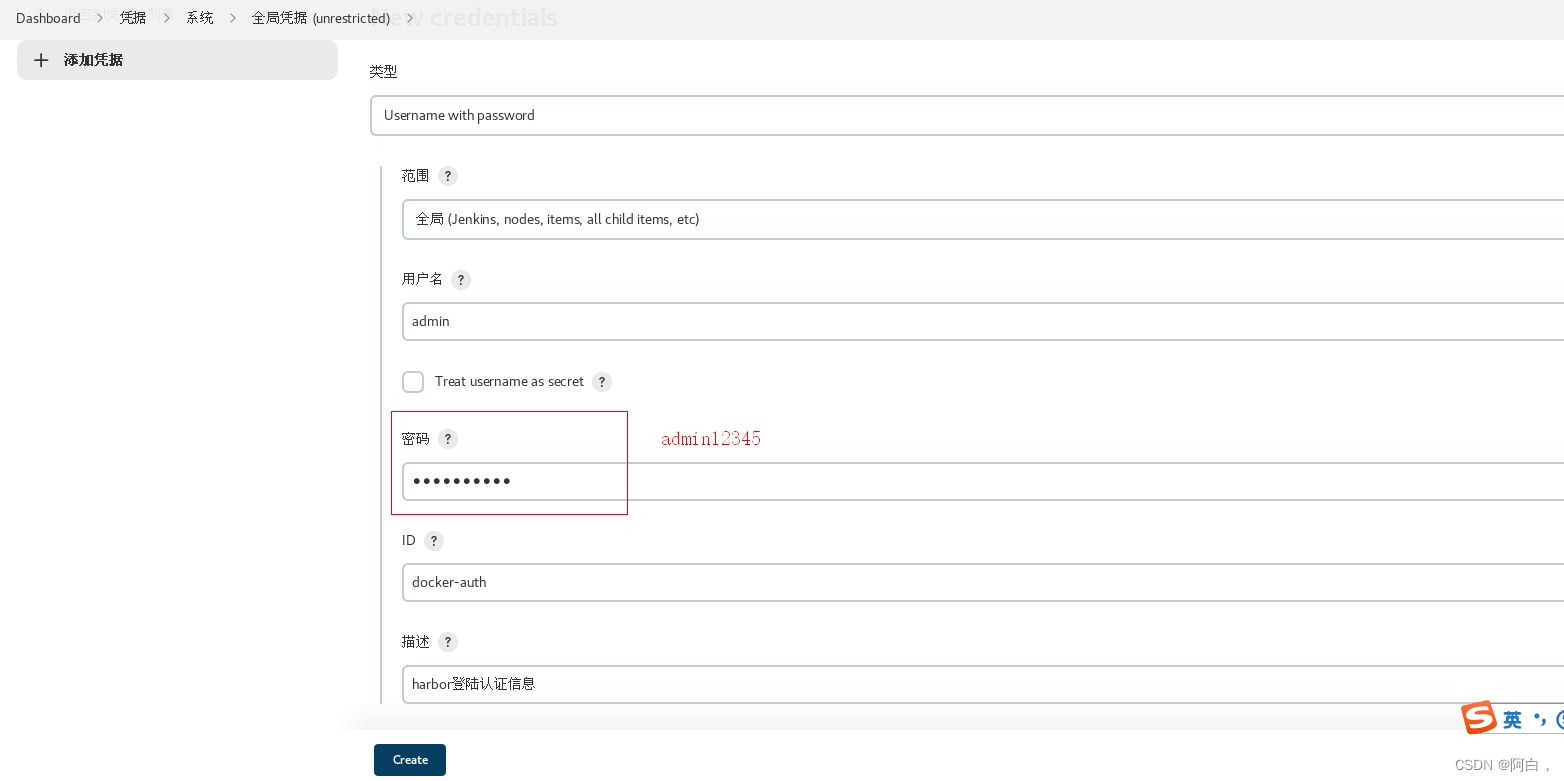

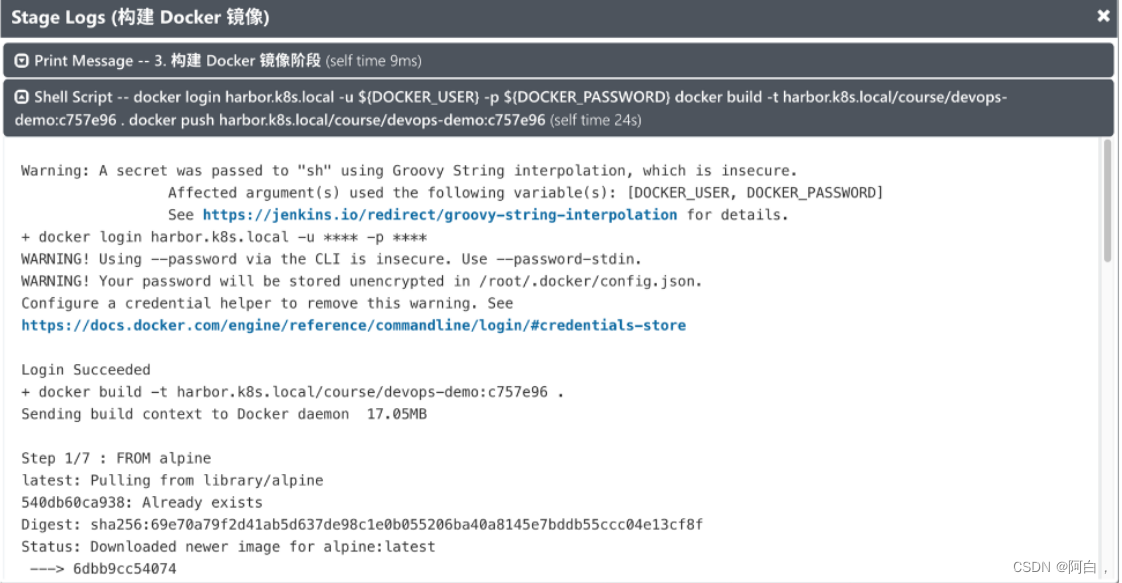

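Stage 3: build the Docker image. Building an image requires an image name and tag, and pushing it to Harbor requires a login username and password, so we use withCredentials, which provides the credential whose credentialsId is docker-auth, as follows:

```groovy
stage('Build Docker Image') {
    withCredentials([[$class: 'UsernamePasswordMultiBinding',
        credentialsId: 'docker-auth',
        usernameVariable: 'DOCKER_USER',
        passwordVariable: 'DOCKER_PASSWORD']]) {
        container('docker') {
            echo "3. Build Docker image stage"
            // \$ leaves the credential variables for the shell to expand, and
            // --password-stdin keeps the password out of the command-line arguments
            sh """
                echo "\$DOCKER_PASSWORD" | docker login ${registryUrl} -u "\$DOCKER_USER" --password-stdin
                docker build -t ${image} .
                docker push ${image}
            """
        }
    }
}
```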

${image} and ${imageTag} (together with registryUrl) can be defined earlier in the script as global variables:

```groovy
// Use the git commit id as the image tag
def imageTag = sh(script: "git rev-parse --short HEAD", returnStdout: true).trim()
// Registry address
def registryUrl = "harbor.k8s.local"
def imageEndpoint = "course/devops-demo"
// Image
def image = "${registryUrl}/${imageEndpoint}:${imageTag}"
```
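The same tag and image naming can be reproduced in a plain shell, which is handy for checking locally what a build would push. This is a sketch: image_tag and image_ref are hypothetical helpers, and the timestamp fallback outside a git checkout is an addition of this example that the Jenkinsfile does not have.

```shell
#!/bin/sh
# Image tag: the short commit id of HEAD, like `git rev-parse --short HEAD` in the Jenkinsfile.
# Outside a git checkout (or without git) it falls back to a UTC timestamp.
image_tag() {
    git rev-parse --short HEAD 2>/dev/null || date -u +%Y%m%d%H%M%S
}

# Full image reference: <registry>/<endpoint>:<tag>
image_ref() {
    printf '%s/%s:%s\n' "$1" "$2" "$3"
}
```

Usage: image_ref harbor.k8s.local course/devops-demo "$(image_tag)"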

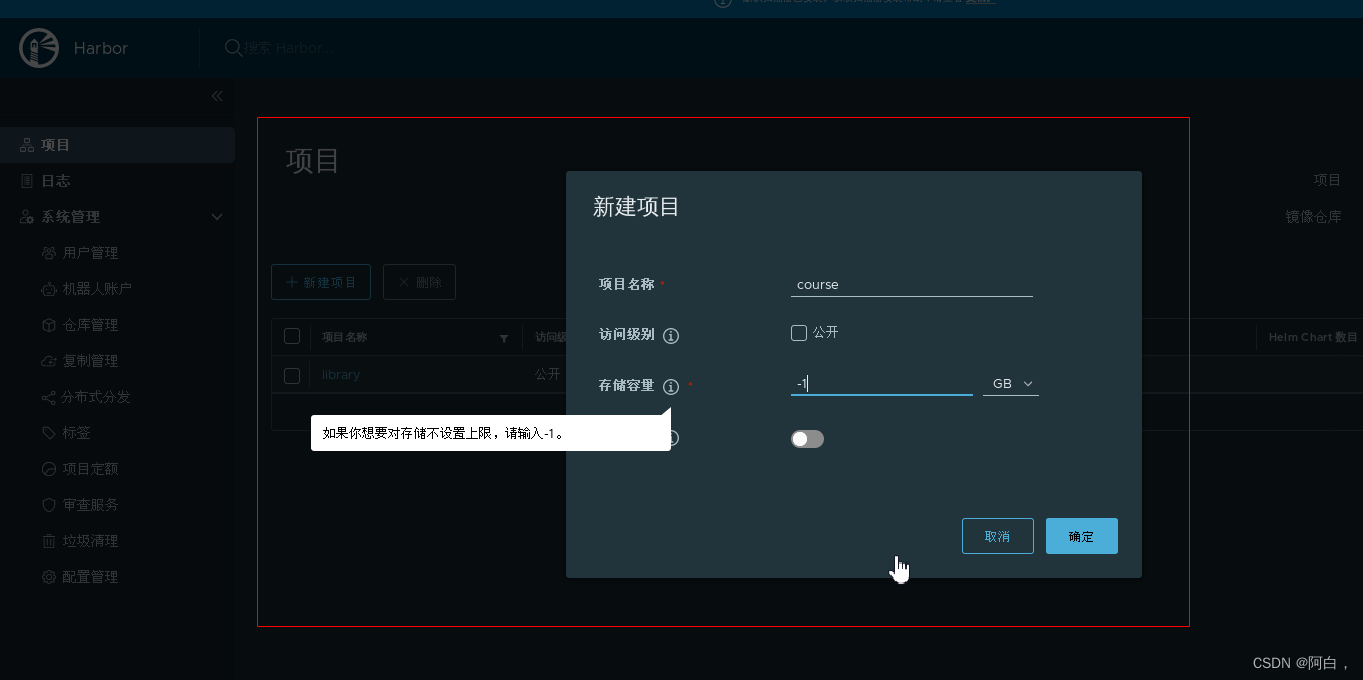

The image name defined here is course/devops-demo, so we need to create a private project named course in Harbor beforehand:

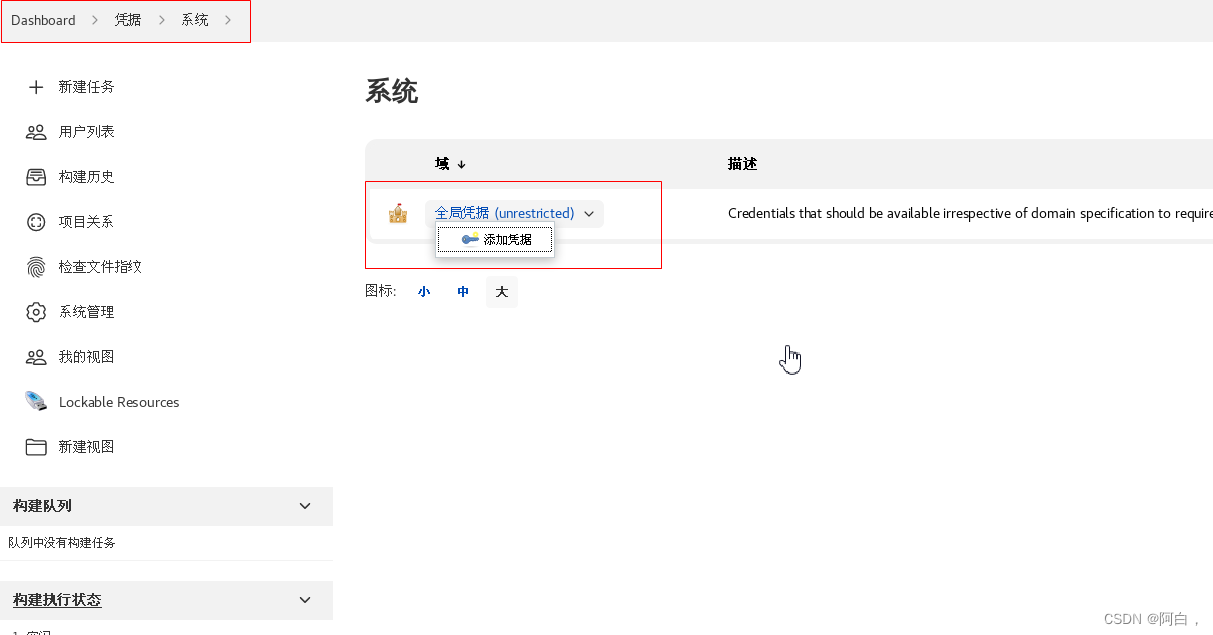

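The Docker username and password are added as a credential: Jenkins home page -> Credentials in the left menu -> Add Credentials. Choose the Username with password type, and make sure the ID matches the credentialsId above:

The password here is the one you use to log in to Harbor (Harbor12345 in this setup).

Note, however, that we build Docker images by mounting the host's docker.sock file into the container so that it shares the host's Docker daemon. This is often called Docker in Docker, although strictly speaking it is Docker outside of Docker: the images are built by the node's own daemon. So we also need to configure the nodes in advance to trust the Harbor registry:

(Configure this on whichever node the docker container runs on; you can also simply configure all nodes.)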

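```shell
cat /etc/docker/daemon.json
```

```json
{
  "insecure-registries" : [
    "harbor.k8s.local"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors" : [
    "https://xxx.mirror.aliyuncs.com"
  ]
}
```

The insecure-registries entry makes Docker skip certificate verification for the Harbor registry. daemon.json is plain JSON and does not allow comments, so do not annotate the entry with a # comment, or Docker will fail to start. Then reload and restart Docker:

```shell
systemctl daemon-reload
systemctl restart docker
```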
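Because daemon.json must be strict JSON, generating the insecure-registries entry instead of hand-editing it is one way to avoid syntax mistakes. The hypothetical helper below prints a daemon.json containing only that key; merging it with the other settings shown above is left to you.

```shell
#!/bin/sh
# Print a daemon.json holding only an "insecure-registries" list built from the arguments.
# JSON allows no comments, so any explanation has to stay outside the file.
insecure_registries_json() {
    local sep=""
    local reg
    printf '{\n  "insecure-registries": ['
    for reg in "$@"; do
        printf '%s"%s"' "$sep" "$reg"
        sep=", "
    done
    printf ']\n}\n'
}
```

Usage: insecure_registries_json harbor.k8s.local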

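Once the configuration takes effect, Docker commands work normally in the pipeline; otherwise you get an error like the one shown below:

(If you hit this error, check the credential (I created it as a System-scoped credential), or simply retry. You can also test with docker login on the command line.)

Now the image has been pushed to the Harbor registry, and we can deploy the application to the Kubernetes cluster. We could of course deploy by applying YAML files with kubectl, but this example includes a Helm Chart, so we can deploy with Helm instead. That requires a container with the helm command; here we use the cnych/helm image. This image is very simple: it just downloads the helm binary and puts it on the PATH. Its Dockerfile is shown below, and you can customize your own image as needed: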

```dockerfile
FROM alpine
MAINTAINER cnych <[email protected]>
ARG HELM_VERSION="v3.2.1"
RUN apk add --update ca-certificates \
 && apk add --update -t deps wget git openssl bash \
 && wget https://get.helm.sh/helm-${HELM_VERSION}-linux-amd64.tar.gz \
 && tar -xvf helm-${HELM_VERSION}-linux-amd64.tar.gz \
 && mv linux-amd64/helm /usr/local/bin \
 && apk del --purge deps \
 && rm /var/cache/apk/* \
 && rm -f /helm-${HELM_VERSION}-linux-amd64.tar.gz
ENTRYPOINT ["helm"]
CMD ["help"]
```
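The Dockerfile hard-codes the linux-amd64 tarball. If you need a different version or architecture, the download URL follows the pattern used above; the helper below (hypothetical name) builds it, with linux and amd64 as defaults.

```shell
#!/bin/sh
# Helm release tarball URL: https://get.helm.sh/helm-<version>-<os>-<arch>.tar.gz
helm_download_url() {
    local version="$1"
    local os="${2:-linux}"
    local arch="${3:-amd64}"
    printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$version" "$os" "$arch"
}
```

Since HELM_VERSION is an ARG, a different version can also be chosen at build time, e.g. docker build --build-arg HELM_VERSION=v3.2.1 -t my-registry/helm . (the image name here is only an example).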

We use Helm 3 here, so to deploy the application with Helm we likewise need a kubeconfig file in the container to access the Kubernetes cluster. We can therefore change the Run Kubectl stage as follows:

```groovy
stage('Run Helm') {
    withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
        container('helm') {
            sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
            echo "4. Start Helm deployment"
            helmDeploy(
                debug     : false,
                name      : "devops-demo",
                chartDir  : "./helm",
                namespace : "kube-ops",
                valuePath : "./helm/my-values.yaml",
                imageTag  : "${imageTag}"
            )
            echo "[INFO] Helm deployment succeeded..."
        }
    }
}
```

The helmDeploy method can be defined and wrapped at the top level:

```groovy
def helmLint(String chartDir) {
    println "Linting the chart templates"
    sh "helm lint ${chartDir}"
}

def helmDeploy(Map args) {
    if (args.debug) {
        println "Debug the application"
        sh "helm upgrade --dry-run --debug --install ${args.name} ${args.chartDir} -f ${args.valuePath} --set image.tag=${args.imageTag} --namespace ${args.namespace}"
    } else {
        println "Deploying the application"
        sh "helm upgrade --install ${args.name} ${args.chartDir} -f ${args.valuePath} --set image.tag=${args.imageTag} --namespace ${args.namespace}"
        echo "Application ${args.name} deployed successfully. Use helm status ${args.name} to check its status"
    }
}
```
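To check what helmDeploy will run before wiring it into Jenkins, the same command line can be assembled in a shell function. This is a hypothetical shell counterpart, not part of the Jenkinsfile; it prints the command rather than running it, so it can be reviewed first.

```shell
#!/bin/sh
# Print the helm command that helmDeploy() builds.
# Arguments: name chartDir valuePath imageTag namespace [debug]
helm_deploy_cmd() {
    local name="$1" chart="$2" values="$3" tag="$4" ns="$5" debug="${6:-false}"
    local flags="--install"
    if [ "$debug" = "true" ]; then
        # Same extra flags as the debug branch: render and validate without installing
        flags="--dry-run --debug --install"
    fi
    printf 'helm upgrade %s %s %s -f %s --set image.tag=%s --namespace %s\n' \
        "$flags" "$name" "$chart" "$values" "$tag" "$ns"
}
```

Usage: helm_deploy_cmd devops-demo ./helm ./helm/my-values.yaml "$(git rev-parse --short HEAD)" kube-ops true, then run the printed command (e.g. by piping it to sh) once it looks right.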

The Chart defines a Values file named my-values.yaml that overrides the defaults. For example, since we pull the image from the private Harbor registry, imagePullSecrets must be defined, so we need to create a Secret object with the Harbor login credentials in the target namespace:

```shell
[root@node2 drone-k8s-demo]# kubectl create secret docker-registry harbor-auth --docker-server=harbor.k8s.local --docker-username=admin --docker-password=Harbor12345 [email protected] --namespace kube-ops
secret/harbor-auth created
```

Since the image tag changes with every build, we set it dynamically with --set.

Remember to add the helm container to the container templates above, though:

```groovy
containerTemplate(name: 'helm', image: 'cnych/helm', command: 'cat', ttyEnabled: true)
```

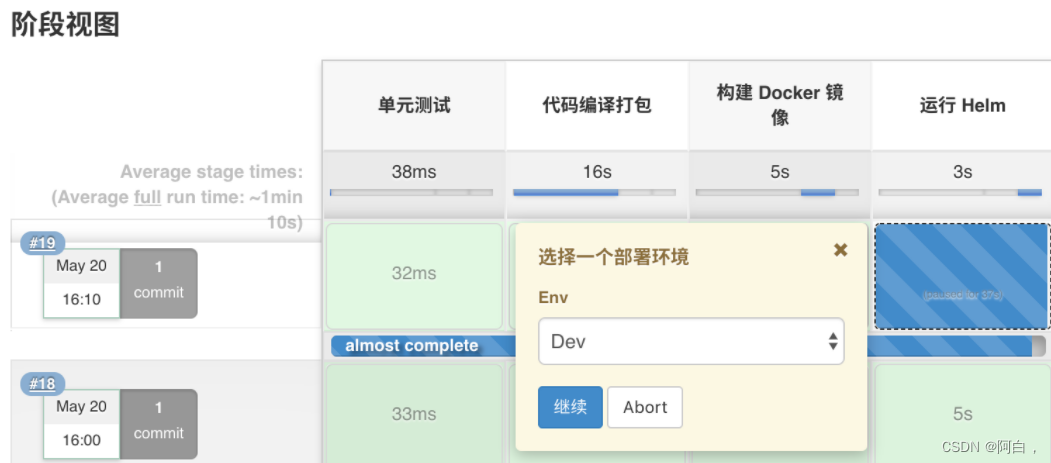

For different environments we can use different values files, so that at deploy time we can manually choose which environment to deploy to.

```groovy
def userInput = input(
    id: 'userInput',
    message: 'Choose a deployment environment',
    parameters: [
        [
            $class: 'ChoiceParameterDefinition',
            choices: "Dev\nQA\nProd",
            name: 'Env'
        ]
    ]
)
echo "Deploying the application to the ${userInput} environment"
// Pick the values file for the chosen environment
if (userInput == "Dev") {
    // deploy dev stuff
} else if (userInput == "QA"){
    // deploy qa stuff
} else {
    // deploy prod stuff
}
// Then deploy with Helm using that values file
```
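The if/else stub leaves the per-environment values files open. One way to fill it in is a plain lookup from the chosen environment to a values file and namespace, sketched here in shell. The file names values-dev.yaml, values-qa.yaml, values-prod.yaml and the namespaces dev, qa, prod are hypothetical; the demo chart only ships my-values.yaml.

```shell
#!/bin/sh
# Map the environment chosen in the input step to "<values file> <namespace>".
# File names and namespaces are placeholders for this sketch.
values_for_env() {
    case "$1" in
        Dev)  echo "./helm/values-dev.yaml dev" ;;
        QA)   echo "./helm/values-qa.yaml qa" ;;
        Prod) echo "./helm/values-prod.yaml prod" ;;
        *)    echo "unknown environment: $1" >&2; return 1 ;;
    esac
}
```

Unlike the else branch above, which treats any unexpected value as Prod, this lookup fails on unknown input, so a typo can never end up deploying to production.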

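When the application is built, the Stage View pauses at the Helm deployment stage and waits for us to choose an environment:

Once we choose, the application is deployed. Finally, we can also add a stage in the kubectl container to view the application's resources:

```groovy
stage('Run Kubectl') {
    withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
        container('kubectl') {
            sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
            echo "5. View the application"
            sh "kubectl get all -n kube-ops -l app=devops-demo"
        }
    }
}
```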

Even with plenty of tests, a deployed application may still have bugs. When deploying to production, we need to be able to roll back immediately, and Helm makes that easy too. Add a rollback stage like this:

```groovy
stage('Quick Rollback?') {
    withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
        container('helm') {
            sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
            def userInput = input(
                id: 'userInput',
                message: 'Do you need a quick rollback?',
                parameters: [
                    [
                        $class: 'ChoiceParameterDefinition',
                        choices: "Y\nN",
                        name: 'Rollback?'
                    ]
                ]
            )
            if (userInput == "Y") {
                sh "helm rollback devops-demo --namespace kube-ops"
            }
        }
    }
}
```
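Sometimes it helps to pick an explicit revision instead of always stepping back one release. The hypothetical helper below prints the helm rollback command with an optional revision; without one, Helm 3 rolls back to the previous release, which is what the stage above relies on. helm history devops-demo --namespace kube-ops lists the revisions to choose from.

```shell
#!/bin/sh
# Print a `helm rollback` command; the revision argument is optional.
helm_rollback_cmd() {
    local release="$1" ns="$2" revision="${3:-}"
    if [ -n "$revision" ]; then
        printf 'helm rollback %s %s --namespace %s\n' "$release" "$revision" "$ns"
    else
        printf 'helm rollback %s --namespace %s\n' "$release" "$ns"
    fi
}
```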

With that, the complete pipeline is done.
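To reach the application by name from a workstation, devops-demo.k8s.local has to resolve to the ingress node. The idempotent helper below (hypothetical name add_host_entry) appends a hosts entry only if the domain is not mapped yet. The IP 192.168.23.199 in the usage line is an assumption carried over from the CoreDNS section, where it is the ingress node.

```shell
#!/bin/sh
# Append "<ip> <domain>" to a hosts file unless that domain is already mapped in it.
# The file defaults to /etc/hosts (writing it needs root); pass another path for testing.
add_host_entry() {
    local ip="$1" domain="$2" file="${3:-/etc/hosts}"
    local pattern
    # Escape the dots so they match literally in the regular expression
    pattern="$(printf '%s' "$domain" | sed 's/\./\\./g')"
    if grep -Eq "[[:space:]]${pattern}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$domain" >> "$file"
}
```

Usage: sudo sh -c '. ./hosts.sh && add_host_entry 192.168.23.199 devops-demo.k8s.local', assuming the function was saved in hosts.sh.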

Add a local mapping for the application domain devops-demo.k8s.local, and we can access the application:

```shell
$ curl http://devops-demo.k8s.local
{"msg":"Hello DevOps On Kubernetes"}
```

The complete Jenkinsfile is as follows:

```groovy
def label = "slave-${UUID.randomUUID().toString()}"

def helmLint(String chartDir) {
    println "Linting the chart templates"
    sh "helm lint ${chartDir}"
}

def helmDeploy(Map args) {
    if (args.debug) {
        println "Debug the application"
        sh "helm upgrade --dry-run --debug --install ${args.name} ${args.chartDir} -f ${args.valuePath} --set image.tag=${args.imageTag} --namespace ${args.namespace}"
    } else {
        println "Deploying the application"
        sh "helm upgrade --install ${args.name} ${args.chartDir} -f ${args.valuePath} --set image.tag=${args.imageTag} --namespace ${args.namespace}"
        echo "Application ${args.name} deployed successfully. Use helm status ${args.name} to check its status"
    }
}

podTemplate(label: label, containers: [
    containerTemplate(name: 'golang', image: 'golang:1.14.2-alpine3.11', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'docker', image: 'docker:latest', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'helm', image: 'cnych/helm', command: 'cat', ttyEnabled: true),
    containerTemplate(name: 'kubectl', image: 'cnych/kubectl', command: 'cat', ttyEnabled: true)
], serviceAccount: 'jenkins', volumes: [
    hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock')
]) {
    node(label) {
        // In a multibranch pipeline, the job configuration defines where the source comes from
        // (for example the git URL), i.e. the scm used here; a plain `checkout scm` also works
        def myRepo = checkout scm
        // Use the short git commit id as the image tag. sh() runs right here, in the agent's
        // default (jnlp) container; returnStdout returns the command output and trim()
        // (a Java String method) strips the surrounding whitespace, including the trailing newline
        def imageTag = sh(script: "git rev-parse --short HEAD", returnStdout: true).trim()
        // Registry address
        def registryUrl = "harbor.k8s.local"
        def imageEndpoint = "course/devops-demo"
        // Image
        def image = "${registryUrl}/${imageEndpoint}:${imageTag}"

        stage('Unit Test') {
            echo "Test stage"
        }
        stage('Compile and Package') {
            try {
                container('golang') {
                    echo "2. Compile and package stage"
                    sh """
                        export GOPROXY=https://goproxy.cn
                        GOOS=linux GOARCH=amd64 go build -v -o demo-app
                    """
                }
            } catch (exc) {
                println "Build failed - ${currentBuild.fullDisplayName}"
                throw(exc)
            }
        }
        stage('Build Docker Image') {
            withCredentials([[$class: 'UsernamePasswordMultiBinding',
                credentialsId: 'docker-auth',
                usernameVariable: 'DOCKER_USER',
                passwordVariable: 'DOCKER_PASSWORD']]) {
                container('docker') {
                    echo "3. Build Docker image stage"
                    sh """
                        # debug: show the DNS configuration of the build container
                        cat /etc/resolv.conf
                        echo "\$DOCKER_PASSWORD" | docker login ${registryUrl} -u "\$DOCKER_USER" --password-stdin
                        docker build -t ${image} .
                        docker push ${image}
                    """
                }
            }
        }
        stage('Run Helm') {
            withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
                container('helm') {
                    sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
                    echo "4. Start Helm deployment"
                    // input: pauses the run until a person confirms whether to continue
                    // message: the prompt shown to the user
                    // id: optional, defaults to the stage name
                    // ok: optional text of the OK button on the form
                    // submitter: optional comma-separated list of users or external group names allowed to submit; by default any user may
                    // submitterParameter: optional name of an environment variable to set to the submitter's name, if present
                    // parameters: optional list of parameters to prompt the submitter for
                    // The return value of input is the user's choice, e.g. Dev
                    def userInput = input(
                        id: 'userInput',
                        message: 'Choose a deployment environment',
                        parameters: [
                            [
                                $class: 'ChoiceParameterDefinition',
                                choices: "Dev\nQA\nProd",
                                name: 'Env'
                            ]
                        ]
                    )
                    echo "Deploying the application to the ${userInput} environment"
                    // Pick the values file for the chosen environment
                    if (userInput == "Dev") {
                        // deploy dev stuff
                    } else if (userInput == "QA"){
                        // deploy qa stuff
                    } else {
                        // deploy prod stuff
                    }
                    helmDeploy(
                        debug     : false,
                        name      : "devops-demo",
                        chartDir  : "./helm",
                        namespace : "kube-ops",
                        valuePath : "./helm/my-values.yaml",
                        imageTag  : "${imageTag}"
                    )
                }
            }
        }
        stage('Run Kubectl') {
            withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
                container('kubectl') {
                    sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
                    echo "5. View the application"
                    sh "kubectl get all -n kube-ops -l app=devops-demo"
                }
            }
        }
        stage('Quick Rollback?') {
            withCredentials([file(credentialsId: 'kubeconfig', variable: 'KUBECONFIG')]) {
                container('helm') {
                    sh "mkdir -p ~/.kube && cp ${KUBECONFIG} ~/.kube/config"
                    def userInput = input(
                        id: 'userInput',
                        message: 'Do you need a quick rollback?',
                        parameters: [
                            [
                                $class: 'ChoiceParameterDefinition',
                                choices: "Y\nN",
                                name: 'Rollback?'
                            ]
                        ]
                    )
                    if (userInput == "Y") {
                        sh "helm rollback devops-demo --namespace kube-ops"
                    }
                }
            }
        }
    }
}
```

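Our Jenkinsfile is a Scripted Pipeline, which is more flexible than a Declarative one.

Jenkins core syntax

Jenkins Pipeline: Declarative vs. Scripted syntax

For more syntax, see the Jenkins documentation: https://www.jenkins.io/doc/book/pipeline/syntax/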

Tekton

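Tekton is a powerful and flexible open-source, cloud-native CI/CD framework. It grew out of the build-pipeline project of Knative, which was meant to add pipeline functionality to Knative's build module. As more and more features were added, the build module came to look like a general-purpose CI/CD system, so build-pipeline was split out of Knative and became today's Tekton, which is dedicated to providing full-featured, standardized cloud-native CI/CD solutions. Tekton offers CI/CD systems several benefits:

1. Customizable: Tekton is fully customizable and highly flexible; we can define a detailed catalog of building blocks for developers to use in a variety of scenarios.

2. Reusable: Tekton is fully portable; anyone can take a given pipeline and reuse its building blocks, so developers can quickly build complex pipelines without reinventing the wheel.

3. Extensible: Tekton Catalog is a community-driven repository of Tekton building blocks; we can use the components defined in it to quickly create new pipelines and extend existing ones.

4. Standardized: Tekton installs and runs as an extension on your Kubernetes cluster and uses the well-established Kubernetes resource model; Tekton workloads run inside Kubernetes Pods.

5. Scalable: to increase workload capacity, simply add nodes to the cluster. Tekton scales with the cluster, without redefining resource allocations or making any other changes to the pipelines.

Components

Tekton consists of a series of components:

1. Tekton Pipelines is the foundation of Tekton. It defines a set of Kubernetes CRDs as building blocks that we can use to assemble CI/CD pipelines.

2. Tekton Triggers lets us instantiate pipelines based on events, for example triggering a pipeline run and build every time a PR is merged into a GitHub repository.

3. Tekton CLI provides a command-line interface called tkn, built on top of the Kubernetes CLI, for interacting with Tekton.

4. Tekton Dashboard is a web-based graphical interface for Tekton Pipelines that displays information about pipeline executions.

5. Tekton Catalog is a community-contributed repository of high-quality Tekton building blocks (Tasks, Pipelines, and so on) that can be used directly in our own pipelines.

6. Tekton Hub is a web-based graphical tool for browsing the Tekton Catalog.

7. Tekton Operator is a Kubernetes Operator that lets us install, update, and remove Tekton projects on a Kubernetes cluster.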

Installation

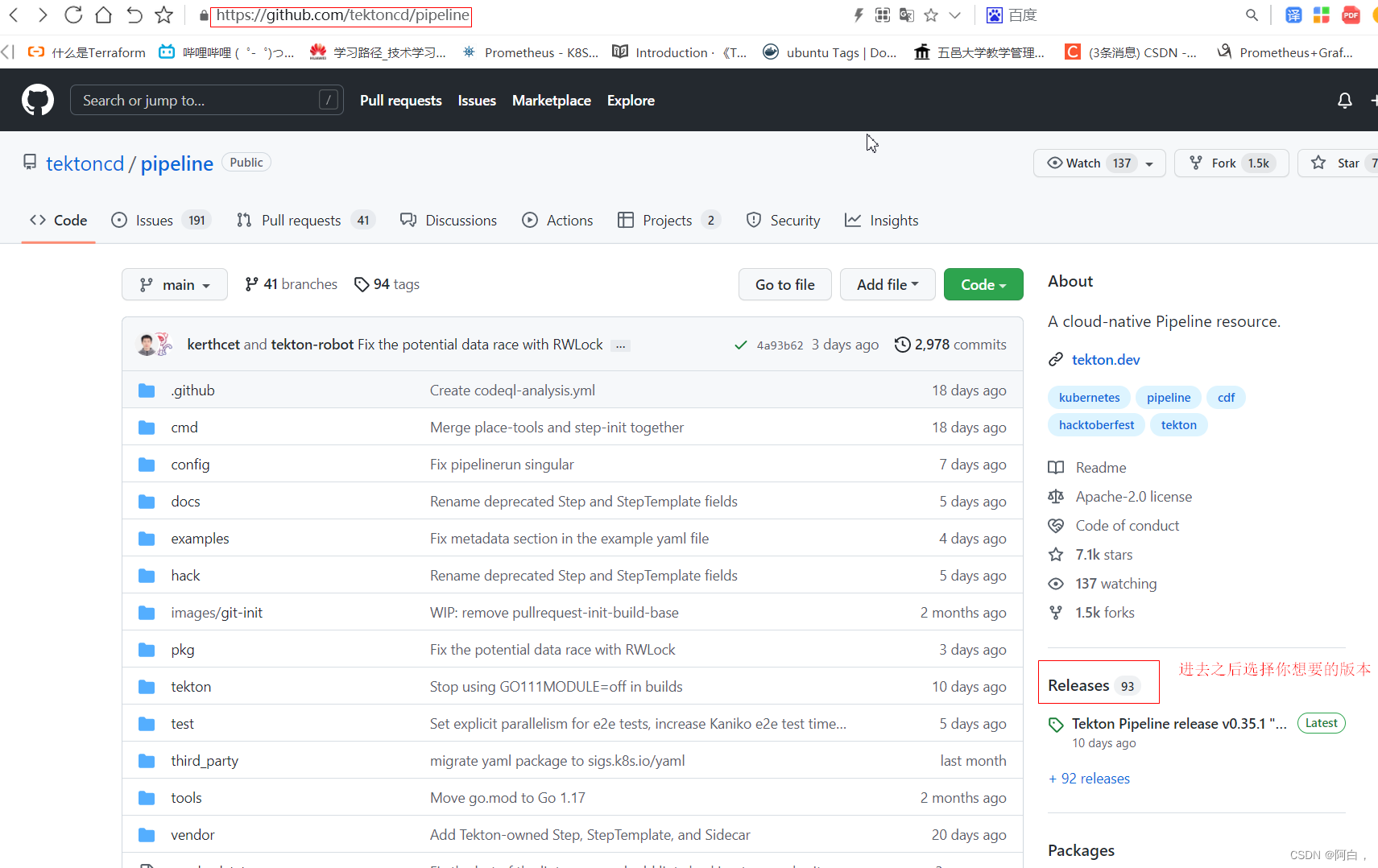

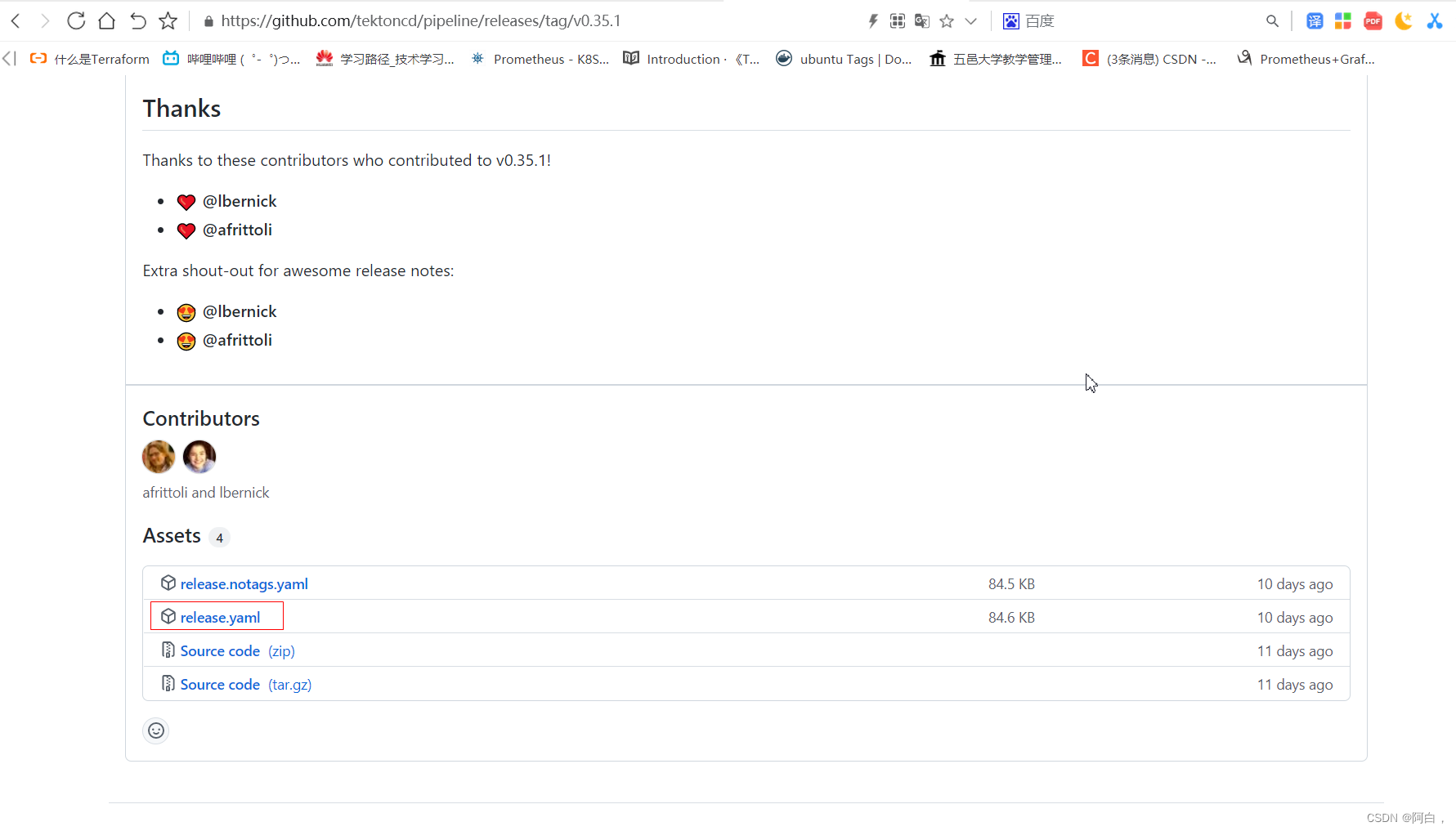

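Installing Tekton is simple: apply the release.yaml file published for the tektoncd/pipeline GitHub repository, with a command like this:

```shell
kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.24.1/release.yaml
```

Choose whichever installation method you prefer; here we simply apply the YAML file with kubectl.

The official manifest uses images from gcr, which normally cannot be pulled here (if the images cannot be downloaded, you can pull them manually from an Aliyun mirror and re-tag them). If your cluster cannot pull the images for some reason, you can use the manifest below, in which I have replaced the images with ones on Docker Hub:

```shell
kubectl apply -f https://www.qikqiak.com/k8strain2/devops/manifests/tekton/release.yaml
```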
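Pinning a version keeps the install reproducible, and knowing which gcr.io images a manifest references tells you what to mirror and re-tag. Both are sketched below as hypothetical shell helpers: tekton_release_url builds the release URL for latest or a pinned version, following the URL pattern shown above, and list_gcr_images extracts the unique gcr.io image references from a manifest read on stdin.

```shell
#!/bin/sh
# URL of a Tekton Pipelines release manifest: "latest" or a pinned version such as v0.24.1.
tekton_release_url() {
    local version="${1:-latest}"
    if [ "$version" = "latest" ]; then
        echo "https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml"
    else
        echo "https://storage.googleapis.com/tekton-releases/pipeline/previous/${version}/release.yaml"
    fi
}

# Print the unique gcr.io image references found in a manifest read from stdin.
# A reference ends at whitespace, a double quote, or a comma.
list_gcr_images() {
    grep -oE 'gcr\.io/[^"[:space:],]+' | sort -u
}
```

Usage: curl -sL "$(tekton_release_url v0.24.1)" | list_gcr_images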

The content of this file is reproduced below (everything that follows is the file's content); to be continued in part 3.

# Copyright 2019 The Tekton Authors

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: tekton-pipelines

---

# Copyright 2019 The Tekton Authors

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tekton-pipelines
spec:
  privileged: false
  allowPrivilegeEscalation: false
  volumes:
    - 'emptyDir'
    - 'configMap'
    - 'secret'
  hostNetwork: false
  hostIPC: false
  hostPID: false
  runAsUser:
    rule: 'RunAsAny'
  seLinux:
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'MustRunAs'
    ranges:
      - min: 1
        max: 65535
  fsGroup:
    rule: 'MustRunAs'
    ranges:
      - min: 1
        max: 65535

---
# Copyright 2020 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tekton-pipelines-controller-cluster-access
rules:
  - apiGroups: [""]
    # Namespace access is required because the controller timeout handling logic
    # iterates over all namespaces and times out any PipelineRuns that have expired.
    # Pod access is required because the taskrun controller wants to be updated when
    # a Pod underlying a TaskRun changes state.
    resources: ["namespaces", "pods"]
    verbs: ["list", "watch"]
  # Controller needs cluster access to all of the CRDs that it is responsible for
  # managing.
  - apiGroups: ["tekton.dev"]
    resources: ["tasks", "clustertasks", "taskruns", "pipelines", "pipelineruns", "pipelineresources", "conditions"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]
  - apiGroups: ["tekton.dev"]
    resources: ["taskruns/finalizers", "pipelineruns/finalizers"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]
  - apiGroups: ["tekton.dev"]
    resources: ["tasks/status", "clustertasks/status", "taskruns/status", "pipelines/status", "pipelineruns/status", "pipelineresources/status"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]
  - apiGroups: ["policy"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["tekton-pipelines"]
    verbs: ["use"]

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # This is the access that the controller needs on a per-namespace basis.
  name: tekton-pipelines-controller-tenant-access
rules:
  - apiGroups: [""]
    resources: ["pods", "pods/log", "secrets", "events", "serviceaccounts", "configmaps", "persistentvolumeclaims", "limitranges"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]
  # Unclear if this access is actually required. Simply a hold-over from the previous
  # incarnation of the controller's ClusterRole.
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]
  - apiGroups: ["apps"]
    resources: ["deployments/finalizers"]
    verbs: ["get", "list", "create", "update", "delete", "patch", "watch"]

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tekton-pipelines-webhook-cluster-access
rules:
  - # The webhook needs to be able to list and update customresourcedefinitions,
    # mainly to update the webhook certificates.
    apiGroups: ["apiextensions.k8s.io"]
    resources: ["customresourcedefinitions", "customresourcedefinitions/status"]
    verbs: ["get", "list", "update", "patch", "watch"]
  - apiGroups: ["admissionregistration.k8s.io"]
    # The webhook performs a reconciliation on these two resources and continuously
    # updates configuration.
    resources: ["mutatingwebhookconfigurations", "validatingwebhookconfigurations"]
    # knative starts informers on these things, which is why we need get, list and watch.
    verbs: ["list", "watch"]
  - apiGroups: ["admissionregistration.k8s.io"]
    resources: ["mutatingwebhookconfigurations"]
    # This mutating webhook is responsible for applying defaults to tekton objects
    # as they are received.
    resourceNames: ["webhook.pipeline.tekton.dev"]
    # When there are changes to the configs or secrets, knative updates the mutatingwebhook config
    # with the updated certificates or the refreshed set of rules.
    verbs: ["get", "update"]
  - apiGroups: ["admissionregistration.k8s.io"]
    resources: ["validatingwebhookconfigurations"]
    # validation.webhook.pipeline.tekton.dev performs schema validation when you, for example, create TaskRuns.
    # config.webhook.pipeline.tekton.dev validates the logging configuration against knative's logging structure
    resourceNames: ["validation.webhook.pipeline.tekton.dev", "config.webhook.pipeline.tekton.dev"]
    # When there are changes to the configs or secrets, knative updates the validatingwebhook config
    # with the updated certificates or the refreshed set of rules.
    verbs: ["get", "update"]

---
# Copyright 2020 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tekton-pipelines-controller
  namespace: tekton-pipelines
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["list", "watch"]
  - # The controller needs access to these configmaps for logging information and runtime configuration.
    apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
    resourceNames: ["config-logging", "config-observability", "config-artifact-bucket", "config-artifact-pvc", "feature-flags", "config-leader-election"]

---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tekton-pipelines-webhook
  namespace: tekton-pipelines
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["list", "watch"]
  - # The webhook needs access to these configmaps for logging information.
    apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get"]
    resourceNames: ["config-logging", "config-observability"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["list", "watch"]
  - # The webhook daemon makes a reconciliation loop on webhook-certs. Whenever
    # the secret changes it updates the webhook configurations with the certificates
    # stored in the secret.
    apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "update"]
    resourceNames: ["webhook-certs"]

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tekton-pipelines-controller
  namespace: tekton-pipelines

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tekton-pipelines-webhook
  namespace: tekton-pipelines

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tekton-pipelines-controller-cluster-access
subjects:
  - kind: ServiceAccount
    name: tekton-pipelines-controller
    namespace: tekton-pipelines
roleRef:
  kind: ClusterRole
  name: tekton-pipelines-controller-cluster-access
  apiGroup: rbac.authorization.k8s.io

---
# If this ClusterRoleBinding is replaced with a RoleBinding
# then the ClusterRole would be namespaced. The access described by
# the tekton-pipelines-controller-tenant-access ClusterRole would
# be scoped to individual tenant namespaces.
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tekton-pipelines-controller-tenant-access
subjects:
  - kind: ServiceAccount
    name: tekton-pipelines-controller
    namespace: tekton-pipelines
roleRef:
  kind: ClusterRole
  name: tekton-pipelines-controller-tenant-access
  apiGroup: rbac.authorization.k8s.io
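# Sketch (an assumption, NOT part of the upstream release): the comment above says
# that replacing this ClusterRoleBinding with a RoleBinding would scope the
# tenant-access ClusterRole to one namespace. A RoleBinding may reference a
# ClusterRole, and the grant then applies only inside the RoleBinding's namespace.
# "my-tenant-ns" is a hypothetical namespace. Whether the controller works
# correctly with only namespaced access depends on the Tekton release, so treat
# this as a starting point only. It is commented out and changes nothing if
# this file is applied.
#
# apiVersion: rbac.authorization.k8s.io/v1
# kind: RoleBinding
# metadata:
#   name: tekton-pipelines-controller-tenant-access
#   namespace: my-tenant-ns            # hypothetical tenant namespace
# subjects:
#   - kind: ServiceAccount
#     name: tekton-pipelines-controller
#     namespace: tekton-pipelines
# roleRef:
#   kind: ClusterRole                  # a ClusterRole, granted only within my-tenant-ns
#   name: tekton-pipelines-controller-tenant-access
#   apiGroup: rbac.authorization.k8s.io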

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tekton-pipelines-webhook-cluster-access
subjects:
  - kind: ServiceAccount
    name: tekton-pipelines-webhook
    namespace: tekton-pipelines
roleRef:
  kind: ClusterRole
  name: tekton-pipelines-webhook-cluster-access
  apiGroup: rbac.authorization.k8s.io

---
# Copyright 2020 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: tekton-pipelines-controller
  namespace: tekton-pipelines
subjects:
  - kind: ServiceAccount
    name: tekton-pipelines-controller
    namespace: tekton-pipelines
roleRef:
  kind: Role
  name: tekton-pipelines-controller
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: tekton-pipelines-webhook
  namespace: tekton-pipelines
subjects:
  - kind: ServiceAccount
    name: tekton-pipelines-webhook
    namespace: tekton-pipelines
roleRef:
  kind: Role
  name: tekton-pipelines-webhook
  apiGroup: rbac.authorization.k8s.io

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clustertasks.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      # One can use x-kubernetes-preserve-unknown-fields: true
      # at the root of the schema (and inside any properties, additionalProperties)
      # to get the traditional CRD behaviour that nothing is pruned, despite
      # setting spec.preserveUnknownProperties: false.
      #
      # See https://kubernetes.io/blog/2019/06/20/crd-structural-schema/
      # See issue: https://github.com/knative/serving/issues/912
      x-kubernetes-preserve-unknown-fields: true
  versions:
    - name: v1alpha1
      served: true
      storage: true
    - name: v1beta1
      served: true
      storage: false
  names:
    kind: ClusterTask
    plural: clustertasks
    categories:
      - tekton
      - tekton-pipelines
  scope: Cluster
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: conditions.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  names:
    kind: Condition
    plural: conditions
    categories:
      - tekton
      - tekton-pipelines
  scope: Namespaced
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  version: v1alpha1

---
# Copyright 2018 The Knative Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: images.caching.internal.knative.dev
  labels:
    knative.dev/crd-install: "true"
spec:
  group: caching.internal.knative.dev
  version: v1alpha1
  names:
    kind: Image
    plural: images
    singular: image
    categories:
      - knative-internal
      - caching
    shortNames:
      - img
  scope: Namespaced
  subresources:
    status: {}

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: pipelines.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      # One can use x-kubernetes-preserve-unknown-fields: true
      # at the root of the schema (and inside any properties, additionalProperties)
      # to get the traditional CRD behaviour that nothing is pruned, despite
      # setting spec.preserveUnknownProperties: false.
      #
      # See https://kubernetes.io/blog/2019/06/20/crd-structural-schema/
      # See issue: https://github.com/knative/serving/issues/912
      x-kubernetes-preserve-unknown-fields: true
  versions:
    - name: v1alpha1
      served: true
      storage: true
    - name: v1beta1
      served: true
      storage: false
  names:
    kind: Pipeline
    plural: pipelines
    categories:
      - tekton
      - tekton-pipelines
  scope: Namespaced
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: pipelineruns.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      # One can use x-kubernetes-preserve-unknown-fields: true
      # at the root of the schema (and inside any properties, additionalProperties)
      # to get the traditional CRD behaviour that nothing is pruned, despite
      # setting spec.preserveUnknownProperties: false.
      #
      # See https://kubernetes.io/blog/2019/06/20/crd-structural-schema/
      # See issue: https://github.com/knative/serving/issues/912
      x-kubernetes-preserve-unknown-fields: true
  versions:
    - name: v1alpha1
      served: true
      storage: true
    - name: v1beta1
      served: true
      storage: false
  names:
    kind: PipelineRun
    plural: pipelineruns
    categories:
      - tekton
      - tekton-pipelines
    shortNames:
      - pr
      - prs
  scope: Namespaced
  additionalPrinterColumns:
    - name: Succeeded
      type: string
      JSONPath: ".status.conditions[?(@.type==\"Succeeded\")].status"
    - name: Reason
      type: string
      JSONPath: ".status.conditions[?(@.type==\"Succeeded\")].reason"
    - name: StartTime
      type: date
      JSONPath: .status.startTime
    - name: CompletionTime
      type: date
      JSONPath: .status.completionTime
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: pipelineresources.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  names:
    kind: PipelineResource
    plural: pipelineresources
    categories:
      - tekton
      - tekton-pipelines
  scope: Namespaced
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  version: v1alpha1

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tasks.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      # One can use x-kubernetes-preserve-unknown-fields: true
      # at the root of the schema (and inside any properties, additionalProperties)
      # to get the traditional CRD behaviour that nothing is pruned, despite
      # setting spec.preserveUnknownProperties: false.
      #
      # See https://kubernetes.io/blog/2019/06/20/crd-structural-schema/
      # See issue: https://github.com/knative/serving/issues/912
      x-kubernetes-preserve-unknown-fields: true
  versions:
    - name: v1alpha1
      served: true
      storage: true
    - name: v1beta1
      served: true
      storage: false
  names:
    kind: Task
    plural: tasks
    categories:
      - tekton
      - tekton-pipelines
  scope: Namespaced
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: taskruns.tekton.dev
  labels:
    pipeline.tekton.dev/release: "devel"
    version: "devel"
spec:
  group: tekton.dev
  preserveUnknownFields: false
  validation:
    openAPIV3Schema:
      type: object
      # One can use x-kubernetes-preserve-unknown-fields: true
      # at the root of the schema (and inside any properties, additionalProperties)
      # to get the traditional CRD behaviour that nothing is pruned, despite
      # setting spec.preserveUnknownProperties: false.
      #
      # See https://kubernetes.io/blog/2019/06/20/crd-structural-schema/
      # See issue: https://github.com/knative/serving/issues/912
      x-kubernetes-preserve-unknown-fields: true
  versions:
    - name: v1alpha1
      served: true
      storage: true
    - name: v1beta1
      served: true
      storage: false
  names:
    kind: TaskRun
    plural: taskruns
    categories:
      - tekton
      - tekton-pipelines
    shortNames:
      - tr
      - trs
  scope: Namespaced
  additionalPrinterColumns:
    - name: Succeeded
      type: string
      JSONPath: ".status.conditions[?(@.type==\"Succeeded\")].status"
    - name: Reason
      type: string
      JSONPath: ".status.conditions[?(@.type==\"Succeeded\")].reason"
    - name: StartTime
      type: date
      JSONPath: .status.startTime
    - name: CompletionTime
      type: date
      JSONPath: .status.completionTime
  # Opt into the status subresource so metadata.generation
  # starts to increment
  subresources:
    status: {}
  conversion:
    strategy: Webhook
    webhookClientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines

---
# Copyright 2020 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Secret
metadata:
  name: webhook-certs
  namespace: tekton-pipelines
  labels:
    pipeline.tekton.dev/release: devel
# The data is populated at install time.

---
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: validation.webhook.pipeline.tekton.dev
  labels:
    pipeline.tekton.dev/release: devel
webhooks:
  - admissionReviewVersions:
      - v1beta1
    clientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines
    failurePolicy: Fail
    sideEffects: None
    name: validation.webhook.pipeline.tekton.dev

---
apiVersion: admissionregistration.k8s.io/v1beta1
kind: MutatingWebhookConfiguration
metadata:
  name: webhook.pipeline.tekton.dev
  labels:
    pipeline.tekton.dev/release: devel
webhooks:
  - admissionReviewVersions:
      - v1beta1
    clientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines
    failurePolicy: Fail
    sideEffects: None
    name: webhook.pipeline.tekton.dev

---
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: config.webhook.pipeline.tekton.dev
  labels:
    pipeline.tekton.dev/release: devel
webhooks:
  - admissionReviewVersions:
      - v1beta1
    clientConfig:
      service:
        name: tekton-pipelines-webhook
        namespace: tekton-pipelines
    failurePolicy: Fail
    sideEffects: None
    name: config.webhook.pipeline.tekton.dev
    namespaceSelector:
      matchExpressions:
        - key: pipeline.tekton.dev/release
          operator: Exists

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: tekton-aggregate-edit
  labels:
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
  - apiGroups:
      - tekton.dev
    resources:
      - tasks
      - taskruns
      - pipelines
      - pipelineruns
      - pipelineresources
      - conditions
    verbs:
      - create
      - delete
      - deletecollection
      - get
      - list
      - patch
      - update
      - watch

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: tekton-aggregate-view
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
rules:
  - apiGroups:
      - tekton.dev
    resources:
      - tasks
      - taskruns
      - pipelines
      - pipelineruns
      - pipelineresources
      - conditions
    verbs:
      - get
      - list
      - watch
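# Sketch (an assumption, NOT part of the upstream release): the
# aggregate-to-edit / aggregate-to-admin / aggregate-to-view labels on the two
# ClusterRoles above make Kubernetes merge their Tekton rules into the built-in
# "edit", "admin" and "view" ClusterRoles. A RoleBinding to "edit" in a namespace
# should therefore also let the subject create TaskRuns and PipelineRuns there.
# "ci-team" and "jane" are hypothetical names. It is commented out and changes
# nothing if this file is applied.
#
# apiVersion: rbac.authorization.k8s.io/v1
# kind: RoleBinding
# metadata:
#   name: jane-edit
#   namespace: ci-team                 # hypothetical namespace
# subjects:
#   - kind: User
#     name: jane                       # hypothetical user
#     apiGroup: rbac.authorization.k8s.io
# roleRef:
#   kind: ClusterRole
#   name: edit                         # built-in role, extended via aggregation
#   apiGroup: rbac.authorization.k8s.io
#
# The result can then be checked with, for example:
#   kubectl auth can-i create pipelineruns.tekton.dev --as=jane -n ci-team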

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-artifact-bucket
  namespace: tekton-pipelines
# data:
#   # location of the gcs bucket to be used for artifact storage
#   location: "gs://bucket-name"
#   # name of the secret that will contain the credentials for the service account
#   # with access to the bucket
#   bucket.service.account.secret.name:
#   # The key in the secret with the required service account json
#   bucket.service.account.secret.key:

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-artifact-pvc
  namespace: tekton-pipelines
# data:
#   # size of the PVC volume
#   size: 5Gi
#
#   # storage class of the PVC volume
#   storageClassName: storage-class-name

---
# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-defaults
  namespace: tekton-pipelines
data:
  _example: |-
    ################################
    #                              #
    #    EXAMPLE CONFIGURATION     #
    #                              #
    ################################

    # This block is not actually functional configuration,
    # but serves to illustrate the available configuration
    # options and document them in a way that is accessible
    # to users that `kubectl edit` this config map.
    #
    # These sample configuration options may be copied out of
    # this example block and unindented to be in the data block
    # to actually change the configuration.

    # default-timeout-minutes contains the default number of
    # minutes to use for TaskRun and PipelineRun, if none is specified.
    default-timeout-minutes: "60"  # 60 minutes

    # default-service-account contains the default service account name
    # to use for TaskRun and PipelineRun, if none is specified.
    default-service-account: "default"

    # default-managed-by-label-value contains the default value given to the
    # "app.kubernetes.io/managed-by" label applied to all Pods created for
    # TaskRuns. If a user's requested TaskRun specifies another value for this
    # label, the user's request supercedes.
    default-managed-by-label-value: "tekton-pipelines"

    # default-pod-template contains the default pod template to use
    # TaskRun and PipelineRun, if none is specified. If a pod template
    # is specified, the default pod template is ignored.
    # default-pod-template:
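# Sketch (an assumption, NOT part of the upstream release): the config-defaults
# values above only apply when a TaskRun or PipelineRun leaves the field empty.
# The hypothetical TaskRun below sets both fields itself, so neither
# default-timeout-minutes nor default-service-account would be used for it.
# "build-app", "build-app-run" and "build-bot" are hypothetical names. It is
# commented out and changes nothing if this file is applied.
#
# apiVersion: tekton.dev/v1beta1
# kind: TaskRun
# metadata:
#   name: build-app-run
# spec:
#   serviceAccountName: build-bot      # overrides default-service-account
#   timeout: 30m                       # overrides default-timeout-minutes
#   taskRef:
#     name: build-app                  # hypothetical Task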

---

# Copyright 2019 The Tekton Authors

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# https://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: ConfigMap

metadata:

name: feature-flags

namespace: tekton-pipelines

data:

# Setting this flag to "true" will prevent Tekton overriding your

# Task container's $HOME environment variable.

#

# The default behaviour currently is for Tekton to override the

# $HOME environment variable but this will change in an upcoming

# release.

#

# See https://github.com/tektoncd/pipeline/issues/2013 for more

# info.

disable-home-env-overwrite: "false"

# Setting this flag to "true" will prevent Tekton overriding your

# Task container's working directory.

#

# The default behaviour currently is for Tekton to override the

# working directory if not set by the user but this will change

# in an upcoming release.

#

# See https://github.com/tektoncd/pipeline/issues/1836 for more

# info.

disable-working-directory-overwrite: "false"

---

# Copyright 2020 Tekton Authors LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-leader-election
  namespace: tekton-pipelines
data:
  # An inactive but valid configuration follows; see example.
  resourceLock: "leases"
  leaseDuration: "15s"
  renewDeadline: "10s"
  retryPeriod: "2s"

---

# Copyright 2019 Tekton Authors LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-logging
  namespace: tekton-pipelines
data:
  # Common configuration for all knative codebase
  zap-logger-config: |
    {
      "level": "info",
      "development": false,
      "sampling": {
        "initial": 100,
        "thereafter": 100
      },
      "outputPaths": ["stdout"],
      "errorOutputPaths": ["stderr"],
      "encoding": "json",
      "encoderConfig": {
        "timeKey": "",
        "levelKey": "level",
        "nameKey": "logger",
        "callerKey": "caller",
        "messageKey": "msg",
        "stacktraceKey": "stacktrace",
        "lineEnding": "",
        "levelEncoder": "",
        "timeEncoder": "",
        "durationEncoder": "",
        "callerEncoder": ""
      }
    }

  # Log level overrides
  loglevel.controller: "info"
  loglevel.webhook: "info"

---

# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# https://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-observability
  namespace: tekton-pipelines
data:
  _example: |
    ################################
    #                              #
    #    EXAMPLE CONFIGURATION     #
    #                              #
    ################################

    # This block is not actually functional configuration,
    # but serves to illustrate the available configuration
    # options and document them in a way that is accessible
    # to users that `kubectl edit` this config map.
    #
    # These sample configuration options may be copied out of
    # this example block and unindented to be in the data block
    # to actually change the configuration.

    # metrics.backend-destination field specifies the system metrics destination.
    # It supports either prometheus (the default) or stackdriver.
    # Note: Using Stackdriver will incur additional charges.
    metrics.backend-destination: prometheus

    # metrics.stackdriver-project-id field specifies the Stackdriver project ID. This
    # field is optional. When running on GCE, application default credentials will be
    # used and metrics will be sent to the cluster's project if this field is
    # not provided.
    metrics.stackdriver-project-id: "<your stackdriver project id>"

    # metrics.allow-stackdriver-custom-metrics indicates whether it is allowed
    # to send metrics to Stackdriver using "global" resource type and custom
    # metric type. Setting this flag to "true" could cause extra Stackdriver
    # charge. If metrics.backend-destination is not Stackdriver, this is
    # ignored.
    metrics.allow-stackdriver-custom-metrics: "false"

---

# Copyright 2019 The Tekton Authors
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tekton-pipelines-controller
  namespace: tekton-pipelines
  labels:
    app.kubernetes.io/name: tekton-pipelines
    app.kubernetes.io/component: controller
    pipeline.tekton.dev/release: "v0.12.0"
    version: "v0.12.0"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tekton-pipelines-controller
  template:
    metadata:
      annotations:
        cluster-autoscaler.kubernetes.io/safe-to-evict: "false"
      labels:
        app: tekton-pipelines-controller
        app.kubernetes.io/name: tekton-pipelines
        app.kubernetes.io/component: controller
        # tekton.dev/release value replaced with inputs.params.versionTag in pipeline/tekton/publish.yaml
        pipeline.tekton.dev/release: "v0.12.0"
        version: "v0.12.0"
    spec:
      serviceAccountName: tekton-pipelines-controller
      containers:
      - name: tekton-pipelines-controller
        image: cnych/tekton-controller:v0.12.0
        args: [
          # These images are built on-demand by `ko resolve` and are replaced
          # by image references by digest.
          "-kubeconfig-writer-image", "cnych/tekton-kubeconfigwriter:v0.12.0",
          "-creds-image", "cnych/tekton-creds-init:v0.12.0",
          "-git-image", "cnych/tekton-git-init:v0.12.0",
          "-entrypoint-image", "cnych/tekton-entrypoint:v0.12.0",
          "-imagedigest-exporter-image", "cnych/tekton-imagedigestexporter:v0.12.0",
          "-pr-image", "cnych/tekton-pullrequest-init:v0.12.0",
          "-build-gcs-fetcher-image", "cnych/tekton-gcs-fetcher:v0.12.0",
          # These images are pulled from Dockerhub, by digest, as of April 15, 2020.
          "-nop-image", "tianon/true@sha256:009cce421096698832595ce039aa13fa44327d96beedb84282a69d3dbcf5a81b",
          "-shell-image", "busybox@sha256:a2490cec4484ee6c1068ba3a05f89934010c85242f736280b35343483b2264b6",
          "-gsutil-image", "google/cloud-sdk@sha256:6e8676464c7581b2dc824956b112a61c95e4144642bec035e6db38e3384cae2e"]
        volumeMounts:
        - name: config-logging
          mountPath: /etc/config-logging
        env:
        - name: SYSTEM_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - # If you are changing these names, you will also need to update
          # the controller's Role in 200-role.yaml to include the new
          # values in the "configmaps" "get" rule.
          name: CONFIG_LOGGING_NAME
          value: config-logging
        - name: CONFIG_OBSERVABILITY_NAME
          value: config-observability
        - name: CONFIG_ARTIFACT_BUCKET_NAME
          value: config-artifact-bucket