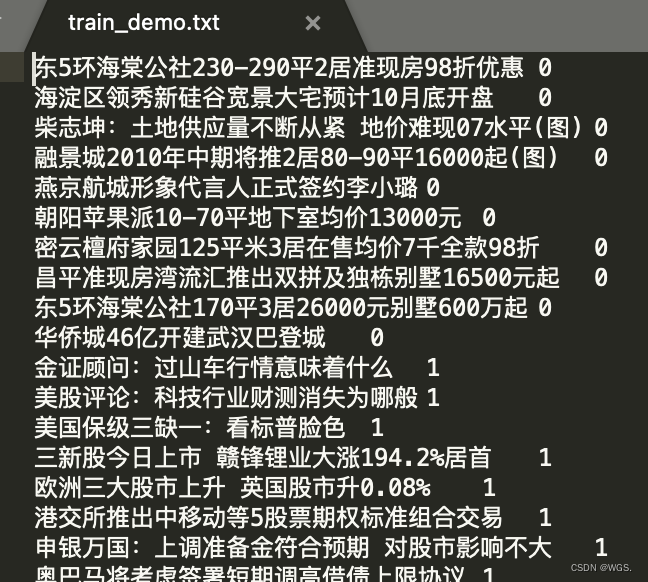

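Demo data structure

The data consists of 200,000 news headlines sampled from THUCNews, each 20 to 30 characters long. There are 10 categories with 20,000 headlines each.

Categories: finance, real estate, stocks, education, technology, society, politics, sports, games, entertainment.

Dataset split: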

| Dataset | Number of samples |
|---|---|
| Training set | 180,000 |
| Validation set | 10,000 |
| Test set | 10,000 |
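The post does not say how the split was produced. As a minimal sketch, assuming a plain shuffled split (not stratified by category), the proportions above could be reproduced as follows; `split_dataset` and the stand-in sample list are hypothetical and scaled down by a factor of 1,000.

```python
import random


def split_dataset(samples, val_size, test_size, seed=1):
    """Shuffle a copy of samples and cut it into train / val / test parts."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    test = shuffled[:test_size]
    val = shuffled[test_size:test_size + val_size]
    train = shuffled[test_size + val_size:]
    return train, val, test


samples = list(range(200))  # stand-in for the 200,000 headlines
train, val, test = split_dataset(samples, val_size=10, test_size=10)
print(len(train), len(val), len(test))
```

A stratified split would instead shuffle and cut each category separately, so that every subset keeps the same class balance.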

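Data link: https://download.csdn.net/download/qq_42363032/86511652

The demo data is formatted as follows: each line contains a headline and its integer label, separated by a tab.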

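Wrapping the data preprocessing

The demo workflow is as follows. This post shares the wrapper code, which you can embed into your own project as needed.

- Build the vocabulary

- Extract the pretrained vectors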

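import os
import pickle as pkl
import time
from datetime import timedelta

import numpy as np
from tqdm import tqdm

MAX_VOCAB_SIZE = 10000  # maximum vocabulary size
UNK, PAD = '<UNK>', '<PAD>'  # unknown-token and padding symbols


def get_time_dif(start_time):
    ''' Return the time elapsed since start_time, rounded to whole seconds. '''
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))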

def build_vocab(file_path, tokenizer, max_size, min_freq):
    '''
    Build the vocabulary.
    e.g. {' ': 0, '0': 1, '1': 2, '2': 3, ':': 4, '大': 5, '国': 6, '图': 7, '(': 8, ')': 9, '3': 10, '人': 11, '年': 12, '5': 13, '中': 14, '新': 15,...
    :param file_path: path to the data file
    :param tokenizer: how to split the text: word level (words separated by spaces) or char level (one token per character)
    :param max_size: maximum vocabulary size
    :param min_freq: frequency threshold; tokens that occur fewer times are dropped
    :return: dict mapping each token to its index
    '''
    vocab_dic = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        # read line by line
        for line in tqdm(f):
            lin = line.strip()  # strip the trailing \n
            if not lin:  # skip blank lines
                continue
            content = lin.split('\t')[0]  # fields are tab-separated: [0] is the text, [1] is the label
            for word in tokenizer(content):
                # frequency dict: add 1 to the existing count, or start from 1
                vocab_dic[word] = vocab_dic.get(word, 0) + 1
    # sort by frequency in descending order and truncate to max_size
    vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq], key=lambda x: x[1], reverse=True)[:max_size]
    # build the token -> index dict
    vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    # append the two special symbols: UNK and PAD
    vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})
    return vocab_dic

def get_vocab(train_dir, tokenizer, vocab_dir):
    ''' Load the cached vocabulary if it exists; otherwise build it and save it. '''
    if os.path.exists(vocab_dir):
        with open(vocab_dir, 'rb') as f:
            word_to_id = pkl.load(f)
    else:
        word_to_id = build_vocab(train_dir, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        with open(vocab_dir, 'wb') as f:
            pkl.dump(word_to_id, f)
    return word_to_id, len(word_to_id)

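def get_pre_emb(vocab, emb_dim, pretrain_dir, filename_trimmed_dir):
    ''' Extract the pretrained vectors for the tokens in vocab and save them as a compressed .npz file. '''
    # np.savez_compressed appends '.npz' when the name lacks it, so check for the file it actually writes
    trimmed_path = filename_trimmed_dir if filename_trimmed_dir.endswith('.npz') else filename_trimmed_dir + '.npz'
    if os.path.exists(trimmed_path):
        return None
    # tokens missing from the pretrained file keep this random initialisation
    embeddings = np.random.rand(len(vocab), emb_dim)
    with open(pretrain_dir, 'r', encoding='UTF-8') as f:
        for i, line in enumerate(f):
            # if i == 0:  # skip the first line if it is a header
            #     continue
            lin = line.strip().split(' ')
            if lin[0] in vocab:
                idx = vocab[lin[0]]
                # emb = [float(x) for x in lin[1:301]]
                emb = [float(x) for x in lin[1:emb_dim + 1]]
                embeddings[idx] = np.asarray(emb, dtype='float32')
    # save in compressed .npz format
    np.savez_compressed(trimmed_path, embeddings=embeddings)
    print(embeddings.shape)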
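As a quick sanity check of the vocabulary logic, the sketch below applies the same steps as `build_vocab` (count, filter by `min_freq`, sort by frequency, truncate to `max_size`, append `<UNK>` and `<PAD>`) to a small in-memory list instead of a file. The helper name `build_vocab_from_lines` and the sample lines are made up for illustration and are not part of the original code.

```python
from collections import Counter

UNK, PAD = '<UNK>', '<PAD>'


def build_vocab_from_lines(lines, tokenizer, max_size, min_freq):
    """Hypothetical in-memory variant of build_vocab, for illustration only."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:  # skip blank lines
            continue
        content = line.split('\t')[0]  # text comes before the tab, label after
        counts.update(tokenizer(content))
    # keep tokens that meet the frequency threshold, most frequent first
    kept = sorted(
        [item for item in counts.items() if item[1] >= min_freq],
        key=lambda item: item[1],
        reverse=True,
    )[:max_size]
    vocab = {token: idx for idx, (token, _) in enumerate(kept)}
    # the two special symbols always take the last two indices
    vocab.update({UNK: len(vocab), PAD: len(vocab) + 1})
    return vocab


char_tokenizer = lambda text: list(text)
sample_lines = ["aab\t0", "abc\t1", "", "a\t0"]  # made-up samples
vocab = build_vocab_from_lines(sample_lines, char_tokenizer, max_size=2, min_freq=1)
print(vocab)
```

Because the special symbols are added after truncation, the final vocabulary can hold up to `max_size + 2` entries.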

MyDataSet

import torch
from torch.utils.data import Dataset


class MyDataSet(Dataset):
    def __init__(self, path, vocab_file, pad_size, tokenizer):
        self.contents = []
        self.labels = []
        # read the data: one "text<TAB>label" sample per line
        with open(path, 'r', encoding='UTF-8') as file:
            for line in file:
                line = line.strip()
                if not line:  # skip blank lines
                    continue
                fields = line.split('\t')
                content, label = fields[0], fields[1]
                self.contents.append(content)
                self.labels.append(label)
        self.pad_size = pad_size
        self.tokenizer = tokenizer
        self.vocab = vocab_file  # despite the name, this is the loaded token -> index dict

    def __len__(self):
        return len(self.contents)

    # returns a single sample
    def __getitem__(self, idx):
        content, label = self.contents[idx], self.labels[idx]
        token = self.tokenizer(content)
        seq_len = len(token)
        # padding is applied uniformly during preprocessing
        if len(token) < self.pad_size:
            # pad sentences shorter than pad_size
            token.extend([PAD] * (self.pad_size - len(token)))
        else:
            # otherwise truncate
            token = token[:self.pad_size]
            seq_len = self.pad_size
        # map tokens to ids, falling back to UNK for out-of-vocabulary tokens
        words_line = [self.vocab.get(word, self.vocab.get(UNK)) for word in token]
        tensor = torch.tensor(words_line, dtype=torch.long)
        return (tensor, seq_len), int(label)
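The pad-or-truncate step in `__getitem__` can be tried in isolation without PyTorch. The sketch below is a minimal stand-alone version; `encode` and the toy vocabulary are hypothetical names introduced for illustration only.

```python
UNK, PAD = '<UNK>', '<PAD>'


def encode(text, vocab, pad_size, tokenizer=list):
    """Hypothetical helper: map text to a fixed-length list of ids plus its true length."""
    tokens = tokenizer(text)
    seq_len = min(len(tokens), pad_size)  # length before padding, capped at pad_size
    if len(tokens) < pad_size:
        tokens = tokens + [PAD] * (pad_size - len(tokens))  # pad short inputs
    else:
        tokens = tokens[:pad_size]  # truncate long inputs
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokens]  # unknown tokens map to UNK
    return ids, seq_len


toy_vocab = {'a': 0, 'b': 1, UNK: 2, PAD: 3}  # made-up vocabulary
short_ids, short_len = encode('abz', toy_vocab, pad_size=5)
long_ids, long_len = encode('ababab', toy_vocab, pad_size=5)
print(short_ids, short_len)
print(long_ids, long_len)
```

Returning the unpadded length alongside the ids lets a sequence model ignore the padded positions if it chooses to.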

Wrapping training and evaluation

# Assumed import sources; EarlyStopping and accDealWith2 are helpers from the repository's utils
import pandas as pd
import torch.nn.functional as F
from sklearn import metrics
from sklearn.metrics import accuracy_score
from torch import nn, optim
from torch.utils.tensorboard import SummaryWriter


def train_model(net, train_loader, val_loader, config, model_name):
    '''
    :param net: model to train
    :param train_loader: DataLoader for the training set
    :param val_loader: DataLoader for the validation set
    :param config: TotalConfig instance (learning_rate, epochs, log_path, metric_name)
    :param model_name: used to name the checkpoint file
    :return: DataFrame with the loss and metrics of every epoch
    '''
    loss_function = nn.CrossEntropyLoss()
    optimizer = optim.Adam(net.parameters(), lr=config.learning_rate)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1, last_epoch=-1)
    early_stopping = EarlyStopping(savepath='./data/{}_checkpoint.pt'.format(model_name), patience=1)

    start_time = time.time()
    print("\n" + "********** start training **********")

    writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))
    columns = ["epoch", "loss", *config.metric_name, "val_loss"] + ['val_' + mn for mn in config.metric_name]
    dfhistory = pd.DataFrame(columns=columns)
    global_step = 0  # counts batches across epochs so TensorBoard points do not overwrite each other

    for epoch in range(1, config.epochs + 1):
        # training
        print("Epoch {0} / {1}".format(epoch, config.epochs))
        step_start = time.time()
        step_num = 0
        train_loss, train_probs, train_y, train_pre = [], [], [], []
        net.train()
        for batch, (x, y) in enumerate(train_loader):
            step_num += 1
            global_step += 1
            optimizer.zero_grad()
            pred_probs = net(x)
            loss = loss_function(pred_probs, y)
            loss.backward()
            optimizer.step()

            batch_pre = torch.argmax(pred_probs, dim=1).tolist()  # predicted class per sample
            train_loss.append(loss.item())
            train_probs.extend(pred_probs.tolist())
            train_y.extend(y.tolist())
            # train_pre.extend(torch.where(pred_probs > 0.5, torch.ones_like(pred_probs), torch.zeros_like(pred_probs)))
            train_pre.extend(batch_pre)

            if step_num % 100 == 0:
                step_train_acc = accuracy_score(y_true=y.tolist(), y_pred=batch_pre)
                writer.add_scalar('loss/train', loss.item(), global_step)
                writer.add_scalar('acc/train', step_train_acc, global_step)

        # validation
        val_loss, val_probs, val_y, val_pre = [], [], [], []
        net.eval()
        with torch.no_grad():
            for batch, (x, y) in enumerate(val_loader):
                pred_probs = net(x)
                loss = loss_function(pred_probs, y)
                val_loss.append(loss.item())
                val_probs.extend(pred_probs.tolist())
                val_y.extend(y.tolist())
                # val_pre.extend(torch.where(pred_probs > 0.5, torch.ones_like(pred_probs), torch.zeros_like(pred_probs)))
                val_pre.extend(torch.argmax(pred_probs, dim=1).tolist())

        # end of epoch: record the log
        epoch_loss, epoch_val_loss = np.mean(train_loss), np.mean(val_loss)
        # train_auc = roc_auc_score(y_true=train_y, y_score=train_probs)
        train_acc = accuracy_score(y_true=train_y, y_pred=train_pre)
        # val_auc = roc_auc_score(y_true=val_y, y_score=val_probs)
        val_acc = accuracy_score(y_true=val_y, y_pred=val_pre)

        # dfhistory.loc[epoch - 1] = (epoch, epoch_loss, train_acc, train_auc, epoch_val_loss, val_acc, val_auc)
        dfhistory.loc[epoch - 1] = (epoch, epoch_loss, train_acc, epoch_val_loss, val_acc)

        step_end = time.time()
        # print("step_num: %s - %.1fs - loss: %.5f accuracy: %.5f auc: %.5f - val_loss: %.5f val_accuracy: %.5f val_auc: %.5f"% (step_num, (step_end - step_start) % 60, epoch_loss, train_acc, train_auc, epoch_val_loss, val_acc, val_auc))
        print("step_num: %s - %.1fs - loss: %.5f accuracy: %.5f - val_loss: %.5f val_accuracy: %.5f"
              % (step_num, step_end - step_start, epoch_loss, train_acc, epoch_val_loss, val_acc))

        # if scheduler is not None:
        #     scheduler.step()
        #
        # if early_stopping is not None:
        #     early_stopping(epoch_val_loss, net)
        #     if early_stopping.early_stop:
        #         print("Early stopping")
        #         break

    writer.close()
    end_time = time.time()
    print('********** end of training run time: {:.0f} min {:.0f} s **********'.format((end_time - start_time) // 60, (end_time - start_time) % 60))
    print()
    return dfhistory
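`train_model` constructs an `EarlyStopping` object, but the class itself is not shown in this post and its call is commented out. The sketch below is an assumption about what such a helper typically does: it tracks the best validation loss and raises a flag once the loss has failed to improve for `patience` consecutive epochs. `SimpleEarlyStopping` is a hypothetical stand-in that omits checkpoint saving, and the loss values are made up.

```python
class SimpleEarlyStopping:
    """Hypothetical stand-in for the EarlyStopping helper; it tracks validation loss only."""

    def __init__(self, patience=1, min_delta=0.0):
        self.patience = patience      # epochs to wait after the last improvement
        self.min_delta = min_delta    # minimum decrease that counts as an improvement
        self.best_loss = None
        self.counter = 0
        self.early_stop = False

    def __call__(self, val_loss):
        if self.best_loss is None or val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss  # improvement: remember it and reset the counter
            self.counter = 0
        else:
            self.counter += 1          # no improvement this epoch
            if self.counter >= self.patience:
                self.early_stop = True


stopper = SimpleEarlyStopping(patience=2)
stopped_at = None
for epoch, val_loss in enumerate([0.90, 0.70, 0.72, 0.71, 0.69], start=1):
    stopper(val_loss)
    if stopper.early_stop:
        stopped_at = epoch
        break
print(stopped_at, stopper.best_loss)
```

A full version would also save the model weights whenever the loss improves, which is presumably what the `savepath` argument in the original call is for.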

def evaluate_model(net, text_loader, config, device='cpu', show=False):
    '''
    Test-set evaluation demo.
    TODO: the commented-out metrics still need adapting to multi-class evaluation.
    :param net: trained model
    :param text_loader: DataLoader for the data to evaluate
    :param config: TotalConfig instance (target_names)
    :param device: currently unused
    :param show: print the metrics and the classification report when True
    :return: mean loss and the accuracy / precision / recall summaries from accDealWith2
    '''
    val_loss, val_probs, val_y, val_pre = [], [], [], []
    net.eval()
    with torch.no_grad():
        for batch, (x, y) in enumerate(text_loader):
            pred_probs = net(x)
            loss = F.cross_entropy(pred_probs, y)
            val_loss.append(loss.item())
            val_probs.extend(pred_probs.tolist())
            val_y.extend(y.tolist())
            val_pre.extend(torch.argmax(pred_probs, dim=1).tolist())

    loss = np.mean(val_loss)  # mean loss over all batches, not only the last one
    acc_condition, precision_condition, recall_condition = accDealWith2(val_y, val_pre)
    # precision = np.around(metrics.precision_score(val_y, val_pre), 4)
    # recall = np.around(metrics.recall_score(val_y, val_pre), 4)
    # accuracy = np.around(metrics.accuracy_score(val_y, val_pre), 4)
    # f1 = np.around(metrics.f1_score(val_y, val_pre), 4)

    if show:
        print('=' * 30)
        print('test eval')
        print(' loss: ', loss)
        # print(' accuracy: ', accuracy)
        # print(' precision: ', precision)
        # print(' recall: ', recall)
        # print(' f1: ', f1)
        print(' ', acc_condition)
        print(' ', precision_condition)
        print(' ', recall_condition)
        report = metrics.classification_report(val_y, val_pre, target_names=config.target_names)
        print(report)

    # return precision, recall, accuracy, f1, loss, acc_condition, precision_condition, recall_condition
    return loss, acc_condition, precision_condition, recall_condition

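def predict(net, content, vocab, tcfig):
    '''
    Single-sample prediction demo.
    :param net: trained model
    :param content: raw text to classify
    :param vocab: token -> index dict
    :param tcfig: TotalConfig instance (char_tokenizer, pad_size)
    :return: the maximum output value and the predicted class index
    '''
    token = tcfig.char_tokenizer(content)
    print(token)
    seq_len = len(token)
    if len(token) < tcfig.pad_size:
        token.extend([PAD] * (tcfig.pad_size - len(token)))
    else:
        token = token[:tcfig.pad_size]
        seq_len = tcfig.pad_size
    words_line = [vocab.get(word, vocab.get(UNK)) for word in token]

    x = (torch.tensor([words_line], dtype=torch.long), seq_len)  # batch of one sample
    print(x)
    net.eval()  # disable dropout and similar layers for inference
    with torch.no_grad():
        out = net(x)
    # torch.max over dim=1 returns (max value, index of the max) for each row
    pre_probs, pre = torch.max(out, dim=1)
    return pre_probs, pre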
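`predict` returns the maximum of the network's output along with the predicted class index. If the model's final layer emits unnormalised logits (an assumption, since the model definitions are not shown in this post), that maximum is a score rather than a probability. A softmax converts logits into probabilities; the pure-Python sketch below shows the computation on made-up logits.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 0.5, -1.0]  # made-up network outputs for three classes
probs = softmax(logits)
pred = max(range(len(probs)), key=probs.__getitem__)  # index of the largest probability
print(probs, pred)
```

In PyTorch the same conversion is available as `torch.softmax(out, dim=1)`; the predicted class is unchanged because softmax preserves the ordering of the scores.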

run.py

# coding:utf-8
# @Time: 2022/9/8 10:46 AM
# @File: run.py
'''
run model
'''
import torch
from torch.autograd import Variable
from torch import nn, optim
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader
from torchkeras import summary
import os
import numpy as np
import pickle as pkl
from tqdm import tqdm
import time
from datetime import timedelta
from importlib import import_module
import argparse

from utils import *

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
# device = torch.device('cuda')
# gpus = [0, 1]

np.random.seed(1)
torch.manual_seed(1)
torch.cuda.manual_seed_all(1)
torch.backends.cudnn.deterministic = True  # make results reproducible across runs

class TotalConfig():
    '''
    total config
    :param train_dir: training set
    :param val_dir: validation set
    :param vocab_dir: vocabulary file
    :param sougou_pretrain_dir: Sogou News pretrained embeddings
    :param filename_trimmed_dir: where the vectors extracted from the Sogou embeddings are saved
    :param device: single GPU / CPU / single-machine multi-GPU
    :param gpus: GPU ids for single-machine multi-GPU
    :param emb_way: embedding initialisation. Sogou News: embedding_SougouNews.npz, Tencent: embedding_Tencent.npz, random initialisation: random
    :param word_tokenizer: build the vocabulary per word (words in the dataset are separated by spaces)
    :param char_tokenizer: build the vocabulary per character
    :param emb_dim: embedding dimension
    :param pad_size: length every sentence is processed to (pad short ones, truncate long ones)
    :param num_classes: number of classes
    :param n_vocab: vocabulary size, assigned at run time
    :param epochs: number of training epochs
    :param batch_size: batch size
    :param learning_rate: learning rate
    '''

    def __init__(self, model_name='TextCNN', doka_device=False, gpus=None):
        '''
        :param doka_device: True: one machine with multiple GPUs, False: single GPU / CPU
        :param gpus: GPU ids for the multi-GPU case
        '''
        self.train_dir = "./data/train_demo.txt"
        self.val_dir = "./data/val_demo.txt"
        self.vocab_dir = "./data/vocab_demo.pkl"
        self.sougou_pretrain_dir = "./data/sgns.sogou.char"
        self.filename_trimmed_dir = "./data/embedding_SougouNews_demo"
        with open('./data/class_demo.txt', encoding='utf-8') as f:
            self.target_names = [x.strip() for x in f]

        self.model_name = model_name
        self.save_path = './saved_dict/{}.ckpt'
        self.log_path = './log/{}'

        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') \
            if doka_device is False else torch.device('cuda')
        self.gpus = gpus

        self.emb_way = 'embedding_SougouNews.npz'
        # self.emb_way = 'random'
        self.word_tokenizer = lambda x: x.split(' ')
        self.char_tokenizer = lambda x: [y for y in x]
        self.emb_dim = 300
        self.pad_size = 32  # seq_len
        self.num_classes = 5
        self.n_vocab = 0

        self.epochs = 3
        self.batch_size = 2
        self.learning_rate = 1e-3
        self.metric_name = ['accuracy']

def get_dataloader(train_dir, val_dir, vocab_dir, pad_size, tokenizer, batch_size):
    start_time = time.time()
    print("Loading DataLoader...")

    # load the vocabulary
    with open(vocab_dir, 'rb') as f:
        vocab = pkl.load(f)

    train_set = MyDataSet(path=train_dir, vocab_file=vocab, pad_size=pad_size, tokenizer=tokenizer)
    val_set = MyDataSet(path=val_dir, vocab_file=vocab, pad_size=pad_size, tokenizer=tokenizer)

    train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
    val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)

    time_dif = get_time_dif(start_time)
    print("Loading DataLoader Time usage:", time_dif)
    return train_loader, val_loader

if __name__ == '__main__':
    print()
    tcfig = TotalConfig()

    # build the vocabulary
    vocab, n_vocab = get_vocab(tcfig.train_dir, tokenizer=tcfig.char_tokenizer, vocab_dir=tcfig.vocab_dir)
    tcfig.n_vocab = n_vocab

    # extract the pretrained vectors
    # get_pre_emb(vocab, tcfig.emb_dim, tcfig.sougou_pretrain_dir, tcfig.filename_trimmed_dir)

    # DataLoader
    train_loader, val_loader = get_dataloader(tcfig.train_dir, tcfig.val_dir, tcfig.vocab_dir, tcfig.pad_size, tcfig.char_tokenizer, tcfig.batch_size)

    # init model
    model_name = 'TextRNN'
    tcfig.model_name = model_name
    tcfig.save_path = tcfig.save_path.format(model_name)
    tcfig.log_path = tcfig.log_path.format(model_name)

    obj = import_module('models.' + model_name)
    config = obj.Config(emb_way=tcfig.emb_way, emb_dim=tcfig.emb_dim, num_classes=tcfig.num_classes, n_vocab=tcfig.n_vocab)
    net = obj.TextRNN(config=config)
    print(net)

    # train
    # dfhistory = train_model(net=net, train_loader=train_loader, val_loader=val_loader, config=tcfig, model_name=model_name)
    #
    # eval
    # evaluate_model(net=net, text_loader=val_loader, config=tcfig, show=True)

    # test: load the saved weights and classify a single headline
    # torch.save(net.state_dict(), tcfig.save_path)
    net.load_state_dict(torch.load(tcfig.save_path, map_location=tcfig.device))
    content = '细节显品质 热门全能型实用本本推荐'
    pre_probs, pre = predict(net=net, content=content, vocab=vocab, tcfig=tcfig)
    print(pre_probs, pre)
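`run.py` imports the model module by name with `import_module` but then refers to the class as `obj.TextRNN` directly, so switching models means editing two places. One possible way to keep them in sync is to look the class up with `getattr`. The sketch below demonstrates the pattern on a standard-library module so that it runs without the repository's `models` package; `load_class` is a hypothetical helper name.

```python
from importlib import import_module


def load_class(module_name, class_name):
    """Import module_name and return the attribute called class_name."""
    module = import_module(module_name)
    return getattr(module, class_name)


# stand-in for something like load_class('models.' + model_name, model_name)
OrderedDict = load_class('collections', 'OrderedDict')
d = OrderedDict(a=1)
print(type(d).__name__)
```

This only works if each model file names its class after the module, which appears to hold for `TextRNN` here but is an assumption for the other models.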

Full code on GitHub

https://github.com/WGS-note/TextClassification

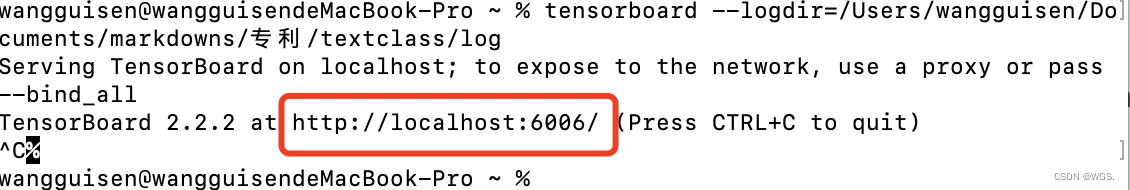

Using TensorBoard

tensorboard --logdir=/Users/wangguisen/Documents/markdowns/专利/textclass/log

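Note: this log directory is the one written during training. Once TensorBoard is running, open the address it prints (http://localhost:6006 by default) to view the page.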