1. System Resource Initialization

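Ceph provides three kinds of storage:

- Block storage: presents a device that behaves like an ordinary hard disk, giving users a "disk".

- File system storage: a shared file system similar to NFS, giving users a shared folder.

- Object storage: similar to a cloud drive such as Baidu Netdisk; it requires a dedicated client.

Ceph is also a distributed storage system and is very flexible. To expand capacity, simply add servers to the Ceph cluster. Ceph stores data as multiple replicas: in production, each piece of data should be kept in at least 3 copies, and Ceph's default is also three replicas.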
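As a rough illustration of what three-way replication means for capacity, the sketch below divides raw capacity by the replica count. The function name `usable_gib` is hypothetical, and the estimate deliberately ignores real-world overhead such as metadata and the safety margin Ceph keeps before a cluster is considered full.

```shell
#!/usr/bin/env bash
# Rough usable capacity of a replicated pool: raw capacity / replica count.
# Integer arithmetic only; overhead and full-ratio margins are ignored.
usable_gib() {
    local raw_gib=$1 replicas=$2
    echo $(( raw_gib / replicas ))
}

# 300 GiB of raw disk with 3 replicas
usable_gib 300 3   # prints 100
```

With three 100 GiB disks, for example, roughly 100 GiB of data fits under the default replica count.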

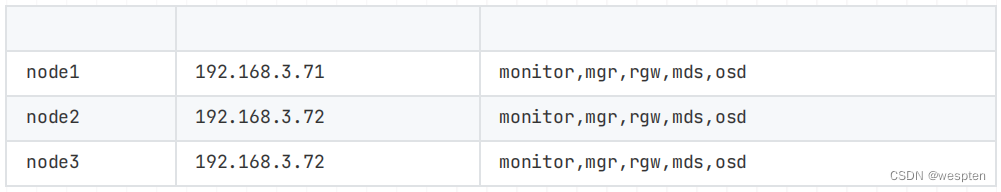

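Node planning:

Ceph components:

- Ceph Mon (monitor): handles critical management tasks for the cluster; 3 or 5 nodes are generally needed. Ceph Mon maintains the master copy of the cluster map and the current state of the storage cluster. Monitors require strong consistency so that they agree on the cluster state. They maintain the maps that describe the cluster, including the monitor map, OSD map, placement group (PG) map, and CRUSH map.

- Ceph OSD daemon: the OSD is the component that actually stores data. It also uses the CPU, memory, and network of the Ceph node to perform data replication, erasure coding, rebalancing, recovery, monitoring, and reporting. A storage node runs one osd process for each disk it uses for storage.

- mgr: the Ceph manager daemon; provides a unified entry point to the outside.

- mds: the Ceph Metadata Server (MDS); stores metadata for the Ceph file system. It is only needed when file system storage is used.

- rgw: the object storage gateway. It provides an API for software that accesses Ceph, so that clients can reach the cluster through standard object storage APIs.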
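The recommendation of 3 or 5 monitors follows from majority voting: monitors need more than half of their members to agree on the cluster state. The sketch below works through that arithmetic; the function name `mon_quorum` is hypothetical and nothing here talks to a real cluster.

```shell
#!/usr/bin/env bash
# Majority quorum size for n monitors and the number of monitor
# failures the cluster can tolerate while still forming a quorum.
mon_quorum() {
    local n=$1
    local quorum=$(( n / 2 + 1 ))
    echo "mons=$n quorum=$quorum tolerated_failures=$(( n - quorum ))"
}

for n in 3 5; do
    mon_quorum "$n"
done
```

An even count adds no extra tolerance (4 monitors still tolerate only 1 failure), which is why odd numbers are the usual choice.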

Official Ceph documentation:

Architecture Guide, Red Hat Ceph Storage 5 | Red Hat Customer Portal

Set the hostnames (run the matching command on each node):

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

Configure hosts on every node:

cat >> /etc/hosts <<EOF

192.168.3.71 node1

192.168.3.72 node2

192.168.3.73 node3

EOF

Basic configuration:

systemctl disable --now firewalld

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

Time synchronization:

yum -y install chrony

sed -i "/^server/d" /etc/chrony.conf;sed -i "/# Please/a\server ntp1.aliyun.com iburst" /etc/chrony.conf

# On the primary time server, also add this

sed -i "/^#allow/a\allow 192.168.3.0/24" /etc/chrony.conf

# Secondary nodes sync to the primary (not done for now)

sed -i "/^server/d" /etc/chrony.conf;sed -i "/# Please/a\server 192.168.3.71 iburst" /etc/chrony.conf

# Start the service and check

systemctl enable chronyd --now;chronyc sources

Add the yum repositories:

[epel]

name=epel

baseurl=https://mirrors.tuna.tsinghua.edu.cn/epel/8/Everything/x86_64/

gpgcheck=0

cat > /etc/yum.repos.d/ceph.repo<<END

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-octopus/el8/\$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-octopus/el8/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-octopus/el8/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

END

Passwordless SSH configuration:

ssh-keygen

ssh-copy-id root@localhost

scp -r .ssh root@node2:~/

scp -r .ssh root@node3:~/

Install Docker (on all nodes, including any added later):

# Configure the Docker repository

cat > /etc/yum.repos.d/docker.repo <<END

[docker-ce-stable]

name=docker-ce-stable

baseurl=https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/8/x86_64/stable/

gpgcheck=0

END

# Install Docker

yum -y install docker-ce

# Configure a registry mirror

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://puwtocmd.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl restart docker

2. cephadm Installation and Configuration

Install the required packages:

yum install epel* -y

yum install -y ceph-mon ceph-osd ceph-mds ceph-radosgw

yum install -y python3

Install cephadm:

mkdir my-cluster

cd my-cluster

# If the download is slow, consider using a proxy

curl --silent --remote-name --location https://github.com/ceph/ceph/raw/quincy/src/cephadm/cephadm

chmod +x cephadm

# Alternatively, cephadm can be installed with yum/dnf from the [Ceph-noarch] repository

dnf install --assumeyes cephadm

# Run which to confirm that cephadm is now on the PATH

which cephadm

# Returns:

/usr/sbin/cephadm

Bootstrap a new cluster:

cephadm bootstrap --mon-ip 192.168.3.71

Creating directory /etc/ceph for ceph.conf

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: d775a908-89c9-11ed-b579-500000010000

Verifying IP 192.168.3.71 port 3300 ...

Verifying IP 192.168.3.71 port 6789 ...

Mon IP `192.168.3.71` is in CIDR network `192.168.3.0/24`

Mon IP `192.168.3.71` is in CIDR network `192.168.3.0/24`

Internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image quay.io/ceph/ceph:v17...

Ceph version: ceph version 17.2.5 (98318ae89f1a893a6ded3a640405cdbb33e08757) quincy (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 192.168.3.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host node1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://node1:8443/

User: admin

Password: hktdc38ogw

Enabling client.admin keyring and conf on hosts with "admin" label

Saving cluster configuration to /var/lib/ceph/d775a908-89c9-11ed-b579-500000010000/config directory

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/sbin/cephadm shell --fsid d775a908-89c9-11ed-b579-500000010000 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/sbin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

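Bootstrap complete.

Add hosts to the cluster:

Adding each new host to the cluster takes two steps:

Install the cluster's public SSH key in the root user's authorized_keys file on the new host: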

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node2

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node3

# Tell Ceph that the new node is part of the cluster

cephadm shell -- ceph orch host add node2

cephadm shell -- ceph orch host add node3

[ceph: root@node1 /]# ceph orch host ls

HOST ADDR LABELS STATUS

node1 192.168.3.71 _admin

node2 192.168.3.72

node3 192.168.3.73

3 hosts in cluster

Convert disks into OSDs:

# Option 1: tell Ceph to consume any available and unused storage device:

[ceph: root@node1 /]# ceph orch apply osd --all-available-devices

Scheduled osd.all-available-devices update...

# Option 2: create an OSD from a specific device on a specific host:

# ceph orch daemon add osd <host>:<device-path>

[ceph: root@node1 /]# ceph orch daemon add osd node2:/dev/vdb

Created osd(s) 0 on host 'node2'

View the containers:

[root@node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/ceph/ceph v17 cc65afd6173a 2 months ago 1.36GB

quay.io/ceph/ceph-grafana 8.3.5 dad864ee21e9 9 months ago 558MB

quay.io/prometheus/prometheus v2.33.4 514e6a882f6e 10 months ago 204MB

quay.io/prometheus/node-exporter v1.3.1 1dbe0e931976 13 months ago 20.9MB

quay.io/prometheus/alertmanager v0.23.0 ba2b418f427c 16 months ago 57.5MB

[root@node1 ~]#

[root@node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

41a980ad57b6 quay.io/ceph/ceph-grafana:8.3.5 "/bin/sh -c 'grafana…" 32 seconds ago Up 31 seconds ceph-976e04fe-9315-11ed-a275-e29e49e9189c-grafana-node1

c1d92377e2f2 quay.io/prometheus/alertmanager:v0.23.0 "/bin/alertmanager -…" 33 seconds ago Up 32 seconds ceph-976e04fe-9315-11ed-a275-e29e49e9189c-alertmanager-node1

9262faff37be quay.io/prometheus/prometheus:v2.33.4 "/bin/prometheus --c…" 42 seconds ago Up 41 seconds ceph-976e04fe-9315-11ed-a275-e29e49e9189c-prometheus-node1

2601411f95a6 quay.io/prometheus/node-exporter:v1.3.1 "/bin/node_exporter …" About a minute ago Up About a minute ceph-976e04fe-9315-11ed-a275-e29e49e9189c-node-exporter-node1

a6ca018a7620 quay.io/ceph/ceph "/usr/bin/ceph-crash…" 2 minutes ago Up 2 minutes ceph-976e04fe-9315-11ed-a275-e29e49e9189c-crash-node1

f9e9de110612 quay.io/ceph/ceph:v17 "/usr/bin/ceph-mgr -…" 3 minutes ago Up 3 minutes ceph-976e04fe-9315-11ed-a275-e29e49e9189c-mgr-node1-svfnsm

cac707c88b83 quay.io/ceph/ceph:v17 "/usr/bin/ceph-mon -…" 3 minutes ago Up 3 minutes ceph-976e04fe-9315-11ed-a275-e29e49e9189c-mon-node1

[root@node1 ~]#

3. Using Ceph

Common commands:

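- ceph orch ls: list the services running in the cluster

- ceph orch host ls: list the hosts in the cluster

- ceph orch ps: list detailed information about the daemons (containers) in the cluster

- ceph orch apply mon --placement="3 node1 node2 node3": adjust the number and placement of a component

- ceph orch ps --daemon-type rgw: show only one component type, selected with --daemon-type

- ceph orch host label add node1 mon: add a label to a host

- ceph orch apply mon label:mon: tell cephadm to deploy mons by label; afterwards only hosts carrying the mon label become mons, although mons that are already running are not shut down immediately

- ceph orch device ls: list the storage devices in the cluster

For example, to deploy a second monitor on newhost1 at IP address 10.1.2.123 and a third monitor on newhost2 in network 10.1.2.0/24:

- ceph orch apply mon --unmanaged: disable automatic mon deployment

- ceph orch daemon add mon newhost1:10.1.2.123

- ceph orch daemon add mon newhost2:10.1.2.0/24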
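The label-based placement from the list above can be wrapped in a small script. This is only a sketch: the helper names `run` and `label_mon_hosts` and the `DRY_RUN` switch are assumptions made for illustration, and by default the script prints the commands rather than executing them.

```shell
#!/usr/bin/env bash
# Label a set of hosts with "mon" and switch mon placement to that label.
# DRY_RUN=1 (the default here) prints each command instead of running it.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

label_mon_hosts() {
    local host
    for host in "$@"; do
        run ceph orch host label add "$host" mon
    done
    run ceph orch apply mon label:mon
}

label_mon_hosts node1 node2 node3
```

Setting `DRY_RUN=0` on a node with a working `ceph` CLI would execute the same commands for real.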

Using the cephadm shell:

[root@node1 ~]# cephadm shell # enter the containerized shell

Inferring fsid 976e04fe-9315-11ed-a275-e29e49e9189c

Inferring config /var/lib/ceph/976e04fe-9315-11ed-a275-e29e49e9189c/mon.node1/config

Using ceph image with id 'cc65afd6173a' and tag 'v17' created on 2021-01-30 07:41:41 +0800 CST

quay.io/ceph/ceph@sha256:0560b16bec6e84345f29fb6693cd2430884e6efff16a95d5bdd0bb06d7661c45

[ceph: root@node1 /]#

[ceph: root@node1 /]#

[ceph: root@node1 /]#

[ceph: root@node1 /]# ceph -s

cluster:

id: 976e04fe-9315-11ed-a275-e29e49e9189c

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum node1 (age 4m)

mgr: node1.svfnsm(active, since 2m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[ceph: root@node1 /]#

[ceph: root@node1 /]# ceph orch ps # list the daemons currently running in the cluster (including other nodes)

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

alertmanager.node1 node1 *:9093,9094 running (2m) 2m ago 4m 15.1M - ba2b418f427c c1d92377e2f2

crash.node1 node1 running (4m) 2m ago 4m 6676k - 17.2.5 cc65afd6173a a6ca018a7620

grafana.node1 node1 *:3000 running (2m) 2m ago 3m 39.1M - 8.3.5 dad864ee21e9 41a980ad57b6

mgr.node1.svfnsm node1 *:9283 running (5m) 2m ago 5m 426M - 17.2.5 cc65afd6173a f9e9de110612

mon.node1 node1 running (5m) 2m ago 5m 29.0M 2048M 17.2.5 cc65afd6173a cac707c88b83

node-exporter.node1 node1 *:9100 running (3m) 2m ago 3m 13.2M - 1dbe0e931976 2601411f95a6

prometheus.node1 node1 *:9095 running (3m) 2m ago 3m 34.4M - 514e6a882f6e 9262faff37be

[ceph: root@node1 /]#

[ceph: root@node1 /]#

[ceph: root@node1 /]# ceph orch ps --daemon-type mon # show the status of one component

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

mon.node1 node1 running (5m) 2m ago 5m 29.0M 2048M 17.2.5 cc65afd6173a cac707c88b83

[ceph: root@node1 /]#

[ceph: root@node1 /]# exit # leave the cephadm shell

exit

[root@node1 ~]#

# Second way to run ceph commands: pass them directly to cephadm shell

[root@node1 ~]# cephadm shell -- ceph -s

Inferring fsid 976e04fe-9315-11ed-a275-e29e49e9189c

Inferring config /var/lib/ceph/976e04fe-9315-11ed-a275-e29e49e9189c/mon.node1/config

Using ceph image with id 'cc65afd6173a' and tag 'v17' created on 2021-01-30 07:41:41 +0800 CST

quay.io/ceph/ceph@sha256:0560b16bec6e84345f29fb6693cd2430884e6efff16a95d5bdd0bb06d7661c45

cluster:

id: 976e04fe-9315-11ed-a275-e29e49e9189c

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum node1 (age 6m)

mgr: node1.svfnsm(active, since 4m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[root@node1 ~]#
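Both invocation styles shown above can be hidden behind one helper. The sketch below picks the native `ceph` CLI when it exists on the host (it is provided by the ceph-common package) and otherwise falls back to `cephadm shell -- ceph`. The function names `ceph_prefix` and `ceph_cmd` are hypothetical.

```shell
#!/usr/bin/env bash
# Choose how to invoke the ceph CLI on this host.
ceph_prefix() {
    if command -v ceph >/dev/null 2>&1; then
        echo "ceph"
    else
        echo "cephadm shell -- ceph"
    fi
}

# Run a ceph subcommand through whichever CLI is available.
ceph_cmd() {
    # The prefix is intentionally left unquoted so it splits into words.
    $(ceph_prefix) "$@"
}

echo "Would run: $(ceph_prefix) -s"
```

On a node where the cluster is reachable, `ceph_cmd -s` or `ceph_cmd orch ps` then behaves the same either way.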

Install the ceph-common package:

[root@node1 ~]# cephadm install ceph-common

Installing packages ['ceph-common']...

[root@node1 ~]#

[root@node1 ~]# ceph -v

ceph version 17.2.5 (98318ae89f1a893a6ded3a640405cdbb33e08757) quincy (stable)

[root@node1 ~]#

# Enable Ceph components on the other nodes (distribute the cluster's public SSH key first)

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node2

ssh-copy-id -f -i /etc/ceph/ceph.pub root@node3

Create mon and mgr:

ceph orch host add node2

ceph orch host add node3

# List the nodes currently managed by the cluster

[root@node1 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

node1 192.168.3.71 _admin

node2 192.168.3.72

node3 192.168.3.73

3 hosts in cluster

[root@node1 ~]#

# By default a Ceph cluster typically allows 5 mons and 2 mgrs; this can be changed manually with ceph orch apply mon --placement="3 node1 node2 node3"

[root@node1 ~]# ceph orch apply mon --placement="3 node1 node2 node3"

Scheduled mon update...

[root@node1 ~]#

[root@node1 ~]# ceph orch apply mgr --placement="3 node1 node2 node3"

Scheduled mgr update...

[root@node1 ~]#

[root@node1 ~]# ceph orch ls

NAME PORTS RUNNING REFRESHED AGE PLACEMENT

alertmanager ?:9093,9094 1/1 30s ago 17m count:1

crash 3/3 4m ago 17m *

grafana ?:3000 1/1 30s ago 17m count:1

mgr 3/3 4m ago 46s node1;node2;node3;count:3

mon 3/3 4m ago 118s node1;node2;node3;count:3

node-exporter ?:9100 3/3 4m ago 17m *

prometheus ?:9095 1/1 30s ago 17m count:1

[root@node1 ~]#

Create OSDs:

[root@node1 ~]# ceph orch daemon add osd node1:/dev/sdb

Created osd(s) 0 on host 'node1'

[root@node1 ~]# ceph orch daemon add osd node2:/dev/sdb

Created osd(s) 1 on host 'node2'

[root@node1 ~]# ceph orch daemon add osd node3:/dev/sdb

Created osd(s) 2 on host 'node3'

[root@node1 ~]#
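When many hosts are involved, the OSD commands above can be generated from a list of `<host>:<device-path>` pairs and reviewed before running. This is a sketch only: `add_osd_specs` is a hypothetical helper, it just prints commands, and its format check merely requires a `/dev/` path after the colon.

```shell
#!/usr/bin/env bash
# Print one "ceph orch daemon add osd" command per <host>:<device> spec.
# Malformed specs are reported on stderr and make the function fail.
add_osd_specs() {
    local spec
    for spec in "$@"; do
        case "$spec" in
            ?*:/dev/?*)
                echo "ceph orch daemon add osd $spec"
                ;;
            *)
                echo "skipping malformed spec: $spec" >&2
                return 1
                ;;
        esac
    done
}

add_osd_specs node1:/dev/sdb node2:/dev/sdb node3:/dev/sdb
```

After checking the printed commands, they can be pasted into a shell on the admin node.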

Create MDS:

# First create the pools and the CephFS file system; if no PG count is given, it is adjusted automatically by default

[root@node1 ~]# ceph osd pool create cephfs_data

pool 'cephfs_data' created

[root@node1 ~]# ceph osd pool create cephfs_metadata

pool 'cephfs_metadata' created

[root@node1 ~]# ceph fs new cephfs cephfs_metadata cephfs_data

new fs with metadata pool 3 and data pool 2

[root@node1 ~]#

# Enable the mds component. cephfs: the file system name; --placement: how many mds daemons the cluster needs, followed by the host names

[root@node1 ~]# ceph orch apply mds cephfs --placement="3 node1 node2 node3"

Scheduled mds.cephfs update...

[root@node1 ~]#

# Check that the mds containers have started on each node; ceph orch ps can also be used to see the containers running on a given node

[root@node1 ~]# ceph orch ps --daemon-type mds

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

mds.cephfs.node1.zgcgrw node1 running (52s) 44s ago 52s 17.0M - 17.2.5 cc65afd6173a aba28ef97b9a

mds.cephfs.node2.vvpuyk node2 running (51s) 45s ago 51s 14.1M - 17.2.5 cc65afd6173a 940a019d4c75

mds.cephfs.node3.afnozf node3 running (54s) 45s ago 54s 14.2M - 17.2.5 cc65afd6173a bd17d6414aa9

[root@node1 ~]#

[root@node1 ~]#

Create RGW:

# First create a realm

[root@node1 ~]# radosgw-admin realm create --rgw-realm=myorg --default

{

"id": "a6607d08-ac44-45f0-95b0-5435acddfba2",

"name": "myorg",

"current_period": "16769237-0ed5-4fad-8822-abc444292d0b",

"epoch": 1

}

[root@node1 ~]#

# Create a zonegroup

[root@node1 ~]# radosgw-admin zonegroup create --rgw-zonegroup=default --master --default

{

"id": "4d978fe1-b158-4b3a-93f7-87fbb31f6e7a",

"name": "default",

"api_name": "default",

"is_master": "true",

"endpoints": [],

"hostnames": [],

"hostnames_s3website": [],

"master_zone": "",

"zones": [],

"placement_targets": [],

"default_placement": "",

"realm_id": "a6607d08-ac44-45f0-95b0-5435acddfba2",

"sync_policy": {

"groups": []

}

}

[root@node1 ~]#

# Create a zone

[root@node1 ~]# radosgw-admin zone create --rgw-zonegroup=default --rgw-zone=cn-east-1 --master --default

{

"id": "5ac7f118-a69c-4dec-b174-f8432e7115b7",

"name": "cn-east-1",

"domain_root": "cn-east-1.rgw.meta:root",

"control_pool": "cn-east-1.rgw.control",

"gc_pool": "cn-east-1.rgw.log:gc",

"lc_pool": "cn-east-1.rgw.log:lc",

"log_pool": "cn-east-1.rgw.log",

"intent_log_pool": "cn-east-1.rgw.log:intent",

"usage_log_pool": "cn-east-1.rgw.log:usage",

"roles_pool": "cn-east-1.rgw.meta:roles",

"reshard_pool": "cn-east-1.rgw.log:reshard",

"user_keys_pool": "cn-east-1.rgw.meta:users.keys",

"user_email_pool": "cn-east-1.rgw.meta:users.email",

"user_swift_pool": "cn-east-1.rgw.meta:users.swift",

"user_uid_pool": "cn-east-1.rgw.meta:users.uid",

"otp_pool": "cn-east-1.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "cn-east-1.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "cn-east-1.rgw.buckets.data"

}

},

"data_extra_pool": "cn-east-1.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"realm_id": "a6607d08-ac44-45f0-95b0-5435acddfba2",

"notif_pool": "cn-east-1.rgw.log:notif"

}

[root@node1 ~]#

# Deploy radosgw daemons for the given realm and zone

[root@node1 ~]# ceph orch apply rgw myorg cn-east-1 --placement="3 node1 node2 node3"

Scheduled rgw.myorg update...

[root@node1 ~]#

# Verify that the rgw containers have started on each node

[root@node1 ~]# ceph orch ps --daemon-type rgw

NAME HOST PORTS STATUS REFRESHED AGE MEM USE MEM LIM VERSION IMAGE ID CONTAINER ID

rgw.myorg.node1.tzzauo node1 *:80 running (60s) 50s ago 60s 18.6M - 17.2.5 cc65afd6173a 2ce31e5c9d35

rgw.myorg.node2.zxwpfj node2 *:80 running (61s) 51s ago 61s 20.0M - 17.2.5 cc65afd6173a a334e346ae5c

rgw.myorg.node3.bvsydw node3 *:80 running (58s) 51s ago 58s 18.6M - 17.2.5 cc65afd6173a 97b09ba01821

[root@node1 ~]#

Install the ceph-common package on all nodes:

scp /etc/yum.repos.d/ceph.repo node2:/etc/yum.repos.d/ # copy the Ceph repo file from the primary node to node2

scp /etc/yum.repos.d/ceph.repo node3:/etc/yum.repos.d/ # copy the Ceph repo file from the primary node to node3

yum -y install ceph-common # run on each node; ceph-common provides the ceph command and creates the /etc/ceph directory

scp /etc/ceph/ceph.conf node2:/etc/ceph/ # copy ceph.conf to node2

scp /etc/ceph/ceph.conf node3:/etc/ceph/ # copy ceph.conf to node3

scp /etc/ceph/ceph.client.admin.keyring node2:/etc/ceph/ # copy the admin keyring to node2

scp /etc/ceph/ceph.client.admin.keyring node3:/etc/ceph/ # copy the admin keyring to node3

Test:

[root@node3 ~]# ceph -s

cluster:

id: 976e04fe-9315-11ed-a275-e29e49e9189c

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node2,node3 (age 17m)

mgr: node1.svfnsm(active, since 27m), standbys: node2.zuetkd, node3.vntnlf

mds: 1/1 daemons up, 2 standby

osd: 3 osds: 3 up (since 8m), 3 in (since 8m)

rgw: 3 daemons active (3 hosts, 1 zones)

data:

volumes: 1/1 healthy

pools: 7 pools, 177 pgs

objects: 226 objects, 585 KiB

usage: 108 MiB used, 300 GiB / 300 GiB avail

pgs: 177 active+clean

[root@node3 ~]#

Access the web interfaces:

https://192.168.3.71:8443

http://192.168.3.72:9095/

https://192.168.3.73:3000/

User: admin

Password: dsvi6yiat7
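The manual `ceph -s` check used earlier can also be turned into a scripted health gate. The sketch below classifies a status string by its leading health keyword; `health_rc` is a hypothetical helper, and the sample strings are taken from the outputs in this guide rather than from a live cluster.

```shell
#!/usr/bin/env bash
# Classify a health string by its leading keyword.
# Returns 0 for HEALTH_OK, 1 for HEALTH_WARN, 2 for anything else.
health_rc() {
    case "$1" in
        HEALTH_OK*)   return 0 ;;
        HEALTH_WARN*) return 1 ;;
        *)            return 2 ;;
    esac
}

# On a live cluster, pass "$(ceph health)" instead of these samples.
for status in "HEALTH_OK" "HEALTH_WARN OSD count 0 < osd_pool_default_size 3"; do
    if health_rc "$status"; then
        echo "healthy: $status"
    else
        echo "needs attention: $status"
    fi
done
```

A non-zero return code makes the helper easy to use in cron jobs or deployment scripts that should stop when the cluster is not healthy.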