声明:本文为本人在学习过程中,遇到的问题进行整理,若有不正确之处,还请大牛不吝赐教。

本文就 TensorFlow 构建卷积网络后,对大规模数据的训练方法进行整理。

众所周知,在训练卷积网络模型的过程中,为了保证模型的准确率,大量的数据是必须的。TensorFlow 中也提供了几种数据加载的方式,最简单最暴力的方式便是将所有的数据一次性加载到内存中进行训练,但如果数据量过大,以CoCo数据集为例,有将近14G的数据量(Coco数据集的Github下载地址:https://github.com/pdollar/coco),显然,将数据全部填入到内存中进行训练,是不现实的;且卷积过程中,需要消耗大量的内存,以本人练习过程中,构建的模型为例,使用了三层卷积层,两个全连接层,其中,每次卷积使用8个卷积核,一次卷积将在原图像的基础上,再生成24幅图像,且正式的模型构建中,需要的卷积核,卷积层数要比练习过程中,多的多。

本人在训练过程中,使用之前的做项目用过的人脸库进行训练卷积模型,图像为180*180大小的灰度图像,共26个类,每个类取100个样本进行训练,导致内存直接被爆,整个系统卡死的情况;为了解决上述问题,TensorFlow中提供了小批量样本进行训练的方法,可以一次只加载小批量的样本数据进行训练,最终,在不会因内存耗尽的情况下,对所有的样本进行训练。并且,TensorFlow中可实现数据加载与训练的异步处理,加快了程序处理的效率。其中,最常用的方式是从TFRecord文件中加载数据,这也是TensorFlow加载数据的标准格式。

但是,网上大部分的案例和例程中,只是对TFRecord格式的文件进行加载的讲解,并没有训练的例程,且网上有些例程并不正确。本人在编写程序的过程中走了不少弯路。现在将程序代码进行发表,一是作为自己以后的参考,二是希望能有人指点,三是希望后来的初学者少走弯路。

本文以本人练习过程中的人脸识别程序为例,共选取了其中的5类,每类选取10个样本,其中部分样本图片如下所示:

代码如下所示:

import os

import tensorflow as tf

import numpy as np

import cv2

import matplotlib.pyplot as plt

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

Class_Nums = 5 # 共有 5 个种类

Sample_Nums = 10 # 每类取 10 个样本,共 50 个样本

def load_images():

img_list = []

for i in range(Class_Nums):

path = 'F:\Python WorkSpace\FaceRecognize\\train\%d\\' % (i+1)

for j in range(Sample_Nums):

file_name = '%03d.jpg' % (j+1)

file = path + file_name

image = cv2.imread(file, cv2.IMREAD_GRAYSCALE)

img_list.append(image)

return img_list

def get_accuracy(logits, targets):

batch_prediction = np.argmax(logits, axis=1)

num_correct = np.sum(np.equal(batch_prediction, targets))

return 100.* num_correct / batch_prediction.shape[0]

# 以下代码用于实现卷积网络

def weight_init(shape, name):

return tf.get_variable(name, shape, initializer=tf.random_normal_initializer(mean=0.0, stddev=0.1))

def bias_init(shape, name):

return tf.get_variable(name, shape, initializer=tf.constant_initializer(0.0))

def conv2d(input_data, conv_w):

return tf.nn.conv2d(input_data, conv_w, strides=[1, 1, 1, 1], padding='SAME')

def max_pool(input_data, size):

return tf.nn.max_pool(input_data, ksize=[1, size, size, 1], strides=[1, size, size, 1], padding='SAME')

def conv_net(input_data):

with tf.name_scope('conv1'):

w_conv1 = weight_init([3, 3, 1, 8], 'conv1_w') # 卷积核大小是 3*3 输入是 1 通道,输出为 8 通道,即提取8特征

b_conv1 = bias_init([8], 'conv1_b')

h_conv1 = tf.nn.relu(tf.nn.bias_add(conv2d(input_data, w_conv1), b_conv1))

bn1 = tf.contrib.layers.batch_norm(h_conv1)

h_pool1 = max_pool(bn1, 2)

with tf.name_scope('conv2'):

w_conv2 = weight_init([5, 5, 8, 8], 'conv2_w') # 卷积核大小是 5*5 输入是64,输出为 32

b_conv2 = bias_init([8], 'conv2_b')

h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)

bn2 = tf.contrib.layers.batch_norm(h_conv2)

h_pool2 = max_pool(bn2, 2)

with tf.name_scope('conv3'):

w_conv3 = weight_init([5, 5, 8, 8], 'conv3_w') # 卷积核大小是 5*5 输入是8,输出为 8

b_conv3 = bias_init([8], 'conv3_b')

h_conv3 = tf.nn.relu(conv2d(h_pool2, w_conv3) + b_conv3)

bn3 = tf.contrib.layers.batch_norm(h_conv3)

h_pool3 = max_pool(bn3, 2)

with tf.name_scope('fc1'):

w_fc1 = weight_init([23 * 23 * 8, 120], 'fc1_w') # 三层卷积后得到的图像大小为 22 * 22,共 50 个样本

b_fc1 = bias_init([120], 'fc1_b')

h_fc1 = tf.nn.relu(tf.matmul(tf.reshape(h_pool3, [-1, 23 * 23 * 8]), w_fc1) + b_fc1)

with tf.name_scope('fc2'):

w_fc2 = weight_init([120, Class_Nums], 'fc2_w') # 将 130 个特征映射到 26 个类别上

b_fc2 = bias_init([Class_Nums], 'fc2_b')

h_fc2 = tf.nn.softmax(tf.matmul(h_fc1, w_fc2) + b_fc2)

return h_fc2

# 生成 tfrecord 文件

def gen_tfrecord(path):

tf_writer = tf.python_io.TFRecordWriter(path)

for i in range(Class_Nums):

path = 'F:\Python WorkSpace\FaceRecognize\\train\%d\\' % (i + 1)

label_data = i

for j in range(Sample_Nums):

file_name = '%03d.jpg' % (j + 1)

file = path + file_name

image = cv2.imread(file, cv2.IMREAD_GRAYSCALE)

image_bytes= image.tostring()

height = image.shape[0]

width = image.shape[1]

channels = 1

example = tf.train.Example()

feature = example.features.feature

feature['height'].int64_list.value.append(height)

feature['width'].int64_list.value.append(width)

feature['channels'].int64_list.value.append(channels)

feature['image_data'].bytes_list.value.append(image_bytes)

feature['label'].int64_list.value.append(label_data)

tf_writer.write(example.SerializeToString())

tf_writer.close()

def read_and_decode(filename_queue):

reader = tf.TFRecordReader()

_, serialized_example = reader.read(filename_queue)

features = tf.parse_single_example(

serialized_example,

features={

'height': tf.FixedLenFeature([], tf.int64),

'width': tf.FixedLenFeature([], tf.int64),

'channels': tf.FixedLenFeature([], tf.int64),

'image_data':tf.FixedLenFeature([], tf.string),

'label': tf.FixedLenFeature([], tf.int64)

}

)

image = tf.decode_raw(features['image_data'], tf.uint8)

image = tf.reshape(image, [180, 180, 1])

image = tf.cast(image, tf.float32)

label = tf.cast(features['label'], tf.int32)

return image, label

# 该函数用于统计 TFRecord 文件中的样本数量(总数)

def total_sample(file_name):

sample_nums = 0

for record in tf.python_io.tf_record_iterator(file_name):

sample_nums += 1

return sample_nums

def train_data():

batch_size = 10

batch_num = total_sample('Faces.tfrecord') / batch_size

filename_queue = tf.train.string_input_producer(['Faces.tfrecord'], shuffle=False)

image, label = read_and_decode(filename_queue)

image_train, label_train = tf.train.batch([image, label], batch_size=batch_size, num_threads=1, capacity=32)

#image_train, label_train = tf.train.shuffle_batch([image, label], batch_size, num_threads=1, capacity=5+batch_size*3, min_after_dequeue=5)

train_labels_one_hot = tf.one_hot(label_train, 5, on_value=1.0, off_value=0.0)

x_data = tf.placeholder(tf.float32, shape=[None, 180, 180, 1])

y_target = tf.placeholder(tf.float32, shape=[None, Class_Nums])

global_step = tf.get_variable('global_step', [], initializer=tf.constant_initializer(0), trainable=False, dtype=tf.int32)

model_output = conv_net(x_data)

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_target, logits=model_output))

optimizer = tf.train.AdamOptimizer(10e-5).minimize(loss, global_step=global_step)

train_correct_prediction = tf.equal(tf.argmax(model_output, 1), tf.argmax(y_target, 1))

train_accuracy = tf.reduce_mean(tf.cast(train_correct_prediction, tf.float32))

init = tf.global_variables_initializer()

session = tf.Session()

session.run(init)

coord = tf.train.Coordinator()

threads = tf.train.start_queue_runners(sess=session, coord=coord)

loss_list = []

acc_list = []

try:

for i in range(100):

cost_avg = 0

acc_avg = 0

for j in range(int(batch_num)):

image_batch, label_batch = session.run([image_train, train_labels_one_hot])

_,step, acc, cost = session.run([optimizer, global_step,train_accuracy, loss], feed_dict={x_data: image_batch, y_target:label_batch})

acc_avg += (acc/batch_num)

cost_avg += (cost/batch_num)

print("step %d, training accuracy %0.10f loss %0.10f" % (i, acc_avg, cost_avg))

loss_list.append(cost_avg)

acc_list.append(acc_avg)

except tf.errors.OutOfRangeError:

print('Done training --epoch limit reached')

finally:

coord.request_stop()

coord.join(threads)

session.close()

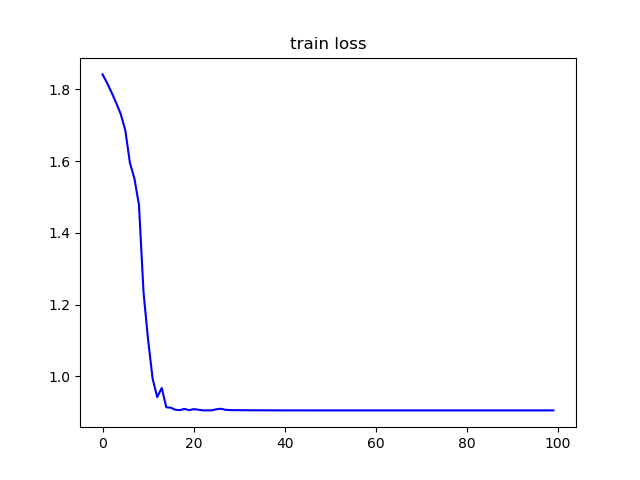

plt.title('train loss')

plt.plot(range(0, 100), loss_list, 'b-')

plt.show()

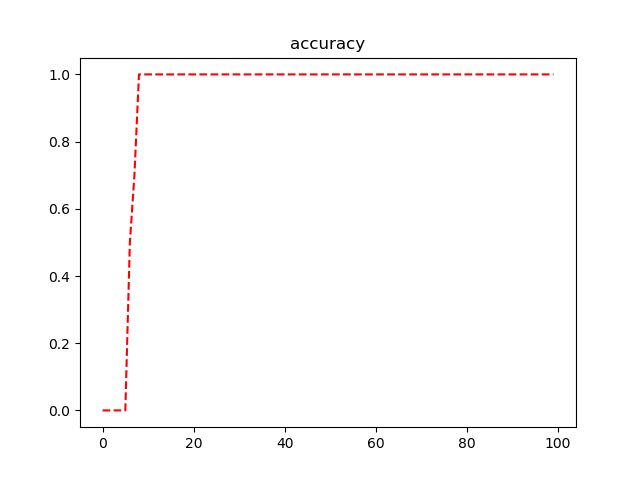

plt.title('accuracy')

plt.plot(range(0, 100), acc_list, 'r--')

plt.show()

def main():

gen_tfrecord('Faces.tfrecord')

print('生成 .tfrecord 文件成功')

train_data()

if __name__ == '__main__':

main()

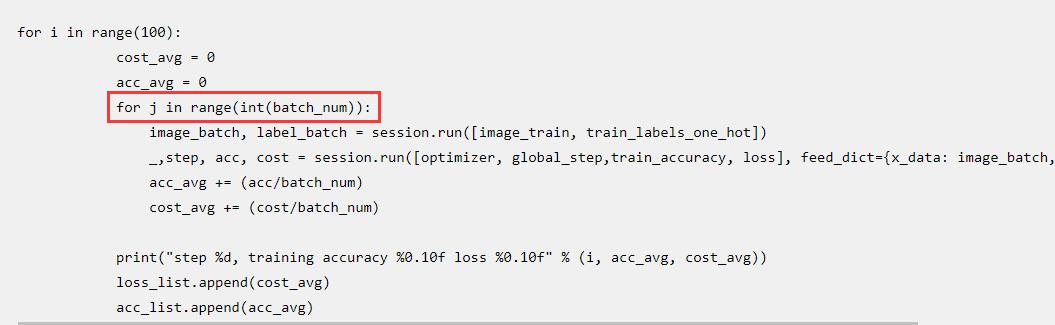

如上图所示,红色方框中标记出的代码,尤为重要。根据个人的理解,在训练过程中,需要将所有样本训练完成一次之后,才能再进行下一次的迭代。且批量训练过程中,得到的准确率与损失值为小批量样本的平均值,所以,计算时,需要除以总的批量数,即该样本按现有的batch_size共分了batch_num批;

同时,在批量处理的过程中,一定要注意,数据的批量归一化(BN)处理,如果不进行归一化处理,将会导致梯度收敛过慢或不收敛甚至是梯度弥散。

如上代码所示,训练结果如下图所示:

因为只是练习,所以,只做了100次迭代,且样本数据量太小,容易造成过拟合等现象。