Activation Function Study Notes (2022.2.28)

Software and environment: Matlab + PyCharm + Python + TensorFlow + Keras + PyTorch

1. Introduction to Activation Functions

1.1 Activation Function

An activation function is a mapping y = f(x) from x to y. It is used mainly in the neurons of deep learning models and neural networks, where it maps a neuron's input to its output.
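As a minimal sketch of this mapping for a single neuron: the neuron forms a weighted sum of its inputs and passes it through the activation. The inputs, weights, bias, and the helper name `neuron` below are made-up illustrations, not part of any library.

```python
import math

def neuron(inputs, weights, bias, activation):
    """Compute activation(w . x + b) for one neuron."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Made-up values: the weighted sum is 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0
y = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0, activation=sigmoid)
print(y)
```

Swapping `sigmoid` for another function changes the neuron's output range without touching the weighted sum.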

1.2 PyTorch Activation Function API

The API documentation on the PyTorch website covers activation functions under both torch.nn and torch.nn.functional, including nn.Softmin, nn.Softmax, nn.Softmax2d, nn.LogSoftmax, nn.AdaptiveLogSoftmaxWithLoss, nn.GLU, nn.Threshold, nn.Tanhshrink, nn.Tanh, nn.Softsign, nn.Softshrink, nn.Softplus, nn.Mish, nn.SiLU, nn.Sigmoid, nn.GELU, nn.CELU, nn.SELU, nn.RReLU, nn.ReLU6, nn.ReLU, nn.PReLU, nn.MultiheadAttention, nn.LogSigmoid, nn.LeakyReLU, nn.Hardswish, nn.Hardtanh, nn.Hardsigmoid, nn.Hardshrink, nn.ELU, and others.

1.3 TensorFlow + Keras Activation Function API

Between them, the tf.keras.activations API pages on the Keras and TensorFlow websites list the following names: deserialize, elu, exponential, gelu, get, hard_sigmoid, linear, relu, selu, serialize, sigmoid, softmax, softplus, softsign, swish, and tanh. Of these, deserialize, get, and serialize are utility functions rather than activations.

2. Common Activation Functions (Formulas + Curves)

2.1 Linear Activation Functions

2.1.1 Linear

$$y = x$$

import tensorflow as tf

a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

b = tf.keras.activations.linear(a)

print(b)

Output:

tf.Tensor([-3. -1. 0. 1. 3.], shape=(5,), dtype=float32)

2.2 Nonlinear Activation Functions

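Unlike linear activation functions, nonlinear activation functions introduce nonlinearity into a neural network. Without them, stacking layers amounts to multiplying linear matrices together, which collapses into a single linear map no matter how many layers are used.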
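To illustrate why the nonlinearity matters, here is a minimal plain-Python sketch. The 2x2 matrices `W1` and `W2`, the input `x`, and the helper functions are made-up values for illustration only. It shows that two stacked linear layers equal one linear layer, while inserting a ReLU between them breaks that equivalence.

```python
# Illustrative only: W1, W2 and x are made-up values, not taken from a real model.

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def relu(v):
    """Element-wise max(t, 0)."""
    return [max(t, 0.0) for t in v]

W1 = [[1.0, -2.0], [0.5, 1.0]]
W2 = [[2.0, 1.0], [-1.0, 3.0]]
x = [1.0, 2.0]

# Two linear layers applied one after the other ...
two_linear = matvec(W2, matvec(W1, x))
# ... give the same result as the single linear layer W2 * W1.
one_linear = matvec(matmul(W2, W1), x)

# With a ReLU in between, the result differs from that single linear map.
with_relu = matvec(W2, relu(matvec(W1, x)))

print(two_linear, one_linear, with_relu)
```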

2.2.1 Exponential

$$\mathrm{exponential}(x) = e^{x}$$

import tensorflow as tf

a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

b = tf.keras.activations.exponential(a)

print(b)

Output:

tf.Tensor([ 0.04978707 0.36787945 1. 2.7182817 20.085537 ], shape=(5,), dtype=float32)

2.2.2 Hard_sigmoid

$$\mathrm{Hard\_sigmoid}(x)=\begin{cases} 0 & x<-2.5 \\ 0.2x+0.5 & -2.5\le x\le 2.5 \\ 1 & x>2.5 \end{cases}$$

import tensorflow as tf

a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

b = tf.keras.activations.hard_sigmoid(a)

print(b)

Output:

tf.Tensor([0. 0.3 0.5 0.7 1. ], shape=(5,), dtype=float32)
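As a cross-check of the piecewise formula, the sketch below evaluates it in plain Python on the same five inputs. The name `hard_sigmoid_ref` is hypothetical. Other library versions may define the hard sigmoid with a different slope and cutoff, so treat this as specific to the formula given in these notes.

```python
def hard_sigmoid_ref(x):
    """Piecewise-linear hard sigmoid as given by the formula in these notes."""
    if x < -2.5:
        return 0.0
    if x > 2.5:
        return 1.0
    return 0.2 * x + 0.5

values = [hard_sigmoid_ref(v) for v in [-3.0, -1.0, 0.0, 1.0, 3.0]]
print(values)
```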

2.2.3 Sigmoid

$$\mathrm{Sigmoid}(x) = \frac{1}{1+e^{-x}}$$

import tensorflow as tf

print('sigmoid(x) = 1 / (1 + exp(-x))')

a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

b = tf.keras.activations.sigmoid(a).numpy()

print(b)

Output:

sigmoid(x) = 1 / (1 + exp(-x))

[2.0611535e-09 2.6894143e-01 5.0000000e-01 7.3105860e-01 1.0000000e+00]

2.2.4 Swish

$$\mathrm{Swish}(x) = x\cdot \mathrm{Sigmoid}(x) = \frac{x}{1+e^{-x}}$$

import tensorflow as tf

a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

b = tf.keras.activations.swish(a)

print(b)

Output:

tf.Tensor([-4.1223068e-08 -2.6894143e-01 0.0000000e+00 7.3105860e-01 2.0000000e+01], shape=(5,), dtype=float32)

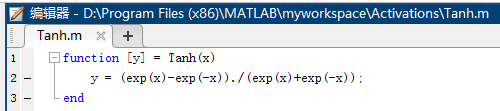

2.2.5 Tanh

Hyperbolic tangent activation function

$$\mathrm{Tanh}(x) = \frac{e^{x}-e^{-x}}{e^{x}+e^{-x}}$$

import tensorflow as tf

a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

b = tf.keras.activations.tanh(a)

print(b)

Output:

tf.Tensor([-0.9950548 -0.7615942 0. 0.7615942 0.9950548], shape=(5,), dtype=float32)

2.2.6 Softmax

Softmax converts a vector of values to a probability distribution.

$$\mathrm{Softmax}(x_{i}) = \frac{e^{x_i}}{\sum_{j=1}^{n} e^{x_j}}$$

$$\mathrm{Softmax}(\vec{x}) = [\mathrm{Softmax}(x_{1}),\ldots,\mathrm{Softmax}(x_{i}),\ldots,\mathrm{Softmax}(x_{n})]^{T}$$

import tensorflow as tf

inputs = tf.random.normal(shape=(3,4))

print(inputs)

outputs = tf.keras.activations.softmax(inputs)

print(outputs)

print(tf.reduce_sum(outputs[0,:]))

Output:

tf.Tensor([[-1.4463423 -1.2136649 0.37711483 -1.5163935 ]

[ 1.1458701 0.69421154 0.5825411 -0.6992794 ]

[ 0.90473056 0.9367949 0.5104403 0.40904504]], shape=(3, 4), dtype=float32)

tf.Tensor([[0.10652403 0.13443059 0.6597281 0.09931726]

[0.4230326 0.26929048 0.240837 0.06683987]

[0.3015777 0.31140423 0.20331109 0.183707 ]], shape=(3, 4), dtype=float32)

tf.Tensor(1.0, shape=(), dtype=float32)

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.Softmax()

output = layer(x)

print(list(output.numpy()))

print(tf.reduce_sum(output))

Output:

[0.0056533027, 0.041772567, 0.11354961, 0.8390245]

tf.Tensor(0.99999994, shape=(), dtype=float32)

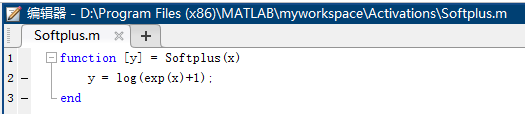
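The softmax formula can also be sketched in plain Python. The version below subtracts the maximum before exponentiating, a common stability trick that leaves the result unchanged because the common factor cancels in the ratio. The name `softmax_ref` is hypothetical and this is not how TensorFlow implements it internally.

```python
import math

def softmax_ref(xs):
    """Softmax of a list of floats, shifted by max(xs) for numerical stability."""
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax_ref([-3.0, -1.0, 0.0, 2.0])
print(probs, sum(probs))

# The shift matters for large inputs: math.exp(1000.0) on its own would overflow.
big = softmax_ref([1000.0, 1001.0, 1002.0])
print(big)
```

The first call uses the same input as the `tf.keras.layers.Softmax` example, so the values can be compared directly with the output listed there.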

2.2.7 Softplus

$$\mathrm{Softplus}(x) = \log(e^{x}+1)$$

import tensorflow as tf

a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

b = tf.keras.activations.softplus(a)

print(b)

Output:

tf.Tensor([2.0611535e-09 3.1326169e-01 6.9314718e-01 1.3132616e+00 2.0000000e+01], shape=(5,), dtype=float32)

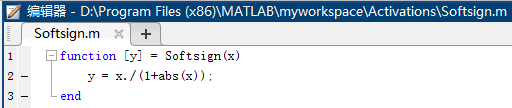
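Evaluating log(e^x + 1) literally overflows for large x. A minimal sketch of an equivalent form, max(x, 0) + log(1 + e^{-|x|}), is shown below; `softplus_ref` is a hypothetical helper name, and the rewrite is a standard identity rather than anything taken from the TensorFlow source.

```python
import math

def softplus_ref(x):
    """Softplus log(1 + e^x), computed as max(x, 0) + log1p(e^{-|x|})."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

values = [softplus_ref(v) for v in [-20.0, -1.0, 0.0, 1.0, 20.0]]
print(values)

# A large input is handled without overflow.
print(softplus_ref(1000.0))
```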

2.2.8 Softsign

$$\mathrm{Softsign}(x) = \frac{x}{1+|x|}$$

import tensorflow as tf

a = tf.constant([-1.0, 0.0, 1.0], dtype=tf.float32)

b = tf.keras.activations.softsign(a)

print(b)

Output:

tf.Tensor([-0.5 0. 0.5], shape=(3,), dtype=float32)

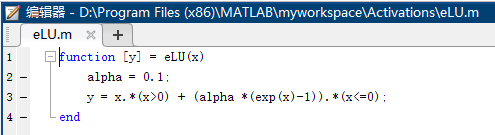

2.2.9 eLU

The exponential linear unit (ELU) activation function:

alpha: Scale for the negative factor.

$$\mathrm{eLU}(x)=\begin{cases} x & x\ge 0 \\ \alpha \cdot (e^{x}-1) & x<0 \end{cases} \qquad (\alpha > 0)$$

import tensorflow as tf

a = tf.constant([-4.0,-2.0, 0.0,2.0,4.0], dtype = tf.float32)

b = tf.keras.activations.elu(a)

print(b)

Output:

tf.Tensor([-0.9816844 -0.86466473 0. 2. 4.], shape=(5,), dtype=float32)

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.ELU()

output = layer(x)

print(list(output.numpy()))

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.ELU(alpha = 1.5)

output = layer(x)

print(list(output.numpy()))

Output:

[-0.95021296, -0.63212055, 0.0, 2.0]

[-1.4253194, -0.9481808, 0.0, 2.0]

2.2.10 GeLU

the Gaussian error linear unit (GELU) activation function

$$\mathrm{GeLU}(x) = x\cdot\Phi(x) = \frac{x}{2}\left[1+\mathrm{erf}\left(\frac{x}{\sqrt{2}}\right)\right]$$

$$\mathrm{GeLU}(x) \approx \frac{x}{2}\left(1+\tanh\left[\sqrt{\frac{2}{\pi}}\left(x+0.044715x^{3}\right)\right]\right)$$

The gaussian error linear activation: 0.5 * x * (1 + tanh(sqrt(2 / pi) * (x + 0.044715 * x^3))) if approximate is True or x * P(X <= x) = 0.5 * x * (1 + erf(x / sqrt(2))), where P(X) ~ N(0, 1), if approximate is False.

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 1.0, 3.0], dtype=tf.float32)

y = tf.keras.activations.gelu(x)

y.numpy()

y = tf.keras.activations.gelu(x, approximate=True)

y.numpy()
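The console log in Section 2.3 shows that `tf.keras.activations.gelu` was not available in the TensorFlow version used for these notes, so no output is listed here. As a stand-in, the two formulas can be sketched with the Python standard library. The names `gelu_exact` and `gelu_tanh` are hypothetical, and this sketch is not the Keras implementation.

```python
import math

def gelu_exact(x):
    """GELU via the Gaussian CDF: 0.5 * x * (1 + erf(x / sqrt(2)))."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x):
    """Tanh approximation: 0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 x^3)))."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

# Compare the exact form and the approximation on the same inputs as above.
for v in [-3.0, -1.0, 0.0, 1.0, 3.0]:
    print(v, gelu_exact(v), gelu_tanh(v))
```

On these inputs the two forms agree closely but not exactly, which is why the approximation is written with an approximately-equal sign.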

2.2.11 PReLU

Parametric Rectified Linear Unit.

$$\mathrm{PReLU}(x)=\begin{cases} \alpha \cdot x & x<0 \\ x & x\ge 0 \end{cases}$$

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.PReLU()

output = layer(x)

print(list(output.numpy()))

Output:

[0.0, 0.0, 0.0, 2.0]

2.2.12 ReLU

Variant 1 (default)

The rectified linear unit activation function: max(x, 0)

$$\mathrm{ReLU}(x)=\begin{cases} x & x\ge 0 \\ 0 & x<0 \end{cases}$$

import tensorflow as tf

print('relu(x) = max(x, 0)')

x = tf.constant([-10, -5, 0.0, 5, 10], dtype=tf.float32)

y = tf.keras.activations.relu(x).numpy()

print(y)

y = tf.keras.activations.relu(x, alpha=0.5).numpy()

print(y)

y = tf.keras.activations.relu(x, max_value=5.).numpy()

print(y)

y = tf.keras.activations.relu(x, threshold=5.).numpy()

print(y)

Variant 2

Rectified Linear Unit activation function.

$$\mathrm{ReLU}(x)=\begin{cases} \mathrm{max\_value} & x\ge \mathrm{max\_value} \\ x & \mathrm{threshold}\le x< \mathrm{max\_value} \\ \mathrm{negative\_slope}\cdot (x-\mathrm{threshold}) & x< \mathrm{threshold} \end{cases}$$

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.ReLU()

output = layer(x)

print(list(output.numpy()))

layer = tf.keras.layers.ReLU(max_value=1.0)

output = layer(x)

print(list(output.numpy()))

layer = tf.keras.layers.ReLU(negative_slope=1.0)

output = layer(x)

print(list(output.numpy()))

layer = tf.keras.layers.ReLU(threshold=1.5)

output = layer(x)

print(list(output.numpy()))

Output:

[0.0, 0.0, 0.0, 2.0]

[0.0, 0.0, 0.0, 1.0]

[-3.0, -1.0, 0.0, 2.0]

[-0.0, -0.0, 0.0, 2.0]

max_value: Float >= 0. Maximum activation value. Default to None, which means unlimited.

negative_slope: Float >= 0. Negative slope coefficient. Default to 0.

threshold: Float >= 0. Threshold value for thresholded activation. Default to 0.
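The three parameters can be read as a single piecewise rule. The sketch below spells that rule out in plain Python for the same inputs as the layer example. The name `relu_general` is hypothetical, and the behaviour exactly at `x == threshold` is an assumption taken from the piecewise definition, so the library may differ at that boundary.

```python
def relu_general(x, max_value=None, negative_slope=0.0, threshold=0.0):
    """Three-parameter ReLU following the piecewise definition in these notes."""
    if max_value is not None and x >= max_value:
        return max_value          # clip from above
    if x >= threshold:
        return x                  # pass-through region
    return negative_slope * (x - threshold)  # leaky region below the threshold

xs = [-3.0, -1.0, 0.0, 2.0]
print([relu_general(v) for v in xs])
print([relu_general(v, max_value=1.0) for v in xs])
print([relu_general(v, negative_slope=1.0) for v in xs])
print([relu_general(v, threshold=1.5) for v in xs])
```

Up to the sign of zero, the four printed lists should line up with the four layer outputs listed above.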

2.2.13 SeLU

$$\mathrm{SeLU}(x)=\begin{cases} \mathrm{scale} \cdot x & x>0 \\ \mathrm{scale} \cdot \alpha \cdot (e^{x}-1) & x\le 0 \end{cases}$$

where $\alpha \approx 1.67326324$ and $\mathrm{scale} \approx 1.05070098$ (to full precision, $\alpha = 1.6732632423543772848170429916717$ and $\mathrm{scale} = 1.0507009873554804934193349852946$).

import tensorflow as tf

a = tf.constant([-4.0,-2.0, 0.0,2.0,4.0], dtype = tf.float32)

b = tf.keras.activations.selu(a)

print(b)

Output:

tf.Tensor([-1.7258986 -1.5201665 0. 2.101402 4.202804 ], shape=(5,), dtype=float32)
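The listed values can be reproduced from the formula and the two constants alone. The sketch below does so in plain Python; `selu_ref`, `ALPHA`, and `SCALE` are hypothetical names, with the constants truncated to double precision.

```python
import math

ALPHA = 1.6732632423543772  # alpha from the formula, truncated to double precision
SCALE = 1.0507009873554805  # scale from the formula, truncated to double precision

def selu_ref(x):
    """SELU: scale * x for x > 0, scale * alpha * (e^x - 1) otherwise."""
    if x > 0:
        return SCALE * x
    return SCALE * ALPHA * (math.exp(x) - 1.0)

values = [selu_ref(v) for v in [-4.0, -2.0, 0.0, 2.0, 4.0]]
print(values)
```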

2.2.14 LeakyReLU

alpha: Float >= 0. Negative slope coefficient. Default to 0.3.

$$\mathrm{LeakyReLU}(x)=\begin{cases} \alpha \cdot x & x<0 \\ x & x\ge 0 \end{cases}$$

import tensorflow as tf

layer = tf.keras.layers.LeakyReLU()

output = layer([-3.0, -1.0, 0.0, 2.0])

print(list(output.numpy()))

layer = tf.keras.layers.LeakyReLU(alpha=0.1)

output = layer([-3.0, -1.0, 0.0, 2.0])

print(list(output.numpy()))

Output:

[-0.90000004, -0.3, 0.0, 2.0]

[-0.3, -0.1, 0.0, 2.0]

2.2.15 ThresholdReLU

$$\mathrm{ThresholdReLU}(x)=\begin{cases} x & x>\theta \\ 0 & x\le\theta \end{cases}$$

import tensorflow as tf

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.ThresholdedReLU()

output = layer(x)

print(output)

x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

layer = tf.keras.layers.ThresholdedReLU(theta=2.0)

output = layer(x)

print(list(output.numpy()))

Output:

tf.Tensor([-0. -0. 0. 2.], shape=(4,), dtype=float32)

[-0.0, -0.0, 0.0, 0.0]

2.3 Examples of Calling Activation Functions via tensorflow.keras

Python Console execution log:

2022-02-28 15:39:49.131279: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cudart64_101.dll

import tensorflow as tf

import tensorflow as tf

... a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

... b = tf.keras.activations.linear(a)

... print(b)

2022-02-28 16:03:14.273093: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library nvcuda.dll

2022-02-28 16:03:14.693210: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1716] Found device 0 with properties:

pciBusID: 0000:01:00.0 name: GeForce GTX 1050 Ti computeCapability: 6.1

coreClock: 1.62GHz coreCount: 6 deviceMemorySize: 4.00GiB deviceMemoryBandwidth: 104.43GiB/s

2022-02-28 16:03:14.693808: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cudart64_101.dll

2022-02-28 16:03:15.480102: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cublas64_10.dll

2022-02-28 16:03:15.838580: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cufft64_10.dll

2022-02-28 16:03:15.882722: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library curand64_10.dll

2022-02-28 16:03:16.317725: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cusolver64_10.dll

2022-02-28 16:03:16.580472: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cusparse64_10.dll

2022-02-28 16:03:16.997519: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cudnn64_7.dll

2022-02-28 16:03:17.007124: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1858] Adding visible gpu devices: 0

2022-02-28 16:03:17.150775: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN)to use the following CPU instructions in performance-critical operations: AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-02-28 16:03:17.496633: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x265569f8df0 initialized for platform Host (this does not guarantee that XLA will be used). Devices:

2022-02-28 16:03:17.497183: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version

2022-02-28 16:03:17.530457: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1716] Found device 0 with properties:

pciBusID: 0000:01:00.0 name: GeForce GTX 1050 Ti computeCapability: 6.1

coreClock: 1.62GHz coreCount: 6 deviceMemorySize: 4.00GiB deviceMemoryBandwidth: 104.43GiB/s

2022-02-28 16:03:17.532243: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cudart64_101.dll

2022-02-28 16:03:17.533180: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cublas64_10.dll

2022-02-28 16:03:17.534105: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cufft64_10.dll

2022-02-28 16:03:17.534959: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library curand64_10.dll

2022-02-28 16:03:17.535379: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cusolver64_10.dll

2022-02-28 16:03:17.535577: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cusparse64_10.dll

2022-02-28 16:03:17.535778: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library cudnn64_7.dll

2022-02-28 16:03:17.536006: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1858] Adding visible gpu devices: 0

2022-02-28 16:03:22.696972: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1257] Device interconnect StreamExecutor with strength 1 edge matrix:

2022-02-28 16:03:22.697300: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1263] 0

2022-02-28 16:03:22.697470: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1276] 0: N

2022-02-28 16:03:22.751106: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1402] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 2983 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1050 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)

2022-02-28 16:03:22.848184: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x2651942c3e0 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:

2022-02-28 16:03:22.849428: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): GeForce GTX 1050 Ti, Compute Capability 6.1

tf.Tensor([-3. -1. 0. 1. 3.], shape=(5,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

... b = tf.keras.activations.linear(a)

... print(b)

tf.Tensor([-3. -1. 0. 1. 3.], shape=(5,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

... b = tf.keras.activations.exponential(a)

... print(b)

tf.Tensor([ 0.04978707 0.36787945 1. 2.7182817 20.085537 ], shape=(5,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

... b = tf.keras.activations.hard_sigmoid(a)

... print(b)

tf.Tensor([0. 0.3 0.5 0.7 1. ], shape=(5,), dtype=float32)

import tensorflow as tf

... print('sigmoid(x) = 1 / (1 + exp(-x))')

... a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

... b = tf.keras.activations.sigmoid(a).numpy()

... print(b)

sigmoid(x) = 1 / (1 + exp(-x))

[2.0611535e-09 2.6894143e-01 5.0000000e-01 7.3105860e-01 1.0000000e+00]

import tensorflow as tf

... a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

... b = tf.keras.activations.swish(a)

... print(b)

tf.Tensor(

[-4.1223068e-08 -2.6894143e-01 0.0000000e+00 7.3105860e-01

2.0000000e+01], shape=(5,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-3.0,-1.0, 0.0,1.0,3.0], dtype = tf.float32)

... b = tf.keras.activations.tanh(a)

... print(b)

tf.Tensor([-0.9950548 -0.7615942 0. 0.7615942 0.9950548], shape=(5,), dtype=float32)

import tensorflow as tf

... inputs = tf.random.normal(shape=(3,4))

... print(inputs)

... outputs = tf.keras.activations.softmax(inputs)

... print(outputs)

... print(tf.reduce_sum(outputs[0,:]))

tf.Tensor(

[[-0.8800855 0.3194108 0.04307271 -0.48217866]

[ 1.8659052 1.2268127 0.28712898 1.509416 ]

[ 2.414535 0.6283009 0.6566324 1.4816595 ]], shape=(3, 4), dtype=float32)

tf.Tensor(

[[0.1201291 0.3986418 0.3023923 0.17883682]

[0.4108246 0.21682139 0.08472326 0.28763065]

[0.57689524 0.09668192 0.09946025 0.22696257]], shape=(3, 4), dtype=float32)

tf.Tensor(1.0, shape=(), dtype=float32)

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.Softmax()

... output = layer(x)

... list(output.numpy())

... print(tf.reduce_sum(output))

tf.Tensor(0.99999994, shape=(), dtype=float32)

import tensorflow as tf

... a = tf.constant([-20, -1.0, 0.0, 1.0, 20], dtype = tf.float32)

... b = tf.keras.activations.softplus(a)

... print(b)

tf.Tensor([2.0611535e-09 3.1326169e-01 6.9314718e-01 1.3132616e+00 2.0000000e+01], shape=(5,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-1.0, 0.0, 1.0], dtype=tf.float32)

... b = tf.keras.activations.softsign(a)

... print(b)

tf.Tensor([-0.5 0. 0.5], shape=(3,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-4.0,-2.0, 0.0,2.0,4.0], dtype = tf.float32)

... b = tf.keras.activations.elu(a)

... print(b)

tf.Tensor([-0.9816844 -0.86466473 0. 2. 4. ], shape=(5,), dtype=float32)

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.ELU()

... output = layer(x)

... list(output.numpy())

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.ELU(alpha = 1.5)

... output = layer(x)

... list(output.numpy())

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 1.0, 3.0], dtype=tf.float32)

... y = tf.keras.activations.gelu(x)

... y.numpy()

... y = tf.keras.activations.gelu(x, approximate=True)

... y.numpy()

Traceback (most recent call last):

File "<input>", line 3, in <module>

AttributeError: module 'tensorflow.keras.activations' has no attribute 'gelu'

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.PReLU()

... output = layer(x)

... list(output.numpy())

print(output)

tf.Tensor([0. 0. 0. 2.], shape=(4,), dtype=float32)

import tensorflow as tf

... print('relu(x) = max(x, 0)')

... x = tf.constant([-10, -5, 0.0, 5, 10], dtype=tf.float32)

... y = tf.keras.activations.relu(x).numpy()

... print(y)

... y = tf.keras.activations.relu(x, alpha=0.5).numpy()

... print(y)

... y = tf.keras.activations.relu(x, max_value=5.).numpy()

... print(y)

... tf.keras.activations.relu(x, threshold=5.).numpy()

... print(y)

relu(x) = max(x, 0)

[ 0. 0. 0. 5. 10.]

[-5. -2.5 0. 5. 10. ]

[0. 0. 0. 5. 5.]

[0. 0. 0. 5. 5.]

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.ReLU()

... output = layer(x)

... list(output.numpy())

... layer = tf.keras.layers.ReLU(max_value=1.0)

... output = layer(x)

... list(output.numpy())

... layer = tf.keras.layers.ReLU(negative_slope=1.0)

... output = layer(x)

... list(output.numpy())

... layer = tf.keras.layers.ReLU(threshold=1.5)

... output = layer(x)

... list(output.numpy())

print(output)

tf.Tensor([-0. -0. 0. 2.], shape=(4,), dtype=float32)

import tensorflow as tf

... a = tf.constant([-4.0,-2.0, 0.0,2.0,4.0], dtype = tf.float32)

... b = tf.keras.activations.selu(a)

... print(b)

tf.Tensor([-1.7258986 -1.5201665 0. 2.101402 4.202804 ], shape=(5,), dtype=float32)

import tensorflow as tf

... layer = tf.keras.layers.LeakyReLU()

... output = layer([-3.0, -1.0, 0.0, 2.0])

... list(output.numpy())

... layer = tf.keras.layers.LeakyReLU(alpha=0.1)

... output = layer([-3.0, -1.0, 0.0, 2.0])

... list(output.numpy())

import tensorflow as tf

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.ThresholdedReLU()

... output = layer(x)

... print(output)

... x = tf.constant([-3.0, -1.0, 0.0, 2.0], dtype = tf.float32)

... layer = tf.keras.layers.ThresholdedReLU(theta=2.0)

... output = layer(x)

... list(output.numpy())

tf.Tensor([-0. -0. 0. 2.], shape=(4,), dtype=float32)

2.4 Examples of Calling Activation Functions in PyTorch

Python Console execution log:

import torch

... from torch import nn

...

... m = nn.Sigmoid()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-0.0109, 0.6313])

tensor([0.4973, 0.6528])

m = nn.Tanh()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.2257, -1.2074])

tensor([ 0.2219, -0.8359])

m = nn.LogSigmoid()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.5643, -0.8422])

tensor([-0.4503, -1.2004])

m = nn.Softplus()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-0.3040, -0.5406])

tensor([0.5526, 0.4590])

m = nn.Softsign()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-0.6471, -0.8597])

tensor([-0.3929, -0.4623])

m = nn.Softmax(dim=1)

... input = torch.randn(2, 3)

... print(input)

... output = m(input)

... print(output)

tensor([[-0.1806, 0.3937, 2.6605],

[ 0.4659, -0.2937, -0.2631]])

tensor([[0.0502, 0.0892, 0.8606],

[0.5127, 0.2399, 0.2474]])

m = nn.ELU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-1.9608, -0.4370])

tensor([-0.8593, -0.3540])

m = nn.CELU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.0709, -1.7760])

tensor([ 0.0709, -0.8307])

m = nn.GELU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-1.5844, -0.7257])

tensor([-0.0896, -0.1698])

m = nn.PReLU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.5621, -1.0673])

tensor([ 0.5621, -0.2668], grad_fn=<PreluBackward>)

m = nn.ReLU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([-0.4151, 1.8254])

tensor([0.0000, 1.8254])

m = nn.SELU()

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.9586, -1.1335])

tensor([ 1.0073, -1.1922])

m = nn.LeakyReLU(0.1)

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.8062, -0.9858])

tensor([ 0.8062, -0.0986])

m = nn.Threshold(0.1, 20)

... input = torch.randn(2)

... print(input)

... output = m(input)

... print(output)

tensor([ 0.0560, -1.3952])

tensor([20., 20.])

3. Plotting Activation Function Curves with Matlab

3.1 Matlab Definitions of the Activation Functions

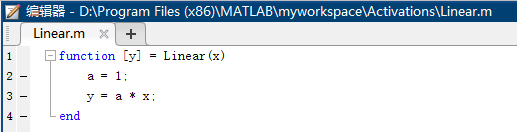

Linear.m

function [y] = Linear(x)

a = 1;

y = a * x;

end

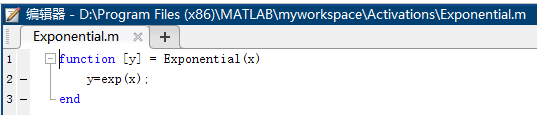

Exponential.m

function [y] = Exponential(x)

y=exp(x);

end

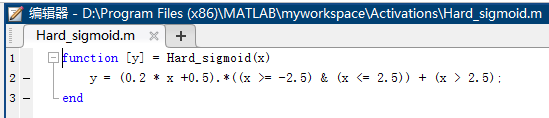

Hard_sigmoid.m

function [y] = Hard_sigmoid(x)

y = (0.2 * x +0.5).*((x >= -2.5) & (x <= 2.5)) + (x > 2.5);

end

Sigmoid.m

function [y] = Sigmoid(x)

y = 1 ./(1+exp(-x));

end

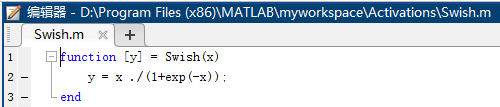

Swish.m

function [y] = Swish(x)

y = x ./(1+exp(-x));

end

Tanh.m

function [y] = Tanh(x)

y = (exp(x)-exp(-x))./(exp(x)+exp(-x));

end

Softplus.m

function [y] = Softplus(x)

y = log(exp(x)+1);

end

Softsign.m

function [y] = Softsign(x)

y = x./(1+abs(x));

end

Softmax.m

function [y] = Softmax(x)

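% Subtract the maximum before exponentiating for numerical stability;

% the common factor cancels, so the result is unchanged.

e = exp(x - max(x(:)));

% Normalize so that all entries sum to 1 (avoids shadowing the built-in sum).

y = e ./ sum(e(:));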

end

eLU.m

function [y] = eLU(x)

alpha = 0.1;

y = x.*(x>0) + (alpha *(exp(x)-1)).*(x<=0);

end

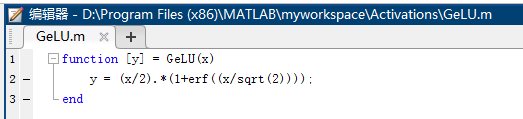

GeLU.m

function [y] = GeLU(x)

y = (x/2).*(1+erf((x/sqrt(2))));

end

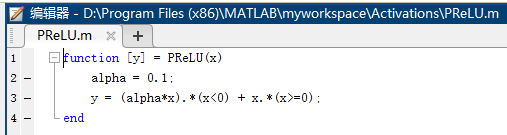

PReLU.m

function [y] = PReLU(x)

alpha = 0.1;

y = (alpha*x).*(x<0) + x.*(x>=0);

end

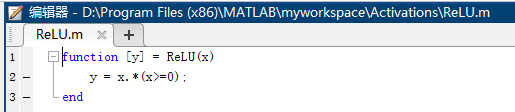

ReLU.m

function [y] = ReLU(x)

y = x.*(x>=0);

end

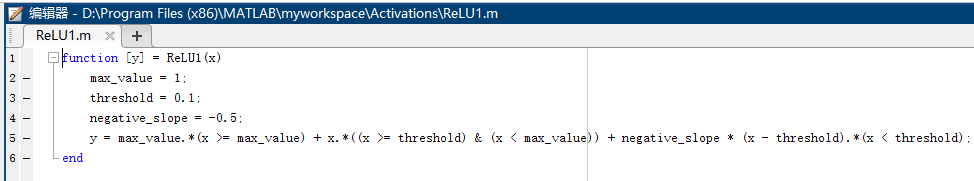

ReLU1.m

function [y] = ReLU1(x)

max_value = 1;

threshold = 0.1;

negative_slope = -0.5;

y = max_value.*(x >= max_value) + x.*((x >= threshold) & (x < max_value)) + negative_slope * (x - threshold).*(x < threshold);

end

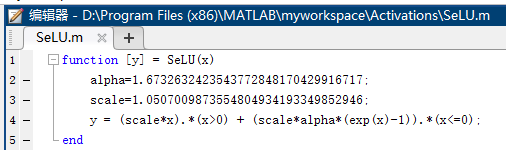

SeLU.m

function [y] = SeLU(x)

alpha=1.6732632423543772848170429916717;

scale=1.0507009873554804934193349852946;

y = (scale*x).*(x>0) + (scale*alpha*(exp(x)-1)).*(x<=0);

end

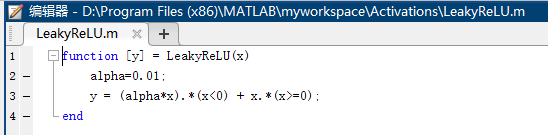

LeakyReLU.m

function [y] = LeakyReLU(x)

alpha=0.01;

y = (alpha*x).*(x<0) + x.*(x>=0);

end

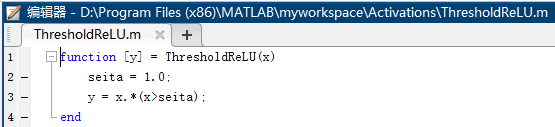

ThresholdReLU.m

function [y] = ThresholdReLU(x)

theta = 1.0;

y = x.*(x>theta);

end

3.2 Plotting Each Activation Function Separately in Matlab (Multiple Figures)

activationsMultiPlot.m

figure

x = -10:0.1:10;

y = Linear(x);

plot(x,y)

title('Linear(x)');

saveas(gcf,'Linear.jpg');

figure

y = Exponential(x);

plot(x,y)

title('Exponential(x)');

saveas(gcf,'Exponential.jpg');

figure

y = Hard_sigmoid(x);

plot(x,y)

title('Hard_sigmoid(x)','Interpreter','none');

saveas(gcf,'Hard_sigmoid.jpg');

figure

y = Sigmoid(x);

plot(x,y)

title('Sigmoid(x)');

saveas(gcf,'Sigmoid.jpg');

figure

y = Swish(x);

plot(x,y)

title('Swish(x)');

saveas(gcf,'Swish.jpg');

figure

y = Tanh(x);

plot(x,y)

title('Tanh(x)');

saveas(gcf,'Tanh.jpg');

figure

y = Softmax(x);

plot(x,y)

title('Softmax(x)');

saveas(gcf,'Softmax.jpg');

figure

y = Softplus(x);

plot(x,y)

title('Softplus(x)');

saveas(gcf,'Softplus.jpg');

figure

y = Softsign(x);

plot(x,y)

title('Softsign(x)');

saveas(gcf,'Softsign.jpg');

figure

y = eLU(x);

plot(x,y)

title('eLU(x)');

saveas(gcf,'eLU.jpg');

figure

y = GeLU(x);

plot(x,y)

title('GeLU(x)');

saveas(gcf,'GeLU.jpg');

figure

y = PReLU(x);

plot(x,y)

title('PReLU(x)');

saveas(gcf,'PReLU.jpg');

figure

y = ReLU(x);

plot(x,y)

title('ReLU(x)');

saveas(gcf,'ReLU.jpg');

figure

y = ReLU1(x);

plot(x,y)

title('ReLU1(x)');

saveas(gcf,'ReLU1.jpg');

figure

y = SeLU(x);

plot(x,y)

title('SeLU(x)');

saveas(gcf,'SeLU.jpg');

figure

y = LeakyReLU(x);

plot(x,y)

title('LeakyReLU(x)');

saveas(gcf,'LeakyReLU.jpg');

figure

y = ThresholdReLU(x);

plot(x,y)

title('ThresholdReLU(x)');

saveas(gcf,'ThresholdReLU.jpg');

3.3 Plotting All Activation Functions in One Matlab Figure

activationsOnePlot.m

x = -10:0.1:10;

figure

subplot(3,6,1)

y = Linear(x);

plot(x,y)

title('Linear(x)');

subplot(3,6,2)

y = Exponential(x);

plot(x,y)

title('Exponential(x)');

subplot(3,6,3)

y = Hard_sigmoid(x);

plot(x,y)

title('Hard_sigmoid(x)','Interpreter','none');

subplot(3,6,4)

y = Sigmoid(x);

plot(x,y)

title('Sigmoid(x)');

subplot(3,6,5)

y = Swish(x);

plot(x,y)

title('Swish(x)');

subplot(3,6,6)

y = Tanh(x);

plot(x,y)

title('Tanh(x)');

subplot(3,6,7)

y = Softmax(x);

plot(x,y)

title('Softmax(x)');

subplot(3,6,8)

y = Softplus(x);

plot(x,y)

title('Softplus(x)');

subplot(3,6,9)

y = Softsign(x);

plot(x,y)

title('Softsign(x)');

subplot(3,6,10)

y = eLU(x);

plot(x,y)

title('eLU(x)');

subplot(3,6,11)

y = GeLU(x);

plot(x,y)

title('GeLU(x)');

subplot(3,6,12)

y = PReLU(x);

plot(x,y)

title('PReLU(x)');

subplot(3,6,13)

y = ReLU(x);

plot(x,y)

title('ReLU(x)');

subplot(3,6,14)

y = ReLU1(x);

plot(x,y)

title('ReLU1(x)');

subplot(3,6,15)

y = SeLU(x);

plot(x,y)

title('SeLU(x)');

subplot(3,6,16)

y = LeakyReLU(x);

plot(x,y)

title('LeakyReLU(x)');

subplot(3,6,17)

y = ThresholdReLU(x);

plot(x,y)

title('ThresholdReLU(x)');

4. Plotting Activation Function Curves with Python

4.1 Plotting Each Activation Function Separately in Python (Multiple Figures)

import numpy as np

import matplotlib.pyplot as plt

from scipy.special import erf

def Linear(x, a=1):
    # Scale the input by the slope a (a = 1 gives the identity y = x)
    y = a * x
    return y

def Exponential(x):
    return np.exp(x)

def Hard_sigmoid(x):
    # Piecewise-linear approximation of the sigmoid
    y = []
    for i in x:
        if i < -2.5:
            y_i = 0
        elif i >= -2.5 and i <= 2.5:
            y_i = 0.2 * i + 0.5
        else:
            y_i = 1
        y.append(y_i)
    return y

def Sigmoid(x):
    return 1.0 / (1 + np.exp(-x))

def Swish(x):
    return x / (1 + np.exp(-x))

def Tanh(x):
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

def Softmax(x):
    y = []
    # Accumulate the normalizer (named total to avoid shadowing the built-in sum)
    total = 0
    for i in x:
        total += np.exp(i)
    for i in x:
        y_i = np.exp(i) / total
        y.append(y_i)
    return y

def Softplus(x):
    return np.log(np.exp(x) + 1)

def Softsign(x):
    return x / (1 + np.abs(x))

def eLU(x, alpha=0.1):
    y = []
    for i in x:
        if i >= 0:
            y_i = i
        else:
            y_i = alpha * (np.exp(i) - 1)
        y.append(y_i)
    return y

def GeLU(x):
    # Exact form based on the Gaussian error function
    return (x / 2) * (1 + erf(x / np.sqrt(2)))

def PReLU(x, alpha=0.1):
    y = []
    for i in x:
        if i < 0:
            y_i = alpha * i
        else:
            y_i = i
        y.append(y_i)
    return y

def ReLU(x):
    y = []
    for i in x:
        if i >= 0:
            y_i = i
        else:
            y_i = 0
        y.append(y_i)
    return y

def ReLU1(x, max_value=1, threshold=0.1, negative_slope=-0.5):
    # Three-parameter ReLU: clipped above max_value, sloped below threshold
    y = []
    for i in x:
        if i >= max_value:
            y_i = max_value
        elif i >= threshold and i < max_value:
            y_i = i
        else:
            y_i = negative_slope * (i - threshold)
        y.append(y_i)
    return y

def SeLU(x, alpha=1.6732632423543772848170429916717, scale=1.0507009873554804934193349852946):
    y = []
    for i in x:
        if i >= 0:
            y_i = scale * i
        else:
            y_i = scale * alpha * (np.exp(i) - 1)
        y.append(y_i)
    return y

def LeakyReLU(x, alpha=0.01):
    y = []
    for i in x:
        if i < 0:
            y_i = alpha * i
        else:
            y_i = i
        y.append(y_i)
    return y

def ThresholdReLU(x, seita=1.0):
    # seita is the threshold theta; values at or below it are set to 0
    y = []
    for i in x:
        if i > seita:
            y_i = i
        else:
            y_i = 0
        y.append(y_i)
    return y

inputs = np.arange(-10, 10, 0.1)

linear_outputs = Linear(inputs,a=3)

print("Linear Function Input :: {}".format(inputs))

print("Linear Function Output :: {}".format(linear_outputs))

plt.plot(inputs, linear_outputs,color = 'r')

plt.title('Linear(x)')

plt.xlabel("Linear Inputs")

plt.ylabel("Linear Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

Exponential_outputs = Exponential(inputs)

print("Exponential Function Input :: {}".format(inputs))

print("Exponential Function Output :: {}".format(Exponential_outputs))

plt.plot(inputs, Exponential_outputs,color = 'r')

plt.title('Exponential(x)')

plt.xlabel("Exponential Inputs")

plt.ylabel("Exponential Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

hard_sigmoid_outputs = Hard_sigmoid(inputs)

print("Hard_sigmoid Function Input :: {}".format(inputs))

print("Hard_sigmoid Function Output :: {}".format(hard_sigmoid_outputs))

plt.plot(inputs, hard_sigmoid_outputs,color = 'r')

plt.title('Hard_sigmoid(x)')

plt.xlabel("Hard_sigmoid Inputs")

plt.ylabel("Hard_sigmoid Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

sigmoid_outputs = Sigmoid(inputs)

print("Sigmoid Function Input :: {}".format(inputs))

print("Sigmoid Function Output :: {}".format(sigmoid_outputs))

plt.plot(inputs, sigmoid_outputs,color = 'r')

plt.title('Sigmoid(x)')

plt.xlabel("Sigmoid Inputs")

plt.ylabel("Sigmoid Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

swish_outputs = Swish(inputs)

print("Swish Function Input :: {}".format(inputs))

print("Swish Function Output :: {}".format(swish_outputs))

plt.plot(inputs, swish_outputs,color = 'r')

plt.title('Swish(x)')

plt.xlabel("Swish Inputs")

plt.ylabel("Swish Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

tanh_outputs = Tanh(inputs)

print("Tanh Function Input :: {}".format(inputs))

print("Tanh Function Output :: {}".format(tanh_outputs))

plt.plot(inputs, tanh_outputs,color = 'r')

plt.title('Tanh(x)')

plt.xlabel("Tanh Inputs")

plt.ylabel("Tanh Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

softmax_outputs = Softmax(inputs)

print("Softmax Function Input :: {}".format(inputs))

print("Softmax Function Output :: {}".format(softmax_outputs))

plt.plot(inputs, softmax_outputs,color = 'r')

plt.title('Softmax(x)')

plt.xlabel("Softmax Inputs")

plt.ylabel("Softmax Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

softplus_outputs = Softplus(inputs)

print("Softplus Function Input :: {}".format(inputs))

print("Softplus Function Output :: {}".format(softplus_outputs))

plt.plot(inputs, softplus_outputs,color = 'r')

plt.title('Softplus(x)')

plt.xlabel("Softplus Inputs")

plt.ylabel("Softplus Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

softsign_outputs = Softsign(inputs)

print("Softsign Function Input :: {}".format(inputs))

print("Softsign Function Output :: {}".format(softsign_outputs))

plt.plot(inputs, softsign_outputs,color = 'r')

plt.title('Softsign(x)')

plt.xlabel("Softsign Inputs")

plt.ylabel("Softsign Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

elu_outputs = eLU(inputs)

print("eLU Function Input :: {}".format(inputs))

print("eLU Function Output :: {}".format(elu_outputs))

plt.plot(inputs, elu_outputs,color = 'r')

plt.title('eLU(x)')

plt.xlabel("eLU Inputs")

plt.ylabel("eLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

gelu_outputs = GeLU(inputs)

print("GeLU Function Input :: {}".format(inputs))

print("GeLU Function Output :: {}".format(gelu_outputs))

plt.plot(inputs, gelu_outputs,color = 'r')

plt.title('GeLU(x)')

plt.xlabel("GeLU Inputs")

plt.ylabel("GeLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

prelu_outputs = PReLU(inputs)

print("PReLU Function Input :: {}".format(inputs))

print("PReLU Function Output :: {}".format(prelu_outputs))

plt.plot(inputs, prelu_outputs,color = 'r')

plt.title('PReLU(x)')

plt.xlabel("PReLU Inputs")

plt.ylabel("PReLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

relu_outputs = ReLU(inputs)

print("ReLU Function Input :: {}".format(inputs))

print("ReLU Function Output :: {}".format(relu_outputs))

plt.plot(inputs, relu_outputs,color = 'r')

plt.title('ReLU(x)')

plt.xlabel("ReLU Inputs")

plt.ylabel("ReLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

relu1_outputs = ReLU1(inputs)

print("ReLU1 Function Input :: {}".format(inputs))

print("ReLU1 Function Output :: {}".format(relu1_outputs))

plt.plot(inputs, relu1_outputs,color = 'r')

plt.title('ReLU1(x)')

plt.xlabel("ReLU1 Inputs")

plt.ylabel("ReLU1 Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

selu_outputs = SeLU(inputs)

print("SeLU Function Input :: {}".format(inputs))

print("SeLU Function Output :: {}".format(selu_outputs))

plt.plot(inputs, selu_outputs,color = 'r')

plt.title('SeLU(x)')

plt.xlabel("SeLU Inputs")

plt.ylabel("SeLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

leakyrelu_outputs = LeakyReLU(inputs)

print("LeakyReLU Function Input :: {}".format(inputs))

print("LeakyReLU Function Output :: {}".format(leakyrelu_outputs))

plt.plot(inputs, leakyrelu_outputs,color = 'r')

plt.title('LeakyReLU(x)')

plt.xlabel("LeakyReLU Inputs")

plt.ylabel("LeakyReLU Outputs")

# plt.grid()

plt.show()

inputs = np.arange(-10, 10, 0.1)

thresholdrelu_outputs = ThresholdReLU(inputs,seita=1.0)

print("ThresholdReLU Function Input :: {}".format(inputs))

print("ThresholdReLU Function Output :: {}".format(thresholdrelu_outputs))

plt.plot(inputs, thresholdrelu_outputs,color = 'r')

plt.title('ThresholdReLU(x)')

plt.xlabel("ThresholdReLU Inputs")

plt.ylabel("ThresholdReLU Outputs")

# plt.grid()

plt.show()
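
The piecewise functions in the script above walk over the input one element at a time. As a rough sketch of an alternative, the same formulas can be written with NumPy's vectorized operations, which return arrays instead of Python lists. The `_vec` function names below are hypothetical and are not part of the original script; the formulas follow the loop-based versions, except that the softmax subtracts the maximum before exponentiating, a common numerical-stability step that does not change the result.

```python
import numpy as np

def relu_vec(x):
    # max(x, 0), applied element-wise
    return np.maximum(x, 0.0)

def leaky_relu_vec(x, alpha=0.01):
    # alpha * x for negative inputs, x otherwise
    return np.where(x < 0, alpha * x, x)

def hard_sigmoid_vec(x):
    # 0.2 * x + 0.5, clipped to the range [0, 1]
    return np.clip(0.2 * x + 0.5, 0.0, 1.0)

def elu_vec(x, alpha=0.1):
    # np.minimum keeps exp() from overflowing on large positive inputs,
    # because np.where evaluates both branches for every element
    return np.where(x >= 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1))

def softmax_vec(x):
    # Subtracting the maximum leaves the result unchanged but avoids overflow
    e = np.exp(x - np.max(x))
    return e / e.sum()

inputs = np.arange(-10, 10, 0.1)
relu_outputs = relu_vec(inputs)
```

Because each function takes and returns a NumPy array, the results can be passed to `plt.plot` exactly like the list outputs used above.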

4.2 Plotting all activation functions in a single figure with Python

import numpy as np

import matplotlib.pyplot as plt

from scipy.special import erf

def Linear(x, a=1):
    # Linear activation with slope a: y = a * x.
    y = a * x
    return y

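def Exponential(x):
    # Exponential activation: e^x.
    return np.exp(x)

def Hard_sigmoid(x):
    # Piecewise-linear approximation of the sigmoid:
    # 0 for x < -2.5, 0.2 * x + 0.5 on [-2.5, 2.5], 1 for x > 2.5.
    y = []
    for i in x:
        if i < -2.5:
            y_i = 0
        elif i >= -2.5 and i <= 2.5:
            y_i = 0.2 * i + 0.5
        else:
            y_i = 1
        y.append(y_i)
    return y

def Sigmoid(x):
    # Logistic sigmoid: 1 / (1 + e^(-x)).
    return 1.0 / (1 + np.exp(-x))

def Swish(x):
    # Swish: x * sigmoid(x).
    return x / (1 + np.exp(-x))

def Tanh(x):
    # Hyperbolic tangent written out from its definition.
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))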

def Softmax(x):
    # Softmax over the whole input vector: exp(x_i) / sum_j exp(x_j).
    y = []
    total = 0
    for i in x:
        total += np.exp(i)
    for i in x:
        y_i = np.exp(i) / total
        y.append(y_i)
    return y

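def Softplus(x):
    # Softplus: log(e^x + 1), a smooth approximation of ReLU.
    return np.log(np.exp(x) + 1)

def Softsign(x):
    # Softsign: x / (1 + |x|).
    return x / (1 + np.abs(x))

def eLU(x, alpha=0.1):
    # ELU: x for x >= 0, alpha * (exp(x) - 1) otherwise.
    y = []
    for i in x:
        if i >= 0:
            y_i = i
        else:
            y_i = alpha * (np.exp(i) - 1)
        y.append(y_i)
    return y

def GeLU(x):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return (x / 2) * (1 + erf(x / np.sqrt(2)))

def PReLU(x, alpha=0.1):
    # Parametric ReLU, here with a fixed slope alpha for negative inputs.
    y = []
    for i in x:
        if i < 0:
            y_i = alpha * i
        else:
            y_i = i
        y.append(y_i)
    return y

def ReLU(x):
    # Standard ReLU: max(x, 0).
    y = []
    for i in x:
        if i >= 0:
            y_i = i
        else:
            y_i = 0
        y.append(y_i)
    return y

def ReLU1(x, max_value=1, threshold=0.1, negative_slope=-0.5):
    # Generalized ReLU: capped at max_value, identity on [threshold, max_value),
    # and negative_slope * (x - threshold) below the threshold.
    y = []
    for i in x:
        if i >= max_value:
            y_i = max_value
        elif i >= threshold and i < max_value:
            y_i = i
        else:
            y_i = negative_slope * (i - threshold)
        y.append(y_i)
    return y

def SeLU(x, alpha=1.6732632423543772848170429916717, scale=1.0507009873554804934193349852946):
    # Scaled ELU: scale * x for x >= 0, scale * alpha * (exp(x) - 1) otherwise.
    y = []
    for i in x:
        if i >= 0:
            y_i = scale * i
        else:
            y_i = scale * alpha * (np.exp(i) - 1)
        y.append(y_i)
    return y

def LeakyReLU(x, alpha=0.01):
    # Leaky ReLU: small slope alpha for negative inputs.
    y = []
    for i in x:
        if i < 0:
            y_i = alpha * i
        else:
            y_i = i
        y.append(y_i)
    return y

def ThresholdReLU(x, seita):
    # Thresholded ReLU: passes x through when x >= seita (the threshold), else 0.
    y = []
    for i in x:
        if i >= seita:
            y_i = i
        else:
            y_i = 0
        y.append(y_i)
    return y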

p1 = plt.subplot(3,6,1)

inputs = np.arange(-10, 10, 0.1)

linear_outputs = Linear(inputs,a=3)

print("Linear Function Input :: {}".format(inputs))

print("Linear Function Output :: {}".format(linear_outputs))

p1.plot(inputs, linear_outputs,color = 'r')

p1.set_title('Linear(x)')

plt.grid()

p2 = plt.subplot(3,6,2)

inputs = np.arange(-10, 10, 0.1)

Exponential_outputs = Exponential(inputs)

print("Exponential Function Input :: {}".format(inputs))

print("Exponential Function Output :: {}".format(Exponential_outputs))

p2.plot(inputs, Exponential_outputs,color = 'r')

p2.set_title('Exponential(x)')

plt.grid()

p3 = plt.subplot(3,6,3)

inputs = np.arange(-10, 10, 0.1)

hard_sigmoid_outputs = Hard_sigmoid(inputs)

print("Hard_sigmoid Function Input :: {}".format(inputs))

print("Hard_sigmoid Function Output :: {}".format(hard_sigmoid_outputs))

p3.plot(inputs, hard_sigmoid_outputs,color = 'r')

p3.set_title('Hard_sigmoid(x)')

plt.grid()

p4 = plt.subplot(3,6,4)

inputs = np.arange(-10, 10, 0.1)

sigmoid_outputs = Sigmoid(inputs)

print("Sigmoid Function Input :: {}".format(inputs))

print("Sigmoid Function Output :: {}".format(sigmoid_outputs))

p4.plot(inputs, sigmoid_outputs,color = 'r')

p4.set_title('Sigmoid(x)')

plt.grid()

p5 = plt.subplot(3,6,5)

inputs = np.arange(-10, 10, 0.1)

swish_outputs = Swish(inputs)

print("Swish Function Input :: {}".format(inputs))

print("Swish Function Output :: {}".format(swish_outputs))

p5.plot(inputs, swish_outputs,color = 'r')

p5.set_title('Swish(x)')

plt.grid()

p6 = plt.subplot(3,6,6)

inputs = np.arange(-10, 10, 0.1)

tanh_outputs = Tanh(inputs)

print("Tanh Function Input :: {}".format(inputs))

print("Tanh Function Output :: {}".format(tanh_outputs))

p6.plot(inputs, tanh_outputs,color = 'r')

p6.set_title('Tanh(x)')

plt.grid()

p7 = plt.subplot(3,6,7)

inputs = np.arange(-10, 10, 0.1)

softmax_outputs = Softmax(inputs)

print("Softmax Function Input :: {}".format(inputs))

print("Softmax Function Output :: {}".format(softmax_outputs))

p7.plot(inputs, softmax_outputs,color = 'r')

p7.set_title('Softmax(x)')

plt.grid()

p8 = plt.subplot(3,6,8)

inputs = np.arange(-10, 10, 0.1)

softplus_outputs = Softplus(inputs)

print("Softplus Function Input :: {}".format(inputs))

print("Softplus Function Output :: {}".format(softplus_outputs))

p8.plot(inputs, softplus_outputs,color = 'r')

p8.set_title('Softplus(x)')

plt.grid()

p9 = plt.subplot(3,6,9)

inputs = np.arange(-10, 10, 0.1)

softsign_outputs = Softsign(inputs)

print("Softsign Function Input :: {}".format(inputs))

print("Softsign Function Output :: {}".format(softsign_outputs))

p9.plot(inputs, softsign_outputs,color = 'r')

p9.set_title('Softsign(x)')

plt.grid()

p10 = plt.subplot(3,6,10)

inputs = np.arange(-10, 10, 0.1)

elu_outputs = eLU(inputs)

print("eLU Function Input :: {}".format(inputs))

print("eLU Function Output :: {}".format(elu_outputs))

p10.plot(inputs, elu_outputs,color = 'r')

p10.set_title('eLU(x)')

plt.grid()

p11 = plt.subplot(3,6,11)

inputs = np.arange(-10, 10, 0.1)

gelu_outputs = GeLU(inputs)

print("GeLU Function Input :: {}".format(inputs))

print("GeLU Function Output :: {}".format(gelu_outputs))

p11.plot(inputs, gelu_outputs,color = 'r')

p11.set_title('GeLU(x)')

plt.grid()

p12 = plt.subplot(3,6,12)

inputs = np.arange(-10, 10, 0.1)

prelu_outputs = PReLU(inputs)

print("PReLU Function Input :: {}".format(inputs))

print("PReLU Function Output :: {}".format(prelu_outputs))

p12.plot(inputs, prelu_outputs,color = 'r')

p12.set_title('PReLU(x)')

plt.grid()

p13 = plt.subplot(3,6,13)

inputs = np.arange(-10, 10, 0.1)

relu_outputs = ReLU(inputs)

print("ReLU Function Input :: {}".format(inputs))

print("ReLU Function Output :: {}".format(relu_outputs))

p13.plot(inputs, relu_outputs,color = 'r')

p13.set_title('ReLU(x)')

plt.grid()

p14 = plt.subplot(3,6,14)

inputs = np.arange(-10, 10, 0.1)

relu1_outputs = ReLU1(inputs)

print("ReLU1 Function Input :: {}".format(inputs))

print("ReLU1 Function Output :: {}".format(relu1_outputs))

p14.plot(inputs, relu1_outputs,color = 'r')

p14.set_title('ReLU1(x)')

plt.grid()

p15 = plt.subplot(3,6,15)

inputs = np.arange(-10, 10, 0.1)

selu_outputs = SeLU(inputs)

print("SeLU Function Input :: {}".format(inputs))

print("SeLU Function Output :: {}".format(selu_outputs))

p15.plot(inputs, selu_outputs,color = 'r')

p15.set_title('SeLU(x)')

plt.grid()

p16 = plt.subplot(3,6,16)

inputs = np.arange(-10, 10, 0.1)

leakyrelu_outputs = LeakyReLU(inputs)

print("LeakyReLU Function Input :: {}".format(inputs))

print("LeakyReLU Function Output :: {}".format(leakyrelu_outputs))

p16.plot(inputs, leakyrelu_outputs,color = 'r')

p16.set_title('LeakyReLU(x)')

plt.grid()

p17 = plt.subplot(3,6,17)

inputs = np.arange(-10, 10, 0.1)

thresholdrelu_outputs = ThresholdReLU(inputs,seita=1.0)

print("ThresholdReLU Function Input :: {}".format(inputs))

print("ThresholdReLU Function Output :: {}".format(thresholdrelu_outputs))

p17.plot(inputs, thresholdrelu_outputs,color = 'r')

p17.set_title('ThresholdReLU(x)')

plt.grid()

plt.tight_layout()

plt.show()
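
The seventeen subplot blocks above all follow the same pattern, so one way to shorten the script is to loop over a dictionary that maps each title to its function. The following is only a sketch under some assumptions: `plot_activation_grid` and `demo_functions` are hypothetical names, and just three stand-in functions are defined to keep the example self-contained. The functions from the script above could be placed in the dictionary in the same way (wrapping any that need extra arguments, such as `ThresholdReLU`, in a `lambda`).

```python
import numpy as np
import matplotlib.pyplot as plt

def plot_activation_grid(functions, inputs, nrows=3, ncols=6):
    # squeeze=False guarantees a 2-D array of axes, even for a single row
    fig, axes = plt.subplots(nrows, ncols,
                             figsize=(3 * ncols, 2.5 * nrows),
                             squeeze=False)
    flat_axes = axes.ravel()
    # Draw one panel per (title, function) pair
    for ax, (name, func) in zip(flat_axes, functions.items()):
        ax.plot(inputs, func(inputs), color='r')
        ax.set_title('{}(x)'.format(name))
        ax.grid(True)
    # Hide the panels of the grid that are left over
    for ax in flat_axes[len(functions):]:
        ax.set_visible(False)
    fig.tight_layout()
    return fig

# Stand-in functions for illustration only
demo_functions = {
    'Sigmoid': lambda x: 1.0 / (1 + np.exp(-x)),
    'Tanh': np.tanh,
    'ReLU': lambda x: np.maximum(x, 0.0),
}

inputs = np.arange(-10, 10, 0.1)
fig = plot_activation_grid(demo_functions, inputs, nrows=1, ncols=4)
```

Calling `plt.show()` afterwards displays the figure. With the seventeen functions of this section and the default 3 x 6 grid, the eighteenth panel would simply be hidden.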