Building a filebeat + redis + logstash + es + kibana architecture (for the topology diagram, see ELK (3))

Install and start Redis

(1) Prepare the installation and data directories

[root@es ~]# mkdir -p /opt/redis_cluster/redis_6379/{conf,logs,pid}

(2) Download the Redis installation package

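I had already downloaded the package, so I skipped this step. If you don't have the package, download it with the command below (the next step extracts the archive from /media/, so adjust that path if you save it elsewhere).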

wget http://download.redis.io/releases/redis-5.0.7.tar.gz

(3) Extract Redis into /opt/redis_cluster/

[root@es ~]# tar zxf /media/redis-5.0.7.tar.gz -C /opt/redis_cluster/

[root@es ~]# ln -s /opt/redis_cluster/redis-5.0.7 /opt/redis_cluster/redis

(4) Change into the source directory, then compile and install Redis

[root@es ~]# cd /opt/redis_cluster/redis

[root@es redis]# make && make install

(5) Write the configuration file

[root@es redis]# vim /opt/redis_cluster/redis_6379/conf/6379.conf

Add the following:

bind 127.0.0.1 192.168.8.1
port 6379
daemonize yes
pidfile /opt/redis_cluster/redis_6379/pid/redis_6379.pid
logfile /opt/redis_cluster/redis_6379/logs/redis_6379.log
databases 16
dbfilename redis.rdb
dir /opt/redis_cluster/redis_6379
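The file above uses Redis's one-directive-per-line format (keyword followed by its arguments). As a minimal sketch, assuming no quoted arguments, the following Python parses that content and checks that pidfile and logfile land in the pid and logs directories created in step (1), and that bind includes 192.168.8.1, the address filebeat and Logstash connect to later. parse_redis_conf and check_layout are hypothetical helpers, not part of Redis.

```python
import os

# Contents of 6379.conf from step (5).
CONF_TEXT = """\
bind 127.0.0.1 192.168.8.1
port 6379
daemonize yes
pidfile /opt/redis_cluster/redis_6379/pid/redis_6379.pid
logfile /opt/redis_cluster/redis_6379/logs/redis_6379.log
databases 16
dbfilename redis.rdb
dir /opt/redis_cluster/redis_6379
"""


def parse_redis_conf(text):
    """Map each directive to its list of arguments.

    Blank lines and lines starting with "#" are skipped; if a directive
    repeats, the last occurrence wins (a simplification of this sketch).
    """
    conf = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        keyword, *args = line.split()
        conf[keyword.lower()] = args
    return conf


def check_layout(conf, required_bind=("192.168.8.1",)):
    """Return a list of problems; an empty list means the layout looks right."""
    problems = []
    base = conf.get("dir", [""])[0]
    for key, sub in (("pidfile", "pid"), ("logfile", "logs")):
        path = conf.get(key, [""])[0]
        if os.path.dirname(path) != os.path.join(base, sub):
            problems.append(f"{key} {path!r} is not in {sub}/ under {base!r}")
    for addr in required_bind:
        if addr not in conf.get("bind", []):
            problems.append(f"bind does not include {addr}")
    return problems


conf = parse_redis_conf(CONF_TEXT)
print(conf["bind"])          # ['127.0.0.1', '192.168.8.1']
print(check_layout(conf))    # []
```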

(6) Start the Redis service

[root@es redis]# redis-server /opt/redis_cluster/redis_6379/conf/6379.conf

On the web host, modify the filebeat configuration so that it outputs to Redis

(Reference: https://www.elastic.co/guide/en/beats/filebeat/6.6/index.html)

(1) Point the filebeat output at Redis, then restart filebeat

[root@web ~]# vim /etc/filebeat/filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

setup.template.settings:
  index.number_of_shards: 3

setup.kibana:

output.redis:
  hosts: ["192.168.8.1"]    # address of the Redis server
  key: "filebeat"
  db: 0
  timeout: 5

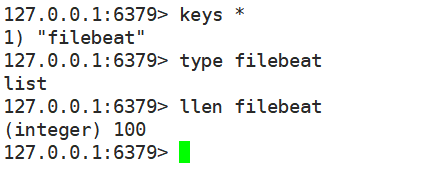
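With json.keys_under_root and json.overwrite_keys enabled, each nginx access-log line (presumably written in a JSON log_format, which these options imply) is decoded and its keys are placed at the top level of the event instead of under a "json" key; on a name clash with a field Filebeat adds itself, the decoded value wins. The Python below is a simplified model of that behavior, not Filebeat's implementation; build_event, the sample log line and the base fields are hypothetical.

```python
import json


def build_event(line, base_fields, keys_under_root=True, overwrite_keys=True):
    """Simplified model of Filebeat's json.* options (hypothetical helper)."""
    decoded = json.loads(line)
    event = dict(base_fields)            # fields Filebeat adds on its own
    if not keys_under_root:
        event["json"] = decoded          # default: decoded keys nested under "json"
        return event
    for key, value in decoded.items():
        if key in event and not overwrite_keys:
            continue                     # keep Filebeat's own value on a clash
        event[key] = value               # copy the decoded key to the top level
    return event


# Hypothetical nginx JSON log line and base fields, for illustration only.
line = '{"remote_addr": "192.168.8.3", "request_time": "0.001"}'
base = {"tags": ["access"]}

event = build_event(line, base)
print(event["remote_addr"], event["tags"])   # 192.168.8.3 ['access']
print(json.dumps(event))   # rough model of one entry in the Redis list
```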

[root@web ~]# systemctl restart filebeat

(2) Send requests to the website, then log in to Redis on the es host and inspect the keys

Before checking, send some requests from the client host with the ab tool, then inspect the data with Redis commands.

[root@client ~]# ab -n 100 -c 20 http://192.168.8.2/index.html

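The text after # on each line is an explanation only; do not type it inside redis-cli.

redis-cli                #log in
keys *                   #list all keys
type filebeat            #filebeat is the key name; its type should be list
LLEN filebeat            #show the length of the list
LRANGE filebeat 0 -1     #show everything in the list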
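To make the semantics of these list commands concrete, here is a small in-memory Python model, assuming filebeat appends each event to the tail of the filebeat list (modelled as RPUSH). LLEN is the list length, and LRANGE takes inclusive start/stop offsets where negative values count from the end, so 0 -1 means the whole list. The helper names are hypothetical; this mimics Redis rather than talking to it.

```python
def rpush(lst, *values):
    """Append values at the tail and return the new length, like RPUSH."""
    lst.extend(values)
    return len(lst)


def llen(lst):
    """Number of elements, like LLEN."""
    return len(lst)


def lrange(lst, start, stop):
    """Inclusive range with Redis-style negative offsets (-1 = last element)."""
    n = len(lst)
    if start < 0:
        start = max(n + start, 0)
    if stop < 0:
        stop = n + stop
    stop = min(stop, n - 1)          # an out-of-range stop is clamped
    if start > stop:
        return []                    # empty list or out-of-range request
    return lst[start:stop + 1]


filebeat_key = []                    # stands in for the "filebeat" list
rpush(filebeat_key, "event-1", "event-2", "event-3")
print(llen(filebeat_key))            # 3
print(lrange(filebeat_key, 0, -1))   # ['event-1', 'event-2', 'event-3']
```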

Install Logstash to read the logs from Redis and send them to Elasticsearch

(1) Install Logstash

[root@es ~]# rpm -ivh /media/elk-6.6/logstash-6.6.0.rpm

(2) Configure Logstash

[root@es ~]# vim /etc/logstash/conf.d/redis.conf

Add the following:

input {
  redis {
    host => "192.168.8.1"
    port => "6379"
    db => "0"
    key => "filebeat"
    data_type => "list"
  }
}

filter {
  mutate {
    # store the nginx timing fields as floats
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}

output {
  stdout {}
  elasticsearch {
    hosts => ["http://192.168.8.1:9200"]
    index => "nginx_access-%{+YYYY.MM.dd}"
    manage_template => false
  }
}

(3) Start Logstash

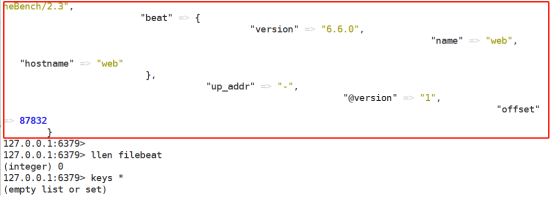

Once the following command runs, Logstash takes the data out of Redis.

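[root@es ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf &

In the screenshot, running LLEN right away shows the data is still in the list; after a short wait, once the output in the red box appears, running LLEN again shows the data has been consumed.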
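The list empties because the Logstash redis input, with data_type "list", removes events from the head of the list as it reads them, so Redis acts as a first-in, first-out buffer between filebeat and Logstash. A minimal Python sketch of that draining follows; drain is a hypothetical helper and batch_size is an illustrative parameter of this sketch, not a plugin setting.

```python
def drain(lst, batch_size):
    """Pop items from the head of lst in batches until it is empty.

    Returns the batches in the order they were taken (oldest first).
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batches = []
    while lst:
        batch = lst[:batch_size]     # read from the head ...
        del lst[:batch_size]         # ... and remove what was read
        batches.append(batch)
    return batches


queue = [f"event-{i}" for i in range(300)]  # events pushed by filebeat
taken = drain(queue, batch_size=125)
print(len(queue))                    # 0, like LLEN after Logstash catches up
print([len(b) for b in taken])       # [125, 125, 50]
```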

(4) Modify the Logstash configuration to store access and error logs in separate indices

[root@es ~]# vim /etc/logstash/conf.d/redis.conf

Change the file contents to:

input {
  redis {
    host => "192.168.8.1"
    port => "6379"
    db => "0"
    key => "filebeat"
    data_type => "list"
  }
}

filter {
  mutate {
    # store the nginx timing fields as floats
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}

output {
  stdout {}
  # events tagged "access" by filebeat go to the access index
  if "access" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.8.1:9200"]
      index => "nginx_access-%{+YYYY.MM.dd}"
      manage_template => false
    }
  }
  # events tagged "error" go to the error index
  if "error" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.8.1:9200"]
      index => "nginx_error-%{+YYYY.MM.dd}"
      manage_template => false
    }
  }
}
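This configuration does two things per event: the mutate filter turns upstream_time and request_time into floats (the JSON log values are presumably strings, which cannot be summed or averaged in Kibana), and the conditionals pick the index from the event's tags. Logstash's %{+YYYY.MM.dd} formats the event's @timestamp in UTC, so a new daily index starts at UTC midnight (08:00 on a server in UTC+8). The Python below sketches that logic under those assumptions; to_float_fields and route are hypothetical helpers, and leaving unconvertible values such as "-" unchanged is a choice of this sketch, not a statement about how mutate handles them.

```python
from datetime import datetime, timezone


def to_float_fields(event, fields=("upstream_time", "request_time")):
    """Return a copy of event with the given fields converted to float."""
    out = dict(event)
    for name in fields:
        if name in out:
            try:
                out[name] = float(out[name])
            except (TypeError, ValueError):
                pass                 # sketch choice: keep values like "-" as-is
    return out


def route(event, timestamp):
    """Index names the access/error conditionals would send the event to.

    timestamp must be timezone-aware; it is formatted in UTC like %{+YYYY.MM.dd}.
    """
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    tags = event.get("tags", [])
    indices = []
    if "access" in tags:
        indices.append(f"nginx_access-{day}")
    if "error" in tags:
        indices.append(f"nginx_error-{day}")
    return indices                   # empty list: event only goes to stdout


event = to_float_fields({"tags": ["access"], "request_time": "0.005"})
print(event["request_time"])         # 0.005
print(route(event, datetime(2020, 1, 2, 3, 4, tzinfo=timezone.utc)))
# ['nginx_access-2020.01.02']
```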

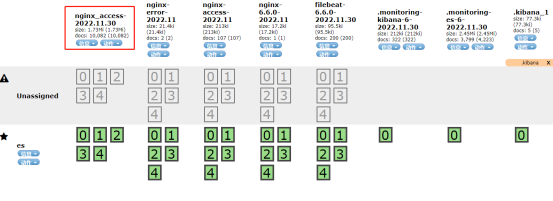

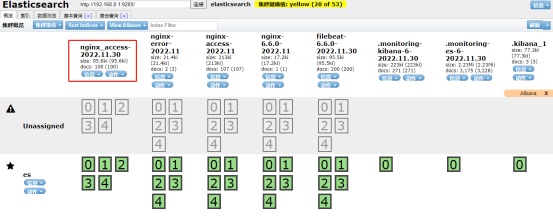

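Stop the Logstash instance started in step (3) first (two instances cannot share the same data directory), then start Logstash again with the updated configuration:

[root@es ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf &

After it starts, generate a large number of concurrent requests from the client host, then refresh the Elasticsearch view to check the request count (there are many requests, so allow a moment for them all to arrive).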

[root@client ~]# ab -n 10000 -c 1000 http://192.168.8.2/index.html
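Instead of refreshing a UI, the document count can also be read from Elasticsearch's _count API. A minimal Python sketch using only the standard library, assuming Elasticsearch is reachable at http://192.168.8.1:9200 as configured above; count_url, parse_count and fetch_count are hypothetical helper names.

```python
import json
from urllib.request import urlopen


def count_url(es_host, index_pattern):
    """Build the _count endpoint URL for an index name or wildcard pattern."""
    return f"{es_host.rstrip('/')}/{index_pattern}/_count"


def parse_count(body):
    """Extract the document count from a _count response body."""
    return json.loads(body)["count"]


def fetch_count(es_host, index_pattern, timeout=5):
    """GET the _count endpoint and return the number of matching documents."""
    with urlopen(count_url(es_host, index_pattern), timeout=timeout) as resp:
        return parse_count(resp.read().decode("utf-8"))
```

For example, calling fetch_count("http://192.168.8.1:9200", "nginx_access-*") before and after the ab run should show the count growing by roughly the number of requests ab sent, once Logstash has drained the Redis list.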