A runnable example is available in the Gitee repository: tutorials/classification/i3d · Yanlq/zjut_mindvideo (gitee.com)

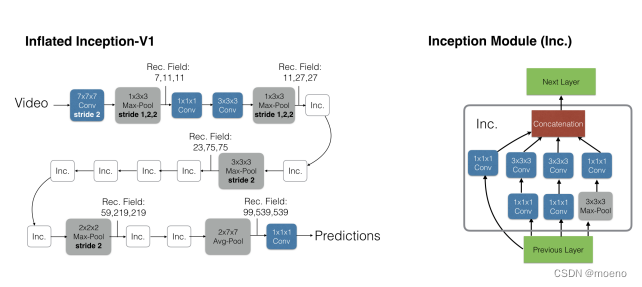

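I3D comes from the paper "Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset", published at CVPR 2017. The paper re-evaluates state-of-the-art architectures on the new Kinetics dataset. Kinetics has 400 human action classes with more than 400 clips per class, all taken from realistic, challenging YouTube videos. The authors propose the Two-Stream Inflated 3D ConvNet (I3D). It inflates the convolution and pooling kernels of a very deep image classification network from 2D to 3D, so that the network can learn spatiotemporal features seamlessly. After pre-training on Kinetics, I3D reaches 80.9% accuracy on the HMDB-51 benchmark and 98.0% on UCF-101.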

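Deep networks trained on ImageNet can be reused for other tasks, and the results keep improving as the architectures improve. In video, however, one question remained open: does an action recognition network trained on a sufficiently large dataset give a similar boost when applied to a different temporal task or dataset?

To answer it, the authors re-implemented several representative architectures, pre-trained them on Kinetics, and fine-tuned them on HMDB-51 and UCF-101 to analyze how well they transfer. Pre-training improved every model substantially, but the size of the gain depended heavily on the architecture. Based on this finding, the authors proposed I3D, which performs very well once it has been fully pre-trained on Kinetics.

I3D starts from state-of-the-art image classification architectures. It inflates their convolution and pooling kernels from 2D to 3D and optionally reuses their pre-trained parameters, which yields a very deep spatiotemporal classifier. The authors also found that the I3D model built on Inception-v1 and pre-trained on Kinetics far outperforms previous state-of-the-art models.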

How inflation works

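Inflation turns every 2D convolution kernel of a 2D network into a 3D convolution kernel, and every 2D pooling layer into a 3D pooling layer. The rest of the architecture stays unchanged. This lets us take 2D architectures that already work well on images (VGG, ResNet-50, etc.) and obtain 3D architectures for video understanding.

The pre-trained 2D weights are reused as follows:

1. Copy the same image repeatedly to build a static video of length N, where every frame's input is x.
2. Repeat the 2D filter weights w N times along the time dimension. The inflated filter's response is then N·w·x.
3. Divide the inflated weights by N. This gives back w·x, the same response the 2D filter produces on a single image.

In principle a 3×3 pooling kernel becomes 3×3×3, but the implementation differs slightly. It is better not to downsample along time early in the network. The first two max-pooling layers therefore use a 1×3×3 kernel with a 1×2×2 stride, and only the later pooling kernels and strides are inflated normally.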
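The rescaling argument can be checked numerically. The sketch below is a minimal single-channel illustration in NumPy, not code from the repository. `inflate_kernel`, `conv2d_valid` and `conv3d_valid` are hypothetical helpers, and the example assumes unpadded ("valid") cross-correlation.

The code repeats a 2D filter along a new time axis and divides it by the temporal size. It then confirms that, on a video made of one repeated frame, the 3D response at every time step equals the 2D response on that frame.

```python
import numpy as np


def inflate_kernel(w2d: np.ndarray, time_dim: int) -> np.ndarray:
    """Inflate a 2D kernel (kh, kw) into a 3D kernel (time_dim, kh, kw).

    The weights are repeated along a new leading time axis and divided by
    time_dim, so a video of identical frames gives the same response as
    the 2D kernel applied to a single frame.
    """
    return np.repeat(w2d[np.newaxis, :, :], time_dim, axis=0) / time_dim


def conv2d_valid(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Unpadded 2D cross-correlation of a single-channel image (H, W)."""
    kh, kw = w.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * w)
    return out


def conv3d_valid(v: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Unpadded 3D cross-correlation of a single-channel clip (T, H, W)."""
    kt, kh, kw = w.shape
    out = np.empty((v.shape[0] - kt + 1, v.shape[1] - kh + 1, v.shape[2] - kw + 1))
    for t in range(out.shape[0]):
        for i in range(out.shape[1]):
            for j in range(out.shape[2]):
                out[t, i, j] = np.sum(v[t:t + kt, i:i + kh, j:j + kw] * w)
    return out


rng = np.random.default_rng(0)
image = rng.standard_normal((8, 8))    # one frame
w2d = rng.standard_normal((3, 3))      # a "pre-trained" 2D filter
N = 3                                  # temporal size of the inflated kernel

boring_video = np.repeat(image[np.newaxis], 5, axis=0)  # the same frame 5 times
w3d = inflate_kernel(w2d, N)

resp2d = conv2d_valid(image, w2d)
resp3d = conv3d_valid(boring_video, w3d)

# Every temporal slice of the 3D response equals the 2D response.
print(resp3d.shape, np.allclose(resp3d, resp2d[np.newaxis]))
```

Without the division by N, every value in `resp3d` would be N times too large. That mismatch is why the rescaling step matters when ImageNet weights are used to initialize a 3D network.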

Dataset loading

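Kinetics-400 is a large-scale video action recognition dataset containing clips from 400 action classes. Each clip lasts about 10 seconds, and the videos have a resolution of 240x320 or 320x240. The dataset is loaded through the Kinetic400 class, which uses ParseKinetic400 to collect the video paths and labels.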
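The mapping from a CSV row to a video file can be seen in isolation. The sketch below mirrors what ParseKinetic400.parse_dataset does with each row:

- It zero-pads `time_start` and `time_end` to six digits to build the file name.
- It maps the label to an integer id.

It is a simplified stand-alone version, not the repository code. The CSV text, the labels `label_a`/`label_b` and the helper `parse_rows` are hypothetical. Class ids are also assigned from the same file here, whereas load_cls_file builds them from the training CSV.

```python
import csv
import io
import os

# Hypothetical two-row excerpt of a kinetics-400_<split>.csv file. Only the
# columns read by parse_dataset are included; the labels are placeholders.
SAMPLE_CSV = """label,youtube_id,time_start,time_end
label_a,___qijXy2f0,11,21
label_b,___dTOdxzXY,22,32
"""


def parse_rows(csv_text, split_dir):
    """Return (video paths, integer labels, class-to-id map) for one CSV file."""
    cls2id, paths, labels = {}, [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # ids are assigned in order of first appearance
        cls2id.setdefault(row["label"], len(cls2id))
        start = row["time_start"].zfill(6)   # "11" -> "000011"
        end = row["time_end"].zfill(6)
        paths.append(os.path.join(split_dir, f"{row['youtube_id']}_{start}_{end}.mp4"))
        labels.append(cls2id[row["label"]])
    return paths, labels, cls2id


paths, labels, cls2id = parse_rows(SAMPLE_CSV, os.path.join("kinetic-400", "train"))
print(paths[0])   # kinetic-400/train/___qijXy2f0_000011_000021.mp4 on POSIX systems
print(labels)     # [0, 1]
```

The padded names match the entries in the directory layout listed in the Kinetic400 docstring, such as `___qijXy2f0_000011_000021.mp4`.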

```python
import os
import csv
import json

from src.data import transforms
from src.data.meta import ParseDataset
from src.data.video_dataset import VideoDataset
from src.utils.class_factory import ClassFactory, ModuleType

__all__ = ["Kinetic400", "ParseKinetic400"]


@ClassFactory.register(ModuleType.DATASET)
class Kinetic400(VideoDataset):
    """
    Args:
        path (string): Root directory of the Kinetics-400 dataset or inference video.
        split (str): The dataset split supports "train", "test" or "infer". Default: None.
        transform (callable, optional): A function transform that takes in a video. Default: None.
        target_transform (callable, optional): A function transform that takes in a label.
            Default: None.
        seq (int): The number of frames of captured video. Default: 16.
        seq_mode (str): The way of capturing video frames, "part" or "discrete" fetch. Default: "part".
        align (boolean): The video contains multiple actions. Default: False.
        batch_size (int): Batch size of dataset. Default: 16.
        repeat_num (int): The repeat num of dataset. Default: 1.
        shuffle (bool, optional): Whether or not to perform shuffle on the dataset. Default: None.
        num_parallel_workers (int): Number of subprocesses used to fetch the dataset in parallel.
            Default: 1.
        num_shards (int, optional): Number of shards that the dataset will be divided into.
            Default: None.
        shard_id (int, optional): The shard ID within num_shards. Default: None.
        download (bool): Whether to download the dataset. Default: False.
        frame_interval (int): Frame interval of the sample strategy. Default: 1.
        num_clips (int): Number of clips sampled in one video. Default: 1.

    Examples:
        >>> dataset = Kinetic400("./data/", "train")
        >>> dataset = dataset.run()

    The directory structure of Kinetic-400 dataset looks like:

        .
        |-kinetic-400
            |-- train
            |   |-- ___qijXy2f0_000011_000021.mp4      // video file
            |   |-- ___dTOdxzXY_000022_000032.mp4      // video file
            |   ...
            |-- test
            |   |-- __Zh0xijkrw_000042_000052.mp4      // video file
            |   |-- __zVSUyXzd8_000070_000080.mp4      // video file
            |-- val
            |   |-- __wsytoYy3Q_000055_000065.mp4      // video file
            |   |-- __vzEs2wzdQ_000026_000036.mp4      // video file
            |   ...
            |-- kinetics-400_train.csv                 // training dataset label file.
            |-- kinetics-400_test.csv                  // testing dataset label file.
            |-- kinetics-400_val.csv                   // validation dataset label file.
            ...
    """

    def __init__(self,
                 path,
                 split=None,
                 transform=None,
                 target_transform=None,
                 seq=16,
                 seq_mode="part",
                 align=False,
                 batch_size=16,
                 repeat_num=1,
                 shuffle=None,
                 num_parallel_workers=1,
                 num_shards=None,
                 shard_id=None,
                 download=False,
                 frame_interval=1,
                 num_clips=1
                 ):
        load_data = ParseKinetic400(os.path.join(path, split)).parse_dataset
        super().__init__(path=path,
                         split=split,
                         load_data=load_data,
                         transform=transform,
                         target_transform=target_transform,
                         seq=seq,
                         seq_mode=seq_mode,
                         align=align,
                         batch_size=batch_size,
                         repeat_num=repeat_num,
                         shuffle=shuffle,
                         num_parallel_workers=num_parallel_workers,
                         num_shards=num_shards,
                         shard_id=shard_id,
                         download=download,
                         frame_interval=frame_interval,
                         num_clips=num_clips
                         )

    @property
    def index2label(self):
        """Get the mapping of indexes and labels."""
        csv_file = os.path.join(self.path, f"kinetics-400_{self.split}.csv")
        mapping, seen = [], set()
        with open(csv_file, "r") as f:
            for row in csv.DictReader(f):
                # record each label once, in order of first appearance
                if row['label'] not in seen:
                    seen.add(row['label'])
                    mapping.append(row['label'])
        return mapping

    def download_dataset(self):
        """Download the Kinetics-400 data if it doesn't exist already."""
        raise ValueError("Kinetics-400 dataset download is not supported.")

    def default_transform(self):
        """Set the default transform for Kinetics-400 dataset."""
        size = 256
        order = (3, 0, 1, 2)   # (T, H, W, C) -> (C, T, H, W)
        trans = [
            transforms.VideoShortEdgeResize(size=size, interpolation='linear'),
            transforms.VideoCenterCrop(size=(224, 224)),
            transforms.VideoRescale(shift=0),
            transforms.VideoReOrder(order=order),
            transforms.VideoNormalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
        ]
        return trans


class ParseKinetic400(ParseDataset):
    """
    Parse kinetic-400 dataset.
    """
    urlpath = "https://storage.googleapis.com/deepmind-media/Datasets/kinetics400.tar.gz"

    def load_cls_file(self):
        """Parse the category file."""
        base_path = os.path.dirname(self.path)
        csv_file = os.path.join(base_path, "kinetics-400_train.csv")
        cls_file = os.path.join(base_path, "cls2index.json")
        # reuse the cached class-to-index mapping if it already exists
        if os.path.isfile(cls_file):
            with open(cls_file, "r") as f:
                cls2id = json.load(f)
            id2cls = [*cls2id]
            return id2cls, cls2id
        # otherwise build it from the training CSV, numbering classes by first appearance
        cls2id = {}
        id2cls = []
        with open(csv_file, "r") as f:
            for row in csv.DictReader(f):
                if row['label'] not in cls2id:
                    cls2id[row['label']] = len(cls2id)
                    id2cls.append(row['label'])
        # open(..., "w") creates the file, so os.mknod is not needed
        with open(cls_file, "w") as f:
            json.dump(cls2id, f)
        return id2cls, cls2id

    def parse_dataset(self, *args):
        """Traverse the Kinetics-400 dataset file to get the path and label."""
        split = os.path.basename(self.path)
        video_label, video_path = [], []
        _, cls2id = self.load_cls_file()
        label_file = os.path.join(os.path.dirname(self.path), f"kinetics-400_{split}.csv")
        with open(label_file, "rt") as f:
            for row in csv.DictReader(f):
                # files are named <youtube_id>_<start>_<end>.mp4 with zero-padded seconds
                start = row['time_start'].zfill(6)
                end = row['time_end'].zfill(6)
                file_name = f"{row['youtube_id']}_{start}_{end}.mp4"
                video_path.append(os.path.join(self.path, file_name))
                video_label.append(cls2id[row['label']])
        return video_path, video_label
```

Building the network

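Inception3dModule is the building block of the I3D backbone: an Inception module with 3D convolutions, used to process video features. Unlike a plain convolutional layer, it runs four branches in parallel:

1. A 1×1×1 convolution.
2. A 1×1×1 reduction convolution followed by a 3×3×3 convolution.
3. A second reduction-plus-3×3×3 stack with its own widths.
4. A 3×3×3 max pooling followed by a 1×1×1 convolution.

The branch outputs are concatenated along the channel axis, which improves the module's feature extraction ability.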
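The width of each module's output follows from that concatenation. Only the last layer of each branch contributes channels, so the output has out_channels[0] + out_channels[2] + out_channels[4] + out_channels[5] channels. Entries 1 and 3 are only the 1×1×1 reduction widths.

The short script below is a bookkeeping sketch, not part of the repository, with hypothetical helper names. It walks the out_channels lists used by InceptionI3d and checks two things:

- Each module's in_channels matches the previous module's output.
- The backbone ends with 1024 channels, the value I3D passes to its head as backbone_output_channel.

```python
# out_channels lists of the nine mixed layers in InceptionI3d, in forward order.
# Layout: [b0 1x1x1, b1a 1x1x1 reduce, b1b 3x3x3, b2a 1x1x1 reduce, b2b 3x3x3, b3b 1x1x1 after max-pool]
MIXED_LAYERS = [
    ("mixed_3b", [64, 96, 128, 16, 32, 32]),
    ("mixed_3c", [128, 128, 192, 32, 96, 64]),
    ("mixed_4b", [192, 96, 208, 16, 48, 64]),
    ("mixed_4c", [160, 112, 224, 24, 64, 64]),
    ("mixed_4d", [128, 128, 256, 24, 64, 64]),
    ("mixed_4e", [112, 144, 288, 32, 64, 64]),
    ("mixed_4f", [256, 160, 320, 32, 128, 128]),
    ("mixed_5b", [256, 160, 320, 32, 128, 128]),
    ("mixed_5c", [384, 192, 384, 48, 128, 128]),
]


def module_output_channels(out_channels):
    """Channels after concatenating the four branch outputs along axis 1."""
    b0, _b1a, b1b, _b2a, b2b, b3b = out_channels
    return b0 + b1b + b2b + b3b


def trace_channels(stem_channels=192):
    """Return (name, in_channels, out_channels) for every mixed layer.

    stem_channels is the output width of conv3d_2c_3x3; the max-pooling
    layers between modules leave the channel count unchanged.
    """
    trace = []
    channels = stem_channels
    for name, out_channels in MIXED_LAYERS:
        out = module_output_channels(out_channels)
        trace.append((name, channels, out))
        channels = out
    return trace


for name, cin, cout in trace_channels():
    print(f"{name}: {cin} -> {cout}")
```

The printed in_channels (192, 256, 480, 512, 512, 512, 528, 832, 832) are the same numbers written as sums such as `128 + 192 + 96 + 64` in the InceptionI3d constructor.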

```python
from typing import Union, List, Tuple

from mindspore import nn
from mindspore import ops

from src.models.avgpool3d import AvgPool3D
from src.models.layers.unit3d import Unit3D
from src.models.builder import build_layer
from src.utils.class_factory import ClassFactory, ModuleType

__all__ = ['I3D']


@ClassFactory.register(ModuleType.LAYER)
class Inception3dModule(nn.Cell):
    """
    Inception3dModule definition.

    Args:
        in_channels (int): The number of channels of the input feature map.
        out_channels (list[int]): Six channel numbers, in the order
            [b0, b1a, b1b, b2a, b2b, b3b]. The module outputs
            out_channels[0] + out_channels[2] + out_channels[4] + out_channels[5] channels.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> Inception3dModule(in_channels=192, out_channels=[64, 96, 128, 16, 32, 32])
    """

    def __init__(self, in_channels, out_channels):
        super(Inception3dModule, self).__init__()
        self.cat = ops.Concat(axis=1)
        # branch 0: 1x1x1 convolution
        self.b0 = Unit3D(
            in_channels=in_channels,
            out_channels=out_channels[0],
            kernel_size=(1, 1, 1))
        # branch 1: 1x1x1 reduction followed by a 3x3x3 convolution
        self.b1a = Unit3D(
            in_channels=in_channels,
            out_channels=out_channels[1],
            kernel_size=(1, 1, 1))
        self.b1b = Unit3D(
            in_channels=out_channels[1],
            out_channels=out_channels[2],
            kernel_size=(3, 3, 3))
        # branch 2: 1x1x1 reduction followed by a 3x3x3 convolution
        self.b2a = Unit3D(
            in_channels=in_channels,
            out_channels=out_channels[3],
            kernel_size=(1, 1, 1))
        self.b2b = Unit3D(
            in_channels=out_channels[3],
            out_channels=out_channels[4],
            kernel_size=(3, 3, 3))
        # branch 3: 3x3x3 max pooling followed by a 1x1x1 convolution
        self.b3a = ops.MaxPool3D(
            kernel_size=(3, 3, 3),
            strides=(1, 1, 1),
            pad_mode="same")
        self.b3b = Unit3D(
            in_channels=in_channels,
            out_channels=out_channels[5],
            kernel_size=(1, 1, 1))

    def construct(self, x):
        b0 = self.b0(x)
        b1 = self.b1b(self.b1a(x))
        b2 = self.b2b(self.b2a(x))
        b3 = self.b3b(self.b3a(x))
        # concatenate the four branches along the channel axis
        return self.cat((b0, b1, b2, b3))


@ClassFactory.register(ModuleType.LAYER)
class InceptionI3d(nn.Cell):
    """
    InceptionI3d backbone: an Inception-v1 network inflated to 3D.
    This implementation builds a single stream (RGB input by default).

    Args:
        in_channels (int): The number of channels of input frame images. Default: 3.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> InceptionI3d(in_channels=3)
    """

    def __init__(self, in_channels=3):
        super(InceptionI3d, self).__init__()
        self.conv3d_1a_7x7 = Unit3D(
            in_channels=in_channels,
            out_channels=64,
            kernel_size=(7, 7, 7),
            stride=(2, 2, 2))
        # the first two max-pooling layers do not downsample along time
        self.maxpool3d_2a_3x3 = ops.MaxPool3D(
            kernel_size=(1, 3, 3),
            strides=(1, 2, 2),
            pad_mode="same")
        self.conv3d_2b_1x1 = Unit3D(
            in_channels=64,
            out_channels=64,
            kernel_size=(1, 1, 1))
        self.conv3d_2c_3x3 = Unit3D(
            in_channels=64,
            out_channels=192,
            kernel_size=(3, 3, 3))
        self.maxpool3d_3a_3x3 = ops.MaxPool3D(
            kernel_size=(1, 3, 3),
            strides=(1, 2, 2),
            pad_mode="same")
        self.mixed_3b = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 192,
                "out_channels": [64, 96, 128, 16, 32, 32]})
        self.mixed_3c = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 256,
                "out_channels": [128, 128, 192, 32, 96, 64]})
        self.maxpool3d_4a_3x3 = ops.MaxPool3D(
            kernel_size=(3, 3, 3),
            strides=(2, 2, 2),
            pad_mode="same")
        self.mixed_4b = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 128 + 192 + 96 + 64,
                "out_channels": [192, 96, 208, 16, 48, 64]})
        self.mixed_4c = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 192 + 208 + 48 + 64,
                "out_channels": [160, 112, 224, 24, 64, 64]})
        self.mixed_4d = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 160 + 224 + 64 + 64,
                "out_channels": [128, 128, 256, 24, 64, 64]})
        self.mixed_4e = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 128 + 256 + 64 + 64,
                "out_channels": [112, 144, 288, 32, 64, 64]})
        self.mixed_4f = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 112 + 288 + 64 + 64,
                "out_channels": [256, 160, 320, 32, 128, 128]})
        self.maxpool3d_5a_2x2 = ops.MaxPool3D(
            kernel_size=(2, 2, 2),
            strides=(2, 2, 2),
            pad_mode="same")
        self.mixed_5b = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 256 + 320 + 128 + 128,
                "out_channels": [256, 160, 320, 32, 128, 128]})
        self.mixed_5c = build_layer(
            {
                "type": "Inception3dModule",
                "in_channels": 256 + 320 + 128 + 128,
                "out_channels": [384, 192, 384, 48, 128, 128]})

    def construct(self, x):
        """Run the backbone on a clip of shape (N, C, T, H, W)."""
        x = self.conv3d_1a_7x7(x)
        x = self.maxpool3d_2a_3x3(x)
        x = self.conv3d_2b_1x1(x)
        x = self.conv3d_2c_3x3(x)
        x = self.maxpool3d_3a_3x3(x)
        x = self.mixed_3b(x)
        x = self.mixed_3c(x)
        x = self.maxpool3d_4a_3x3(x)
        x = self.mixed_4b(x)
        x = self.mixed_4c(x)
        x = self.mixed_4d(x)
        x = self.mixed_4e(x)
        x = self.mixed_4f(x)
        x = self.maxpool3d_5a_2x2(x)
        x = self.mixed_5b(x)
        x = self.mixed_5c(x)
        return x
```

Average 3D pooling is a common operation in 3D convolutional networks. It shrinks a feature map by replacing each 3D window of the map with the mean of its values. As with 2D pooling, this reduces the feature-map size and the computation of later layers, and can make the model more robust and better at generalizing. In I3D it serves as the neck between the backbone and the classification head.
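To make the neck concrete, here is a NumPy sketch of unpadded ("valid") 3D average pooling, together with the spatiotemporal downsampling of the backbone. The sketch rests on two assumptions, neither stated in the original:

- conv3d_1a_7x7 and the max-pooling layers use "same" padding, so each stride-2 layer halves a dimension, rounding up.
- AvgPool3D applies no padding.

`avg_pool3d`, `same_out` and `backbone_grid` are illustrative helpers, not repository functions. For a 32×224×224 clip the backbone grid comes out as 4×7×7. A (2, 7, 7) window with stride 1 then leaves a 3×1×1 grid, which I3dHead averages over time. Only 8 channels are used instead of 1024 to keep the example small.

```python
import math

import numpy as np


def avg_pool3d(x, kernel, stride=(1, 1, 1)):
    """Unpadded ("valid") 3D average pooling of x with shape (C, T, H, W)."""
    _, t, h, w = x.shape
    kt, kh, kw = kernel
    st, sh, sw = stride
    out_t = (t - kt) // st + 1
    out_h = (h - kh) // sh + 1
    out_w = (w - kw) // sw + 1
    out = np.empty((x.shape[0], out_t, out_h, out_w), dtype=x.dtype)
    for i in range(out_t):
        for j in range(out_h):
            for k in range(out_w):
                window = x[:, i * st:i * st + kt, j * sh:j * sh + kh, k * sw:k * sw + kw]
                out[:, i, j, k] = window.mean(axis=(1, 2, 3))
    return out


def same_out(size, stride):
    """Output length of a 'same'-padded conv/pool layer: ceil(size / stride)."""
    return math.ceil(size / stride)


# (T, H, W) strides of conv3d_1a_7x7, maxpool3d_2a_3x3, maxpool3d_3a_3x3,
# maxpool3d_4a_3x3 and maxpool3d_5a_2x2; all other backbone layers use stride 1.
BACKBONE_STRIDES = [(2, 2, 2), (1, 2, 2), (1, 2, 2), (2, 2, 2), (2, 2, 2)]


def backbone_grid(t, h, w):
    """Spatiotemporal size of the backbone output for a T x H x W input clip."""
    for st, sh, sw in BACKBONE_STRIDES:
        t, h, w = same_out(t, st), same_out(h, sh), same_out(w, sw)
    return t, h, w


grid = backbone_grid(32, 224, 224)
# 8 random channels stand in for the 1024 real ones to keep the example small.
features = np.random.default_rng(0).standard_normal((8, *grid)).astype(np.float32)
pooled = avg_pool3d(features, kernel=(2, 7, 7))
print(grid, pooled.shape)  # (4, 7, 7) (8, 3, 1, 1)
```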

```python
class AvgPooling3D(nn.Cell):
    """
    A module of average pooling for 3D video features.

    Args:
        kernel_size (Union[int, List[int], Tuple[int]]): The size of kernel window used to take the
            average value. Default: (1, 1, 1).
        strides (Union[int, List[int], Tuple[int]]): The distance of kernel moving. Default: (1, 1, 1).

    Inputs:
        x (Tensor): The input Tensor.

    Returns:
        Tensor, the pooled Tensor.
    """

    def __init__(self,
                 kernel_size: Union[int, List[int], Tuple[int]] = (1, 1, 1),
                 strides: Union[int, List[int], Tuple[int]] = (1, 1, 1),
                 ) -> None:
        super(AvgPooling3D, self).__init__()
        # accept a single int and expand it to (depth, height, width)
        if isinstance(kernel_size, int):
            kernel_size = (kernel_size, kernel_size, kernel_size)
        kernel_size = tuple(kernel_size)
        if isinstance(strides, int):
            strides = (strides, strides, strides)
        strides = tuple(strides)
        self.pool = AvgPool3D(kernel_size, strides)

    def construct(self, x):
        x = self.pool(x)
        return x
```

With the InceptionI3d backbone and AvgPooling3D in place, the I3D classification head and the complete network are built as follows:

```python
@ClassFactory.register(ModuleType.LAYER)
class I3dHead(nn.Cell):
    """
    I3dHead definition.

    Args:
        in_channels (int): Input channel.
        num_classes (int): The number of classes. Default: 400.
        dropout_keep_prob (float): Keep probability of the dropout layer. Default: 0.5.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> I3dHead(in_channels=1024, num_classes=400, dropout_keep_prob=0.5)
    """

    def __init__(self, in_channels, num_classes=400, dropout_keep_prob=0.5):
        super(I3dHead, self).__init__()
        self._num_classes = num_classes
        self.dropout = nn.Dropout(dropout_keep_prob)
        # a 1x1x1 convolution with bias acts as the per-position classifier
        self.logits = Unit3D(
            in_channels=in_channels,
            out_channels=self._num_classes,
            kernel_size=(1, 1, 1),
            activation=None,
            norm=None,
            has_bias=True)
        self.mean_op = ops.ReduceMean()
        self.squeeze = ops.Squeeze(3)

    def construct(self, x):
        # (N, C, T, 1, 1) -> (N, num_classes, T, 1, 1)
        x = self.logits(self.dropout(x))
        # drop the two singleton spatial axes -> (N, num_classes, T)
        x = self.squeeze(self.squeeze(x))
        # average the per-time-step logits -> (N, num_classes)
        x = self.mean_op(x, 2)
        return x


@ClassFactory.register(ModuleType.MODEL)
class I3D(nn.Cell):
    """
    I3D (Inflated 3D ConvNet) video classification network: an InceptionI3d
    backbone, a 3D average-pooling neck and an I3dHead classifier.

    Args:
        in_channel (int): Number of channels of input data. Default: 3.
        num_classes (int): Number of classes, it is the size of classification score for every sample,
            i.e. :math:`CLASSES_{out}`. Default: 400.
        keep_prob (float): Keep probability of the dropout layer in the head. Default: 0.5.
        backbone_output_channel (int): Number of channels output by the backbone. Default: 1024.

    Inputs:
        - **x** (Tensor) - Tensor of shape :math:`(N, C_{in}, D_{in}, H_{in}, W_{in})`.

    Outputs:
        Tensor of shape :math:`(N, CLASSES_{out})`.

    Supported Platforms:
        ``GPU``

    Examples:
        >>> import numpy as np
        >>> import mindspore as ms
        >>> net = I3D()
        >>> x = ms.Tensor(np.ones([1, 3, 32, 224, 224]), ms.float32)
        >>> output = net(x)
        >>> print(output.shape)
        (1, 400)

    Citation:

    .. code-block::

        Carreira, J. and Zisserman, A. Quo Vadis, Action Recognition? A New Model
        and the Kinetics Dataset. CVPR 2017.
    """

    def __init__(self,
                 in_channel: int = 3,
                 num_classes: int = 400,
                 keep_prob: float = 0.5,
                 backbone_output_channel=1024):
        super(I3D, self).__init__()
        self.backbone = InceptionI3d(in_channels=in_channel)
        self.neck = AvgPooling3D(kernel_size=(2, 7, 7))
        self.head = I3dHead(in_channels=backbone_output_channel,
                            num_classes=num_classes,
                            dropout_keep_prob=keep_prob)

    def construct(self, x):
        x = self.backbone(x)
        x = self.neck(x)
        x = self.head(x)
        return x
```

Model training and evaluation

This section trains the I3D network built above; the evaluation results are listed at the end of the article:

```python
from mindspore import context, load_checkpoint, load_param_into_net
from mindspore.context import ParallelMode
from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor
from mindspore.train import Model
from mindspore.nn.metrics import Accuracy
from mindspore.communication.management import init, get_rank, get_group_size

from src.utils.check_param import Validator, Rel
from src.utils.config import parse_args, Config
from src.loss.builder import build_loss
from src.schedule.builder import get_lr
from src.optim.builder import build_optimizer
from src.data.builder import build_dataset, build_transforms
from src.models import build_model


def main(pargs):
    # set config context
    config = Config(pargs.config)
    context.set_context(**config.context)

    # run distribute
    if config.train.run_distribute:
        if config.device_target == "Ascend":
            init()
        else:
            init("nccl")
        context.set_auto_parallel_context(device_num=get_group_size(),
                                          parallel_mode=ParallelMode.DATA_PARALLEL,
                                          gradients_mean=True)
        ckpt_save_dir = config.train.ckpt_path + "ckpt_" + str(get_rank()) + "/"
    else:
        ckpt_save_dir = config.train.ckpt_path

    # prepare dataset
    transforms = build_transforms(config.data_loader.train.map.operations)
    data_set = build_dataset(config.data_loader.train.dataset)
    data_set.transform = transforms
    dataset_train = data_set.run()
    Validator.check_int(dataset_train.get_dataset_size(), 0, Rel.GT)
    batches_per_epoch = dataset_train.get_dataset_size()

    # set network
    network = build_model(config.model)

    # set loss
    network_loss = build_loss(config.loss)

    # set lr
    lr_cfg = config.learning_rate
    lr_cfg.steps_per_epoch = int(batches_per_epoch / config.data_loader.group_size)
    lr = get_lr(lr_cfg)

    # set optimizer
    config.optimizer.params = network.trainable_params()
    config.optimizer.learning_rate = lr
    network_opt = build_optimizer(config.optimizer)

    if config.train.pre_trained:
        # load pretrain model
        param_dict = load_checkpoint(config.train.pretrained_model)
        load_param_into_net(network, param_dict)

    # set checkpoint for the network
    ckpt_config = CheckpointConfig(
        save_checkpoint_steps=config.train.save_checkpoint_steps,
        keep_checkpoint_max=config.train.keep_checkpoint_max)
    ckpt_callback = ModelCheckpoint(prefix=config.model_name,
                                    directory=ckpt_save_dir,
                                    config=ckpt_config)

    # init the whole Model
    model = Model(network,
                  network_loss,
                  network_opt,
                  metrics={"Accuracy": Accuracy()})

    # begin to train
    print('[Start training `{}`]'.format(config.model_name))
    print("=" * 80)
    model.train(config.train.epochs,
                dataset_train,
                callbacks=[ckpt_callback, LossMonitor()],
                dataset_sink_mode=config.dataset_sink_mode)
    print('[End of training `{}`]'.format(config.model_name))


if __name__ == '__main__':
    args = parse_args()
    main(args)
```

Visualizing model predictions

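This section runs the trained model on a randomly chosen video, prints the predicted class, and saves the video with the predicted label overlaid as a GIF.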
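Inference feeds the network a tensor of shape (1, C, T, H, W). The NumPy code below is a rough, framework-free sketch of what a transform list like Kinetic400.default_transform does to 16 decoded frames: short-edge resize to 256, 224×224 center crop, rescale, normalization with ImageNet mean/std, and channel reorder.

It is not the repository's implementation, and it differs in three labeled ways:

- It uses nearest-neighbour resizing instead of the linear interpolation of VideoShortEdgeResize.
- It assumes VideoRescale(shift=0) divides by 255.
- It normalizes before reordering, which is equivalent because the normalization is per channel.

The helper names are hypothetical.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def short_edge_resize_nn(video, size):
    """Nearest-neighbour resize of (T, H, W, C) so the shorter side equals `size`."""
    _, h, w, _ = video.shape
    scale = size / min(h, w)
    new_h, new_w = round(h * scale), round(w * scale)
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return video[:, rows][:, :, cols]


def center_crop(video, size):
    """Crop a size x size window from the middle of every frame."""
    _, h, w, _ = video.shape
    top = (h - size) // 2
    left = (w - size) // 2
    return video[:, top:top + size, left:left + size]


def preprocess(frames):
    """uint8 frames (T, H, W, 3) -> float32 batch (1, 3, T, 224, 224)."""
    x = short_edge_resize_nn(frames, 256)
    x = center_crop(x, 224)
    x = x.astype(np.float32) / 255.0   # assumed behaviour of VideoRescale(shift=0)
    x = (x - MEAN) / STD                # per-channel normalization, channels last
    x = x.transpose(3, 0, 1, 2)         # (T, H, W, C) -> (C, T, H, W), like VideoReOrder((3, 0, 1, 2))
    return x[np.newaxis]                # add the batch axis, like ExpandDims(video, 0)


rng = np.random.default_rng(0)
clip = rng.integers(0, 256, size=(16, 240, 320, 3)).astype(np.uint8)  # stand-in for 16 decoded frames
batch = preprocess(clip)
print(batch.shape)  # (1, 3, 16, 224, 224)
```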

```python
import json

import numpy as np
import cv2
import decord
import moviepy.editor as mpy

import mindspore as ms
from mindspore import context, load_checkpoint, load_param_into_net, ops, Tensor

from src.utils.check_param import Validator, Rel
from src.utils.config import parse_args, Config
from src.data.builder import build_dataset, build_transforms
from src.models import build_model

FONTFACE = cv2.FONT_HERSHEY_DUPLEX
FONTSCALE = 0.6
FONTCOLOR = (255, 255, 255)
BGBLUE = (0, 119, 182)
THICKNESS = 1
LINETYPE = 1


def infer_classification(pargs):
    # set config context
    config = Config(pargs.config)
    context.set_context(**config.context)

    # prepare dataset
    transforms = build_transforms(config.data_loader.eval.map.operations)
    data_set = build_dataset(config.data_loader.eval.dataset)
    data_set.transform = transforms
    dataset_infer = data_set.run()
    Validator.check_int(dataset_infer.get_dataset_size(), 0, Rel.GT)

    # set network and load the trained weights
    network = build_model(config.model)
    param_dict = load_checkpoint(config.infer.pretrained_model)
    load_param_into_net(network, param_dict)
    network.set_train(False)   # make sure dropout runs in inference mode

    expand_dims = ops.ExpandDims()

    # pick a random video from the evaluation set
    vid_idx = np.random.randint(len(data_set.video_path))
    video_path = data_set.video_path[vid_idx]
    video_reader = decord.VideoReader(video_path, num_threads=1)
    # read the first 16 frames as a (T, H, W, C) array
    video = np.stack([video_reader[k].asnumpy() for k in range(16)], axis=0)
    for t in transforms:
        video = t(video)

    # Begin to eval.
    video = Tensor(video, ms.float32)
    video = expand_dims(video, 0)        # add the batch axis
    result = network(video).asnumpy()
    print("This is {}-th category".format(result.argmax()))
    return result, video_path


def add_label(frame, label, BGCOLOR=BGBLUE):
    """Draw the predicted label in a filled box at the top-left corner of the frame."""
    threshold = 30

    def split_label(label):
        # wrap long labels into lines shorter than `threshold` characters
        label = label.split()
        lines, cline = [], ''
        for word in label:
            if len(cline) + len(word) < threshold:
                cline = cline + ' ' + word
            else:
                lines.append(cline)
                cline = word
        if cline != '':
            lines += [cline]
        return lines

    if len(label) > 30:
        label = split_label(label)
    else:
        label = [label]
    label = ['Action: '] + label

    sizes = []
    for line in label:
        sizes.append(cv2.getTextSize(line, FONTFACE, FONTSCALE, THICKNESS)[0])
    box_width = max([x[0] for x in sizes]) + 10
    text_height = sizes[0][1]
    box_height = len(sizes) * (text_height + 6)

    cv2.rectangle(frame, (0, 0), (box_width, box_height), BGCOLOR, -1)
    for i, line in enumerate(label):
        location = (5, (text_height + 6) * i + text_height + 3)
        cv2.putText(frame, line, location, FONTFACE, FONTSCALE, FONTCOLOR, THICKNESS, LINETYPE)
    return frame


if __name__ == '__main__':
    cls_file = '/home/publicfile/kinetics-400/cls2index.json'
    with open(cls_file, "r") as f:
        cls2id = json.load(f)
    class_name = {v: k for k, v in cls2id.items()}

    args = parse_args()
    result, video_path = infer_classification(args)
    label = class_name[int(result.argmax())]

    # overlay the predicted label on frames 1..49 and save them as a GIF
    video = decord.VideoReader(video_path)
    frames = [x.asnumpy() for x in video]
    vid_frames = [add_label(frame, label) for frame in frames[1:50]]
    vid = mpy.ImageSequenceClip(vid_frames, fps=24)
    vid.write_gif('/home/i3d_mindspore-main/src/result.gif')
```

Training performance

```text
epoch: 1 step: 1, loss is 5.988250255584717
epoch: 1 step: 2, loss is 6.022036075592041
epoch: 1 step: 3, loss is 5.980734348297119
epoch: 1 step: 4, loss is 5.944761276245117
epoch: 1 step: 5, loss is 5.96290922164917
epoch: 1 step: 6, loss is 6.018253326416016
epoch: 1 step: 7, loss is 6.002189636230469
epoch: 1 step: 8, loss is 5.987124443054199
epoch: 1 step: 9, loss is 5.987508773803711
epoch: 1 step: 10, loss is 6.022692680358887
epoch: 1 step: 11, loss is 5.963132381439209
epoch: 1 step: 12, loss is 5.998828411102295
epoch: 1 step: 13, loss is 6.029492378234863
epoch: 1 step: 14, loss is 5.980365753173828
epoch: 1 step: 15, loss is 6.032135963439941
epoch: 1 step: 16, loss is 6.006852626800537
epoch: 1 step: 17, loss is 6.035465240478516
epoch: 1 step: 18, loss is 5.993041038513184
epoch: 1 step: 19, loss is 6.0041608810424805
epoch: 1 step: 20, loss is 6.035679340362549
```
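These first-epoch losses sit around 6.0. That is about what cross-entropy gives for a uniform prediction over the 400 classes: -ln(1/400) = ln 400. In other words, after 20 steps the network is still close to chance level, as expected at the very start of training. The arithmetic, as a one-line check:

```python
import math

NUM_CLASSES = 400
# Cross-entropy when every class receives probability 1 / NUM_CLASSES.
uniform_loss = -math.log(1.0 / NUM_CLASSES)
print(f"{uniform_loss:.3f}")  # 5.991
```

A loss that stays pinned near this value for many epochs would suggest the model is not learning. A loss that drops below it indicates the predictions have become better than uniform.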

Evaluation performance

```text
step:[ 1240/ 1242], metrics:['Top_1_Accuracy: 0.6719', 'Top_5_Accuracy: 0.8705'], loss:[0.861/1.585], time:14687.102 ms,
step:[ 1241/ 1242], metrics:['Top_1_Accuracy: 0.6720', 'Top_5_Accuracy: 0.8706'], loss:[1.857/1.585], time:15122.631 ms,
step:[ 1242/ 1242], metrics:['Top_1_Accuracy: 0.6719', 'Top_5_Accuracy: 0.8706'], loss:[2.374/1.586], time:13412.127 ms,
Epoch time: 19056676.745 ms, per step time: 15343.540 ms, avg loss: 1.586
{'Top_1_Accuracy': 0.6717995169082126, 'Top_5_Accuracy': 0.8705213365539453}
preprocess_batch: 1242; batch_queue: 0, 0, 0, 0, 0, 0, 0, 0, 0, 0; push_start_time: 2023-02-09-10:06:25.384.821, 2023-02-09-10:06:42.071.273, 2023-02-09-10:06:56.265.424, 2023-02-09-10:07:11.964.719, 2023-02-09-10:07:28.630.509, 2023-02-09-10:07:43.888.313, 2023-02-09-10:07:58.374.929, 2023-02-09-10:08:13.298.385, 2023-02-09-10:08:28.411.211, 2023-02-09-10:08:41.837.745; push_end_time: 2023-02-09-10:06:25.384.904, 2023-02-09-10:06:42.073.247, 2023-02-09-10:06:56.265.505, 2023-02-09-10:07:11.964.839, 2023-02-09-10:07:28.632.493, 2023-02-09-10:07:43.888.420, 2023-02-09-10:07:58.375.017, 2023-02-09-10:08:13.298.464, 2023-02-09-10:08:28.411.292, 2023-02-09-10:08:41.837.828.
```
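Top_1_Accuracy counts a clip as correct when its true class is the highest-scoring class. Top_5_Accuracy counts it as correct when the true class is among the five highest-scoring classes. Here that is roughly 67.2% and 87.1% of the evaluation clips.

The evaluation code that produced this log is not shown. The NumPy function below is therefore only a minimal sketch of the standard definition (`top_k_accuracy` is a hypothetical name), checked on a three-class toy example with k = 1 and k = 2.

```python
import numpy as np


def top_k_accuracy(logits, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    topk = np.argsort(logits, axis=1)[:, -k:]          # indices of the k largest scores per row
    hits = (topk == labels[:, None]).any(axis=1)
    return float(hits.mean())


logits = np.array([[0.1, 0.7, 0.2],    # predicts class 1
                   [0.5, 0.3, 0.2],    # predicts class 0, class 1 is second
                   [0.2, 0.3, 0.5]])   # predicts class 2, class 0 is last
labels = np.array([1, 1, 0])
print(top_k_accuracy(logits, labels, 1), top_k_accuracy(logits, labels, 2))
```

Top-5 accuracy can never be lower than top-1 accuracy, which is consistent with the 0.8705 versus 0.6718 reported above.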