Preface

First, build the FFmpeg .so libraries on Linux. For details, see the CSDN blog post "Android NDK (ndk-r16b) cross-compiling FFmpeg (3.3.9)" by jszlittlecat_720.

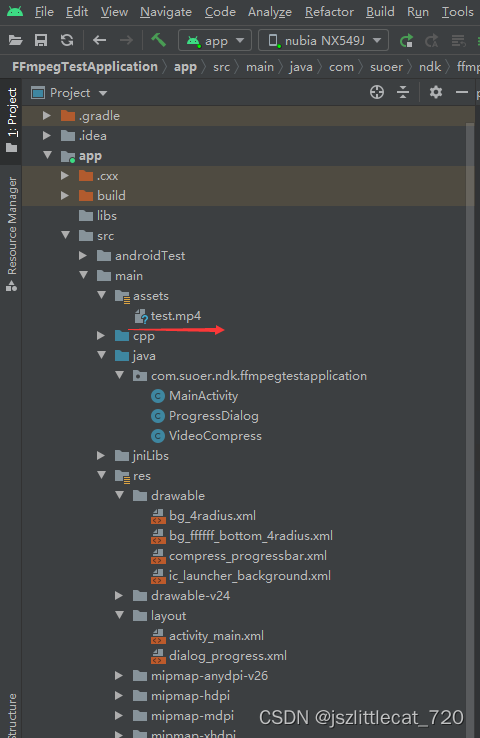

1. Create the VideoCompress class

```java
package com.suoer.ndk.ffmpegtestapplication;

public class VideoCompress {

    /**
     * compressVideo native method
     *
     * @param compressCommand the compression command (FFmpeg arguments)
     * @param callback        compression callback
     */
    public native void compressVideo(String[] compressCommand, CompressCallback callback);

    // CompressCallback: compression progress callback
    public interface CompressCallback {

        /**
         * onCompress
         *
         * @param current current compression progress
         * @param total   total progress
         */
        void onCompress(int current, int total);
    }
}
```

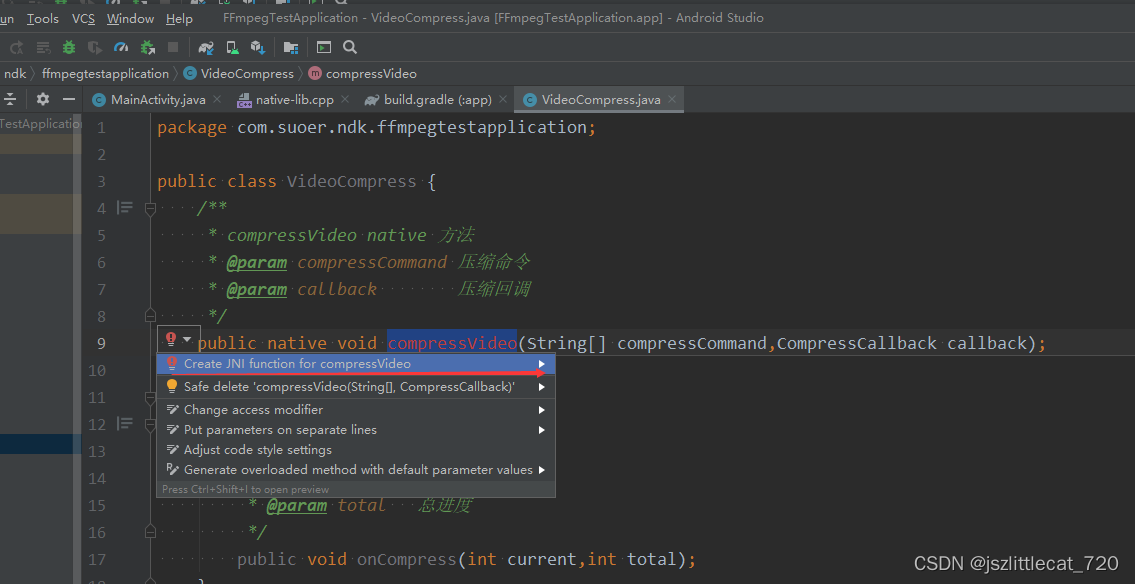

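compressVideo is shown in red. Hover the mouse over it and a red light bulb appears on the left; click the bulb.

Choose Create JNI function for compressVideo.

Android Studio opens native-lib.cpp and generates the method Java_com_suoer_ndk_ffmpegtestapplication_VideoCompress_compressVideo.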
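The long generated name follows the JNI naming rule: "Java_", then the fully qualified class name, then "_", then the method name, with dots turned into underscores. As a minimal sketch of that rule (the helper names are hypothetical, and only the two escapes relevant here are implemented: '.' becomes '_' and a literal '_' becomes "_1"; the JNI specification also defines "_0xxxx", "_2" and "_3" escapes plus a "__signature" suffix for overloaded methods, which this sketch omits):

```cpp
#include <string>

// Escape one Java name the way JNI symbol names require.
// Only two rules are handled: '.' (package separator) -> '_'
// and a literal '_' -> "_1".
std::string mangleJniName(const std::string &name) {
    std::string out;
    for (char c : name) {
        if (c == '.') {
            out += '_';
        } else if (c == '_') {
            out += "_1";
        } else {
            out += c;
        }
    }
    return out;
}

// Symbol for a non-overloaded native method:
// "Java_" + mangled class name + "_" + mangled method name.
std::string jniSymbol(const std::string &qualifiedClass, const std::string &method) {
    return "Java_" + mangleJniName(qualifiedClass) + "_" + mangleJniName(method);
}
```

For example, jniSymbol("com.suoer.ndk.ffmpegtestapplication.VideoCompress", "compressVideo") yields exactly the function name Android Studio generated above, which is why renaming the package or class later requires renaming the C++ function too.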

The video compression itself is implemented in that generated JNI function.
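Inside that function, the Java String[] has to become the argc/argv pair that FFmpeg's main-style entry point expects; argv[0] is the program name ("ffmpeg"), since FFmpeg's option parser, like any main(), starts reading options at index 1. A minimal, JNI-free sketch of the ownership pattern, assuming plain std::string inputs in place of jstring (buildArgv and freeArgv are hypothetical helper names): each argument is copied with strdup() so it can later be released with free(). In real JNI code, the buffer returned by GetStringUTFChars() must instead be handed back with ReleaseStringUTFChars(), which is why copying it first is convenient.

```cpp
#include <cstdlib>
#include <cstring>
#include <string>
#include <vector>

// Build a NULL-terminated argv from a list of arguments.
// Every string is a private strdup() copy, so the caller owns all memory
// and releases it with freeArgv().
char **buildArgv(const std::vector<std::string> &args, int *argcOut) {
    *argcOut = 0;
    int argc = static_cast<int>(args.size());
    // One extra slot so that argv[argc] == NULL, as in a real main().
    char **argv = static_cast<char **>(std::calloc(argc + 1, sizeof(char *)));
    if (argv == nullptr) {
        return nullptr;
    }
    for (int i = 0; i < argc; ++i) {
        argv[i] = strdup(args[i].c_str());
    }
    *argcOut = argc;
    return argv;
}

// Free each copied string, then the array itself.
void freeArgv(char **argv, int argc) {
    for (int i = 0; i < argc; ++i) {
        std::free(argv[i]);
    }
    std::free(argv);
}
```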

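2. Compress the video when the TextView in MainActivity is tapped

2.1 Handling permissions

Compressing a video requires permission to read and write files.

Open AndroidManifest.xml

and add the storage read/write permissions (note that on Android 10 (API 29) and later, scoped storage restricts direct access to paths under Environment.getExternalStorageDirectory(); apps targeting API 29 can opt out with android:requestLegacyExternalStorage="true" on the <application> element):

```xml
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
```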

权限处理使用rxpermissions

rxpermissions github地址:GitHub - tbruyelle/RxPermissions: Android runtime permissions powered by RxJava2

rxpermissions使用方式

打开项目下的build.gradle添加如下代码:

maven { url 'https://jitpack.io' }

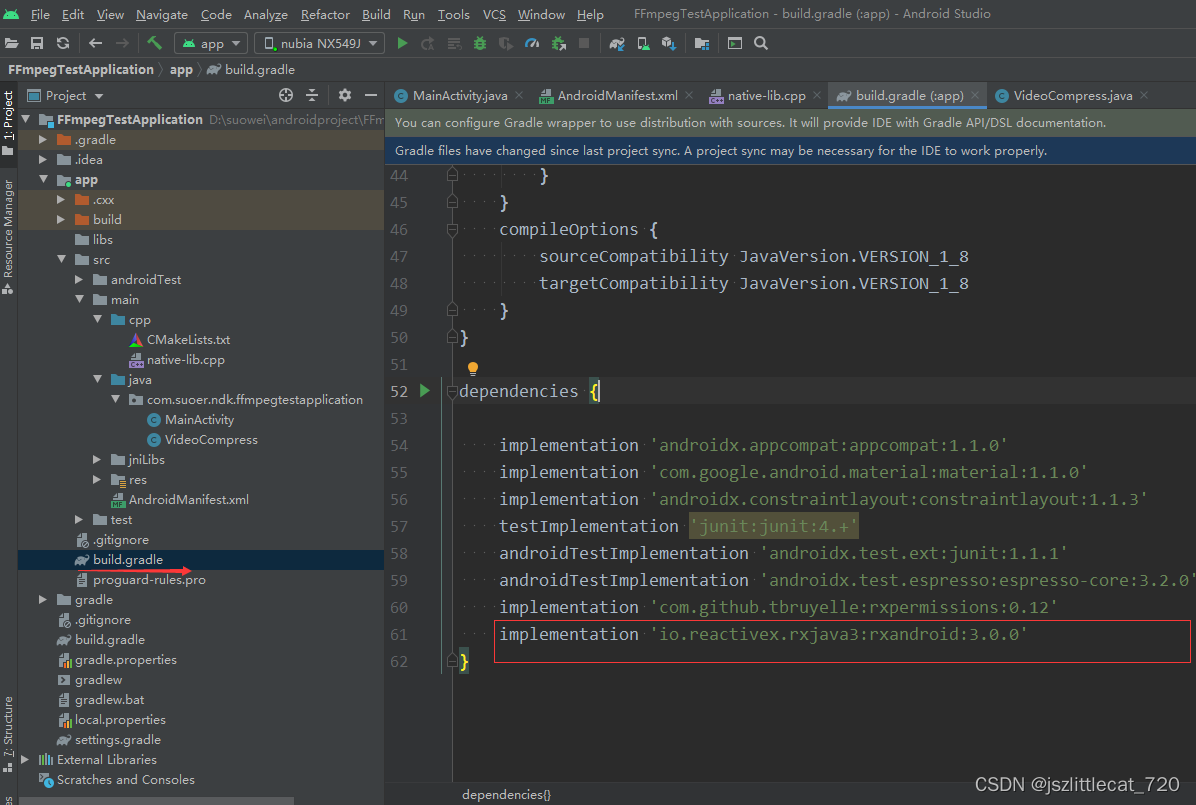

打开app下的build.gradle添加如下代码:

implementation 'com.github.tbruyelle:rxpermissions:0.12'

点击同步按钮,同步文件

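2.2 Running the long operation on a worker thread

Video compression is a long-running operation, so it must run on a worker thread rather than the main thread.

RxAndroid is used to run the work on a worker thread and to switch between the worker thread and the main thread.

RxAndroid on GitHub: ReactiveX/RxAndroid (RxJava bindings for Android)

To use RxAndroid:

Open the project-level build.gradle and add:

```groovy
maven { url "https://oss.jfrog.org/libs-snapshot" }
```

This repository was only needed for RxAndroid snapshot builds; the 3.0.0 release used here is published to Maven Central, so the line can normally be omitted.

Open app/build.gradle and add:

```groovy
implementation 'io.reactivex.rxjava3:rxandroid:3.0.0'
```

Click Sync Now to sync the files.

MainActivity.java is as follows: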

```java
package com.suoer.ndk.ffmpegtestapplication;

import android.Manifest;
import android.os.Bundle;
import android.os.Environment;
import android.util.Log;
import android.view.View;
import android.widget.TextView;

import com.tbruyelle.rxpermissions3.RxPermissions;

import java.io.File;

import androidx.appcompat.app.AppCompatActivity;

import io.reactivex.rxjava3.android.schedulers.AndroidSchedulers;
import io.reactivex.rxjava3.core.Observable;
import io.reactivex.rxjava3.functions.Consumer;
import io.reactivex.rxjava3.functions.Function;
import io.reactivex.rxjava3.schedulers.Schedulers;

public class MainActivity extends AppCompatActivity {

    // mInFile: path of the video to compress
    private File mInFile = new File(Environment.getExternalStorageDirectory(), "test.mp4");
    // mOutFile: path of the compressed video
    private File mOutFile = new File(Environment.getExternalStorageDirectory(), "out.mp4");

    // Used to load the 'native-lib' library on application startup.
    static {
        System.loadLibrary("native-lib");
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        TextView tv = findViewById(R.id.sample_text);
        //tv.setText("FFmpeg version: " + stringFromJNI());
        tv.setText("Compress");

        // Tapping the TextView starts the video compression
        tv.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // Compression reads and writes files, so request the storage permissions first
                RxPermissions rxPermissions = new RxPermissions(MainActivity.this);
                rxPermissions.request(Manifest.permission.READ_EXTERNAL_STORAGE,
                        Manifest.permission.WRITE_EXTERNAL_STORAGE)
                        .subscribe(new Consumer<Boolean>() {
                            @Override
                            public void accept(Boolean granted) throws Throwable {
                                if (granted) {
                                    // Permissions granted: compress the video
                                    compressVideo();
                                }
                            }
                        });
            }
        });
    }

    /**
     * Runs the time-consuming compression on a worker thread.
     */
    private void compressVideo() {
        // FFmpeg compression command: ffmpeg -i test.mp4 -b:v 1024k out.mp4
        // -b:v 1024k sets the video bitrate: higher bitrate = clearer but larger video
        // test.mp4: the file to compress; out.mp4: the compressed output
        String[] compressCommand = {"ffmpeg", "-i", mInFile.getAbsolutePath(),
                "-b:v", "1024k", mOutFile.getAbsolutePath()};

        // Compression is slow, so it runs off the main thread
        Observable.just(compressCommand).map(new Function<String[], File>() {
            @Override
            public File apply(String[] compressCommand) throws Throwable {
                VideoCompress videoCompress = new VideoCompress();
                videoCompress.compressVideo(compressCommand, new VideoCompress.CompressCallback() {
                    @Override
                    public void onCompress(int current, int total) {
                        Log.e("TAG", "onCompress: progress " + current + "/" + total);
                    }
                });
                return mOutFile;
            }
        }).subscribeOn(Schedulers.io())
                .observeOn(AndroidSchedulers.mainThread())
                .subscribe(new Consumer<File>() {
                    @Override
                    public void accept(File file) throws Throwable {
                        // Compression finished
                        Log.e("TAG", "accept: compression finished!");
                    }
                }, new Consumer<Throwable>() {
                    @Override
                    public void accept(Throwable throwable) throws Throwable {
                        // Without an error consumer, RxJava routes errors to
                        // RxJavaPlugins.onError, which crashes the app by default
                        Log.e("TAG", "compression failed", throwable);
                    }
                });
    }

    /**
     * A native method that is implemented by the 'native-lib' native library,
     * which is packaged with this application.
     */
    public native String stringFromJNI();
}
```

3. Copy the other files needed to run FFmpeg commands

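Create a compat folder in the include directory (jniLibs/include) and copy the required headers os2threads.h, va_copy.h and w32pthreads.h into it.

These three headers are in the compat folder of ffmpeg-3.3.9, the folder extracted from the downloaded ffmpeg-3.3.9.tar.gz.

Create an other folder in the jniLibs folder.

Copy cmdutils.h, cmdutils_common_opts.h, config.h and ffmpeg.h from the FFmpeg tree configured and built on Linux into the other folder.

Copy the four files cmdutils.c, ffmpeg.c, ffmpeg_filter.c and ffmpeg_opt.c from the extracted ffmpeg-3.3.9 folder into the cpp folder.

Then update CMakeLists.txt.

CMakeLists.txt is as follows: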

```cmake
# For more information about using CMake with Android Studio, read the
# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.
cmake_minimum_required(VERSION 3.10.2)

# Declares and names the project.
project("ffmpegtestapplication")

# If the compiler is GCC, add C++11 support to the compile options
# (current NDKs compile with Clang, so this branch normally does not run)
if(CMAKE_COMPILER_IS_GNUCXX)
    set(CMAKE_CXX_FLAGS "-std=c++11 ${CMAKE_CXX_FLAGS}")
    message(STATUS "optional:-std=c++11")
endif(CMAKE_COMPILER_IS_GNUCXX)

# Header directories, relative to the folder containing this CMakeLists.txt
include_directories(${CMAKE_SOURCE_DIR}/../jniLibs/include)
include_directories(${CMAKE_SOURCE_DIR}/../jniLibs/other)

# Creates and names a library, sets it as either STATIC
# or SHARED, and provides the relative paths to its source code.
# You can define multiple libraries, and CMake builds them for you.
# Gradle automatically packages shared libraries with your APK.
add_library( # Sets the name of the library.
        native-lib

        # Sets the library as a shared library.
        SHARED

        # Provides a relative path to your source file(s).
        native-lib.cpp
        # Extra C sources copied from the FFmpeg source tree
        cmdutils.c
        ffmpeg.c
        ffmpeg_filter.c
        ffmpeg_opt.c)

# The prebuilt FFmpeg libraries below exist only for armeabi-v7a, so the app
# must be built for that ABI alone (for example, abiFilters 'armeabi-v7a'
# in android.defaultConfig.ndk of app/build.gradle).

# Codecs (the most important library)
add_library(avcodec SHARED IMPORTED)
set_target_properties(avcodec PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavcodec-57.so)

# Device input/output
add_library(avdevice SHARED IMPORTED)
set_target_properties(avdevice PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavdevice-57.so)

# Filters and effects
add_library(avfilter SHARED IMPORTED)
set_target_properties(avfilter PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavfilter-6.so)

# Container formats (muxing/demuxing)
add_library(avformat SHARED IMPORTED)
set_target_properties(avformat PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavformat-57.so)

# Resampling
add_library(avresample SHARED IMPORTED)
set_target_properties(avresample PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavresample-3.so)

# Utilities (most of the other libraries depend on this one)
add_library(avutil SHARED IMPORTED)
set_target_properties(avutil PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libavutil-55.so)

# Post-processing
add_library(postproc SHARED IMPORTED)
set_target_properties(postproc PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libpostproc-54.so)

# Audio sample format conversion
add_library(swresample SHARED IMPORTED)
set_target_properties(swresample PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libswresample-2.so)

# Video pixel format conversion and scaling
add_library(swscale SHARED IMPORTED)
set_target_properties(swscale PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/../jniLibs/armeabi-v7a/libswscale-4.so)

# Searches for a specified prebuilt library and stores the path as a
# variable. Because CMake includes system libraries in the search path by
# default, you only need to specify the name of the public NDK library
# you want to add. CMake verifies that the library exists before
# completing its build.
find_library( # Sets the name of the path variable.
        log-lib

        # Specifies the name of the NDK library that
        # you want CMake to locate.
        log)

# Specifies libraries CMake should link to your target library. You
# can link multiple libraries, such as libraries you define in this
# build script, prebuilt third-party libraries, or system libraries.
target_link_libraries( # Specifies the target library.
        native-lib avcodec avdevice avfilter avformat avresample avutil postproc swresample swscale

        # Links the target library to the log library
        # included in the NDK.
        ${log-lib})
```

Running the app on a device produces the following errors:

D:\suowei\androidproject\FFmpegTestApplication\app\src\main\cpp\cmdutils.c:47:10: fatal error: 'libavutil/libm.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavutil中的libm.h拷贝至android项目jniLibs下的libavutil中

D:\suowei\androidproject\FFmpegTestApplication\app\src\main\cpp\ffmpeg.c:52:10: fatal error: 'libavutil/internal.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavutil中的internal.h拷贝至android项目jniLibs下的libavutil中

重新Run app至手机设备,出现如下图所示错误

D:\suowei\androidproject\FFmpegTestApplication\app\src\main\cpp\cmdutils.c:58:10: fatal error: 'libavformat/network.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavformat中的network.h拷贝至android项目jniLibs下的libavformat中

D:\suowei\androidproject\FFmpegTestApplication\app\src\main\jniLibs\include\libavutil\internal.h:42:10: fatal error: 'timer.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavutil中的timer.h拷贝至android项目jniLibs下的libavutil中

重新Run app至手机设备,出现如下图所示错误

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/../jniLibs/include\libavformat/network.h:29:10: fatal error: 'os_support.h' file not found

#include "os_support.h"

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavformat中的os_support.h拷贝至android项目jniLibs下的libavformat中

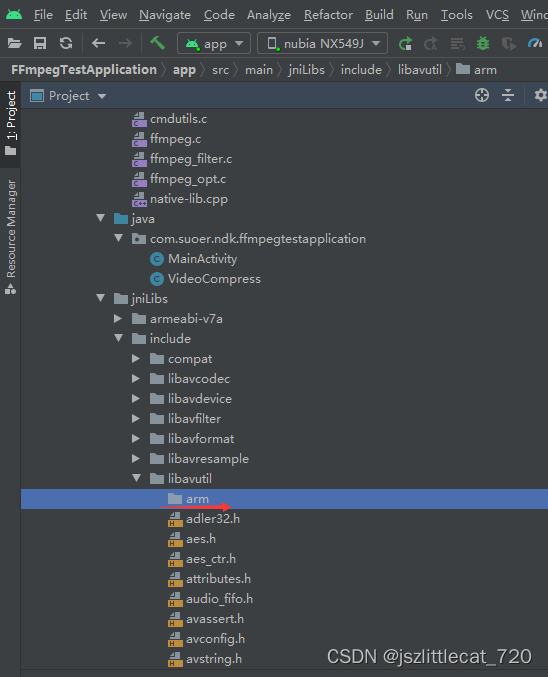

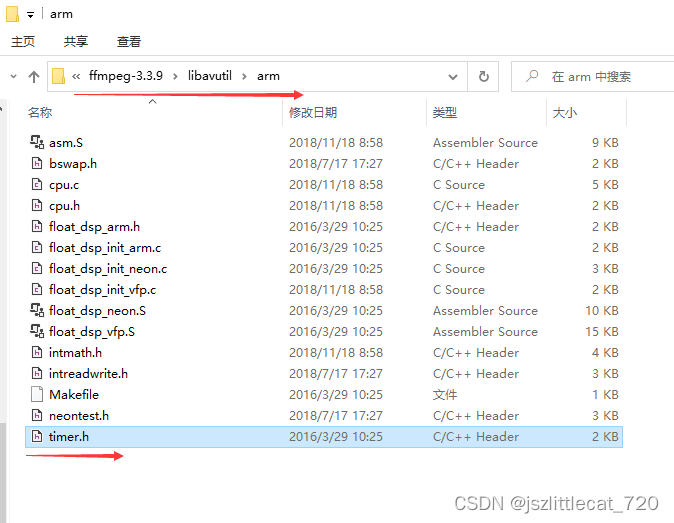

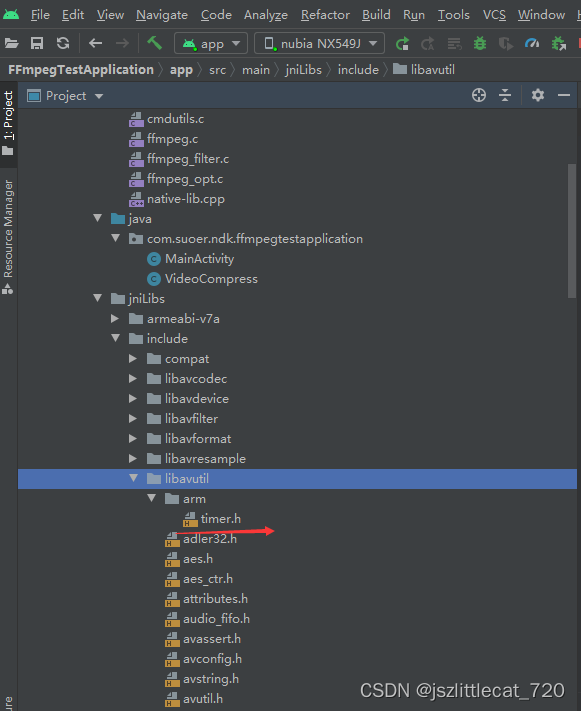

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/../jniLibs/include\libavutil/timer.h:44:13: fatal error: 'arm/timer.h' file not found

解决方式:android项目jniLibs目录下的libavutil文件夹下创建arm文件夹

将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavutil中的arm文件夹下的timer.h拷贝至android项目jniLibs下的libavutil中的arm文件夹下

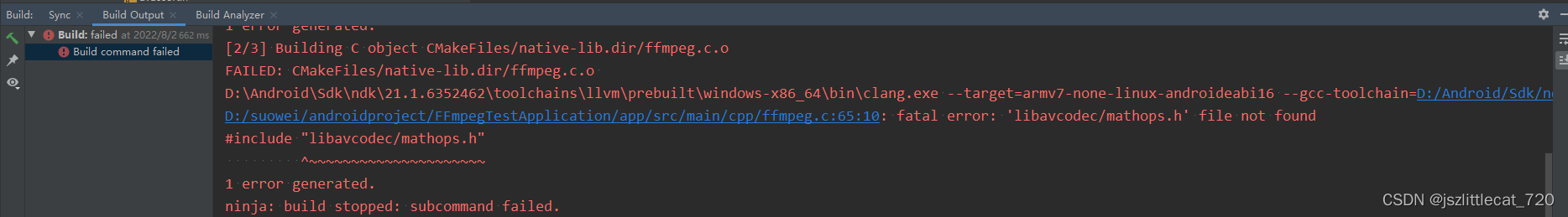

重新Run app至手机设备,出现如下图所示错误

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/ffmpeg.c:65:10: fatal error: 'libavcodec/mathops.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavcodec中的mathops.h拷贝至android项目jniLibs下的libavcodec中

重新Run app至手机设备,出现如下图所示错误

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/../jniLibs/include\libavformat/network.h:31:10: fatal error: 'url.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavformat中的url.h拷贝至android项目jniLibs下的libavformat中

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/../jniLibs/include\libavcodec/mathops.h:28:10: fatal error: 'libavutil/reverse.h' file not found

解决方式:将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavutil中的reverse.h拷贝至android项目jniLibs下的libavutil中

重新Run app至手机设备,出现如下图所示错误

D:/suowei/androidproject/FFmpegTestApplication/app/src/main/cpp/../jniLibs/include\libavcodec/mathops.h:40:13: fatal error: 'arm/mathops.h' file not found

解决方式:android项目jniLibs目录下的libavcodec文件夹下创建arm文件夹

将下载之后的ffmpeg-3.3.9.tar.gz解压之后的文件夹ffmpeg-3.3.9中libavcodec中的arm文件夹下的mathops.h拷贝至android项目jniLibs下的libavcodec中的arm文件夹下

重新Run app至手机设备,android项目运行成功。

4. Implement video compression in native-lib.cpp

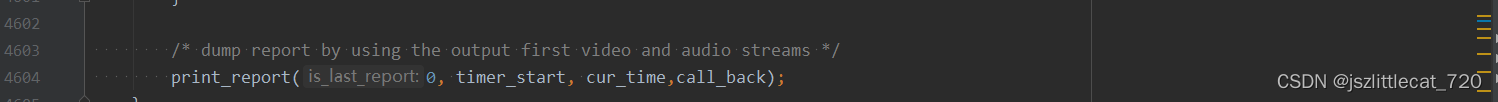

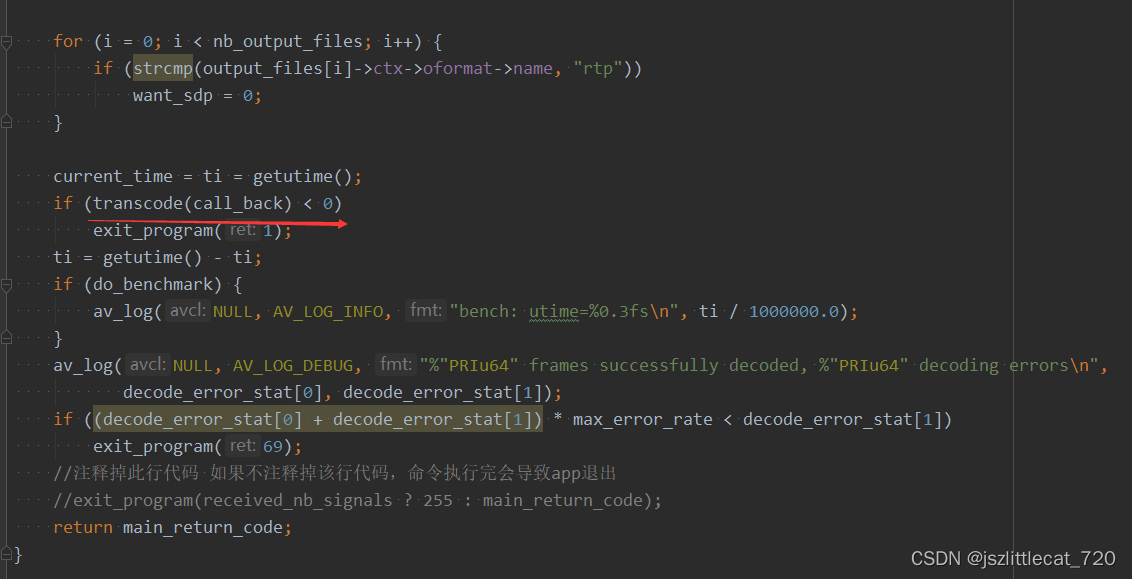

In ffmpeg.c, find the main function, rename it to run_ffmpeg_command, and comment out the following line:

```c
exit_program(received_nb_signals ? 255 : main_return_code);
```

```c
// Entry point for running an FFmpeg command
int run_ffmpeg_command(int argc, char **argv)
{
    int i, ret;
    int64_t ti;

    init_dynload();

    register_exit(ffmpeg_cleanup);

    setvbuf(stderr,NULL,_IONBF,0); /* win32 runtime needs this */

    av_log_set_flags(AV_LOG_SKIP_REPEATED);
    parse_loglevel(argc, argv, options);

    if(argc>1 && !strcmp(argv[1], "-d")){
        run_as_daemon=1;
        av_log_set_callback(log_callback_null);
        argc--;
        argv++;
    }

    avcodec_register_all();
#if CONFIG_AVDEVICE
    avdevice_register_all();
#endif
    avfilter_register_all();
    av_register_all();
    avformat_network_init();

    show_banner(argc, argv, options);

    /* parse options and open all input/output files */
    ret = ffmpeg_parse_options(argc, argv);
    if (ret < 0)
        exit_program(1);

    if (nb_output_files <= 0 && nb_input_files == 0) {
        show_usage();
        av_log(NULL, AV_LOG_WARNING, "Use -h to get full help or, even better, run 'man %s'\n", program_name);
        exit_program(1);
    }

    /* file converter / grab */
    if (nb_output_files <= 0) {
        av_log(NULL, AV_LOG_FATAL, "At least one output file must be specified\n");
        exit_program(1);
    }

//     if (nb_input_files == 0) {
//         av_log(NULL, AV_LOG_FATAL, "At least one input file must be specified\n");
//         exit_program(1);
//     }

    for (i = 0; i < nb_output_files; i++) {
        if (strcmp(output_files[i]->ctx->oformat->name, "rtp"))
            want_sdp = 0;
    }

    current_time = ti = getutime();
    if (transcode() < 0)
        exit_program(1);
    ti = getutime() - ti;
    if (do_benchmark) {
        av_log(NULL, AV_LOG_INFO, "bench: utime=%0.3fs\n", ti / 1000000.0);
    }
    av_log(NULL, AV_LOG_DEBUG, "%"PRIu64" frames successfully decoded, %"PRIu64" decoding errors\n",
           decode_error_stat[0], decode_error_stat[1]);
    if ((decode_error_stat[0] + decode_error_stat[1]) * max_error_rate < decode_error_stat[1])
        exit_program(69);

    // Commented out: if this line stays, the app exits as soon as the command finishes
    //exit_program(received_nb_signals ? 255 : main_return_code);

    // Caveat: the exit_program() calls above still terminate the whole process
    // on errors, and because ffmpeg_cleanup() only runs via exit_program(), the
    // global state (input/output file lists and counters) is not reset on
    // success; a second call in the same process may therefore misbehave.
    return main_return_code;
}
```

native-lib.cpp is as follows:

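```cpp
#include <jni.h>
#include <string>
#include <cstdlib>
#include <cstring>
#include <android/log.h>

#define TAG "JNI_TAG"
#define LOGE(...) __android_log_print(ANDROID_LOG_ERROR, TAG, __VA_ARGS__)

extern "C" {
#include "libavutil/avutil.h"
// Declaration of the renamed FFmpeg main: argc is the number of arguments,
// argv the array of argument strings
int run_ffmpeg_command(int argc, char **argv);
}

extern "C" JNIEXPORT jstring JNICALL
Java_com_suoer_ndk_ffmpegtestapplication_MainActivity_stringFromJNI(
        JNIEnv *env,
        jobject /* this */) {
    //std::string hello = "Hello from C++";
    return env->NewStringUTF(av_version_info());
}

extern "C"
JNIEXPORT void JNICALL
Java_com_suoer_ndk_ffmpegtestapplication_VideoCompress_compressVideo(JNIEnv *env, jobject thiz,
                                                                     jobjectArray compress_command,
                                                                     jobject callback) {
    // Video compression with FFmpeg.
    // The .so files under armeabi-v7a do the actual audio/video processing;
    // include holds their headers. cmdutils.c, ffmpeg.c etc. were not built into
    // those .so files because they are not audio/video processing code: they only
    // provide the command-line front end that is wrapped here.

    // 1. Get the number of arguments
    int argc = env->GetArrayLength(compress_command);

    // 2. Fill char **argv (one extra slot for the terminating NULL)
    char **argv = (char **) malloc(sizeof(char *) * (argc + 1));
    for (int i = 0; i < argc; ++i) {
        jstring j_param = (jstring) env->GetObjectArrayElement(compress_command, i);
        const char *param = env->GetStringUTFChars(j_param, NULL);
        // Keep a private copy: the JNI buffer must be released with
        // ReleaseStringUTFChars(), never with free()
        argv[i] = strdup(param);
        env->ReleaseStringUTFChars(j_param, param);
        env->DeleteLocalRef(j_param);
        LOGE("argument: %s", argv[i]);
    }
    argv[argc] = NULL;

    // 3. Run the command (blocks until FFmpeg finishes)
    run_ffmpeg_command(argc, argv);

    // 4. Free the copies and the array
    for (int i = 0; i < argc; ++i) {
        free(argv[i]);
    }
    free(argv);
}
```

Handling the compression callback: reporting compression progress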

Modify the following functions in ffmpeg.c:

print_report

transcode

run_ffmpeg_command
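The exact changes to these three functions are in the ffmpeg.c listing. As a hedged illustration of the general idea only (ProgressFn and reportProgress are hypothetical names, not the author's code): assuming, as in the FFmpeg 3.x source, that print_report() works with the output position in AV_TIME_BASE units (microseconds) and that the input's AVFormatContext duration uses the same units, the two values can be turned into the current/total pair that onCompress(int current, int total) expects. Converting to milliseconds keeps both within int range for any video shorter than about 24 days.

```cpp
#include <cstdint>
#include <functional>

// Hypothetical callback type mirroring onCompress(int current, int total).
using ProgressFn = std::function<void(int current, int total)>;

// outPosUs:   position of the newest output data, in microseconds
// durationUs: input duration, in the same units
// Reports both as milliseconds, clamping the position to [0, duration]
// so the UI never sees current > total.
void reportProgress(int64_t outPosUs, int64_t durationUs, const ProgressFn &cb) {
    if (durationUs <= 0 || !cb) {
        return;  // unknown duration or no listener: nothing meaningful to report
    }
    if (outPosUs < 0) outPosUs = 0;
    if (outPosUs > durationUs) outPosUs = durationUs;
    cb(static_cast<int>(outPosUs / 1000), static_cast<int>(durationUs / 1000));
}
```

On the Android side, the callback body would then forward the two ints to the Java CompressCallback through JNI.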

ffmpeg.c is as follows:

/*

* Copyright (c) 2000-2003 Fabrice Bellard

*

* This file is part of FFmpeg.

*

* FFmpeg is free software; you can redistribute it and/or

* modify it under the terms of the GNU Lesser General Public

* License as published by the Free Software Foundation; either

* version 2.1 of the License, or (at your option) any later version.

*

* FFmpeg is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

* Lesser General Public License for more details.

*

* You should have received a copy of the GNU Lesser General Public

* License along with FFmpeg; if not, write to the Free Software

* Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA

*/

/**

* @file

* multimedia converter based on the FFmpeg libraries

*/

#include "config.h"

#include <ctype.h>

#include <string.h>

#include <math.h>

#include <stdlib.h>

#include <errno.h>

#include <limits.h>

#include <stdatomic.h>

#include <stdint.h>

#if HAVE_IO_H

#include <io.h>

#endif

#if HAVE_UNISTD_H

#include <unistd.h>

#endif

#include "libavformat/avformat.h"

#include "libavdevice/avdevice.h"

#include "libswresample/swresample.h"

#include "libavutil/opt.h"

#include "libavutil/channel_layout.h"

#include "libavutil/parseutils.h"

#include "libavutil/samplefmt.h"

#include "libavutil/fifo.h"

#include "libavutil/hwcontext.h"

#include "libavutil/internal.h"

#include "libavutil/intreadwrite.h"

#include "libavutil/dict.h"

#include "libavutil/display.h"

#include "libavutil/mathematics.h"

#include "libavutil/pixdesc.h"

#include "libavutil/avstring.h"

#include "libavutil/libm.h"

#include "libavutil/imgutils.h"

#include "libavutil/timestamp.h"

#include "libavutil/bprint.h"

#include "libavutil/time.h"

#include "libavutil/threadmessage.h"

#include "libavcodec/mathops.h"

#include "libavformat/os_support.h"

# include "libavfilter/avfilter.h"

# include "libavfilter/buffersrc.h"

# include "libavfilter/buffersink.h"

#if HAVE_SYS_RESOURCE_H

#include <sys/time.h>

#include <sys/types.h>

#include <sys/resource.h>

#elif HAVE_GETPROCESSTIMES

#include <windows.h>

#endif

#if HAVE_GETPROCESSMEMORYINFO

#include <windows.h>

#include <psapi.h>

#endif

#if HAVE_SETCONSOLECTRLHANDLER

#include <windows.h>

#endif

#if HAVE_SYS_SELECT_H

#include <sys/select.h>

#endif

#if HAVE_TERMIOS_H

#include <fcntl.h>

#include <sys/ioctl.h>

#include <sys/time.h>

#include <termios.h>

#elif HAVE_KBHIT

#include <conio.h>

#endif

#if HAVE_PTHREADS

#include <pthread.h>

#endif

#include <time.h>

#include <android/log.h>

#include "ffmpeg.h"

#include "cmdutils.h"

#include "libavutil/avassert.h"

const char program_name[] = "ffmpeg";

const int program_birth_year = 2000;

static FILE *vstats_file;

const char *const forced_keyframes_const_names[] = {

"n",

"n_forced",

"prev_forced_n",

"prev_forced_t",

"t",

NULL

};

static void do_video_stats(OutputStream *ost, int frame_size);

static int64_t getutime(void);

static int64_t getmaxrss(void);

static int ifilter_has_all_input_formats(FilterGraph *fg);

static int run_as_daemon = 0;

static int nb_frames_dup = 0;

static unsigned dup_warning = 1000;

static int nb_frames_drop = 0;

static int64_t decode_error_stat[2];

static int want_sdp = 1;

static int current_time;

AVIOContext *progress_avio = NULL;

static uint8_t *subtitle_out;

InputStream **input_streams = NULL;

int nb_input_streams = 0;

InputFile **input_files = NULL;

int nb_input_files = 0;

OutputStream **output_streams = NULL;

int nb_output_streams = 0;

OutputFile **output_files = NULL;

int nb_output_files = 0;

FilterGraph **filtergraphs;

int nb_filtergraphs;

#if HAVE_TERMIOS_H

/* init terminal so that we can grab keys */

static struct termios oldtty;

static int restore_tty;

#endif

#if HAVE_PTHREADS

static void free_input_threads(void);

#endif

/* sub2video hack:

Convert subtitles to video with alpha to insert them in filter graphs.

This is a temporary solution until libavfilter gets real subtitles support.

*/

static int sub2video_get_blank_frame(InputStream *ist)

{

int ret;

AVFrame *frame = ist->sub2video.frame;

av_frame_unref(frame);

ist->sub2video.frame->width = ist->dec_ctx->width ? ist->dec_ctx->width : ist->sub2video.w;

ist->sub2video.frame->height = ist->dec_ctx->height ? ist->dec_ctx->height : ist->sub2video.h;

ist->sub2video.frame->format = AV_PIX_FMT_RGB32;

if ((ret = av_frame_get_buffer(frame, 32)) < 0)

return ret;

memset(frame->data[0], 0, frame->height * frame->linesize[0]);

return 0;

}

static void sub2video_copy_rect(uint8_t *dst, int dst_linesize, int w, int h,

AVSubtitleRect *r)

{

uint32_t *pal, *dst2;

uint8_t *src, *src2;

int x, y;

if (r->type != SUBTITLE_BITMAP) {

av_log(NULL, AV_LOG_WARNING, "sub2video: non-bitmap subtitle\n");

return;

}

if (r->x < 0 || r->x + r->w > w || r->y < 0 || r->y + r->h > h) {

av_log(NULL, AV_LOG_WARNING, "sub2video: rectangle (%d %d %d %d) overflowing %d %d\n",

r->x, r->y, r->w, r->h, w, h

);

return;

}

dst += r->y * dst_linesize + r->x * 4;

src = r->data[0];

pal = (uint32_t *)r->data[1];

for (y = 0; y < r->h; y++) {

dst2 = (uint32_t *)dst;

src2 = src;

for (x = 0; x < r->w; x++)

*(dst2++) = pal[*(src2++)];

dst += dst_linesize;

src += r->linesize[0];

}

}

static void sub2video_push_ref(InputStream *ist, int64_t pts)

{

AVFrame *frame = ist->sub2video.frame;

int i;

av_assert1(frame->data[0]);

ist->sub2video.last_pts = frame->pts = pts;

for (i = 0; i < ist->nb_filters; i++)

av_buffersrc_add_frame_flags(ist->filters[i]->filter, frame,

AV_BUFFERSRC_FLAG_KEEP_REF |

AV_BUFFERSRC_FLAG_PUSH);

}

void sub2video_update(InputStream *ist, AVSubtitle *sub)

{

AVFrame *frame = ist->sub2video.frame;

int8_t *dst;

int dst_linesize;

int num_rects, i;

int64_t pts, end_pts;

if (!frame)

return;

if (sub) {

pts = av_rescale_q(sub->pts + sub->start_display_time * 1000LL,

AV_TIME_BASE_Q, ist->st->time_base);

end_pts = av_rescale_q(sub->pts + sub->end_display_time * 1000LL,

AV_TIME_BASE_Q, ist->st->time_base);

num_rects = sub->num_rects;

} else {

pts = ist->sub2video.end_pts;

end_pts = INT64_MAX;

num_rects = 0;

}

if (sub2video_get_blank_frame(ist) < 0) {

av_log(ist->dec_ctx, AV_LOG_ERROR,

"Impossible to get a blank canvas.\n");

return;

}

dst = frame->data [0];

dst_linesize = frame->linesize[0];

for (i = 0; i < num_rects; i++)

sub2video_copy_rect(dst, dst_linesize, frame->width, frame->height, sub->rects[i]);

sub2video_push_ref(ist, pts);

ist->sub2video.end_pts = end_pts;

}

static void sub2video_heartbeat(InputStream *ist, int64_t pts)

{

InputFile *infile = input_files[ist->file_index];

int i, j, nb_reqs;

int64_t pts2;

/* When a frame is read from a file, examine all sub2video streams in

the same file and send the sub2video frame again. Otherwise, decoded

video frames could be accumulating in the filter graph while a filter

(possibly overlay) is desperately waiting for a subtitle frame. */

for (i = 0; i < infile->nb_streams; i++) {

InputStream *ist2 = input_streams[infile->ist_index + i];

if (!ist2->sub2video.frame)

continue;

/* subtitles seem to be usually muxed ahead of other streams;

if not, subtracting a larger time here is necessary */

pts2 = av_rescale_q(pts, ist->st->time_base, ist2->st->time_base) - 1;

/* do not send the heartbeat frame if the subtitle is already ahead */

if (pts2 <= ist2->sub2video.last_pts)

continue;

if (pts2 >= ist2->sub2video.end_pts || !ist2->sub2video.frame->data[0])

sub2video_update(ist2, NULL);

for (j = 0, nb_reqs = 0; j < ist2->nb_filters; j++)

nb_reqs += av_buffersrc_get_nb_failed_requests(ist2->filters[j]->filter);

if (nb_reqs)

sub2video_push_ref(ist2, pts2);

}

}

static void sub2video_flush(InputStream *ist)

{

int i;

if (ist->sub2video.end_pts < INT64_MAX)

sub2video_update(ist, NULL);

for (i = 0; i < ist->nb_filters; i++)

av_buffersrc_add_frame(ist->filters[i]->filter, NULL);

}

/* end of sub2video hack */

static void term_exit_sigsafe(void)

{

#if HAVE_TERMIOS_H

if(restore_tty)

tcsetattr (0, TCSANOW, &oldtty);

#endif

}

void term_exit(void)

{

av_log(NULL, AV_LOG_QUIET, "%s", "");

term_exit_sigsafe();

}

static volatile int received_sigterm = 0;

static volatile int received_nb_signals = 0;

static atomic_int transcode_init_done = ATOMIC_VAR_INIT(0);

static volatile int ffmpeg_exited = 0;

static int main_return_code = 0;

static void

sigterm_handler(int sig)

{

received_sigterm = sig;

received_nb_signals++;

term_exit_sigsafe();

if(received_nb_signals > 3) {

write(2/*STDERR_FILENO*/, "Received > 3 system signals, hard exiting\n",

strlen("Received > 3 system signals, hard exiting\n"));

exit(123);

}

}

#if HAVE_SETCONSOLECTRLHANDLER

static BOOL WINAPI CtrlHandler(DWORD fdwCtrlType)

{

av_log(NULL, AV_LOG_DEBUG, "\nReceived windows signal %ld\n", fdwCtrlType);

switch (fdwCtrlType)

{

case CTRL_C_EVENT:

case CTRL_BREAK_EVENT:

sigterm_handler(SIGINT);

return TRUE;

case CTRL_CLOSE_EVENT:

case CTRL_LOGOFF_EVENT:

case CTRL_SHUTDOWN_EVENT:

sigterm_handler(SIGTERM);

/* Basically, with these 3 events, when we return from this method the

process is hard terminated, so stall as long as we need to

to try and let the main thread(s) clean up and gracefully terminate

(we have at most 5 seconds, but should be done far before that). */

while (!ffmpeg_exited) {

Sleep(0);

}

return TRUE;

default:

av_log(NULL, AV_LOG_ERROR, "Received unknown windows signal %ld\n", fdwCtrlType);

return FALSE;

}

}

#endif

void term_init(void)

{

#if HAVE_TERMIOS_H

if (!run_as_daemon && stdin_interaction) {

struct termios tty;

if (tcgetattr (0, &tty) == 0) {

oldtty = tty;

restore_tty = 1;

tty.c_iflag &= ~(IGNBRK|BRKINT|PARMRK|ISTRIP

|INLCR|IGNCR|ICRNL|IXON);

tty.c_oflag |= OPOST;

tty.c_lflag &= ~(ECHO|ECHONL|ICANON|IEXTEN);

tty.c_cflag &= ~(CSIZE|PARENB);

tty.c_cflag |= CS8;

tty.c_cc[VMIN] = 1;

tty.c_cc[VTIME] = 0;

tcsetattr (0, TCSANOW, &tty);

}

signal(SIGQUIT, sigterm_handler); /* Quit (POSIX). */

}

#endif

signal(SIGINT , sigterm_handler); /* Interrupt (ANSI). */

signal(SIGTERM, sigterm_handler); /* Termination (ANSI). */

#ifdef SIGXCPU

signal(SIGXCPU, sigterm_handler);

#endif

#if HAVE_SETCONSOLECTRLHANDLER

SetConsoleCtrlHandler((PHANDLER_ROUTINE) CtrlHandler, TRUE);

#endif

}

/* read a key without blocking */

static int read_key(void)

{

unsigned char ch;

#if HAVE_TERMIOS_H

int n = 1;

struct timeval tv;

fd_set rfds;

FD_ZERO(&rfds);

FD_SET(0, &rfds);

tv.tv_sec = 0;

tv.tv_usec = 0;

n = select(1, &rfds, NULL, NULL, &tv);

if (n > 0) {

n = read(0, &ch, 1);

if (n == 1)

return ch;

return n;

}

#elif HAVE_KBHIT

# if HAVE_PEEKNAMEDPIPE

static int is_pipe;

static HANDLE input_handle;

DWORD dw, nchars;

if(!input_handle){

input_handle = GetStdHandle(STD_INPUT_HANDLE);

is_pipe = !GetConsoleMode(input_handle, &dw);

}

if (is_pipe) {

/* When running under a GUI, you will end here. */

if (!PeekNamedPipe(input_handle, NULL, 0, NULL, &nchars, NULL)) {

// input pipe may have been closed by the program that ran ffmpeg

return -1;

}

//Read it

if(nchars != 0) {

read(0, &ch, 1);

return ch;

}else{

return -1;

}

}

# endif

if(kbhit())

return(getch());

#endif

return -1;

}

static int decode_interrupt_cb(void *ctx)

{

return received_nb_signals > atomic_load(&transcode_init_done);

}

const AVIOInterruptCB int_cb = { decode_interrupt_cb, NULL };

static void ffmpeg_cleanup(int ret)

{

int i, j;

if (do_benchmark) {

int maxrss = getmaxrss() / 1024;

av_log(NULL, AV_LOG_INFO, "bench: maxrss=%ikB\n", maxrss);

}

for (i = 0; i < nb_filtergraphs; i++) {

FilterGraph *fg = filtergraphs[i];

avfilter_graph_free(&fg->graph);

for (j = 0; j < fg->nb_inputs; j++) {

while (av_fifo_size(fg->inputs[j]->frame_queue)) {

AVFrame *frame;

av_fifo_generic_read(fg->inputs[j]->frame_queue, &frame,

sizeof(frame), NULL);

av_frame_free(&frame);

}

av_fifo_free(fg->inputs[j]->frame_queue);

if (fg->inputs[j]->ist->sub2video.sub_queue) {

while (av_fifo_size(fg->inputs[j]->ist->sub2video.sub_queue)) {

AVSubtitle sub;

av_fifo_generic_read(fg->inputs[j]->ist->sub2video.sub_queue,

&sub, sizeof(sub), NULL);

avsubtitle_free(&sub);

}

av_fifo_free(fg->inputs[j]->ist->sub2video.sub_queue);

}

av_buffer_unref(&fg->inputs[j]->hw_frames_ctx);

av_freep(&fg->inputs[j]->name);

av_freep(&fg->inputs[j]);

}

av_freep(&fg->inputs);

for (j = 0; j < fg->nb_outputs; j++) {

av_freep(&fg->outputs[j]->name);

av_freep(&fg->outputs[j]->formats);

av_freep(&fg->outputs[j]->channel_layouts);

av_freep(&fg->outputs[j]->sample_rates);

av_freep(&fg->outputs[j]);

}

av_freep(&fg->outputs);

av_freep(&fg->graph_desc);

av_freep(&filtergraphs[i]);

}

av_freep(&filtergraphs);

av_freep(&subtitle_out);

/* close files */

for (i = 0; i < nb_output_files; i++) {

OutputFile *of = output_files[i];

AVFormatContext *s;

if (!of)

continue;

s = of->ctx;

if (s && s->oformat && !(s->oformat->flags & AVFMT_NOFILE))

avio_closep(&s->pb);

avformat_free_context(s);

av_dict_free(&of->opts);

av_freep(&output_files[i]);

}

for (i = 0; i < nb_output_streams; i++) {

OutputStream *ost = output_streams[i];

if (!ost)

continue;

for (j = 0; j < ost->nb_bitstream_filters; j++)

av_bsf_free(&ost->bsf_ctx[j]);

av_freep(&ost->bsf_ctx);

av_freep(&ost->bsf_extradata_updated);

av_frame_free(&ost->filtered_frame);

av_frame_free(&ost->last_frame);

av_dict_free(&ost->encoder_opts);

av_parser_close(ost->parser);

avcodec_free_context(&ost->parser_avctx);

av_freep(&ost->forced_keyframes);

av_expr_free(ost->forced_keyframes_pexpr);

av_freep(&ost->avfilter);

av_freep(&ost->logfile_prefix);

av_freep(&ost->audio_channels_map);

ost->audio_channels_mapped = 0;

av_dict_free(&ost->sws_dict);

avcodec_free_context(&ost->enc_ctx);

avcodec_parameters_free(&ost->ref_par);

if (ost->muxing_queue) {

while (av_fifo_size(ost->muxing_queue)) {

AVPacket pkt;

av_fifo_generic_read(ost->muxing_queue, &pkt, sizeof(pkt), NULL);

av_packet_unref(&pkt);

}

av_fifo_freep(&ost->muxing_queue);

}

av_freep(&output_streams[i]);

}

#if HAVE_PTHREADS

free_input_threads();

#endif

for (i = 0; i < nb_input_files; i++) {

avformat_close_input(&input_files[i]->ctx);

av_freep(&input_files[i]);

}

for (i = 0; i < nb_input_streams; i++) {

InputStream *ist = input_streams[i];

av_frame_free(&ist->decoded_frame);

av_frame_free(&ist->filter_frame);

av_dict_free(&ist->decoder_opts);

avsubtitle_free(&ist->prev_sub.subtitle);

av_frame_free(&ist->sub2video.frame);

av_freep(&ist->filters);

av_freep(&ist->hwaccel_device);

av_freep(&ist->dts_buffer);

avcodec_free_context(&ist->dec_ctx);

av_freep(&input_streams[i]);

}

if (vstats_file) {

if (fclose(vstats_file))

av_log(NULL, AV_LOG_ERROR,

"Error closing vstats file, loss of information possible: %s\n",

av_err2str(AVERROR(errno)));

}

av_freep(&vstats_filename);

av_freep(&input_streams);

av_freep(&input_files);

av_freep(&output_streams);

av_freep(&output_files);

uninit_opts();

avformat_network_deinit();

if (received_sigterm) {

av_log(NULL, AV_LOG_INFO, "Exiting normally, received signal %d.\n",

(int) received_sigterm);

} else if (ret && atomic_load(&transcode_init_done)) {

av_log(NULL, AV_LOG_INFO, "Conversion failed!\n");

}

term_exit();

ffmpeg_exited = 1;

}

void remove_avoptions(AVDictionary **a, AVDictionary *b)

{

AVDictionaryEntry *t = NULL;

while ((t = av_dict_get(b, "", t, AV_DICT_IGNORE_SUFFIX))) {

av_dict_set(a, t->key, NULL, AV_DICT_MATCH_CASE);

}

}

void assert_avoptions(AVDictionary *m)

{

AVDictionaryEntry *t;

if ((t = av_dict_get(m, "", NULL, AV_DICT_IGNORE_SUFFIX))) {

av_log(NULL, AV_LOG_FATAL, "Option %s not found.\n", t->key);

exit_program(1);

}

}

static void abort_codec_experimental(AVCodec *c, int encoder)

{

exit_program(1);

}

static void update_benchmark(const char *fmt, ...)

{

if (do_benchmark_all) {

int64_t t = getutime();

va_list va;

char buf[1024];

if (fmt) {

va_start(va, fmt);

vsnprintf(buf, sizeof(buf), fmt, va);

va_end(va);

av_log(NULL, AV_LOG_INFO, "bench: %8"PRIu64" %s \n", t - current_time, buf);

}

current_time = t;

}

}

static void close_all_output_streams(OutputStream *ost, OSTFinished this_stream, OSTFinished others)

{

int i;

for (i = 0; i < nb_output_streams; i++) {

OutputStream *ost2 = output_streams[i];

ost2->finished |= ost == ost2 ? this_stream : others;

}

}

static void write_packet(OutputFile *of, AVPacket *pkt, OutputStream *ost, int unqueue)
{
    AVFormatContext *s = of->ctx;
    AVStream *st = ost->st;
    int ret;

    /*
     * Audio encoders may split the packets -- #frames in != #packets out.
     * But there is no reordering, so we can limit the number of output packets
     * by simply dropping them here.
     * Counting encoded video frames needs to be done separately because of
     * reordering, see do_video_out().
     * Do not count the packet when unqueued because it has been counted when queued.
     */
    if (!(st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO && ost->encoding_needed) && !unqueue) {
        if (ost->frame_number >= ost->max_frames) {
            av_packet_unref(pkt);
            return;
        }
        ost->frame_number++;
    }

    if (!of->header_written) {
        AVPacket tmp_pkt = {0};
        /* the muxer is not initialized yet, buffer the packet */
        if (!av_fifo_space(ost->muxing_queue)) {
            int new_size = FFMIN(2 * av_fifo_size(ost->muxing_queue),
                                 ost->max_muxing_queue_size);
            if (new_size <= av_fifo_size(ost->muxing_queue)) {
                av_log(NULL, AV_LOG_ERROR,
                       "Too many packets buffered for output stream %d:%d.\n",
                       ost->file_index, ost->st->index);
                exit_program(1);
            }
            ret = av_fifo_realloc2(ost->muxing_queue, new_size);
            if (ret < 0)
                exit_program(1);
        }
        ret = av_packet_ref(&tmp_pkt, pkt);
        if (ret < 0)
            exit_program(1);
        av_fifo_generic_write(ost->muxing_queue, &tmp_pkt, sizeof(tmp_pkt), NULL);
        av_packet_unref(pkt);
        return;
    }

    if ((st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO && video_sync_method == VSYNC_DROP) ||
        (st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO && audio_sync_method < 0))
        pkt->pts = pkt->dts = AV_NOPTS_VALUE;

    if (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
        int i;
        uint8_t *sd = av_packet_get_side_data(pkt, AV_PKT_DATA_QUALITY_STATS,
                                              NULL);
        ost->quality = sd ? AV_RL32(sd) : -1;
        ost->pict_type = sd ? sd[4] : AV_PICTURE_TYPE_NONE;

        for (i = 0; i<FF_ARRAY_ELEMS(ost->error); i++) {
            if (sd && i < sd[5])
                ost->error[i] = AV_RL64(sd + 8 + 8*i);
            else
                ost->error[i] = -1;
        }

        if (ost->frame_rate.num && ost->is_cfr) {
            if (pkt->duration > 0)
                av_log(NULL, AV_LOG_WARNING, "Overriding packet duration by frame rate, this should not happen\n");
            pkt->duration = av_rescale_q(1, av_inv_q(ost->frame_rate),
                                         ost->mux_timebase);
        }
    }

    av_packet_rescale_ts(pkt, ost->mux_timebase, ost->st->time_base);

    if (!(s->oformat->flags & AVFMT_NOTIMESTAMPS)) {
        if (pkt->dts != AV_NOPTS_VALUE &&
            pkt->pts != AV_NOPTS_VALUE &&
            pkt->dts > pkt->pts) {
            av_log(s, AV_LOG_WARNING, "Invalid DTS: %"PRId64" PTS: %"PRId64" in output stream %d:%d, replacing by guess\n",
                   pkt->dts, pkt->pts,
                   ost->file_index, ost->st->index);
            pkt->pts =
            pkt->dts = pkt->pts + pkt->dts + ost->last_mux_dts + 1
                     - FFMIN3(pkt->pts, pkt->dts, ost->last_mux_dts + 1)
                     - FFMAX3(pkt->pts, pkt->dts, ost->last_mux_dts + 1);
        }
        if ((st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO || st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) &&
            pkt->dts != AV_NOPTS_VALUE &&
            !(st->codecpar->codec_id == AV_CODEC_ID_VP9 && ost->stream_copy) &&
            ost->last_mux_dts != AV_NOPTS_VALUE) {
            int64_t max = ost->last_mux_dts + !(s->oformat->flags & AVFMT_TS_NONSTRICT);
            if (pkt->dts < max) {
                int loglevel = max - pkt->dts > 2 || st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO ? AV_LOG_WARNING : AV_LOG_DEBUG;
                av_log(s, loglevel, "Non-monotonous DTS in output stream "
                       "%d:%d; previous: %"PRId64", current: %"PRId64"; ",
                       ost->file_index, ost->st->index, ost->last_mux_dts, pkt->dts);
                if (exit_on_error) {
                    av_log(NULL, AV_LOG_FATAL, "aborting.\n");
                    exit_program(1);
                }
                av_log(s, loglevel, "changing to %"PRId64". This may result "
                       "in incorrect timestamps in the output file.\n",
                       max);
                if (pkt->pts >= pkt->dts)
                    pkt->pts = FFMAX(pkt->pts, max);
                pkt->dts = max;
            }
        }
    }
    ost->last_mux_dts = pkt->dts;

    ost->data_size += pkt->size;
    ost->packets_written++;

    pkt->stream_index = ost->index;

    if (debug_ts) {
        av_log(NULL, AV_LOG_INFO, "muxer <- type:%s "
                "pkt_pts:%s pkt_pts_time:%s pkt_dts:%s pkt_dts_time:%s size:%d\n",
                av_get_media_type_string(ost->enc_ctx->codec_type),
                av_ts2str(pkt->pts), av_ts2timestr(pkt->pts, &ost->st->time_base),
                av_ts2str(pkt->dts), av_ts2timestr(pkt->dts, &ost->st->time_base),
                pkt->size
              );
    }

    ret = av_interleaved_write_frame(s, pkt);
    if (ret < 0) {
        print_error("av_interleaved_write_frame()", ret);
        main_return_code = 1;
        close_all_output_streams(ost, MUXER_FINISHED | ENCODER_FINISHED, ENCODER_FINISHED);
    }
    av_packet_unref(pkt);
}
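When write_packet() sees a DTS larger than the PTS, it replaces both with `pts + dts + (last_mux_dts + 1) - FFMIN3(...) - FFMAX3(...)`. Subtracting the smallest and the largest of three values from their sum leaves the middle one, so the "guess" is simply the median of the PTS, the DTS and the next legal DTS. A standalone sketch of that identity, using hypothetical helpers `min3`, `max3` and `median3` in place of FFmpeg's macros:

```c
#include <stdint.h>

/* Hypothetical stand-ins for FFmpeg's FFMIN3 / FFMAX3 macros. */
static int64_t min3(int64_t a, int64_t b, int64_t c)
{
    int64_t m = a < b ? a : b;
    return m < c ? m : c;
}

static int64_t max3(int64_t a, int64_t b, int64_t c)
{
    int64_t m = a > b ? a : b;
    return m > c ? m : c;
}

/* Same expression as in write_packet(): the sum of the three values minus
 * the smallest and the largest leaves the median. next_dts plays the role
 * of ost->last_mux_dts + 1. */
static int64_t median3(int64_t pts, int64_t dts, int64_t next_dts)
{
    return pts + dts + next_dts
         - min3(pts, dts, next_dts)
         - max3(pts, dts, next_dts);
}
```

For example, with PTS 10, DTS 12 and a next legal DTS of 11, both timestamps become 11.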

static void close_output_stream(OutputStream *ost)
{
    OutputFile *of = output_files[ost->file_index];

    ost->finished |= ENCODER_FINISHED;
    if (of->shortest) {
        int64_t end = av_rescale_q(ost->sync_opts - ost->first_pts, ost->enc_ctx->time_base, AV_TIME_BASE_Q);
        of->recording_time = FFMIN(of->recording_time, end);
    }
}

static void output_packet(OutputFile *of, AVPacket *pkt, OutputStream *ost)
{
    int ret = 0;

    /* apply the output bitstream filters, if any */
    if (ost->nb_bitstream_filters) {
        int idx;

        ret = av_bsf_send_packet(ost->bsf_ctx[0], pkt);
        if (ret < 0)
            goto finish;

        idx = 1;
        while (idx) {
            /* get a packet from the previous filter up the chain */
            ret = av_bsf_receive_packet(ost->bsf_ctx[idx - 1], pkt);
            if (ret == AVERROR(EAGAIN)) {
                ret = 0;
                idx--;
                continue;
            } else if (ret < 0)
                goto finish;
            /* HACK! - aac_adtstoasc updates extradata after filtering the first frame when
             * the api states this shouldn't happen after init(). Propagate it here to the
             * muxer and to the next filters in the chain to workaround this.
             * TODO/FIXME - Make aac_adtstoasc use new packet side data instead of changing
             * par_out->extradata and adapt muxers accordingly to get rid of this. */
            if (!(ost->bsf_extradata_updated[idx - 1] & 1)) {
                ret = avcodec_parameters_copy(ost->st->codecpar, ost->bsf_ctx[idx - 1]->par_out);
                if (ret < 0)
                    goto finish;
                ost->bsf_extradata_updated[idx - 1] |= 1;
            }

            /* send it to the next filter down the chain or to the muxer */
            if (idx < ost->nb_bitstream_filters) {
                /* HACK/FIXME! - See above */
                if (!(ost->bsf_extradata_updated[idx] & 2)) {
                    ret = avcodec_parameters_copy(ost->bsf_ctx[idx]->par_out, ost->bsf_ctx[idx - 1]->par_out);
                    if (ret < 0)
                        goto finish;
                    ost->bsf_extradata_updated[idx] |= 2;
                }
                ret = av_bsf_send_packet(ost->bsf_ctx[idx], pkt);
                if (ret < 0)
                    goto finish;
                idx++;
            } else
                write_packet(of, pkt, ost, 0);
        }
    } else
        write_packet(of, pkt, ost, 0);

finish:
    if (ret < 0 && ret != AVERROR_EOF) {
        av_log(NULL, AV_LOG_ERROR, "Error applying bitstream filters to an output "
               "packet for stream #%d:%d.\n", ost->file_index, ost->index);
        if(exit_on_error)
            exit_program(1);
    }
}

static int check_recording_time(OutputStream *ost)
{
    OutputFile *of = output_files[ost->file_index];

    if (of->recording_time != INT64_MAX &&
        av_compare_ts(ost->sync_opts - ost->first_pts, ost->enc_ctx->time_base, of->recording_time,
                      AV_TIME_BASE_Q) >= 0) {
        close_output_stream(ost);
        return 0;
    }
    return 1;
}
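check_recording_time() stops a stream once the elapsed output time, counted in the encoder time base, reaches the `-t` limit stored in microseconds (AV_TIME_BASE_Q). av_compare_ts() compares two timestamps that live in different time bases. A simplified sketch of the idea, using a hypothetical `compare_ts` that cross-multiplies instead of using FFmpeg's overflow-safe arithmetic and assumes positive denominators:

```c
#include <stdint.h>

/* Hypothetical simplified av_compare_ts(): compares a * a_num / a_den with
 * b * b_num / b_den by cross-multiplying. Unlike the real function it does
 * not guard against overflow. Returns -1, 0 or 1. */
static int compare_ts(int64_t a, int64_t a_num, int64_t a_den,
                      int64_t b, int64_t b_num, int64_t b_den)
{
    int64_t lhs = a * a_num * b_den;
    int64_t rhs = b * b_num * a_den;
    return (lhs > rhs) - (lhs < rhs);
}
```

With an encoder time base of 1/25 and `-t 1` (1000000 in 1/1000000 units), frame 25 compares equal, so the stream is closed there.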

static void do_audio_out(OutputFile *of, OutputStream *ost,
                         AVFrame *frame)
{
    AVCodecContext *enc = ost->enc_ctx;
    AVPacket pkt;
    int ret;

    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;

    if (!check_recording_time(ost))
        return;

    if (frame->pts == AV_NOPTS_VALUE || audio_sync_method < 0)
        frame->pts = ost->sync_opts;
    ost->sync_opts = frame->pts + frame->nb_samples;
    ost->samples_encoded += frame->nb_samples;
    ost->frames_encoded++;

    av_assert0(pkt.size || !pkt.data);
    update_benchmark(NULL);
    if (debug_ts) {
        av_log(NULL, AV_LOG_INFO, "encoder <- type:audio "
               "frame_pts:%s frame_pts_time:%s time_base:%d/%d\n",
               av_ts2str(frame->pts), av_ts2timestr(frame->pts, &enc->time_base),
               enc->time_base.num, enc->time_base.den);
    }

    ret = avcodec_send_frame(enc, frame);
    if (ret < 0)
        goto error;

    while (1) {
        ret = avcodec_receive_packet(enc, &pkt);
        if (ret == AVERROR(EAGAIN))
            break;
        if (ret < 0)
            goto error;

        update_benchmark("encode_audio %d.%d", ost->file_index, ost->index);

        av_packet_rescale_ts(&pkt, enc->time_base, ost->mux_timebase);

        if (debug_ts) {
            av_log(NULL, AV_LOG_INFO, "encoder -> type:audio "
                   "pkt_pts:%s pkt_pts_time:%s pkt_dts:%s pkt_dts_time:%s\n",
                   av_ts2str(pkt.pts), av_ts2timestr(pkt.pts, &enc->time_base),
                   av_ts2str(pkt.dts), av_ts2timestr(pkt.dts, &enc->time_base));
        }

        output_packet(of, &pkt, ost);
    }

    return;
error:
    av_log(NULL, AV_LOG_FATAL, "Audio encoding failed\n");
    exit_program(1);
}

static void do_subtitle_out(OutputFile *of,
                            OutputStream *ost,
                            AVSubtitle *sub)
{
    int subtitle_out_max_size = 1024 * 1024;
    int subtitle_out_size, nb, i;
    AVCodecContext *enc;
    AVPacket pkt;
    int64_t pts;

    if (sub->pts == AV_NOPTS_VALUE) {
        av_log(NULL, AV_LOG_ERROR, "Subtitle packets must have a pts\n");
        if (exit_on_error)
            exit_program(1);
        return;
    }

    enc = ost->enc_ctx;

    if (!subtitle_out) {
        subtitle_out = av_malloc(subtitle_out_max_size);
        if (!subtitle_out) {
            av_log(NULL, AV_LOG_FATAL, "Failed to allocate subtitle_out\n");
            exit_program(1);
        }
    }

    /* Note: DVB subtitle need one packet to draw them and one other
       packet to clear them */
    /* XXX: signal it in the codec context ? */
    if (enc->codec_id == AV_CODEC_ID_DVB_SUBTITLE)
        nb = 2;
    else
        nb = 1;

    /* shift timestamp to honor -ss and make check_recording_time() work with -t */
    pts = sub->pts;
    if (output_files[ost->file_index]->start_time != AV_NOPTS_VALUE)
        pts -= output_files[ost->file_index]->start_time;
    for (i = 0; i < nb; i++) {
        unsigned save_num_rects = sub->num_rects;

        ost->sync_opts = av_rescale_q(pts, AV_TIME_BASE_Q, enc->time_base);
        if (!check_recording_time(ost))
            return;

        sub->pts = pts;
        // start_display_time is required to be 0
        sub->pts               += av_rescale_q(sub->start_display_time, (AVRational){ 1, 1000 }, AV_TIME_BASE_Q);
        sub->end_display_time  -= sub->start_display_time;
        sub->start_display_time = 0;
        if (i == 1)
            sub->num_rects = 0;

        ost->frames_encoded++;

        subtitle_out_size = avcodec_encode_subtitle(enc, subtitle_out,
                                                    subtitle_out_max_size, sub);
        if (i == 1)
            sub->num_rects = save_num_rects;
        if (subtitle_out_size < 0) {
            av_log(NULL, AV_LOG_FATAL, "Subtitle encoding failed\n");
            exit_program(1);
        }

        av_init_packet(&pkt);
        pkt.data = subtitle_out;
        pkt.size = subtitle_out_size;
        pkt.pts  = av_rescale_q(sub->pts, AV_TIME_BASE_Q, ost->mux_timebase);
        pkt.duration = av_rescale_q(sub->end_display_time, (AVRational){ 1, 1000 }, ost->mux_timebase);
        if (enc->codec_id == AV_CODEC_ID_DVB_SUBTITLE) {
            /* XXX: the pts correction is handled here. Maybe handling
               it in the codec would be better */
            if (i == 0)
                pkt.pts += av_rescale_q(sub->start_display_time, (AVRational){ 1, 1000 }, ost->mux_timebase);
            else
                pkt.pts += av_rescale_q(sub->end_display_time, (AVRational){ 1, 1000 }, ost->mux_timebase);
        }
        pkt.dts = pkt.pts;
        output_packet(of, &pkt, ost);
    }
}
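do_subtitle_out() converts subtitle display times from milliseconds (`(AVRational){ 1, 1000 }`) into AV_TIME_BASE_Q and the muxer time base with av_rescale_q(). That call computes `a * bq / cq` rounded to the nearest integer, with halfway cases rounded away from zero. A minimal sketch of the arithmetic, using a hypothetical `rescale_q` that, unlike the real function, does no overflow handling:

```c
#include <stdint.h>

/* Hypothetical simplified av_rescale_q(): converts a from the time base
 * bq_num/bq_den to cq_num/cq_den, rounding to nearest with halfway cases
 * away from zero. Assumes positive time-base components and no overflow. */
static int64_t rescale_q(int64_t a, int64_t bq_num, int64_t bq_den,
                         int64_t cq_num, int64_t cq_den)
{
    int64_t b = bq_num * cq_den;   /* numerator of the conversion factor   */
    int64_t c = cq_num * bq_den;   /* denominator of the conversion factor */

    if (a < 0)
        return -((-a * b + c / 2) / c);
    return (a * b + c / 2) / c;
}
```

For instance, a 1500 ms display time becomes 1500000 in microseconds, and one tick of a 1/25 time base becomes 3600 ticks of a 1/90000 time base.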

static void do_video_out(OutputFile *of,
                         OutputStream *ost,
                         AVFrame *next_picture,
                         double sync_ipts)
{
    int ret, format_video_sync;
    AVPacket pkt;
    AVCodecContext *enc = ost->enc_ctx;
    AVCodecParameters *mux_par = ost->st->codecpar;
    AVRational frame_rate;
    int nb_frames, nb0_frames, i;
    double delta, delta0;
    double duration = 0;
    int frame_size = 0;
    InputStream *ist = NULL;
    AVFilterContext *filter = ost->filter->filter;

    if (ost->source_index >= 0)
        ist = input_streams[ost->source_index];

    frame_rate = av_buffersink_get_frame_rate(filter);
    if (frame_rate.num > 0 && frame_rate.den > 0)
        duration = 1/(av_q2d(frame_rate) * av_q2d(enc->time_base));

    if(ist && ist->st->start_time != AV_NOPTS_VALUE && ist->st->first_dts != AV_NOPTS_VALUE && ost->frame_rate.num)
        duration = FFMIN(duration, 1/(av_q2d(ost->frame_rate) * av_q2d(enc->time_base)));

    if (!ost->filters_script &&
        !ost->filters &&
        next_picture &&
        ist &&
        lrintf(av_frame_get_pkt_duration(next_picture) * av_q2d(ist->st->time_base) / av_q2d(enc->time_base)) > 0) {
        duration = lrintf(av_frame_get_pkt_duration(next_picture) * av_q2d(ist->st->time_base) / av_q2d(enc->time_base));
    }

    if (!next_picture) {
        //end, flushing
        nb0_frames = nb_frames = mid_pred(ost->last_nb0_frames[0],
                                          ost->last_nb0_frames[1],
                                          ost->last_nb0_frames[2]);
    } else {
        delta0 = sync_ipts - ost->sync_opts; // delta0 is the "drift" between the input frame (next_picture) and where it would fall in the output.
        delta  = delta0 + duration;

        /* by default, we output a single frame */
        nb0_frames = 0; // tracks the number of times the PREVIOUS frame should be duplicated, mostly for variable framerate (VFR)
        nb_frames = 1;

        format_video_sync = video_sync_method;
        if (format_video_sync == VSYNC_AUTO) {
            if(!strcmp(of->ctx->oformat->name, "avi")) {
                format_video_sync = VSYNC_VFR;
            } else
                format_video_sync = (of->ctx->oformat->flags & AVFMT_VARIABLE_FPS) ? ((of->ctx->oformat->flags & AVFMT_NOTIMESTAMPS) ? VSYNC_PASSTHROUGH : VSYNC_VFR) : VSYNC_CFR;
            if (   ist
                && format_video_sync == VSYNC_CFR
                && input_files[ist->file_index]->ctx->nb_streams == 1
                && input_files[ist->file_index]->input_ts_offset == 0) {
                format_video_sync = VSYNC_VSCFR;
            }
            if (format_video_sync == VSYNC_CFR && copy_ts) {
                format_video_sync = VSYNC_VSCFR;
            }
        }
        ost->is_cfr = (format_video_sync == VSYNC_CFR || format_video_sync == VSYNC_VSCFR);

        if (delta0 < 0 &&
            delta > 0 &&
            format_video_sync != VSYNC_PASSTHROUGH &&
            format_video_sync != VSYNC_DROP) {
            if (delta0 < -0.6) {
                av_log(NULL, AV_LOG_WARNING, "Past duration %f too large\n", -delta0);
            } else
                av_log(NULL, AV_LOG_DEBUG, "Clipping frame in rate conversion by %f\n", -delta0);
            sync_ipts = ost->sync_opts;
            duration += delta0;
            delta0 = 0;
        }

        switch (format_video_sync) {
        case VSYNC_VSCFR:
            if (ost->frame_number == 0 && delta0 >= 0.5) {
                av_log(NULL, AV_LOG_DEBUG, "Not duplicating %d initial frames\n", (int)lrintf(delta0));
                delta = duration;
                delta0 = 0;
                ost->sync_opts = lrint(sync_ipts);
            }
            /* fall through: VSCFR then applies the normal CFR logic */
        case VSYNC_CFR:
            // FIXME set to 0.5 after we fix some dts/pts bugs like in avidec.c
            if (frame_drop_threshold && delta < frame_drop_threshold && ost->frame_number) {
                nb_frames = 0;
            } else if (delta < -1.1)
                nb_frames = 0;
            else if (delta > 1.1) {
                nb_frames = lrintf(delta);
                if (delta0 > 1.1)
                    nb0_frames = lrintf(delta0 - 0.6);
            }
            break;
        case VSYNC_VFR:
            if (delta <= -0.6)
                nb_frames = 0;
            else if (delta > 0.6)
                ost->sync_opts = lrint(sync_ipts);
            break;
        case VSYNC_DROP:
        case VSYNC_PASSTHROUGH:
            ost->sync_opts = lrint(sync_ipts);
            break;
        default:
            av_assert0(0);
        }
    }

    nb_frames = FFMIN(nb_frames, ost->max_frames - ost->frame_number);
    nb0_frames = FFMIN(nb0_frames, nb_frames);

    memmove(ost->last_nb0_frames + 1,
            ost->last_nb0_frames,
            sizeof(ost->last_nb0_frames[0]) * (FF_ARRAY_ELEMS(ost->last_nb0_frames) - 1));
    ost->last_nb0_frames[0] = nb0_frames;

    if (nb0_frames == 0 && ost->last_dropped) {
        nb_frames_drop++;
        av_log(NULL, AV_LOG_VERBOSE,
               "*** dropping frame %d from stream %d at ts %"PRId64"\n",
               ost->frame_number, ost->st->index, ost->last_frame->pts);
    }
    if (nb_frames > (nb0_frames && ost->last_dropped) + (nb_frames > nb0_frames)) {
        if (nb_frames > dts_error_threshold * 30) {
            av_log(NULL, AV_LOG_ERROR, "%d frame duplication too large, skipping\n", nb_frames - 1);
            nb_frames_drop++;
            return;
        }
        nb_frames_dup += nb_frames - (nb0_frames && ost->last_dropped) - (nb_frames > nb0_frames);
        av_log(NULL, AV_LOG_VERBOSE, "*** %d dup!\n", nb_frames - 1);
        if (nb_frames_dup > dup_warning) {
            av_log(NULL, AV_LOG_WARNING, "More than %d frames duplicated\n", dup_warning);
            dup_warning *= 10;
        }
    }
    ost->last_dropped = nb_frames == nb0_frames && next_picture;

    /* duplicates frame if needed */
    for (i = 0; i < nb_frames; i++) {
        AVFrame *in_picture;
        av_init_packet(&pkt);
        pkt.data = NULL;
        pkt.size = 0;

        if (i < nb0_frames && ost->last_frame) {
            in_picture = ost->last_frame;
        } else
            in_picture = next_picture;

        if (!in_picture)
            return;

        in_picture->pts = ost->sync_opts;

#if 1
        if (!check_recording_time(ost))
#else
        if (ost->frame_number >= ost->max_frames)
#endif
            return;

#if FF_API_LAVF_FMT_RAWPICTURE
        if (of->ctx->oformat->flags & AVFMT_RAWPICTURE &&
            enc->codec->id == AV_CODEC_ID_RAWVIDEO) {
            /* raw pictures are written as AVPicture structure to
               avoid any copies. We support temporarily the older
               method. */
            if (in_picture->interlaced_frame)
                mux_par->field_order = in_picture->top_field_first ? AV_FIELD_TB:AV_FIELD_BT;
            else
                mux_par->field_order = AV_FIELD_PROGRESSIVE;

            pkt.data   = (uint8_t *)in_picture;
            pkt.size   =  sizeof(AVPicture);
            pkt.pts    = av_rescale_q(in_picture->pts, enc->time_base, ost->mux_timebase);
            pkt.flags |= AV_PKT_FLAG_KEY;

            output_packet(of, &pkt, ost);
        } else
#endif
        {
            int forced_keyframe = 0;
            double pts_time;

            if (enc->flags & (AV_CODEC_FLAG_INTERLACED_DCT | AV_CODEC_FLAG_INTERLACED_ME) &&
                ost->top_field_first >= 0)
                in_picture->top_field_first = !!ost->top_field_first;

            if (in_picture->interlaced_frame) {
                if (enc->codec->id == AV_CODEC_ID_MJPEG)
                    mux_par->field_order = in_picture->top_field_first ? AV_FIELD_TT:AV_FIELD_BB;
                else
                    mux_par->field_order = in_picture->top_field_first ? AV_FIELD_TB:AV_FIELD_BT;
            } else
                mux_par->field_order = AV_FIELD_PROGRESSIVE;

            in_picture->quality = enc->global_quality;
            in_picture->pict_type = 0;

            pts_time = in_picture->pts != AV_NOPTS_VALUE ?
                in_picture->pts * av_q2d(enc->time_base) : NAN;
            if (ost->forced_kf_index < ost->forced_kf_count &&
                in_picture->pts >= ost->forced_kf_pts[ost->forced_kf_index]) {
                ost->forced_kf_index++;
                forced_keyframe = 1;
            } else if (ost->forced_keyframes_pexpr) {
                double res;
                ost->forced_keyframes_expr_const_values[FKF_T] = pts_time;
                res = av_expr_eval(ost->forced_keyframes_pexpr,
                                   ost->forced_keyframes_expr_const_values, NULL);
                ff_dlog(NULL, "force_key_frame: n:%f n_forced:%f prev_forced_n:%f t:%f prev_forced_t:%f -> res:%f\n",
                        ost->forced_keyframes_expr_const_values[FKF_N],
                        ost->forced_keyframes_expr_const_values[FKF_N_FORCED],
                        ost->forced_keyframes_expr_const_values[FKF_PREV_FORCED_N],
                        ost->forced_keyframes_expr_const_values[FKF_T],
                        ost->forced_keyframes_expr_const_values[FKF_PREV_FORCED_T],
                        res);
                if (res) {
                    forced_keyframe = 1;
                    ost->forced_keyframes_expr_const_values[FKF_PREV_FORCED_N] =
                        ost->forced_keyframes_expr_const_values[FKF_N];
                    ost->forced_keyframes_expr_const_values[FKF_PREV_FORCED_T] =
                        ost->forced_keyframes_expr_const_values[FKF_T];
                    ost->forced_keyframes_expr_const_values[FKF_N_FORCED] += 1;
                }

                ost->forced_keyframes_expr_const_values[FKF_N] += 1;
            } else if (   ost->forced_keyframes
                       && !strncmp(ost->forced_keyframes, "source", 6)
                       && in_picture->key_frame==1) {
                forced_keyframe = 1;
            }

            if (forced_keyframe) {
                in_picture->pict_type = AV_PICTURE_TYPE_I;
                av_log(NULL, AV_LOG_DEBUG, "Forced keyframe at time %f\n", pts_time);
            }

            update_benchmark(NULL);
            if (debug_ts) {
                av_log(NULL, AV_LOG_INFO, "encoder <- type:video "
                       "frame_pts:%s frame_pts_time:%s time_base:%d/%d\n",
                       av_ts2str(in_picture->pts), av_ts2timestr(in_picture->pts, &enc->time_base),
                       enc->time_base.num, enc->time_base.den);
            }

            ost->frames_encoded++;

            ret = avcodec_send_frame(enc, in_picture);
            if (ret < 0)
                goto error;

            while (1) {
                ret = avcodec_receive_packet(enc, &pkt);
                update_benchmark("encode_video %d.%d", ost->file_index, ost->index);
                if (ret == AVERROR(EAGAIN))
                    break;
                if (ret < 0)
                    goto error;

                if (debug_ts) {
                    av_log(NULL, AV_LOG_INFO, "encoder -> type:video "
                           "pkt_pts:%s pkt_pts_time:%s pkt_dts:%s pkt_dts_time:%s\n",
                           av_ts2str(pkt.pts), av_ts2timestr(pkt.pts, &enc->time_base),
                           av_ts2str(pkt.dts), av_ts2timestr(pkt.dts, &enc->time_base));
                }

                if (pkt.pts == AV_NOPTS_VALUE && !(enc->codec->capabilities & AV_CODEC_CAP_DELAY))
                    pkt.pts = ost->sync_opts;

                av_packet_rescale_ts(&pkt, enc->time_base, ost->mux_timebase);

                if (debug_ts) {
                    av_log(NULL, AV_LOG_INFO, "encoder -> type:video "
                           "pkt_pts:%s pkt_pts_time:%s pkt_dts:%s pkt_dts_time:%s\n",
                           av_ts2str(pkt.pts), av_ts2timestr(pkt.pts, &ost->mux_timebase),
                           av_ts2str(pkt.dts), av_ts2timestr(pkt.dts, &ost->mux_timebase));
                }

                frame_size = pkt.size;
                output_packet(of, &pkt, ost);

                /* if two pass, output log */
                if (ost->logfile && enc->stats_out) {
                    fprintf(ost->logfile, "%s", enc->stats_out);
                }
            }
        }
        ost->sync_opts++;
        /*
         * For video, number of frames in == number of packets out.
         * But there may be reordering, so we can't throw away frames on encoder
         * flush, we need to limit them here, before they go into encoder.
         */
        ost->frame_number++;

        if (vstats_filename && frame_size)
            do_video_stats(ost, frame_size);
    }

    if (!ost->last_frame)
        ost->last_frame = av_frame_alloc();
    av_frame_unref(ost->last_frame);
    if (next_picture && ost->last_frame)
        av_frame_ref(ost->last_frame, next_picture);
    else
        av_frame_free(&ost->last_frame);

    return;
error:
    av_log(NULL, AV_LOG_FATAL, "Video encoding failed\n");
    exit_program(1);
}
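In the VSYNC_CFR branch of do_video_out(), `delta` (delta0 + duration, measured in encoder time-base units, roughly one unit per output frame when the time base is 1/framerate) decides how many copies of the incoming frame are written. A simplified sketch of just that decision, as a hypothetical `cfr_frames_to_emit` that ignores `-frame_drop_threshold` and the nb0_frames bookkeeping:

```c
/* Hypothetical simplification of the VSYNC_CFR case in do_video_out().
 * Returns how many times the incoming frame would be emitted. */
static int cfr_frames_to_emit(double delta)
{
    if (delta < -1.1)
        return 0;                   /* output is ahead of the input: drop the frame */
    if (delta > 1.1)
        return (int)(delta + 0.5);  /* gap in the input: duplicate; unlike lrintf(),
                                       exact halves always round up here */
    return 1;                       /* on schedule: emit exactly one frame */
}
```

A delta of 3.4 yields 3 frames (two duplicates), while anything between -1.1 and 1.1 yields one frame.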

static double psnr(double d)
{
    return -10.0 * log10(d);
}

static void do_video_stats(OutputStream *ost, int frame_size)
{
    AVCodecContext *enc;
    int frame_number;
    double ti1, bitrate, avg_bitrate;

    /* this is executed just the first time do_video_stats is called */
    if (!vstats_file) {
        vstats_file = fopen(vstats_filename, "w");
        if (!vstats_file) {
            perror("fopen");
            exit_program(1);
        }
    }

    enc = ost->enc_ctx;
    if (enc->codec_type == AVMEDIA_TYPE_VIDEO) {
        frame_number = ost->st->nb_frames;
        if (vstats_version <= 1) {
            fprintf(vstats_file, "frame= %5d q= %2.1f ", frame_number,
                    ost->quality / (float)FF_QP2LAMBDA);
        } else {
            fprintf(vstats_file, "out= %2d st= %2d frame= %5d q= %2.1f ", ost->file_index, ost->index, frame_number,
                    ost->quality / (float)FF_QP2LAMBDA);
        }

        if (ost->error[0]>=0 && (enc->flags & AV_CODEC_FLAG_PSNR))
            fprintf(vstats_file, "PSNR= %6.2f ", psnr(ost->error[0] / (enc->width * enc->height * 255.0 * 255.0)));

        fprintf(vstats_file,"f_size= %6d ", frame_size);
        /* compute pts value */
        ti1 = av_stream_get_end_pts(ost->st) * av_q2d(ost->st->time_base);
        if (ti1 < 0.01)
            ti1 = 0.01;

        bitrate     = (frame_size * 8) / av_q2d(enc->time_base) / 1000.0;
        avg_bitrate = (double)(ost->data_size * 8) / ti1 / 1000.0;
        fprintf(vstats_file, "s_size= %8.0fkB time= %0.3f br= %7.1fkbits/s avg_br= %7.1fkbits/s ",
               (double)ost->data_size / 1024, ti1, bitrate, avg_bitrate);
        fprintf(vstats_file, "type= %c\n", av_get_picture_type_char(ost->pict_type));
    }
}

static int init_output_stream(OutputStream *ost, char *error, int error_len);

static void finish_output_stream(OutputStream *ost)
{
    OutputFile *of = output_files[ost->file_index];
    int i;

    ost->finished = ENCODER_FINISHED | MUXER_FINISHED;

    if (of->shortest) {
        for (i = 0; i < of->ctx->nb_streams; i++)
            output_streams[of->ost_index + i]->finished = ENCODER_FINISHED | MUXER_FINISHED;
    }
}

/**
 * Get and encode new output from any of the filtergraphs, without causing
 * activity.
 *
 * @return  0 for success, <0 for severe errors
 */
static int reap_filters(int flush)
{
    AVFrame *filtered_frame = NULL;
    int i;

    /* Reap all buffers present in the buffer sinks */
    for (i = 0; i < nb_output_streams; i++) {
        OutputStream *ost = output_streams[i];
        OutputFile    *of = output_files[ost->file_index];
        AVFilterContext *filter;
        AVCodecContext *enc = ost->enc_ctx;
        int ret = 0;

        if (!ost->filter || !ost->filter->graph->graph)
            continue;
        filter = ost->filter->filter;

        if (!ost->initialized) {
            char error[1024] = "";
            ret = init_output_stream(ost, error, sizeof(error));
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error initializing output stream %d:%d -- %s\n",
                       ost->file_index, ost->index, error);
                exit_program(1);
            }
        }

        if (!ost->filtered_frame && !(ost->filtered_frame = av_frame_alloc())) {
            return AVERROR(ENOMEM);
        }
        filtered_frame = ost->filtered_frame;

        while (1) {
            double float_pts = AV_NOPTS_VALUE; // this is identical to filtered_frame.pts but with higher precision
            ret = av_buffersink_get_frame_flags(filter, filtered_frame,
                                               AV_BUFFERSINK_FLAG_NO_REQUEST);
            if (ret < 0) {
                if (ret != AVERROR(EAGAIN) && ret != AVERROR_EOF) {
                    av_log(NULL, AV_LOG_WARNING,
                           "Error in av_buffersink_get_frame_flags(): %s\n", av_err2str(ret));
                } else if (flush && ret == AVERROR_EOF) {
                    if (av_buffersink_get_type(filter) == AVMEDIA_TYPE_VIDEO)
                        do_video_out(of, ost, NULL, AV_NOPTS_VALUE);
                }
                break;
            }
            if (ost->finished) {
                av_frame_unref(filtered_frame);
                continue;
            }
            if (filtered_frame->pts != AV_NOPTS_VALUE) {
                int64_t start_time = (of->start_time == AV_NOPTS_VALUE) ? 0 : of->start_time;
                AVRational filter_tb = av_buffersink_get_time_base(filter);
                AVRational tb = enc->time_base;
                int extra_bits = av_clip(29 - av_log2(tb.den), 0, 16);

                tb.den <<= extra_bits;
                float_pts =
                    av_rescale_q(filtered_frame->pts, filter_tb, tb) -
                    av_rescale_q(start_time, AV_TIME_BASE_Q, tb);
                float_pts /= 1 << extra_bits;
                // avoid exact midpoints to reduce the chance of rounding differences, this can be removed in case the fps code is changed to work with integers
                float_pts += FFSIGN(float_pts) * 1.0 / (1<<17);

                filtered_frame->pts =
                    av_rescale_q(filtered_frame->pts, filter_tb, enc->time_base) -
                    av_rescale_q(start_time, AV_TIME_BASE_Q, enc->time_base);
            }
            //if (ost->source_index >= 0)
            //    *filtered_frame= *input_streams[ost->source_index]->decoded_frame; //for me_threshold

            switch (av_buffersink_get_type(filter)) {
            case AVMEDIA_TYPE_VIDEO:
                if (!ost->frame_aspect_ratio.num)
                    enc->sample_aspect_ratio = filtered_frame->sample_aspect_ratio;

                if (debug_ts) {
                    av_log(NULL, AV_LOG_INFO, "filter -> pts:%s pts_time:%s exact:%f time_base:%d/%d\n",
                            av_ts2str(filtered_frame->pts), av_ts2timestr(filtered_frame->pts, &enc->time_base),
                            float_pts,
                            enc->time_base.num, enc->time_base.den);
                }

                do_video_out(of, ost, filtered_frame, float_pts);
                break;
            case AVMEDIA_TYPE_AUDIO:
                if (!(enc->codec->capabilities & AV_CODEC_CAP_PARAM_CHANGE) &&
                    enc->channels != av_frame_get_channels(filtered_frame)) {
                    av_log(NULL, AV_LOG_ERROR,
                           "Audio filter graph output is not normalized and encoder does not support parameter changes\n");
                    break;
                }
                do_audio_out(of, ost, filtered_frame);
                break;
            default:
                // TODO support subtitle filters
                av_assert0(0);
            }

            av_frame_unref(filtered_frame);
        }
    }

    return 0;
}
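reap_filters() computes `float_pts` at a finer resolution than the encoder time base: it shifts `tb.den` left by `extra_bits` (29 minus floor(log2(den)), clamped to 0..16), rescales into that finer base, then divides by `1 << extra_bits`. The result is the frame position in encoder ticks with a fractional part, which do_video_out() uses for its drift calculation. A minimal sketch of the trick, with hypothetical helpers `floor_log2` (standing in for av_log2()) and `precise_pts`; it truncates instead of rounding and omits the start-time subtraction and the midpoint nudge:

```c
#include <stdint.h>

/* floor(log2(v)) for v > 0; hypothetical stand-in for av_log2(). */
static int floor_log2(unsigned v)
{
    int n = 0;
    while (v >>= 1)
        n++;
    return n;
}

/* Converts pts from src_num/src_den to enc_num/enc_den, keeping up to 16
 * extra fractional bits. Truncating division is used for brevity. */
static double precise_pts(int64_t pts, int64_t src_num, int64_t src_den,
                          int64_t enc_num, int64_t enc_den)
{
    int extra_bits = 29 - floor_log2((unsigned)enc_den);
    if (extra_bits < 0)
        extra_bits = 0;
    if (extra_bits > 16)
        extra_bits = 16;

    enc_den <<= extra_bits;                       /* finer time base */
    int64_t fine = pts * src_num * enc_den / (src_den * enc_num);
    return (double)fine / (double)(1 << extra_bits);
}
```

For example, pts 45000 in a 1/90000 time base is 12.5 ticks of a 1/25 encoder time base; a plain integer rescale would lose the half frame.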

static void print_final_stats(int64_t total_size)
{
    uint64_t video_size = 0, audio_size = 0, extra_size = 0, other_size = 0;
    uint64_t subtitle_size = 0;
    uint64_t data_size = 0;
    float percent = -1.0;
    int i, j;
    int pass1_used = 1;

    for (i = 0; i < nb_output_streams; i++) {
        OutputStream *ost = output_streams[i];
        switch (ost->enc_ctx->codec_type) {
            case AVMEDIA_TYPE_VIDEO: video_size += ost->data_size; break;
            case AVMEDIA_TYPE_AUDIO: audio_size += ost->data_size; break;
            case AVMEDIA_TYPE_SUBTITLE: subtitle_size += ost->data_size; break;
            default:                 other_size += ost->data_size; break;
        }
        extra_size += ost->enc_ctx->extradata_size;
        data_size  += ost->data_size;
        if (   (ost->enc_ctx->flags & (AV_CODEC_FLAG_PASS1 | AV_CODEC_FLAG_PASS2))
            != AV_CODEC_FLAG_PASS1)
            pass1_used = 0;
    }

    if (data_size && total_size>0 && total_size >= data_size)
        percent = 100.0 * (total_size - data_size) / data_size;

    av_log(NULL, AV_LOG_INFO, "video:%1.0fkB audio:%1.0fkB subtitle:%1.0fkB other streams:%1.0fkB global headers:%1.0fkB muxing overhead: ",
           video_size / 1024.0,
           audio_size / 1024.0,
           subtitle_size / 1024.0,
           other_size / 1024.0,
           extra_size / 1024.0);
    if (percent >= 0.0)
        av_log(NULL, AV_LOG_INFO, "%f%%", percent);
    else
        av_log(NULL, AV_LOG_INFO, "unknown");
    av_log(NULL, AV_LOG_INFO, "\n");

    /* print verbose per-stream stats */
    for (i = 0; i < nb_input_files; i++) {
        InputFile *f = input_files[i];
        uint64_t total_packets = 0, total_size = 0;

        av_log(NULL, AV_LOG_VERBOSE, "Input file #%d (%s):\n",
               i, f->ctx->filename);

        for (j = 0; j < f->nb_streams; j++) {
            InputStream *ist = input_streams[f->ist_index + j];
            enum AVMediaType type = ist->dec_ctx->codec_type;

            total_size    += ist->data_size;
            total_packets += ist->nb_packets;

            av_log(NULL, AV_LOG_VERBOSE, "  Input stream #%d:%d (%s): ",
                   i, j, media_type_string(type));
            av_log(NULL, AV_LOG_VERBOSE, "%"PRIu64" packets read (%"PRIu64" bytes); ",
                   ist->nb_packets, ist->data_size);

            if (ist->decoding_needed) {
                av_log(NULL, AV_LOG_VERBOSE, "%"PRIu64" frames decoded",
                       ist->frames_decoded);
                if (type == AVMEDIA_TYPE_AUDIO)
                    av_log(NULL, AV_LOG_VERBOSE, " (%"PRIu64" samples)", ist->samples_decoded);
                av_log(NULL, AV_LOG_VERBOSE, "; ");
            }

            av_log(NULL, AV_LOG_VERBOSE, "\n");
        }

        av_log(NULL, AV_LOG_VERBOSE, "  Total: %"PRIu64" packets (%"PRIu64" bytes) demuxed\n",
               total_packets, total_size);
    }

    for (i = 0; i < nb_output_files; i++) {
        OutputFile *of = output_files[i];
        uint64_t total_packets = 0, total_size = 0;

        av_log(NULL, AV_LOG_VERBOSE, "Output file #%d (%s):\n",
               i, of->ctx->filename);

        for (j = 0; j < of->ctx->nb_streams; j++) {
            OutputStream *ost = output_streams[of->ost_index + j];
            enum AVMediaType type = ost->enc_ctx->codec_type;

            total_size    += ost->data_size;
            total_packets += ost->packets_written;

            av_log(NULL, AV_LOG_VERBOSE, "  Output stream #%d:%d (%s): ",
                   i, j, media_type_string(type));
            if (ost->encoding_needed) {
                av_log(NULL, AV_LOG_VERBOSE, "%"PRIu64" frames encoded",
                       ost->frames_encoded);
                if (type == AVMEDIA_TYPE_AUDIO)
                    av_log(NULL, AV_LOG_VERBOSE, " (%"PRIu64" samples)", ost->samples_encoded);
                av_log(NULL, AV_LOG_VERBOSE, "; ");
            }

            av_log(NULL, AV_LOG_VERBOSE, "%"PRIu64" packets muxed (%"PRIu64" bytes); ",
                   ost->packets_written, ost->data_size);

            av_log(NULL, AV_LOG_VERBOSE, "\n");
        }

        av_log(NULL, AV_LOG_VERBOSE, "  Total: %"PRIu64" packets (%"PRIu64" bytes) muxed\n",
               total_packets, total_size);
    }
    if(video_size + data_size + audio_size + subtitle_size + extra_size == 0){
        av_log(NULL, AV_LOG_WARNING, "Output file is empty, nothing was encoded ");
        if (pass1_used) {
            av_log(NULL, AV_LOG_WARNING, "\n");
        } else {
            av_log(NULL, AV_LOG_WARNING, "(check -ss / -t / -frames parameters if used)\n");
        }
    }
}
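The modified print_report() that follows takes an extra callback of type `void (*)(int current, int total)` and calls it with the current output frame number and `nb_frames` of stream 0 of the first input. On the Java side this pair ends up in `CompressCallback.onCompress(int current, int total)`. A minimal C sketch of that callback contract, using a hypothetical handler `on_progress` and a hypothetical call site `report`; because AVStream.nb_frames is 0 when the container does not record a frame count, the handler treats `total <= 0` as "unknown" instead of dividing by it:

```c
/* Same shape as print_report()'s call_back parameter. */
typedef void (*progress_cb)(int current, int total);

/* Hypothetical: last percentage seen by the handler (-1 = unknown). */
static int last_percent = -1;

/* Hypothetical handler: converts frame counts into a 0..100 percentage. */
static void on_progress(int current, int total)
{
    if (total <= 0) {            /* nb_frames unknown: avoid dividing by zero */
        last_percent = -1;
        return;
    }
    int p = (int)((long long)current * 100 / total);
    last_percent = p > 100 ? 100 : p;   /* frame count can overshoot nb_frames */
}

/* Hypothetical stand-in for the call site inside print_report(). */
static void report(progress_cb cb, int frame_number, int total_frames)
{
    if (cb)
        cb(frame_number, total_frames);
}
```

In the real app the native handler would forward the two integers to the Java callback through JNI instead of storing a percentage.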

// Prints the progress/status report; call_back receives the current and total frame counts
static void print_report(int is_last_report, int64_t timer_start, int64_t cur_time, void (*call_back)(int current, int total))
{
    char buf[1024];
    AVBPrint buf_script;
    OutputStream *ost;
    AVFormatContext *oc;
    int64_t total_size;
    AVCodecContext *enc;
    int frame_number, vid, i;
    double bitrate;
    double speed;
    int64_t pts = INT64_MIN + 1;
    static int64_t last_time = -1;
    static int qp_histogram[52];
    int hours, mins, secs, us;
    int ret;
    float t;

    if (!print_stats && !is_last_report && !progress_avio)
        return;

    if (!is_last_report) {
        if (last_time == -1) {
            last_time = cur_time;
            return;
        }
        if ((cur_time - last_time) < 500000)
            return;
        last_time = cur_time;
    }

    t = (cur_time-timer_start) / 1000000.0;

    oc = output_files[0]->ctx;

    total_size = avio_size(oc->pb);
    if (total_size <= 0) // FIXME improve avio_size() so it works with non seekable output too
        total_size = avio_tell(oc->pb);

    buf[0] = '\0';
    vid = 0;
    av_bprint_init(&buf_script, 0, 1);
    for (i = 0; i < nb_output_streams; i++) {
        float q = -1;
        ost = output_streams[i];
        enc = ost->enc_ctx;
        if (!ost->stream_copy)
            q = ost->quality / (float) FF_QP2LAMBDA;

        if (vid && enc->codec_type == AVMEDIA_TYPE_VIDEO) {
            snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "q=%2.1f ", q);
            av_bprintf(&buf_script, "stream_%d_%d_q=%.1f\n",
                       ost->file_index, ost->index, q);
        }
        if (!vid && enc->codec_type == AVMEDIA_TYPE_VIDEO) {
            float fps;

            frame_number = ost->frame_number;
            fps = t > 1 ? frame_number / t : 0;
            snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "frame=%5d fps=%3.*f q=%3.1f ",
                     frame_number, fps < 9.95, fps, q);
            av_bprintf(&buf_script, "frame=%d\n", frame_number);
            av_bprintf(&buf_script, "fps=%.1f\n", fps);
            av_bprintf(&buf_script, "stream_%d_%d_q=%.1f\n",
                       ost->file_index, ost->index, q);
            //__android_log_print(ANDROID_LOG_ERROR,"JNI_TAG","Current compressed frame: %d",frame_number);
            // Progress callback (not in upstream ffmpeg.c). Assumes stream 0 of the first
            // input is the video stream; AVStream.nb_frames is 0 when the container does
            // not report a frame count, so the receiver must handle total == 0.
            int total_frame_number = input_files[0]->ctx->streams[0]->nb_frames;
            call_back(frame_number, total_frame_number);
            if (is_last_report)
                snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "L");
            if (qp_hist) {
                int j;
                int qp = lrintf(q);
                if (qp >= 0 && qp < FF_ARRAY_ELEMS(qp_histogram))
                    qp_histogram[qp]++;
                for (j = 0; j < 32; j++)
                    snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "%X", av_log2(qp_histogram[j] + 1));
            }

            if ((enc->flags & AV_CODEC_FLAG_PSNR) && (ost->pict_type != AV_PICTURE_TYPE_NONE || is_last_report)) {
                int j;
                double error, error_sum = 0;
                double scale, scale_sum = 0;
                double p;
                char type[3] = { 'Y','U','V' };
                snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "PSNR=");
                for (j = 0; j < 3; j++) {
                    if (is_last_report) {
                        error = enc->error[j];
                        scale = enc->width * enc->height * 255.0 * 255.0 * frame_number;
                    } else {
                        error = ost->error[j];
                        scale = enc->width * enc->height * 255.0 * 255.0;
                    }
                    if (j)
                        scale /= 4;
                    error_sum += error;
                    scale_sum += scale;
                    p = psnr(error / scale);
                    snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "%c:%2.2f ", type[j], p);
                    av_bprintf(&buf_script, "stream_%d_%d_psnr_%c=%2.2f\n",
                               ost->file_index, ost->index, type[j] | 32, p);
                }
                p = psnr(error_sum / scale_sum);
                snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "*:%2.2f ", psnr(error_sum / scale_sum));
                av_bprintf(&buf_script, "stream_%d_%d_psnr_all=%2.2f\n",
                           ost->file_index, ost->index, p);
            }
            vid = 1;
        }
        /* compute min output value */
        if (av_stream_get_end_pts(ost->st) != AV_NOPTS_VALUE)
            pts = FFMAX(pts, av_rescale_q(av_stream_get_end_pts(ost->st),
                                          ost->st->time_base, AV_TIME_BASE_Q));
        if (is_last_report)
            nb_frames_drop += ost->last_dropped;
    }

    secs = FFABS(pts) / AV_TIME_BASE;
    us = FFABS(pts) % AV_TIME_BASE;
    mins = secs / 60;
    secs %= 60;
    hours = mins / 60;
    mins %= 60;

    bitrate = pts && total_size >= 0 ? total_size * 8 / (pts / 1000.0) : -1;
    speed = t != 0.0 ? (double)pts / AV_TIME_BASE / t : -1;

    if (total_size < 0) snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf),
                                 "size=N/A time=");
    else                snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf),
                                 "size=%8.0fkB time=", total_size / 1024.0);
    if (pts < 0)
        snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), "-");
    snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf),
             "%02d:%02d:%02d.%02d ", hours, mins, secs,
             (100 * us) / AV_TIME_BASE);

    if (bitrate < 0) {
        snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf),"bitrate=N/A");
        av_bprintf(&buf_script, "bitrate=N/A\n");
    }else{
        snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf),"bitrate=%6.1fkbits/s", bitrate);
        av_bprintf(&buf_script, "bitrate=%6.1fkbits/s\n", bitrate);
    }

    if (total_size < 0) av_bprintf(&buf_script, "total_size=N/A\n");

else av_bprintf(&buf_script, "total_size=%"PRId64"\n", total_size);

av_bprintf(&buf_script, "out_time_ms=%"PRId64"\n", pts);

av_bprintf(&buf_script, "out_time=%02d:%02d:%02d.%06d\n",

hours, mins, secs, us);

if (nb_frames_dup || nb_frames_drop)

snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf), " dup=%d drop=%d",

nb_frames_dup, nb_frames_drop);

av_bprintf(&buf_script, "dup_frames=%d\n", nb_frames_dup);

av_bprintf(&buf_script, "drop_frames=%d\n", nb_frames_drop);

if (speed < 0) {

snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf)," speed=N/A");

av_bprintf(&buf_script, "speed=N/A\n");

} else {

snprintf(buf + strlen(buf), sizeof(buf) - strlen(buf)," speed=%4.3gx", speed);

av_bprintf(&buf_script, "speed=%4.3gx\n", speed);

}

if (print_stats || is_last_report) {

const char end = is_last_report ? '\n' : '\r';

if (print_stats==1 && AV_LOG_INFO > av_log_get_level()) {

fprintf(stderr, "%s %c", buf, end);

} else

av_log(NULL, AV_LOG_INFO, "%s %c", buf, end);

fflush(stderr);

}

if (progress_avio) {

av_bprintf(&buf_script, "progress=%s\n",

is_last_report ? "end" : "continue");

avio_write(progress_avio, buf_script.str,

FFMIN(buf_script.len, buf_script.size - 1));

avio_flush(progress_avio);

av_bprint_finalize(&buf_script, NULL);

if (is_last_report) {

if ((ret = avio_closep(&progress_avio)) < 0)

av_log(NULL, AV_LOG_ERROR,

"Error closing progress log, loss of information possible: %s\n", av_err2str(ret));

}

}

if (is_last_report)

print_final_stats(total_size);

}
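Before it calls `call_back()`, `print_report()` above does a few small calculations: the 500 ms throttle, the elapsed-time formatting and the bitrate. The standalone C sketch below restates them outside FFmpeg so they can be checked in isolation. It also adds a progress-percentage helper for the `(current, total)` pair passed to `onCompress()`. The function names `should_report`, `progress_percent`, `format_out_time`, `bitrate_kbps` and the constant `SKETCH_TIME_BASE` are hypothetical; they are not FFmpeg API. Like `print_report()`, the sketch assumes timestamps are in microseconds (`AV_TIME_BASE` = 1000000).

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical constant, same value as FFmpeg's AV_TIME_BASE: pts is in microseconds. */
#define SKETCH_TIME_BASE 1000000

/* Mirrors the throttle at the top of print_report(): the first call only
 * records the time, later calls are skipped if they arrive less than 500 ms
 * after the last accepted one, and the final report is always accepted. */
int should_report(int64_t cur_time, int64_t *last_time, int is_last_report)
{
    if (is_last_report)
        return 1;
    if (*last_time == -1) {
        *last_time = cur_time;
        return 0;
    }
    if (cur_time - *last_time < 500000)
        return 0;
    *last_time = cur_time;
    return 1;
}

/* Hypothetical helper for the Java-side onCompress(current, total):
 * returns a percentage, or -1 when the total is unknown (nb_frames == 0). */
int progress_percent(int current, int64_t total)
{
    if (total <= 0)
        return -1;
    if (current >= total)
        return 100;
    return (int)((int64_t)current * 100 / total);
}

/* Formats pts as [-]HH:MM:SS.cc with the same arithmetic as print_report(). */
void format_out_time(int64_t pts, char *buf, size_t size)
{
    int64_t abs_pts = pts < 0 ? -pts : pts;
    int secs = (int)(abs_pts / SKETCH_TIME_BASE);
    int us = (int)(abs_pts % SKETCH_TIME_BASE);
    int mins = secs / 60;
    int hours;

    secs %= 60;
    hours = mins / 60;
    mins %= 60;
    snprintf(buf, size, "%s%02d:%02d:%02d.%02d", pts < 0 ? "-" : "",
             hours, mins, secs, (100 * us) / SKETCH_TIME_BASE);
}

/* total_size is in bytes and pts in microseconds, so
 * bits / (pts / 1000.0 milliseconds) yields kbit/s. Returns -1 like print_report(). */
double bitrate_kbps(int64_t total_size, int64_t pts)
{
    if (pts == 0 || total_size < 0)
        return -1;
    return total_size * 8 / (pts / 1000.0);
}
```

For example, 1,000,000 bytes written over 10 s of output gives 8,000,000 bits / 10,000 ms = 800 kbit/s. The modified `call_back()` is only reached after this throttle. So, judging from the code above, `onCompress()` fires at most about every 500 ms, plus once for the final report. The Java side should not assume one callback per frame, and it should handle a total of 0 rather than divide by it.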

static void flush_encoders(void)

{

int i, ret;

for (i = 0; i < nb_output_streams; i++) {

OutputStream *ost = output_streams[i];

AVCodecContext *enc = ost->enc_ctx;

OutputFile *of = output_files[ost->file_index];

if (!ost->encoding_needed)

continue;

// Try to enable encoding with no input frames.

// Maybe we should just let encoding fail instead.

if (!ost->initialized) {

FilterGraph *fg = ost->filter->graph;

char error[1024] = "";

av_log(NULL, AV_LOG_WARNING,

"Finishing stream %d:%d without any data written to it.\n",

ost->file_index, ost->st->index);

if (ost->filter && !fg->graph) {

int x;

for (x = 0; x < fg->nb_inputs; x++) {

InputFilter *ifilter = fg->inputs[x];

if (ifilter->format < 0) {

AVCodecParameters *par = ifilter->ist->st->codecpar;

// We never got any input. Set a fake format, which will

// come from libavformat.

ifilter->format = par->format;

ifilter->sample_rate = par->sample_rate;

ifilter->channels = par->channels;

ifilter->channel_layout = par->channel_layout;

ifilter->width = par->width;

ifilter->height = par->height;

ifilter->sample_aspect_ratio = par->sample_aspect_ratio;

}

}

if (!ifilter_has_all_input_formats(fg))

continue;

ret = configure_filtergraph(fg);

if (ret < 0) {

av_log(NULL, AV_LOG_ERROR, "Error configuring filter graph\n");

exit_program(1);

}

finish_output_stream(ost);

}