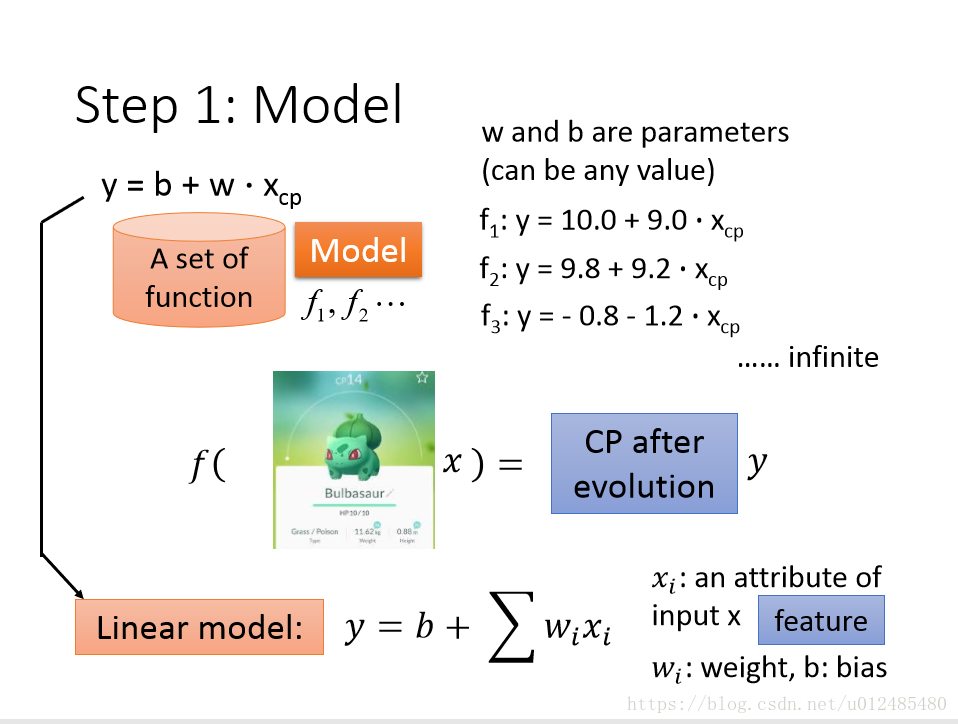

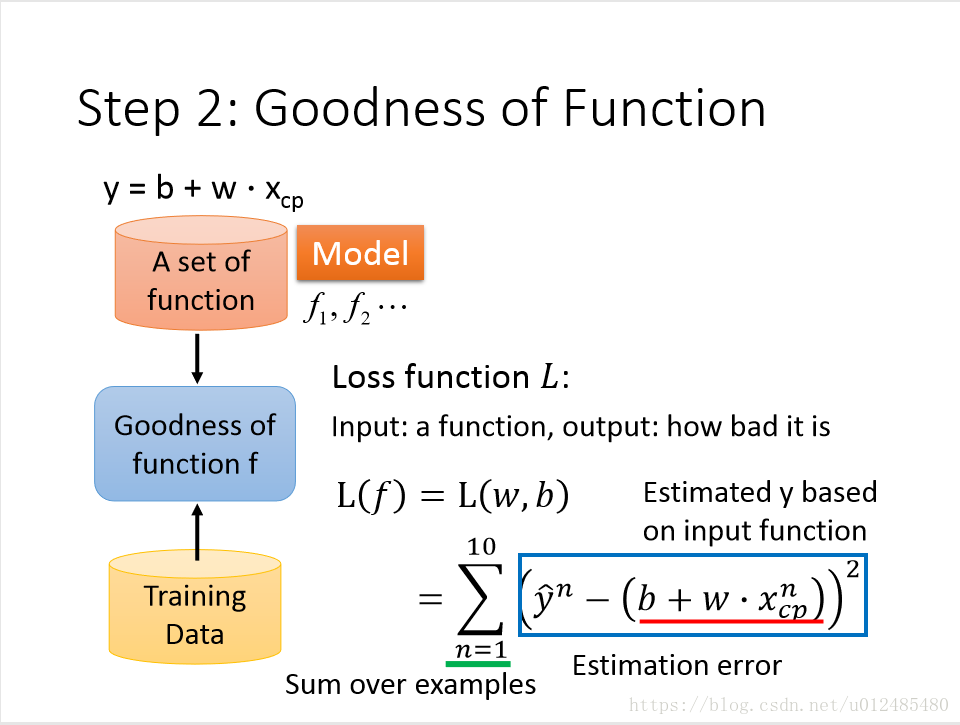

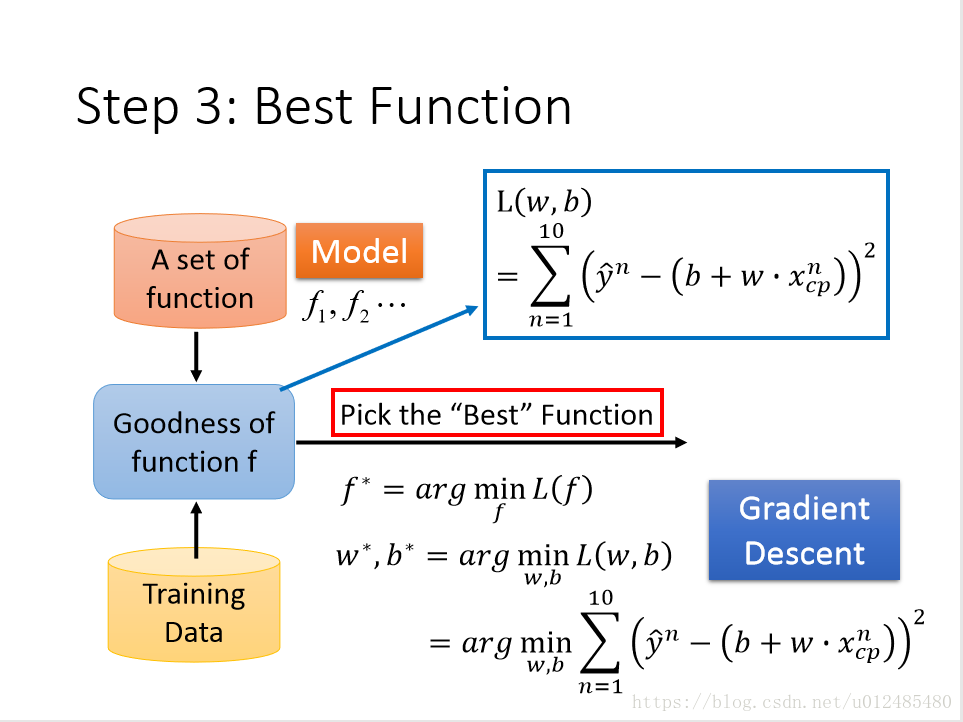

Machine learning has three steps:

Step 1: choose a set of functions, i.e. choose a model.

Step 2: evaluate the goodness of each function.

Step 3: pick the best function.
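The three steps can be sketched in a few lines of Python. This is only an illustration under assumptions: the toy data, the candidate grid, and the names `model`, `loss`, and `candidates` are made up for this sketch.

```python
# Step 1: a set of functions, here every line y = b + w*x (the model).
def model(x, w, b):
    return b + w * x

# Toy data generated from y = 2x + 1 (an assumption for illustration).
x_data = [1, 2, 3, 4, 5, 6]
y_data = [3, 5, 7, 9, 11, 13]

# Step 2: goodness of a function, measured by the sum of squared errors.
def loss(w, b):
    return sum((y - model(x, w, b)) ** 2 for x, y in zip(x_data, y_data))

# Step 3: pick the best function from a small grid of candidate (w, b) pairs.
candidates = [(w, b) for w in range(0, 5) for b in range(-2, 3)]
best_w, best_b = min(candidates, key=lambda p: loss(*p))
print(best_w, best_b)
```

Searching a grid like this does not scale beyond a handful of parameters, which is why step 3 is normally done with gradient descent.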

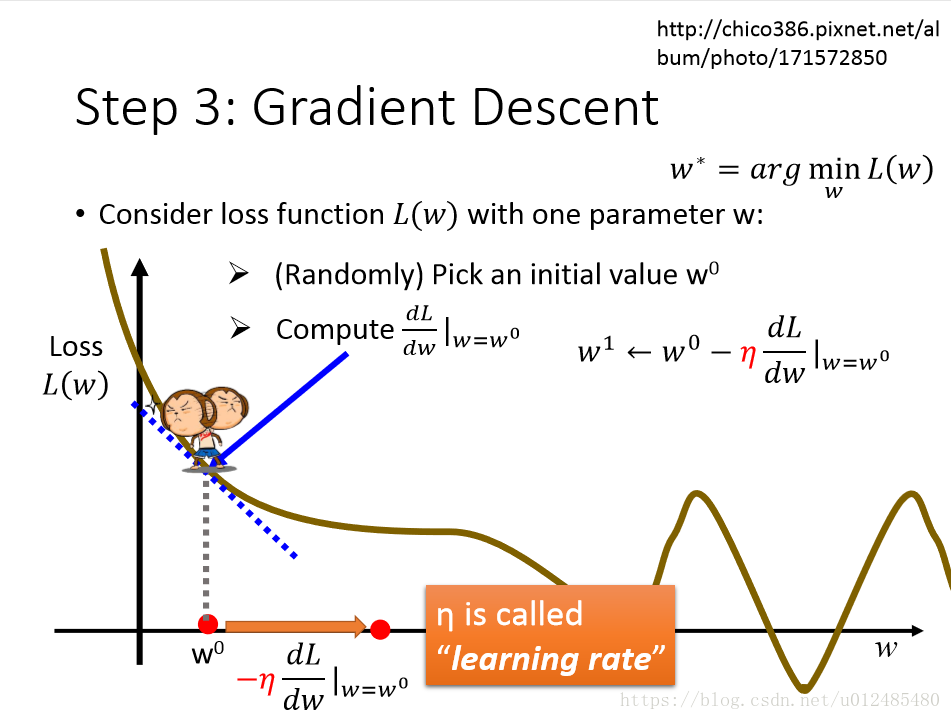

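Gradient descent is used to find the best function.

The steps of gradient descent are:

First choose initial values for the parameters, then update them iteratively in the direction of the negative gradient of the loss function with respect to the parameters. The learning rate controls the step size, and therefore how fast the parameters are learned. The gradient direction is normal to the contour lines of the loss function.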
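As a minimal sketch of that update rule, the snippet below runs gradient descent on a one-parameter loss. The loss `(theta - 3)^2`, the helper name `gradient_descent`, and the two learning rates are assumptions chosen for illustration.

```python
def gradient_descent(grad, theta0, lr, steps):
    """Repeatedly step against the gradient, starting from theta0."""
    theta = theta0
    for _ in range(steps):
        theta = theta - lr * grad(theta)  # move along the negative gradient
    return theta

# Hypothetical loss L(theta) = (theta - 3)^2, whose gradient is 2*(theta - 3).
def grad(theta):
    return 2.0 * (theta - 3.0)

# Same starting point and step count, two different learning rates.
small = gradient_descent(grad, theta0=0.0, lr=0.01, steps=100)
large = gradient_descent(grad, theta0=0.0, lr=0.1, steps=100)
print(small, large)
```

Both runs move toward the minimum at 3, but with the same number of steps the larger learning rate ends up much closer. A rate that is too large would instead overshoot and diverge.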

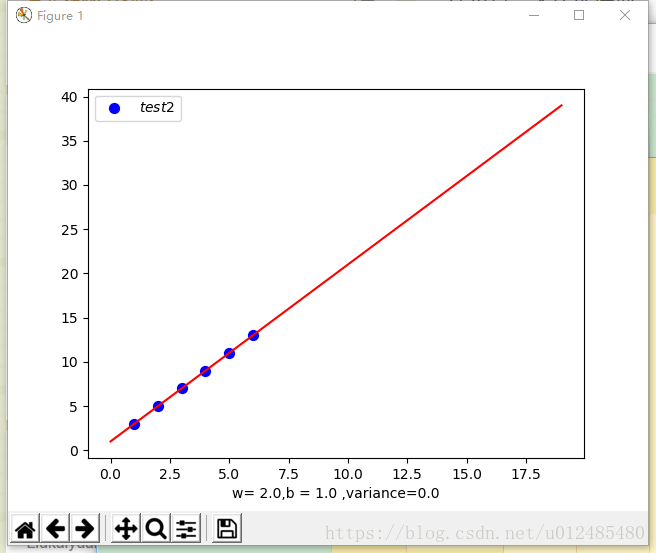

Based on the steps above, here is a test implementation for `y = w*x + b`:

    import numpy as np
    import matplotlib.pyplot as plt

    x_data = [1, 2, 3, 4, 5, 6]
    y_data = [3, 5, 7, 9, 11, 13]
    # y_data = b + w * x_data

    b = -1  # initial b
    w = 1  # initial w
    lr = 0.000001  # learning rate
    iteration = 100000000  # number of iterations (slow in pure Python)

    for i in range(iteration):
        b_grad = 0.0
        w_grad = 0.0
        # accumulate the gradient of the squared-error loss over all samples
        for n in range(len(x_data)):
            b_grad = b_grad - 2.0 * (y_data[n] - (b + w * x_data[n])) * 1.0
            w_grad = w_grad - 2.0 * (y_data[n] - (b + w * x_data[n])) * x_data[n]
        # update parameters
        b = b - lr * b_grad
        w = w - lr * w_grad

    # round only after training, for display
    b = round(b, 1)
    w = round(w, 1)
    print(b, w)

    plt.scatter(x_data, y_data, s=200, label='$test1$', c='blue', marker='.', alpha=None, edgecolors='blue')
    x = np.arange(0, 20, 1)
    plt.plot(x, b + w * x, color='red')  # fitted line
    plt.legend()
    plt.show()

Experimental result:

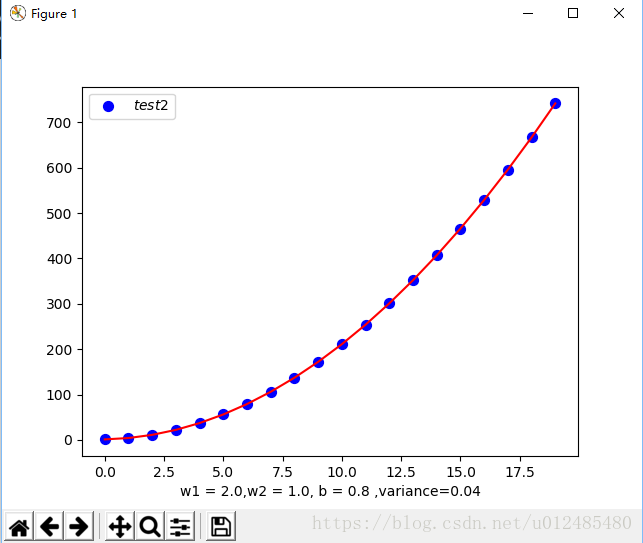
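The same fit can be written with NumPy arrays, which removes the inner Python loop. The sketch below is not the code used for the experiment above: the function name `fit_line`, the larger learning rate, and the smaller iteration count are assumptions chosen so that it finishes quickly.

```python
import numpy as np

def fit_line(x, y, w=1.0, b=-1.0, lr=0.005, iterations=20000):
    """Gradient descent on the sum of squared errors for y = b + w*x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    for _ in range(iterations):
        residual = y - (b + w * x)            # error of the current line
        b_grad = -2.0 * residual.sum()        # dL/db
        w_grad = -2.0 * (residual * x).sum()  # dL/dw
        b -= lr * b_grad
        w -= lr * w_grad
    return w, b

w, b = fit_line([1, 2, 3, 4, 5, 6], [3, 5, 7, 9, 11, 13])
print(round(w, 1), round(b, 1))
```

The learning rate here is close to the largest value that stays stable for this particular data set, so it should be lowered again if the data changes.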

Test implementation for `y = w1*x^2 + w2*x + b`:

    import numpy as np
    import matplotlib.pyplot as plt

    x_data = []
    y_data = []
    for i in range(10):
        x_data.append(i)
        y_data.append(2 * i * i + i + 1)
    # y = w1*x^2 + w2*x + b

    w1 = 1  # initial w1
    w2 = 0.5  # initial w2
    b = 0.5  # initial b
    lr = 0.00000001  # learning rate
    iteration = 10000000  # number of iterations

    for i in range(iteration):
        w1_grad = 0.0
        w2_grad = 0.0
        b_grad = 0.0
        # accumulate the gradient of the squared-error loss over all samples
        for n in range(len(x_data)):
            t = 2 * (y_data[n] - (w1 * x_data[n] * x_data[n] + w2 * x_data[n] + b))
            w1_grad = w1_grad + t * (-x_data[n] * x_data[n])
            w2_grad = w2_grad + t * (-x_data[n])
            b_grad = b_grad + t * (-1)
        # update parameters
        w1 = w1 - lr * w1_grad
        w2 = w2 - lr * w2_grad
        b = b - lr * b_grad

    # round only after training, for display
    w1 = round(w1, 1)
    w2 = round(w2, 1)
    b = round(b, 1)
    print(w1, w2, b)

    x = np.arange(0, 20, 1)

    # mean squared error of the rounded parameters on the training data
    v = 0.0
    for n in range(len(x_data)):
        v = v + (y_data[n] - (w1 * x_data[n] * x_data[n] + w2 * x_data[n] + b)) ** 2
    v = v / len(x_data)
    v = round(v, 3)

    plt.plot(x, w1 * x * x + w2 * x + b, color='red')  # fitted curve, using the learned b
    label_text = 'w1 = %s, w2 = %s, b = %s, mse = %s' % (w1, w2, b, v)
    plt.scatter(x_data, y_data, s=200, label='$test2$', c='blue', marker='.', alpha=None, edgecolors='blue')
    plt.xlabel(label_text)
    plt.legend()
    plt.show()

Experimental result: the data were generated with w1=2, w2=1, b=1. The fitted values deviate from these. The fit can be improved by tuning the learning rate and the number of iterations, adding more test data, and so on.
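One way to judge how far a gradient descent result is from the target is to compare it with a closed-form least-squares fit. The sketch below uses `np.polyfit`, which is not part of the experiment above, on the same generated data. The variable names are illustrative.

```python
import numpy as np

# Same generated data as in the experiment: y = 2*x^2 + x + 1 for x = 0..9.
x_data = np.arange(10)
y_data = 2 * x_data**2 + x_data + 1

# Least-squares fit of a degree-2 polynomial; coefficients come back
# highest power first, i.e. in the order (w1, w2, b).
w1, w2, b = np.polyfit(x_data, y_data, deg=2)

# Mean squared error of the fitted curve on the training points.
mse = np.mean((y_data - (w1 * x_data**2 + w2 * x_data + b)) ** 2)
print(w1, w2, b, mse)
```

Because the data are noise-free, the least-squares coefficients recover the generating values up to floating-point error, which gives a reference point for the gradient descent output.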