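1. Download the YAML file required for installation

Project home page for metrics-server: https://github.com/kubernetes-sigs/metrics-server

The installation steps for metrics-server are documented on GitHub.

Installing directly with the command given there fails on networks inside mainland China, though, because the metrics-server image hosted on k8s.gcr.io cannot be pulled. For that reason I did not use the latest release and went with v0.3.6 instead. First, download the components.yaml file it needs: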

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

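2. Prepare the image

As noted above, the metrics-server image cannot be pulled directly from networks in mainland China, so the image has to be imported by hand.

First get the image as a tar archive. For v0.3.6 it is available for download as metrics-img.tar; unpacking the download yields a file named metrics-img.tar. Upload metrics-img.tar to every node and load it: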

[root@vms51 ~]# docker load -i metrics-img.tar

932da5156413: Loading layer [==================================================>] 3.062MB/3.062MB

7bf3709d22bb: Loading layer [==================================================>] 38.13MB/38.13MB

Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6

[root@vms51 ~]# docker images |grep metrics-server

k8s.gcr.io/metrics-server-amd64 v0.3.6 9dd718864ce6 22 months ago 39.9MB

[root@vms51 ~]#

Repeat this on every node in the cluster.
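The per-node steps can be scripted. The following is a minimal sketch, assuming passwordless SSH as root to every node. The NODES list, the /root/ target directory and the helper name load_image_on_nodes are all assumptions made for illustration; adjust them to your cluster.

```shell
# Nodes to receive the image; placeholder names, replace with your own.
NODES="vms51.rhce.cc vms52.rhce.cc vms53.rhce.cc"

# Copy an image tarball to every node in NODES and load it into Docker there.
# Assumes passwordless SSH access as root (an assumption, not from the article).
load_image_on_nodes() {
  tarball="$1"
  for node in $NODES; do
    scp "$tarball" "root@${node}:/root/" || return 1
    ssh "root@${node}" "docker load -i /root/$(basename "$tarball")" || return 1
  done
}

# Usage: load_image_on_nodes metrics-img.tar
```

The function stops at the first node that fails, so a partially prepared cluster is easy to spot.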

3. Modify components.yaml

Once the image is in place, components.yaml can be used for deployment. Deploying it unmodified, however, produces an error:

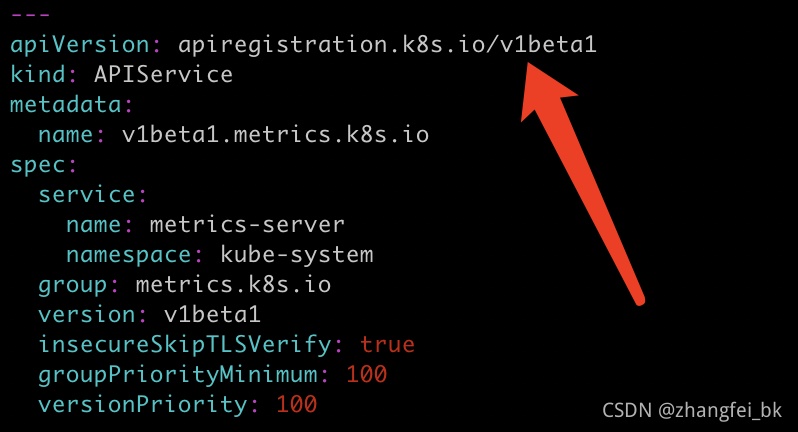

The fix is to change apiregistration.k8s.io/v1beta1 to apiregistration.k8s.io/v1 in components.yaml.
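This substitution can also be done with sed instead of an editor. The sketch below wraps it in a small helper (the name fix_apiservice_version is hypothetical) and keeps a .bak copy of the original file. It only touches the apiregistration.k8s.io group, so the APIService name v1beta1.metrics.k8s.io is left alone.

```shell
# Rewrite the APIService apiVersion from v1beta1 to v1 in place.
# A backup of the original is kept next to it with a .bak suffix.
fix_apiservice_version() {
  file="${1:-components.yaml}"
  sed -i.bak 's#apiregistration\.k8s\.io/v1beta1#apiregistration.k8s.io/v1#' "$file"
}

# Apply it to components.yaml in the current directory, if present.
if [ -f components.yaml ]; then
  fix_apiservice_version components.yaml
fi
```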

After applying the file, kubectl get pod -n kube-system shows that the metrics-server pod is in the Running state.

Use kubectl top node to verify that metrics-server is actually working:

[root@vms51 ~]# kubectl top node

W0821 21:26:00.105720 53095 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

error: Metrics API not available

[root@vms51 ~]#

Check the logs with kubectl logs [pod] -n kube-system to find the cause:

[root@vms51 ~]# kubectl logs metrics-server-8bbfb4bdb-6295v -n kube-system

I0822 06:28:12.610330 1 serving.go:312] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)

I0822 06:28:13.888333 1 secure_serving.go:116] Serving securely on [::]:4443

E0822 06:29:02.705049 1 reststorage.go:135] unable to fetch node metrics for node "vms51.rhce.cc": no metrics known for node

E0822 06:29:02.705135 1 reststorage.go:135] unable to fetch node metrics for node "vms52.rhce.cc": no metrics known for node

E0822 06:29:02.705152 1 reststorage.go:135] unable to fetch node metrics for node "vms53.rhce.cc": no metrics known for node

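This happens because metrics-server addresses each node by its host name by default, and CoreDNS has no records for the host names of the physical machines. To fix it, edit components.yaml and add two arguments to args under containers:

- --kubelet-insecure-tls # added

- --kubelet-preferred-address-types=InternalIP # added

--kubelet-preferred-address-types makes metrics-server prefer the InternalIP when connecting to the kubelet. This avoids failed calls to a node's kubelet API when the node name has no DNS record.

--kubelet-insecure-tls tells metrics-server not to verify the CA of the serving certificates presented by the kubelets. It is intended for test environments only.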
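To see which addresses metrics-server will use once InternalIP is preferred, the node objects can be queried directly. The sketch below is one way to do it with kubectl's jsonpath output; the helper name list_node_internal_ips is hypothetical.

```shell
# Print each node name with its InternalIP, one node per line (tab separated).
list_node_internal_ips() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}'
}

# Usage: list_node_internal_ips
```

Every node should report an address that is reachable from the pod network; a missing InternalIP would point to a node registration problem rather than a DNS one.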

The complete components.yaml after these changes:

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server-amd64:v0.3.6
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-insecure-tls # added
          - --kubelet-preferred-address-types=InternalIP # added
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        kubernetes.io/os: linux
        kubernetes.io/arch: "amd64"
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: main-port
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

4. Install

With the image and the YAML file ready, metrics-server can be installed:

kubectl apply -f components.yaml
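kubectl apply returns as soon as the objects are created, not when the pod is ready. A small wrapper around kubectl rollout status can wait for the Deployment before testing; this is a sketch, and both the helper name wait_for_metrics_server and the 120s default timeout are arbitrary choices, not part of the original procedure.

```shell
# Block until the metrics-server Deployment has finished rolling out,
# or fail after the given timeout (default 120s, an arbitrary choice).
wait_for_metrics_server() {
  kubectl -n kube-system rollout status deployment/metrics-server \
    --timeout="${1:-120s}"
}

# Usage: wait_for_metrics_server        # default timeout
#        wait_for_metrics_server 5m     # custom timeout
```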

5. Test

Once the installation has completed successfully, kubectl top can be used to monitor resource usage:

$ kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 153m 0% 3233Mi 2%

slave1 53m 0% 6624Mi 4%

slave2 58m 0% 7375Mi 4%
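Besides kubectl top, the state of the aggregated Metrics API can be checked on the APIService object itself. The sketch below reads its Available condition; the helper name metrics_api_available is hypothetical, and the check only reflects what the API server currently reports.

```shell
# Succeed only if the v1beta1.metrics.k8s.io APIService reports Available=True.
metrics_api_available() {
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}') || return 1
  [ "$status" = "True" ]
}

# Usage:
#   if metrics_api_available; then kubectl top nodes; fi
```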

⚠️ Be sure to read the issue described below; otherwise the apparent success above may mislead you!

6. Known issue and solution

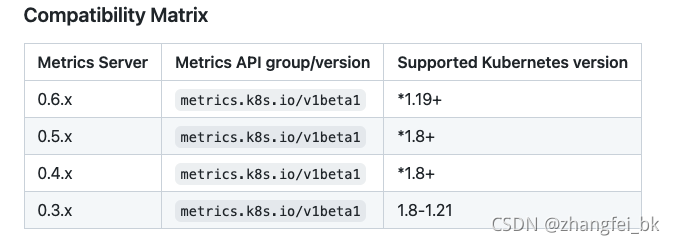

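The metrics-server v0.3.6 used above only supports Kubernetes versions 1.8 through 1.21. On a Kubernetes 1.22 cluster it fails with: "Failed to make webhook authorizer request: the server could not find the request". Newer Kubernetes clusters therefore need a newer metrics-server; I installed v0.5.0.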
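The version rule above can be written down as a small lookup. This is only a sketch covering the two releases used in this article; the helper name suggest_metrics_server is hypothetical, and for clusters much newer than 1.22 the project's own compatibility notes should be consulted rather than this mapping.

```shell
# Suggest a metrics-server release for a Kubernetes minor version,
# following the ranges stated above: v0.3.6 for 1.8-1.21, v0.5.0 from 1.22.
# Illustrative only; it knows nothing about releases other than these two.
suggest_metrics_server() {
  minor="$1"   # e.g. 22 for Kubernetes 1.22
  if [ "$minor" -ge 8 ] && [ "$minor" -le 21 ]; then
    echo "v0.3.6"
  elif [ "$minor" -ge 22 ]; then
    echo "v0.5.0"
  else
    echo "unsupported" >&2
    return 1
  fi
}

# Usage: suggest_metrics_server 22
```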

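As before, the v0.5.0 image has to be prepared first. Since it cannot be pulled from networks in mainland China, I uploaded a copy to Docker Hub, from where it can be pulled directly:

# Pull the metrics-server image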

docker pull zfhub/metrics-server:v0.5.0

# After the pull completes, tag the image with the name the manifest expects

docker tag zfhub/metrics-server:v0.5.0 k8s.gcr.io/metrics-server/metrics-server:v0.5.0

Then download the components.yaml file for v0.5.0:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.5.0/components.yaml

As with v0.3.6, add one argument:

- --kubelet-insecure-tls # added

The modified components.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls # added
        image: k8s.gcr.io/metrics-server/metrics-server:v0.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Finally, deploy metrics-server with kubectl apply -f components.yaml. Testing works the same way as for v0.3.6.