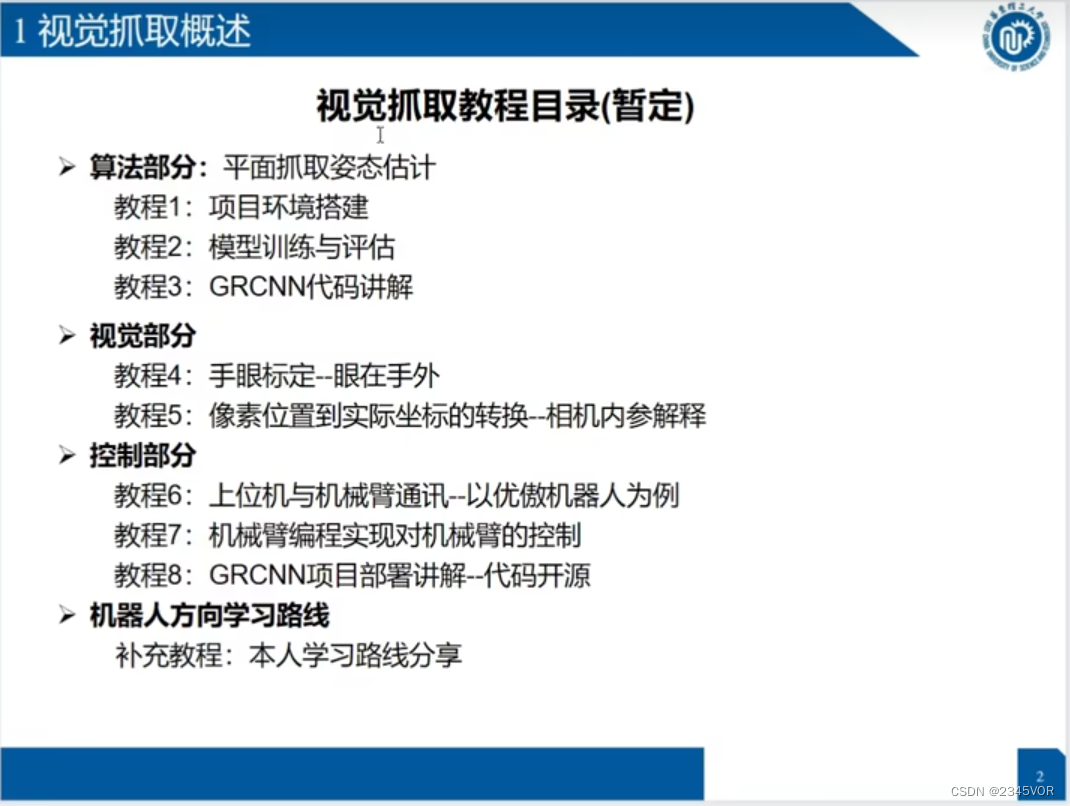

首先声明一下,此项目是参考B站哈萨克斯坦UP的【机械臂视觉抓取从理论到实战】

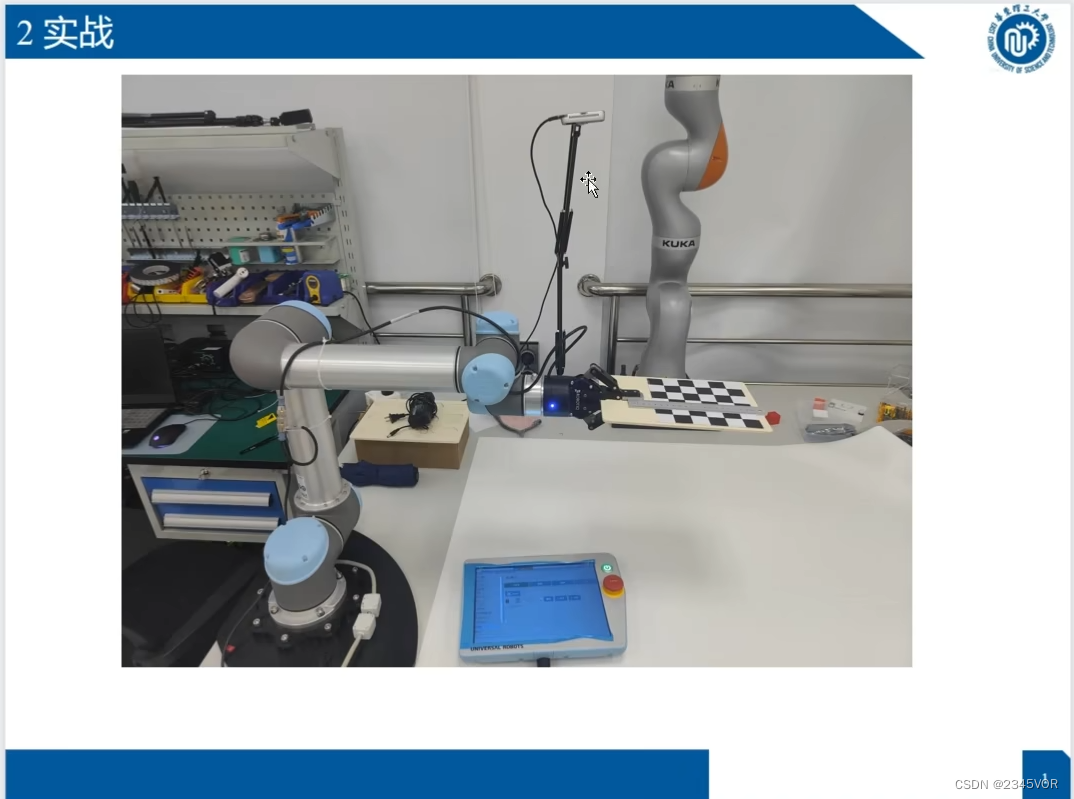

此内容为他研究生生涯的阶段性成果展示和技术分享,所有数据和代码均开源。所以鹏鹏我特此来复现一下,我采用的硬件与之有所不同,UP主使用UR5,我实验室采用的是UR3,下面列出相关材料

UR3CB3.12:https://www.universal-robots.cn/cb3/

UR3CB3.12:https://www.universal-robots.cn/cb3/

【UR3系统升级到CB3.12附带URcap1.05】

硬件支持

| 序号 | 名称 | 功能 |

|---|---|---|

| 1 | UR3机械臂 | 一切行动的执行者 |

| 2 | D435i | 执行者的眼睛 |

| 3 | Ubuntu深度学习环境 | 执行者思维的大脑 |

| 4 | 平面抓取平台 | 实验操作环境 |

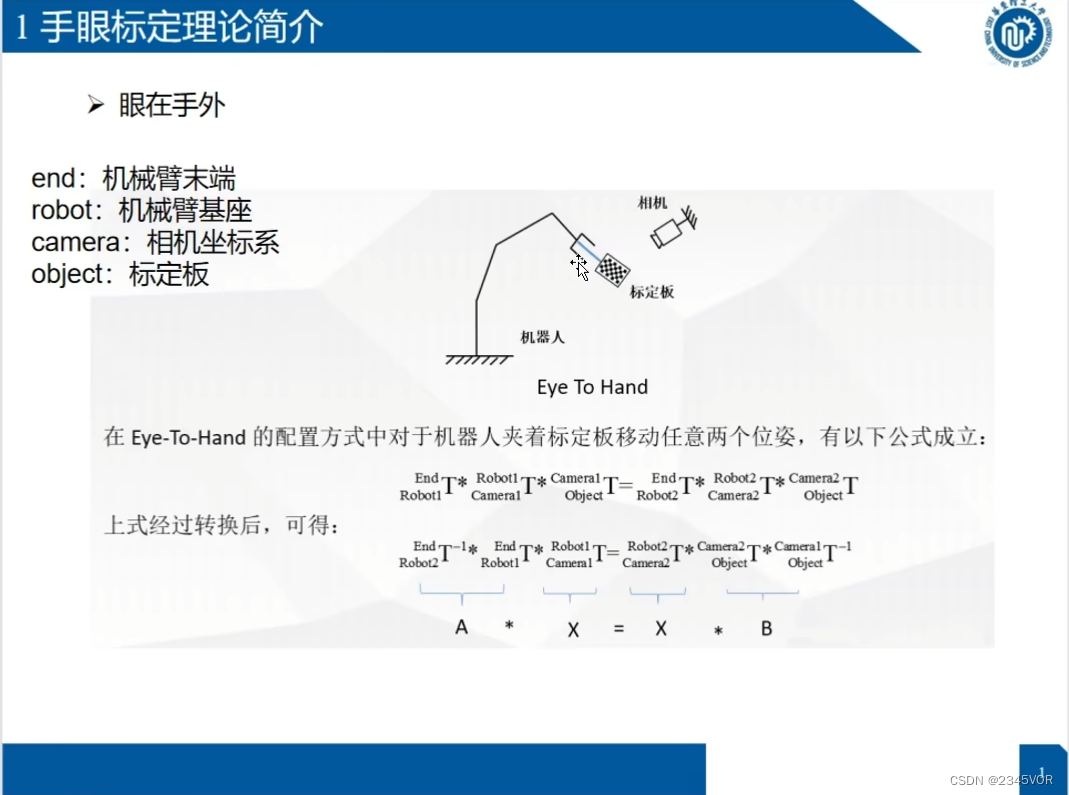

| 5 | 6*6黑白棋盘标定板 | 相机眼在手外标定 |

| 6 | 3x3x3cm 3D打印小方块 | 工件演员 |

代码支持

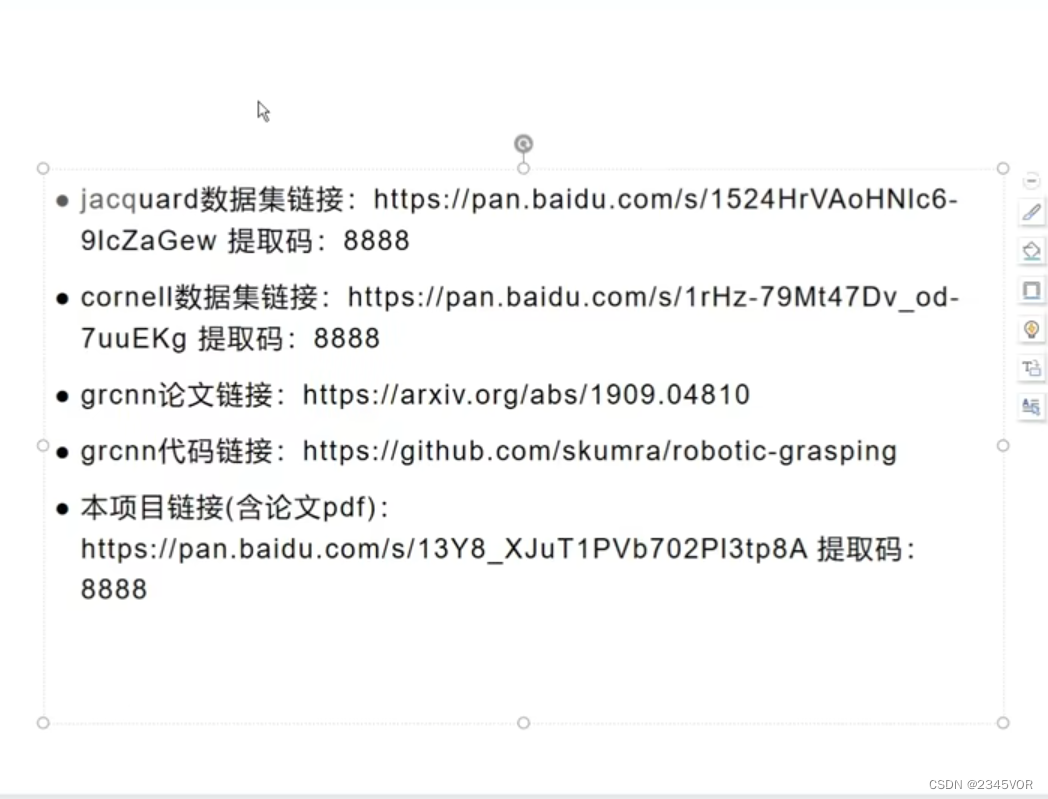

jacquard数据集:https://pan.baidu.com/s/1524HrVAoHNlc6-9lcZaGew

本UR5项目代码:https://pan.baidu.com/s/13Y8_XJuT1PVb702Pl3tp8A

提取码均为:8888

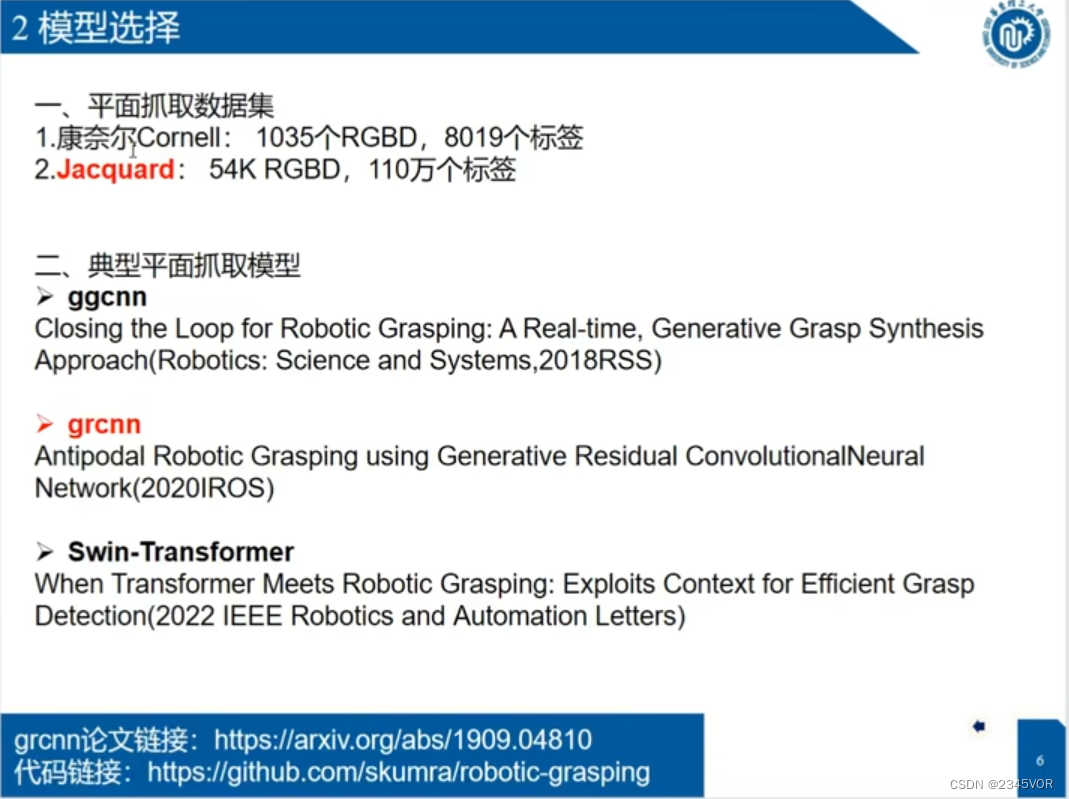

1. 概述

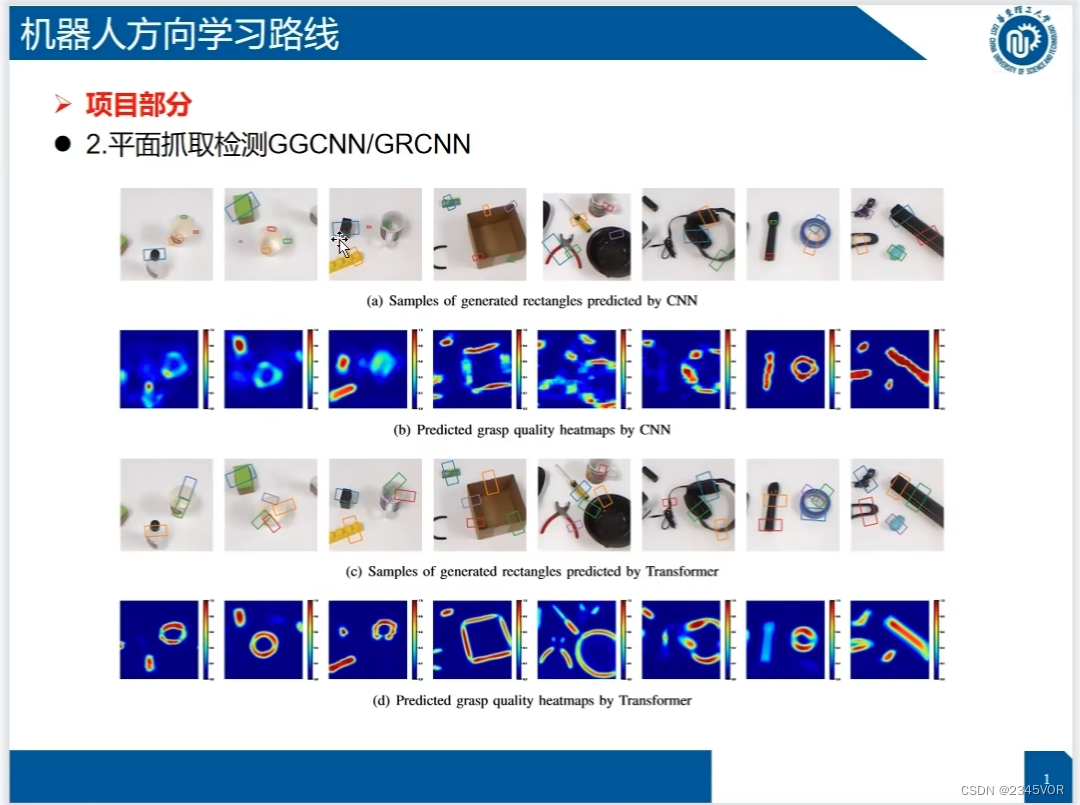

GR-CNN:https://paperswithcode.com/paper/antipodal-robotic-grasping-using-generative

2. 环境搭建及模型训练

GR-CNN:https://github.com/skumra/robotic-grasping

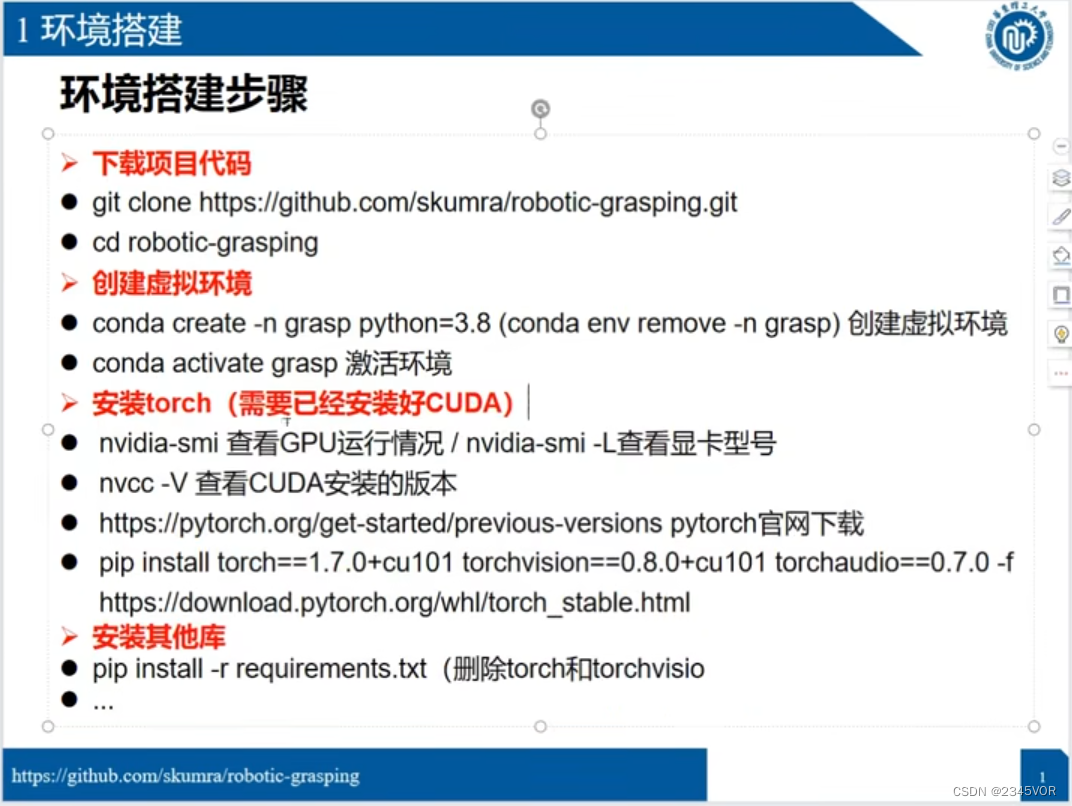

2.1下载源码创建环境

#下载robotic-grasping源码

git clone https://github.com/skumra/robotic-grasping.git

#切换到对应文件夹

cd robotic-grasping/

#创建python3.8的grasp1环境

conda creat -n grasp1 python=3.8

#激活grasp1环境

conda activate grasp1

#关闭grasp1环境

conda deactivate grasp1

2.2 安装torch

pythorch:cuda安装:https://pytorch.org/get-started/previous-versions/#wheel-14

#1.查看系统显卡信息,查看最高支持cuda版本

nvidia-smi

#2.查看已经安装的cuda版本

nvcc -V

#3.安装cuda,注意30系需要11以上

##NVIDIA 显卡30系11.0

conda install pytorch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0 cudatoolkit=11.0 -c pytorch

##NVIDIA 显卡20系及以下10.2

conda install pytorch==1.7.0 torchvision==0.8.1 torchaudio==0.7.0 cudatoolkit=10.2 -c pytorch

## CPU Only

conda install pytorch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0 cpuonly -c pytorch

#4.安装相关依赖库

pip install -r requirements.txt

#5.模型训练

## Cornell训练

python train_network.py --dataset cornell --dataset-path <Path To Dataset> --description training_cornell

## jacquard训练

python train_network.py --dataset jacquard --dataset-path '/home/robot/robotic-grasping/data/Jacquard' --description training_jacquard --use-dropout 0 --input-size 100

#6.出现requires the 'inagecodecs' package

pip install imagecodecs-lite

2.3 部分参考

深度学习反馈这个,是因为np.float从1.24起被删除。所用的代码是依赖于旧版本的Numpy。您可以:更新sklearn到一个不使用np.float的新版本(如果它存在)或者将你的Numpy版本降级到1.23.5.

操作:

pip uninstall numpy

pip install -U numpy==1.23.4

安装的库可参照

Package Version

------------------------------- -----------

absl-py 1.4.0

actionlib 1.12.1

aiohttp 3.8.1

aiosignal 1.3.1

angles 1.9.12

async-timeout 4.0.2

attrs 23.1.0

base_local_planner 1.16.7

blinker 1.6.2

bondpy 1.8.5

brotlipy 0.7.0

cachetools 5.3.1

camera_calibration 1.15.2

camera_calibration_parsers 1.11.13

catkin 0.7.29

certifi 2023.5.7

cffi 1.15.0

charset-normalizer 3.1.0

click 8.1.3

contourpy 1.0.7

controller_manager 0.18.4

controller_manager_msgs 0.18.4

cryptography 3.4.8

cv_bridge 1.13.1

cycler 0.11.0

dataclasses 0.6

diagnostic_analysis 1.9.7

diagnostic_common_diagnostics 1.9.7

diagnostic_updater 1.9.7

drone_wrapper 1.3.10

dynamic_reconfigure 1.6.5

fonttools 4.39.4

frozenlist 1.3.3

future 0.18.3

gazebo_plugins 2.8.7

gazebo_ros 2.8.7

gencpp 0.6.6

geneus 2.2.6

genlisp 0.4.16

genmsg 0.5.17

gennodejs 2.0.1

genpy 0.6.16

google-auth 2.19.1

google-auth-oauthlib 1.0.0

grpcio 1.54.2

idna 3.4

image_geometry 1.13.1

imagecodecs 2023.3.16

imagecodecs-lite 2022.9.26

imageio 2.30.0

importlib-metadata 6.6.0

importlib-resources 5.12.0

interactive-markers 1.11.5

joint_state_publisher 1.12.15

joint_state_publisher_gui 1.12.15

kdl-parser-py 1.13.3

kiwisolver 1.4.4

laser_geometry 1.6.7

lazy_loader 0.2

Markdown 3.4.3

MarkupSafe 2.1.3

matplotlib 3.7.1

mavros 1.15.0

message_filters 1.14.13

mkl-fft 1.3.6

mkl-random 1.2.2

mkl-service 2.4.0

moveit-core 1.0.11

moveit_ros_planning_interface 1.0.11

moveit_ros_visualization 1.0.11

multidict 6.0.2

networkx 3.1

numpy 1.23.4

oauthlib 3.2.2

opencv-python 4.7.0.72

packaging 23.1

Pillow 9.4.0

pip 23.0.1

protobuf 3.20.3

py-trees 0.6.9

pyasn1 0.5.0

pyasn1-modules 0.3.0

pycparser 2.21

PyJWT 2.7.0

pyOpenSSL 20.0.1

pyparsing 3.0.9

pyrealsense2 2.53.1.4623

PySocks 1.7.1

python-dateutil 2.8.2

python_qt_binding 0.4.4

pyu2f 0.1.5

PyWavelets 1.4.1

qt-dotgraph 0.4.2

qt-gui 0.4.2

qt-gui-cpp 0.4.2

qt-gui-py-common 0.4.2

requests 2.31.0

requests-oauthlib 1.3.1

resource_retriever 1.12.7

rosbag 1.14.13

rosboost-cfg 1.14.9

rosclean 1.14.9

roscreate 1.14.9

rosgraph 1.14.13

roslaunch 1.14.13

roslib 1.14.9

roslint 0.11.2

roslz4 1.14.13

rosmake 1.14.9

rosmaster 1.14.13

rosmsg 1.14.13

rosnode 1.14.13

rosparam 1.14.13

rospy 1.14.13

rosserial_arduino 0.8.0

rosserial_client 0.8.0

rosserial_python 0.8.0

rosservice 1.14.13

rostest 1.14.13

rostopic 1.14.13

rosunit 1.14.9

roswtf 1.14.13

rqt_action 0.4.9

rqt_bag 0.5.1

rqt_bag_plugins 0.5.1

rqt_console 0.4.9

rqt_controller_manager 0.18.4

rqt_dep 0.4.9

rqt_drone_teleop 1.3.10

rqt_ez_publisher 0.5.0

rqt_graph 0.4.11

rqt_ground_robot_teleop 1.3.10

rqt_gui 0.5.3

rqt_gui_py 0.5.3

rqt-image-view 0.4.17

rqt_joint_trajectory_controller 0.17.3

rqt_joint_trajectory_plot 0.0.5

rqt_launch 0.4.8

rqt_launchtree 0.2.0

rqt_logger_level 0.4.8

rqt-moveit 0.5.10

rqt_msg 0.4.8

rqt_multiplot 0.0.10

rqt_nav_view 0.5.7

rqt_paramedit 1.0.1

rqt_play_motion_builder 1.0.2

rqt_plot 0.4.13

rqt_pose_view 0.5.8

rqt_publisher 0.4.8

rqt_py_common 0.5.3

rqt_py_console 0.4.8

rqt_py_trees 0.3.1

rqt-reconfigure 0.5.4

rqt_robot_dashboard 0.5.7

rqt-robot-monitor 0.5.14

rqt_robot_steering 0.5.10

rqt_rotors 2.2.3

rqt_runtime_monitor 0.5.7

rqt-rviz 0.7.0

rqt_service_caller 0.4.8

rqt_shell 0.4.9

rqt_srv 0.4.8

rqt_tf_tree 0.6.0

rqt_top 0.4.8

rqt_topic 0.4.11

rqt_virtual_joy 0.1.2

rqt_web 0.4.8

rsa 4.9

rviz 1.13.29

scikit-image 0.21.0

scipy 1.9.3

sensor-msgs 1.12.8

setuptools 67.8.0

six 1.16.0

smach 2.0.1

smach_ros 2.0.1

smclib 1.8.5

srdfdom 0.5.2

tensorboard 2.13.0

tensorboard-data-server 0.7.0

tensorboardX 2.6

tf 1.12.1

tf_conversions 1.12.1

tf2_geometry_msgs 0.6.5

tf2_kdl 0.6.5

tf2_py 0.6.5

tf2_ros 0.6.5

tifffile 2023.4.12

topic_tools 1.14.13

torch 1.7.1+cu110

torchaudio 0.7.2

torchsummary 1.5.1

torchvision 0.8.2+cu110

typing_extensions 4.5.0

unique_id 1.0.6

urdfdom-py 0.4.6

urllib3 1.26.16

Werkzeug 2.3.4

wheel 0.38.4

xacro 1.13.19

yarl 1.7.2

zipp 3.15.0

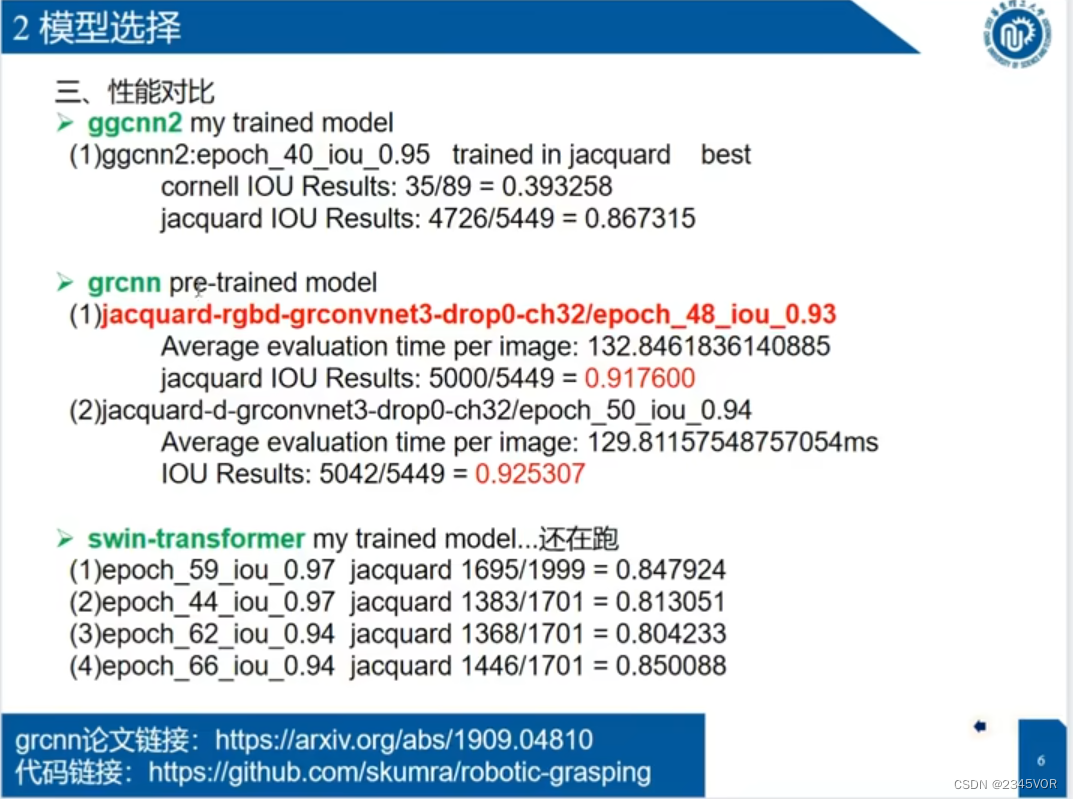

2.4 模型评估

python evaluate.py --network 'trained-models/jacquard-rgbd-grconvnet3-drop0-ch32/epoch_48_iou_0.93' --dataset jacquard --dataset-path '/home/robot/robotic-grasping/data/Jacquard' --iou-eval --input-size 100

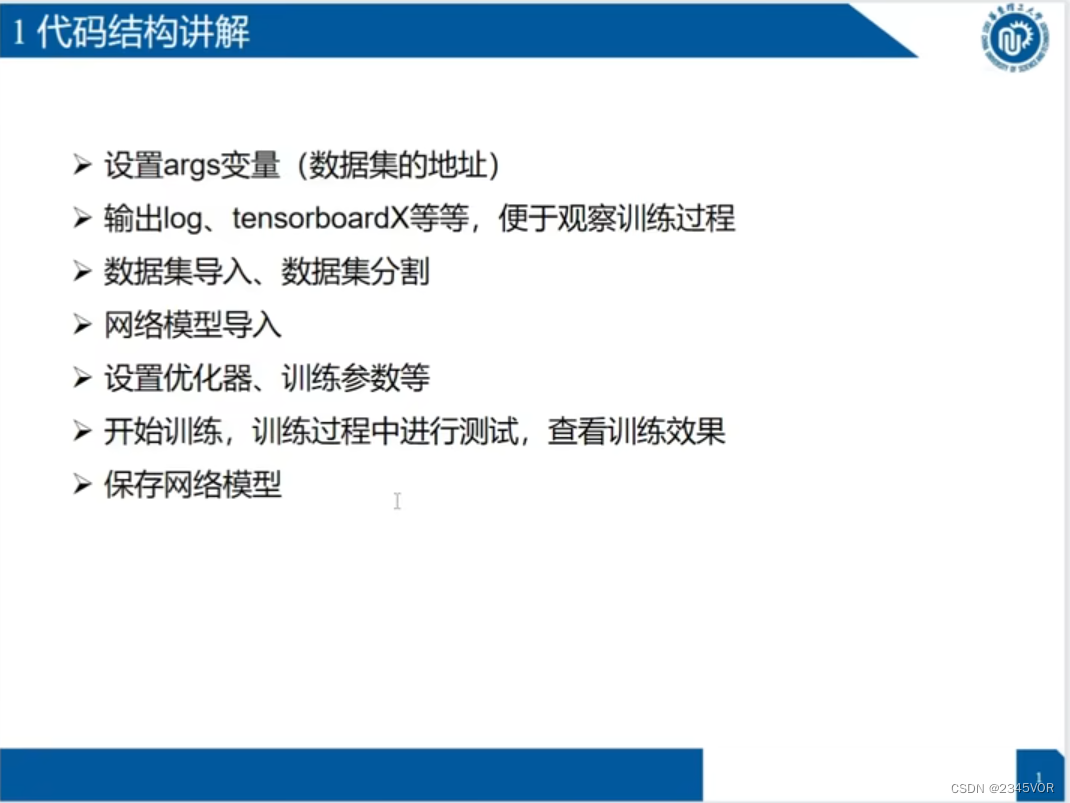

3. GR-CNN源码讲解

3.1 硬件连接

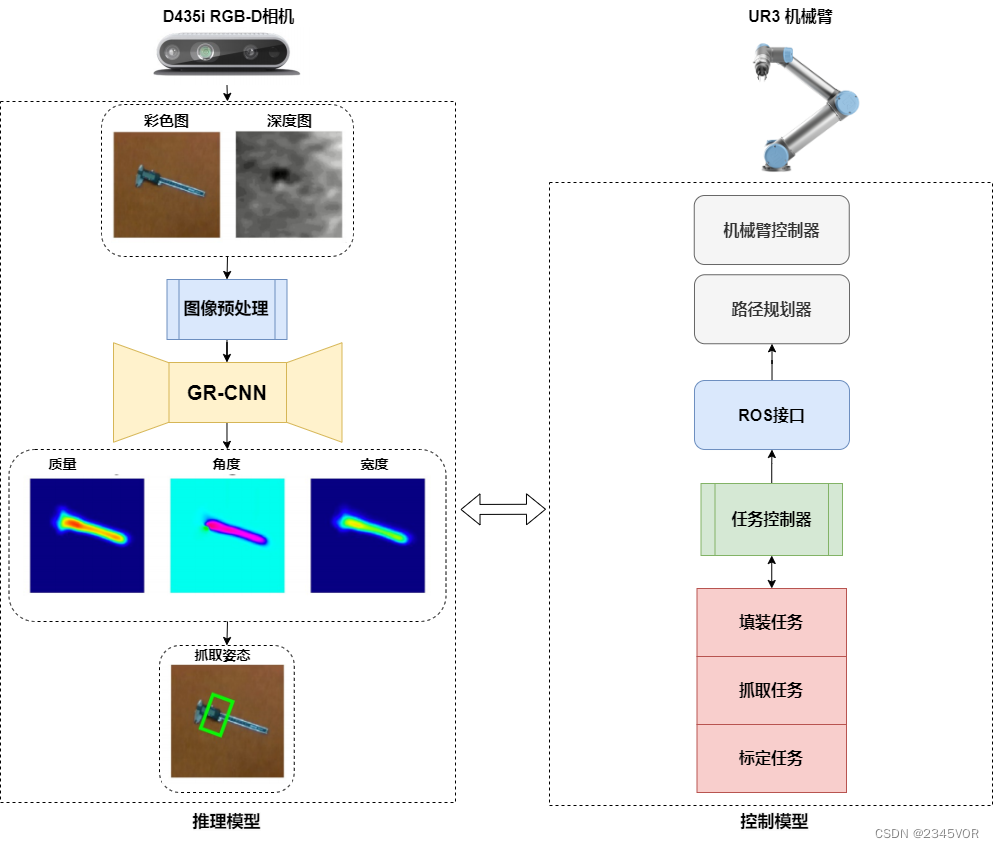

由两个主要模块组成:推理模块和控制模块。推理模块从RGB-D摄像机中预处理获取场景的RGB和对齐的深度图像。这些图像经过预处理,以匹配GR-CNN的输入格式。该网络生成高质量、角度和宽度的图像,这些图像被用于生成抓取的姿态信息。控制模块由一个任务控制器组成,该任务控制器使用由推理模块生成的抓取姿态来准备和执行一个计划,来执行挑选和放置任务。它通过一个路径规划器和控制器,通过一个ROS接口向机器人传达所需的动作。

3.2 网络结构

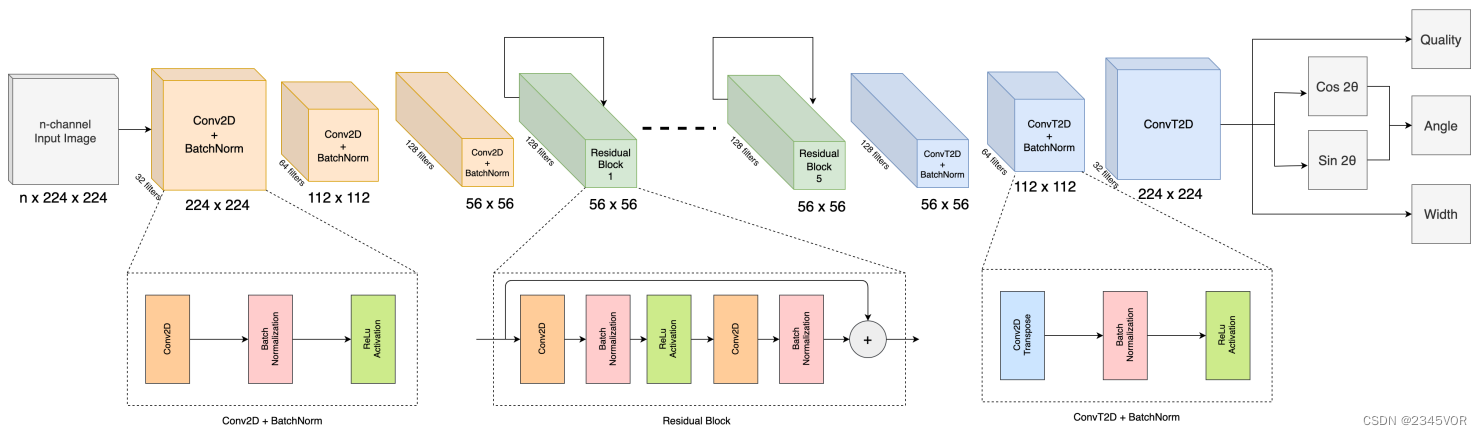

GR-CNN模型是一种生成架构,它接受了一个n个通道的输入图像,并以三幅图像的形式生成像素级的抓取。n通道图像经过三个卷积层,然后经过5个残差层和卷积转置层,生成4幅图像。这些输出图像包括抓取质量分数,所获得的角度由cos 2Θ和sin 2Θ以及末端执行器的所需宽度。

GR-CNN模型是一种生成架构,它接受了一个n个通道的输入图像,并以三幅图像的形式生成像素级的抓取。n通道图像经过三个卷积层,然后经过5个残差层和卷积转置层,生成4幅图像。这些输出图像包括抓取质量分数,所获得的角度由cos 2Θ和sin 2Θ以及末端执行器的所需宽度。

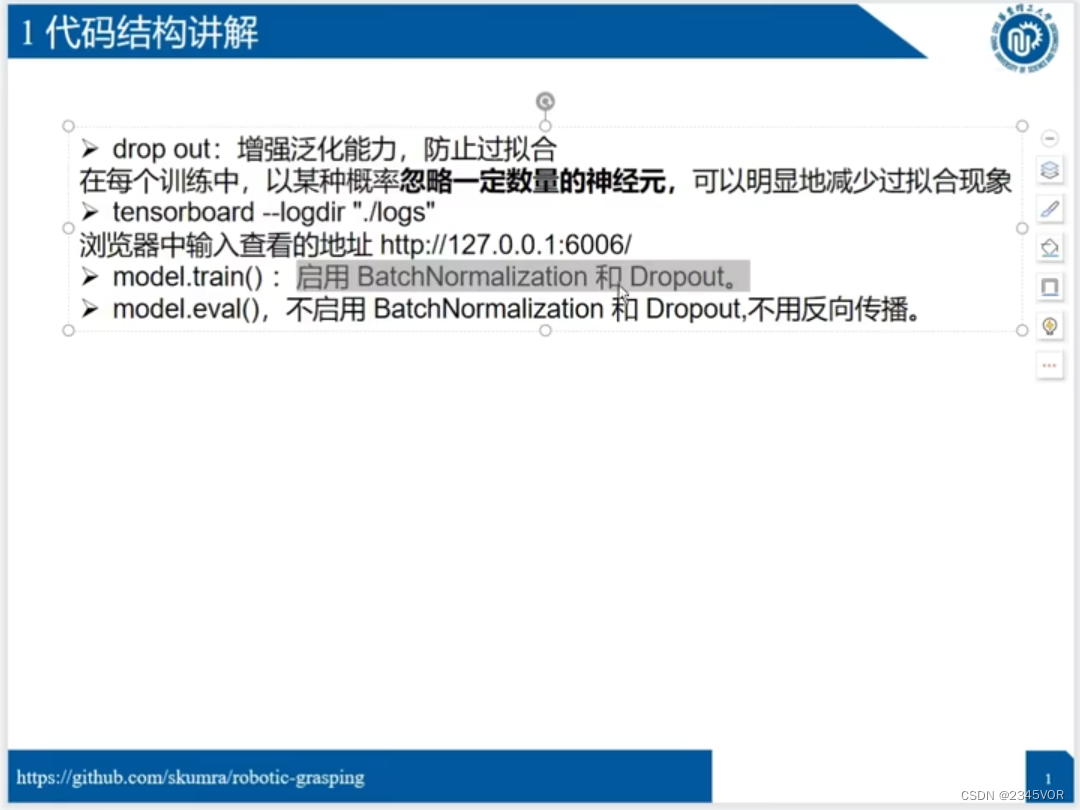

3.3 查看训练日志

采用tensorboard查看训练日志

sudo pip install tensorflow

tensorboard --logdir="./logs"

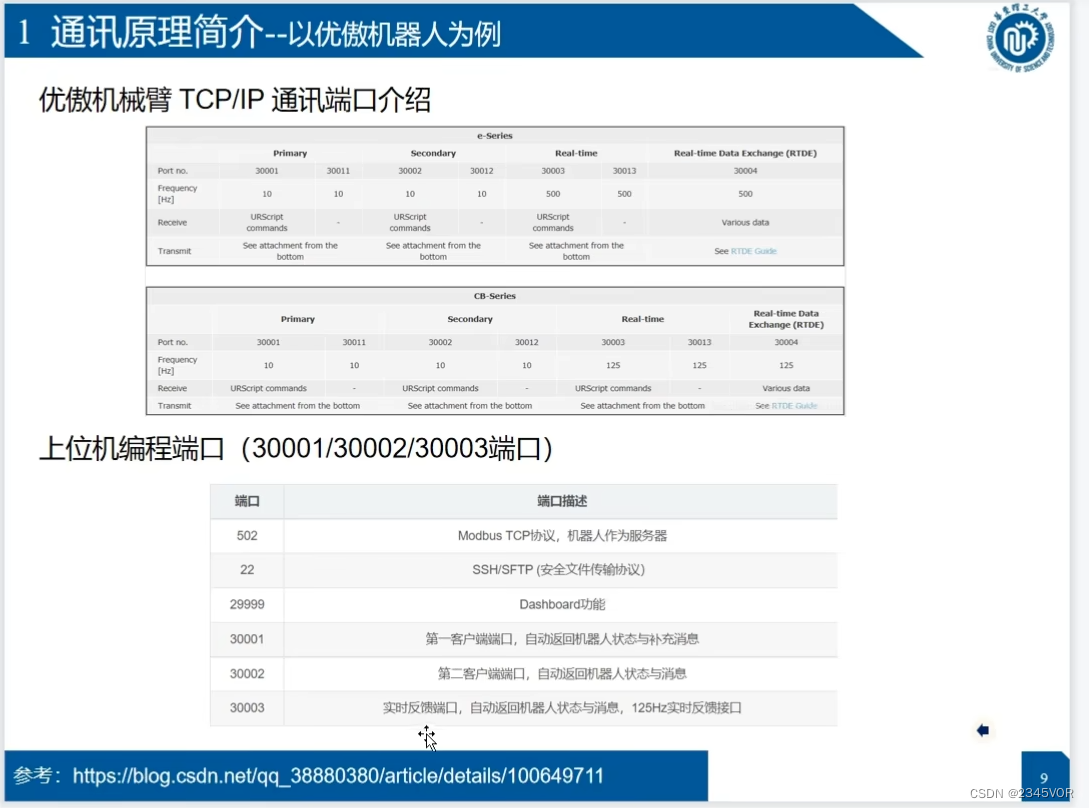

4. 上位机与机械臂通讯

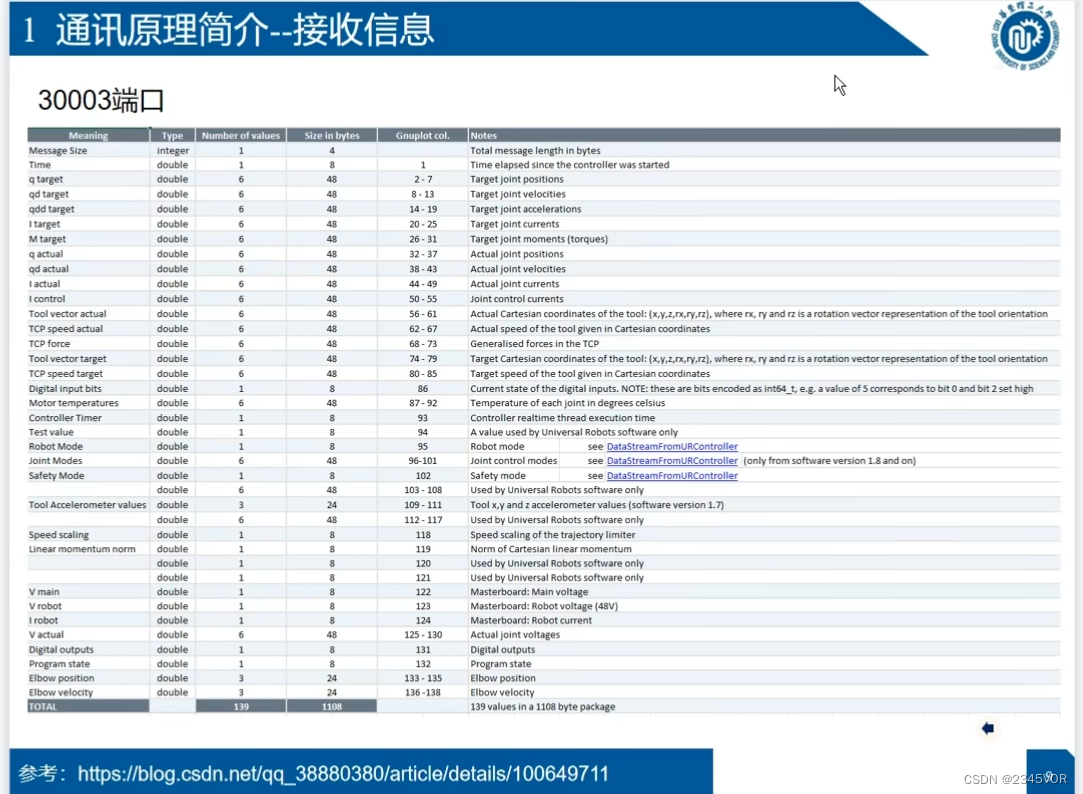

具体通讯协议参照:UR机器人通信端口和协议

UR机器人作为目前使用广泛的协作机器人,其开放了基于TCP/IP的远程控制功能,提供了多个多类型的端口,用于工业总线控制,或者用户自行编程控制,以下记录整理此方面的信息。

30003 实时反馈端口 客户端可发送脚本代安全文件传输协议,服务器自动以125Hz的频率返回机器人状态与消息到客户端

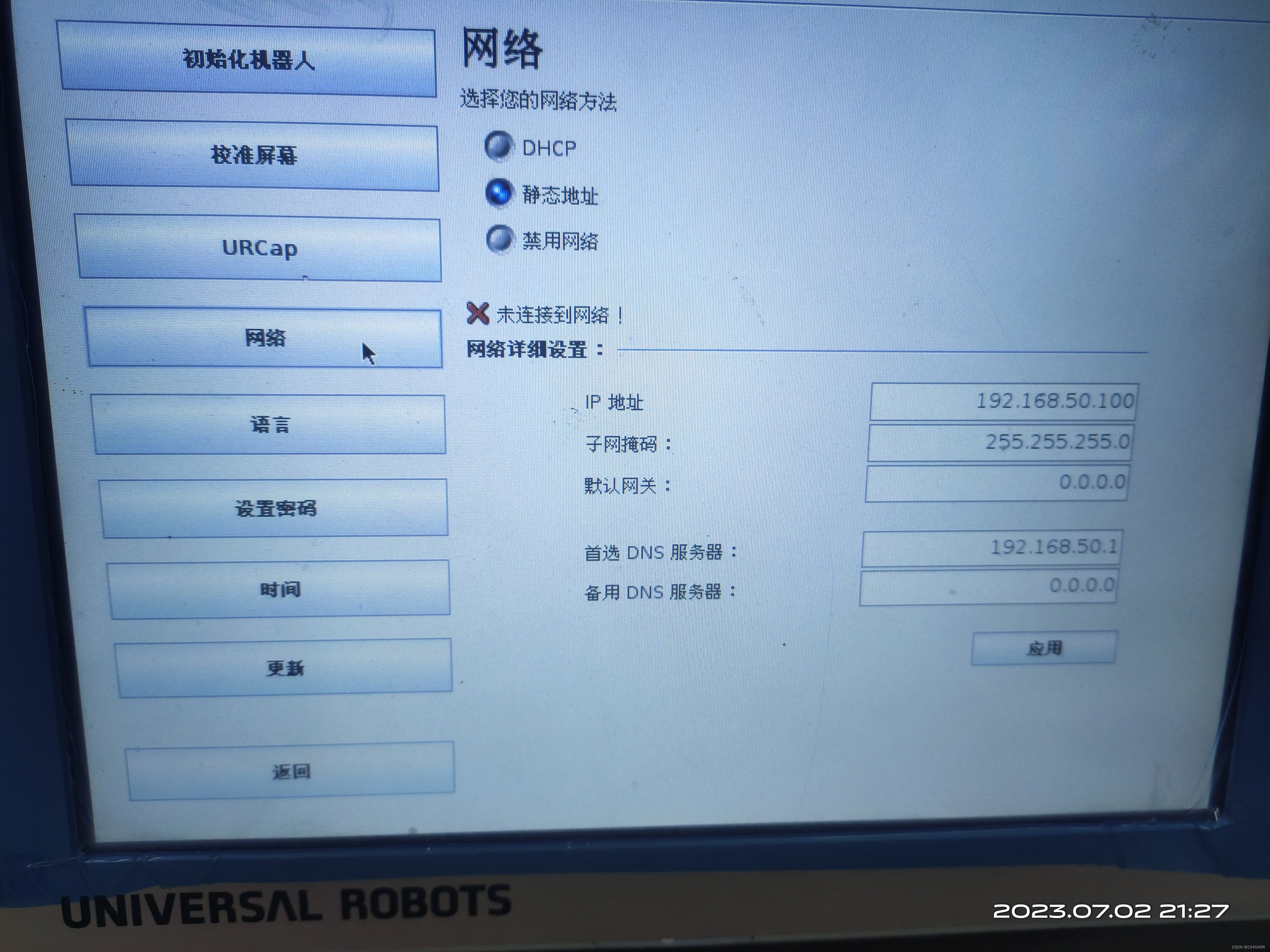

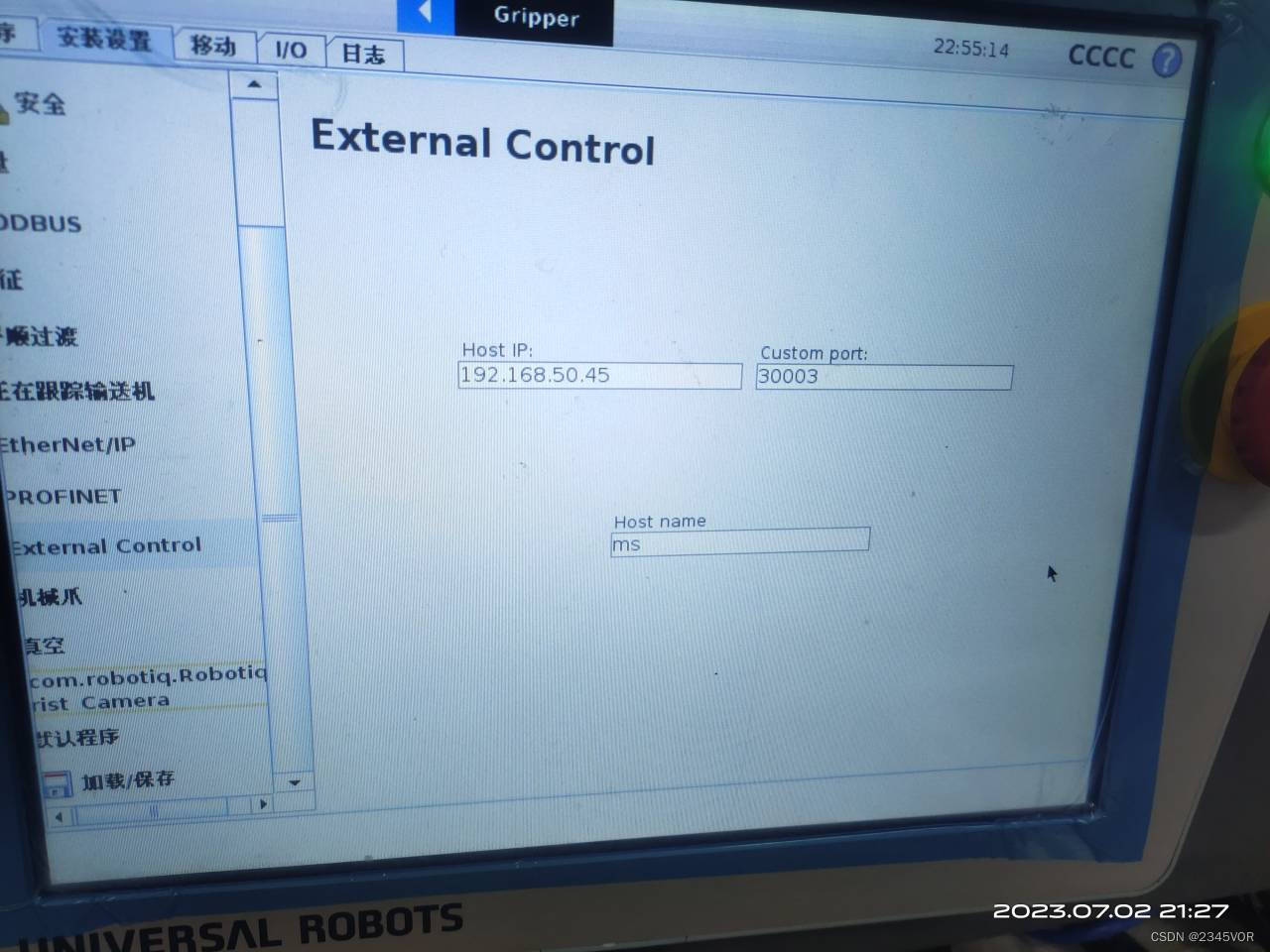

4.1 UR设置端口

还需设置拓展控制的IP端口哦,Host name是Ubuntu电脑名字

还需设置拓展控制的IP端口哦,Host name是Ubuntu电脑名字

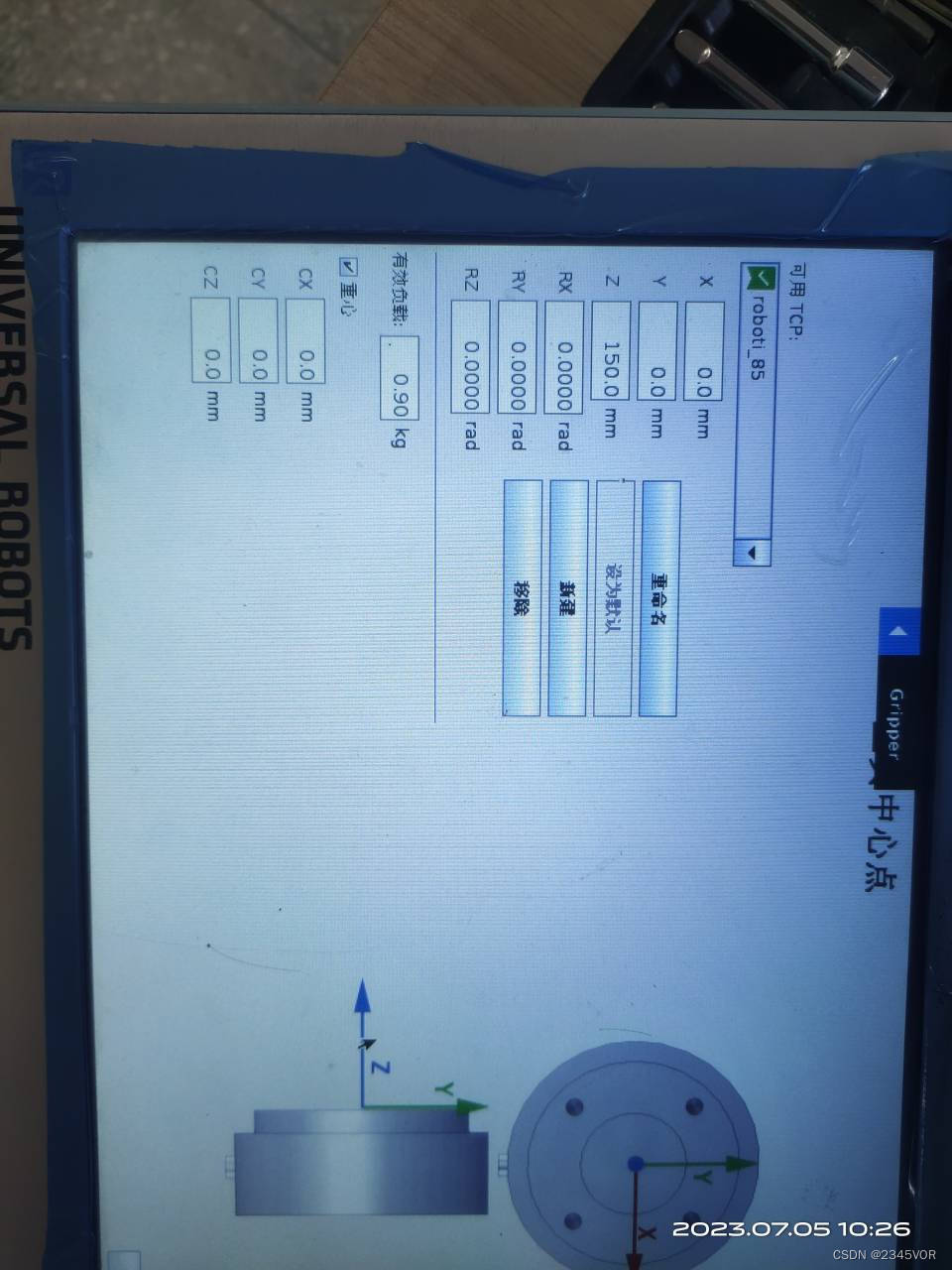

4.2 设置TCP

测量法兰盘到工具中心点位置,配置工具重量

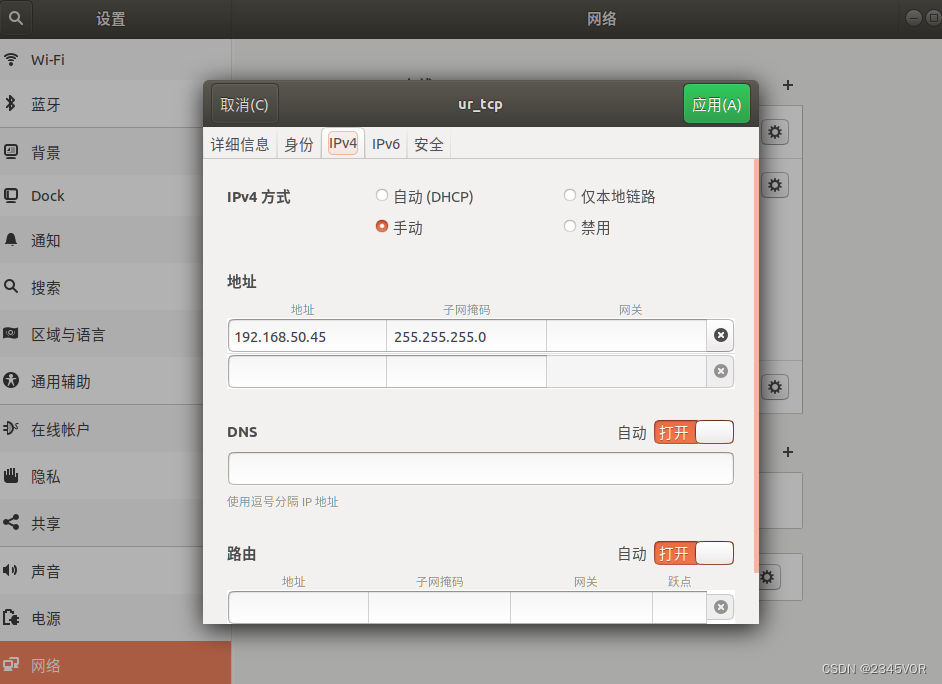

4.3 电脑设置

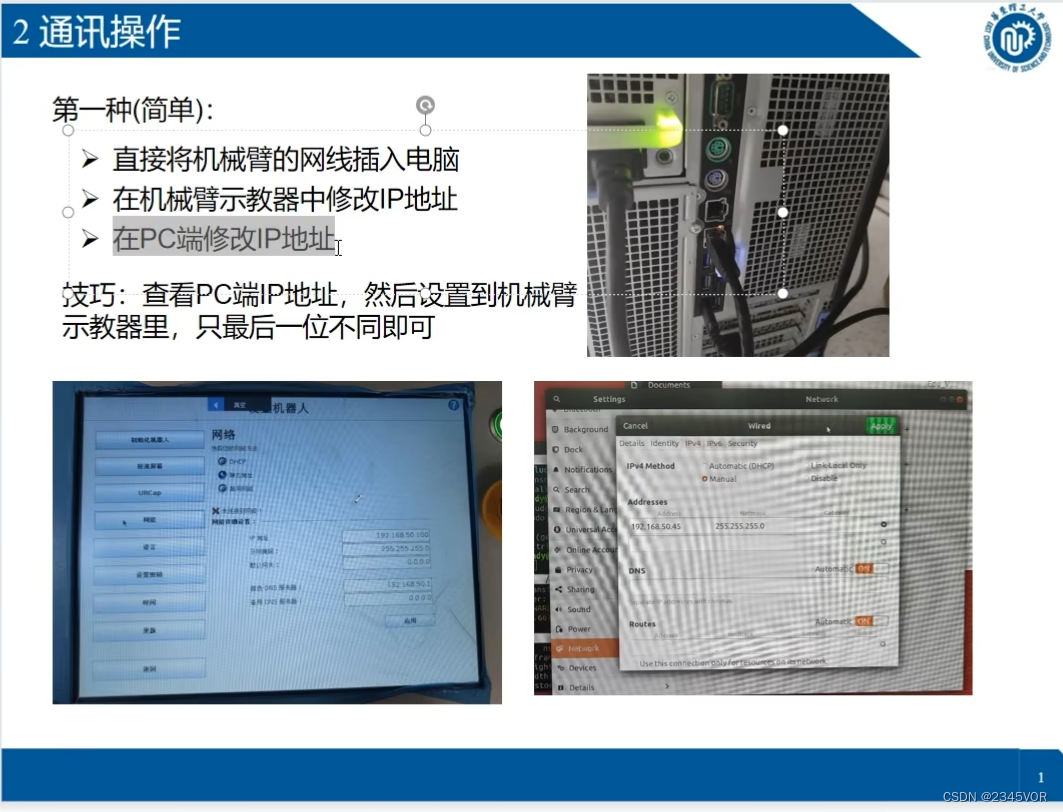

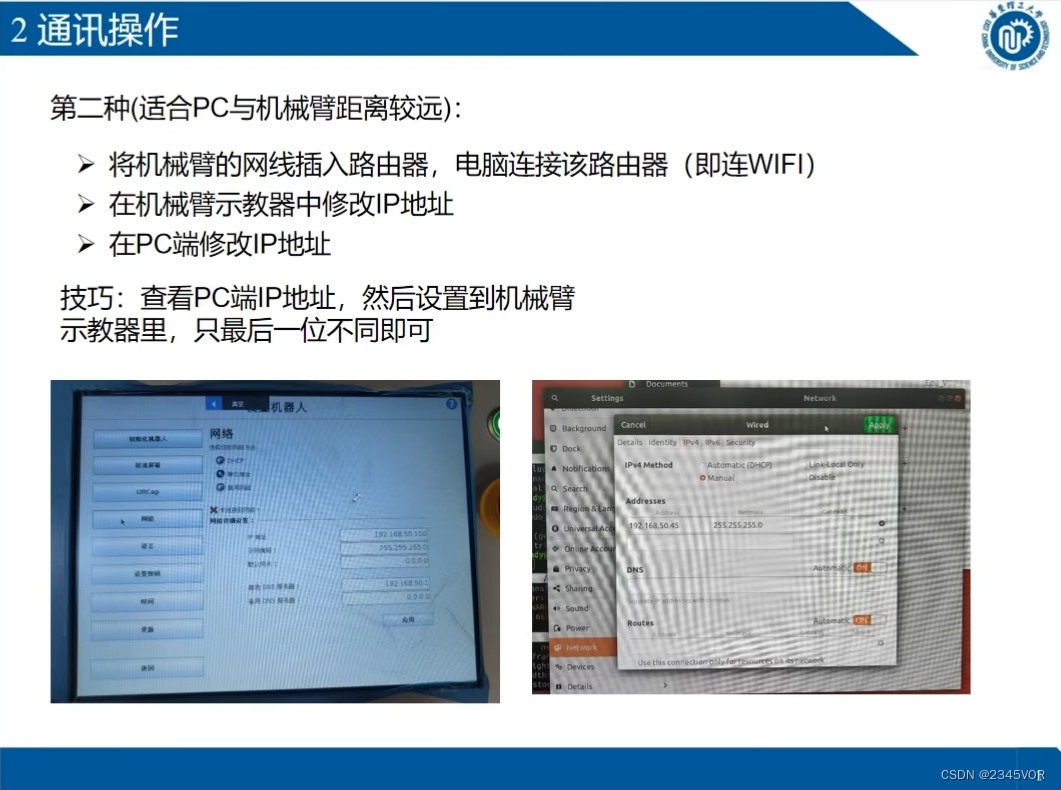

在网络部分设置IPv4参数,我采用台式机,联网方法只有网口所以被UR网线占用,推荐手机通过用USB共享网络,这样一边可以上网一边调试,巧妙避免拔来吧去!

4.4 设备安装

底盘采用30x30mm型材,UR3反向相对工作平面,相机固定在工作平面上方

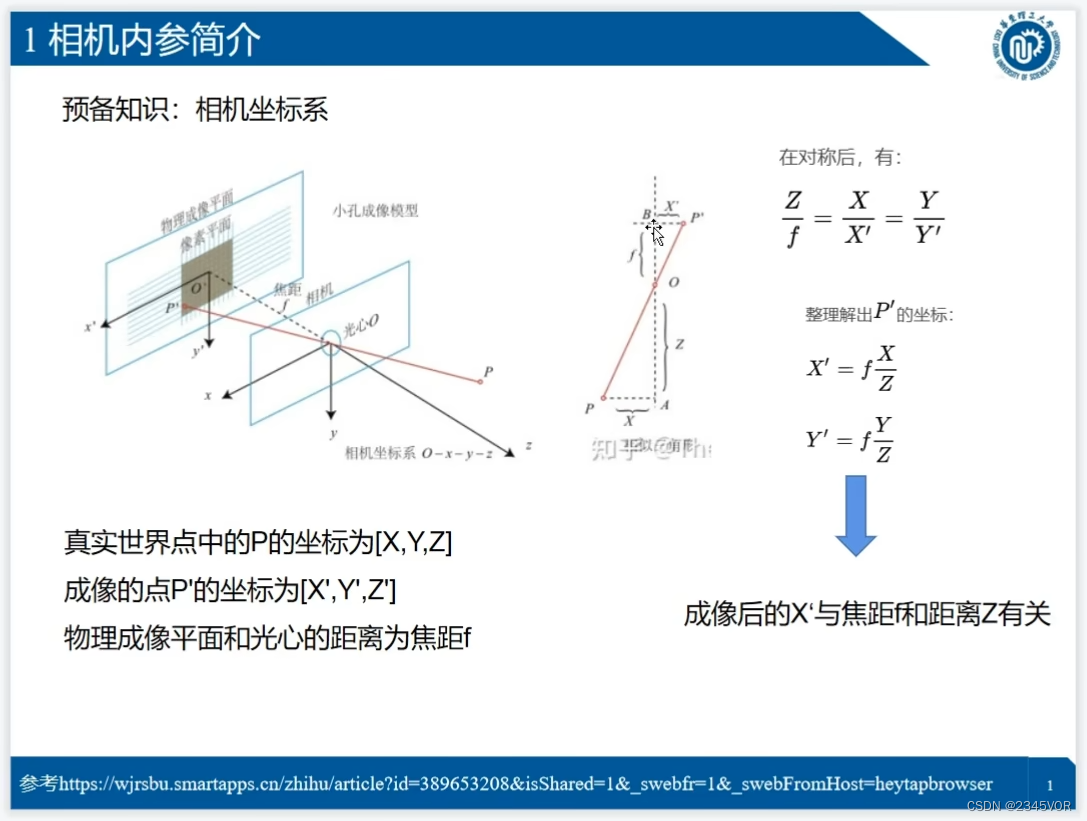

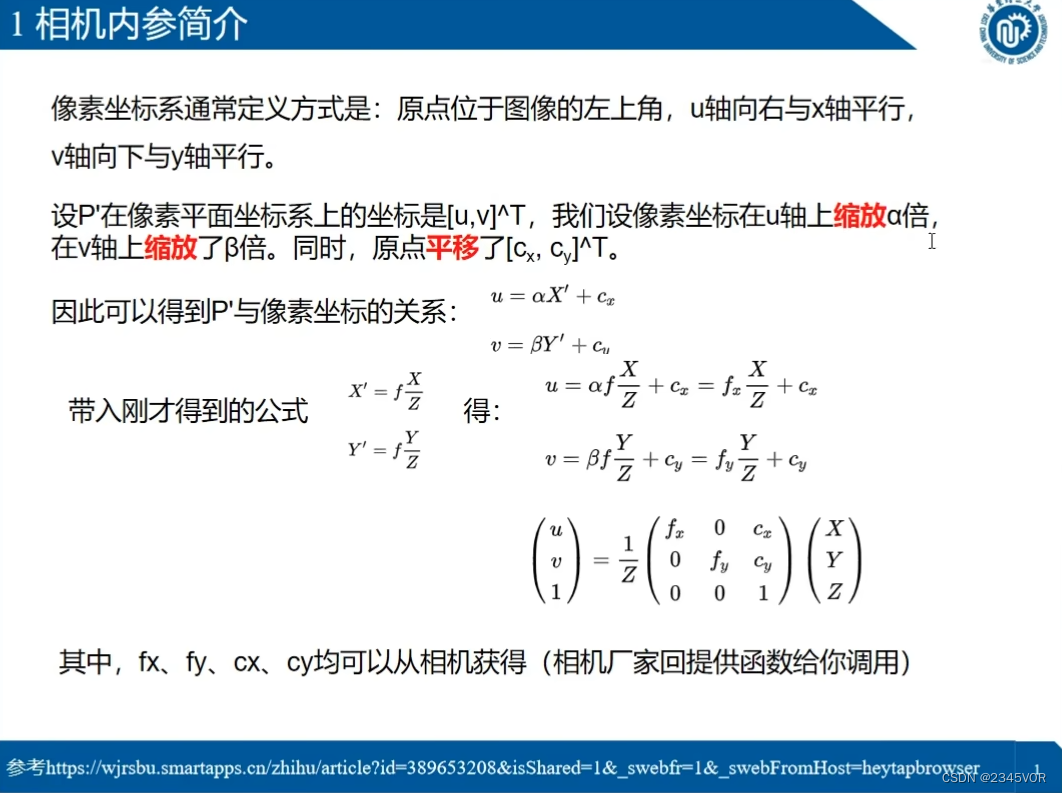

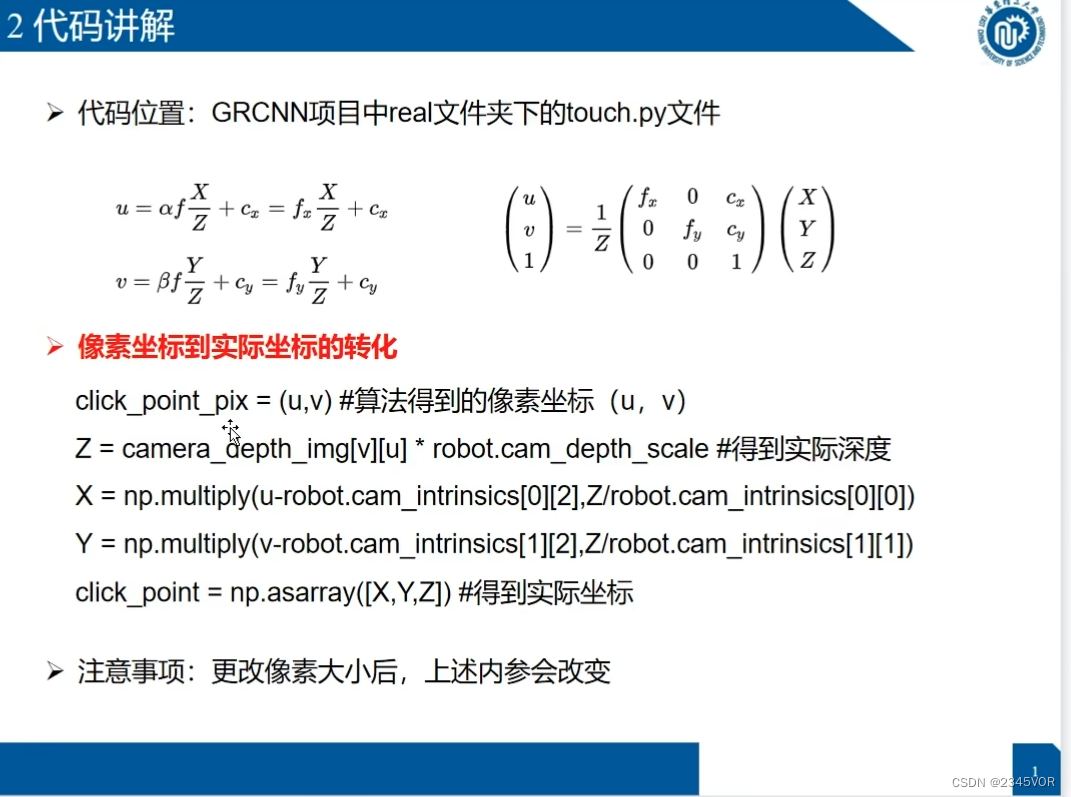

5. 相机内参的原理及应用

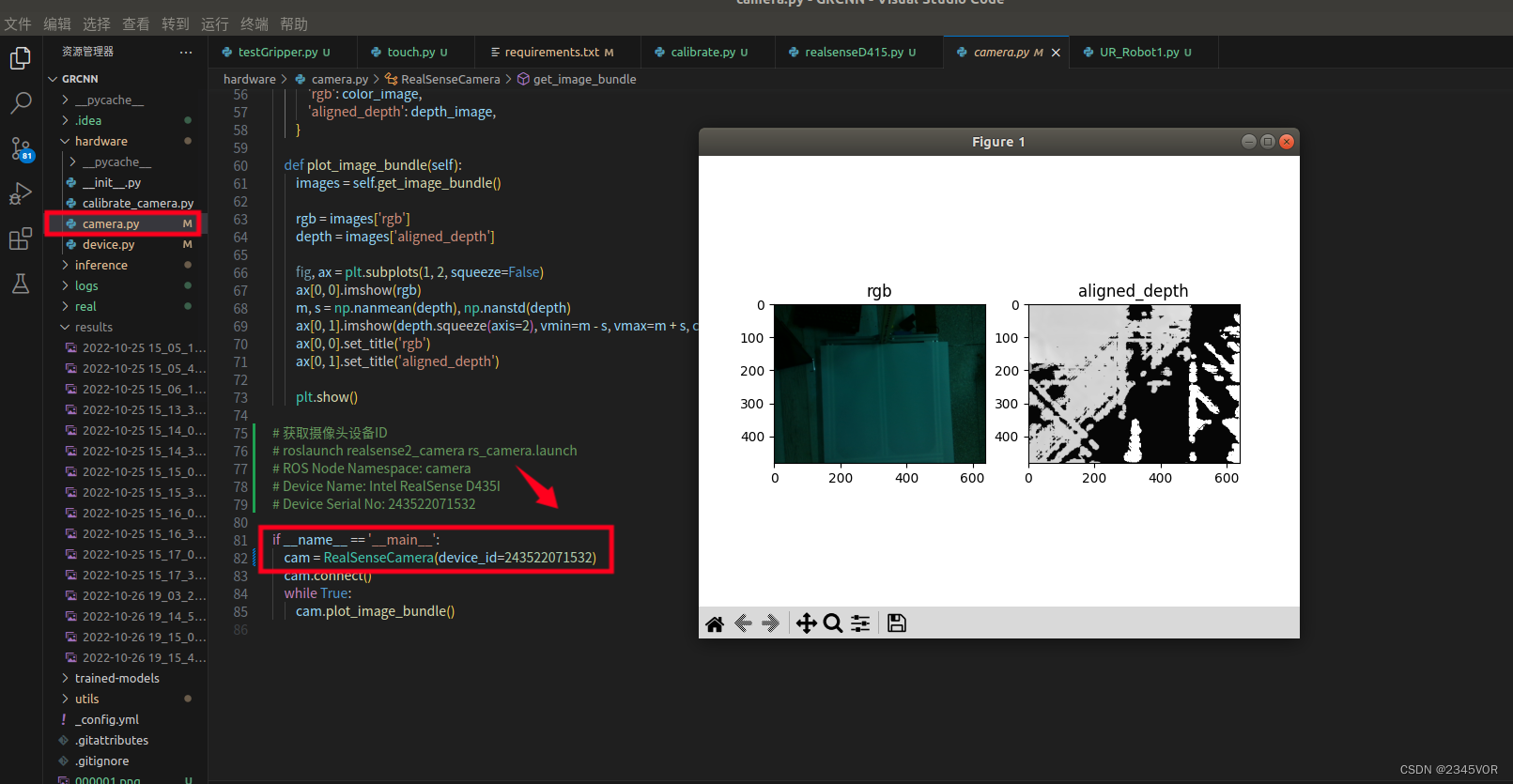

5.1 配置D435i的id

运行camera.py时,需要配置D435i的id

我采用方法是

【基于Ubuntu18.04+Melodic的realsense D435i安装】

# 获取摄像头设备ID

# roslaunch realsense2_camera rs_camera.launch

# ROS Node Namespace: camera

# Device Name: Intel RealSense D435I

# Device Serial No: 243522071532

5.2 注释伪深度

记得修改realsenseD415.py,45行注释,不然后期标定会处理数据维度不一致。

import numpy as np

import pyrealsense2 as rs

import cv2

class Camera(object):

def __init__(self,width=640,height=480,fps=15):

self.im_height = height

self.im_width = width

self.fps = fps

self.intrinsics = None

self.scale = None

self.pipeline = None

self.connect()

# color_img, depth_img = self.get_data()

#print(color_img, depth_img)

def connect(self):

# Configure depth and color streams

self.pipeline = rs.pipeline()

config = rs.config()

config.enable_stream(rs.stream.depth, self.im_width, self.im_height, rs.format.z16, self.fps)

config.enable_stream(rs.stream.color, self.im_width, self.im_height, rs.format.bgr8, self.fps)

# Start streaming

cfg = self.pipeline.start(config)

# Determine intrinsics

rgb_profile = cfg.get_stream(rs.stream.color)

self.intrinsics = self.get_intrinsics(rgb_profile)

# Determine depth scale

self.scale = cfg.get_device().first_depth_sensor().get_depth_scale()

print("camera depth scale:",self.scale)

print("D415 have connected ...")

def get_data(self):

# Wait for a coherent pair of frames: depth and color

frames = self.pipeline.wait_for_frames()

# align

align = rs.align(align_to=rs.stream.color)

aligned_frames = align.process(frames)

aligned_depth_frame = aligned_frames.get_depth_frame()

color_frame = aligned_frames.get_color_frame()

# no align

# depth_frame = frames.get_depth_frame()

# color_frame = frames.get_color_frame()

# Convert images to numpy arrays

depth_image = np.asanyarray(aligned_depth_frame.get_data(),dtype=np.float32)

# depth_image *= self.scale

# depth_image = np.expand_dims(depth_image, axis=2)

color_image = np.asanyarray(color_frame.get_data())

return color_image, depth_image

def plot_image(self):

color_image,depth_image = self.get_data()

# Apply colormap on depth image (image must be converted to 8-bit per pixel first)

depth_colormap = cv2.applyColorMap(cv2.convertScaleAbs(depth_image, alpha=0.03), cv2.COLORMAP_JET)

depth_colormap_dim = depth_colormap.shape

color_colormap_dim = color_image.shape

# If depth and color resolutions are different, resize color image to match depth image for display

if depth_colormap_dim != color_colormap_dim:

resized_color_image = cv2.resize(color_image, dsize=(depth_colormap_dim[1], depth_colormap_dim[0]),

interpolation=cv2.INTER_AREA)

images = np.hstack((resized_color_image, depth_colormap))

else:

images = np.hstack((color_image, depth_colormap))

# Show images

cv2.namedWindow('RealSense', cv2.WINDOW_AUTOSIZE)

cv2.imshow('RealSense', images)

# cv2.imwrite('color_image.png', color_image)

cv2.waitKey(5000)

def get_intrinsics(self,rgb_profile):

raw_intrinsics = rgb_profile.as_video_stream_profile().get_intrinsics()

print("camera intrinsics:", raw_intrinsics)

# camera intrinsics form is as follows.

#[[fx,0,ppx],

# [0,fy,ppy],

# [0,0,1]]

# intrinsics = np.array([615.284,0,309.623,0,614.557,247.967,0,0,1]).reshape(3,3) #640 480

intrinsics = np.array([raw_intrinsics.fx, 0, raw_intrinsics.ppx, 0, raw_intrinsics.fy, raw_intrinsics.ppy, 0, 0, 1]).reshape(3, 3)

return intrinsics

if __name__== '__main__':

mycamera = Camera()

# mycamera.get_data()

mycamera.plot_image()

# print(mycamera.intrinsics)

6. 手眼标定

6.1 测试UR3和夹爪的代码

UR_Robot.py,大家根据自己的UR环境配置相关限制参数,我的UR3活动半径是0.5m,后期设置的位置都必须受限,

如果

self.cam_pose = np.loadtxt('camera_pose.txt', delimiter=' ')

self.cam_depth_scale = np.loadtxt('camera_depth_scale.txt', delimiter=' ')

涉及这一块报错,可先注释,测试UR机械臂和robotiq_85的控制是否有效,后期标定后恢复。

#coding=utf8

import time

import copy

import socket

import struct

import numpy as np

import math

from robotiq_gripper import RobotiqGripper

from realsenseD415 import Camera

class UR_Robot1:

def __init__(self, tcp_host_ip="192.168.50.100", tcp_port=30003, workspace_limits=None,

is_use_robotiq85=True, is_use_camera=True):

# Init varibles

if workspace_limits is None:

workspace_limits = [[-0.5, 0.5], [-0.5, 0.5], [0.015, 0.5]]#[[-0.7, 0.7], [-0.7, 0.7], [0.00, 0.6]]change

self.workspace_limits = workspace_limits

self.tcp_host_ip = tcp_host_ip

self.tcp_port = tcp_port

self.is_use_robotiq85 = is_use_robotiq85

self.is_use_camera = is_use_camera

# UR3 robot configuration

# Default joint/tool speed configuration

self.joint_acc = 1.4 # Safe: 1.4 8

self.joint_vel = 1.05 # Safe: 1.05 3

# Joint tolerance for blocking calls

self.joint_tolerance = 0.01

# Default tool speed configuration

self.tool_acc = 0.5 # Safe: 0.5

self.tool_vel = 0.2 # Safe: 0.2

# Tool pose tolerance for blocking calls

self.tool_pose_tolerance = [0.002, 0.002, 0.002, 0.01, 0.01, 0.01]

# robotiq85 gripper configuration

if(self.is_use_robotiq85):

# reference https://gitlab.com/sdurobotics/ur_rtde

# Gripper activate

self.gripper = RobotiqGripper()

self.gripper.connect(self.tcp_host_ip, 63352) # don't change the 63352 port

self.gripper._reset()

print("Activating gripper...")

self.gripper.activate()

time.sleep(1.5)

# realsense configuration

if(self.is_use_camera):

# Fetch RGB-D data from RealSense camera

self.camera = Camera()

self.cam_intrinsics = self.camera.intrinsics # get camera intrinsics

# self.cam_intrinsics = np.array([615.284,0,309.623,0,614.557,247.967,0,0,1]).reshape(3,3)

# # Load camera pose (from running calibrate.py), intrinsics and depth scale

self.cam_pose = np.loadtxt('camera_pose.txt', delimiter=' ')

self.cam_depth_scale = np.loadtxt('camera_depth_scale.txt', delimiter=' ')

# Default robot home joint configuration (the robot is up to air)

self.home_joint_config = [-(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

-(0 / 360.0) * 2 * np.pi, 0.0]

# test

self.testRobot()

# Test for robot controlmove_and_wait_for_pos

def testRobot(self):

try:

print("Test for robot...")

self.move_j([-(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

-(0 / 360.0) * 2 * np.pi, 0.0])# return home

# self.move_j([(57.04 / 360.0) * 2 * np.pi, (-65.26/ 360.0) * 2 * np.pi,

# (73.52/ 360.0) * 2 * np.pi, (-100.89/ 360.0) * 2 * np.pi,

# (-86.93/ 360.0) * 2 * np.pi, (-0.29/360)*2*np.pi])# go to top of aim

# self.open_gripper()

# self.move_j([(57.03 / 360.0) * 2 * np.pi, (-56.67 / 360.0) * 2 * np.pi,

# (88.72 / 360.0) * 2 * np.pi, (-124.68 / 360.0) * 2 * np.pi,

# (-86.96/ 360.0) * 2 * np.pi, (-0.3/ 360) * 2 * np.pi])#fall down to approach aim

# self.close_gripper()

# self.move_j([(57.04 / 360.0) * 2 * np.pi, (-65.26 / 360.0) * 2 * np.pi,

# (73.52 / 360.0) * 2 * np.pi, (-100.89 / 360.0) * 2 * np.pi,

# (-86.93 / 360.0) * 2 * np.pi, (-0.29 / 360) * 2 * np.pi])# go to top of aim

# self.move_j([-(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

# (0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

# -(0 / 360.0) * 2 * np.pi, 0.0])# return home

# self.move_j_p([0.3,0,0.3,np.pi/2,0,0],0.5,0.5)

# for i in range(10):

# self.move_j_p([0.3, 0, 0.3, np.pi, 0, i*0.1], 0.5, 0.5)

# time.sleep(1)

# self.move_j_p([0.3, 0, 0.3, -np.pi, 0, 0],0.5,0.5)

# self.move_p([0.3, 0.3, 0.3, -np.pi, 0, 0],0.5,0.5)

# self.move_l([0.2, 0.2, 0.3, -np.pi, 0, 0],0.5,0.5)

# self.plane_grasp([0.3, 0.3, 0.1])

# self.plane_push([0.3, 0.3, 0.1])

except:

print("Test fail! ")

# joint control

'''

input:joint_configuration = joint angle

'''

def move_j(self, joint_configuration,k_acc=1,k_vel=1,t=0,r=0):

self.tcp_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.tcp_socket.connect((self.tcp_host_ip, self.tcp_port))

tcp_command = "movej([%f" % joint_configuration[0] #"movej([]),a=,v=,\n"

for joint_idx in range(1,6):

tcp_command = tcp_command + (",%f" % joint_configuration[joint_idx])

tcp_command = tcp_command + "],a=%f,v=%f,t=%f,r=%f)\n" % (k_acc*self.joint_acc, k_vel*self.joint_vel,t,r)

self.tcp_socket.send(str.encode(tcp_command))

# Block until robot reaches home state

state_data = self.tcp_socket.recv(1500)

actual_joint_positions = self.parse_tcp_state_data(state_data, 'joint_data')

while not all([np.abs(actual_joint_positions[j] - joint_configuration[j]) < self.joint_tolerance for j in range(6)]):

state_data = self.tcp_socket.recv(1500)

actual_joint_positions = self.parse_tcp_state_data(state_data, 'joint_data')

time.sleep(0.01)

self.tcp_socket.close()

# joint control

'''

move_j_p(self, tool_configuration,k_acc=1,k_vel=1,t=0,r=0)

input:tool_configuration=[x y z r p y]

其中x y z为三个轴的目标位置坐标,单位为米

r p y ,单位为弧度

'''

def move_j_p(self, tool_configuration,k_acc=1,k_vel=1,t=0,r=0):

self.tcp_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.tcp_socket.connect((self.tcp_host_ip, self.tcp_port))

print(f"movej_p([{

tool_configuration}])")

# command: movej([joint_configuration],a,v,t,r)\n

tcp_command = "def process():\n"

tcp_command +=" array = rpy2rotvec([%f,%f,%f])\n" %(tool_configuration[3],tool_configuration[4],tool_configuration[5])

tcp_command += "movej(get_inverse_kin(p[%f,%f,%f,array[0],array[1],array[2]]),a=%f,v=%f,t=%f,r=%f)\n" % (tool_configuration[0],

tool_configuration[1],tool_configuration[2],k_acc * self.joint_acc, k_vel * self.joint_vel,t,r ) # "movej([]),a=,v=,\n"

tcp_command += "end\n"

self.tcp_socket.send(str.encode(tcp_command))

# Block until robot reaches home state

state_data = self.tcp_socket.recv(1500)

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

while not all([np.abs(actual_tool_positions[j] - tool_configuration[j]) < self.tool_pose_tolerance[j] for j in

range(3)]):

state_data = self.tcp_socket.recv(1500)

# print(f"tool_position_error{actual_tool_positions - tool_configuration}")

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

time.sleep(0.01)

time.sleep(1.5)

self.tcp_socket.close()

# move_l is mean that the robot keep running in a straight line

def move_l(self, tool_configuration,k_acc=1,k_vel=1,t=0,r=0):

print(f"movel([{

tool_configuration}])")

self.tcp_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.tcp_socket.connect((self.tcp_host_ip, self.tcp_port))

# command: movel([tool_configuration],a,v,t,r)\n

tcp_command = "def process():\n"

tcp_command += " array = rpy2rotvec([%f,%f,%f])\n" % (

tool_configuration[3], tool_configuration[4], tool_configuration[5])

tcp_command += "movel(p[%f,%f,%f,array[0],array[1],array[2]],a=%f,v=%f,t=%f,r=%f)\n" % (

tool_configuration[0], tool_configuration[1], tool_configuration[2],

k_acc * self.joint_acc, k_vel * self.joint_vel,t,r) # "movel([]),a=,v=,\n"

tcp_command += "end\n"

self.tcp_socket.send(str.encode(tcp_command))

# Block until robot reaches home state

state_data = self.tcp_socket.recv(1500)

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

while not all([np.abs(actual_tool_positions[j] - tool_configuration[j]) < self.tool_pose_tolerance[j] for j in range(3)]):

state_data = self.tcp_socket.recv(1500)

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

time.sleep(0.01)

time.sleep(1.5)

self.tcp_socket.close()

# Usually, We don't use move_c

# move_c is mean that the robot move circle

# mode 0: Unconstrained mode. Interpolate orientation from current pose to target pose (pose_to)

# 1: Fixed mode. Keep orientation constant relative to the tangent of the circular arc (starting from current pose)

def move_c(self,pose_via,tool_configuration,k_acc=1,k_vel=1,r=0,mode=0):

self.tcp_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.tcp_socket.connect((self.tcp_host_ip, self.tcp_port))

print(f"movec([{

pose_via},{

tool_configuration}])")

# command: movec([pose_via,tool_configuration],a,v,t,r)\n

tcp_command = "def process():\n"

tcp_command += " via_pose = rpy2rotvec([%f,%f,%f])\n" % (

pose_via[3],pose_via[4] ,pose_via[5] )

tcp_command += " tool_pose = rpy2rotvec([%f,%f,%f])\n" % (

tool_configuration[3], tool_configuration[4], tool_configuration[5])

tcp_command = f" movec([{

pose_via[0]},{

pose_via[1]},{

pose_via[2]},via_pose[0],via_pose[1],via_pose[2]], \

[{

tool_configuration[0]},{

tool_configuration[1]},{

tool_configuration[2]},tool_pose[0],tool_pose[1],tool_pose[2]], \

a={

k_acc * self.tool_acc},v={

k_vel * self.tool_vel},r={

r})\n"

tcp_command += "end\n"

self.tcp_socket.send(str.encode(tcp_command))

# Block until robot reaches home state

state_data = self.tcp_socket.recv(1500)

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

while not all([np.abs(actual_tool_positions[j] - tool_configuration[j]) < self.tool_pose_tolerance[j] for j in range(3)]):

state_data = self.tcp_socket.recv(1500)

actual_tool_positions = self.parse_tcp_state_data(state_data, 'cartesian_info')

time.sleep(0.01)

self.tcp_socket.close()

time.sleep(1.5)

def go_home(self):

self.move_j(self.home_joint_config)

def restartReal(self):

self.go_home()

# robotiq85 gripper configuration

if (self.is_use_robotiq85):

# reference https://gitlab.com/sdurobotics/ur_rtde

# Gripper activate

self.gripper = RobotiqGripper()

self.gripper.connect(self.tcp_host_ip, 63352) # don't change the 63352 port

self.gripper._reset()

print("Activating gripper...")

self.gripper.activate()

time.sleep(1.5)

# realsense configuration

if (self.is_use_camera):

# Fetch RGB-D data from RealSense camera

self.camera = Camera()

# self.cam_intrinsics = self.camera.intrinsics # get camera intrinsics

self.cam_intrinsics = self.camera.color_intr

# # Load camera pose (from running calibrate.py), intrinsics and depth scale

self.cam_pose = np.loadtxt('real/camera_pose.txt', delimiter=' ')

self.cam_depth_scale = np.loadtxt('real/camera_depth_scale.txt', delimiter=' ')

# get robot current state and information

def get_state(self):

self.tcp_cket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.tcp_socket.connect((self.tcp_host_ip, self.tcp_port))

state_data = self.tcp_socket.recv(1500)

self.tcp_socket.close()

return state_data

# get robot current joint angles and cartesian pose

def parse_tcp_state_data(self, data, subpasckage):

dic = {

'MessageSize': 'i', 'Time': 'd', 'q target': '6d', 'qd target': '6d', 'qdd target': '6d',

'I target': '6d',

'M target': '6d', 'q actual': '6d', 'qd actual': '6d', 'I actual': '6d', 'I control': '6d',

'Tool vector actual': '6d', 'TCP speed actual': '6d', 'TCP force': '6d', 'Tool vector target': '6d',

'TCP speed target': '6d', 'Digital input bits': 'd', 'Motor temperatures': '6d', 'Controller Timer': 'd',

'Test value': 'd', 'Robot Mode': 'd', 'Joint Modes': '6d', 'Safety Mode': 'd', 'empty1': '6d',

'Tool Accelerometer values': '3d',

'empty2': '6d', 'Speed scaling': 'd', 'Linear momentum norm': 'd', 'SoftwareOnly': 'd',

'softwareOnly2': 'd',

'V main': 'd',

'V robot': 'd', 'I robot': 'd', 'V actual': '6d', 'Digital outputs': 'd', 'Program state': 'd',

'Elbow position': 'd', 'Elbow velocity': '3d'}

ii = range(len(dic))

for key, i in zip(dic, ii):

fmtsize = struct.calcsize(dic[key])

data1, data = data[0:fmtsize], data[fmtsize:]

fmt = "!" + dic[key]

dic[key] = dic[key], struct.unpack(fmt, data1)

if subpasckage == 'joint_data': # get joint data

q_actual_tuple = dic["q actual"]

joint_data= np.array(q_actual_tuple[1])

return joint_data

elif subpasckage == 'cartesian_info':

Tool_vector_actual = dic["Tool vector actual"] # get x y z rx ry rz

cartesian_info = np.array(Tool_vector_actual[1])

return cartesian_info

def rpy2rotating_vector(self,rpy):

# rpy to R

R = self.rpy2R(rpy)

# R to rotating_vector

return self.R2rotating_vector(R)

def rpy2R(self,rpy): # [r,p,y] 单位rad

rot_x = np.array([[1, 0, 0],

[0, math.cos(rpy[0]), -math.sin(rpy[0])],

[0, math.sin(rpy[0]), math.cos(rpy[0])]])

rot_y = np.array([[math.cos(rpy[1]), 0, math.sin(rpy[1])],

[0, 1, 0],

[-math.sin(rpy[1]), 0, math.cos(rpy[1])]])

rot_z = np.array([[math.cos(rpy[2]), -math.sin(rpy[2]), 0],

[math.sin(rpy[2]), math.cos(rpy[2]), 0],

[0, 0, 1]])

R = np.dot(rot_z, np.dot(rot_y, rot_x))

return R

def R2rotating_vector(self,R):

theta = math.acos((R[0, 0] + R[1, 1] + R[2, 2] - 1) / 2)

print(f"theta:{

theta}")

rx = (R[2, 1] - R[1, 2]) / (2 * math.sin(theta))

ry = (R[0, 2] - R[2, 0]) / (2 * math.sin(theta))

rz = (R[1, 0] - R[0, 1]) / (2 * math.sin(theta))

return np.array([rx, ry, rz]) * theta

def R2rpy(self,R):

# assert (isRotationMatrix(R))

sy = math.sqrt(R[0, 0] * R[0, 0] + R[1, 0] * R[1, 0])

singular = sy < 1e-6

if not singular:

x = math.atan2(R[2, 1], R[2, 2])

y = math.atan2(-R[2, 0], sy)

z = math.atan2(R[1, 0], R[0, 0])

else:

x = math.atan2(-R[1, 2], R[1, 1])

y = math.atan2(-R[2, 0], sy)

z = 0

return np.array([x, y, z])

## robotiq85 gripper

# get gripper position [0-255] open:0 ,close:255

def get_current_tool_pos(self):

return self.gripper.get_current_position()

def log_gripper_info(self):

print(f"Pos: {

str(self.gripper.get_current_position())}")

def close_gripper(self,speed=255,force=255):

# position: int[0-255], speed: int[0-255], force: int[0-255]

self.gripper.move_and_wait_for_pos(255, speed, force)

print("gripper had closed!")

time.sleep(1.2)

self.log_gripper_info()

def open_gripper(self,speed=255,force=255):

# position: int[0-255], speed: int[0-255], force: int[0-255]

self.gripper.move_and_wait_for_pos(0, speed, force)

print("gripper had opened!")

time.sleep(1.2)

self.log_gripper_info()

## get camera data

def get_camera_data(self):

color_img, depth_img = self.camera.get_data()

return color_img, depth_img

# Note: must be preceded by close_gripper()

def check_grasp(self):

# if the robot grasp unsuccessfully ,then the gripper close

return self.get_current_tool_pos()>220

def plane_grasp(self, position, yaw=0, open_size=0.65, k_acc=0.8,k_vel=0.8,speed=255, force=125):

rpy = [-np.pi, 0, 1.57 - yaw]

# 判定抓取的位置是否处于工作空间

for i in range(3):

position[i] = min(max(position[i],self.workspace_limits[i][0]),self.workspace_limits[i][1])

# 判定抓取的角度RPY是否在规定范围内 [-pi,pi]

for i in range(3):

if rpy[i] > np.pi:

rpy[i] -= 2*np.pi

elif rpy[i] < -np.pi:

rpy[i] += 2*np.pi

print('Executing: grasp at (%f, %f, %f) by the RPY angle (%f, %f, %f)' \

% (position[0], position[1], position[2],rpy[0],rpy[1],rpy[2]))

# pre work

grasp_home = [-0.1, 0.4, 0.2, -np.pi, 0, 0] #[0.4,0,0.4,-np.pi,0,0] # you can change me

self.move_j_p(grasp_home,k_acc,k_vel)

open_pos = int(-258*open_size +230) # open size:0~0.85cm --> open pos:230~10

self.gripper.move_and_wait_for_pos(open_pos, speed, force)

print("gripper open size:")

self.log_gripper_info()

# Firstly, achieve pre-grasp position

pre_position = copy.deepcopy(position)

pre_position[2] = pre_position[2] + 0.1 # z axis

print(pre_position)

self.move_j_p(pre_position + rpy,k_acc,k_vel)

# Second,achieve grasp position

self.move_l(position+rpy,0.6*k_acc,0.6*k_vel)

self.close_gripper(speed,force)

self.move_l(pre_position + rpy, 0.6*k_acc,0.6*k_vel)

if(self.check_grasp()):

print("Check grasp fail! ")

self.move_j_p(grasp_home)

return False

# Third,put the object into box

box_position = [-0.1, 0.4, 0.2, -np.pi, 0, 0]#[0.63,0,0.25,-np.pi,0,0] # you can change me!

self.move_j_p(box_position,k_acc,k_vel)

box_position[2] = 0.1 # down to the 10cm

self.move_j_p(box_position, k_acc, k_vel)

self.open_gripper(speed,force)

box_position[2] = 0.25

self.move_j_p(box_position, k_acc, k_vel)

self.move_j_p(grasp_home)

print("grasp success!")

return True

def plane_push(self, position, move_orientation=0, length=0.1):

for i in range(2):

position[i] = min(max(position[i],self.workspace_limits[i][0]+0.1),self.workspace_limits[i][1]-0.1)

position[2] = min(max(position[2],self.workspace_limits[2][0]),self.workspace_limits[2][1])

print('Executing: push at (%f, %f, %f) and the orientation is %f' % (position[0], position[1], position[2],move_orientation))

push_home = [0.4, 0, 0.4, -np.pi, 0, 0]

self.move_j_p(push_home,k_acc=1, k_vel=1) # pre push position(push home)

# self.close_gripper()

self.move_j_p([position[0],position[1],position[2]+0.1,-np.pi,0,0],k_acc=1,k_vel=1)

self.move_j_p([position[0], position[1], position[2], -np.pi, 0, 0], k_acc=0.6, k_vel=0.6)

# compute the destination pos

destination_pos = [position[0] + length * math.cos(move_orientation),position[1] + length * math.sin(move_orientation),position[2]]

self.move_l(destination_pos+[-np.pi, 0, 0], k_acc=0.5, k_vel=0.5)

self.move_j_p([destination_pos[0],destination_pos[1],destination_pos[2]+0.1,-np.pi,0,0],k_acc=0.6, k_vel=0.6)

# go back push-home

self.move_j_p(push_home, k_acc=1, k_vel=1)

def grasp(self, position, rpyNone, open_size=0.85, k_acc=0.8, k_vel=0.8, speed=255, force=125):

# 判定抓取的位置是否处于工作空间

if rpy is None:

rpy = [-np.pi, 0, 0]

for i in range(3):

position[i] = min(max(position[i], self.workspace_limits[i][0]), self.workspace_limits[i][1])

# 判定抓取的角度RPY是否在规定范围内 [0.5*pi,1.5*pi]

for i in range(3):

if rpy[i] > np.pi:

rpy[i] -= 2 * np.pi

elif rpy[i] < -np.pi:

rpy[i] += 2 * np.pi

print('Executing: grasp at (%f, %f, %f) by the RPY angle (%f, %f, %f)' \

% (position[0], position[1], position[2], rpy[0], rpy[1], rpy[2]))

# pre work

grasp_home = [0.4, 0, 0.4, -np.pi, 0, 0] # you can change me

self.move_j_p(grasp_home, k_acc, k_vel)

open_pos = int(-300 * open_size + 255) # open size:0~0.85cm --> open pos:255~0

self.gripper.move_and_wait_for_pos(open_pos, speed, force)

self.log_gripper_info()

# Firstly, achieve pre-grasp position

pre_position = copy.deepcopy(position)

pre_position[2] = pre_position[2] + 0.1 # z axis

print(pre_position)

self.move_j_p(pre_position + rpy, k_acc, k_vel)

# Second,achieve grasp position

self.move_l(position + rpy, 0.6 * k_acc, 0.6 * k_vel)

self.close_gripper(speed, force)

self.move_l(pre_position + rpy, 0.6 * k_acc, 0.6 * k_vel)

if (self.check_grasp()):

print("Check grasp fail! ")

self.move_j_p(grasp_home)

return False

# Third,put the object into box

box_position = [0.63, 0, 0.25, -np.pi, 0, 0] # you can change me!

self.move_j_p(box_position, k_acc, k_vel)

box_position[2] = 0.1 # down to the 10cm

self.move_l(box_position, k_acc, k_vel)

self.open_gripper(speed, force)

box_position[2] = 0.25

self.move_l(box_position, k_acc, k_vel)

self.move_j_p(grasp_home)

print("grasp success!")

if __name__ =="__main__":

ur_robot = UR_Robot1()

标定版:棋盘格文件及标定矫正程序(链接直接下载,CAD文件可修改)

标定版:棋盘格文件及标定矫正程序(链接直接下载,CAD文件可修改)

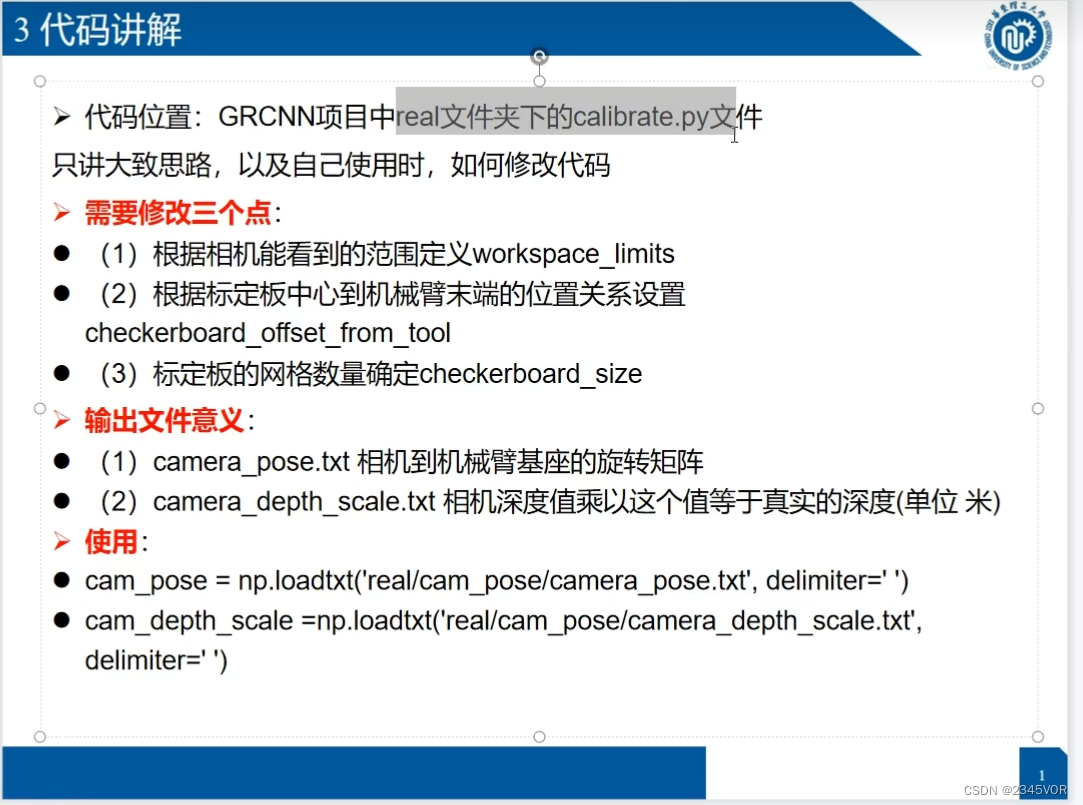

6.2 相机标定

参考calibrate.py,自己修改标定数量大小位置

#!/usr/bin/env python

import matplotlib.pyplot as plt

import numpy as np

import time

import cv2

from UR_Robot1 import UR_Robot1

from scipy import optimize

from mpl_toolkits.mplot3d import Axes3D

# User options (change me)

# --------------- Setup options ---------------

tcp_host_ip = '192.168.50.100' # IP and port to robot arm as TCP client (UR3)

tcp_port = 30003

# workspace_limits = np.asarray([[0.3, 0.75], [0.05, 0.4], [-0.2, -0.1]]) # Cols: min max, Rows: x y z (define workspace limits in robot coordinates)

# workspace_limits = np.asarray([[0.35, 0.55], [0, 0.35], [0.15, 0.25]])

workspace_limits = np.asarray([[-0.05, 0.05], [0.35, 0.40], [0.275, 0.30]])#([[0.2, 0.4], [0.4, 0.6], [0.05, 0.1]])5y*5x*2z=50

calib_grid_step = 0.025#0.05

#checkerboard_offset_from_tool = [0,-0.13,0.02] # change me!

checkerboard_offset_from_tool = [0,0.113,0]

tool_orientation = [-np.pi/2,0,0] # [0,-2.22,2.22] # [2.22,2.22,0]

# tool_orientation = [np.pi/2,np.pi/2,np.pi/2]

# home [0,-np.pi/2,0,-np.pi/2,0,0]

# Move robot to each calibration point in workspace

reference_location = [-np.pi, -np.pi/2, np.pi/2, 0, np.pi/2, np.pi]#[np.pi/2, -np.pi/2, np.pi/2, 0, np.pi/2, np.pi]

#---------------------------------------------

# Construct 3D calibration grid across workspace

print(1 + (workspace_limits[0][1] - workspace_limits[0][0])/calib_grid_step)

gridspace_x = np.linspace(workspace_limits[0][0], workspace_limits[0][1], int(1 + (workspace_limits[0][1] - workspace_limits[0][0])/calib_grid_step))

gridspace_y = np.linspace(workspace_limits[1][0], workspace_limits[1][1], int(1 + (workspace_limits[1][1] - workspace_limits[1][0])/calib_grid_step))

gridspace_z = np.linspace(workspace_limits[2][0], workspace_limits[2][1], int(1 + (workspace_limits[2][1] - workspace_limits[2][0])/calib_grid_step))

calib_grid_x, calib_grid_y, calib_grid_z = np.meshgrid(gridspace_x, gridspace_y, gridspace_z)

num_calib_grid_pts = calib_grid_x.shape[0]*calib_grid_x.shape[1]*calib_grid_x.shape[2]

calib_grid_x.shape = (num_calib_grid_pts,1)

calib_grid_y.shape = (num_calib_grid_pts,1)

calib_grid_z.shape = (num_calib_grid_pts,1)

calib_grid_pts = np.concatenate((calib_grid_x, calib_grid_y, calib_grid_z), axis=1)

measured_pts = []

observed_pts = []

observed_pix = []

# Move robot to home pose

print('Connecting to robot...')

robot = UR_Robot1(tcp_host_ip,tcp_port,workspace_limits,is_use_robotiq85=False)

# Slow down robot

robot.joint_acc = 1.4

robot.joint_vel = 1.05

# home point

robot.move_j([-(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

-(0 / 360.0) * 2 * np.pi, 0.0])# return home

# robot.open_gripper()

# time.sleep(5) # wait calibrate board to adjust suitable position

# robot.close_gripper()

# Make robot gripper point upwards

robot.move_j(reference_location)#robot.move_j([-np.pi, -np.pi/2, np.pi/2, 0, np.pi/2, np.pi])

# self.home_joint_config = [-(0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

# (0 / 360.0) * 2 * np.pi, -(90 / 360.0) * 2 * np.pi,

# -(0 / 360.0) * 2 * np.pi, 0.0]

# [0,-np.pi/2,0,-np.pi/2,0,0]

# Move robot to each calibration point in workspace

print('Collecting data...')

for calib_pt_idx in range(num_calib_grid_pts):

tool_position = calib_grid_pts[calib_pt_idx,:]

tool_config = [tool_position[0],tool_position[1],tool_position[2],

tool_orientation[0],tool_orientation[1],tool_orientation[2]]

tool_config1 = [tool_position[0], tool_position[1], tool_position[2],

tool_orientation[0], tool_orientation[1], tool_orientation[2]]

print(f"tool position and orientation:{

tool_config1}")

robot.move_j_p(tool_config)

time.sleep(2) # k

# Find checkerboard center

checkerboard_size = (5,5)

refine_criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 27, 0.001)#30

camera_color_img, camera_depth_img = robot.get_camera_data()

bgr_color_data = cv2.cvtColor(camera_color_img, cv2.COLOR_RGB2BGR)

gray_data = cv2.cvtColor(bgr_color_data, cv2.COLOR_RGB2GRAY)

checkerboard_found, corners = cv2.findChessboardCorners(gray_data, checkerboard_size, None, cv2.CALIB_CB_ADAPTIVE_THRESH)

print(checkerboard_found)

if checkerboard_found:

corners_refined = cv2.cornerSubPix(gray_data, corners, (5,5), (-1,-1), refine_criteria)

# Get observed checkerboard center 3D point in camera space

checkerboard_pix = np.round(corners_refined[12,0,:]).astype(int)

checkerboard_z = camera_depth_img[checkerboard_pix[1]][checkerboard_pix[0]]

checkerboard_x = np.multiply(checkerboard_pix[0]-robot.cam_intrinsics[0][2],checkerboard_z/robot.cam_intrinsics[0][0])

checkerboard_y = np.multiply(checkerboard_pix[1]-robot.cam_intrinsics[1][2],checkerboard_z/robot.cam_intrinsics[1][1])

if checkerboard_z == 0:

continue

# Save calibration point and observed checkerboard center

observed_pts.append([checkerboard_x,checkerboard_y,checkerboard_z])

# tool_position[2] += checkerboard_offset_from_tool

tool_position = tool_position + checkerboard_offset_from_tool

measured_pts.append(tool_position)

observed_pix.append(checkerboard_pix)

# Draw and display the corners

# vis = cv2.drawChessboardCorners(robot.camera.color_data, checkerboard_size, corners_refined, checkerboard_found)

vis = cv2.drawChessboardCorners(bgr_color_data, (1,1), corners_refined[12,:,:], checkerboard_found)

cv2.imwrite('%06d.png' % len(measured_pts), vis)

cv2.imshow('Calibration',vis)

cv2.waitKey(1000)

# Move robot back to home pose

# robot.go_home()

measured_pts = np.asarray(measured_pts)

observed_pts = np.asarray(observed_pts)

observed_pix = np.asarray(observed_pix)

world2camera = np.eye(4)

# Estimate rigid transform with SVD (from Nghia Ho)

def get_rigid_transform(A, B):

assert len(A) == len(B)

N = A.shape[0] # Total points

centroid_A = np.mean(A, axis=0)

centroid_B = np.mean(B, axis=0)

AA = A - np.tile(centroid_A, (N, 1)) # Centre the points

BB = B - np.tile(centroid_B, (N, 1))

H = np.dot(np.transpose(AA), BB) # Dot is matrix multiplication for array

U, S, Vt = np.linalg.svd(H)

R = np.dot(Vt.T, U.T)

if np.linalg.det(R) < 0: # Special reflection case

Vt[2,:] *= -1

R = np.dot(Vt.T, U.T)

t = np.dot(-R, centroid_A.T) + centroid_B.T

return R, t

def get_rigid_transform_error(z_scale):

global measured_pts, observed_pts, observed_pix, world2camera, camera

# Apply z offset and compute new observed points using camera intrinsics

observed_z = observed_pts[:,2:] * z_scale

observed_x = np.multiply(observed_pix[:,[0]]-robot.cam_intrinsics[0][2],observed_z/robot.cam_intrinsics[0][0])

observed_y = np.multiply(observed_pix[:,[1]]-robot.cam_intrinsics[1][2],observed_z/robot.cam_intrinsics[1][1])

new_observed_pts = np.concatenate((observed_x, observed_y, observed_z), axis=1)

# Estimate rigid transform between measured points and new observed points

R, t = get_rigid_transform(np.asarray(measured_pts), np.asarray(new_observed_pts))

t.shape = (3,1)

world2camera = np.concatenate((np.concatenate((R, t), axis=1),np.array([[0, 0, 0, 1]])), axis=0)

# Compute rigid transform error

registered_pts = np.dot(R,np.transpose(measured_pts)) + np.tile(t,(1,measured_pts.shape[0]))

error = np.transpose(registered_pts) - new_observed_pts

error = np.sum(np.multiply(error,error))

rmse = np.sqrt(error/measured_pts.shape[0])

return rmse

# Optimize z scale w.r.t. rigid transform error

print('Calibrating...')

z_scale_init = 1

optim_result = optimize.minimize(get_rigid_transform_error, np.asarray(z_scale_init), method='Nelder-Mead')

camera_depth_offset = optim_result.x

# Save camera optimized offset and camera pose

print('Saving...')

np.savetxt('camera_depth_scale.txt', camera_depth_offset, delimiter=' ')

get_rigid_transform_error(camera_depth_offset)

camera_pose = np.linalg.inv(world2camera)

np.savetxt('camera_pose.txt', camera_pose, delimiter=' ')

print('Done.')

# DEBUG CODE -----------------------------------------------------------------------------------

# np.savetxt('measured_pts.txt', np.asarray(measured_pts), delimiter=' ')

# np.savetxt('observed_pts.txt', np.asarray(observed_pts), delimiter=' ')

# np.savetxt('observed_pix.txt', np.asarray(observed_pix), delimiter=' ')

# measured_pts = np.loadtxt('measured_pts.txt', delimiter=' ')

# observed_pts = np.loadtxt('observed_pts.txt', delimiter=' ')

# observed_pix = np.loadtxt('observed_pix.txt', delimiter=' ')

# fig = plt.figure()

# ax = fig.add_subplot(111, projection='3d')

# ax.scatter(measured_pts[:,0],measured_pts[:,1],measured_pts[:,2], c='blue')

# print(camera_depth_offset)

# R, t = get_rigid_transform(np.asarray(measured_pts), np.asarray(observed_pts))

# t.shape = (3,1)

# camera_pose = np.concatenate((np.concatenate((R, t), axis=1),np.array([[0, 0, 0, 1]])), axis=0)

# camera2robot = np.linalg.inv(camera_pose)

# t_observed_pts = np.transpose(np.dot(camera2robot[0:3,0:3],np.transpose(observed_pts)) + np.tile(camera2robot[0:3,3:],(1,observed_pts.shape[0])))

# ax.scatter(t_observed_pts[:,0],t_observed_pts[:,1],t_observed_pts[:,2], c='red')

# new_observed_pts = observed_pts.copy()

# new_observed_pts[:,2] = new_observed_pts[:,2] * camera_depth_offset[0]

# R, t = get_rigid_transform(np.asarray(measured_pts), np.asarray(new_observed_pts))

# t.shape = (3,1)

# camera_pose = np.concatenate((np.concatenate((R, t), axis=1),np.array([[0, 0, 0, 1]])), axis=0)

# camera2robot = np.linalg.inv(camera_pose)

# t_new_observed_pts = np.transpose(np.dot(camera2robot[0:3,0:3],np.transpose(new_observed_pts)) + np.tile(camera2robot[0:3,3:],(1,new_observed_pts.shape[0])))

# ax.scatter(t_new_observed_pts[:,0],t_new_observed_pts[:,1],t_new_observed_pts[:,2], c='green')

# plt.show()

7. GRCNN部署真实机械臂

上面一切调试就绪,就可以根据自己的环境配置平面抓取的工作

plane_grasp_real.py

import os

import time

import matplotlib.pyplot as plt

import numpy as np

import torch

from UR_Robot import UR_Robot

from inference.post_process import post_process_output

from utils.data.camera_data import CameraData

from utils.dataset_processing.grasp import detect_grasps

from utils.visualisation.plot import plot_grasp

import cv2

from PIL import Image

import torchvision.transforms as transforms

class PlaneGraspClass:

def __init__(self, saved_model_path=None,use_cuda=True,visualize=False,include_rgb=True,include_depth=True,output_size=300):

if saved_model_path==None:

saved_model_path = 'trained-models/jacquard-rgbd-grconvnet3-drop0-ch32/epoch_48_iou_0.93'

self.model = torch.load(saved_model_path)

self.device = "cuda:0" if use_cuda else "cpu"

self.visualize = visualize

self.cam_data = CameraData(include_rgb=include_rgb,include_depth=include_depth,output_size=output_size)

# Load camera pose and depth scale (from running calibration)

self.ur_robot = UR_Robot(tcp_host_ip="192.168.50.100", tcp_port=30003, workspace_limits=None, is_use_robotiq85=True,

is_use_camera=True)

self.cam_pose = self.ur_robot.cam_pose

self.cam_depth_scale = self.ur_robot.cam_depth_scale

self.intrinsic = self.ur_robot.cam_intrinsics

if self.visualize:

self.fig = plt.figure(figsize=(6, 6))

else:

self.fig = None

def generate(self):

## if you want to use RGBD from camera,use me

# Get RGB-D image from camera

rgb, depth= self.ur_robot.get_camera_data() # meter

depth = depth *self.cam_depth_scale

depth[depth >1]=0 # distance > 1.2m ,remove it

## if you don't have realsense camera, use me

# num =2 # change me num=[1:6],and you can see the result in '/result' file

# rgb_path = f"./cmp{num}.png"

# depth_path = f"./hmp{num}.png"

# rgb = np.array(Image.open(rgb_path))

# depth = np.array(Image.open(depth_path)).astype(np.float32)

# depth = depth * self.cam_depth_scale

# depth[depth > 1.2] = 0 # distance > 1.2m ,remove it

depth= np.expand_dims(depth, axis=2)

x, depth_img, rgb_img = self.cam_data.get_data(rgb=rgb, depth=depth)

rgb = cv2.cvtColor(rgb,cv2.COLOR_BGR2RGB)

with torch.no_grad():

xc = x.to(self.device)

pred = self.model.predict(xc)

q_img, ang_img, width_img = post_process_output(pred['pos'], pred['cos'], pred['sin'], pred['width'])

grasps = detect_grasps(q_img, ang_img, width_img)

if len(grasps) ==0:

print("Detect 0 grasp pose!")

if self.visualize:

plot_grasp(fig=self.fig, rgb_img=self.cam_data.get_rgb(rgb, False), grasps=grasps, save=True)

return False

## For real UR robot

# Get grasp position from model output

pos_z = depth[grasps[0].center[0] + self.cam_data.top_left[0], grasps[0].center[1] + self.cam_data.top_left[1]]

pos_x = np.multiply(grasps[0].center[1] + self.cam_data.top_left[1] - self.intrinsic[0][2],

pos_z / self.intrinsic[0][0])

pos_y = np.multiply(grasps[0].center[0] + self.cam_data.top_left[0] - self.intrinsic[1][2],

pos_z / self.intrinsic[1][1])

if pos_z == 0:

return False

target = np.asarray([pos_x, pos_y, pos_z])

target.shape = (3, 1)

# Convert camera to robot coordinates

camera2robot = self.cam_pose

target_position = np.dot(camera2robot[0:3, 0:3], target) + camera2robot[0:3, 3:]

target_position = target_position[0:3, 0]

# Convert camera to robot angle

angle = np.asarray([0, 0, grasps[0].angle])

angle.shape = (3, 1)

target_angle = np.dot(camera2robot[0:3, 0:3], angle)

# compute gripper width

width = grasps[0].length # mm

if width < 25: # detect error

width = 70

elif width <40:

width =45

elif width > 85:

width = 85

# Concatenate grasp pose with grasp angle

grasp_pose = np.append(target_position, target_angle[2])

print('grasp_pose: ', grasp_pose)

print('grasp_width: ',grasps[0].length)

# np.save(self.grasp_pose, grasp_pose)

if self.visualize:

plot_grasp(fig=self.fig, rgb_img=self.cam_data.get_rgb(rgb, False), grasps=grasps, save=True)

success = self.ur_robot.plane_grasp([grasp_pose[0],grasp_pose[1],grasp_pose[2]], yaw=grasp_pose[3], open_size=width/100)

return success

## For having not real robot

# if self.visualize:

# plot_grasp(fig=self.fig, rgb_img=self.cam_data.get_rgb(rgb, False), grasps=grasps, save=True)

# return True

if __name__ == '__main__':

g = PlaneGraspClass(

# saved_model_path='trained-models/jacquard-rgbd-grconvnet3-drop0-ch32/epoch_48_iou_0.93',

saved_model_path='trained-models/jacquard-rgbd-grconvnet3-drop0-ch32/epoch_42_iou_0.93',

# saved_model_path='trained-models/jacquard-rgbd-grconvnet3-drop0-ch32/epoch_35_iou_0.92',

visualize=True,

include_rgb=True

)

g.generate()

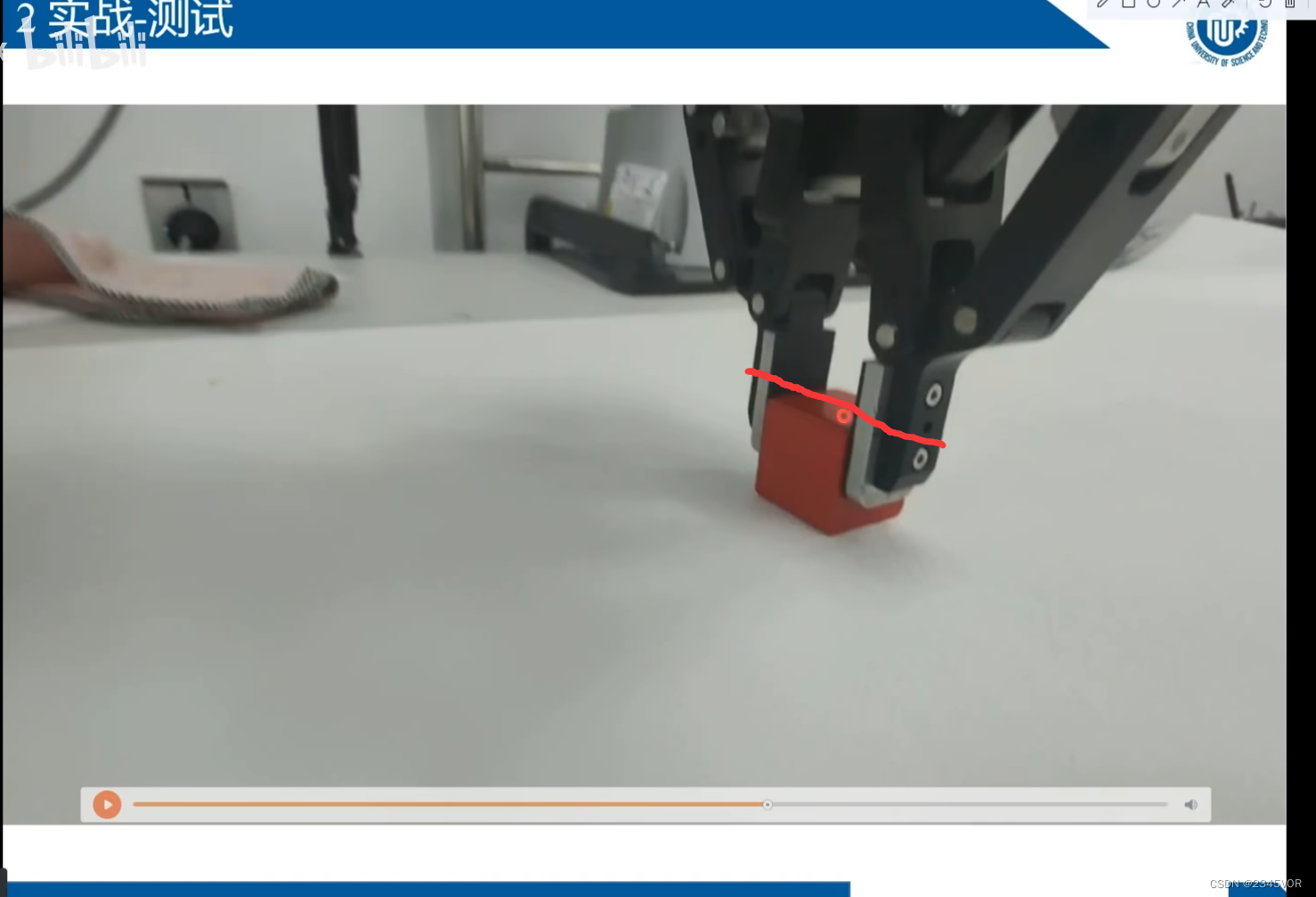

实验效果

【机械臂视觉抓取从理论到实战】

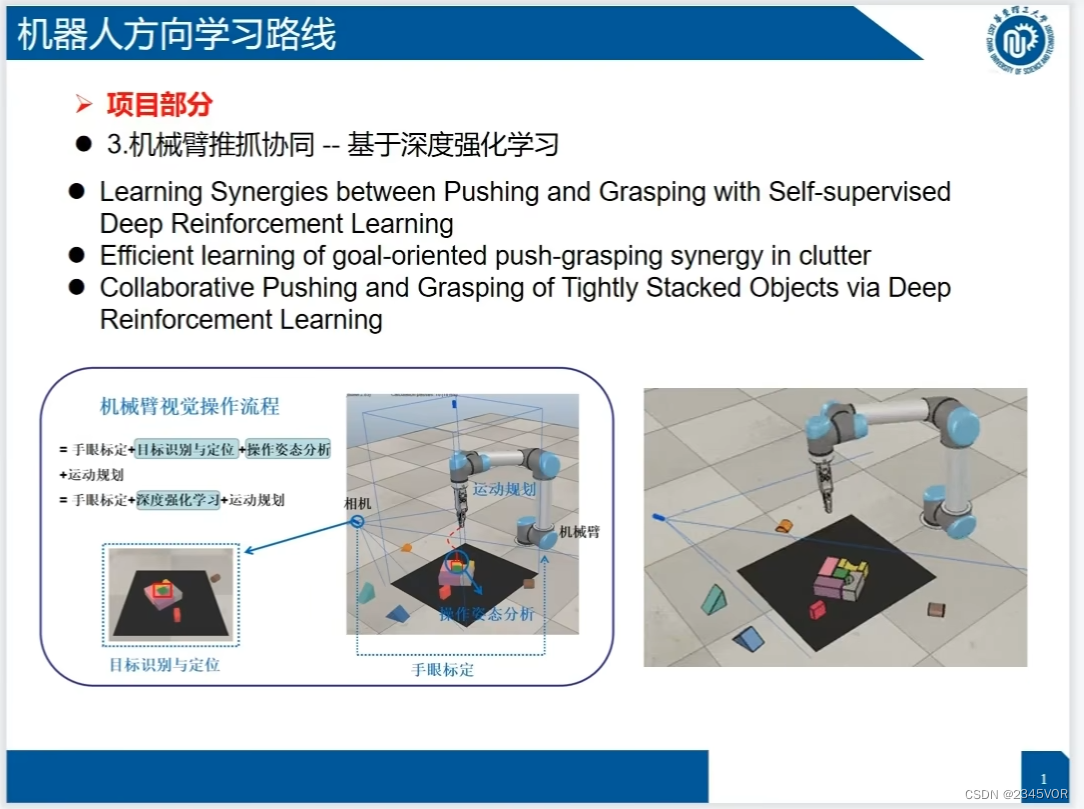

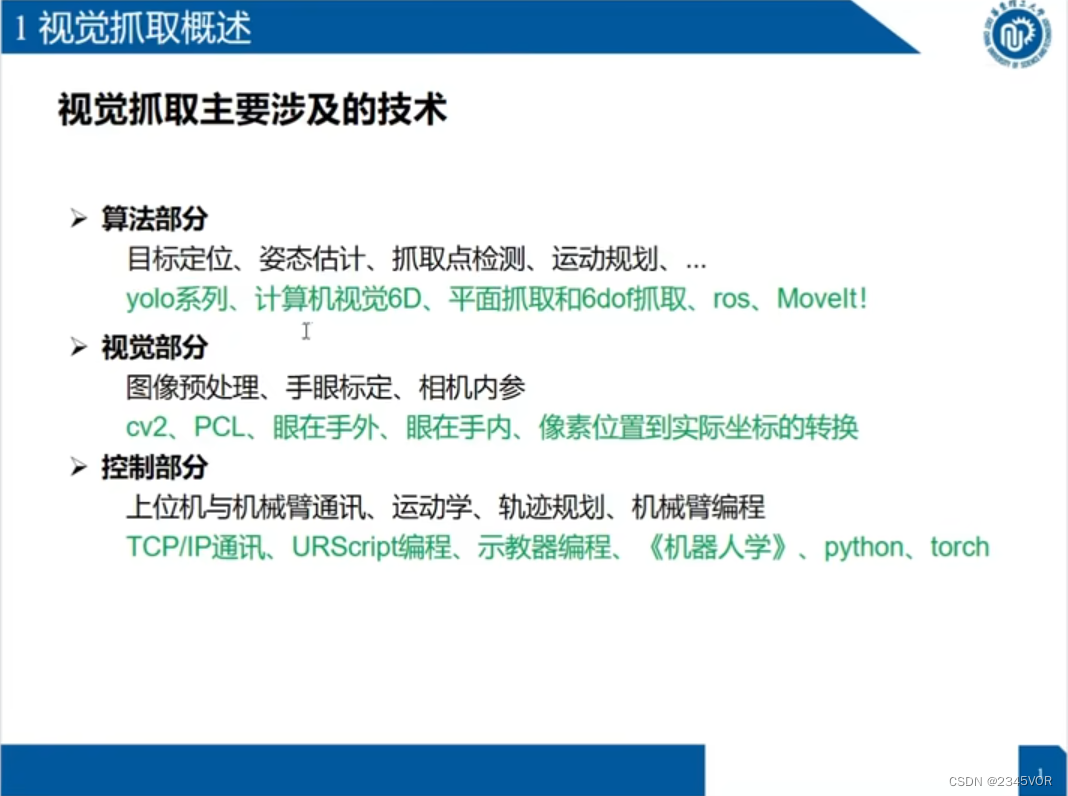

8. 机器人学习路线