Training a simple deep multilayer perceptron on the MNIST dataset

Annotated code

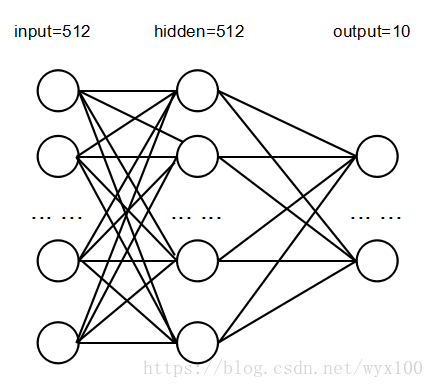

Structure of the neural network (multilayer perceptron) used in the code
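To make the structure concrete, here is a minimal NumPy sketch of the forward pass through a network of the same shape as the one in this post: 784 inputs, two hidden layers of 512 ReLU units, and a 10-way softmax output. The helper names (`init_params`, `mlp_forward`) and the random initialization are assumptions for illustration only; they are not part of Keras, and the weights are untrained. Dropout is left out because it is only active during training.

```python
import numpy as np


def relu(x):
    # Element-wise max(x, 0)
    return np.maximum(x, 0.0)


def softmax(z):
    # Subtract the row-wise max for numerical stability before exponentiating
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_params(rng, sizes=(784, 512, 512, 10)):
    # Hypothetical initialization: small random weights, zero biases
    return [(rng.normal(0.0, 0.05, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def mlp_forward(x, params):
    # Dense(512, relu) -> Dense(512, relu) -> Dense(10, softmax)
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = relu(x @ w1 + b1)
    h2 = relu(h1 @ w2 + b2)
    return softmax(h2 @ w3 + b3)


rng = np.random.default_rng(0)
params = init_params(rng)
batch = rng.random((4, 784))       # four fake flattened 28x28 images
probs = mlp_forward(batch, params)
print(probs.shape)                 # one row of 10 class probabilities per image
print(probs.sum(axis=1))           # each row sums to 1 (up to rounding)
```

Each output row is a probability distribution over the ten digit classes, which is what `categorical_crossentropy` expects from the final layer.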

'''Trains a simple deep NN (a multilayer perceptron) on the MNIST dataset.

Gets to 98.40% test accuracy after 20 epochs

(there is *a lot* of margin for parameter tuning).

2 seconds per epoch on a K520 GPU (Graphics Processing Unit).

'''

from __future__ import print_function

import keras

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense, Dropout

from keras.optimizers import RMSprop

batch_size = 128

num_classes = 10

epochs = 20

# the data, shuffled and split between train and test sets

# (the samples come already cleaned, shuffled, and divided into a training set and a test set)

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.reshape(60000, 784)

x_test = x_test.reshape(10000, 784)

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

print(x_train.shape[0], 'train samples')

print(x_test.shape[0], 'test samples')

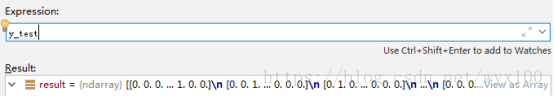

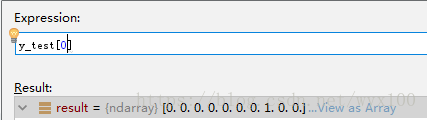

# convert class vectors to binary class matrices

# i.e. one-hot encode the integer class labels into a matrix with num_classes = 10 columns

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

model = Sequential()

model.add(Dense(512, activation='relu', input_shape=(784,)))

model.add(Dropout(0.2))

model.add(Dense(512, activation='relu'))

model.add(Dropout(0.2))

model.add(Dense(num_classes, activation='softmax'))

model.summary()

model.compile(loss='categorical_crossentropy',
              optimizer=RMSprop(),
              metrics=['accuracy'])

history = model.fit(x_train, y_train,
                    batch_size=batch_size,
                    epochs=epochs,
                    verbose=1,
                    validation_data=(x_test, y_test))

score = model.evaluate(x_test, y_test, verbose=0)

print('Test loss:', score[0])

print('Test accuracy:', score[1])
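The two preprocessing steps in the script (flattening and scaling the images, then converting the labels to binary class matrices) can be sketched in plain NumPy as follows. This is only an illustration of what those steps compute; `flatten_and_scale` and `one_hot` are hypothetical helper names, and the random images stand in for real MNIST data.

```python
import numpy as np


def flatten_and_scale(images):
    # (n, 28, 28) uint8 pixels -> (n, 784) float32 values in [0, 1]
    n = images.shape[0]
    return images.reshape(n, -1).astype("float32") / 255.0


def one_hot(labels, num_classes):
    # Row i gets a single 1.0 in column labels[i], zeros elsewhere
    encoded = np.zeros((labels.shape[0], num_classes), dtype="float32")
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded


# Stand-in data: three random "images" and three integer class labels
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)
labels = np.array([5, 0, 9])

x = flatten_and_scale(images)
y = one_hot(labels, 10)
print(x.shape, x.dtype)
print(y[0])   # the row for label 5 has its single 1.0 at index 5
```

Dividing by 255 keeps every input in the range [0, 1], and the one-hot rows match the 10-unit softmax output that `categorical_crossentropy` compares against.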


Running the code

C:\ProgramData\Anaconda3\python.exe E:/keras-master/examples/mnist_mlp.py
Using TensorFlow backend.
60000 train samples
10000 test samples
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 512)               401920
_________________________________________________________________
dropout_1 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 512)               262656
_________________________________________________________________
dropout_2 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_3 (Dense)              (None, 10)                5130
=================================================================
Total params: 669,706
Trainable params: 669,706
Non-trainable params: 0
_________________________________________________________________
Train on 60000 samples, validate on 10000 samples
Epoch 1/20
  128/60000 [..............................] - ETA: 2:36 - loss: 2.3678 - acc: 0.0625
  512/60000 [..............................] - ETA: 46s - loss: 1.9186 - acc: 0.3164
  896/60000 [..............................] - ETA: 30s - loss: 1.6323 - acc: 0.4319
...
59648/60000 [============================>.] - ETA: 0s - loss: 0.2470 - acc: 0.9232
60000/60000 [==============================] - 10s 173us/step - loss: 0.2465 - acc: 0.9234 - val_loss: 0.1060 - val_acc: 0.9671
Epoch 2/20
  128/60000 [..............................] - ETA: 9s - loss: 0.0739 - acc: 0.9766
  512/60000 [..............................] - ETA: 9s - loss: 0.0777 - acc: 0.9766
  896/60000 [..............................] - ETA: 9s - loss: 0.0915 - acc: 0.9688
...
58880/60000 [============================>.] - ETA: 0s - loss: 0.0166 - acc: 0.9956
59264/60000 [============================>.] - ETA: 0s - loss: 0.0165 - acc: 0.9956
59648/60000 [============================>.] - ETA: 0s - loss: 0.0165 - acc: 0.9956
60000/60000 [==============================] - 10s 162us/step - loss: 0.0164 - acc: 0.9957 - val_loss: 0.1177 - val_acc: 0.9824
Test loss: 0.117675279252
Test accuracy: 0.9824
Process finished with exit code 0
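The parameter counts in the model summary can be checked by hand: a fully connected layer with `n_inputs` inputs and `n_units` units has one weight per input-unit pair plus one bias per unit, and Dropout layers add no parameters. The short sketch below redoes that arithmetic and also computes how many batches of 128 make up one pass over the 60,000 training samples; `dense_params` is a hypothetical helper name, not a Keras function.

```python
import math


def dense_params(n_inputs, n_units):
    # n_inputs * n_units weights plus n_units biases
    return (n_inputs + 1) * n_units


layer_sizes = [784, 512, 512, 10]
counts = [dense_params(n_in, n_out)
          for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
print(counts)        # [401920, 262656, 5130]
print(sum(counts))   # 669706

# Number of weight updates per epoch with batch_size = 128
batches_per_epoch = math.ceil(60000 / 128)
print(batches_per_epoch)   # 469
```

The three per-layer values and their total agree with the `Param #` column and the `Total params: 669,706` line in the log.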

More about Keras


Chinese documentation: http://keras-cn.readthedocs.io/en/latest/

Example downloads

https://github.com/keras-team/keras

https://github.com/keras-team/keras/tree/master/examples

Full project download

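For readers without download credits, add QQ 452205574 to get access to the shared folder.

It includes the code, the dataset (images), the trained model, the library installation files, and so on.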