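The following shows basic usage of the Hadoop HDFS Java API: uploading a file, reading (downloading) a file's contents, listing the directory tree, creating a directory, and deleting files.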

```java
package com.lqq.hadoop;

import java.io.ByteArrayOutputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.junit.Test;

public class TestHdfs {

    // NameNode address of the cluster used in this post
    static final String HDFS_URL = "hdfs://192.168.1.210:9000/";

    /** Builds a client for the cluster; FileSystem.get() returns a cached instance. */
    private static FileSystem getFs() throws Exception {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", HDFS_URL);
        return FileSystem.get(conf);
    }

    public static void main(String[] args) throws Exception {
        // With simple (non-Kerberos) authentication, HDFS checks permissions against
        // HADOOP_USER_NAME if set, otherwise the client's OS login name
        // (on Windows this normally matches the USERNAME variable).
        System.out.println(System.getenv("USERNAME"));

        // Print the directory tree starting from the root
        showFiles(getFs(), new Path("/"), 1);
    }

    /** Upload a local file into the HDFS directory /user/hadoop/. */
    @Test
    public void putFile2Hdfs() throws Exception {
        FileSystem fs = getFs();
        fs.copyFromLocalFile(new Path("H:/ff.txt"), new Path("/user/hadoop/"));
    }

    /** Read a file from HDFS into memory and print it. */
    @Test
    public void readFileFromHdfs() throws Exception {
        FileSystem fs = getFs();
        // try-with-resources closes both streams even if read() throws
        try (FSDataInputStream is = fs.open(new Path("/user/hadoop/ff.txt"));
             ByteArrayOutputStream baos = new ByteArrayOutputStream()) {
            byte[] buff = new byte[1024];
            int len;
            while ((len = is.read(buff)) != -1) {
                baos.write(buff, 0, len);
            }
            System.out.println(baos.size()); // number of bytes read
            // Assumes the file is UTF-8; avoids the platform default charset (e.g. GBK on Chinese Windows)
            System.out.println(new String(baos.toByteArray(), StandardCharsets.UTF_8));
        }
    }

    /** Read a file from HDFS, version 2: stream it straight to stdout with Hadoop's IOUtils. */
    @Test
    public void readFileFromHdfs_2() throws Exception {
        FileSystem fs = getFs();
        InputStream in = null;
        try {
            in = fs.open(new Path("/user/hadoop/ff.txt"));
            // close = false keeps System.out open; the input stream is closed in finally
            IOUtils.copyBytes(in, System.out, fs.getConf(), false);
        } finally {
            // Errors are no longer swallowed by an empty catch, so a failure fails the test
            IOUtils.closeStream(in);
        }
    }

    /** Create a directory; missing parent directories are created too (like mkdir -p). */
    @Test
    public void mkdirOnHdfs() throws Exception {
        getFs().mkdirs(new Path("/user/hadoop/test"));
    }

    /**
     * Delete a file or directory. deleteOnExit() only marks the path and deletes it when the
     * FileSystem is closed; delete(path, true) removes it immediately and recursively.
     */
    @Test
    public void deleteFileOnHdfs() throws Exception {
        getFs().delete(new Path("/user/hadoop/test"), true);
    }

    /** Recursively walk the file system, indenting each level with "----". */
    public static void showFiles(FileSystem fs, Path p, int dep) throws Exception {
        String indent = "";
        for (int j = 0; j < dep; j++) {
            indent += "----";
        }
        for (FileStatus status : fs.listStatus(p)) {
            if (status.isDirectory()) {
                System.out.println(indent + status.getPath());
                // Pass dep + 1 rather than incrementing dep, so later siblings keep the same indent
                showFiles(fs, status.getPath(), dep + 1);
            } else if (status.isFile()) {
                System.out.println(indent + status.getPath());
            }
        }
    }
}
```
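The listing prints a file's contents to the console but never writes a local copy, even though the introduction mentions downloading. Below is a minimal sketch of an actual download, assuming Hadoop 2.x or later and the same NameNode address. `FileSystem.copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem)` copies an HDFS path to local disk, and the three-argument `FileSystem.get(URI, Configuration, String)` connects as an explicitly named user, which can help when the local login name differs from the owner of the HDFS directory. The class `HdfsDownload`, its methods, the user name `hadoop`, and the local target path are hypothetical.

```java
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper class; not part of the original TestHdfs listing.
class HdfsDownload {

    // NameNode address taken from the listing
    static final String HDFS_URL = "hdfs://192.168.1.210:9000/";

    /** Connects to the file system at uri as the given user instead of the OS login name. */
    static FileSystem connect(String uri, String user) throws IOException, InterruptedException {
        return FileSystem.get(URI.create(uri), new Configuration(), user);
    }

    /**
     * Copies src from fs to dst on the local disk and keeps the source (delSrc = false).
     * useRawLocalFileSystem = true writes through the raw local file system,
     * so no .crc checksum file is created next to dst.
     */
    static void download(FileSystem fs, Path src, Path dst) throws IOException {
        fs.copyToLocalFile(false, src, dst, true);
    }

    public static void main(String[] args) throws Exception {
        // Assumption: user "hadoop" owns /user/hadoop; H:/ff_copy.txt is a hypothetical target
        try (FileSystem fs = connect(HDFS_URL, "hadoop")) {
            download(fs, new Path("/user/hadoop/ff.txt"), new Path("H:/ff_copy.txt"));
        }
    }
}
```

Because `download` accepts any `FileSystem`, it can be tried without a cluster by passing `FileSystem.getLocal(new Configuration())`.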

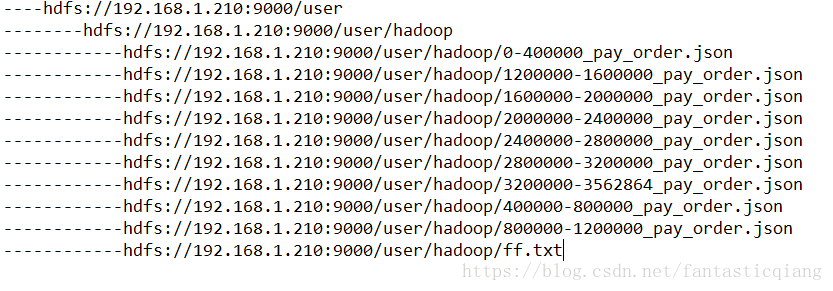

Output of `showFiles`: recursively traversing the file system and listing its files.
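When only the file paths are needed, not the indented tree, the recursion does not have to be written by hand. The following sketch, assuming Hadoop 2.x or later, uses `FileSystem.listFiles(Path, boolean recursive)`, which returns a `RemoteIterator<LocatedFileStatus>` that descends into subdirectories itself. The class `ListAllFiles` and method `listAllFiles` are hypothetical names.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

// Hypothetical helper class; not part of the original TestHdfs listing.
class ListAllFiles {

    /** Returns every file (directories excluded) under root, walking subdirectories. */
    static List<Path> listAllFiles(FileSystem fs, Path root) throws IOException {
        List<Path> result = new ArrayList<>();
        // recursive = true: the iterator walks subdirectories on its own
        RemoteIterator<LocatedFileStatus> it = fs.listFiles(root, true);
        while (it.hasNext()) {
            result.add(it.next().getPath());
        }
        return result;
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://192.168.1.210:9000/");
        try (FileSystem fs = FileSystem.get(conf)) {
            for (Path p : listAllFiles(fs, new Path("/"))) {
                System.out.println(p);
            }
        }
    }
}
```

Unlike `showFiles`, this yields files only, never the directories themselves, so it cannot reproduce the indented tree in the screenshot; it suits batch jobs that just need every file path.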